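Overview

This post implements softmax regression from scratch in PyTorch and trains it on the Fashion-MNIST dataset.

Theoretical background

Regression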

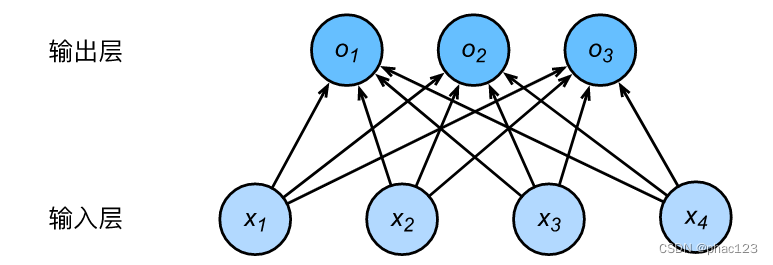

Suppose each sample has 4 input features and there are 3 class labels. The regression equations are then:

$$
\begin{aligned}
o_1 &= x_1 w_{11} + x_2 w_{21} + x_3 w_{31} + x_4 w_{41} + b_1 \\
o_2 &= x_1 w_{12} + x_2 w_{22} + x_3 w_{32} + x_4 w_{42} + b_2 \\
o_3 &= x_1 w_{13} + x_2 w_{23} + x_3 w_{33} + x_4 w_{43} + b_3
\end{aligned}
$$

Here $w_{ij}$ is the weight connecting input $i$ to output $j$, and each output $o_j$ has its own bias $b_j$.
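To make the three equations concrete, here is a minimal plain-Python sketch that evaluates them for one sample. The numeric values of `x`, `W`, and `b` and the helper name `linear_outputs` are made up for illustration and do not come from the post.

```python
# Hypothetical toy values: 4 input features, 3 output classes.
x = [1.0, 2.0, 3.0, 4.0]

# W[i][j] holds the weight from input i+1 to output j+1 (w_{ij} in the equations).
W = [
    [0.1, 0.2, 0.3],
    [0.0, 0.1, 0.0],
    [0.2, 0.0, 0.1],
    [0.1, 0.1, 0.1],
]
b = [0.5, -0.5, 0.0]  # one bias per output


def linear_outputs(x, W, b):
    """Return [o_1, ..., o_q], where o_j = sum_i x_i * w_ij + b_j."""
    return [
        sum(x_i * row[j] for x_i, row in zip(x, W)) + b[j]
        for j in range(len(b))
    ]


o = linear_outputs(x, W, b)
print(o)
```

Each output is the dot product of the input with one column of the weight matrix plus that output's bias, which is what `torch.mm(X, W) + b` computes for a whole batch at once.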

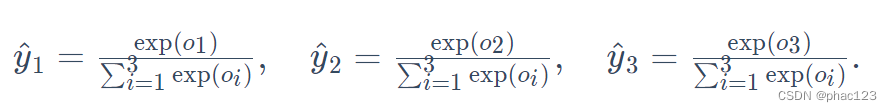

softmax

This yields $[y_1', y_2', y_3']$. Whichever value is largest is selected, meaning the sample is assigned to that label. Applying softmax gives:

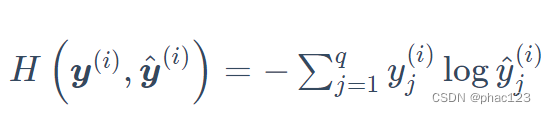
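Assuming the figure shows the standard softmax transformation, it can be written out as below. The symbol $\hat{y}_j$ for the resulting probability is my own notation and may differ from the figure; $o_j$ are the regression outputs defined earlier.

$$
\hat{y}_j = \frac{\exp(o_j)}{\sum_{k=1}^{3} \exp(o_k)}, \qquad j = 1, 2, 3
$$

Every $\hat{y}_j$ lies strictly between 0 and 1 and the three values sum to 1, so they can be read as class probabilities. Because $\exp$ is increasing, the largest $o_j$ still gives the largest $\hat{y}_j$, so the predicted label does not change.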

Loss function

The loss used here is the cross-entropy loss.
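For reference, the usual definition of cross-entropy for a single sample is sketched below. The symbols are assumptions for illustration: $q$ is the number of classes, $y$ is the one-hot true label, $\hat{y}$ is the predicted distribution, and $c$ is the index of the true class.

$$
H(y, \hat{y}) = -\sum_{j=1}^{q} y_j \log \hat{y}_j = -\log \hat{y}_c
$$

The second equality holds because a one-hot $y$ has $y_c = 1$ and every other entry equal to 0, so only the predicted probability of the true class contributes to the loss.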

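Reading the data

We use the Fashion-MNIST dataset with a batch size of 256. Here `d2l` is the `d2lzh_pytorch` module listed in full at the end of this post.

    batch_size = 256
    train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)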
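As a rough picture of what batching means here, the sketch below splits sample indices into mini-batches in plain Python. It is not how `DataLoader` is implemented; `batch_indices` and its `seed` parameter are hypothetical names used only for this illustration.

```python
import random


def batch_indices(num_examples, batch_size, shuffle=True, seed=0):
    """Yield lists of sample indices, one list per mini-batch."""
    indices = list(range(num_examples))
    if shuffle:
        random.Random(seed).shuffle(indices)
    for start in range(0, num_examples, batch_size):
        # Slicing past the end is safe, so the last batch may be smaller.
        yield indices[start:start + batch_size]


# The Fashion-MNIST training set has 60,000 images.
batches = list(batch_indices(60000, 256))
num_batches = len(batches)
last_batch_size = len(batches[-1])
print(num_batches, last_batch_size)
```

Since 60,000 is not a multiple of 256, the final batch is smaller than the rest, which is why the training loop counts samples with `y.shape[0]` rather than assuming every batch is full.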

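Initializing the model parameters

Each image is 28×28 pixels, so the model's input vector has length 28 × 28 = 784, with one element per pixel. Because the images fall into 10 classes, the output layer of this single-layer network has 10 outputs. The weight and bias parameters of softmax regression are therefore matrices of shape 784×10 and 1×10, respectively.

    num_inputs = 784
    num_outputs = 10

    # Weights are drawn from a normal distribution (mean 0, std 0.01); biases start at zero.
    W = torch.tensor(np.random.normal(0, 0.01, (num_inputs, num_outputs)), dtype=torch.float)
    b = torch.zeros(num_outputs, dtype=torch.float)

    # Track gradients for both parameters.
    W.requires_grad_(requires_grad=True)
    b.requires_grad_(requires_grad=True)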

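Implementing the softmax operation

    def softmax(X):
        X_exp = X.exp()
        # Sum over each row (one row per sample), keeping the column dimension.
        partition = X_exp.sum(dim=1, keepdim=True)
        return X_exp / partition  # broadcasting divides each row by its own sum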
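One practical caveat: exponentiating large values directly can overflow. A common workaround, which is not part of the original code, is to subtract the row maximum before exponentiating; this leaves the result unchanged. The plain-Python sketch below shows the idea for a single row, with `softmax_row` as a hypothetical helper name.

```python
import math


def softmax_row(logits):
    """Softmax of one row of logits, shifted by the row maximum for stability."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax_row([1.0, 2.0, 3.0])
# Without the shift, math.exp(1000.0) would raise OverflowError.
big = softmax_row([1000.0, 1001.0, 1002.0])
print(probs, big)
```

Both calls return the same probabilities, because adding a constant to every logit multiplies the numerator and the denominator by the same factor.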

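Defining the model

    def net(X):
        # Flatten each 28x28 image into a 784-vector, then apply the linear layer and softmax.
        return softmax(torch.mm(X.view((-1, num_inputs)), W) + b)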

Defining the loss function

    def cross_entropy(y_hat, y):
        # gather picks, for each sample, the predicted probability of its true class.
        return -torch.log(y_hat.gather(1, y.view(-1, 1)))
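To see what the `gather` call is doing, here is a plain-Python sketch of the same computation on two made-up predictions. The values and the name `cross_entropy_rows` are illustrative assumptions, not part of the original code.

```python
import math


def cross_entropy_rows(y_hat, y):
    """Per-sample loss: -log of the probability assigned to the true class."""
    return [-math.log(probs[label]) for probs, label in zip(y_hat, y)]


# Hypothetical predictions for 2 samples over 3 classes.
y_hat = [[0.1, 0.3, 0.6],
         [0.3, 0.2, 0.5]]
y = [0, 2]  # true class indices

losses = cross_entropy_rows(y_hat, y)
print(losses)
```

The first sample gives its true class a probability of only 0.1 and so incurs a larger loss than the second, which gives its true class 0.5.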

Computing classification accuracy

    def accuracy(y_hat, y):
        # Fraction of samples whose highest-probability class matches the label.
        return (y_hat.argmax(dim=1) == y).float().mean().item()
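The same calculation in plain Python, on made-up predictions, may make the arg-max step easier to follow. The helper name `accuracy_rows` and the numbers are assumptions for illustration.

```python
def accuracy_rows(y_hat, y):
    """Fraction of rows whose arg-max class equals the true label."""
    predictions = [max(range(len(p)), key=p.__getitem__) for p in y_hat]
    correct = sum(int(pred == label) for pred, label in zip(predictions, y))
    return correct / len(y)


# Hypothetical predictions for 2 samples over 3 classes.
y_hat = [[0.1, 0.3, 0.6],
         [0.3, 0.2, 0.5]]
y = [0, 2]  # true class indices

acc = accuracy_rows(y_hat, y)
print(acc)  # 0.5
```

Both rows predict class 2, but only the second sample's true label is 2, so one of the two predictions is correct.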

Training the model

    # Train for 5 epochs with a learning rate of 0.1.
    num_epochs, lr = 5, 0.1
    d2l.train_ch3(net, train_iter, test_iter, cross_entropy, num_epochs, batch_size, [W, b], lr)
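When no optimizer is passed, `train_ch3` sums the loss over the batch and then calls `sgd`, which divides the gradient by `batch_size` so that the step corresponds to the average loss. The sketch below reproduces just that update rule on plain Python floats; `sgd_step` and the numbers are hypothetical.

```python
def sgd_step(params, grads, lr, batch_size):
    """One mini-batch SGD update: param <- param - lr * grad / batch_size."""
    return [p - lr * g / batch_size for p, g in zip(params, grads)]


# Hypothetical scalar parameters and gradients of the summed loss for a batch of 256.
params = [0.5, -1.0]
grads = [25.6, -51.2]

new_params = sgd_step(params, grads, lr=0.1, batch_size=256)
print(new_params)
```

Dividing by the batch size keeps the effective step size roughly independent of how many samples are in a batch.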

Prediction

    # Take the first batch from the test set.
    X, y = next(iter(test_iter))

    true_labels = d2l.get_fashion_mnist_labels(y.numpy())
    pred_labels = d2l.get_fashion_mnist_labels(net(X).argmax(dim=1).numpy())
    # Each title shows the true label on the first line and the prediction on the second.
    titles = [true + '\n' + pred for true, pred in zip(true_labels, pred_labels)]

    d2l.show_fashion_mnist(X[0:9], titles[0:9])
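The title-building step can be tried without a trained model. The sketch below uses the same label list as `get_fashion_mnist_labels`, with made-up class indices standing in for real labels and predictions; `to_text_labels` is a hypothetical stand-in for the library function.

```python
TEXT_LABELS = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat',
               'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']


def to_text_labels(indices):
    """Map numeric class indices to their text labels."""
    return [TEXT_LABELS[int(i)] for i in indices]


# Hypothetical true and predicted class indices for three images.
true_idx = [9, 2, 1]
pred_idx = [9, 4, 1]

titles = [t + '\n' + p
          for t, p in zip(to_text_labels(true_idx), to_text_labels(pred_idx))]
# Positions where the prediction disagrees with the true label.
mismatches = [k for k, (t, p) in enumerate(zip(true_idx, pred_idx)) if t != p]
print(titles, mismatches)
```

In the plotted figure, any image whose two title lines differ is a misclassification, which makes errors easy to spot by eye.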

Full code

d2lzh_pytorch.py

    import random
    import sys
    import time

    from IPython import display
    import matplotlib.pyplot as plt
    import torch
    import torchvision
    import torchvision.transforms as transforms


    def use_svg_display():
        # Render figures as SVG (vector graphics).
        # Note: newer IPython releases deprecate this call in favor of
        # matplotlib_inline.backend_inline.set_matplotlib_formats.
        display.set_matplotlib_formats('svg')


    def set_figsize(figsize=(3.5, 2.5)):
        use_svg_display()
        # Set the figure size.
        plt.rcParams['figure.figsize'] = figsize

    # Shuffle the samples and yield mini-batches of (features, labels) of size batch_size.
    def data_iter(batch_size, features, labels):
        num_examples = len(features)
        indices = list(range(num_examples))
        random.shuffle(indices)
        for i in range(0, num_examples, batch_size):  # range(start, stop, step)
            # The last batch may hold fewer than batch_size samples.
            j = torch.LongTensor(indices[i: min(i + batch_size, num_examples)])
            yield features.index_select(0, j), labels.index_select(0, j)


    # Linear regression model.
    def linreg(X, w, b):
        return torch.mm(X, w) + b


    # Squared loss for linear regression.
    def squared_loss(y_hat, y):
        return (y_hat - y.view(y_hat.size())) ** 2 / 2


    # Optimization algorithm for linear regression: mini-batch stochastic gradient descent.
    def sgd(params, lr, batch_size):
        for param in params:
            # Update param.data so the step itself is not tracked by autograd.
            param.data -= lr * param.grad / batch_size

    # Convert numeric Fashion-MNIST labels to the corresponding text labels.
    def get_fashion_mnist_labels(labels):
        text_labels = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat',
                       'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']
        return [text_labels[int(i)] for i in labels]


    # Draw several images and their labels in a single row.
    def show_fashion_mnist(images, labels):
        use_svg_display()
        # The underscore marks a return value we ignore.
        _, figs = plt.subplots(1, len(images), figsize=(12, 12))
        for f, img, lbl in zip(figs, images, labels):
            f.imshow(img.view((28, 28)).numpy())
            f.set_title(lbl)
            f.axes.get_xaxis().set_visible(False)
            f.axes.get_yaxis().set_visible(False)
        plt.show()

    # Download and load Fashion-MNIST; returns the two iterators train_iter and test_iter.
    def load_data_fashion_mnist(batch_size):
        mnist_train = torchvision.datasets.FashionMNIST(
            root='Datasets/FashionMNIST', train=True, download=True,
            transform=transforms.ToTensor())
        mnist_test = torchvision.datasets.FashionMNIST(
            root='Datasets/FashionMNIST', train=False, download=True,
            transform=transforms.ToTensor())
        if sys.platform.startswith('win'):
            num_workers = 0  # 0 means no extra worker processes are used to load data
        else:
            num_workers = 4
        train_iter = torch.utils.data.DataLoader(
            mnist_train, batch_size=batch_size, shuffle=True, num_workers=num_workers)
        test_iter = torch.utils.data.DataLoader(
            mnist_test, batch_size=batch_size, shuffle=False, num_workers=num_workers)
        return train_iter, test_iter


    # Evaluate the accuracy of the model net on the dataset data_iter.
    def evaluate_accuracy(data_iter, net):
        acc_sum, n = 0.0, 0
        for X, y in data_iter:
            acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()
            n += y.shape[0]
        return acc_sum / n

    # Train the softmax regression model.
    def train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size,
                  params=None, lr=None, optimizer=None):
        for epoch in range(num_epochs):
            train_l_sum, train_acc_sum, n = 0.0, 0.0, 0
            for X, y in train_iter:
                y_hat = net(X)
                l = loss(y_hat, y).sum()

                # Zero the gradients.
                if optimizer is not None:
                    optimizer.zero_grad()
                elif params is not None and params[0].grad is not None:
                    for param in params:
                        param.grad.data.zero_()

                l.backward()
                if optimizer is None:
                    sgd(params, lr, batch_size)
                else:
                    optimizer.step()

                train_l_sum += l.item()
                train_acc_sum += (y_hat.argmax(dim=1) == y).sum().item()
                n += y.shape[0]
            # Evaluate on the test set once per epoch.
            test_acc = evaluate_accuracy(test_iter, net)
            print('epoch %d, loss %.4f, train acc %.3f, test acc %.3f'
                  % (epoch + 1, train_l_sum / n, train_acc_sum / n, test_acc))

main.py

    import sys

    import numpy as np
    import torch
    import torchvision

    sys.path.append("..")  # so that d2lzh_pytorch can be imported from the parent directory
    import d2lzh_pytorch as d2l

    # Load the Fashion-MNIST dataset.
    batch_size = 256
    train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size)

    # Initialize the model parameters.
    num_inputs = 784
    num_outputs = 10

    w = torch.tensor(np.random.normal(0, 0.01, (num_inputs, num_outputs)), dtype=torch.float)
    b = torch.zeros(num_outputs, dtype=torch.float)

    w.requires_grad_(requires_grad=True)
    b.requires_grad_(requires_grad=True)

    # Softmax operation.
    def softmax(X):
        X_exp = X.exp()
        partition = X_exp.sum(dim=1, keepdim=True)
        return X_exp / partition


    # Model definition.
    def net(X):
        return softmax(torch.mm(X.view(-1, num_inputs), w) + b)


    # Loss function.
    def cross_entropy(y_hat, y):
        return -torch.log(y_hat.gather(1, y.view(-1, 1)))


    # Classification accuracy.
    def accuracy(y_hat, y):
        return (y_hat.argmax(dim=1) == y).float().mean().item()

    # Train the model.
    num_epochs, lr = 5, 0.1
    d2l.train_ch3(net, train_iter, test_iter, cross_entropy, num_epochs, batch_size, [w, b], lr)

    # Predict on the first test batch and show the first 9 images.
    X, y = next(iter(test_iter))

    true_labels = d2l.get_fashion_mnist_labels(y.numpy())
    pred_labels = d2l.get_fashion_mnist_labels(net(X).argmax(dim=1).numpy())
    titles = [true + '\n' + pred for true, pred in zip(true_labels, pred_labels)]

    d2l.show_fashion_mnist(X[0:9], titles[0:9])
