
DESCRIPTION

*Please note: this networking configuration is not suitable for K8s and NCCL-based AI applications.

*For the recommended RoCE configuration and its verification, please see the separate article on that topic.

This post discusses the usage and testing of RoCE over a Link Aggregation (LAG) interface for ConnectX-4 adapters.

REFERENCES

- MLNX_OFED User Manual

- Bond over LAG interface using RHEL

BACKGROUND

RoCE LAG is a feature that mimics Ethernet bonding for IB devices. It is available only for dual-port cards.

RoCE LAG mode is entered when both conditions hold: the two Ethernet interfaces that belong to the same card are the only slaves of the same bond interface, and the bonding mode is one of the following:

- active-backup (mode 1)

- balance-xor (mode 2)

- 802.3ad (LACP) (mode 4)

If the bond configuration changes so that one of the above rules no longer holds, the device exits RoCE LAG mode and returns to the normal configuration of one InfiniBand (IB) device per port. This happens if the bonding mode is not 1, 2 or 4, or if the two Ethernet interfaces of the card are no longer the only slaves of the bond interface.
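The entry rules above can be checked from the bond's sysfs view. The following is a minimal sketch. It assumes the standard Linux bonding sysfs files `<bond dir>/mode` (for example `active-backup 1`) and `<bond dir>/slaves`. The function name `roce_lag_eligible` is hypothetical. The two port names you pass must be the two netdevs of the same card, which the script cannot verify on its own.

```bash
#!/usr/bin/env bash
# Sketch: succeed if a bond meets the RoCE LAG entry rules described above.
# Assumptions: <dir>/mode holds "<name> <number>" (e.g. "active-backup 1") and
# <dir>/slaves holds space-separated slave names, as in Linux bonding sysfs.
# port_a and port_b are the two netdevs of the same dual-port card.
roce_lag_eligible() {
    local dir port_a port_b mode_num slaves expected
    dir=$1
    port_a=$2
    port_b=$3
    # The second field of the mode file is the numeric bonding mode.
    mode_num=$(awk '{print $2}' "$dir/mode")
    case $mode_num in
        1|2|4) ;;                # active-backup, balance-xor, 802.3ad
        *) return 1 ;;
    esac
    # Sort both lists so the comparison does not depend on enslave order.
    slaves=$(tr ' ' '\n' < "$dir/slaves" | sed '/^$/d' | sort | tr '\n' ' ')
    expected=$(printf '%s\n' "$port_a" "$port_b" | sort | tr '\n' ' ')
    # The card's two ports must be the only slaves of the bond.
    [ "$slaves" = "$expected" ]
}
```

For example: `roce_lag_eligible /sys/class/net/bond0/bonding ens817 ens817d1 && echo "RoCE LAG expected"`.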

In RoCE LAG mode, there is no longer one IB device per physical port (for example, mlx5_0 and mlx5_1). Instead, a single IB device serves both ports and has 'bond' in its name (for example, mlx5_bond_0). This device aggregates both IB ports, just as the bond interface aggregates both Ethernet interfaces.
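A quick way to see which mode the adapter is in is to look for a bond IB device. This sketch assumes RDMA devices appear under `/sys/class/infiniband`. The directory is a parameter, so it can be pointed elsewhere for testing. `list_bond_ib_devices` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: list RDMA devices whose name contains "bond" (e.g. mlx5_bond_0).
# Assumption: RDMA devices are visible as entries under /sys/class/infiniband.
list_bond_ib_devices() {
    local dir entry
    dir=${1:-/sys/class/infiniband}
    for entry in "$dir"/*bond*; do
        # If nothing matches, the glob stays literal, so skip it.
        [ -e "$entry" ] || continue
        basename "$entry"
    done
}
```

Empty output suggests that the per-port devices (for example mlx5_0 and mlx5_1) are still in use. The `ibv_devices` utility from libibverbs shows the same device list.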

The method used by the IB bond device to distribute traffic depends on the Ethernet bond interface's mode, as follows:

- active-backup (mode 1): QPs are always assigned to the physical port of the active slave, which the bond interface chooses. When the active slave changes, all QPs are moved to the new active slave. For the bond driver to detect link-down events and make the other interface the active slave, it must be configured with the "miimon" option (for example, "miimon=100").

- balance-xor (mode 2) and 802.3ad (LACP) (mode 4): QPs are assigned to physical ports in a round-robin manner. Each time a QP moves from the RESET state to the INIT state, it is assigned to the next of the two physical ports in turn.
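The two distribution rules can be illustrated with a toy model. This is not driver code. It only prints which port each new QP would land on under the rules above, with the ports numbered 1 and 2. The model assumes the round-robin sequence starts at port 1, whereas the real starting port depends on driver state. `qp_port_assignment` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Illustrative model only (not driver code): print the port each new QP
# would be assigned to under the RoCE LAG rules.
# Arguments: bond mode, number of QPs, active port (active-backup only).
qp_port_assignment() {
    local mode num_qps active_port i port
    mode=$1
    num_qps=$2
    active_port=${3:-1}
    case $mode in
        active-backup|balance-xor|802.3ad) ;;
        *) echo "mode $mode does not enable RoCE LAG" >&2; return 1 ;;
    esac
    i=0
    while [ "$i" -lt "$num_qps" ]; do
        if [ "$mode" = active-backup ]; then
            port=$active_port        # every QP follows the active slave
        else
            port=$((i % 2 + 1))      # round robin: 1, 2, 1, 2, ...
        fi
        echo "QP$i -> port $port"
        i=$((i + 1))
    done
}
```

For example, `qp_port_assignment 802.3ad 4` places QP0 and QP2 on port 1 and QP1 and QP3 on port 2. `qp_port_assignment active-backup 4 2` places every QP on port 2.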

Note: The connected switch (or the peer server, if connected directly) should be configured the same way.

SETUP

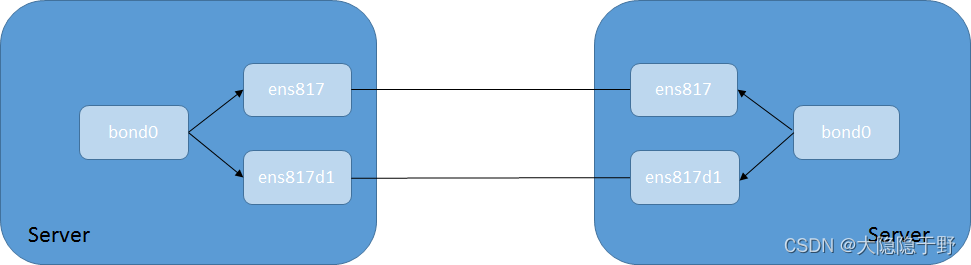

- Use two servers, each equipped with a dual-port Mellanox ConnectX-4 adapter card, connected through a switch.

- Configure the ports in Ethernet mode.

- Install MLNX_OFED Rel. 4.0 or later on both servers.

- Use kernel 4.9 or later. RHEL 7.4 and later RHEL 7.x releases also include this support.
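Before configuring, the kernel requirement can be checked with a small version comparison. This sketch relies on GNU `sort -V`, and `kernel_at_least` is a hypothetical helper. RHEL 7.4 and later 7.x releases ship a 3.10-based kernel with the support backported. A numeric check like this is therefore only meaningful on upstream-style kernels.

```bash
#!/usr/bin/env bash
# Sketch: succeed if a kernel release is at least the given version.
# The release defaults to the running kernel (uname -r).
kernel_at_least() {
    local min release base
    min=$1
    release=${2:-$(uname -r)}
    base=${release%%-*}   # strip the distro suffix, e.g. 4.9.0-8-amd64 -> 4.9.0
    # GNU sort -V orders version strings; if min sorts first (or equal), base >= min.
    [ "$(printf '%s\n' "$min" "$base" | sort -V | head -n 1)" = "$min" ]
}
```

For example: `kernel_at_least 4.9 || echo "kernel older than 4.9"`. On the MLNX_OFED side, `ofed_info -s` prints the installed release.

If the ConnectX-4 ports are VPI and currently in InfiniBand mode, the usual way to switch them is the MFT command `mlxconfig -d <mst device> set LINK_TYPE_P1=2 LINK_TYPE_P2=2`. The value 2 selects Ethernet, and the change takes effect after a reboot. `<mst device>` is a placeholder for your device path.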

CONFIGURATION EXAMPLE

1. Make sure bonding is enabled on the server. Follow your distribution's OS manual to create a bond0 interface. For a bonding example on RHEL, see the "Bond over LAG interface using RHEL" reference above.

2. Configure the bond0 interface on both servers by editing /etc/sysconfig/network-scripts/ifcfg-bond0 as follows:

DEVICE=bond0

NAME=bond0

TYPE=bond

BONDING_MASTER=yes

IPADDR=22.22.22.6 # the other server must use a different IP address on the same subnet

PREFIX=24

BOOTPROTO=none

ONBOOT=yes

NM_CONTROLLED=no

BONDING_OPTS="mode=active-backup miimon=100 updelay=100 downdelay=100"

In this example, the bond mode is active-backup.
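The same bond0 file has to be created on both servers, so the step can be scripted. This is a minimal sketch, assuming the RHEL network-scripts layout shown above. `write_bond_cfg` is a hypothetical helper, and the IP address is its only required argument. The output directory is a parameter, so the function can be tried outside /etc.

```bash
#!/usr/bin/env bash
# Sketch: write ifcfg-bond0 as in step 2.
# Arguments: IP address (required), BONDING_OPTS value (optional, defaults to
# the active-backup options above), output directory (optional).
write_bond_cfg() {
    local ip_addr bond_opts out_dir
    ip_addr=$1
    bond_opts=${2:-"mode=active-backup miimon=100 updelay=100 downdelay=100"}
    out_dir=${3:-/etc/sysconfig/network-scripts}
    cat > "$out_dir/ifcfg-bond0" <<EOF
DEVICE=bond0
NAME=bond0
TYPE=bond
BONDING_MASTER=yes
IPADDR=$ip_addr
PREFIX=24
BOOTPROTO=none
ONBOOT=yes
NM_CONTROLLED=no
BONDING_OPTS="$bond_opts"
EOF
}
```

For example, run `write_bond_cfg 22.22.22.6` on the first server. On the second server, make the same call with a different address on the same subnet. For LACP, pass the options explicitly, for example `write_bond_cfg 22.22.22.6 "mode=802.3ad miimon=100"`. The switch side must then be configured for LACP as well.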

3. Configure the first physical port of the Mellanox ConnectX-4 adapter by editing /etc/sysconfig/network-scripts/ifcfg-ens817:

DEVICE=ens817

TYPE=Ethernet

ONBOOT=yes

MASTER=bond0

SLAVE=yes

BOOTPROTO=none

4. Configure the other physical port of the Mellanox ConnectX-4 adapter by editing /etc/sysconfig/network-scripts/ifcfg-ens817d1:

DEVICE=ens817d1

TYPE=Ethernet

ONBOOT=yes

MASTER=bond0

SLAVE=yes

BOOTPROTO=none
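The two slave files differ only in the device name, so a loop can write both. This is a sketch assuming the RHEL network-scripts layout used in steps 3 and 4. `write_slave_cfgs` is a hypothetical helper that takes the output directory followed by the port names.

```bash
#!/usr/bin/env bash
# Sketch: write one ifcfg-<port> slave file per port name, as in steps 3 and 4.
# Arguments: output directory, then one or more port names.
write_slave_cfgs() {
    local out_dir dev
    out_dir=$1
    shift
    for dev in "$@"; do
        cat > "$out_dir/ifcfg-$dev" <<EOF
DEVICE=$dev
TYPE=Ethernet
ONBOOT=yes
MASTER=bond0
SLAVE=yes
BOOTPROTO=none
EOF
    done
}
```

For example: `write_slave_cfgs /etc/sysconfig/network-scripts ens817 ens817d1`.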

5. Restart the bond interface (or the network service and the driver):

# ifdown bond0

# ifup bond0

or

# /etc/init.d/network restart

# /etc/init.d/openibd restart

6. Make sure that you can ping between the two servers using the bond0 interface.

7. Check the current status of bond0:

# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: fault-tolerance (active-backup)

Primary Slave: None

Currently Active Slave: ens817

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 100

Down Delay (ms): 100

Slave Interface: ens817

MII Status: up

Speed: 40000 Mbps

Duplex: full

Link Failure Count: 6

Permanent HW addr: e4:1d:2d:26:3c:e1

Slave queue ID: 0

Slave Interface: ens817d1

MII Status: up

Speed: 40000 Mbps

Duplex: full

Link Failure Count: 6

Permanent HW addr: e4:1d:2d:26:3c:e2

Slave queue ID: 0
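During testing it is handy to read single fields from this status file. For example, you can watch which slave is active while the ports are toggled. This is a minimal awk sketch, and `bond_field` is a hypothetical helper. It returns the value from the first `Field: value` line whose field name starts at the beginning of the line.

```bash
#!/usr/bin/env bash
# Sketch: print the value of the first "Field: value" line in a bonding status file.
bond_field() {
    local field file
    field=$1
    file=${2:-/proc/net/bonding/bond0}
    # index() == 1 anchors the match to the start of the line; the value starts
    # right after the field name plus ": " (two characters).
    awk -v key="$field" 'index($0, key ": ") == 1 { print substr($0, length(key) + 3); exit }' "$file"
}
```

For example, `bond_field "Currently Active Slave"` prints `ens817` for the output above.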

If you use bond mode 802.3ad, the output looks similar to the following:

# cat /proc/net/bonding/bond0

Ethernet Channel Bonding Driver: v3.7.1 (April 27, 2011)

Bonding Mode: IEEE 802.3ad Dynamic link aggregation

Transmit Hash Policy: layer2 (0)

MII Status: up

MII Polling Interval (ms): 100

Up Delay (ms): 100

Down Delay (ms): 100

802.3ad info

LACP rate: slow

Min links: 0

Aggregator selection policy (ad_select): stable

Active Aggregator Info:

Aggregator ID: 1

Number of ports: 2

Actor Key: 1

Partner Key: 1

Partner Mac Address: e4:1d:2d:26:33:31

Slave Interface: ens817

MII Status: up

Speed: 40000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: e4:1d:2d:26:3c:e1

Aggregator ID: 1

Slave queue ID: 0

Slave Interface: ens817d1

MII Status: up

Speed: 40000 Mbps

Duplex: full

Link Failure Count: 0

Permanent HW addr: e4:1d:2d:26:3c:e2

Aggregator ID: 1

Slave queue ID: 0
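With 802.3ad, both slaves should report the same Aggregator ID. Different IDs usually mean that LACP did not bundle the two ports together, for example because the switch side is not configured as a single LACP port-channel. The sketch below checks only the per-slave "Aggregator ID" lines, meaning those that follow a "Slave Interface" line. `lacp_slaves_aggregated` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: succeed only if at least two per-slave "Aggregator ID:" lines exist
# and they all carry the same ID. Lines before the first "Slave Interface:"
# (the bond-level "Active Aggregator Info" block) are ignored.
lacp_slaves_aggregated() {
    local file
    file=${1:-/proc/net/bonding/bond0}
    awk '
        /^Slave Interface:/ { in_slave = 1; next }
        in_slave && /^[[:space:]]*Aggregator ID:/ {
            split($0, parts, ":")
            id = parts[2]
            gsub(/[[:space:]]/, "", id)
            ids[id] = 1
            slaves++
        }
        END {
            n = 0
            for (k in ids) n++
            exit !(slaves >= 2 && n == 1)
        }
    ' "$file"
}
```

For example: `lacp_slaves_aggregated || echo "slaves are not in one aggregator"`.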

8. Run one of the perftest tools to check RDMA traffic, for example ib_send_bw.

Run the ib_send_bw server on one host:

# ib_send_bw -D60 -f --report_gbits

and the ib_send_bw client on the other host:

# ib_send_bw 22.22.22.6 -D60 -f --report_gbits
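To make sure the test runs over the LAG device rather than whatever perftest picks by default, you can name the device explicitly with perftest's `-d` option. This is a minimal sketch. `run_send_bw` is a hypothetical wrapper, and `mlx5_bond_0` is the example device name from the background section. Setting `RUN=echo` prints the command instead of running it.

```bash
#!/usr/bin/env bash
# Sketch: run ib_send_bw pinned to one RDMA device (-d).
# With one argument it starts the server side; with a second argument
# (the server's IP address) it starts the client side.
# Set RUN=echo to print the command instead of executing it.
run_send_bw() {
    local dev server_ip
    dev=$1
    server_ip=${2:-}
    ${RUN:-} ib_send_bw -d "$dev" -D60 -f --report_gbits ${server_ip:+"$server_ip"}
}
```

Run `run_send_bw mlx5_bond_0` on the server and `run_send_bw mlx5_bond_0 22.22.22.6` on the client.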

TESTING PROCEDURE

1. For active-backup mode, a simple test is to run RoCE traffic over the bond0 interface. While the traffic runs, take the ports down and bring them back up, one at a time. Verify that the connection stays open and traffic keeps running as it fails over between the ports.
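The port toggling in step 1 can be scripted so that it runs while ib_send_bw is active. This sketch assumes the port names from the configuration example. It also assumes that taking a slave down with `ip link` is an acceptable way to simulate a link failure; shutting the switch port is a closer match to a real failure. `toggle_ports` is hypothetical, and `RUN=echo` turns it into a dry run.

```bash
#!/usr/bin/env bash
# Sketch: take each bond slave down and up in turn, one port at a time,
# so the bond always keeps one link up.
# Arguments: number of cycles (default 1), seconds to wait per step (default 10).
# Set RUN=echo to print the commands instead of executing them.
toggle_ports() {
    local cycles wait_s i dev
    cycles=${1:-1}
    wait_s=${2:-10}
    i=0
    while [ "$i" -lt "$cycles" ]; do
        for dev in ens817 ens817d1; do
            ${RUN:-} ip link set dev "$dev" down
            ${RUN:-} sleep "$wait_s"
            ${RUN:-} ip link set dev "$dev" up
            ${RUN:-} sleep "$wait_s"
        done
        i=$((i + 1))
    done
}
```

With the defaults (one cycle, 10 s waits), the sequence takes about 40 s, which fits inside the 60 s `-D60` run. To see the failover, watch the "Currently Active Slave" line in /proc/net/bonding/bond0 at the same time.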

2. Active-active bonding modes (balance-xor and 802.3ad) are trickier to test. You need multiple RoCE flows (QPs) so that different flows are sent from different ports.
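For the active-active modes, one way to confirm that RDMA traffic really leaves from both ports is to compare per-port RDMA counters before and after a multi-QP run. An example is ib_send_bw with `-q 8`; per the round-robin rule, its QPs should land on both ports. This sketch assumes that the mlx5 driver exposes the `tx_vport_rdma_unicast_bytes` counter in `ethtool -S` output. Counter names can differ between driver versions. `rdma_tx_bytes` is a hypothetical helper that reads ethtool output on stdin.

```bash
#!/usr/bin/env bash
# Sketch: extract tx_vport_rdma_unicast_bytes from "ethtool -S" output on stdin.
# Assumption: the mlx5 driver exposes this counter name; other drivers or
# versions may name it differently.
rdma_tx_bytes() {
    awk -F: '$1 ~ /^[[:space:]]*tx_vport_rdma_unicast_bytes$/ {
        gsub(/[[:space:]]/, "", $2)
        print $2
        exit
    }'
}
```

For example: `for dev in ens817 ens817d1; do echo "$dev $(ethtool -S "$dev" | rdma_tx_bytes)"; done`. If both values grow during the run, both ports are carrying RoCE traffic.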

IMPORTANT SWITCH NOTES

Most switches are configured for MAC-address-based load balancing. It is highly recommended to switch to load balancing based on IP addresses and UDP ports. Example for Mellanox Onyx switches:

sw01 [standalone: master] (config) # port-channel load-balance ethernet source-destination-ip source-destination-port

sw01 [standalone: master] (config) # show interfaces port-channel load-balance

source-destination-ip, source-destination-port

ARTICLE NUMBER

000004641
