Introduction to canal

Official wiki: https://github.com/alibaba/canal/wiki

************************

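Overview

canal is middleware that parses MySQL's incremental log (the binary log) to provide incremental data subscription and consumption; MySQL is currently its main supported source

Main use cases: data replication, and keeping caches consistent with the database (dual-write consistency)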

******************

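How it works

canal emulates the MySQL replication process: it presents itself as a MySQL slave and sends a dump request to the master;

on receiving the dump request, the master pushes the binary log to canal;

canal parses the binary log, stores the result, and makes it available to subscribing clients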

******************

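MySQL master-slave replication

The master must have binary logging enabled (it is off by default in MySQL 5.7 and earlier, and on by default since MySQL 8.0) and records changes as binary log events;

the slave sends a dump request to the master, and the master pushes binary log events to the slave;

the slave writes the received events into its relay log, then reads the relay log and replays the changes locally

Viewing binary log events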

mysql> show binary logs;

+-------------------------+-----------+-----------+
| Log_name                | File_size | Encrypted |
+-------------------------+-----------+-----------+
| 13b18152a242-bin.000001 |       156 | No        |
| 13b18152a242-bin.000002 |       436 | No        |
+-------------------------+-----------+-----------+
2 rows in set (0.00 sec)

# Without IN, shows the events of the first binary log

mysql> show binlog events;

+-------------------------+-----+----------------+-----------+-------------+-----------------------------------+
| Log_name                | Pos | Event_type     | Server_id | End_log_pos | Info                              |
+-------------------------+-----+----------------+-----------+-------------+-----------------------------------+
| 13b18152a242-bin.000001 |   4 | Format_desc    |         1 |         125 | Server ver: 8.0.25, Binlog ver: 4 |
| 13b18152a242-bin.000001 | 125 | Previous_gtids |         1 |         156 |                                   |
+-------------------------+-----+----------------+-----------+-------------+-----------------------------------+
2 rows in set (0.00 sec)

# Show the events of a specific binary log

mysql> show binlog events in "13b18152a242-bin.000001";

+-------------------------+-----+----------------+-----------+-------------+-----------------------------------+
| Log_name                | Pos | Event_type     | Server_id | End_log_pos | Info                              |
+-------------------------+-----+----------------+-----------+-------------+-----------------------------------+
| 13b18152a242-bin.000001 |   4 | Format_desc    |         1 |         125 | Server ver: 8.0.25, Binlog ver: 4 |
| 13b18152a242-bin.000001 | 125 | Previous_gtids |         1 |         156 |                                   |
+-------------------------+-----+----------------+-----------+-------------+-----------------------------------+
2 rows in set (0.00 sec)

mysql> show binlog events in "13b18152a242-bin.000002";

+-------------------------+-----+----------------+-----------+-------------+--------------------------------------+
| Log_name                | Pos | Event_type     | Server_id | End_log_pos | Info                                 |
+-------------------------+-----+----------------+-----------+-------------+--------------------------------------+
| 13b18152a242-bin.000002 |   4 | Format_desc    |         1 |         125 | Server ver: 8.0.25, Binlog ver: 4    |
| 13b18152a242-bin.000002 | 125 | Previous_gtids |         1 |         156 |                                      |
| 13b18152a242-bin.000002 | 156 | Anonymous_Gtid |         1 |         235 | SET @@SESSION.GTID_NEXT= 'ANONYMOUS' |
| 13b18152a242-bin.000002 | 235 | Query          |         1 |         310 | BEGIN                                |
| 13b18152a242-bin.000002 | 310 | Table_map      |         1 |         361 | table_id: 88 (test.test)             |
| 13b18152a242-bin.000002 | 361 | Write_rows     |         1 |         405 | table_id: 88 flags: STMT_END_F       |
| 13b18152a242-bin.000002 | 405 | Xid            |         1 |         436 | COMMIT /* xid=15 */                  |
+-------------------------+-----+----------------+-----------+-------------+--------------------------------------+
7 rows in set (0.00 sec)
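In the listing above, the row insert recorded in `13b18152a242-bin.000002` shows up as one transaction: a `Query` event carrying `BEGIN`, a `Table_map` event, a `Write_rows` event, and an `Xid` event that commits. A minimal Java sketch (Java 16+ for records) of grouping such a decoded event list into transactions. The `BinlogEvent` record and `TransactionGrouper` class are hypothetical illustrations, not part of MySQL or canal, and real events would come from a binlog decoder rather than hand-written values:

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical decoded event holding only what this sketch needs.
record BinlogEvent(String type, long pos, String info) {}

public class TransactionGrouper {

    // Collects the events between a "BEGIN" Query event and the Xid (COMMIT) event that ends it.
    static List<List<BinlogEvent>> groupTransactions(List<BinlogEvent> events) {
        List<List<BinlogEvent>> transactions = new ArrayList<>();
        List<BinlogEvent> current = null;
        for (BinlogEvent e : events) {
            if (e.type().equals("Query") && e.info().equals("BEGIN")) {
                current = new ArrayList<>();            // a transaction starts
            } else if (e.type().equals("Xid") && current != null) {
                transactions.add(current);              // COMMIT closes it
                current = null;
            } else if (current != null) {
                current.add(e);                         // Table_map, Write_rows, ...
            }
            // Events outside BEGIN..COMMIT (Format_desc, Previous_gtids, Anonymous_Gtid) are ignored here.
        }
        return transactions;
    }

    public static void main(String[] args) {
        List<BinlogEvent> events = List.of(
                new BinlogEvent("Format_desc", 4, "Server ver: 8.0.25, Binlog ver: 4"),
                new BinlogEvent("Previous_gtids", 125, ""),
                new BinlogEvent("Anonymous_Gtid", 156, "SET @@SESSION.GTID_NEXT= 'ANONYMOUS'"),
                new BinlogEvent("Query", 235, "BEGIN"),
                new BinlogEvent("Table_map", 310, "table_id: 88 (test.test)"),
                new BinlogEvent("Write_rows", 361, "table_id: 88 flags: STMT_END_F"),
                new BinlogEvent("Xid", 405, "COMMIT /* xid=15 */"));
        for (List<BinlogEvent> tx : groupTransactions(events)) {
            System.out.println(tx);
        }
    }
}
```

The `Table_map` event precedes every `Write_rows` event because row events refer to the table only by `table_id`; the map event is what ties `88` to `test.test`.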

************************

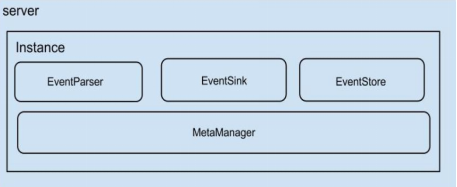

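canal architecture

server: one canal server, corresponding to one JVM process;

instance: one data queue; it connects to MySQL, processes and transforms the data, and serves it to subscribing clients;

one server can host multiple instances and so handle several MySQL data sources at the same time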

******************

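Components of an instance

eventParser: data-source access; acts as a slave, talks to MySQL, and parses the data

eventSink: processes and transforms the parsed data

eventStore: data storage (currently in memory; local file storage is planned)

MetaDataManager: metadata manager that stores subscription information and related data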

******************

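eventParser design

When canal server connects to MySQL, it first looks up the position where the previous parse stopped; on first startup it uses the configured initial position or, if none is configured, the master's current position

canal server sends the binlog_dump command, which writes: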

0. write command number

1. write 4 bytes bin-log position to start at

2. write 2 bytes bin-log flags

3. write 4 bytes server id of the slave

4. write bin-log file name
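The five fields above are the payload of MySQL's `COM_BINLOG_DUMP` command (command byte `0x12`; multi-byte integers are little-endian). A minimal sketch of building that payload with `java.nio.ByteBuffer`. The class and method names are hypothetical, this is not canal's own packet class, and the 4-byte header (3-byte length plus 1-byte sequence id) that frames every MySQL packet is deliberately left out:

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

public class BinlogDumpPayload {

    static final byte COM_BINLOG_DUMP = 0x12;

    // Layout: [1 byte command][4 bytes position][2 bytes flags][4 bytes server id][file name]
    static byte[] build(long binlogPosition, int flags, long slaveServerId, String binlogFileName) {
        byte[] name = binlogFileName.getBytes(StandardCharsets.US_ASCII);
        ByteBuffer buf = ByteBuffer.allocate(1 + 4 + 2 + 4 + name.length)
                                   .order(ByteOrder.LITTLE_ENDIAN);   // MySQL integers are little-endian
        buf.put(COM_BINLOG_DUMP);             // 0. command number
        buf.putInt((int) binlogPosition);     // 1. 4-byte position to start at (low 32 bits)
        buf.putShort((short) flags);          // 2. 2-byte flags
        buf.putInt((int) slaveServerId);      // 3. 4-byte server id of the (fake) slave
        buf.put(name);                        // 4. binlog file name, fills the rest of the payload
        return buf.array();
    }

    public static void main(String[] args) {
        // Position 4 is the first event after the binlog magic header; server id 1234 is an arbitrary example
        // that must differ from the master's server id (1 in the listing above).
        byte[] payload = build(4, 0, 1234, "13b18152a242-bin.000002");
        System.out.println("payload length = " + payload.length);
    }
}
```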

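MySQL pushes binlog data to canal server;

canal server parses the binlog with binlog_parser, adds canal-specific information, and passes the result to eventSink;

eventSink processes and transforms the data and hands it to eventStore for storage (storing is a blocking operation: it waits while the store has no free space)

after a successful store, the binary log position is recorded periodically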
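Because the position is recorded only after the store succeeds, and only periodically, a restart may replay up to one period's worth of already-stored events (at-least-once delivery). A minimal sketch of that pattern, assuming positions are plain `long` offsets (real binlog positions also carry the file name). `PeriodicPositionRecorder`, `onStored`, and `flushIfDue` are hypothetical names, and the 1000 ms period only mirrors the `canal.file.flush.period:1000` and `canal.zookeeper.flush.period:1000` defaults in the instance configs; this is not canal's flushing code:

```java
import java.util.function.LongConsumer;

public class PeriodicPositionRecorder {

    private final long periodMillis;
    private final LongConsumer durableWriter;   // hypothetical sink, e.g. a file or ZooKeeper writer
    private long latestStoredPosition = -1;     // advanced only after eventStore accepted a batch
    private long lastFlushedPosition = -1;
    private long lastFlushTime;

    PeriodicPositionRecorder(long periodMillis, long startTimeMillis, LongConsumer durableWriter) {
        this.periodMillis = periodMillis;
        this.lastFlushTime = startTimeMillis;
        this.durableWriter = durableWriter;
    }

    // Call after the batch ending at `position` was stored successfully.
    void onStored(long position) {
        latestStoredPosition = position;
    }

    // Writes the latest stored position at most once per period; returns true if it wrote.
    boolean flushIfDue(long nowMillis) {
        if (nowMillis - lastFlushTime < periodMillis || latestStoredPosition == lastFlushedPosition) {
            return false;
        }
        durableWriter.accept(latestStoredPosition);
        lastFlushedPosition = latestStoredPosition;
        lastFlushTime = nowMillis;
        return true;
    }

    public static void main(String[] args) {
        PeriodicPositionRecorder recorder = new PeriodicPositionRecorder(1000, 0,
                pos -> System.out.println("persisted position " + pos));
        recorder.onStored(310);
        recorder.flushIfDue(500);    // less than one period since start: nothing written
        recorder.onStored(436);
        recorder.flushIfDue(1000);   // one period elapsed: writes 436
    }
}
```

A shorter period narrows the replay window at the cost of more writes to the durable store.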

******************

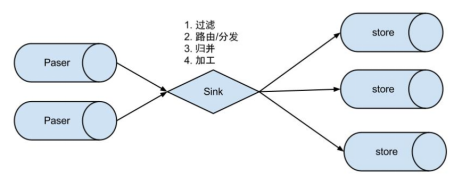

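eventSink design

Filtering: supports wildcard filters to select specific databases, tables, and so on

Routing: one MySQL instance can hold several databases; data is routed and stored per database

Merging: when a large business dataset is split across sharded databases and tables, canal can collect the scattered data into one place

Processing: extra processing before the data is stored, for example: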

sql: "select a.id as _id, a.name as _name, a.role_id as _role_id, b.role_name as _role_name,

a.c_time as _c_time, c.labels as _labels from user a

left join role b on b.id=a.role_id

left join (select user_id, group_concat(label order by id desc separator ';') as labels from label

group by user_id) c on c.user_id=a.id"
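In the XML configuration below, filtering is expressed as regular expressions over `schema.table` names (`canal.instance.filter.regex`, default `.*\..*`, i.e. every table of every schema). A minimal sketch of that kind of matching with `java.util.regex`, assuming a comma-separated list of patterns that must match the whole `schema.table` string. `SchemaTableFilter` is a hypothetical class; it approximates, and is not, canal's `AviaterRegexFilter`, whose exact semantics (case handling, caching) may differ:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

public class SchemaTableFilter {

    private final List<Pattern> patterns = new ArrayList<>();

    // e.g. ".*\\..*" (everything) or "test\\..*,shop\\.order_.*"
    SchemaTableFilter(String commaSeparatedRegex) {
        for (String part : commaSeparatedRegex.split(",")) {
            if (!part.isBlank()) {
                patterns.add(Pattern.compile(part.trim()));
            }
        }
    }

    // True if any pattern matches the full "schema.table" name.
    boolean accepts(String schema, String table) {
        String name = schema + "." + table;
        for (Pattern p : patterns) {
            if (p.matcher(name).matches()) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        SchemaTableFilter filter = new SchemaTableFilter("test\\..*,shop\\.order_.*");
        System.out.println(filter.accepts("test", "test"));        // any table in schema test
        System.out.println(filter.accepts("shop", "order_2021"));  // one shard of a split order table
        System.out.println(filter.accepts("shop", "user"));        // not subscribed
    }
}
```

A pattern such as `shop\.order_.*` is also how the sharded tables mentioned under merging can be selected with one rule.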

******************

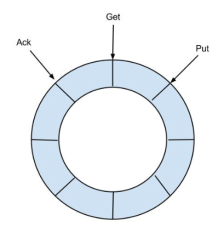

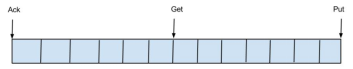

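eventStore design

eventStore currently supports only in-memory storage; local file storage and mixed storage are planned

Data is kept in an in-memory ring buffer whose size is fixed at a power of two, 2^n

put cursor: position of the last write

get cursor: position of the last data fetched by a client

ack cursor: position of the last data a client consumed successfully and acknowledged

Note: a cursor is mapped to a slot with a bitwise AND, cursor & (size - 1), instead of a modulo; this equals cursor % size only when size is a power of two, which is why ringSize is 2^n

Unrolling the ring buffer into a straight line, put, get, and ack satisfy:

ack <= get <= put

put - ack <= ringBuffer.size

Note: the put, get, and ack cursors are recorded as long values, so they can keep increasing monotonically;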
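The cursor rules above can be sketched as a small, single-threaded ring buffer. This is a minimal sketch under stated assumptions, not canal's `MemoryEventStoreWithBuffer`: the class name `CursorRingBuffer` is hypothetical, events are plain strings, cursors start at -1, and a full buffer makes `tryPut` return `false` instead of blocking. It keeps the three cursors as `long` values, maps a cursor to a slot with `cursor & (size - 1)`, and enforces `ack <= get <= put` and `put - ack <= size`:

```java
import java.util.ArrayList;
import java.util.List;

public class CursorRingBuffer {

    private final String[] slots;
    private final int indexMask;   // size - 1; equals a modulo only because size is a power of 2
    private long put = -1;         // last written sequence
    private long get = -1;         // last sequence handed to a client
    private long ack = -1;         // last sequence acknowledged by a client

    CursorRingBuffer(int size) {
        if (Integer.bitCount(size) != 1) {
            throw new IllegalArgumentException("size must be a power of 2");
        }
        slots = new String[size];
        indexMask = size - 1;
    }

    // Refuses to overwrite un-acked slots: keeps put - ack <= size.
    boolean tryPut(String event) {
        if (put - ack >= slots.length) {
            return false;
        }
        put++;
        slots[(int) (put & indexMask)] = event;
        return true;
    }

    // Hands out up to batchSize events after the get cursor; they stay in the buffer until acked.
    List<String> get(int batchSize) {
        List<String> batch = new ArrayList<>();
        while (get < put && batch.size() < batchSize) {
            get++;
            batch.add(slots[(int) (get & indexMask)]);
        }
        return batch;
    }

    // Acknowledges everything up to and including `sequence`, freeing those slots.
    void ack(long sequence) {
        if (sequence <= ack || sequence > get) {
            throw new IllegalStateException("ack must move forward and must not pass get");
        }
        ack = sequence;
    }

    // Rewinds get to ack so un-acked events are delivered again.
    void rollback() {
        get = ack;
    }

    long getCursor() {
        return get;
    }

    public static void main(String[] args) {
        CursorRingBuffer rb = new CursorRingBuffer(4);
        for (String e : List.of("e0", "e1", "e2", "e3")) {
            rb.tryPut(e);
        }
        System.out.println(rb.tryPut("e4"));   // full: put - ack == 4
        System.out.println(rb.get(2));         // first batch
        rb.ack(rb.getCursor());                // frees the two consumed slots
        System.out.println(rb.tryPut("e4"));   // fits now, reusing slot 0
    }
}
```

Slots are reused only after `ack` has passed them, so a client that fetches but never acknowledges eventually stops new writes; canal turns that condition into a blocking put.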

******************

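instance configuration management

manager mode: configure canal parameters through a web UI

spring mode: configure canal parameters through local Spring XML and properties files

The instance XML files create the CanalInstanceWithSpring instance object

memory-instance.xml: keeps the parser log position and the cursors in memory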

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:tx="http://www.springframework.org/schema/tx"

xmlns:aop="http://www.springframework.org/schema/aop" xmlns:lang="http://www.springframework.org/schema/lang"

xmlns:context="http://www.springframework.org/schema/context"

xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans-2.0.xsd

http://www.springframework.org/schema/aop http://www.springframework.org/schema/aop/spring-aop-2.0.xsd

http://www.springframework.org/schema/lang http://www.springframework.org/schema/lang/spring-lang-2.0.xsd

http://www.springframework.org/schema/tx http://www.springframework.org/schema/tx/spring-tx-2.0.xsd

http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context-2.5.xsd"

default-autowire="byName">

<import resource="classpath:spring/base-instance.xml" />

<!-- create the CanalInstanceWithSpring instance -->

<bean id="instance" class="com.alibaba.otter.canal.instance.spring.CanalInstanceWithSpring">

<property name="destination" value="${canal.instance.destination}" />

<property name="eventParser">

<ref bean="eventParser" />

</property>

<property name="eventSink">

<ref bean="eventSink" />

</property>

<property name="eventStore">

<ref bean="eventStore" />

</property>

<property name="metaManager">

<ref bean="metaManager" />

</property>

<property name="alarmHandler">

<ref bean="alarmHandler" />

</property>

<property name="mqConfig">

<ref bean="mqConfig" />

</property>

</bean>

<!-- alarm handler -->

<bean id="alarmHandler" class="com.alibaba.otter.canal.common.alarm.LogAlarmHandler" />

<!-- the metadata manager keeps metadata in memory -->

<bean id="metaManager" class="com.alibaba.otter.canal.meta.MemoryMetaManager" />

<!-- eventStore keeps data in memory -->

<bean id="eventStore" class="com.alibaba.otter.canal.store.memory.MemoryEventStoreWithBuffer">

<property name="bufferSize" value="${canal.instance.memory.buffer.size:16384}" />

<property name="bufferMemUnit" value="${canal.instance.memory.buffer.memunit:1024}" />

<property name="batchMode" value="${canal.instance.memory.batch.mode:MEMSIZE}" />

<property name="ddlIsolation" value="${canal.instance.get.ddl.isolation:false}" />

<property name="raw" value="${canal.instance.memory.rawEntry:true}" />

</bean>

<bean id="eventSink" class="com.alibaba.otter.canal.sink.entry.EntryEventSink">

<property name="eventStore" ref="eventStore" />

<property name="filterTransactionEntry" value="${canal.instance.filter.transaction.entry:false}"/>

</bean>

<bean id="eventParser" parent="baseEventParser">

<property name="destination" value="${canal.instance.destination}" />

<property name="slaveId" value="${canal.instance.mysql.slaveId:0}" />

<!-- heartbeat settings -->

<property name="detectingEnable" value="${canal.instance.detecting.enable:false}" />

<property name="detectingSQL" value="${canal.instance.detecting.sql}" />

<property name="detectingIntervalInSeconds" value="${canal.instance.detecting.interval.time:5}" />

<property name="haController">

<bean class="com.alibaba.otter.canal.parse.ha.HeartBeatHAController">

<property name="detectingRetryTimes" value="${canal.instance.detecting.retry.threshold:3}" />

<property name="switchEnable" value="${canal.instance.detecting.heartbeatHaEnable:false}" />

</bean>

</property>

<property name="alarmHandler" ref="alarmHandler" />

<!-- parse-time filtering -->

<property name="eventFilter">

<bean class="com.alibaba.otter.canal.filter.aviater.AviaterRegexFilter" >

<constructor-arg index="0" value="${canal.instance.filter.regex:.*\..*}" />

</bean>

</property>

<property name="eventBlackFilter">

<bean class="com.alibaba.otter.canal.filter.aviater.AviaterRegexFilter" >

<constructor-arg index="0" value="${canal.instance.filter.black.regex:}" />

<constructor-arg index="1" value="false" />

</bean>

</property>

<property name="fieldFilter" value="${canal.instance.filter.field}" />

<property name="fieldBlackFilter" value="${canal.instance.filter.black.field}" />

<!-- maximum transaction size to parse; larger transactions are split into several transactions for delivery -->

<property name="transactionSize" value="${canal.instance.transaction.size:1024}" />

<!-- network connection settings -->

<property name="receiveBufferSize" value="${canal.instance.network.receiveBufferSize:16384}" />

<property name="sendBufferSize" value="${canal.instance.network.sendBufferSize:16384}" />

<property name="defaultConnectionTimeoutInSeconds" value="${canal.instance.network.soTimeout:30}" />

<!-- parsing charset -->

<!-- property name="connectionCharsetNumber" value="${canal.instance.connectionCharsetNumber:33}" /-->

<property name="connectionCharset" value="${canal.instance.connectionCharset:UTF-8}" />

<!-- after the first startup, parsing resumes from the last position recorded in memory -->

<!-- parse position tracking -->

<property name="logPositionManager">

<bean class="com.alibaba.otter.canal.parse.index.MemoryLogPositionManager" />

</property>

<!-- how far to fall back (in seconds) when failing over -->

<property name="fallbackIntervalInSeconds" value="${canal.instance.fallbackIntervalInSeconds:60}" />

<!-- connection info of the database to parse -->

<property name="masterInfo">

<bean class="com.alibaba.otter.canal.parse.support.AuthenticationInfo" init-method="initPwd">

<property name="address" value="${canal.instance.master.address}" />

<property name="username" value="${canal.instance.dbUsername:retl}" />

<property name="password" value="${canal.instance.dbPassword:retl}" />

<property name="pwdPublicKey" value="${canal.instance.pwdPublicKey:retl}" />

<property name="enableDruid" value="${canal.instance.enableDruid:false}" />

<property name="defaultDatabaseName" value="${canal.instance.defaultDatabaseName:}" />

</bean>

</property>

<property name="standbyInfo">

<bean class="com.alibaba.otter.canal.parse.support.AuthenticationInfo" init-method="initPwd">

<property name="address" value="${canal.instance.standby.address}" />

<property name="username" value="${canal.instance.dbUsername:retl}" />

<property name="password" value="${canal.instance.dbPassword:retl}" />

<property name="pwdPublicKey" value="${canal.instance.pwdPublicKey:retl}" />

<property name="enableDruid" value="${canal.instance.enableDruid:false}" />

<property name="defaultDatabaseName" value="${canal.instance.defaultDatabaseName:}" />

</bean>

</property>

<!-- on first startup, eventParser starts from the position given in the config properties -->

<!-- initial parse position -->

<property name="masterPosition">

<bean class="com.alibaba.otter.canal.protocol.position.EntryPosition">

<property name="journalName" value="${canal.instance.master.journal.name}" />

<property name="position" value="${canal.instance.master.position}" />

<property name="timestamp" value="${canal.instance.master.timestamp}" />

<property name="gtid" value="${canal.instance.master.gtid}" />

</bean>

</property>

<property name="standbyPosition">

<bean class="com.alibaba.otter.canal.protocol.position.EntryPosition">

<property name="journalName" value="${canal.instance.standby.journal.name}" />

<property name="position" value="${canal.instance.standby.position}" />

<property name="timestamp" value="${canal.instance.standby.timestamp}" />

<property name="gtid" value="${canal.instance.standby.gtid}" />

</bean>

</property>

<property name="filterQueryDml" value="${canal.instance.filter.query.dml:false}" />

<property name="filterQueryDcl" value="${canal.instance.filter.query.dcl:false}" />

<property name="filterQueryDdl" value="${canal.instance.filter.query.ddl:false}" />

<property name="useDruidDdlFilter" value="${canal.instance.filter.druid.ddl:true}" />

<property name="filterDmlInsert" value="${canal.instance.filter.dml.insert:false}" />

<property name="filterDmlUpdate" value="${canal.instance.filter.dml.update:false}" />

<property name="filterDmlDelete" value="${canal.instance.filter.dml.delete:false}" />

<property name="filterRows" value="${canal.instance.filter.rows:false}" />

<property name="filterTableError" value="${canal.instance.filter.table.error:false}" />

<property name="supportBinlogFormats" value="${canal.instance.binlog.format}" />

<property name="supportBinlogImages" value="${canal.instance.binlog.image}" />

<!-- table schema (TSDB) settings -->

<property name="enableTsdb" value="${canal.instance.tsdb.enable:false}"/>

<property name="tsdbSpringXml" value="${canal.instance.tsdb.spring.xml:}"/>

<property name="tsdbSnapshotInterval" value="${canal.instance.tsdb.snapshot.interval:24}" />

<property name="tsdbSnapshotExpire" value="${canal.instance.tsdb.snapshot.expire:360}" />

<!-- whether GTID mode is enabled -->

<property name="isGTIDMode" value="${canal.instance.gtidon:false}"/>

<!-- parallel parser -->

<property name="parallel" value="${canal.instance.parser.parallel:true}" />

<property name="parallelThreadSize" value="${canal.instance.parser.parallelThreadSize}" />

<property name="parallelBufferSize" value="${canal.instance.parser.parallelBufferSize:256}" />

<property name="autoResetLatestPosMode" value="${canal.auto.reset.latest.pos.mode:false}" />

</bean>

<bean id="mqConfig" class="com.alibaba.otter.canal.instance.core.CanalMQConfig">

<property name="topic" value="${canal.mq.topic}" />

<property name="dynamicTopic" value="${canal.mq.dynamicTopic}" />

<property name="partition" value="${canal.mq.partition}" />

<property name="partitionsNum" value="${canal.mq.partitionsNum}" />

<property name="partitionHash" value="${canal.mq.partitionHash}" />

<property name="dynamicTopicPartitionNum" value="${canal.mq.dynamicTopicPartitionNum}" />

</bean>

</beans>

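file-instance.xml: keeps the parser log position and cursor information in a mix of memory and local files

<!-- remaining beans omitted -->

<!-- metaManager stores metadata in local files -->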

<bean id="metaManager" class="com.alibaba.otter.canal.meta.FileMixedMetaManager">

<property name="dataDir" value="${canal.file.data.dir:../conf}" />

<property name="period" value="${canal.file.flush.period:1000}" />

</bean>

<!-- eventStore keeps data in memory -->

<bean id="eventStore" class="com.alibaba.otter.canal.store.memory.MemoryEventStoreWithBuffer">

<property name="bufferSize" value="${canal.instance.memory.buffer.size:16384}" />

<property name="bufferMemUnit" value="${canal.instance.memory.buffer.memunit:1024}" />

<property name="batchMode" value="${canal.instance.memory.batch.mode:MEMSIZE}" />

<property name="ddlIsolation" value="${canal.instance.get.ddl.isolation:false}" />

<property name="raw" value="${canal.instance.memory.rawEntry:true}" />

</bean>

<bean id="eventSink" class="com.alibaba.otter.canal.sink.entry.EntryEventSink">

<property name="eventStore" ref="eventStore" />

<property name="filterTransactionEntry" value="${canal.instance.filter.transaction.entry:false}"/>

</bean>

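<!-- eventParser instance -->

<bean id="eventParser" parent="baseEventParser">

<!-- look up the parse position in MemoryLogPositionManager first -->

<!-- if it is not found there, get it from FileMixedMetaManager (through MetaLogPositionManager) -->

<!-- parse position tracking -->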

<property name="logPositionManager">

<bean class="com.alibaba.otter.canal.parse.index.FailbackLogPositionManager">

<constructor-arg>

<bean class="com.alibaba.otter.canal.parse.index.MemoryLogPositionManager" />

</constructor-arg>

<constructor-arg>

<bean class="com.alibaba.otter.canal.parse.index.MetaLogPositionManager">

<constructor-arg ref="metaManager"/>

</bean>

</constructor-arg>

</bean>

</property>

<!-- log position eventParser starts from on first startup -->

<!-- initial parse position -->

<property name="masterPosition">

<bean class="com.alibaba.otter.canal.protocol.position.EntryPosition">

<property name="journalName" value="${canal.instance.master.journal.name}" />

<property name="position" value="${canal.instance.master.position}" />

<property name="timestamp" value="${canal.instance.master.timestamp}" />

<property name="gtid" value="${canal.instance.master.gtid}" />

</bean>

</property>

<property name="standbyPosition">

<bean class="com.alibaba.otter.canal.protocol.position.EntryPosition">

<property name="journalName" value="${canal.instance.standby.journal.name}" />

<property name="position" value="${canal.instance.standby.position}" />

<property name="timestamp" value="${canal.instance.standby.timestamp}" />

<property name="gtid" value="${canal.instance.standby.gtid}" />

</bean>

</property>

</bean>
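The two-level lookup in the comments above (memory first, then the `MetaLogPositionManager` backed by `FileMixedMetaManager`) can be sketched as follows. This is a minimal sketch under assumptions: the `PositionStore` interface, the `FailbackPositionStore` class, and the map-based stores are hypothetical stand-ins rather than canal's `FailbackLogPositionManager` API, and positions are reduced to plain strings (Java 9+ for `Optional.or`):

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical minimal interface: latest parsed position for a destination, if known.
interface PositionStore {
    Optional<String> latest(String destination);
}

// Asks the primary store first and falls back to the secondary only when the primary has nothing.
class FailbackPositionStore implements PositionStore {
    private final PositionStore primary;
    private final PositionStore secondary;

    FailbackPositionStore(PositionStore primary, PositionStore secondary) {
        this.primary = primary;
        this.secondary = secondary;
    }

    @Override
    public Optional<String> latest(String destination) {
        return primary.latest(destination).or(() -> secondary.latest(destination));
    }
}

public class FailbackDemo {
    public static void main(String[] args) {
        Map<String, String> memory = new HashMap<>();                         // empty after a restart
        Map<String, String> persisted = Map.of("example", "bin.000002:436");  // assumed durable copy

        PositionStore store = new FailbackPositionStore(
                destination -> Optional.ofNullable(memory.get(destination)),
                destination -> Optional.ofNullable(persisted.get(destination)));

        System.out.println(store.latest("example"));   // memory is empty: falls back to the persisted value
        memory.put("example", "bin.000002:1024");
        System.out.println(store.latest("example"));   // memory now answers first
    }
}
```

The fast in-memory answer covers normal operation; the persisted one only matters right after a restart, when memory is empty.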

default-instance.xml: keeps the log position and cursor information in a mix of memory and ZooKeeper

<!-- remaining beans omitted -->

<!-- ZooKeeper client -->

<bean id="zkClientx" class="org.springframework.beans.factory.config.MethodInvokingFactoryBean" >

<property name="targetClass" value="com.alibaba.otter.canal.common.zookeeper.ZkClientx" />

<property name="targetMethod" value="getZkClient" />

<property name="arguments">

<list>

<value>${canal.zkServers:127.0.0.1:2181}</value>

</list>

</property>

</bean>

<!-- store metadata in ZooKeeper (flushed periodically) -->

<bean id="metaManager" class="com.alibaba.otter.canal.meta.PeriodMixedMetaManager">

<property name="zooKeeperMetaManager">

<bean class="com.alibaba.otter.canal.meta.ZooKeeperMetaManager">

<property name="zkClientx" ref="zkClientx" />

</bean>

</property>

<property name="period" value="${canal.zookeeper.flush.period:1000}" />

</bean>

<!-- eventStore keeps data in memory -->

<bean id="eventStore" class="com.alibaba.otter.canal.store.memory.MemoryEventStoreWithBuffer">

<property name="bufferSize" value="${canal.instance.memory.buffer.size:16384}" />

<property name="bufferMemUnit" value="${canal.instance.memory.buffer.memunit:1024}" />

<property name="batchMode" value="${canal.instance.memory.batch.mode:MEMSIZE}" />

<property name="ddlIsolation" value="${canal.instance.get.ddl.isolation:false}" />

<property name="raw" value="${canal.instance.memory.rawEntry:true}" />

</bean>

<bean id="eventSink" class="com.alibaba.otter.canal.sink.entry.EntryEventSink">

<property name="eventStore" ref="eventStore" />

<property name="filterTransactionEntry" value="${canal.instance.filter.transaction.entry:false}"/>

</bean>

<!-- eventParser instance -->

<bean id="eventParser" parent="baseEventParser" >

<!-- look up the parser log position in memory first -->

<!-- if it is not found there, look it up in ZooKeeper -->

<!-- parse position tracking -->

<property name="logPositionManager">

<bean class="com.alibaba.otter.canal.parse.index.FailbackLogPositionManager">

<constructor-arg>

<bean class="com.alibaba.otter.canal.parse.index.MemoryLogPositionManager" />

</constructor-arg>

<constructor-arg>

<bean class="com.alibaba.otter.canal.parse.index.MetaLogPositionManager">

<constructor-arg ref="metaManager"/>

</bean>

</constructor-arg>

</bean>

</property>

<!-- log position to parse from on first startup -->

<!-- initial parse position -->

<property name="masterPosition">

<bean class="com.alibaba.otter.canal.protocol.position.EntryPosition">

<property name="journalName" value="${canal.instance.master.journal.name}" />

<property name="position" value="${canal.instance.master.position}" />

<property name="timestamp" value="${canal.instance.master.timestamp}" />

<property name="gtid" value="${canal.instance.master.gtid}" />

</bean>

</property>

<property name="standbyPosition">

<bean class="com.alibaba.otter.canal.protocol.position.EntryPosition">

<property name="journalName" value="${canal.instance.standby.journal.name}" />

<property name="position" value="${canal.instance.standby.position}" />

<property name="timestamp" value="${canal.instance.standby.timestamp}" />

<property name="gtid" value="${canal.instance.standby.gtid}" />

</bean>

</property>

</bean>

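group-instance.xml: keeps metadata in memory and merges several parsers into one parser (n -> 1)

<!-- remaining beans omitted -->

<!-- keep metadata in memory -->

<bean id="metaManager" class="com.alibaba.otter.canal.meta.MemoryMetaManager" />

<!-- eventStore keeps data in memory -->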

<bean id="eventStore" class="com.alibaba.otter.canal.store.memory.MemoryEventStoreWithBuffer">

<property name="bufferSize" value="${canal.instance.memory.buffer.size:16384}" />

<property name="bufferMemUnit" value="${canal.instance.memory.buffer.memunit:1024}" />

<property name="batchMode" value="${canal.instance.memory.batch.mode:MEMSIZE}" />

<property name="ddlIsolation" value="${canal.instance.get.ddl.isolation:false}" />

<property name="raw" value="${canal.instance.memory.rawEntry:true}" />

</bean>

<bean id="eventSink" class="com.alibaba.otter.canal.sink.entry.EntryEventSink">

<property name="eventStore" ref="eventStore" />

<property name="filterTransactionEntry" value="${canal.instance.filter.transaction.entry:false}"/>

</bean>

<!-- eventParser merges eventParser1 and eventParser2 into one parser -->

<bean id="eventParser" class="com.alibaba.otter.canal.parse.inbound.group.GroupEventParser">

<property name="eventParsers">

<list>

<ref bean="eventParser1" />

<ref bean="eventParser2" />

</list>

</property>

</bean>

<!-- eventParser1 -->

<bean id="eventParser1" parent="baseEventParser">

<!-- keep the log position in memory -->

<!-- parse position tracking -->

<property name="logPositionManager">

<bean class="com.alibaba.otter.canal.parse.index.MemoryLogPositionManager" />

</property>

<!-- log position read on first startup -->

<!-- initial parse position -->

<property name="masterPosition">

<bean class="com.alibaba.otter.canal.protocol.position.EntryPosition">

<property name="journalName" value="${canal.instance.master1.journal.name}" />

<property name="position" value="${canal.instance.master1.position}" />

<property name="timestamp" value="${canal.instance.master1.timestamp}" />

<property name="gtid" value="${canal.instance.master1.gtid}" />

</bean>

</property>

<property name="standbyPosition">

<bean class="com.alibaba.otter.canal.protocol.position.EntryPosition">

<property name="journalName" value="${canal.instance.standby1.journal.name}" />

<property name="position" value="${canal.instance.standby1.position}" />

<property name="timestamp" value="${canal.instance.standby1.timestamp}" />

<property name="gtid" value="${canal.instance.standby1.gtid}" />

</bean>

</property>

</bean>

<!-- eventParser2 -->

<bean id="eventParser2" parent="baseEventParser">

<!-- keep the log position in memory -->

<!-- parse position tracking -->

<property name="logPositionManager">

<bean class="com.alibaba.otter.canal.parse.index.MemoryLogPositionManager" />

</property>

<!-- log position read on first startup -->

<!-- initial parse position -->

<property name="masterPosition">

<bean class="com.alibaba.otter.canal.protocol.position.EntryPosition">

<property name="journalName" value="${canal.instance.master2.journal.name}" />

<property name="position" value="${canal.instance.master2.position}" />

<property name="timestamp" value="${canal.instance.master2.timestamp}" />

<property name="gtid" value="${canal.instance.master2.gtid}" />

</bean>

</property>

<property name="standbyPosition">

<bean class="com.alibaba.otter.canal.protocol.position.EntryPosition">

<property name="journalName" value="${canal.instance.standby2.journal.name}" />

<property name="position" value="${canal.instance.standby2.position}" />

<property name="timestamp" value="${canal.instance.standby2.timestamp}" />

<property name="gtid" value="${canal.instance.standby2.gtid}" />

</bean>

</property>

</bean>

******************

Related classes and interfaces

MemoryMetaManager: keeps cursor metadata (put, get, and ack cursors) in memory

public class MemoryMetaManager extends AbstractCanalLifeCycle implements CanalMetaManager {

protected Map<String, List<ClientIdentity>> destinations;

protected Map<ClientIdentity, MemoryMetaManager.MemoryClientIdentityBatch> batches;

protected Map<ClientIdentity, Position> cursors;

public MemoryMetaManager() {

}

// ... (remaining members omitted)

}

MemoryLogPositionManager: keeps the log positions parsed by eventParser in memory

public class MemoryLogPositionManager extends AbstractLogPositionManager {

private Map<String, LogPosition> positions;

public MemoryLogPositionManager() {

}

// ... (remaining members omitted)

}

CanalInstanceWithSpring

public class CanalInstanceWithSpring extends AbstractCanalInstance {

private static final Logger logger = LoggerFactory.getLogger(CanalInstanceWithSpring.class);

public CanalInstanceWithSpring() {

}

public void start() {

logger.info("start CannalInstance for {}-{} ", new Object[]{1, this.destination});

super.start();

}

// setters (bodies omitted in this excerpt)

public void setEventParser(CanalEventParser eventParser) { /* ... */ }

public void setEventSink(CanalEventSink<List<Entry>> eventSink) { /* ... */ }

public void setEventStore(CanalEventStore<Event> eventStore) { /* ... */ }

public void setDestination(String destination) { /* ... */ }

public void setMetaManager(CanalMetaManager metaManager) { /* ... */ }

public void setAlarmHandler(CanalAlarmHandler alarmHandler) { /* ... */ }

public void setMqConfig(CanalMQConfig mqConfig) { /* ... */ }

}

AbstractCanalInstance

public class AbstractCanalInstance extends AbstractCanalLifeCycle implements CanalInstance {

private static final Logger logger = LoggerFactory.getLogger(AbstractCanalInstance.class);

protected Long canalId;

protected String destination;

protected CanalEventStore<Event> eventStore;

protected CanalEventParser eventParser;

protected CanalEventSink<List<Entry>> eventSink;

protected CanalMetaManager metaManager;

protected CanalAlarmHandler alarmHandler;

protected CanalMQConfig mqConfig;

public AbstractCanalInstance() {

}

// ... (remaining members omitted)

}

************************

canal client subscription and consumption

get: fetches a batch without acking it, acks it right away, then returns it

public class SimpleCanalConnector implements CanalConnector {

// get: fetch without ack, ack immediately, then return the data

public Message get(int batchSize) throws CanalClientException {

return this.get(batchSize, (Long)null, (TimeUnit)null);

}

public Message get(int batchSize, Long timeout, TimeUnit unit) throws CanalClientException {

Message message = this.getWithoutAck(batchSize, timeout, unit);

this.ack(message.getId()); // ack first, then return the data

return message;

}

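// ... (remaining methods omitted)

}

Note: ClusterCanalConnector delegates to SimpleCanalConnector to fetch data.

getWithoutAck: returns the data directly; the client acks it later, asynchronously

rollback(batchId): rolls back the batch so that it is fetched again

ack(batchId): the client consumed the batch successfully and tells the server it may delete the data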
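Put together, these calls give the usual consumption loop: fetch with `getWithoutAck`, process, then `ack` on success or `rollback` on failure so the batch is delivered again. The sketch below is self-contained and therefore does not use the real canal client: `Batch`, `BatchSource`, and `InMemorySource` are hypothetical types that only mirror the `CanalConnector` method names (Java 16+ for records), the toy source supports one outstanding batch at a time, and `id == -1` meaning "no data" is an assumption of this sketch:

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical batch type; id == -1 stands for "no data available" in this sketch.
record Batch(long id, List<String> entries) {}

// Hypothetical interface that only mirrors the CanalConnector method names used below.
interface BatchSource {
    Batch getWithoutAck(int batchSize);
    void ack(long batchId);
    void rollback(long batchId);
}

// Toy in-memory source supporting one outstanding batch; rolled-back events are redelivered.
class InMemorySource implements BatchSource {
    private final Deque<String> pending = new ArrayDeque<>();
    private final Deque<String> inFlight = new ArrayDeque<>();
    private long nextId = 1;

    InMemorySource(List<String> events) {
        pending.addAll(events);
    }

    public Batch getWithoutAck(int batchSize) {
        List<String> out = new ArrayList<>();
        while (!pending.isEmpty() && out.size() < batchSize) {
            String e = pending.poll();
            inFlight.add(e);
            out.add(e);
        }
        return out.isEmpty() ? new Batch(-1, out) : new Batch(nextId++, out);
    }

    public void ack(long batchId) {
        inFlight.clear();                                 // consumed: safe to discard
    }

    public void rollback(long batchId) {
        while (!inFlight.isEmpty()) {
            pending.addFirst(inFlight.pollLast());        // put back in original order
        }
    }
}

public class ConsumeLoop {

    // getWithoutAck -> process -> ack, or rollback on failure; returns once no data is left.
    static void drain(BatchSource source, int batchSize, Consumer<String> handler) {
        while (true) {
            Batch batch = source.getWithoutAck(batchSize);
            if (batch.id() == -1) {
                return;                                   // a long-running client would sleep and poll again
            }
            try {
                batch.entries().forEach(handler);
                source.ack(batch.id());                   // success: the server may delete the batch
            } catch (RuntimeException e) {
                source.rollback(batch.id());              // failure: the batch will be fetched again
                throw e;
            }
        }
    }

    public static void main(String[] args) {
        BatchSource source = new InMemorySource(List.of("e1", "e2", "e3"));
        drain(source, 2, e -> System.out.println("consumed " + e));
    }
}
```

Because a failed batch is rolled back and redelivered as a whole, entries that were handled before the failure are seen twice, so handlers should be idempotent.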

******************

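Benefits of decoupling get and ack

Avoids waiting for a network round trip on every ack

The client can keep polling with get while earlier batches are still being processed, which increases parallelism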

******************

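cursor design

get: each get creates a mark; marks increase monotonically and are globally unique

each get fetches the data after the previous get's mark; if there is no such mark, it fetches the data after the last ack mark

ack: acks are processed in mark order; a mark cannot be skipped

after an ack, the last ack mark is set to the current mark and the current mark is removed

rollback: removes all get marks; the next get fetches data starting from the last ack mark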
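The mark rules can be sketched with a sorted map from mark id to the range of offsets it covers. This is a hypothetical, simplified model of the description above (the `MarkCursor` class and its methods are illustrative names, and positions are plain `long` offsets), not canal's `MemoryMetaManager` code:

```java
import java.util.Map;
import java.util.TreeMap;

public class MarkCursor {

    private final TreeMap<Long, long[]> marks = new TreeMap<>(); // mark id -> {first, last} offset
    private long nextMarkId = 1;    // only ever increases, so every mark id is unique
    private long lastAckEnd = -1;   // last offset covered by the most recent ack

    // Creates a mark for the next `count` (> 0) events after the newest mark, or after the last ack.
    long get(int count) {
        long start = marks.isEmpty() ? lastAckEnd + 1 : marks.lastEntry().getValue()[1] + 1;
        long markId = nextMarkId++;
        marks.put(markId, new long[] {start, start + count - 1});
        return markId;
    }

    // Only the oldest outstanding mark may be acked, so marks cannot be skipped.
    void ack(long markId) {
        Map.Entry<Long, long[]> oldest = marks.firstEntry();
        if (oldest == null || oldest.getKey() != markId) {
            throw new IllegalStateException("ack out of order: " + markId);
        }
        lastAckEnd = oldest.getValue()[1];
        marks.remove(markId);
    }

    // Drops every outstanding mark; the next get starts right after the last ack.
    void rollback() {
        marks.clear();
    }

    long[] range(long markId) {
        return marks.get(markId);
    }

    long lastAckEnd() {
        return lastAckEnd;
    }

    public static void main(String[] args) {
        MarkCursor cursor = new MarkCursor();
        long first = cursor.get(3);   // offsets 0..2
        cursor.get(2);                // offsets 3..4
        cursor.ack(first);            // last ack now ends at offset 2
        cursor.rollback();            // forget the un-acked mark
        long retry = cursor.get(2);   // starts again at offset 3
        System.out.println(java.util.Arrays.toString(cursor.range(retry)));
    }
}
```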

******************

Related classes and interfaces

CanalConnector

public interface CanalConnector {

void connect() throws CanalClientException;

void disconnect() throws CanalClientException;

boolean checkValid() throws CanalClientException;

void subscribe(String var1) throws CanalClientException;

void subscribe() throws CanalClientException;

void unsubscribe() throws CanalClientException;

Message get(int var1) throws CanalClientException;

Message get(int var1, Long var2, TimeUnit var3) throws CanalClientException;

Message getWithoutAck(int var1) throws CanalClientException;

Message getWithoutAck(int var1, Long var2, TimeUnit var3) throws CanalClientException;

void ack(long var1) throws CanalClientException;

void rollback(long var1) throws CanalClientException;

void rollback() throws CanalClientException;

}

CanalConnectors: factory methods for creating client connections

public class CanalConnectors {

public CanalConnectors() {

}

public static CanalConnector newSingleConnector(SocketAddress address, String destination, String username, String password) {

// single-server connection

SimpleCanalConnector canalConnector = new SimpleCanalConnector(address, username, password, destination);

canalConnector.setSoTimeout(60000);

canalConnector.setIdleTimeout(3600000);

return canalConnector;

}

public static CanalConnector newClusterConnector(List<? extends SocketAddress> addresses, String destination, String username, String password) {

// the client connects directly to a canal server cluster; supports canal server failover

ClusterCanalConnector canalConnector = new ClusterCanalConnector(username, password, destination, new SimpleNodeAccessStrategy(addresses));

canalConnector.setSoTimeout(60000);

canalConnector.setIdleTimeout(3600000);

return canalConnector;

}

public static CanalConnector newClusterConnector(String zkServers, String destination, String username, String password) {

// the client connects to ZooKeeper and reads the canal server address from it;

// supports failover of both canal server and canal client

ClusterCanalConnector canalConnector = new ClusterCanalConnector(username, password, destination, new ClusterNodeAccessStrategy(destination, ZkClientx.getZkClient(zkServers)));

canalConnector.setSoTimeout(60000);

canalConnector.setIdleTimeout(3600000);

return canalConnector;

}

}
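`newClusterConnector(List<? extends SocketAddress>, ...)` wraps the fixed address list in `SimpleNodeAccessStrategy`, and the connector built in `ClusterCanalConnector.connect()` asks the strategy for `nextNode()` on each connection attempt. A minimal sketch of a round-robin strategy with the same two method names (`nextNode`, `currentNode`), assuming simple cycling through the list; the class `RoundRobinNodes` and the host names are hypothetical, and this is not canal's implementation:

```java
import java.net.InetSocketAddress;
import java.net.SocketAddress;
import java.util.List;

public class RoundRobinNodes {

    private final List<? extends SocketAddress> nodes;
    private int index = 0;   // node that the next call to nextNode() returns

    RoundRobinNodes(List<? extends SocketAddress> nodes) {
        if (nodes.isEmpty()) {
            throw new IllegalArgumentException("at least one canal server address is required");
        }
        this.nodes = nodes;
    }

    // Returns the node to try now and advances, wrapping around after the last one.
    synchronized SocketAddress nextNode() {
        SocketAddress node = nodes.get(index);
        index = (index + 1) % nodes.size();
        return node;
    }

    // The node most recently handed out (useful for log messages such as the retry warning).
    synchronized SocketAddress currentNode() {
        return nodes.get((index - 1 + nodes.size()) % nodes.size());
    }

    public static void main(String[] args) {
        RoundRobinNodes strategy = new RoundRobinNodes(List.of(
                InetSocketAddress.createUnresolved("canal-1", 11111),
                InetSocketAddress.createUnresolved("canal-2", 11111)));
        for (int attempt = 0; attempt < 3; attempt++) {
            System.out.println("attempt " + attempt + " -> " + strategy.nextNode());
        }
    }
}
```

With the ZooKeeper-based factory, the address list is not fixed; it comes from ZooKeeper instead, which is what allows the server side to fail over without reconfiguring clients.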

SimpleCanalConnector: single-server connection

public class SimpleCanalConnector implements CanalConnector {

// get: fetch without ack, ack immediately, then return the data

public Message get(int batchSize) throws CanalClientException {

return this.get(batchSize, (Long)null, (TimeUnit)null);

}

public Message get(int batchSize, Long timeout, TimeUnit unit) throws CanalClientException {

Message message = this.getWithoutAck(batchSize, timeout, unit);

this.ack(message.getId()); // ack first, then return the data

return message;

}

// getWithoutAck: returns the data directly, without acking it (bodies omitted in this excerpt)

public Message getWithoutAck(int batchSize) throws CanalClientException { /* ... */ }

public Message getWithoutAck(int batchSize, Long timeout, TimeUnit unit) throws CanalClientException { /* ... */ }

// ack a batch

public void ack(long batchId) throws CanalClientException { /* ... */ }

// roll back fetched data

public void rollback(long batchId) throws CanalClientException { /* ... */ }

public void rollback() throws CanalClientException { /* ... */ }

}

ClusterCanalConnector: cluster connection

public class ClusterCanalConnector implements CanalConnector {

private final Logger logger = LoggerFactory.getLogger(this.getClass());

private String username;

private String password;

private int soTimeout = 60000;

private int idleTimeout = 3600000;

private int retryTimes = 3;

private int retryInterval = 5000;

private CanalNodeAccessStrategy accessStrategy;

private SimpleCanalConnector currentConnector; // delegates to an internal SimpleCanalConnector

private String destination;

private String filter;

public ClusterCanalConnector(String username, String password, String destination, CanalNodeAccessStrategy accessStrategy) {

this.username = username;

this.password = password;

this.destination = destination;

this.accessStrategy = accessStrategy;

}

public void connect() throws CanalClientException {

while(this.currentConnector == null) {

int times = 0;

while(true) {

try {

this.currentConnector = new SimpleCanalConnector((SocketAddress)null, this.username, this.password, this.destination) {

public SocketAddress getNextAddress() {

return ClusterCanalConnector.this.accessStrategy.nextNode();

}

};

this.currentConnector.setSoTimeout(this.soTimeout);

this.currentConnector.setIdleTimeout(this.idleTimeout);

if (this.filter != null) {

this.currentConnector.setFilter(this.filter);

}

if (this.accessStrategy instanceof ClusterNodeAccessStrategy) {

this.currentConnector.setZkClientx(((ClusterNodeAccessStrategy)this.accessStrategy).getZkClient());

}

this.currentConnector.connect();

break;

} catch (Exception var5) {

this.logger.warn("failed to connect to:{} after retry {} times", this.accessStrategy.currentNode(), times);

this.currentConnector.disconnect();

this.currentConnector = null;

++times;

if (times >= this.retryTimes) {

throw new CanalClientException(var5);

}

try {

Thread.sleep((long)this.retryInterval);

} catch (InterruptedException var4) {

throw new CanalClientException(var4);

}

}

}

}

}

public Message get(int batchSize) throws CanalClientException {

int times = 0;

while(times < this.retryTimes) {

try {

Message msg = this.currentConnector.get(batchSize);

// delegates to SimpleCanalConnector.get, which fetches the data,

// acks it, and then returns it

return msg;

} catch (Throwable var4) {

this.logger.warn(String.format("something goes wrong when getting data from server:%s", this.currentConnector != null ? this.currentConnector.getAddress() : "null"), var4);

++times;

this.restart();

this.logger.info("restart the connector for next round retry.");

}

}

throw new CanalClientException("failed to fetch the data after " + times + " times retry");

}

    public Message get(int batchSize, Long timeout, TimeUnit unit) throws CanalClientException {
        int times = 0;
        while (times < this.retryTimes) {
            try {
                // same retry logic as get(batchSize); timeout/unit are passed through
                Message msg = this.currentConnector.get(batchSize, timeout, unit);
                return msg;
            } catch (Throwable e) {
                this.logger.warn(String.format("something goes wrong when getting data from server:%s", this.currentConnector != null ? this.currentConnector.getAddress() : "null"), e);
                ++times;
                this.restart();
                this.logger.info("restart the connector for next round retry.");
            }
        }
        throw new CanalClientException("failed to fetch the data after " + times + " times retry");
    }

    public Message getWithoutAck(int batchSize) throws CanalClientException {
        int times = 0;
        while (times < this.retryTimes) {
            try {
                // Delegates to SimpleCanalConnector.getWithoutAck(), which returns the data directly;
                // the caller acks (or rolls back) the batch later, asynchronously
                Message msg = this.currentConnector.getWithoutAck(batchSize);
                return msg;
            } catch (Throwable e) {
                this.logger.warn(String.format("something goes wrong when getWithoutAck data from server:%s", this.currentConnector != null ? this.currentConnector.getAddress() : "null"), e);
                ++times;
                // reconnect (possibly to another server) and retry
                this.restart();
                this.logger.info("restart the connector for next round retry.");
            }
        }
        throw new CanalClientException("failed to fetch the data after " + times + " times retry");
    }

    public Message getWithoutAck(int batchSize, Long timeout, TimeUnit unit) throws CanalClientException {
        int times = 0;
        while (times < this.retryTimes) {
            try {
                Message msg = this.currentConnector.getWithoutAck(batchSize, timeout, unit);
                return msg;
            } catch (Throwable e) {
                this.logger.warn(String.format("something goes wrong when getWithoutAck data from server:%s", this.currentConnector != null ? this.currentConnector.getAddress() : "null"), e);
                ++times;
                this.restart();
                this.logger.info("restart the connector for next round retry.");
            }
        }
        throw new CanalClientException("failed to fetch the data after " + times + " times retry");
    }

    public void rollback(long batchId) throws CanalClientException { /* body omitted */ }

    public void rollback() throws CanalClientException { /* body omitted */ }

    public void ack(long batchId) throws CanalClientException { /* body omitted */ }

Message: the data fetched by the client

public class Message implements Serializable {

    private static final long serialVersionUID = 1234034768477580009L;
    private long id;                                  // unique identifier of this batch (batch id)
    private List<Entry> entries = new ArrayList();
    private boolean raw = true;                       // true by default: entries are kept as raw ByteString
    private List<ByteString> rawEntries = new ArrayList();

    public Message(long id, List<Entry> entries) {
        this.id = id;
        this.entries = (List) (entries == null ? new ArrayList() : entries);
        this.raw = false;
    }

    public Message(long id, boolean raw, List entries) {
        this.id = id;
        // raw == true: entries are serialized ByteStrings; otherwise parsed Entry objects
        if (raw) {
            this.rawEntries = (List) (entries == null ? new ArrayList() : entries);
        } else {
            this.entries = (List) (entries == null ? new ArrayList() : entries);
        }
        this.raw = raw;
    }

    // getters and setters omitted
}
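get() acks a batch before returning it, while getWithoutAck() leaves the ack to the caller. The usual loop for the latter is: fetch a batch, process it, then ack on success or roll back on failure. Following canal's example client, this sketch assumes a batch id of -1 means "no data".

The sketch uses a hypothetical in-memory FakeConnector and a simplified Batch class that only mirror the method names above. They are not the canal client API. Unlike a real connector, this one allows only one unacked batch at a time.

```java
import java.util.ArrayList;
import java.util.List;

public class AckRollbackSketch {

    /** Simplified batch (hypothetical); id -1 means "no data". */
    static final class Batch {
        final long id;
        final List<String> entries;

        Batch(long id, List<String> entries) {
            this.id = id;
            this.entries = entries;
        }
    }

    /** Hypothetical stand-in for a connector; keeps at most one unacked batch. */
    static final class FakeConnector {
        private final List<String> events; // pretend binlog events
        private int ackedPos = 0;          // events before this index are acked
        private int fetchedPos = 0;        // events before this index were handed out
        private long nextBatchId = 1;
        private long pendingBatchId = -1;

        FakeConnector(List<String> events) {
            this.events = events;
        }

        Batch getWithoutAck(int batchSize) {
            int end = Math.min(fetchedPos + batchSize, events.size());
            if (end == fetchedPos) {
                return new Batch(-1, new ArrayList<>()); // nothing new
            }
            Batch batch = new Batch(nextBatchId++, new ArrayList<>(events.subList(fetchedPos, end)));
            fetchedPos = end;
            pendingBatchId = batch.id;
            return batch;
        }

        void ack(long batchId) {
            if (batchId == pendingBatchId) { // confirm: the position moves forward for good
                ackedPos = fetchedPos;
                pendingBatchId = -1;
            }
        }

        void rollback(long batchId) {
            if (batchId == pendingBatchId) { // rewind: the same events are delivered again
                fetchedPos = ackedPos;
                pendingBatchId = -1;
            }
        }
    }

    /** Processes every event; the first time "bad" is seen, processing fails once. */
    static List<String> consume(FakeConnector connector, int batchSize) {
        List<String> processed = new ArrayList<>();
        boolean failedOnce = false;
        while (true) {
            Batch batch = connector.getWithoutAck(batchSize);
            if (batch.id == -1) {
                break; // a real client would sleep and poll again
            }
            try {
                for (String event : batch.entries) {
                    if (event.equals("bad") && !failedOnce) {
                        failedOnce = true;
                        throw new IllegalStateException("simulated processing failure");
                    }
                }
                processed.addAll(batch.entries);
                connector.ack(batch.id);
            } catch (IllegalStateException e) {
                connector.rollback(batch.id);
            }
        }
        return processed;
    }

    public static void main(String[] args) {
        FakeConnector connector = new FakeConnector(List.of("a", "b", "bad", "c", "d"));
        System.out.println(consume(connector, 2)); // [a, b, bad, c, d]
    }
}
```

get() has already acked the batch when it returns, so a crash during processing loses that batch. The getWithoutAck() + ack/rollback pattern redelivers the batch instead, at the cost of possible duplicates.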

************************

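High availability

canal high availability covers two parts: canal server HA and canal client HA.

canal server: the same instance may be deployed on several canal servers, all requesting data from the same MySQL. To reduce dump requests against MySQL, only one of these instances runs at any time; the others stay on standby.

canal client: to preserve ordering, an instance can be consumed by only one canal client at a time; the other clients stay on standby.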

******************

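How canal server HA works

When a canal server starts, it tries to create an ephemeral node in ZooKeeper. If the node is created, the server runs; otherwise it stays on standby.

ZooKeeper records which canal server is running.

When the running canal server fails, its ephemeral node disappears after the session times out. The standby servers are then notified and try to create the ephemeral node; the one that succeeds starts running.

When a canal client starts, it looks up the running canal server in ZooKeeper and connects to it.

If the canal server becomes unavailable and the connection drops, the client tries to reconnect.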
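The election described above can be sketched as a single-threaded simulation. FakeZk is a hypothetical in-memory stand-in for ZooKeeper, not the ZooKeeper client API, and Server is not a canal class.

Real ZooKeeper watches are one-shot, and the race between standby servers is decided by whichever create request the ensemble handles first. Here, watchers simply run in registration order.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Single-threaded simulation of the running-node election (hypothetical, not ZooKeeper or canal code). */
public class RunningNodeElectionSketch {

    /** Hypothetical stand-in for ZooKeeper holding one ephemeral "running" node. */
    static final class FakeZk {
        private String runningOwner; // null means the running node does not exist
        private final List<Consumer<String>> deletionWatchers = new ArrayList<>();

        /** Like creating an ephemeral node: succeeds only if nobody holds it. */
        boolean createRunningNode(String server) {
            if (runningOwner != null) {
                return false;
            }
            runningOwner = server;
            return true;
        }

        /** What a client reads to find the server it should connect to. */
        String getRunningNode() {
            return runningOwner;
        }

        void watchDeletion(Consumer<String> watcher) {
            deletionWatchers.add(watcher);
        }

        /** The owner's session times out: the ephemeral node vanishes and watchers are notified. */
        void expireSession(String server) {
            if (!server.equals(runningOwner)) {
                return;
            }
            runningOwner = null;
            for (Consumer<String> w : new ArrayList<>(deletionWatchers)) {
                w.accept(server);
            }
        }
    }

    static final class Server {
        final String name;
        final FakeZk zk;
        boolean running;

        Server(String name, FakeZk zk) {
            this.name = name;
            this.zk = zk;
            zk.watchDeletion(deadOwner -> {
                if (deadOwner.equals(name)) {
                    running = false; // this server lost its node
                } else {
                    tryStart();      // a standby server competes for the node
                }
            });
        }

        void tryStart() {
            running = zk.createRunningNode(name); // false => stay on standby
        }
    }

    /** Returns the running server before and after server-A's session expires. */
    static List<String> demo() {
        FakeZk zk = new FakeZk();
        Server a = new Server("server-A", zk);
        Server b = new Server("server-B", zk);
        Server c = new Server("server-C", zk);
        a.tryStart();
        b.tryStart();
        c.tryStart();
        List<String> observed = new ArrayList<>();
        observed.add(zk.getRunningNode()); // server-A won the first race
        zk.expireSession("server-A");      // server-A fails; its node disappears
        observed.add(zk.getRunningNode()); // the first notified standby takes over
        return observed;
    }

    public static void main(String[] args) {
        System.out.println(demo()); // [server-A, server-B]
    }
}
```

The client side corresponds to getRunningNode(): a client that loses its connection reads the node again and reconnects to whichever server now owns it.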

******************

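Scenarios that trigger failover

Normal shutdown: the canal server releases its instance resources and deletes its running node.

Graceful switchover: in ZooKeeper, set the active flag of the running canal server to false. On receiving the change, the canal server voluntarily releases its instance resources but does not exit the JVM.

canal server JVM crash: the running node is released only after the corresponding ZooKeeper session expires (the session timeout defaults to 40 s).

canal server network interruption (false death: the server is alive but looks dead): ZooKeeper considers the canal server failed and triggers failover.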

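Handling momentary canal server failure (false death)

Goal: avoid redistributing instances when the running node is lost only momentarily.

Approach:

When a canal server is notified that the running node has been released, it waits for a delay before trying to grab the running node. The previous owner of the running node does not have to wait and can reclaim it first.

This keeps a server that was only in a false-death state from needlessly releasing its resources.

Delay setting: the default is 5 seconds, i.e. the running node is protected against false death for 5 seconds.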
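The delayed-preemption rule can be illustrated with a small discrete-event simulation. This is a sketch, not canal's implementation. The class DelayedPreemptionSketch, the method electAfterRelease and its parameters are hypothetical, and time is a simulated millisecond clock. "The previous owner is reachable again at time X" stands in for a server recovering from false death.

```java
import java.util.Comparator;
import java.util.List;
import java.util.PriorityQueue;

/** Discrete-event sketch of delayed preemption of the running node (simulated clock, ms). */
public class DelayedPreemptionSketch {

    /** One attempt by a server to create the running node at a simulated time. */
    static final class Attempt {
        final long atMs;
        final int order; // tie-breaker so the result is deterministic
        final String server;

        Attempt(long atMs, int order, String server) {
            this.atMs = atMs;
            this.order = order;
            this.server = server;
        }
    }

    /**
     * @param oldOwnerBackAtMs time the previous owner is reachable again, or -1 if it never recovers
     * @param delayMs          how long standby servers wait before grabbing the node
     * @return the server holding the running node after all attempts
     */
    static String electAfterRelease(long releasedAtMs, String oldOwner, long oldOwnerBackAtMs,
                                    List<String> standbys, long delayMs) {
        PriorityQueue<Attempt> queue = new PriorityQueue<>(
                Comparator.comparingLong((Attempt a) -> a.atMs).thenComparingInt(a -> a.order));
        int order = 0;
        if (oldOwnerBackAtMs >= 0) {
            // The previous owner does not wait: it retries as soon as it is reachable again.
            queue.add(new Attempt(Math.max(releasedAtMs, oldOwnerBackAtMs), order++, oldOwner));
        }
        for (String s : standbys) {
            // Standby servers only try once the protection window has passed.
            queue.add(new Attempt(releasedAtMs + delayMs, order++, s));
        }
        String running = null; // null means the running node does not exist
        while (!queue.isEmpty()) {
            Attempt attempt = queue.poll();
            if (running == null) {
                running = attempt.server; // creation succeeds only while the node is free
            }
        }
        return running;
    }

    public static void main(String[] args) {
        List<String> standbys = List.of("server-B", "server-C");
        // Previous owner back after 2 s, inside the 5 s window: it keeps the running node.
        System.out.println(electAfterRelease(0, "server-A", 2_000, standbys, 5_000)); // server-A
        // Previous owner back only after 6 s: the first standby took over at 5 s.
        System.out.println(electAfterRelease(0, "server-A", 6_000, standbys, 5_000)); // server-B
        // Previous owner never returns.
        System.out.println(electAfterRelease(0, "server-A", -1, standbys, 5_000));    // server-B
    }
}
```

The previous owner's attempt is scheduled at its recovery time, while standbys are scheduled at release time plus the delay. So the previous owner wins whenever it recovers inside the window.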

************************

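TableMeta TSDB design

Background: DDL statements change table structures. When parsing binlog, early canal versions simply used the table structure currently in the database, as cached in memory. This causes the problems below.

Possible problems: suppose the binlog being processed was written at T0 and the current time is T1, with T0 < T1.

If a column was added or dropped between T0 and T1 (alter table add column/drop column), parsing the T0 binlog with the T1 table structure produces a column mismatch and the following error:

column size is not match for table: xx , 12 vs 13

If one column was added and another dropped between T0 and T1, the column count stays the same, but values are mapped to the wrong columns.

If the table was dropped between T0 and T1, the table cannot be found (not found [xx] in db) and binlog processing blocks.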
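To make the first two problems concrete, here is a small illustration assuming a decoder that matches row values to columns purely by position. ColumnMismatchSketch, decode and the sample table are hypothetical, and the exception text is not canal's actual error message.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Illustrative decoding of a row against a table structure (hypothetical data and names). */
public class ColumnMismatchSketch {

    /** Pairs row values with column names by position. */
    static Map<String, Object> decode(List<String> columns, List<Object> values) {
        if (columns.size() != values.size()) {
            // Problem 1: a column was added or dropped between T0 and T1
            throw new IllegalStateException("row has " + values.size()
                    + " values but the table structure has " + columns.size() + " columns");
        }
        Map<String, Object> row = new LinkedHashMap<>();
        for (int i = 0; i < columns.size(); i++) {
            row.put(columns.get(i), values.get(i));
        }
        return row;
    }

    public static void main(String[] args) {
        List<Object> rowAtT0 = List.of(1, "Tom", 30);           // written when the table was (id, name, age)
        List<String> schemaT0 = List.of("id", "name", "age");
        List<String> schemaT1 = List.of("id", "name", "email"); // age dropped, email added after T0

        System.out.println(decode(schemaT0, rowAtT0)); // {id=1, name=Tom, age=30}
        // Problem 2: same column count, so no error, but age's value lands in email
        System.out.println(decode(schemaT1, rowAtT0)); // {id=1, name=Tom, email=30}
        try {
            decode(List.of("id", "name", "age", "email"), rowAtT0); // email added, nothing dropped
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage()); // row has 3 values but the table structure has 4 columns
        }
    }
}
```

Only decoding with the T0 structure gives the right mapping, which is what the design below provides.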

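Solution: use druid's DDL-handling capability to build a table structure that can be reconstructed for any point in time.

Each CREATE TABLE statement is passed to druid's SchemaRepository.console() to build druid's initial table structure.

After that, every DDL change received (alter table add/drop column, etc.) is also passed to druid. Druid parses the statement and applies the add/drop column in memory, maintaining the latest table structure.

druid's in-memory table structure is checkpointed periodically.

When the binlog position is later rewound, the table structure at any point in time is rebuilt quickly by loading a checkpoint and replaying the DDLs recorded after it.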
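The checkpoint + DDL replay idea can be sketched without druid. Everything here is hypothetical:

- DDLs are assumed to be already parsed into simple events (the Op enum and Ddl class).
- Snapshots live in an in-memory TreeMap rather than H2 or MySQL.
- The DDL history is scanned linearly instead of being indexed.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Toy "checkpoint + incremental DDL replay" for table structures (hypothetical, no druid). */
public class TableMetaReplaySketch {

    enum Op { CREATE_TABLE, ADD_COLUMN, DROP_COLUMN, DROP_TABLE }

    static final class Ddl {
        final long time; final Op op; final String table; final String column;

        Ddl(long time, Op op, String table, String column) {
            this.time = time; this.op = op; this.table = table; this.column = column;
        }
    }

    private final List<Ddl> history = new ArrayList<>();                                 // all DDLs, in time order
    private final TreeMap<Long, Map<String, List<String>>> checkpoints = new TreeMap<>(); // time -> snapshot
    private final Map<String, List<String>> current = new HashMap<>();                   // latest structure

    static Map<String, List<String>> copy(Map<String, List<String>> schema) {
        Map<String, List<String>> out = new HashMap<>();
        schema.forEach((table, cols) -> out.put(table, new ArrayList<>(cols)));
        return out;
    }

    static void apply(Map<String, List<String>> schema, Ddl d) {
        switch (d.op) {
            case CREATE_TABLE: schema.put(d.table, new ArrayList<>()); break;
            case ADD_COLUMN:   schema.get(d.table).add(d.column); break;
            case DROP_COLUMN:  schema.get(d.table).remove(d.column); break;
            case DROP_TABLE:   schema.remove(d.table); break;
        }
    }

    /** Called for each DDL in binlog order (where canal would hand the SQL to druid). */
    void onDdl(long time, Op op, String table, String column) {
        Ddl d = new Ddl(time, op, table, column);
        history.add(d);
        apply(current, d);
    }

    /** Periodic snapshot; assumes every DDL with time <= `time` has been applied. */
    void checkpoint(long time) {
        checkpoints.put(time, copy(current));
    }

    /** Structure at time t: latest checkpoint at or before t, then replay later DDLs up to t. */
    Map<String, List<String>> schemaAt(long t) {
        Map.Entry<Long, Map<String, List<String>>> cp = checkpoints.floorEntry(t);
        long from = cp == null ? Long.MIN_VALUE : cp.getKey();
        Map<String, List<String>> schema = cp == null ? new HashMap<>() : copy(cp.getValue());
        for (Ddl d : history) {
            if (d.time > from && d.time <= t) {
                apply(schema, d);
            }
        }
        return schema;
    }

    static TableMetaReplaySketch example() {
        TableMetaReplaySketch m = new TableMetaReplaySketch();
        m.onDdl(1, Op.CREATE_TABLE, "t1", null);
        m.onDdl(2, Op.ADD_COLUMN, "t1", "id");
        m.onDdl(3, Op.ADD_COLUMN, "t1", "name");
        m.checkpoint(3);
        m.onDdl(5, Op.ADD_COLUMN, "t1", "age");
        m.onDdl(7, Op.DROP_COLUMN, "t1", "name");
        m.onDdl(9, Op.DROP_TABLE, "t1", null);
        return m;
    }

    public static void main(String[] args) {
        TableMetaReplaySketch m = example();
        System.out.println(m.schemaAt(4));  // {t1=[id, name]}
        System.out.println(m.schemaAt(6));  // {t1=[id, name, age]}
        System.out.println(m.schemaAt(8));  // {t1=[id, age]}
        System.out.println(m.schemaAt(10)); // {}
    }
}
```

Asking for the structure at an old binlog time still finds t1 even though it was dropped later. That covers all three problems listed above.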

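Persisting the table-structure snapshots

Local storage (H2, the default): snapshots are stored locally in the embedded H2 database.

Central storage (MySQL): snapshots are stored in a MySQL database shared by the canal servers; use this when canal servers are deployed as a cluster.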

**************

Local storage configuration (H2)

#canal.properties
canal.instance.tsdb.spring.xml=classpath:spring/tsdb/h2-tsdb.xml

#instance.properties
canal.instance.tsdb.enable=true
canal.instance.tsdb.dir=${canal.file.data.dir:../conf}/${canal.instance.destination:}
canal.instance.tsdb.url=jdbc:h2:${canal.instance.tsdb.dir}/h2;CACHE_SIZE=1000;MODE=MYSQL;
canal.instance.tsdb.dbUsername=canal
canal.instance.tsdb.dbPassword=canal

**************

Central storage configuration (MySQL)

#canal.properties
canal.instance.tsdb.spring.xml=classpath:spring/tsdb/mysql-tsdb.xml

#instance.properties
canal.instance.tsdb.enable=true
canal.instance.tsdb.url=jdbc:mysql://******:3306/canal_tsdb
canal.instance.tsdb.dbUsername=canal
canal.instance.tsdb.dbPassword=canal
