Building a Highly Available MongoDB 6.0 Sharded Cluster with docker-compose

This guide uses MongoDB version 6.0.

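We will create a sharded cluster with the following components:

A Config Server replica set with 3 members.

One shard, backed by a 3-member replica set.

Three routers (mongos).

Based on the above, the Config Server needs 3 servers (one per member), Shard1 needs 3 servers (one per member), and the routers (mongos) need 3 servers. In this guide these roles share the same three hosts, as the table below shows.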

Server configuration and ports

| IP | ROLE | PORT |
|---|---|---|
| 192.168.1.136 | configsvr | 10001 |
| 192.168.1.136 | shardsvr1_1 | 20001 |
| 192.168.1.136 | shardsvr1_2 | 20002 |
| 192.168.1.136 | shardsvr1_3 | 20003 |
| 192.168.1.136 | mongos | 30000 |
| 192.168.1.137 | configsvr | 10001 |
| 192.168.1.137 | shardsvr1_1 | 20001 |
| 192.168.1.137 | shardsvr1_2 | 20002 |
| 192.168.1.137 | shardsvr1_3 | 20003 |
| 192.168.1.137 | mongos | 30000 |
| 192.168.1.138 | configsvr | 10001 |
| 192.168.1.138 | shardsvr1_1 | 20001 |
| 192.168.1.138 | shardsvr1_2 | 20002 |
| 192.168.1.138 | shardsvr1_3 | 20003 |
| 192.168.1.138 | mongos | 30000 |

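Create directories

```shell
# Run on all three servers
mkdir -p /test/{common,data}
```

Directory layout (Docker creates the subdirectories under common and data when the containers mount their volumes):

```
[root@node2 ~]# tree -d -L 2 /test/
/test/
├── common
│   └── configsvr1
├── data
│   ├── shardsvr1_1
│   ├── shardsvr1_2
│   └── shardsvr1_3
└── tmp (unused directory)
```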

Write the docker-compose file

The compose file, /test/docker-compose.yml, is identical on all three servers:

```yaml
version: '3'

services:
  configsvr1:
    container_name: config
    image: mongo:6
    command: mongod --configsvr --replSet config_rs --dbpath /data/db --port 27017
    ports:
      - "10001:27017"
    volumes:
      - ./common/configsvr1:/data/db

  shardsvr1_1:
    container_name: shardsvr1_1
    image: mongo:6
    command: mongod --shardsvr --replSet shard1_rs --dbpath /data/db --port 27017
    ports:
      - "20001:27017"
    volumes:
      - ./data/shardsvr1_1:/data/db

  shardsvr1_2:
    container_name: shardsvr1_2
    image: mongo:6
    command: mongod --shardsvr --replSet shard1_rs --dbpath /data/db --port 27017
    ports:
      - "20002:27017"
    volumes:
      - ./data/shardsvr1_2:/data/db

  shardsvr1_3:
    container_name: shardsvr1_3
    image: mongo:6
    command: mongod --shardsvr --replSet shard1_rs --dbpath /data/db --port 27017
    ports:
      - "20003:27017"
    volumes:
      - ./data/shardsvr1_3:/data/db

  mongos:
    container_name: mongos
    image: mongo:6
    command: mongos --configdb config_rs/192.168.1.136:10001,192.168.1.137:10001,192.168.1.138:10001 --port 27017 --bind_ip_all
    ports:
      - "30000:27017"
```
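The mongos --configdb value has the form replSetName/host:port,host:port,..., listing the config server members by the ports published on each host. A minimal JavaScript sketch that builds it from the host list, assuming every config server publishes the same port (10001 here); buildConfigDb is a hypothetical helper name:

```javascript
// Hypothetical helper: build the mongos --configdb argument, e.g.
// "config_rs/192.168.1.136:10001,192.168.1.137:10001,192.168.1.138:10001".
function buildConfigDb(replSetName, hosts, port) {
  if (hosts.length === 0) {
    throw new Error("at least one config server host is required");
  }
  // Each seed is "host:publishedPort"; mongos discovers the rest of the set from them
  const seeds = hosts.map((host) => `${host}:${port}`);
  return `${replSetName}/${seeds.join(",")}`;
}

const configDb = buildConfigDb(
  "config_rs",
  ["192.168.1.136", "192.168.1.137", "192.168.1.138"],
  10001
);
```

Generating the string from one host list keeps the compose file consistent across the three servers.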

Start the containers

```shell
# Run on all three servers
cd /test

# Start the containers
docker-compose up -d

# Check the containers
[root@node1 test]# docker-compose ps -a
NAME          IMAGE     COMMAND                  SERVICE       CREATED             STATUS             PORTS
config        mongo:6   "docker-entrypoint.s…"   configsvr1    About an hour ago   Up About an hour   0.0.0.0:10001->27017/tcp, :::10001->27017/tcp
mongos        mongo:6   "docker-entrypoint.s…"   mongos        About an hour ago   Up About an hour   0.0.0.0:30000->27017/tcp, :::30000->27017/tcp
shardsvr1_1   mongo:6   "docker-entrypoint.s…"   shardsvr1_1   About an hour ago   Up About an hour   0.0.0.0:20001->27017/tcp, :::20001->27017/tcp
shardsvr1_2   mongo:6   "docker-entrypoint.s…"   shardsvr1_2   About an hour ago   Up About an hour   0.0.0.0:20002->27017/tcp, :::20002->27017/tcp
shardsvr1_3   mongo:6   "docker-entrypoint.s…"   shardsvr1_3   About an hour ago   Up About an hour   0.0.0.0:20003->27017/tcp, :::20003->27017/tcp
[root@node1 test]#
```

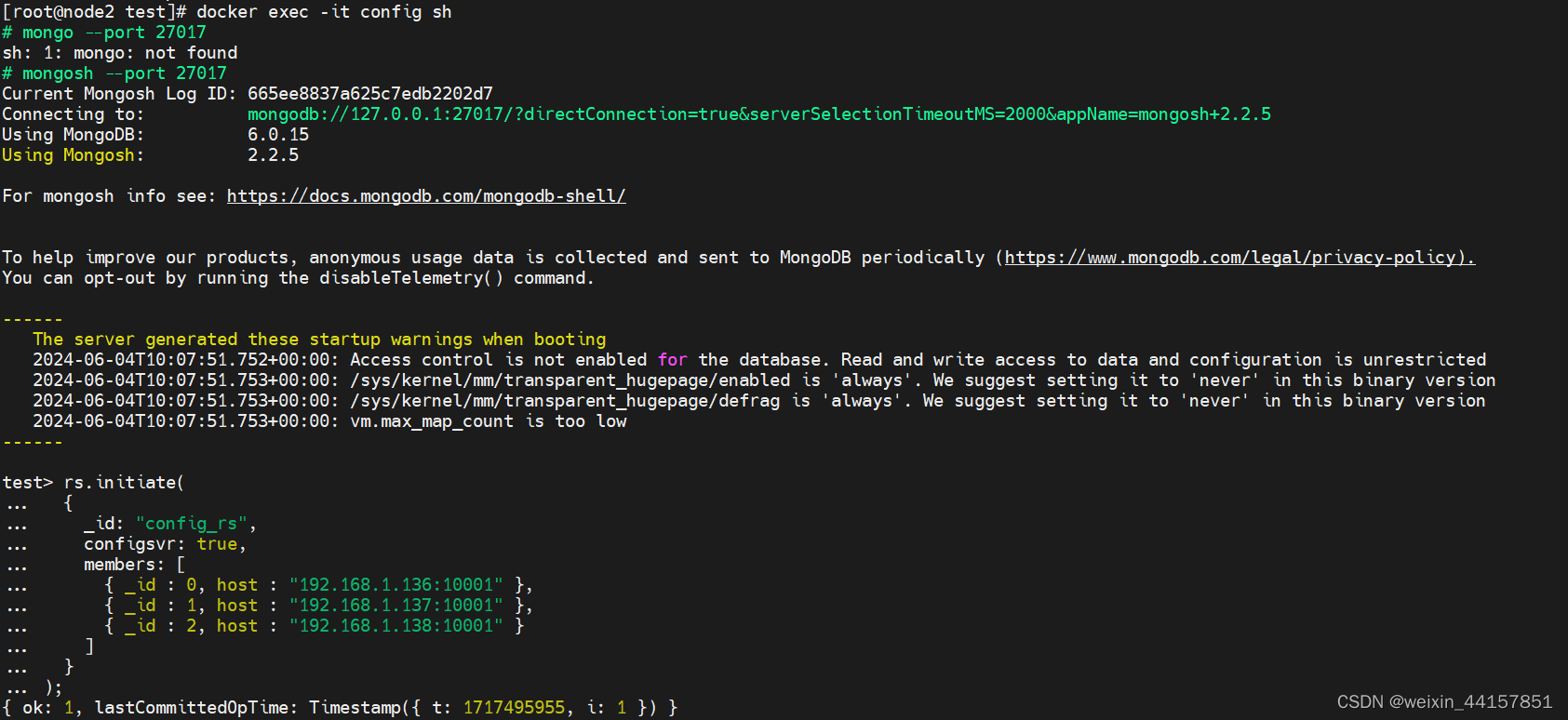

Configure the Config Server

Enter the config server container on the second host (192.168.1.137). This only needs to be done on one host.

```shell
docker exec -it config mongosh --port 27017
```

Then run:

```javascript
rs.initiate(
  {
    _id: "config_rs",
    configsvr: true,
    members: [
      { _id : 0, host : "192.168.1.136:10001" },
      { _id : 1, host : "192.168.1.137:10001" },
      { _id : 2, host : "192.168.1.138:10001" }
    ]
  }
);
```
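The rs.initiate document above follows a fixed pattern: the replica set name, configsvr: true for a config server replica set, and one member per host with sequential _id values. A sketch of a helper that generates it, assuming every member publishes the same port; buildReplSetConfig is a hypothetical name, and since it is plain JavaScript it can be pasted into mongosh and its result passed to rs.initiate():

```javascript
// Hypothetical helper: build the document passed to rs.initiate().
// Member _id values are assigned 0, 1, 2, ... in host order.
function buildReplSetConfig(replSetName, hosts, port, { configsvr = false } = {}) {
  const config = {
    _id: replSetName,
    members: hosts.map((host, i) => ({ _id: i, host: `${host}:${port}` })),
  };
  // Only config server replica sets carry the configsvr flag
  if (configsvr) {
    config.configsvr = true;
  }
  return config;
}

const configRs = buildReplSetConfig(
  "config_rs",
  ["192.168.1.136", "192.168.1.137", "192.168.1.138"],
  10001,
  { configsvr: true }
);
// In mongosh: rs.initiate(configRs)
```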

Figure: configuring configsvr

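Test the config server

```shell
docker exec -it config mongosh --port 27017 --eval "db.runCommand({ ping: 1 })" | grep ok
ok: 1,
```

ping only confirms that the mongod process is responding; rs.status() shows whether the replica set has elected a primary.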

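Shard configuration

In this section we create one shard (the original plan was two, but the original environment was wiped, so I only set up one shard this time). Like the config server, the shard is a 3-member replica set. shard1 uses ports 20001, 20002 and 20003; a second shard, shard2, would use ports 20004, 20005 and 20006.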
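The port plan above follows a simple pattern: shard n takes three consecutive ports starting at 20001 + 3 × (n − 1), which gives 20001 to 20003 for shard1 and 20004 to 20006 for shard2. A minimal sketch of that arithmetic, assuming the pattern continues for further shards; shardPorts is a hypothetical helper name:

```javascript
// Hypothetical helper: ports for the members of shard n (1-based),
// assuming each shard takes consecutive ports starting at basePort.
function shardPorts(shardNumber, basePort = 20001, membersPerShard = 3) {
  if (!Number.isInteger(shardNumber) || shardNumber < 1) {
    throw new RangeError("shardNumber must be a positive integer");
  }
  const first = basePort + (shardNumber - 1) * membersPerShard;
  // One port per replica set member
  return Array.from({ length: membersPerShard }, (_, i) => first + i);
}
```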

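Initialize the replica sets

The following steps are run on all three servers. On each server, the three local containers shardsvr1_1, shardsvr1_2 and shardsvr1_3 are initiated as a replica set named shard1_rs, so each replica set lives entirely on one host: it survives the loss of one container, but not the loss of that host.

```shell
# 192.168.1.136: open the mongosh CLI in the shardsvr1_1 container
docker exec -it shardsvr1_1 mongosh --port 27017
```

```javascript
rs.initiate(
  {
    _id: "shard1_rs",
    members: [
      { _id : 0, host : "192.168.1.136:20001" },
      { _id : 1, host : "192.168.1.136:20002" },
      { _id : 2, host : "192.168.1.136:20003" }
    ]
  }
)
```

If the command returns ok, initialization succeeded.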

----------------------------------

```shell
# 192.168.1.137
docker exec -it shardsvr1_1 mongosh --port 27017
```

```javascript
rs.initiate(
  {
    _id: "shard1_rs",
    members: [
      { _id : 0, host : "192.168.1.137:20001" },
      { _id : 1, host : "192.168.1.137:20002" },
      { _id : 2, host : "192.168.1.137:20003" }
    ]
  }
)
```

If the command returns ok, initialization succeeded.

----------------------------------

```shell
# 192.168.1.138
docker exec -it shardsvr1_1 mongosh --port 27017
```

```javascript
rs.initiate(
  {
    _id: "shard1_rs",
    members: [
      { _id : 0, host : "192.168.1.138:20001" },
      { _id : 1, host : "192.168.1.138:20002" },
      { _id : 2, host : "192.168.1.138:20003" }
    ]
  }
)
```

If the command returns ok, initialization succeeded.
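In the layout above, the three members of each shard1_rs replica set all run on the same host. If the goal is to survive the loss of a whole host, a common alternative is to give each replica set one member per host. The sketch below assumes that alternative layout, which this guide does not use: it groups the same port across all hosts into one replica set (port 20001 on every host becomes shard1_rs, 20002 would become shard2_rs, and so on). The helper name and the shard names after shard1_rs are hypothetical, and adopting this layout would also require changing the --replSet values in docker-compose.yml to match.

```javascript
// Hypothetical helper for an alternative layout (not the one used above):
// each replica set takes one port and has one member on every host, so with
// three hosts, losing one host still leaves 2 of 3 members (a majority).
function crossHostReplSets(hosts, ports, namePrefix = "shard") {
  return ports.map((port, i) => ({
    _id: `${namePrefix}${i + 1}_rs`,
    members: hosts.map((host, j) => ({ _id: j, host: `${host}:${port}` })),
  }));
}

const plans = crossHostReplSets(
  ["192.168.1.136", "192.168.1.137", "192.168.1.138"],
  [20001] // a single shard; [20001, 20002, 20003] would give three shards
);
// In mongosh, on any one member of the set: rs.initiate(plans[0])
```

With a single port, as in the example, the result is one shard1_rs whose members are 192.168.1.136:20001, 192.168.1.137:20001 and 192.168.1.138:20001.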

Check the replica set status (the output below was captured on node2, 192.168.1.137):

```
[root@node2 ~]# docker exec -it shardsvr1_2 mongosh --eval "rs.status()"
{
  set: 'shard1_rs',
  date: ISODate('2024-06-04T11:45:14.480Z'),
  myState: 2,
  term: Long('1'),
  syncSourceHost: '192.168.1.137:20001',
  syncSourceId: 0,
  heartbeatIntervalMillis: Long('2000'),
  majorityVoteCount: 2,
  writeMajorityCount: 2,
  votingMembersCount: 3,
  writableVotingMembersCount: 3,
  optimes: {
    lastCommittedOpTime: { ts: Timestamp({ t: 1717501504, i: 1 }), t: Long('1') },
    lastCommittedWallTime: ISODate('2024-06-04T11:45:04.681Z'),
    readConcernMajorityOpTime: { ts: Timestamp({ t: 1717501504, i: 1 }), t: Long('1') },
    appliedOpTime: { ts: Timestamp({ t: 1717501504, i: 1 }), t: Long('1') },
    durableOpTime: { ts: Timestamp({ t: 1717501504, i: 1 }), t: Long('1') },
    lastAppliedWallTime: ISODate('2024-06-04T11:45:04.681Z'),
    lastDurableWallTime: ISODate('2024-06-04T11:45:04.681Z')
  },
  lastStableRecoveryTimestamp: Timestamp({ t: 1717501464, i: 1 }),
  electionParticipantMetrics: {
    votedForCandidate: true,
    electionTerm: Long('1'),
    lastVoteDate: ISODate('2024-06-04T10:15:32.151Z'),
    electionCandidateMemberId: 0,
    voteReason: '',
    lastAppliedOpTimeAtElection: { ts: Timestamp({ t: 1717496121, i: 1 }), t: Long('-1') },
    maxAppliedOpTimeInSet: { ts: Timestamp({ t: 1717496121, i: 1 }), t: Long('-1') },
    priorityAtElection: 1,
    newTermStartDate: ISODate('2024-06-04T10:15:33.318Z'),
    newTermAppliedDate: ISODate('2024-06-04T10:15:33.831Z')
  },
  members: [
    {
      _id: 0,
      name: '192.168.1.137:20001',
      health: 1,
      state: 1,
      stateStr: 'PRIMARY',
      uptime: 5392,
      optime: { ts: Timestamp({ t: 1717501504, i: 1 }), t: Long('1') },
      optimeDurable: { ts: Timestamp({ t: 1717501504, i: 1 }), t: Long('1') },
      optimeDate: ISODate('2024-06-04T11:45:04.000Z'),
      optimeDurableDate: ISODate('2024-06-04T11:45:04.000Z'),
      lastAppliedWallTime: ISODate('2024-06-04T11:45:04.681Z'),
      lastDurableWallTime: ISODate('2024-06-04T11:45:04.681Z'),
      lastHeartbeat: ISODate('2024-06-04T11:45:13.036Z'),
      lastHeartbeatRecv: ISODate('2024-06-04T11:45:13.032Z'),
      pingMs: Long('0'),
      lastHeartbeatMessage: '',
      syncSourceHost: '',
      syncSourceId: -1,
      infoMessage: '',
      electionTime: Timestamp({ t: 1717496132, i: 1 }),
      electionDate: ISODate('2024-06-04T10:15:32.000Z'),
      configVersion: 1,
      configTerm: 1
    },
    {
      _id: 1,
      name: '192.168.1.137:20002',
      health: 1,
      state: 2,
      stateStr: 'SECONDARY',
      uptime: 5844,
      optime: { ts: Timestamp({ t: 1717501504, i: 1 }), t: Long('1') },
      optimeDate: ISODate('2024-06-04T11:45:04.000Z'),
      lastAppliedWallTime: ISODate('2024-06-04T11:45:04.681Z'),
      lastDurableWallTime: ISODate('2024-06-04T11:45:04.681Z'),
      syncSourceHost: '192.168.1.137:20001',
      syncSourceId: 0,
      infoMessage: '',
      configVersion: 1,
      configTerm: 1,
      self: true,
      lastHeartbeatMessage: ''
    },
    {
      _id: 2,
      name: '192.168.1.137:20003',
      health: 1,
      state: 2,
      stateStr: 'SECONDARY',
      uptime: 5392,
      optime: { ts: Timestamp({ t: 1717501504, i: 1 }), t: Long('1') },
      optimeDurable: { ts: Timestamp({ t: 1717501504, i: 1 }), t: Long('1') },
      optimeDate: ISODate('2024-06-04T11:45:04.000Z'),
      optimeDurableDate: ISODate('2024-06-04T11:45:04.000Z'),
      lastAppliedWallTime: ISODate('2024-06-04T11:45:04.681Z'),
      lastDurableWallTime: ISODate('2024-06-04T11:45:04.681Z'),
      lastHeartbeat: ISODate('2024-06-04T11:45:13.036Z'),
      lastHeartbeatRecv: ISODate('2024-06-04T11:45:13.029Z'),
      pingMs: Long('0'),
      lastHeartbeatMessage: '',
      syncSourceHost: '192.168.1.137:20002',
      syncSourceId: 1,
      infoMessage: '',
      configVersion: 1,
      configTerm: 1
    }
  ],
  ok: 1,
  '$clusterTime': {
    clusterTime: Timestamp({ t: 1717501504, i: 1 }),
    signature: {
      hash: Binary.createFromBase64('AAAAAAAAAAAAAAAAAAAAAAAAAAA=', 0),
      keyId: Long('0')
    }
  },
  operationTime: Timestamp({ t: 1717501504, i: 1 })
}
```
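When reading rs.status() output like the one above, the fields that usually matter are each member's name, health and stateStr. A small sketch that condenses them, assuming a replica set made only of primary and secondary members (an arbiter would be reported as a problem here); summarizeReplSet is a hypothetical name, and since it is plain JavaScript it can also be pasted into mongosh and called as summarizeReplSet(rs.status()):

```javascript
// Hypothetical helper: condense rs.status() into the primary's name and a
// list of members that are unhealthy or neither PRIMARY nor SECONDARY.
function summarizeReplSet(status) {
  const primary = status.members.find((m) => m.stateStr === "PRIMARY");
  const problems = status.members
    .filter((m) => m.health !== 1 || !["PRIMARY", "SECONDARY"].includes(m.stateStr))
    .map((m) => `${m.name} (${m.stateStr})`);
  return { set: status.set, primary: primary ? primary.name : null, problems };
}

// Trimmed-down sample shaped like the rs.status() output above
const sample = {
  set: "shard1_rs",
  members: [
    { name: "192.168.1.137:20001", health: 1, stateStr: "PRIMARY" },
    { name: "192.168.1.137:20002", health: 1, stateStr: "SECONDARY" },
    { name: "192.168.1.137:20003", health: 1, stateStr: "SECONDARY" },
  ],
};
```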

Mongos configuration

The following was run on all three servers (192.168.1.136, 192.168.1.137 and 192.168.1.138), each time against that server's local mongos:

```shell
docker exec -it mongos mongosh --port 27017
```

```javascript
sh.addShard("shard1_rs/192.168.1.136:20001,192.168.1.136:20002,192.168.1.136:20003")
sh.addShard("shard1_rs/192.168.1.137:20001,192.168.1.137:20002,192.168.1.137:20003")
sh.addShard("shard1_rs/192.168.1.138:20001,192.168.1.138:20002,192.168.1.138:20003")
```

If every call returns ok, the step succeeded. Running the calls from any one mongos is actually sufficient: the shard list is stored on the config server replica set and shared by all mongos routers, so repeating the calls on the other routers simply returns ok again.

Because all three replica sets are named shard1_rs, the second and third calls do not register new shards: when addShard receives a replica set name that is already registered with matching options, MongoDB returns ok without adding anything, so only the first set added (the one on 192.168.1.136) becomes part of the cluster. Running sh.status() shows which hosts are registered for each shard.
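The argument to sh.addShard has the form replSetName/host:port,host:port,.... A sketch that derives it from an rs.initiate()-style document, so the seed list stays in sync with the replica set definition; shardConnString is a hypothetical helper name:

```javascript
// Hypothetical helper: turn an rs.initiate()-style document into the
// "replSetName/host:port,..." string expected by sh.addShard().
function shardConnString(replSetConfig) {
  const hosts = replSetConfig.members.map((m) => m.host);
  return `${replSetConfig._id}/${hosts.join(",")}`;
}

const shard1 = {
  _id: "shard1_rs",
  members: [
    { _id: 0, host: "192.168.1.136:20001" },
    { _id: 1, host: "192.168.1.136:20002" },
    { _id: 2, host: "192.168.1.136:20003" },
  ],
};
// In mongosh, connected to any one mongos: sh.addShard(shardConnString(shard1))
```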

Result (as run on 192.168.1.137):

```
[root@node2 test]# docker exec -it mongos mongosh --port 27017
Current Mongosh Log ID: 665ee9a4d20a39665b2202d7
Connecting to: mongodb://127.0.0.1:27017/?directConnection=true&serverSelectionTimeoutMS=2000&appName=mongosh+2.2.5
Using MongoDB: 6.0.15
Using Mongosh: 2.2.5

For mongosh info see: https://docs.mongodb.com/mongodb-shell/

To help improve our products, anonymous usage data is collected and sent to MongoDB periodically (https://www.mongodb.com/legal/privacy-policy).
You can opt-out by running the disableTelemetry() command.

------
   The server generated these startup warnings when booting
   2024-06-04T10:07:50.780+00:00: Access control is not enabled for the database. Read and write access to data and configuration is unrestricted
------

[direct: mongos] test> sh.addShard("shard1_rs/192.168.1.136:20001,192.168.1.136:20002,192.168.1.136:20003")
{
  shardAdded: 'shard1_rs',
  ok: 1,
  '$clusterTime': {
    clusterTime: Timestamp({ t: 1717496273, i: 18 }),
    signature: {
      hash: Binary.createFromBase64('AAAAAAAAAAAAAAAAAAAAAAAAAAA=', 0),
      keyId: Long('0')
    }
  },
  operationTime: Timestamp({ t: 1717496273, i: 18 })
}
[direct: mongos] test> sh.addShard("shard1_rs/192.168.1.137:20001,192.168.1.137:20002,192.168.1.137:20003")
{
  shardAdded: 'shard1_rs',
  ok: 1,
  '$clusterTime': {
    clusterTime: Timestamp({ t: 1717496279, i: 1 }),
    signature: {
      hash: Binary.createFromBase64('AAAAAAAAAAAAAAAAAAAAAAAAAAA=', 0),
      keyId: Long('0')
    }
  },
  operationTime: Timestamp({ t: 1717496279, i: 1 })
}
[direct: mongos] test> sh.addShard("shard1_rs/192.168.1.138:20001,192.168.1.138:20002,192.168.1.138:20003")
{
  shardAdded: 'shard1_rs',
  ok: 1,
  '$clusterTime': {
    clusterTime: Timestamp({ t: 1717496283, i: 4 }),
    signature: {
      hash: Binary.createFromBase64('AAAAAAAAAAAAAAAAAAAAAAAAAAA=', 0),
      keyId: Long('0')
    }
  },
  operationTime: Timestamp({ t: 1717496283, i: 4 })
}
[direct: mongos] test> sh.enableSharding("test")
{
  ok: 1,
  '$clusterTime': {
    clusterTime: Timestamp({ t: 1717496333, i: 4 }),
    signature: {
      hash: Binary.createFromBase64('AAAAAAAAAAAAAAAAAAAAAAAAAAA=', 0),
      keyId: Long('0')
    }
  },
  operationTime: Timestamp({ t: 1717496333, i: 4 })
}
```

Functional test

This part is based on https://blog.csdn.net/github_38616039/article/details/134158118. Many thanks!

Shard the database

```shell
docker exec -it mongos mongosh --port 27017
```

```javascript
use test
sh.enableSharding("test")

// Hash-shard the test collection of the test database on _id
db.test.createIndex({ _id: "hashed" })
sh.shardCollection("test.test", { "_id": "hashed" })

// Insert test data
use test
for (let i = 1; i <= 300; i++) { db.test.insertOne({ name: "bigkang" }) }
```
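The loop above issues 300 separate insert calls, each a round trip through mongos. A sketch of a batched alternative that builds the same documents for a single insertMany call; makeTestDocs is a hypothetical helper, and the document shape { name: "bigkang" } comes from the loop:

```javascript
// Hypothetical helper: build n test documents shaped like the loop's inserts.
// Array.from with a factory creates a separate object per document, so the
// driver can assign each one its own ObjectId _id (the hashed shard key).
function makeTestDocs(n, name = "bigkang") {
  return Array.from({ length: n }, () => ({ name }));
}

const docs = makeTestDocs(300);
// In mongosh (after `use test`): db.test.insertMany(docs)
```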

Query the data (mongosh prints the first 20 documents of the result; type `it` to see more):

```
use test
[direct: mongos] test> db.getCollection("test").find().limit(50).skip(0)
[
  { _id: ObjectId('665eea4f2704a6c2892202d8'), name: 'bigkang' },
  { _id: ObjectId('665eea4f2704a6c2892202d9'), name: 'bigkang' },
  { _id: ObjectId('665eea4f2704a6c2892202da'), name: 'bigkang' },
  { _id: ObjectId('665eea4f2704a6c2892202db'), name: 'bigkang' },
  { _id: ObjectId('665eea4f2704a6c2892202dc'), name: 'bigkang' },
  { _id: ObjectId('665eea4f2704a6c2892202dd'), name: 'bigkang' },
  { _id: ObjectId('665eea4f2704a6c2892202de'), name: 'bigkang' },
  { _id: ObjectId('665eea4f2704a6c2892202df'), name: 'bigkang' },
  { _id: ObjectId('665eea4f2704a6c2892202e0'), name: 'bigkang' },
  { _id: ObjectId('665eea4f2704a6c2892202e1'), name: 'bigkang' },
  { _id: ObjectId('665eea4f2704a6c2892202e2'), name: 'bigkang' },
  { _id: ObjectId('665eea4f2704a6c2892202e3'), name: 'bigkang' },
  { _id: ObjectId('665eea4f2704a6c2892202e4'), name: 'bigkang' },
  { _id: ObjectId('665eea4f2704a6c2892202e5'), name: 'bigkang' },
  { _id: ObjectId('665eea4f2704a6c2892202e6'), name: 'bigkang' },
  { _id: ObjectId('665eea4f2704a6c2892202e7'), name: 'bigkang' },
  { _id: ObjectId('665eea4f2704a6c2892202e8'), name: 'bigkang' },
  { _id: ObjectId('665eea4f2704a6c2892202e9'), name: 'bigkang' },
  { _id: ObjectId('665eea4f2704a6c2892202ea'), name: 'bigkang' },
  { _id: ObjectId('665eea4f2704a6c2892202eb'), name: 'bigkang' }
]
```

Create a user

```shell
docker exec -it mongos mongosh --port 27017
```

```javascript
use admin
db.createUser({
  user: "root",
  pwd: "boya1234",
  roles: [
    { role: "clusterAdmin", db: "admin" },
    { role: "clusterManager", db: "admin" },
    { role: "clusterMonitor", db: "admin" }
  ]
})
```

The result is as follows:


```
{
  ok: 1,
  '$clusterTime': {
    clusterTime: Timestamp({ t: 1719207433, i: 4 }),
    signature: {
      hash: Binary.createFromBase64("AAAAAAAAAAAAAAAAAAAAAAAAAAA=", 0),
      keyId: Long("0")
    }
  },
  operationTime: Timestamp({ t: 1719207433, i: 4 })
}
```
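Since the cluster has three mongos routers, a client can list all of them in its connection string, and the driver can then route through whichever ones are reachable. A sketch that builds such a URI, assuming the root user created above authenticates against the admin database; buildMongosUri is a hypothetical name. User name and password are percent-encoded because characters such as @ or : would otherwise break the URI:

```javascript
// Hypothetical helper: mongodb:// URI that lists every mongos router.
function buildMongosUri(user, password, hosts, port, authSource = "admin") {
  // encodeURIComponent escapes reserved characters such as "@" and ":"
  const cred = `${encodeURIComponent(user)}:${encodeURIComponent(password)}`;
  const seeds = hosts.map((h) => `${h}:${port}`).join(",");
  return `mongodb://${cred}@${seeds}/?authSource=${authSource}`;
}

const uri = buildMongosUri(
  "root",
  "boya1234",
  ["192.168.1.136", "192.168.1.137", "192.168.1.138"],
  30000
);
```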

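Supplement

This guide currently sets up only one shard. Setting up two shards is also fairly simple; I will add it when I have time, since I have been working late a lot recently…

By the way, the MongoDB sharded-cluster tutorials that turn up in Baidu searches are really hard to use: they are full of errors and of no help at all to beginners.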
