DMA概念

1,直接内存访问,是一种不经过CPU而直接从内存存取数据的数据交换模式。在DMA模式下,CPU只须向DMA控制器下达指令,让DMA控制器来处理数据的传送,数据传送完毕再把信息反馈给CPU。

2,每个通道对应不同的外设的DMA请求。虽然每个通道可以接收多个外设的请求,但是同一时间只能接收一个,不能同时接收多个。

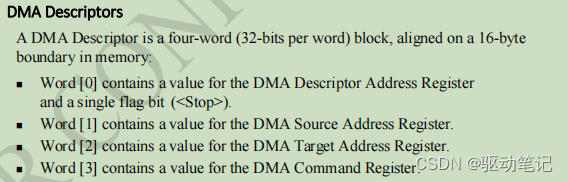

3,通过传输描述符,来描述在通道中的一次传输的,比如源地址,目标地址等。

4,当发生多个DMA通道请求时,就意味着有先后响应处理的顺序问题,这个就由仲裁器来管理,如果软件设置的优先级一样,就由通道编号决定优先级。

void USARTx_DMA_Config()

{

DMA_InitTypeDef DMA_InitStructure;//声明结构体变量

RCC_APB2PeriphClockCmd(RCC_AHBPeriph_DMA1,ENABLE);//使能DMA1时钟

DMA_InitStructure.DMA_PeripheralBaseAddr=USART_DR_ADDRESS;//外设地址为串口的数据寄存器的位置

DMA_InitStructure.DMA_MemoryBaseAddr=(u32)SendBuff;//存储器地址位数组首元素的地址

DMA_InitStructure.DMA_BufferSize=SENDBUFF_SIZE;//缓冲区大小也即数组大小

DMA_InitStructure.DMA_DIR=DMA_DIR_PeripheralDST;//存储器向外设传输数据

DMA_InitStructure.DMA_PeripheralInc = DMA_PeripheralInc_Disable;//失能外设地址自增

DMA_InitStructure.DMA_MemoryInc = DMA_MemoryInc_Enable;//使能存储器地址自增

DMA_InitStructure.DMA_PeripheralDataSize = DMA_PeripheralDataSize_Byte;//外设的数据单位为字节

DMA_InitStructure.DMA_MemoryDataSize = DMA_MemoryDataSize_Byte; //存储器的数据单位为字节

DMA_InitStructure.DMA_Mode = DMA_Mode_Normal;//一次

//DMA_InitStructure.DMA_Mode = DMA_Mode_Circular;//循环

DMA_InitStructure.DMA_Priority = DMA_Priority_Medium;

//禁止存储器向存储器传输

DMA_InitStructure.DMA_M2M = DMA_M2M_Disable;

DMA_Init(USART_TX_DMA_CHANNEL, &DMA_InitStructure); //初始化配置

DMA_Cmd (USART_TX_DMA_CHANNEL,ENABLE);//使能DMA传输通道

}

.......

USARTx_DMA_Config();

for(i=0;i<SENDBUFF_SIZE;i++)

{

SendBuff[i] = 'L';

}

//串口向DMA发送TX请求

USART_DMACmd(USARTx, USART_DMAReq_Tx, ENABLE);linux中dma框架

在Linux当中有一个专门处理DMA的框架,叫做dmaengine,它的代码实现在drivers/dma/dmaengine.c。这个文件主要是提供一套DMA使用的抽象层。

linux驱动会调用这些接口来完成dma处理;但是有些外设自带dma处理,就不会走通用的dma控制器来处理dma请求

1、dma通道请求,dma_request_channel(), 可以向DMA框架申请一个DMA通道。一般是从设备树节点的dma属性里获取的;因为不同设备用的dma通道是不一样的;不同DMA控制器包含的通道号也不一样,用于通道号匹配DMA控制器

2、dma通道配置, dmaengine_slave_config(), 用户通过该函数,可以配置指定通道的参数,比如目的或者源地址,位宽,传输方向等。

3、dma通道预处理,dmaengine_prep_*(),这个预处理函数会比较多,这个API返回做好预处理的DMA TX结构。这个结构会准备好完备的目的地址和源地址来匹配一次传输

4、dma数据提交, dmaengine_submit(),主要是将DMA处理事务提交到dma通道处理链上,这个submit用的是第四步得到的DMA TX结构。

5、dma数据处理, dma_async_issue_pending(),用于启动一次事务处理。

struct dma_device

我们在实现一个dma控制器驱动的时候,其实最主要的就是将struct dma_device这个结构体填充好,下面是这个结构体的实现

struct dma_device {

unsigned int chancnt; // 通道个数

struct list_head channels; // 用于存放channels结构,所有的channel都会链接到这个链表上

struct list_head global_node; // 用于链接到dma_device_list链表

.......

struct device *dev;

u32 src_addr_widths; // 源地址位宽

u32 dst_addr_widths; // 目的地址位宽

u32 directions; // 支持的传输方向

.........

/* 申请channel回调,返回一个channel结构体,后面的回调都要用 */

int (*device_alloc_chan_resources)(struct dma_chan *chan);

/* 释放channel回调 */

void (*device_free_chan_resources)(struct dma_chan *chan);

/* channel预处理回调 */

struct dma_async_tx_descriptor *(*device_prep_dma_memcpy)(

struct dma_chan *chan, dma_addr_t dst, dma_addr_t src,

size_t len, unsigned long flags);

/*.......省略一堆类似的预处理回调函数...............*/

struct dma_async_tx_descriptor *(*device_prep_dma_interrupt)(

struct dma_chan *chan, unsigned long flags);

/* channel 配置回调 */

int (*device_config)(struct dma_chan *chan,

struct dma_slave_config *config);

/* channel 传输暂停回调 */

int (*device_pause)(struct dma_chan *chan);

/* channel 传输恢复回调 */

int (*device_resume)(struct dma_chan *chan);

/* channel传输终止回调 */

int (*device_terminate_all)(struct dma_chan *chan);

void (*device_synchronize)(struct dma_chan *chan);

/* 查看传输状态回调 */

enum dma_status (*device_tx_status)(struct dma_chan *chan,

dma_cookie_t cookie,

struct dma_tx_state *txstate);

/* 处理所有事物回调 */

void (*device_issue_pending)(struct dma_chan *chan);

}DMA控制器驱动注册

在控制器驱动填充好成员函数后,然后通过函数dma_async_device_register()将其挂接到dma_device_list对应的链表上;of_dma_controller_register将dma控制器和dts结点关联,方便以后通过dts来配置dma channel

static int mmp_pdma_probe(struct platform_device *op)

{

struct mmp_pdma_device *pdev;

const struct of_device_id *of_id;

struct mmp_dma_platdata *pdata = dev_get_platdata(&op->dev);

struct resource *iores;

int i, ret, irq = 0;

int dma_channels = 0, irq_num = 0;

const enum dma_slave_buswidth widths =

DMA_SLAVE_BUSWIDTH_1_BYTE | DMA_SLAVE_BUSWIDTH_2_BYTES |

DMA_SLAVE_BUSWIDTH_4_BYTES;

pdev = devm_kzalloc(&op->dev, sizeof(*pdev), GFP_KERNEL);

if (!pdev)

return -ENOMEM;

pdev->dev = &op->dev;

spin_lock_init(&pdev->phy_lock);

iores = platform_get_resource(op, IORESOURCE_MEM, 0);

pdev->base = devm_ioremap_resource(pdev->dev, iores);

if (IS_ERR(pdev->base))

return PTR_ERR(pdev->base);

of_id = of_match_device(mmp_pdma_dt_ids, pdev->dev);

if (of_id)

of_property_read_u32(pdev->dev->of_node, "#dma-channels",

&dma_channels);

else if (pdata && pdata->dma_channels)

dma_channels = pdata->dma_channels;

else

dma_channels = 32; /* default 32 channel */

pdev->dma_channels = dma_channels;

for (i = 0; i < dma_channels; i++) {

if (platform_get_irq(op, i) > 0)

irq_num++;

}

pdev->phy = devm_kcalloc(pdev->dev, dma_channels, sizeof(*pdev->phy),

GFP_KERNEL);

if (pdev->phy == NULL)

return -ENOMEM;

INIT_LIST_HEAD(&pdev->device.channels);

if (irq_num != dma_channels) {

/* all chan share one irq, demux inside */

irq = platform_get_irq(op, 0);

ret = devm_request_irq(pdev->dev, irq, mmp_pdma_int_handler,

IRQF_SHARED, "pdma", pdev);

if (ret)

return ret;

}

for (i = 0; i < dma_channels; i++) {

irq = (irq_num != dma_channels) ? 0 : platform_get_irq(op, i);

ret = mmp_pdma_chan_init(pdev, i, irq);

if (ret)

return ret;

}

dma_cap_set(DMA_SLAVE, pdev->device.cap_mask);

dma_cap_set(DMA_MEMCPY, pdev->device.cap_mask);

dma_cap_set(DMA_CYCLIC, pdev->device.cap_mask);

dma_cap_set(DMA_PRIVATE, pdev->device.cap_mask);

pdev->device.dev = &op->dev;

pdev->device.device_alloc_chan_resources = mmp_pdma_alloc_chan_resources;

pdev->device.device_free_chan_resources = mmp_pdma_free_chan_resources;

pdev->device.device_tx_status = mmp_pdma_tx_status;

pdev->device.device_prep_dma_memcpy = mmp_pdma_prep_memcpy;

pdev->device.device_prep_slave_sg = mmp_pdma_prep_slave_sg;

pdev->device.device_prep_dma_cyclic = mmp_pdma_prep_dma_cyclic;

pdev->device.device_issue_pending = mmp_pdma_issue_pending;

pdev->device.device_config = mmp_pdma_config;

pdev->device.device_terminate_all = mmp_pdma_terminate_all;

pdev->device.copy_align = DMAENGINE_ALIGN_8_BYTES;

pdev->device.src_addr_widths = widths;

pdev->device.dst_addr_widths = widths;

pdev->device.directions = BIT(DMA_MEM_TO_DEV) | BIT(DMA_DEV_TO_MEM);

pdev->device.residue_granularity = DMA_RESIDUE_GRANULARITY_DESCRIPTOR;

if (pdev->dev->coherent_dma_mask)

dma_set_mask(pdev->dev, pdev->dev->coherent_dma_mask);

else

dma_set_mask(pdev->dev, DMA_BIT_MASK(64));

ret = dma_async_device_register(&pdev->device);

if (ret) {

dev_err(pdev->device.dev, "unable to register\n");

return ret;

}

if (op->dev.of_node) {

/* Device-tree DMA controller registration */

ret = of_dma_controller_register(op->dev.of_node,

mmp_pdma_dma_xlate, pdev);

if (ret < 0) {

dev_err(&op->dev, "of_dma_controller_register failed\n");

return ret;

}

}

platform_set_drvdata(op, pdev);

dev_info(pdev->device.dev, "initialized %d channels\n", dma_channels);

return 0;

}预处理

驱动按上面的步骤1,2分配通道和传输信息后,通过通道找到dma设备,再调用设备的device_prep_*这个成员完整配置一次传输,就会调用到dma控制器的这个具体实现

主要是分配一个特定平台的传输描述符,其包含通用的软件传输描述符,和特定平台的硬件传输描述符----用于写入源地址和目标地址,来描述一次传输最重要的信息

static struct dma_async_tx_descriptor *

mmp_pdma_prep_memcpy(struct dma_chan *dchan,

dma_addr_t dma_dst, dma_addr_t dma_src,

size_t len, unsigned long flags)

{

struct mmp_pdma_chan *chan;

struct mmp_pdma_desc_sw *first = NULL, *prev = NULL, *new;

size_t copy = 0;

if (!dchan)

return NULL;

if (!len)

return NULL;

chan = to_mmp_pdma_chan(dchan);

chan->byte_align = false;

if (!chan->dir) {

chan->dir = DMA_MEM_TO_MEM;

chan->dcmd = DCMD_INCTRGADDR | DCMD_INCSRCADDR;

chan->dcmd |= DCMD_BURST32;

}

do {

/* Allocate the link descriptor from DMA pool */

new = mmp_pdma_alloc_descriptor(chan);

if (!new) {

dev_err(chan->dev, "no memory for desc\n");

goto fail;

}

copy = min_t(size_t, len, PDMA_MAX_DESC_BYTES);

if (dma_src & 0x7 || dma_dst & 0x7)

chan->byte_align = true;

new->desc.dcmd = chan->dcmd | (DCMD_LENGTH & copy);

new->desc.dsadr = dma_src;

new->desc.dtadr = dma_dst;

if (!first)

first = new;

else

prev->desc.ddadr = new->async_tx.phys;

new->async_tx.cookie = 0;

async_tx_ack(&new->async_tx);

prev = new;

len -= copy;

if (chan->dir == DMA_MEM_TO_DEV) {

dma_src += copy;

} else if (chan->dir == DMA_DEV_TO_MEM) {

dma_dst += copy;

} else if (chan->dir == DMA_MEM_TO_MEM) {

dma_src += copy;

dma_dst += copy;

}

/* Insert the link descriptor to the LD ring */

list_add_tail(&new->node, &first->tx_list);

} while (len);

first->async_tx.flags = flags; /* client is in control of this ack */

first->async_tx.cookie = -EBUSY;

/* last desc and fire IRQ */

new->desc.ddadr = DDADR_STOP;

new->desc.dcmd |= DCMD_ENDIRQEN;

chan->cyclic_first = NULL;

return &first->async_tx;

fail:

if (first)

mmp_pdma_free_desc_list(chan, &first->tx_list);

return NULL;

}将申请特定平台的传输描述符结构的的物理地址pdesc(首地址就是硬件传输描述符的基地址),传递给通用的软件传输描述符的phys成员

static struct mmp_pdma_desc_sw *

mmp_pdma_alloc_descriptor(struct mmp_pdma_chan *chan)

{

struct mmp_pdma_desc_sw *desc;

dma_addr_t pdesc;

desc = dma_pool_zalloc(chan->desc_pool, GFP_ATOMIC, &pdesc);

if (!desc) {

dev_err(chan->dev, "out of memory for link descriptor\n");

return NULL;

}

INIT_LIST_HEAD(&desc->tx_list);

dma_async_tx_descriptor_init(&desc->async_tx, &chan->chan);

/* each desc has submit */

desc->async_tx.tx_submit = mmp_pdma_tx_submit;

desc->async_tx.phys = pdesc;

return desc;

}

。。。。。

struct mmp_pdma_desc_hw {

u32 ddadr; /* Points to the next descriptor + flags */

u32 dsadr; /* DSADR value for the current transfer */

u32 dtadr; /* DTADR value for the current transfer */

u32 dcmd; /* DCMD value for the current transfer */

} __aligned(32);

struct mmp_pdma_desc_sw {

struct mmp_pdma_desc_hw desc;

struct list_head node;

struct list_head tx_list;

struct dma_async_tx_descriptor async_tx;

};硬件描述符

用于描述dma传输的描述符地址;源地址;目的地址;传输命令。跟上面代码一一对应

传输

mmp_pdma_issue_pending最终用set_desc(chan->phy, desc->async_tx.phys);将这个地址写入DMA控制器的相关寄存器,完成一次传输的物理配置,然后启动传输

static void mmp_pdma_issue_pending(struct dma_chan *dchan)

{

struct mmp_pdma_chan *chan = to_mmp_pdma_chan(dchan);

unsigned long flags;

spin_lock_irqsave(&chan->desc_lock, flags);

start_pending_queue(chan);

spin_unlock_irqrestore(&chan->desc_lock, flags);

}

static void start_pending_queue(struct mmp_pdma_chan *chan)

{

struct mmp_pdma_desc_sw *desc;

/* still in running, irq will start the pending list */

if (!chan->idle) {

dev_dbg(chan->dev, "DMA controller still busy\n");

return;

}

if (list_empty(&chan->chain_pending)) {

/* chance to re-fetch phy channel with higher prio */

mmp_pdma_free_phy(chan);

dev_dbg(chan->dev, "no pending list\n");

return;

}

if (!chan->phy) {

chan->phy = lookup_phy(chan);

if (!chan->phy) {

dev_dbg(chan->dev, "no free dma channel\n");

return;

}

}

/*

* pending -> running

* reintilize pending list

*/

desc = list_first_entry(&chan->chain_pending,

struct mmp_pdma_desc_sw, node);

list_splice_tail_init(&chan->chain_pending, &chan->chain_running);

/*

* Program the descriptor's address into the DMA controller,

* then start the DMA transaction

*/

set_desc(chan->phy, desc->async_tx.phys);

enable_chan(chan->phy);

chan->idle = false;

}

static void set_desc(struct mmp_pdma_phy *phy, dma_addr_t addr)

{

u32 reg = (phy->idx << 4) + DDADR;

writel(addr, phy->base + reg);

}

1582

1582

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?