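I. Setting up an RHCS cluster

1. Basic RHCS components

2. Lab environment

RHCS (Red Hat Cluster Suite) is the high-availability suite included with Red Hat Enterprise Linux 6.5, so this lab uses 6.5 systems

Two virtual machines: 172.25.4.121; 172.25.4.122

The firewall is turned off

SELinux is set to disabled

3. Configuring the full set of yum repositories for 6.5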

[root@server1 ~]# cd /etc/yum.repos.d

[root@server1 yum.repos.d]# ls

rhel-source.repo westos.repo

[root@server1 yum.repos.d]# vim westos.repo

[root@server1 yum.repos.d]# cat westos.repo

[rhel6.5]

name=rhel6.5

baseurl=http://172.25.4.250/redhat6.5

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[HighAvailability] ##high availability

name=HighAvailability

baseurl=http://172.25.4.250/redhat6.5/HighAvailability

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[LoadBalancer] ##load balancing

name=LoadBalancer

baseurl=http://172.25.4.250/redhat6.5/LoadBalancer

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[ResilientStorage] ##resilient storage

name=ResilientStorage

baseurl=http://172.25.4.250/redhat6.5/ResilientStorage

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[ScalableFileSystem] ##shared file system

name=ScalableFileSystem

baseurl=http://172.25.4.250/redhat6.5/ScalableFileSystem

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[root@server1 yum.repos.d]# yum repolist

Loaded plugins: product-id, subscription-manager

This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.

HighAvailability | 3.9 kB 00:00

HighAvailability/primary_db | 43 kB 00:00

LoadBalancer | 3.9 kB 00:00

LoadBalancer/primary_db | 7.0 kB 00:00

ResilientStorage | 3.9 kB 00:00

ResilientStorage/primary_db | 47 kB 00:00

ScalableFileSystem | 3.9 kB 00:00

ScalableFileSystem/primary_db | 6.8 kB 00:00

rhel6.5 | 3.9 kB 00:00

rhel6.5/primary_db | 3.1 MB 00:00

repo id repo name status

HighAvailability HighAvailability 56

LoadBalancer LoadBalancer 4

ResilientStorage ResilientStorage 62

ScalableFileSystem ScalableFileSystem 7

rhel6.5 rhel6.5 3,690

repolist: 3,819 ##all five repositories are configured correctly

Configure the same full set of yum repositories on server2
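The five stanzas in westos.repo differ only in their id and URL suffix, so the file can be generated instead of typed. The following is a minimal sketch, assuming the add-on channels are subdirectories of the base tree as in the listing above; the function names `repo_stanza` and `gen_repo` are made up for this illustration.

```shell
# Print one yum repository stanza. $1: repo id, $2: base URL of that repo.
repo_stanza() {
    printf '[%s]\nname=%s\nbaseurl=%s\nenabled=1\ngpgcheck=1\n' "$1" "$1" "$2"
    printf 'gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release\n\n'
}

# Print the base repo plus the four add-on channels.
# $1: URL of the installation tree (assumed layout: channels are subdirectories).
gen_repo() {
    repo_stanza rhel6.5 "$1"
    for ch in HighAvailability LoadBalancer ResilientStorage ScalableFileSystem; do
        repo_stanza "$ch" "$1/$ch"
    done
}

# Example (writes the same content as the file shown above):
#   gen_repo http://172.25.4.250/redhat6.5 > /etc/yum.repos.d/westos.repo
```

Running `yum repolist` afterwards should list the same five repo ids.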

[root@server1 yum.repos.d]# scp /etc/yum.repos.d/westos.repo root@172.25.4.122:/etc/yum.repos.d

4. Installing the software

(1)server1

[root@server1 yum.repos.d]# yum install -y ricci luci

[root@server1 yum.repos.d]# passwd ricci

Changing password for user ricci.

New password:

BAD PASSWORD: it is based on a dictionary word

BAD PASSWORD: is too simple

Retype new password:

passwd: all authentication tokens updated successfully.

[root@server1 yum.repos.d]# /etc/init.d/ricci start

[root@server1 yum.repos.d]# chkconfig ricci on

[root@server1 yum.repos.d]# chkconfig luci on

[root@server1 yum.repos.d]# /etc/init.d/luci start

[root@server1 yum.repos.d]# netstat -antlp

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:8084 0.0.0.0:* LISTEN 1311/python

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 879/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 955/master

tcp 0 0 172.25.4.121:22 172.25.4.250:58514 ESTABLISHED 1006/sshd

tcp 0 0 :::22 :::* LISTEN 879/sshd

tcp 0 0 ::1:25 :::* LISTEN 955/master

tcp 0 0 :::11111 :::* LISTEN 1228/ricci

(2)server2

[root@server2 ~]# yum install -y ricci

[root@server2 ~]# id ricci

uid=140(ricci) gid=140(ricci) groups=140(ricci)

[root@server2 ~]# passwd ricci

Changing password for user ricci.

New password:

BAD PASSWORD: it is based on a dictionary word

BAD PASSWORD: is too simple

Retype new password:

passwd: all authentication tokens updated successfully.

[root@server2 ~]# /etc/init.d/ricci start

[root@server2 ~]# chkconfig ricci on

5. Checking hostname resolution

Hostname resolution affects whether the cluster can be created from the web front end

[root@server1 yum.repos.d]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.25.4.121 server1

172.25.4.122 server2

172.25.4.123 server3

172.25.4.124 server4

172.25.4.125 server5

172.25.4.126 server6
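Since cluster creation depends on every node name resolving, it can help to check the hosts file before opening the web interface. This is a small sketch; `missing_hosts` is a hypothetical helper name, and it only inspects the file it is given (it does not query DNS).

```shell
# Report node names that have no entry in a hosts file.
# $1: hosts file; remaining args: node names.
# Prints each missing name and returns non-zero if any is missing.
missing_hosts() {
    hosts_file=$1; shift
    rc=0
    for node in "$@"; do
        # Skip comment lines; accept the name in any column after the address.
        if ! awk -v n="$node" '
                /^[[:space:]]*#/ { next }
                { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                END { exit found ? 0 : 1 }' "$hosts_file"; then
            echo "$node"
            rc=1
        fi
    done
    return $rc
}

# Example: missing_hosts /etc/hosts server1 server2
```

The check should be run on every node, because each node resolves its peers from its own /etc/hosts.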

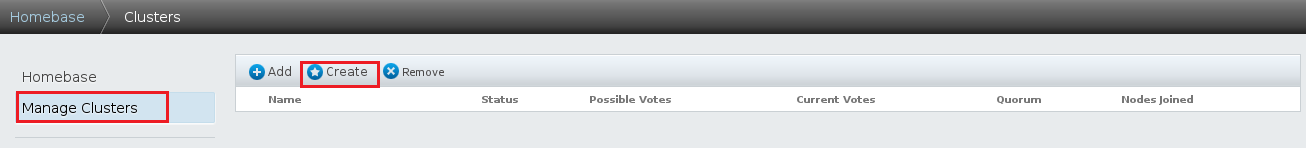

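6. Creating the cluster in the web front end

https://172.25.4.121:8084/

The first time you visit luci, you need to accept its SSL certificate

Log in

Create the cluster

Check whether the cluster was created successfully

[root@server1 ~]# clustat ##show node status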

Cluster Status for luck @ Thu Jun 20 21:45:32 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online, Local

server2 2 Online

[root@server2 ~]# clustat

Cluster Status for luck @ Thu Jun 20 21:45:40 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online

server2 2 Online, Local
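Reading `clustat` by eye works for two nodes; for scripted checks the member table can be filtered. The sketch below assumes the column layout shown in the output above (name, numeric ID, status); `not_online` is a hypothetical helper name.

```shell
# Read `clustat` output on stdin and print members whose status is not Online.
not_online() {
    awk '
        /^ *Member Name/  { in_members = 1; next }   # start of the member table
        /^ *Service Name/ { in_members = 0 }         # service table follows
        # Member rows have a numeric ID in column 2; the dashed rule does not.
        in_members && $2 ~ /^[0-9]+$/ && $3 !~ /^Online/ { print $1 }
    '
}

# Example: clustat | not_online
```

Empty output means every listed member reported Online at that moment.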

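II. Fence

1. A brief introduction to Fence

The core purpose of fencing is to keep a high-availability cluster running when something goes badly wrong. While the cluster is running, a node is sometimes detected as faulty, for example when the heartbeat link between two high-availability servers suddenly fails. In that case a typical high-availability cluster misjudges the peer as down because of the link failure, and the nodes start competing for resources. To solve this, the cluster must actively detect the problem node and remove it from the cluster so that the cluster keeps running stably. Fencing provides exactly this capability

A fence device prevents cluster resources (for example, a file system) from being held by more than one node at the same time, which protects the safety and consistency of the shared data

In RHCS, servers in the cluster may compete for resources and make the service unstable for clients; this is the split-brain problem. Fencing solves split-brain: in effect, each server in the cluster can cut the other's power, which stops the nodes from competing for resources

2. Configuring Fence

(1) Install the fence packages on the physical host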

[root@foundation4 images]# yum install -y fence-virtd.x86_64 fence-virtd-libvirt.x86_64 fence-virtd-multicast.x86_64

(2) Edit the fence configuration

[root@foundation4 images]# fence_virtd -c ##create a new fence configuration interactively

Module search path [/usr/lib64/fence-virt]:

Available backends:

libvirt 0.1

Available listeners:

multicast 1.2

Listener modules are responsible for accepting requests

from fencing clients.

Listener module [multicast]:

The multicast listener module is designed for use environments

where the guests and hosts may communicate over a network using

multicast.

The multicast address is the address that a client will use to

send fencing requests to fence_virtd.

Multicast IP Address [225.0.0.12]:

Using ipv4 as family.

Multicast IP Port [1229]:

Setting a preferred interface causes fence_virtd to listen only

on that interface. Normally, it listens on all interfaces.

In environments where the virtual machines are using the host

machine as a gateway, this *must* be set (typically to virbr0).

Set to 'none' for no interface.

Interface [virbr0]: br0

The key file is the shared key information which is used to

authenticate fencing requests. The contents of this file must

be distributed to each physical host and virtual machine within

a cluster.

Key File [/etc/cluster/fence_xvm.key]:

Backend modules are responsible for routing requests to

the appropriate hypervisor or management layer.

Backend module [libvirt]:

Configuration complete.

=== Begin Configuration ===

backends {

libvirt {

uri = "qemu:///system";

}

}

listeners {

multicast {

port = "1229";

family = "ipv4";

interface = "br0";

address = "225.0.0.12";

key_file = "/etc/cluster/fence_xvm.key";

}

}

fence_virtd {

module_path = "/usr/lib64/fence-virt";

backend = "libvirt";

listener = "multicast";

}

=== End Configuration ===

Replace /etc/fence_virt.conf with the above [y/N]? y

(3) Generating the fence key

[root@foundation4 images]# mkdir /etc/cluster

[root@foundation4 images]# cd /etc/cluster/

[root@foundation4 cluster]# pwd

/etc/cluster

[root@foundation4 cluster]# dd if=/dev/urandom of=/etc/cluster/fence_xvm.key bs=128 count=1

1+0 records in

1+0 records out

128 bytes (128 B) copied, 0.000233278 s, 549 kB/s

[root@foundation4 cluster]# ls

fence_xvm.key

[root@foundation4 cluster]# systemctl start fence_virtd.service ##start the fence daemon

(4) Send the key to the cluster servers and check that it arrived
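The `dd` command above creates the key with the default umask, which usually leaves it world-readable. A hedged variant that restricts the new file to its owner is sketched below; `make_fence_key` is a hypothetical name, and the 128-byte size simply mirrors the command used in this walkthrough.

```shell
# Create a 128-byte random key that only its owner can read.
# $1: key path (the walkthrough uses /etc/cluster/fence_xvm.key).
make_fence_key() {
    key=$1
    mkdir -p "$(dirname "$key")" || return 1
    # Subshell: the tighter umask applies to dd only, not to the caller.
    ( umask 077 && dd if=/dev/urandom of="$key" bs=128 count=1 2>/dev/null )
}

# Example: make_fence_key /etc/cluster/fence_xvm.key
```

Note that the umask only affects a newly created file; if the key already exists, its current mode is kept.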

[root@foundation4 cluster]# scp fence_xvm.key root@172.25.4.121:/etc/cluster/

[root@foundation4 cluster]# scp fence_xvm.key root@172.25.4.122:/etc/cluster/

[root@server1 ~]# cd /etc/cluster/

[root@server1 cluster]# ls

cluster.conf cman-notify.d fence_xvm.key

[root@server1 cluster]# cat fence_xvm.key

(binary key data, not printable)

[root@server2 ~]# cd /etc/cluster/

[root@server2 cluster]# ls

cluster.conf cman-notify.d fence_xvm.key

[root@server2 cluster]# cat fence_xvm.key

(binary key data, not printable)
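Because the key is binary, `cat` is a poor way to confirm that the copies match; comparing checksums is more reliable. A minimal sketch follows, using the POSIX `cksum` utility; `same_content` is a hypothetical helper name, and the example assumes root SSH access to both nodes.

```shell
# Succeed only if every listed file has the same checksum as the first one.
same_content() {
    ref=$(cksum < "$1") || return 1
    shift
    for f in "$@"; do
        [ "$(cksum < "$f")" = "$ref" ] || return 1
    done
}

# Example, run on the physical host after the two scp commands:
#   for h in 172.25.4.121 172.25.4.122; do
#       ssh root@$h cat /etc/cluster/fence_xvm.key > /tmp/key.$h
#   done
#   same_content /etc/cluster/fence_xvm.key /tmp/key.172.25.4.121 /tmp/key.172.25.4.122
```

A mismatched key makes fencing requests fail authentication, so this is worth checking before testing `fence_node`.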

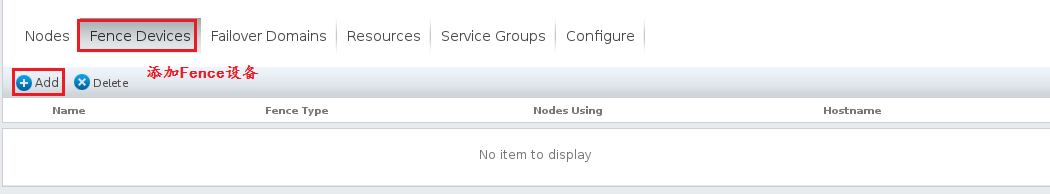

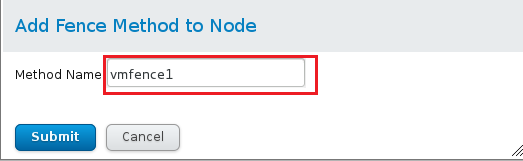

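3. Configuring Fence in the web interface

Add a fence device to both servers in the cluster

Add it to server2 in the same way

4. Testing the cluster servers

(1) Check the contents of the configuration file generated on both servers

If they are identical, the configuration was distributed successfully

File generated on server1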

[root@server1 cluster]# cat cluster.conf

<?xml version="1.0"?>

<cluster config_version="8" name="luck">

<clusternodes>

<clusternode name="server1" nodeid="1">

<fence>

<method name="vmfence1">

<device domain="e6048222-0bd8-4567-b2c1-e44a222100b8" name="vmfence"/>

</method>

</fence>

</clusternode>

<clusternode name="server2" nodeid="2">

<fence>

<method name="vmfence2">

<device domain="50f9ace2-d156-4b7b-801c-97c28e472249" name="vmfence"/>

</method>

</fence>

</clusternode>

</clusternodes>

<cman expected_votes="1" two_node="1"/>

<fencedevices>

<fencedevice agent="fence_xvm" name="vmfence"/>

</fencedevices>

</cluster>

File generated on server2

[root@server2 cluster]# cat cluster.conf

<?xml version="1.0"?>

<cluster config_version="8" name="luck">

<clusternodes>

<clusternode name="server1" nodeid="1">

<fence>

<method name="vmfence1">

<device domain="e6048222-0bd8-4567-b2c1-e44a222100b8" name="vmfence"/>

</method>

</fence>

</clusternode>

<clusternode name="server2" nodeid="2">

<fence>

<method name="vmfence2">

<device domain="50f9ace2-d156-4b7b-801c-97c28e472249" name="vmfence"/>

</method>

</fence>

</clusternode>

</clusternodes>

<cman expected_votes="1" two_node="1"/>

<fencedevices>

<fencedevice agent="fence_xvm" name="vmfence"/>

</fencedevices>

</cluster>
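Instead of comparing the two XML listings by eye, the `config_version` attribute can be pulled out on each node and compared. This is a sketch that relies on the attribute order shown in the files above; `conf_version` is a hypothetical helper name.

```shell
# Print the config_version attribute of a cluster.conf file.
# $1: path to the file (normally /etc/cluster/cluster.conf).
conf_version() {
    sed -n 's/.*<cluster [^>]*config_version="\([0-9][0-9]*\)".*/\1/p' "$1"
}

# Example: run on each node and compare the numbers.
#   conf_version /etc/cluster/cluster.conf
```

Matching version numbers are a quick hint that both nodes hold the same revision; a byte-for-byte comparison of the files is still the stricter check.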

(2) Simulating a failure

server1 fences server2

[root@server1 cluster]# fence_node server2

fence server2 success

The test succeeds if server2 reboots at this point

server2 fences server1

[root@server2 ~]# fence_node server1

fence server1 success

The test succeeds if server1 reboots at this point

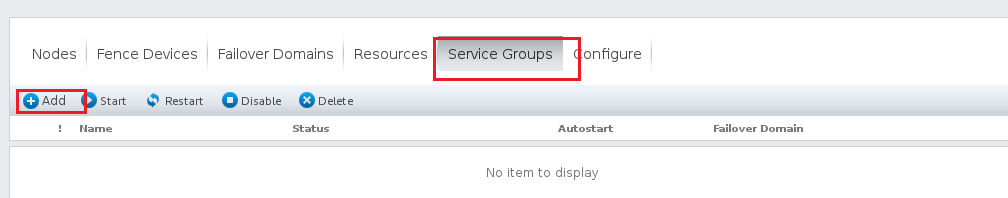

III. Failover with Fence, using the http service as an example

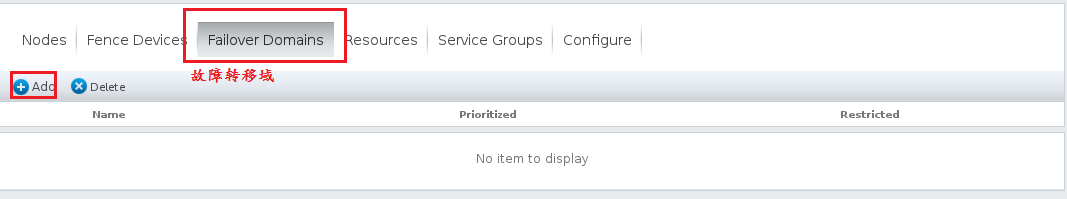

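1. Settings in the web front end

(1) Define a failover domain

(2) Add resources

Add the VIP

Add the Apache service

(3) Add a service group

2. Settings on the cluster servers

Install httpd but do not start it (the cluster will start it)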

[root@server1 ~]# yum install -y httpd

[root@server1 ~]# vim /var/www/html/index.html

[root@server1 ~]# cat /var/www/html/index.html

server1

[root@server1 ~]# clustat

Cluster Status for luck @ Thu Jun 20 22:49:50 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online, Local, rgmanager

server2 2 Online, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache (none) disabled

[root@server2 ~]# yum install -y httpd

[root@server2 ~]# vim /var/www/html/index.html

[root@server2 ~]# cat /var/www/html/index.html

server2

[root@server2 ~]# clustat

Cluster Status for luck @ Thu Jun 20 22:49:42 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online, rgmanager

server2 2 Online, Local, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache (none) disabled

3. Enable the httpd service in the web interface. Because server1 has the higher priority, server1 provides the service at this point

[root@server1 ~]# clustat

Cluster Status for luck @ Thu Jun 20 22:55:05 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online, Local, rgmanager

server2 2 Online, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache server1 started

Test from the physical host

[root@foundation4 cluster]# curl 172.25.4.200

server1

[root@foundation4 cluster]# curl 172.25.4.200

server1

[root@foundation4 cluster]# curl 172.25.4.200

server1

4. Failure simulations

(1) httpd on server1 is stopped manually: the server does not reboot

[root@server1 ~]# /etc/init.d/httpd stop

Stopping httpd: [ OK ]

Cluster status changes at this point

[root@server2 ~]# clustat

Cluster Status for luck @ Thu Jun 20 22:57:47 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online, rgmanager

server2 2 Online, Local, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache (none) recoverable

[root@server2 ~]# clustat

Cluster Status for luck @ Thu Jun 20 22:57:52 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online, rgmanager

server2 2 Online, Local, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache server2 starting

Test from the physical host

[root@foundation4 cluster]# curl 172.25.4.200

server2

[root@foundation4 cluster]# curl 172.25.4.200

server2

[root@foundation4 cluster]# curl 172.25.4.200

server2

(2) Kernel crash on server2: the server reboots

[root@server2 ~]# echo c>/proc/sysrq-trigger

Write failed: Broken pipe

Cluster status changes at this point

[root@server1 ~]# clustat

Cluster Status for luck @ Thu Jun 20 23:03:23 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online, Local, rgmanager

server2 2 Online, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache server2 started

[root@server1 ~]# clustat

Cluster Status for luck @ Thu Jun 20 23:03:35 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online, Local, rgmanager

server2 2 Offline

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache server1 started

[root@server1 ~]# ip addr show eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 52:54:00:b5:81:e7 brd ff:ff:ff:ff:ff:ff

inet 172.25.4.121/24 brd 172.25.4.255 scope global eth0

inet 172.25.4.200/24 scope global secondary eth0

inet6 fe80::5054:ff:feb5:81e7/64 scope link

valid_lft forever preferred_lft forever

Test from the physical host

[root@foundation4 cluster]# curl 172.25.4.200

server1

[root@foundation4 cluster]# curl 172.25.4.200

server1

[root@foundation4 cluster]# curl 172.25.4.200

server1

(3) Network outage on server1

[root@server1 ~]# /etc/init.d/network stop

Shutting down interface eth0:

Cluster status changes at this point

[root@server2 ~]# clustat

Cluster Status for luck @ Thu Jun 20 23:08:57 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Offline

server2 2 Online, Local, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache server1 started

[root@server2 ~]# clustat

Cluster Status for luck @ Thu Jun 20 23:08:58 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Offline

server2 2 Online, Local, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache server2 starting

[root@server2 ~]# clustat

Cluster Status for luck @ Thu Jun 20 23:09:55 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online, rgmanager

server2 2 Online, Local, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache server2 started

Test from the physical host

[root@foundation4 cluster]# curl 172.25.4.200

server2

[root@foundation4 cluster]# curl 172.25.4.200

server2

[root@foundation4 cluster]# curl 172.25.4.200

server2

[root@foundation4 cluster]# curl 172.25.4.200

server2
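The repeated `curl` calls used throughout this section can be wrapped in a small loop that tallies which node answered. The sketch below takes the fetch command as arguments so it can be tried without a cluster; `tally_responses` is a hypothetical name.

```shell
# Run a fetch command N times and print "<response> <count>" per distinct response.
# $1: number of requests; remaining args: the command to run for each request.
tally_responses() {
    n=$1; shift
    i=0
    while [ "$i" -lt "$n" ]; do
        "$@" || echo "(no response)"
        i=$((i + 1))
    done | sort | uniq -c | awk '{ c = $1; $1 = ""; sub(/^ /, ""); print $0, c }'
}

# Example against the VIP used in this walkthrough:
#   tally_responses 10 curl -s --max-time 2 http://172.25.4.200
```

During a failover, a mix of one node's page, "(no response)" lines, and then the other node's page shows roughly how long the switch took.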

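IV. Sharing resources with a local file system

Lab environment: three virtual machines

Two of them are the cluster servers; the third serves the shared disk

1. Configuring the shared-disk server

(1) Add one disk

[root@server3 ~]# fdisk -l ##confirm that the disk was added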

Disk /dev/vda: 21.5 GB, 21474836480 bytes

16 heads, 63 sectors/track, 41610 cylinders

Units = cylinders of 1008 * 512 = 516096 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00000000

(2) Install the disk-sharing (iSCSI target) software

[root@server3 ~]# yum install -y scsi-*

(3) Specify the disk to share

[root@server3 ~]# vim /etc/tgt/targets.conf

<target iqn.2019-06.com.example:server.target1>

backing-store /dev/vda ##the disk to be shared

</target>
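The stanza above exports the disk to any initiator (the later `tgt-admin -s` output shows `ACL information: ALL`). A possible tightening, sketched here as an assumption rather than something the original setup did, is to add `initiator-address` lines for the two cluster nodes; `target_stanza` is a hypothetical helper name.

```shell
# Print a targets.conf stanza that exports one device to selected initiators.
# $1: target IQN; $2: backing device; remaining args: allowed initiator IPs.
target_stanza() {
    iqn=$1; dev=$2; shift 2
    printf '<target %s>\n' "$iqn"
    printf '    backing-store %s\n' "$dev"
    for ip in "$@"; do
        printf '    initiator-address %s\n' "$ip"
    done
    printf '</target>\n'
}

# Example (appends a stanza limited to server1 and server2):
#   target_stanza iqn.2019-06.com.example:server.target1 /dev/vda \
#       172.25.4.121 172.25.4.122 >> /etc/tgt/targets.conf
```

With no IP arguments the function prints the same open stanza as the one in this walkthrough.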

(4) Start the service

[root@server3 ~]# /etc/init.d/tgtd start ##start the service

Starting SCSI target daemon: [ OK ]

[root@server3 ~]# tgt-admin -s

Account information:

ACL information:

ALL

[root@server3 ~]# ps ax ##check the processes; two tgtd processes means it is working

PID TTY STAT TIME COMMAND

1036 ? Ssl 0:00 tgtd

1039 ? S 0:00 tgtd

1070 pts/0 R+ 0:00 ps ax

2. Settings on server1 and server2 in the cluster

(1) server1

[root@server1 ~]# /etc/init.d/httpd status

httpd is stopped

[root@server1 ~]# yum install iscsi-* -y

[root@server1 ~]# iscsiadm -m discovery -t st -p 172.25.4.123 ##discover the shared disk

Starting iscsid: [ OK ]

172.25.4.123:3260,1 iqn.2019-06.com.example:server.target1

[root@server1 ~]# cat /proc/partitions

major minor #blocks name

8 0 20971520 sda

8 1 512000 sda1

8 2 20458496 sda2

253 0 19439616 dm-0

253 1 1015808 dm-1

[root@server1 ~]# iscsiadm -m node -l ##log in to the target to attach the shared disk

Logging in to [iface: default, target: iqn.2019-06.com.example:server.target1, portal: 172.25.4.123,3260] (multiple)

Login to [iface: default, target: iqn.2019-06.com.example:server.target1, portal: 172.25.4.123,3260] successful.

[root@server1 ~]# cat /proc/partitions

major minor #blocks name

8 0 20971520 sda

8 1 512000 sda1

8 2 20458496 sda2

253 0 19439616 dm-0

253 1 1015808 dm-1

8 16 20971520 sdb
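The two `/proc/partitions` listings above differ by one line (`sdb`). Spotting the new device can be automated by comparing a snapshot taken before the iSCSI login with one taken after; `new_devices` below is a hypothetical helper name, and the snapshot paths in the example are arbitrary.

```shell
# Print device names present in the second /proc/partitions snapshot
# but not in the first. $1: snapshot before, $2: snapshot after.
new_devices() {
    awk 'FILENAME == ARGV[1] { seen[$4] = 1; next }
         NF == 4 && $4 != "name" && !($4 in seen) { print $4 }' "$1" "$2"
}

# Example:
#   cat /proc/partitions > /tmp/before
#   iscsiadm -m node -l
#   cat /proc/partitions > /tmp/after
#   new_devices /tmp/before /tmp/after
```

This avoids assuming the disk will always be named `sdb`, which depends on how many disks the node already has.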

[root@server1 ~]# fdisk -cu /dev/sdb ##partition the shared disk and set the partition type to Linux LVM

Disk identifier: 0x2711f132

Device Boot Start End Blocks Id System

/dev/sdb1 2048 41943039 20970496 8e Linux LVM

[root@server1 ~]# partprobe

[root@server1 ~]# pvcreate /dev/sdb1 ##create a physical volume on the shared partition

[root@server1 ~]# vgcreate vg0 /dev/sdb1 ##create a volume group

[root@server1 ~]# lvcreate -L 4G -n lv0 vg0 ##create a logical volume in the volume group

[root@server1 ~]# pvs

PV VG Fmt Attr PSize PFree

/dev/sda2 VolGroup lvm2 a-- 19.51g 0

/dev/sdb1 vg0 lvm2 a-- 20.00g 20.00g

[root@server1 ~]# vgs

VG #PV #LV #SN Attr VSize VFree

VolGroup 1 2 0 wz--n- 19.51g 0

vg0 1 0 0 wz--nc 20.00g 20.00g

[root@server1 ~]# lvs

LV VG Attr LSize Pool Origin Data% Move Log Cpy%Sync Convert

lv_root VolGroup -wi-ao---- 18.54g

lv_swap VolGroup -wi-ao---- 992.00m

lv0 vg0 -wi-a----- 4.00g

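[root@server1 ~]# mkfs.ext4 /dev/vg0/lv0 ##format the logical volume as ext4

[root@server1 ~]# clusvcadm -d apache ##gracefully disable the apache service in the cluster

Local machine disabling service:apache...Success

(2) server2

For the shared disk to be synchronized, the cluster services must be started first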

[root@server2 ~]# yum install iscsi-* -y

[root@server2 ~]# iscsiadm -m discovery -t st -p 172.25.4.123

[root@server2 ~]# iscsiadm -m node -l

[root@server2 ~]# cat /proc/partitions

major minor #blocks name

8 0 20971520 sda

8 1 512000 sda1

8 2 20458496 sda2

253 0 19439616 dm-0

253 1 1015808 dm-1

8 16 20971520 sdb

8 17 20970496 sdb1

[root@server2 ~]# pvs ##the volumes created on server1 are visible here

PV VG Fmt Attr PSize PFree

/dev/sda2 VolGroup lvm2 a-- 19.51g 0

/dev/sdb1 vg0 lvm2 a-- 20.00g 20.00g

[root@server2 ~]# vgs

VG #PV #LV #SN Attr VSize VFree

VolGroup 1 2 0 wz--n- 19.51g 0

vg0 1 0 0 wz--nc 20.00g 20.00g

[root@server2 ~]# lvs

LV VG Attr LSize Pool Origin Data% Move Log Cpy%Sync Convert

lv_root VolGroup -wi-ao---- 18.54g

lv_swap VolGroup -wi-ao---- 992.00m

lv0 vg0 -wi-a----- 4.00g

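If both nodes mount the shared disk at the same time at this point, the data is not shared between them, because ext4 is a local file system

3. Web interface settings

Whichever cluster server runs the service is the one that mounts the shared disk, which is how the data is shared

(1) Add the resource

(2) Re-add the service group

Enable apache in the web interface

4. Testing from the physical host

(1) server1 has the higher priority, so Apache runs on server1 at this point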

[root@foundation4 cluster]# curl 172.25.4.200

hahaha~~

[root@foundation4 cluster]# curl 172.25.4.200

hahaha~~

[root@foundation4 cluster]# curl 172.25.4.200

hahaha~~

Cluster status

[root@server1 ~]# clustat

Cluster Status for luck @ Fri Jun 21 00:24:32 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online, Local, rgmanager

server2 2 Online, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache server1 started

[root@server1 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1108020 17054332 7% /

tmpfs 510200 25656 484544 6% /dev/shm

/dev/sda1 495844 33476 436768 8% /boot

/dev/mapper/vg0-lv0 4128448 139256 3779480 4% /var/www/html

(2) httpd on server1 is stopped

Cluster status changes

[root@server2 ~]# clustat

Cluster Status for luck @ Fri Jun 21 00:28:35 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online, rgmanager

server2 2 Online, Local, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache server1 started

[root@server2 ~]# clustat

Cluster Status for luck @ Fri Jun 21 00:28:37 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online, rgmanager

server2 2 Online, Local, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache (server2) recoverable

[root@server2 ~]# clustat

Cluster Status for luck @ Fri Jun 21 00:29:18 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online

server2 2 Online, Local, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache server2 started

Test from the physical host

[root@foundation4 cluster]# curl 172.25.4.200

hahaha~~

[root@foundation4 cluster]# curl 172.25.4.200

hahaha~~

[root@foundation4 cluster]# curl 172.25.4.200

hahaha~~

[root@foundation4 cluster]# curl 172.25.4.200

hahaha~~

(3) Kernel crash on server2

Cluster status and mounts at this point

The cluster switches nodes quickly and moves the service to server1

[root@server1 ~]# clustat

Cluster Status for luck @ Fri Jun 21 00:38:21 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online, Local, rgmanager

server2 2 Online

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache server1 started

[root@server1 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1105968 17056384 7% /

tmpfs 510200 25656 484544 6% /dev/shm

/dev/sda1 495844 33476 436768 8% /boot

/dev/mapper/vg0-lv0 4128448 139260 3779476 4% /var/www/html

Test from the physical host

[root@foundation4 cluster]# curl 172.25.4.200

hahaha~~

[root@foundation4 cluster]# curl 172.25.4.200

hahaha~~

[root@foundation4 cluster]# curl 172.25.4.200

hahaha~~

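V. Sharing resources with a shared file system

1. In the web interface, remove the local file system resource from the service group

2. Gracefully disable the service in the cluster

[root@server1 ~]# clusvcadm -d apache

Local machine disabling service:apache...Success

3. Settings on the cluster servers

(1)server1

[root@server1 ~]# mkfs.gfs2 -p lock_dlm -j 2 -t luck:mygfs2 /dev/vg0/lv0 ##format the volume as gfs2

[root@server1 ~]# mount /dev/vg0/lv0 /mnt ##mount it on /mnt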

[root@server1 ~]# cd /mnt

[root@server1 mnt]# ls

[root@server1 mnt]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1106044 17056308 7% /

tmpfs 510200 31816 478384 7% /dev/shm

/dev/sda1 495844 33476 436768 8% /boot

/dev/mapper/vg0-lv0 4193856 264776 3929080 7% /mnt

[root@server1 mnt]# cp /etc/passwd . ##copy a file into /mnt

[root@server1 mnt]# ls

passwd
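In the `mkfs.gfs2` command, `-j 2` creates one journal per node that will mount the file system, and the `-t` value has the form `clustername:fsname`, where the cluster name has to match the `name` attribute in cluster.conf (`luck` here). The sketch below derives that string from the configuration file instead of typing it; `lock_table` is a hypothetical helper name.

```shell
# Build the "cluster:fsname" lock table string from a cluster.conf file.
# $1: path to cluster.conf; $2: file system name (mygfs2 in this walkthrough).
lock_table() {
    cluster=$(sed -n 's/.*<cluster [^>]*name="\([^"]*\)".*/\1/p' "$1")
    [ -n "$cluster" ] || return 1
    printf '%s:%s\n' "$cluster" "$2"
}

# Example:
#   mkfs.gfs2 -p lock_dlm -j 2 \
#       -t "$(lock_table /etc/cluster/cluster.conf mygfs2)" /dev/vg0/lv0
```

Deriving the name this way guards against a typo in the cluster name, which would otherwise only surface when the mount is attempted.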

(2)server2

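[root@server2 ~]# mkfs.gfs2 -p lock_dlm -j 2 -t luck:mygfs2 /dev/vg0/lv0 ##not needed once server1 has formatted the volume; running it again recreates the file system and erases its contents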

[root@server2 ~]# cd /mnt

[root@server2 mnt]# ls

[root@server2 mnt]# mount /dev/vg0/lv0 /mnt

[root@server2 mnt]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 1048804 17113548 6% /

tmpfs 510200 31816 478384 7% /dev/shm

/dev/sda1 495844 33476 436768 8% /boot

/dev/mapper/vg0-lv0 4193856 264776 3929080 7% /mnt

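[root@server2 mnt]# ls ##because this is a shared file system, files created on server1 are also visible on server2

passwd

After the file is deleted on server1, it is no longer visible on server2 either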

[root@server1 mnt]# rm -fr passwd

[root@server1 mnt]# ls

[root@server2 mnt]# ls

(3) Mount the volume permanently on the shared directory

server1

[root@server1 ~]# umount /mnt

[root@server1 ~]# vim /etc/fstab

[root@server1 ~]# cat /etc/fstab

/dev/vg0/lv0 /var/www/html gfs2 _netdev 0 0

server2

[root@server2 ~]# umount /mnt

[root@server2 ~]# vim /etc/fstab

[root@server2 ~]# cat /etc/fstab

/dev/vg0/lv0 /var/www/html gfs2 _netdev 0 0
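Both nodes need the same fstab entry, and the `_netdev` option matters because the device only appears after the network and iSCSI are up. A quick check of the entry can be sketched as follows; `has_gfs2_netdev` is a hypothetical helper name.

```shell
# Succeed if the fstab file has a gfs2 entry for the mount point with _netdev set.
# $1: fstab path; $2: mount point.
has_gfs2_netdev() {
    awk -v mp="$2" '
        /^[[:space:]]*#/ { next }
        # Wrap the option list in commas so _netdev matches only as a whole option.
        $2 == mp && $3 == "gfs2" && ("," $4 ",") ~ /,_netdev,/ { ok = 1 }
        END { exit ok ? 0 : 1 }' "$1"
}

# Example: has_gfs2_netdev /etc/fstab /var/www/html && echo "fstab entry looks fine"
```

Running it on server1 and server2 confirms that neither node was missed.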

Start the cluster service from the web interface

[root@server1 ~]# clustat

Cluster Status for luck @ Fri Jun 21 01:21:38 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online, Local, rgmanager

server2 2 Online, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache server1 started

[root@server1 ~]# vim /var/www/html/index.html

[root@server1 ~]# cat /var/www/html/index.html

hello lucky

Testing from the physical host shows the shared content

[root@foundation4 cluster]# curl 172.25.4.200

hello lucky

[root@foundation4 cluster]# curl 172.25.4.200

hello lucky

[root@foundation4 cluster]# curl 172.25.4.200

hello lucky

Stop httpd on server1

[root@server1 ~]# /etc/init.d/httpd stop

Stopping httpd: [ OK ]

Cluster status changes at this point

[root@server2 ~]# clustat

Cluster Status for luck @ Fri Jun 21 01:34:42 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online, rgmanager

server2 2 Online, Local, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache server1 started

[root@server2 ~]# clustat

Cluster Status for luck @ Fri Jun 21 01:35:10 2019

Member Status: Quorate

Member Name ID Status

------ ---- ---- ------

server1 1 Online, rgmanager

server2 2 Online, Local, rgmanager

Service Name Owner (Last) State

------- ---- ----- ------ -----

service:apache server2 started

Test from the physical host

The shared content is still visible

[root@foundation4 cluster]# curl 172.25.4.200

hello lucky

[root@foundation4 cluster]# curl 172.25.4.200

hello lucky

[root@foundation4 cluster]# curl 172.25.4.200

hello lucky
