Flink HBaseLookupFunction

Flink version: 1.12.2

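The Flink source code contains an `HBaseLookupFunction` class. I recently wanted to join a Kafka stream with an HBase dimension table in real time, and to see whether `HBaseLookupFunction` could be used for that. So I looked into it a little: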

1. Flink source code:

HBaseLookupFunction

```java
package org.apache.flink.connector.hbase.source;

import org.apache.flink.annotation.Internal;
import org.apache.flink.annotation.VisibleForTesting;
import org.apache.flink.api.common.typeinfo.TypeInformation;
import org.apache.flink.connector.hbase.util.HBaseConfigurationUtil;
import org.apache.flink.connector.hbase.util.HBaseReadWriteHelper;
import org.apache.flink.connector.hbase.util.HBaseTableSchema;
import org.apache.flink.table.functions.FunctionContext;
import org.apache.flink.table.functions.TableFunction;
import org.apache.flink.types.Row;
import org.apache.flink.util.StringUtils;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HConstants;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.TableNotFoundException;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.Result;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import java.io.IOException;

/**
 * The HBaseLookupFunction is a standard user-defined table function, it can be used in tableAPI and
 * also useful for temporal table join plan in SQL. It looks up the result as {@link Row}.
 */
@Internal
public class HBaseLookupFunction extends TableFunction<Row> {
    private static final Logger LOG = LoggerFactory.getLogger(HBaseLookupFunction.class);
    private static final long serialVersionUID = 1L;

    private final String hTableName;
    private final byte[] serializedConfig;
    private final HBaseTableSchema hbaseTableSchema;

    private transient HBaseReadWriteHelper readHelper;
    private transient Connection hConnection;
    private transient HTable table;

    public HBaseLookupFunction(
            Configuration configuration, String hTableName, HBaseTableSchema hbaseTableSchema) {
        this.serializedConfig = HBaseConfigurationUtil.serializeConfiguration(configuration);
        this.hTableName = hTableName;
        this.hbaseTableSchema = hbaseTableSchema;
    }

    /**
     * The invoke entry point of lookup function.
     *
     * @param rowKey the lookup key. Currently only support single rowkey.
     */
    public void eval(Object rowKey) throws IOException {
        // fetch result
        Result result = table.get(readHelper.createGet(rowKey));
        if (!result.isEmpty()) {
            // parse and collect
            collect(readHelper.parseToRow(result, rowKey));
        }
    }

    @Override
    public TypeInformation<Row> getResultType() {
        return hbaseTableSchema.convertsToTableSchema().toRowType();
    }

    private org.apache.hadoop.conf.Configuration prepareRuntimeConfiguration() {
        // create default configuration from current runtime env (`hbase-site.xml` in classpath)
        // first,
        // and overwrite configuration using serialized configuration from client-side env
        // (`hbase-site.xml` in classpath).
        // user params from client-side have the highest priority
        org.apache.hadoop.conf.Configuration runtimeConfig =
                HBaseConfigurationUtil.deserializeConfiguration(
                        serializedConfig, HBaseConfigurationUtil.getHBaseConfiguration());

        // do validation: check key option(s) in final runtime configuration
        if (StringUtils.isNullOrWhitespaceOnly(runtimeConfig.get(HConstants.ZOOKEEPER_QUORUM))) {
            LOG.error(
                    "can not connect to HBase without {} configuration",
                    HConstants.ZOOKEEPER_QUORUM);
            throw new IllegalArgumentException(
                    "check HBase configuration failed, lost: '"
                            + HConstants.ZOOKEEPER_QUORUM
                            + "'!");
        }

        return runtimeConfig;
    }

    @Override
    public void open(FunctionContext context) {
        LOG.info("start open ...");
        org.apache.hadoop.conf.Configuration config = prepareRuntimeConfiguration();
        try {
            hConnection = ConnectionFactory.createConnection(config);
            table = (HTable) hConnection.getTable(TableName.valueOf(hTableName));
        } catch (TableNotFoundException tnfe) {
            LOG.error("Table '{}' not found ", hTableName, tnfe);
            throw new RuntimeException("HBase table '" + hTableName + "' not found.", tnfe);
        } catch (IOException ioe) {
            LOG.error("Exception while creating connection to HBase.", ioe);
            throw new RuntimeException("Cannot create connection to HBase.", ioe);
        }
        this.readHelper = new HBaseReadWriteHelper(hbaseTableSchema);
        LOG.info("end open.");
    }

    @Override
    public void close() {
        LOG.info("start close ...");
        if (null != table) {
            try {
                table.close();
                table = null;
            } catch (IOException e) {
                // ignore exception when close.
                LOG.warn("exception when close table", e);
            }
        }
        if (null != hConnection) {
            try {
                hConnection.close();
                hConnection = null;
            } catch (IOException e) {
                // ignore exception when close.
                LOG.warn("exception when close connection", e);
            }
        }
        LOG.info("end close.");
    }

    @VisibleForTesting
    public String getHTableName() {
        return hTableName;
    }
}
```

2. Simplified version:

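My first test used the source class before I understood the code well, and its `Row` return type caused an error. So I wrote a simplified function based on the logic of HBaseLookupFunction that returns a `String` instead. The code is as follows: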

MyHbaseLookupFuncation

```java
// Declared as a static nested class of HbaseLookupFuncationDemo (imports are listed in the final code below).
public static class MyHbaseLookupFuncation extends TableFunction<String> {

    private static final Logger LOG = LoggerFactory.getLogger(MyHbaseLookupFuncation.class);

    private final String hTableName;

    // HBase client objects are not serializable: keep them transient and create them in open().
    private transient Connection hConnection;
    private transient HTable table;

    public MyHbaseLookupFuncation(String hTableName) {
        this.hTableName = hTableName;
    }

    // Return type changed from Row to String
    public void eval(Object rowKey) throws IOException {
        // The rowkey is looked up as the UTF-8 bytes of the key's string form
        Result result = table.get(new Get(Bytes.toBytes(String.valueOf(rowKey))));
        if (!result.isEmpty()) {
            collect(parseColumn(result));
        }
    }

    public String parseColumn(Result result) {
        StringBuilder sb = new StringBuilder();
        // rowkey
        String rowkey = Bytes.toString(result.getRow());
        sb.append(rowkey).append(",");
        // Result.raw() and KeyValue.getFamily()/getQualifier() are deprecated in HBase 1.x and
        // no longer available in HBase 2.x; there, iterate result.rawCells() and use CellUtil instead.
        KeyValue[] kvs = result.raw();
        for (KeyValue kv : kvs) {
            // column family
            String family = Bytes.toString(kv.getFamily());
            // column qualifier
            String qualifier = Bytes.toString(kv.getQualifier());
            String value = Bytes.toString(result.getValue(Bytes.toBytes(family), Bytes.toBytes(qualifier)));
            sb.append(qualifier).append(":").append(value).append(" , ");
        }
        return sb.toString();
    }

    @Override
    public void open(FunctionContext context) {
        LOG.info("start open ...");
        try {
            // ZooKeeper quorum is hard-coded for this test
            Configuration configuration = new Configuration();
            configuration.set("hbase.zookeeper.quorum", "192.168.0.115");
            hConnection = ConnectionFactory.createConnection(configuration);
            table = (HTable) hConnection.getTable(TableName.valueOf(hTableName));
        } catch (TableNotFoundException tnfe) {
            LOG.error("Table '{}' not found ", hTableName, tnfe);
            throw new RuntimeException("HBase table '" + hTableName + "' not found.", tnfe);
        } catch (IOException ioe) {
            LOG.error("Exception while creating connection to HBase.", ioe);
            throw new RuntimeException("Cannot create connection to HBase.", ioe);
        }
        LOG.info("end open.");
    }

    @Override
    public void close() {
        LOG.info("start close ...");
        if (null != table) {
            try {
                table.close();
                table = null;
            } catch (IOException e) {
                // ignore exception when close.
                LOG.warn("exception when close table", e);
            }
        }
        if (null != hConnection) {
            try {
                hConnection.close();
                hConnection = null;
            } catch (IOException e) {
                // ignore exception when close.
                LOG.warn("exception when close connection", e);
            }
        }
        LOG.info("end close.");
    }
}
```

Final code:

1. Main

```java
package cn.xhjava.flink.table.connector;

import cn.xhjava.domain.Student2;
import org.apache.flink.api.common.functions.MapFunction;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.table.api.Table;
import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;
import org.apache.flink.table.functions.FunctionContext;
import org.apache.flink.table.functions.TableFunction;
import org.apache.flink.types.Row;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.KeyValue;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.TableNotFoundException;
import org.apache.hadoop.hbase.client.*;
import org.apache.hadoop.hbase.util.Bytes;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import java.io.IOException;

/**
 * @author Xiahu
 * @create 2021/4/6
 */
public class HbaseLookupFuncationDemo {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);

        DataStreamSource<String> sourceStream = env.readTextFile("student");
        DataStream<Student2> map = sourceStream.map(new MapFunction<String, Student2>() {
            @Override
            public Student2 map(String s) throws Exception {
                String[] fields = s.split(",");
                return new Student2(Integer.valueOf(fields[0]), fields[1], fields[2]);
            }
        });

        Table student = tableEnv.fromDataStream(map, "id,name,sex");
        tableEnv.createTemporaryView("student", student);

        // Instantiate the modified function from above
        MyHbaseLookupFuncation baseLookupFunction = new MyHbaseLookupFuncation("test:hbase_user_behavior");
        // Register the function
        tableEnv.registerFunction("hbaseLookup", baseLookupFunction);
        System.out.println("函数注册成功~~~"); // "Function registered successfully~~~"

        Table table = tableEnv.sqlQuery(
                "select id,name,sex,info from student,LATERAL TABLE(hbaseLookup(id)) as T(info)");
        tableEnv.toAppendStream(table, Row.class).print();

        env.execute();
    }

    // The static nested class MyHbaseLookupFuncation from the previous section is declared here.
}
```

2. `student` file (男 = male, 女 = female)

```text
1,mike,男
2,jimmy,女
3,jerry,女
```

3. Student2.java

```java
public class Student2 {
    private Integer id;
    private String name;
    private String sex;

    public Student2() {
    }

    public Student2(Integer id, String name, String sex) {
        this.id = id;
        this.name = name;
        this.sex = sex;
    }

    public Integer getId() {
        return id;
    }

    public void setId(Integer id) {
        this.id = id;
    }

    public String getName() {
        return name;
    }

    public void setName(String name) {
        this.name = name;
    }

    public String getSex() {
        return sex;
    }

    public void setSex(String sex) {
        this.sex = sex;
    }
}
```

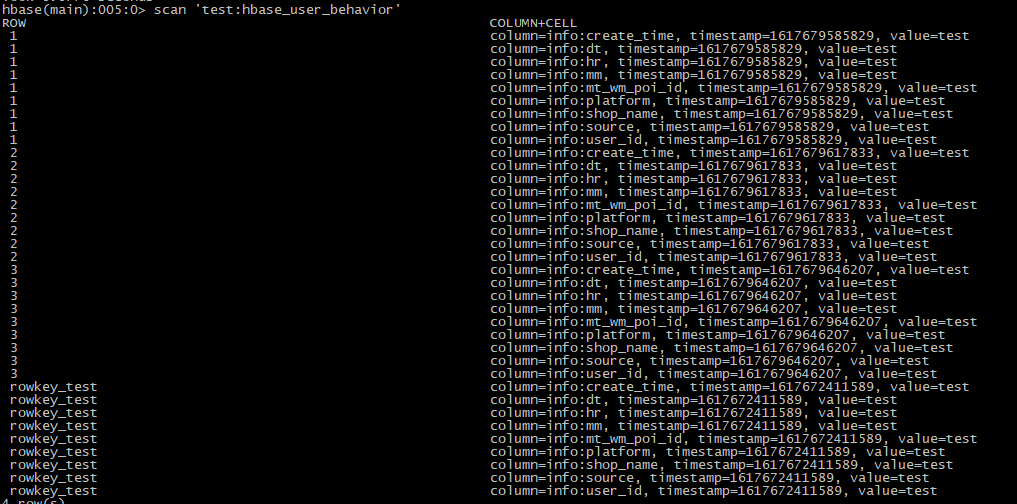

Data in the HBase table

Execution result:

```text
14:52:07,647 INFO org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper - Process identifier=hconnection-0x346643ca connecting to ZooKeeper ensemble=192.168.0.115:2181
14:52:07,647 INFO org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper - Process identifier=hconnection-0x3c6b1620 connecting to ZooKeeper ensemble=192.168.0.115:2181
14:52:07,647 INFO org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper - Process identifier=hconnection-0x8dc76f connecting to ZooKeeper ensemble=192.168.0.115:2181
14:52:07,647 INFO org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper - Process identifier=hconnection-0x23388e54 connecting to ZooKeeper ensemble=192.168.0.115:2181
14:52:10,274 INFO org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation - Closing zookeeper sessionid=0x1781a191e824dc6
2> 2,jimmy,女,2,create_time,test,dt,test,hr,test,mm,test,mt_wm_poi_id,test,platform,test,shop_name,test,source,test,user_id,test,
3> 3,jerry,女,3,create_time,test,dt,test,hr,test,mm,test,mt_wm_poi_id,test,platform,test,shop_name,test,source,test,user_id,test,
1> 1,mike,男,1,create_time,test,dt,test,hr,test,mm,test,mt_wm_poi_id,test,platform,test,shop_name,test,source,test,user_id,test,
14:52:10,998 INFO org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation - Closing zookeeper sessionid=0x1781a191e824dc3
14:52:10,998 INFO org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation - Closing zookeeper sessionid=0x1781a191e824dc4
14:52:10,998 INFO org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation - Closing zookeeper sessionid=0x1781a191e824dc5
```

The `student` file contains three records and the HBase table contains four rows. The job prints three joined records, which is the expected result.

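Note: the log shows four connections to the HBase server being opened and four being closed. `open()` runs once per parallel subtask. A local Flink run uses the number of CPU cores as its default parallelism, which is 4 on this machine, so four connections are created. To change this, call `env.setParallelism(1);`.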

3. Using the original HBaseLookupFunction

Once I understood HBaseLookupFunction better, I used it as-is to perform the lookups. It is a `TableFunction<Row>`.

Source code

Apart from a few omitted comments, the class is identical to the Flink source listed in section 1. The only addition is my comment on `getResultType()`:

```java
// This method defines the fields of the returned row, for example:
//   two fields:   return new RowTypeInfo(Types.STRING, Types.INT);
//   three fields: return new RowTypeInfo(Types.STRING, Types.INT, Types.STRING);
// Because this function looks up HBase data, the returned fields can only be changed
// through the HBaseTableSchema passed to the constructor.
@Override
public TypeInformation<Row> getResultType() {
    return hbaseTableSchema.convertsToTableSchema().toRowType();
}
```

1. Real-time lookup against a single HBase table

1. Main

```java
public static void main(String[] args) throws Exception {
    StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
    StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);
    env.setParallelism(1);

    DataStreamSource<String> sourceStream = env.readTextFile("D:\\git\\study\\Flink-Learning\\flink-table\\src\\main\\resources\\student");
    DataStream<Student3> map = sourceStream.map(new MapFunction<String, Student3>() {
        @Override
        public Student3 map(String s) throws Exception {
            String[] fields = s.split(",");
            return new Student3(String.valueOf(fields[0]), fields[1], fields[2]);
        }
    });

    Table student = tableEnv.fromDataStream(map, "id,name,sex");
    tableEnv.createTemporaryView("student", student);

    Configuration configuration = new Configuration();
    configuration.set("hbase.zookeeper.quorum", "192.168.0.115");

    // Build the HBaseTableSchema; it determines the fields the function returns
    HBaseTableSchema baseTableSchema = new HBaseTableSchema();
    // Set the rowkey
    baseTableSchema.setRowKey("rowkey", String.class);
    // Add three columns to column family "info"
    baseTableSchema.addColumn("info", "small", String.class);
    baseTableSchema.addColumn("info", "yellow", String.class);
    baseTableSchema.addColumn("info", "man", String.class);
    HBaseLookupFunction baseLookupFunction = new HBaseLookupFunction(configuration, "test:hbase_user_behavior", baseTableSchema);

    // Register the function
    tableEnv.registerFunction("hbaseLookup", baseLookupFunction);
    System.out.println("函数注册成功~~~"); // "Function registered successfully~~~"

    Table table = tableEnv.sqlQuery(
            "select id,name,sex,info.small,info.yellow,info.man "
                    + "from student,LATERAL TABLE(hbaseLookup(id)) as T(rowkey,info)");
    /*
     * The HBaseTableSchema above defines a rowkey and one column family, so the function's
     * output can be aliased in SQL as: LATERAL TABLE(hbaseLookup(id)) as T(rowkey,info)
     * The family was given three columns (small, yellow, man), so the SQL can select
     * info.small, info.yellow, info.man
     */
    tableEnv.toAppendStream(table, Row.class).print();

    env.execute();
}
```

2. Student3.java

```java
package cn.xhjava.domain;

/**
 * @author Xiahu
 * @create 2020/10/27
 */
public class Student3 {
    private String id;
    private String name;
    private String sex;

    public Student3() {
    }

    public Student3(String id, String name, String sex) {
        this.id = id;
        this.name = name;
        this.sex = sex;
    }

    public String getId() {
        return id;
    }

    public void setId(String id) {
        this.id = id;
    }

    public String getName() {
        return name;
    }

    public void setName(String name) {
        this.name = name;
    }

    public String getSex() {
        return sex;
    }

    public void setSex(String sex) {
        this.sex = sex;
    }
}
```
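Unlike Student2, Student3 declares `id` as a `String`. The post does not explain the change. One likely reason comes from the 1.12 source: `HBaseReadWriteHelper.createGet` appears to serialize the lookup key according to the rowkey type declared in `HBaseTableSchema`, which is `String.class` above. If so, the key passed to `hbaseLookup(id)` should have that Java type, and its bytes must match how the rowkeys were written. The JDK-only sketch below shows how different the string key "1" and the int key 1 are as bytes. `RowKeyEncoding` is a hypothetical helper, not part of Flink or HBase. The sketch assumes the rowkeys in `test:hbase_user_behavior` were written as UTF-8 strings, which is consistent with the `Bytes.toBytes(String.valueOf(rowKey))` call in the simplified version.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Hypothetical JDK-only helper: the same logical key "1" serialized two ways.
// asStringKey mirrors HBase's Bytes.toBytes(String) (UTF-8);
// asIntKey mirrors Bytes.toBytes(int) (4 bytes, big-endian).
final class RowKeyEncoding {

    private RowKeyEncoding() {
    }

    // UTF-8 bytes of the key's text, as stored for a String rowkey.
    static byte[] asStringKey(String key) {
        return key.getBytes(StandardCharsets.UTF_8);
    }

    // 4-byte big-endian encoding (ByteBuffer's default byte order), as stored for an int rowkey.
    static byte[] asIntKey(int key) {
        return ByteBuffer.allocate(Integer.BYTES).putInt(key).array();
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(asStringKey("1"))); // [49]
        System.out.println(Arrays.toString(asIntKey(1)));      // [0, 0, 0, 1]
        // Different bytes: a Get built with one encoding cannot find a row written with the other.
        System.out.println(Arrays.equals(asStringKey("1"), asIntKey(1))); // false
    }
}
```

The same reasoning applies to the second lookup in the multi-table example, where `info1.man` (a `String` column) is passed as the rowkey of a table whose rowkey is also declared as `String.class`.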

3. `student` file

```text
1,tom1,男
```

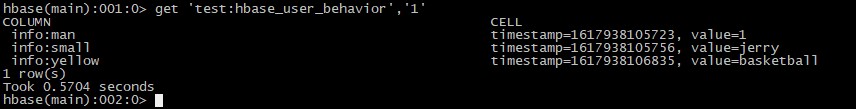

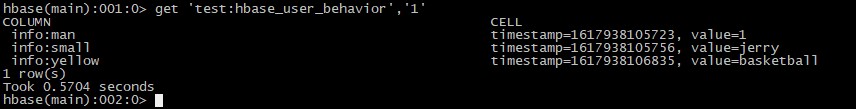

4. HBase data

test:hbase_user_behavior

5. Output

```text
函数注册成功~~~
14:32:14,046 INFO org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper - Process identifier=hconnection-0x82fa530 connecting to ZooKeeper ensemble=192.168.0.115:2181
1,tom1,男,jerry,basketball,1
14:32:18,952 INFO org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation - Closing zookeeper sessionid=0x1781a191e827f9e
```

2. Real-time lookup against multiple HBase tables

1. Main

```java
package cn.xhjava.flink.table.demo;

import cn.xhjava.domain.Student3;
import org.apache.flink.api.common.functions.MapFunction;
import org.apache.flink.connector.hbase.source.HBaseLookupFunction;
import org.apache.flink.connector.hbase.util.HBaseTableSchema;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.table.api.Table;
import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;
import org.apache.flink.types.Row;
import org.apache.hadoop.conf.Configuration;

/**
 * @author Xiahu
 * @create 2021/4/6
 * <p>
 * Real-time lookup of stream records against two HBase tables; result type Row.
 */
public class FlinkTable_02_HbaseLookupFuncation4 {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        StreamTableEnvironment tableEnv = StreamTableEnvironment.create(env);
        env.setParallelism(1);

        DataStreamSource<String> sourceStream = env.readTextFile("D:\\git\\study\\Flink-Learning\\flink-table\\src\\main\\resources\\student");
        DataStream<Student3> map = sourceStream.map(new MapFunction<String, Student3>() {
            @Override
            public Student3 map(String s) throws Exception {
                String[] fields = s.split(",");
                return new Student3(String.valueOf(fields[0]), fields[1], fields[2]);
            }
        });

        Table student = tableEnv.fromDataStream(map, "id,name,sex");
        tableEnv.createTemporaryView("student", student);

        Configuration configuration = new Configuration();
        configuration.set("hbase.zookeeper.quorum", "192.168.0.115");

        // HBaseTableSchema for hbase_user_behavior
        HBaseTableSchema baseTableSchema = new HBaseTableSchema();
        baseTableSchema.setRowKey("rowkey", String.class);
        baseTableSchema.addColumn("info", "small", String.class);
        baseTableSchema.addColumn("info", "yellow", String.class);
        baseTableSchema.addColumn("info", "man", String.class);
        HBaseLookupFunction baseLookupFunction = new HBaseLookupFunction(configuration, "test:hbase_user_behavior", baseTableSchema);

        // HBaseTableSchema for hbase_user_behavior2
        HBaseTableSchema baseTableSchema2 = new HBaseTableSchema();
        baseTableSchema2.setRowKey("rowkey", String.class);
        baseTableSchema2.addColumn("info", "age", String.class);
        baseTableSchema2.addColumn("info", "name", String.class);
        baseTableSchema2.addColumn("info", "sex", String.class);
        HBaseLookupFunction baseLookupFunction2 = new HBaseLookupFunction(configuration, "test:hbase_user_behavior2", baseTableSchema2);

        // Register the functions
        tableEnv.registerFunction("hbaseLookup", baseLookupFunction);
        tableEnv.registerFunction("hbaseLookup2", baseLookupFunction2);
        System.out.println("函数注册成功~~~"); // "Function registered successfully~~~"

        // Look up hbase_user_behavior by id, then use the returned info.man value as the rowkey
        // for a lookup in hbase_user_behavior2, and return the combined result
        Table table = tableEnv.sqlQuery(
                "select id,info1.small,info1.yellow,info1.man,info2 from student,"
                        + "LATERAL TABLE(hbaseLookup(id)) as T(rowkey1,info1),"
                        + "LATERAL TABLE(hbaseLookup2(info1.man)) as T2(rowkey2,info2)");
        tableEnv.toAppendStream(table, Row.class).print();

        env.execute();
    }
}
```

2. Student3.java

Same as `Student3.java` in the single-table example above.

3. `student` file

```text
1,tom1,男
```

4. HBase data

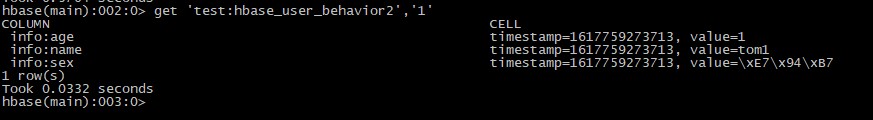

test:hbase_user_behavior

test:hbase_user_behavior2

5. Result

```text
函数注册成功~~~
14:47:30,075 INFO org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper - Process identifier=hconnection-0x6a6c0d1d connecting to ZooKeeper ensemble=192.168.0.115:2181
14:47:32,585 INFO org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper - Process identifier=hconnection-0x51c734e8 connecting to ZooKeeper ensemble=192.168.0.115:2181
1,jerry,basketball,1,1,tom1,男
14:47:33,295 INFO org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation - Closing zookeeper sessionid=0x1781a191e827fcd
14:47:33,308 INFO org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation - Closing zookeeper sessionid=0x1781a191e827fcc
```
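The JDK-only model below makes the data flow of the two chained lookups explicit. Two maps stand in for `test:hbase_user_behavior` and `test:hbase_user_behavior2`. Their contents are reconstructed from the printed result above, assuming `info2` prints its fields in the declared order age, name, sex. `ChainedLookup` is a hypothetical class for illustration only. The model also shows one consequence of the comma-separated `LATERAL TABLE` syntax: it behaves like an inner join. A student whose first or second lookup finds no row produces no output at all.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical JDK-only model of the two chained LATERAL TABLE lookups:
//   student.id -> test:hbase_user_behavior  (info: small, yellow, man)
//   info1.man  -> test:hbase_user_behavior2 (info: age, name, sex)
// Each map stands in for an HBase table: rowkey -> (qualifier -> value).
final class ChainedLookup {

    private ChainedLookup() {
    }

    // Builds a qualifier -> value map from alternating (qualifier, value) arguments.
    static Map<String, String> row(String... kv) {
        Map<String, String> m = new HashMap<>();
        for (int i = 0; i + 1 < kv.length; i += 2) {
            m.put(kv[i], kv[i + 1]);
        }
        return m;
    }

    // Inner-join semantics: if either lookup finds no row, nothing is collected
    // and the student record produces no output.
    static List<String> join(List<String> ids,
                             Map<String, Map<String, String>> table1,
                             Map<String, Map<String, String>> table2) {
        List<String> out = new ArrayList<>();
        for (String id : ids) {
            Map<String, String> info1 = table1.get(id);
            if (info1 == null) {
                continue; // first lookup missed
            }
            Map<String, String> info2 = table2.get(info1.get("man"));
            if (info2 == null) {
                continue; // second lookup missed
            }
            out.add(String.join(",", id,
                    info1.get("small"), info1.get("yellow"), info1.get("man"),
                    info2.get("age"), info2.get("name"), info2.get("sex")));
        }
        return out;
    }

    public static void main(String[] args) {
        // Contents assumed from the printed result above, not read from HBase.
        Map<String, Map<String, String>> behavior = new HashMap<>();
        behavior.put("1", row("small", "jerry", "yellow", "basketball", "man", "1"));
        Map<String, Map<String, String>> behavior2 = new HashMap<>();
        behavior2.put("1", row("age", "1", "name", "tom1", "sex", "\u7537")); // U+7537, "male"

        // id "2" has no row in the first table, so only id "1" is emitted.
        System.out.println(join(Arrays.asList("1", "2"), behavior, behavior2));
    }
}
```

To keep unmatched records instead, Flink SQL also supports `LEFT JOIN LATERAL TABLE(...) ON TRUE`, which fills the missing columns with NULLs.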

4. Performance test

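All tests were run with parallelism = 1, looking up 1000 stream records. Times are total wall-clock time, including Flink job startup.

| HBase rows | Rowkey order | Start | End | Elapsed |
|---|---|---|---|---|
| 10,000,000 | Ordered | 2021-04-06 15:33:33 | 2021-04-06 15:33:42 | 9 s |
| 10,000,000 | Unordered | 2021-04-06 15:38:49 | 2021-04-06 15:38:59 | 10 s |
| 133,631,681 | Ordered | 2021-04-06 18:07:55 | 2021-04-06 18:08:02 | 7 s |
| 133,631,681 | Unordered | 2021-04-06 18:05:56 | 2021-04-06 18:06:04 | 8 s |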

5. Real-time behavior

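- HBase currently has no row with rowkey = 200000;
- a stream record with id = 200000 arrives and finds no match in HBase;
- a row with rowkey = 200000 is inserted into HBase;
- a record with id = 200000 arrives again and is joined successfully.

Conclusion: stream records are looked up against the current contents of HBase, so a newly inserted row is picked up by subsequent records. The actual end-to-end latency has not been tested yet.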
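The experiment behaves this way because, in the 1.12 source listed in section 1, `eval()` calls `table.get(...)` for every incoming record and keeps no cache. The JDK-only model below reproduces the four steps. `UncachedLookup` is a hypothetical class, and a `HashMap` stands in for the HBase table. The model covers visibility only and says nothing about latency.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical model of HBaseLookupFunction.eval(): every incoming key triggers a
// fresh read of the table and nothing is cached, so a row written after a miss
// is found by the next lookup of the same key.
final class UncachedLookup {

    private final Map<String, String> table; // stands in for the HBase table

    UncachedLookup(Map<String, String> table) {
        this.table = table;
    }

    // Empty result = the function collects nothing and the stream record is dropped.
    Optional<String> eval(String rowKey) {
        return Optional.ofNullable(table.get(rowKey));
    }

    public static void main(String[] args) {
        Map<String, String> hbase = new HashMap<>();
        UncachedLookup lookup = new UncachedLookup(hbase);

        System.out.println(lookup.eval("200000").isPresent()); // false: no row yet
        hbase.put("200000", "row inserted later");              // insert into the "table"
        System.out.println(lookup.eval("200000").isPresent()); // true: the next record is joined
    }
}
```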
