04.08.2021

Part 1

Data processing in Python

Data Processing Tools

An intuition about Object-Oriented Programming:

A class is a construction plan for building a house; an instance is a house built from that plan; a method is a tool we can use on the house to perform a specific action.
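To make the analogy concrete, here is a minimal Python sketch. The class `House`, its attributes and its method `paint` are hypothetical names chosen only for illustration.

```python
class House:
    """The class: a construction plan for houses."""

    def __init__(self, rooms):
        # Every house built from the plan records its own state
        self.rooms = rooms
        self.painted = False
        self.color = None

    def paint(self, color):
        # A method: a tool applied to one particular house
        self.painted = True
        self.color = color
        return f"House with {self.rooms} rooms painted {color}"


# Two instances: two separate houses built from the same plan
small_house = House(rooms=2)
big_house = House(rooms=5)

print(big_house.paint("blue"))  # House with 5 rooms painted blue
print(small_house.painted)      # False: painting one house leaves the other untouched
```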

1. Import the libraries

NumPy, matplotlib.pyplot, pandas, scikit-learn (sklearn)

(pandas is an open-source data analysis and manipulation tool.)
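A typical import block for this step might look like the sketch below. The aliases `np`, `plt` and `pd` are common community conventions, not requirements, and scikit-learn classes are normally imported one by one at the step where they are needed.

```python
import numpy as np               # arrays and numerical operations
import matplotlib.pyplot as plt  # plotting
import pandas as pd              # loading and manipulating tabular data

# scikit-learn is usually imported class by class where it is used, e.g.
# from sklearn.impute import SimpleImputer
```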

2. Import the dataset (create the data frame)

An entity can be any word or series of words that consistently refers to the same thing. A machine learning model needs two entities: X and y.

X is the matrix of features (the independent variables), and y is the dependent variable vector. y is usually stored in the last column of the CSV file.

dataset = pd.read_csv("file.csv")

X = dataset.iloc[:, :-1].values # all rows, every column except the last

y = dataset.iloc[:, -1].values # all rows, last column only
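Since `file.csv` is not available here, the sketch below builds a small hypothetical DataFrame in its place (column names and values are made up) and shows how `iloc` slices it into X and y when the dependent variable sits in the last column.

```python
import numpy as np
import pandas as pd

# Hypothetical data standing in for pd.read_csv("file.csv");
# the last column holds the dependent variable
dataset = pd.DataFrame({
    "Country": ["France", "Spain", "Germany", "Spain"],
    "Age": [44.0, 27.0, np.nan, 38.0],
    "Salary": [72000.0, 48000.0, 54000.0, np.nan],
    "Purchased": ["No", "Yes", "No", "No"],
})

X = dataset.iloc[:, :-1].values  # all rows, every column except the last
y = dataset.iloc[:, -1].values   # all rows, last column only

print(X.shape)  # (4, 3)
print(y)        # ['No' 'Yes' 'No' 'No']
```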

3. Taking care of missing data

There are two ways to deal with missing data. If the dataset is large and only a small fraction of it is missing (say, less than 1%), you can simply delete the affected rows. Otherwise, you can replace each missing value with the mean of its column: with the sklearn library, you fit an imputer on those columns and then replace the old columns with the transformed ones, in which the missing values have been filled in.

from sklearn.impute import SimpleImputer

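imputer = SimpleImputer(missing_values=np.nan, strategy="mean") # replace with the column mean

imputer.fit(X[:, 1:3]) # compute the mean of columns 1 and 2

X[:, 1:3] = imputer.transform(X[:, 1:3]) # replace the missing values with those means

* np.nan: NaN stands for "not a number".

* Watch the column range of X! The upper bound of a slice is excluded in Python. To select the first and second columns, the index should be 0:2.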
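The sketch below tries both approaches on a small hypothetical DataFrame (made-up values): pandas' `dropna()` for deleting rows, and `SimpleImputer` for replacing missing values in columns 1 and 2 with the column mean.

```python
import numpy as np
import pandas as pd
from sklearn.impute import SimpleImputer

# Hypothetical data: Age and Salary (columns 1 and 2) each miss one value
dataset = pd.DataFrame({
    "Country": ["France", "Spain", "Germany", "Spain"],
    "Age": [44.0, 27.0, np.nan, 38.0],
    "Salary": [72000.0, 48000.0, 54000.0, np.nan],
    "Purchased": ["No", "Yes", "No", "No"],
})

# Option 1: drop every row that contains a missing value
complete_rows = dataset.dropna()
print(len(complete_rows))  # 2

# Option 2: replace missing values with the column mean
X = dataset.iloc[:, :-1].values
imputer = SimpleImputer(missing_values=np.nan, strategy="mean")
imputer.fit(X[:, 1:3])                    # learn the means of Age and Salary
X[:, 1:3] = imputer.transform(X[:, 1:3])  # fill the gaps with those means
print(X[2, 1], X[3, 2])  # about 36.33 (mean age) and 58000.0 (mean salary)
```

On this toy data, deleting rows would throw away half of the dataset, which is why the mean replacement is preferred when missing values are not rare.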

4. Encoding Categorical data

1. Categorical data (e.g. countries) should be replaced with binary data. Use one-hot encoding, which turns each category into a binary vector with no numerical order, rather than numeric codes like 1, 2, 3, which would suggest an order that does not exist. One-hot encoding is very useful for processing datasets that contain categorical variables.

2. The dependent variable y (No/Yes) can be replaced by 0/1.

# Encode the independent variable

from sklearn.compose import ColumnTransformer

from sklearn.preprocessing import OneHotEncoder

ct = ColumnTransformer(transformers=[('encoder', OneHotEncoder(), [0])], remainder="passthrough")

X = np.array(ct.fit_transform(X))

# Encode the dependent variable

from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()

y = le.fit_transform(y) # converts the text labels into numerical values

* The training function expects the features X as a NumPy array, so don't forget to convert the output of ct.fit_transform(X) with np.array(...).
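Here is a self-contained sketch of both encoders on a hypothetical four-row array (country names and numbers are invented). `OneHotEncoder` sorts the categories, so the first three output columns correspond to France, Germany and Spain, followed by the passed-through Age and Salary columns.

```python
import numpy as np
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import LabelEncoder, OneHotEncoder

# Hypothetical features: Country (categorical), Age, Salary
X = np.array([
    ["France", 44.0, 72000.0],
    ["Spain", 27.0, 48000.0],
    ["Germany", 30.0, 54000.0],
    ["Spain", 38.0, 61000.0],
], dtype=object)
y = np.array(["No", "Yes", "No", "Yes"])

# One-hot encode column 0 and pass Age and Salary through unchanged
ct = ColumnTransformer(transformers=[("encoder", OneHotEncoder(), [0])],
                       remainder="passthrough")
X = np.array(ct.fit_transform(X))
print(X[0])  # France row: one-hot (1, 0, 0) followed by 44.0 and 72000.0

# Map the text labels to integers; classes are sorted, so "No" -> 0, "Yes" -> 1
le = LabelEncoder()
y = le.fit_transform(y)
print(y)  # [0 1 0 1]
```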

Splitting the dataset into the training set and test set

Q: Should feature scaling be done before or after splitting the dataset?

A: Split the dataset first. The training set is used to train the model on existing data, and the test set is used to evaluate the model. Feature scaling makes sure all features are on the same scale. Splitting first prevents information from the test set (such as its mean and standard deviation) from leaking into training; the test set must stay unseen until training is done.

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=1)

* random_state=1 fixes the random seed. train_test_split splits arrays or matrices into random train and test subsets, so every run without random_state gives a different split; this is expected behavior. If you set random_state to a fixed number, the output of run 1 is guaranteed to equal the output of run 2.
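A quick way to see what test_size and random_state do is to split a tiny hypothetical array twice with the same seed. The data is made up so that row i of X is [2i, 2i+1] and y[i] = i, which makes it easy to check that rows and labels stay aligned after shuffling.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical data: 10 samples with 2 features each
X = np.arange(20).reshape(10, 2)
y = np.arange(10)

# 20% of the rows (2 of 10) go to the test set
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=1)
print(X_train.shape, X_test.shape)  # (8, 2) (2, 2)

# Same random_state -> identical split on every run
X_train2, X_test2, _, _ = train_test_split(X, y, test_size=0.2, random_state=1)
print(np.array_equal(X_test, X_test2))  # True
```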

Feature Scaling

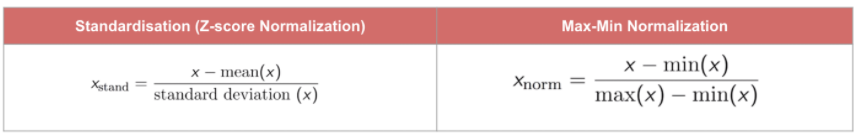

Why? To put all feature values in the same range. For some machine learning models, this prevents some features from being dominated by others to the point where the dominated features are not even considered by the model. Note that feature scaling is only needed for some models, not all of them. There are two frequently used techniques for scaling a matrix of features to improve the training process:

Standardization: rescales each feature to mean 0 and standard deviation 1, so most values fall roughly between -3 and 3. This ensures that features with especially high or low values are not ignored by the model.

Normalization: rescales each feature to the range 0 to 1.
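For reference, the usual formulas for the two techniques are given below, where μ and σ are the mean and standard deviation of a feature column and min(x) and max(x) are its smallest and largest values, all computed on the training set:

$$x_{\text{stand}} = \frac{x - \mu}{\sigma} \qquad\qquad x_{\text{norm}} = \frac{x - \min(x)}{\max(x) - \min(x)}$$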

from sklearn.preprocessing import StandardScaler

sc = StandardScaler()

X_train[:, 3:] = sc.fit_transform(X_train[:, 3:])

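X_test[:, 3:] = sc.transform(X_test[:, 3:]) # only transform: reuse the mean and standard deviation learned on the training set

* Don't apply standardization to the dummy variables (the one-hot encoded columns, which is why the slice starts at index 3): it only makes things worse, because they lose the interpretation of which country each column represents. Only apply it to variables whose values lie in very different ranges.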

* The difference between fit and transform: fit computes the mean and standard deviation of each feature, and transform applies the standardization formula to the values using those statistics.

* Why do we also have to transform the matrix of features of the test set? Because the test features must be scaled by the same scaler that was fitted on the training set, so we call only transform (not fit_transform) on X_test.
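The sketch below, on made-up numbers, shows why the test set gets transform and not fit_transform: the scaler is fitted once on X_train, and the test values are then standardized with the training mean (2.5) and standard deviation (about 1.118). Refitting on the test set would instead rescale it to its own statistics, so train and test values would no longer be on the same scale.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical split of a single numeric feature
X_train = np.array([[1.0], [2.0], [3.0], [4.0]])
X_test = np.array([[5.0], [6.0]])

sc = StandardScaler()
X_train_scaled = sc.fit_transform(X_train)  # fit: learn mean and std from the training set only
X_test_scaled = sc.transform(X_test)        # transform: reuse the training mean and std

print(sc.mean_, sc.scale_)     # mean 2.5, std about 1.118
print(X_test_scaled.ravel())   # (5 - 2.5) / 1.118 and (6 - 2.5) / 1.118

# For comparison: refitting on the test set maps it to its own [-1, 1]
wrong = StandardScaler().fit_transform(X_test)
print(wrong.ravel())
```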
