Papers: MonoSDF: Exploring Monocular Geometric Cues for Neural Implicit Surface Reconstruction; NICER-SLAM

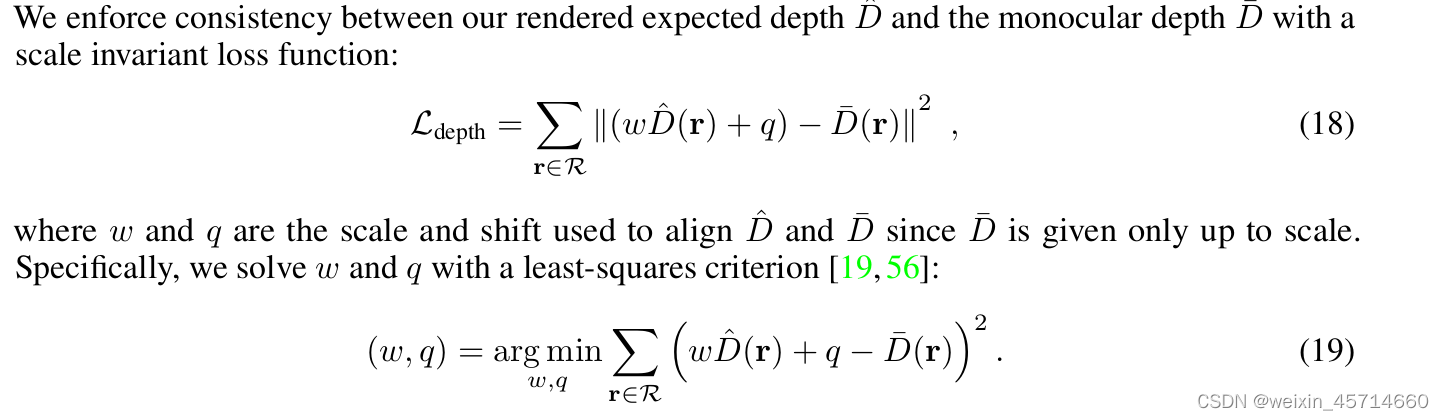

Depth Consistency Loss

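Note: the monocular depth and the depth predicted (rendered) by the MLP network should share the same scale. This loss aligns the monocular depth with the rendered depth by making their scale and shift consistent.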

The scale and shift are obtained by solving a least-squares problem:

$$
Ax = B, \qquad x = h = (w, q)^{T} = A^{-1}B, \qquad A = \sum_r d_r d_r^{T}
$$
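For reference, the closed form can be written out term by term. This is a sketch in the notation of the code in this post rather than the paper's exact symbols: $p_r$ stands for `prediction`, $t_r$ for `target`, and $m_r \in \{0, 1\}$ for `mask` at pixel $r$, and $d_r = (p_r, 1)^{T}$ is assumed.

$$
\begin{aligned}
h^{*} &= \arg\min_{w,\,q} \sum_r m_r \,(w\,p_r + q - t_r)^2, \\
A &= \sum_r m_r\, d_r d_r^{T}
   = \begin{pmatrix} \sum_r m_r p_r^2 & \sum_r m_r p_r \\ \sum_r m_r p_r & \sum_r m_r \end{pmatrix}
   = \begin{pmatrix} a_{00} & a_{01} \\ a_{01} & a_{11} \end{pmatrix}, \\
B &= \sum_r m_r\, d_r\, t_r
   = \begin{pmatrix} \sum_r m_r p_r t_r \\ \sum_r m_r t_r \end{pmatrix}
   = \begin{pmatrix} b_0 \\ b_1 \end{pmatrix}, \\
w &= \frac{a_{11} b_0 - a_{01} b_1}{a_{00} a_{11} - a_{01}^2}, \qquad
q = \frac{-a_{01} b_0 + a_{00} b_1}{a_{00} a_{11} - a_{01}^2}.
\end{aligned}
$$

Setting the derivatives of the objective with respect to $w$ and $q$ to zero gives $Ah = B$. The last line is the inverse of the symmetric $2 \times 2$ matrix $A$ applied to $B$, which is valid only when the determinant $a_{00} a_{11} - a_{01}^2$ is nonzero.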

Code

    import torch
    from torchtyping import TensorType  # needed for the TensorType annotations below


    def normalized_depth_scale_and_shift(
        prediction: TensorType[1, 32, "mult"], target: TensorType[1, 32, "mult"], mask: TensorType[1, 32, "mult"]
    ):
        """
        More info here: https://arxiv.org/pdf/2206.00665.pdf supplementary section A2 Depth Consistency Loss
        This function computes the scale/shift required to normalize the predicted depth map,
        to allow for using normalized depth maps as input from monocular depth estimation networks.
        These networks are trained such that they predict normalized depth maps.

        Solves for scale/shift using a least squares approach with a closed form solution:
        Based on:
        https://github.com/autonomousvision/monosdf/blob/d9619e948bf3d85c6adec1a643f679e2e8e84d4b/code/model/loss.py#L7

        Args:
            prediction: predicted depth map
            target: ground truth depth map
            mask: mask of valid pixels
        Returns:
            scale and shift for depth prediction
        """
        # system matrix: A = [[a_00, a_01], [a_10, a_11]] (symmetric, so a_10 == a_01)
        a_00 = torch.sum(mask * prediction * prediction, (1, 2))
        a_01 = torch.sum(mask * prediction, (1, 2))
        a_11 = torch.sum(mask, (1, 2))

        # right hand side: b = [b_0, b_1]
        b_0 = torch.sum(mask * prediction * target, (1, 2))
        b_1 = torch.sum(mask * target, (1, 2))

        # solution: x = A^-1 . b = [[a_11, -a_01], [-a_10, a_00]] / (a_00 * a_11 - a_01 * a_10) . b
        scale = torch.zeros_like(b_0)
        shift = torch.zeros_like(b_1)

        det = a_00 * a_11 - a_01 * a_01
        # solve only where the 2x2 system is non-singular; elsewhere scale and shift stay 0
        valid = det.nonzero()

        scale[valid] = (a_11[valid] * b_0[valid] - a_01[valid] * b_1[valid]) / det[valid]
        shift[valid] = (-a_01[valid] * b_0[valid] + a_00[valid] * b_1[valid]) / det[valid]

        return scale, shift
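As a sanity check on the closed form, the following minimal sketch repeats the same sums in plain Python (no PyTorch needed) on a four-pixel toy example. The names `solve_scale_shift` and `depth_consistency_loss` are hypothetical helpers introduced only for illustration, and averaging the squared residual over valid pixels is an assumption, not necessarily the normalization used in the paper or in any library.

```python
def solve_scale_shift(prediction, target, mask):
    """Closed-form least squares for (w, q) minimizing sum(m * (w*p + q - t)**2)."""
    # Entries of the symmetric 2x2 system matrix A
    a_00 = sum(m * p * p for p, m in zip(prediction, mask))
    a_01 = sum(m * p for p, m in zip(prediction, mask))
    a_11 = sum(mask)
    # Right-hand side b
    b_0 = sum(m * p * t for p, t, m in zip(prediction, target, mask))
    b_1 = sum(m * t for t, m in zip(target, mask))

    det = a_00 * a_11 - a_01 * a_01
    if det == 0:
        # Singular system (e.g. constant prediction): fall back to zeros,
        # matching the zero-initialized outputs of the function above
        return 0.0, 0.0
    scale = (a_11 * b_0 - a_01 * b_1) / det
    shift = (-a_01 * b_0 + a_00 * b_1) / det
    return scale, shift


def depth_consistency_loss(prediction, target, mask):
    """Mean squared residual over valid pixels after alignment (assumed normalization)."""
    scale, shift = solve_scale_shift(prediction, target, mask)
    n_valid = sum(mask)
    if n_valid == 0:
        return 0.0
    sq_err = sum(m * (scale * p + shift - t) ** 2
                 for p, t, m in zip(prediction, target, mask))
    return sq_err / n_valid


# Toy data: the "monocular" depth is the rendered depth scaled by 2 and shifted by 0.5
rendered = [1.0, 2.0, 3.0, 4.0]
mono = [2.0 * d + 0.5 for d in rendered]
mono[3] = 100.0                 # corrupt one pixel ...
mask = [1.0, 1.0, 1.0, 0.0]     # ... and exclude it through the mask

scale, shift = solve_scale_shift(rendered, mono, mask)
loss = depth_consistency_loss(rendered, mono, mask)
print(scale, shift, loss)
```

Because the masked-out pixel contributes to none of the sums, the solver recovers the scale and shift that generated the valid pixels, and the aligned residual vanishes. The loss therefore penalizes only depth differences that a global scale and shift cannot explain.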
