1 Overview

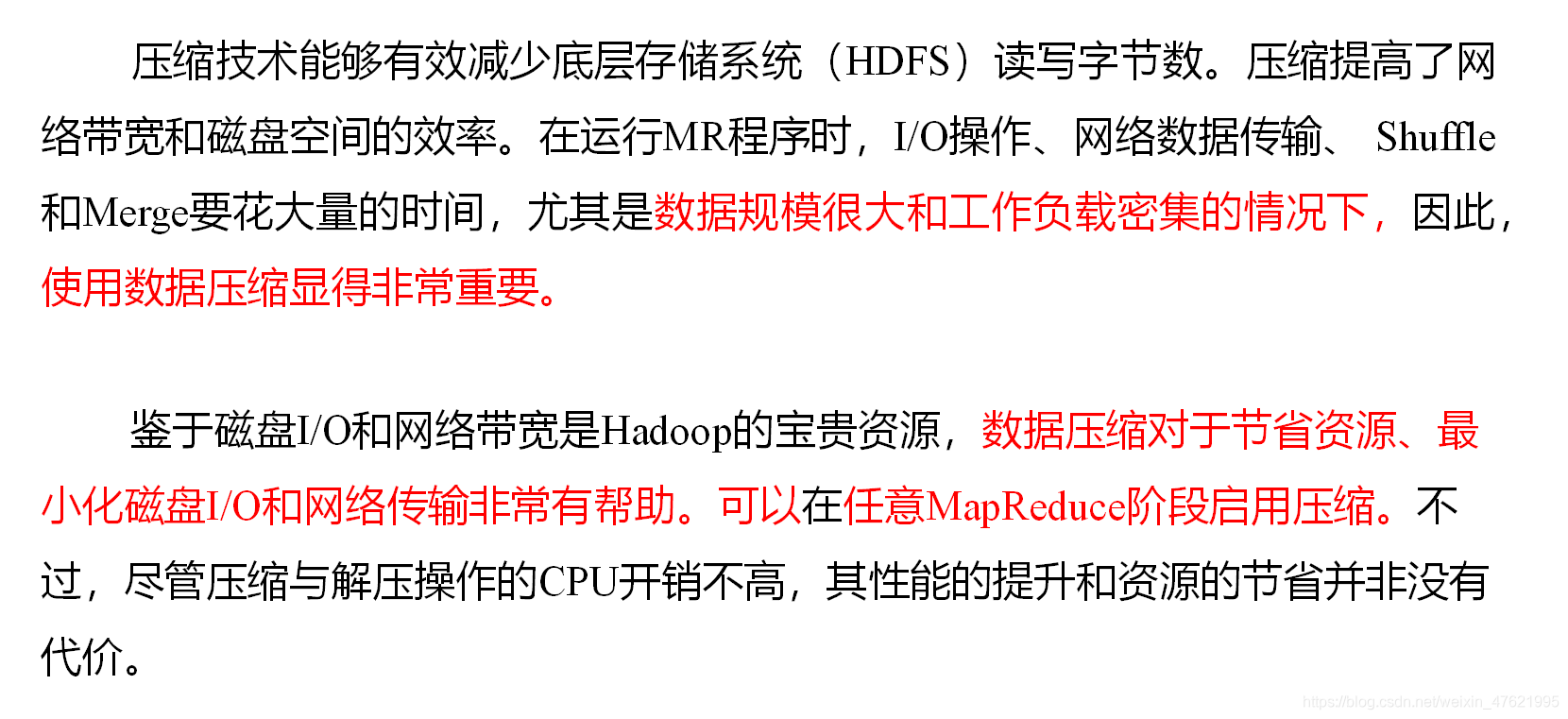

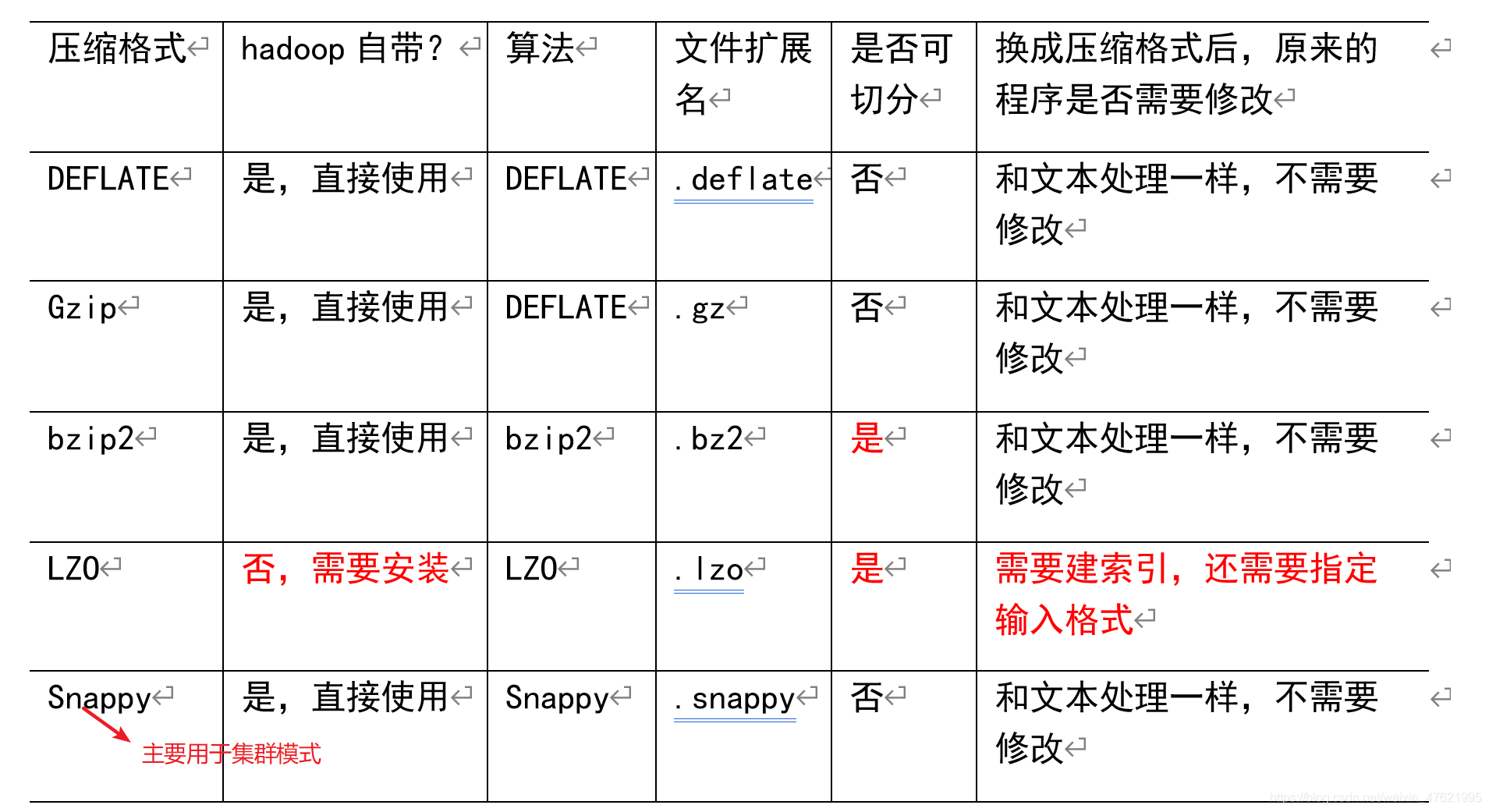

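2 Compression codecs supported by MapReduce

To support multiple compression and decompression algorithms, Hadoop introduces codecs (coder/decoders), listed in the table below.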

| Compression format | Codec class |
| --- | --- |
| DEFLATE | org.apache.hadoop.io.compress.DefaultCodec |
| gzip | org.apache.hadoop.io.compress.GzipCodec |
| bzip2 | org.apache.hadoop.io.compress.BZip2Codec |
| LZO | com.hadoop.compression.lzo.LzopCodec |
| Snappy | org.apache.hadoop.io.compress.SnappyCodec |
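Hadoop's `CompressionCodecFactory.getCodec(Path)` picks a codec from the file name suffix. The sketch below imitates that lookup with plain JDK classes so it runs without Hadoop. The class name `CodecByExtension` is hypothetical, and the suffixes are the default extensions these codecs are generally understood to report. Treat them as assumptions and confirm with `getDefaultExtension()` on your cluster.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Sketch of suffix-based codec lookup; plain JDK, no Hadoop dependency. */
class CodecByExtension {
    // Assumed default file extensions for the codecs in the table above.
    private static final Map<String, String> CODECS = new LinkedHashMap<>();
    static {
        CODECS.put(".deflate", "org.apache.hadoop.io.compress.DefaultCodec");
        CODECS.put(".gz", "org.apache.hadoop.io.compress.GzipCodec");
        CODECS.put(".bz2", "org.apache.hadoop.io.compress.BZip2Codec");
        CODECS.put(".lzo", "com.hadoop.compression.lzo.LzopCodec");
        CODECS.put(".snappy", "org.apache.hadoop.io.compress.SnappyCodec");
    }

    /** Returns the codec class name for a file name, or null if no suffix matches. */
    static String codecFor(String fileName) {
        for (Map.Entry<String, String> entry : CODECS.entrySet()) {
            if (fileName.endsWith(entry.getKey())) {
                return entry.getValue();
            }
        }
        return null;
    }

    public static void main(String[] args) {
        System.out.println(codecFor("JaneEyre.txt.bz2"));
        System.out.println(codecFor("JaneEyre.txt")); // no known suffix
    }
}
```

A `null` result corresponds to the "cannot find codec" case that the decompression example in Section 6.1 guards against.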

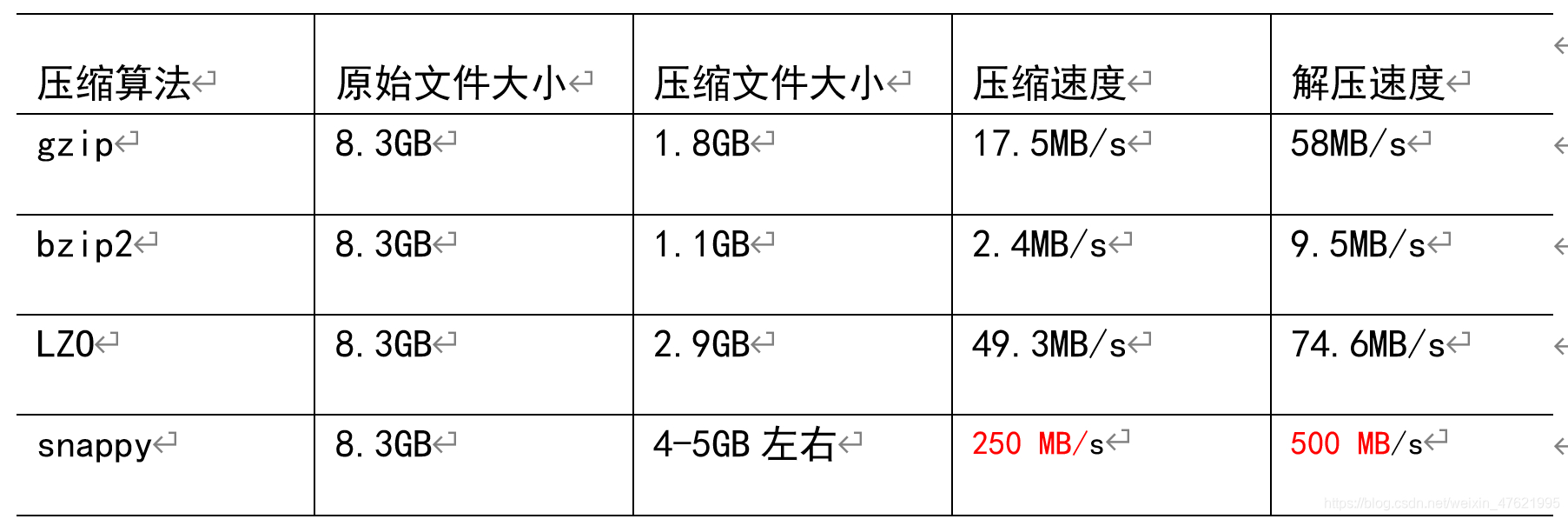

Compression performance comparison

http://google.github.io/snappy/

Snappy is a compression/decompression library. It does not aim for maximum compression, or compatibility with any other compression library; instead, it aims for very high speeds and reasonable compression. For instance, compared to the fastest mode of zlib, Snappy is an order of magnitude faster for most inputs, but the resulting compressed files are anywhere from 20% to 100% bigger. On a single core of a Core i7 processor in 64-bit mode, Snappy compresses at about 250 MB/sec or more and decompresses at about 500 MB/sec or more.
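To put the quoted throughput figures in perspective, here is a back-of-the-envelope estimate for a hypothetical 1 GiB input. It treats both figures as rates over the uncompressed size, which is an assumption. Because the quote says "or more", the results are rough upper bounds on the time, not measurements.

```java
/** Rough timing estimate from the throughput figures quoted above. */
class SnappyEstimate {
    // Figures from the Snappy description (single Core i7 core, 64-bit mode).
    static final double COMPRESS_MB_PER_SEC = 250.0;
    static final double DECOMPRESS_MB_PER_SEC = 500.0;

    /** Time in seconds to process sizeMb megabytes at the given throughput. */
    static double seconds(double sizeMb, double throughputMbPerSec) {
        return sizeMb / throughputMbPerSec;
    }

    public static void main(String[] args) {
        double sizeMb = 1024.0; // hypothetical 1 GiB input
        System.out.printf("compress:   %.2f s%n", seconds(sizeMb, COMPRESS_MB_PER_SEC));
        System.out.printf("decompress: %.2f s%n", seconds(sizeMb, DECOMPRESS_MB_PER_SEC));
    }
}
```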

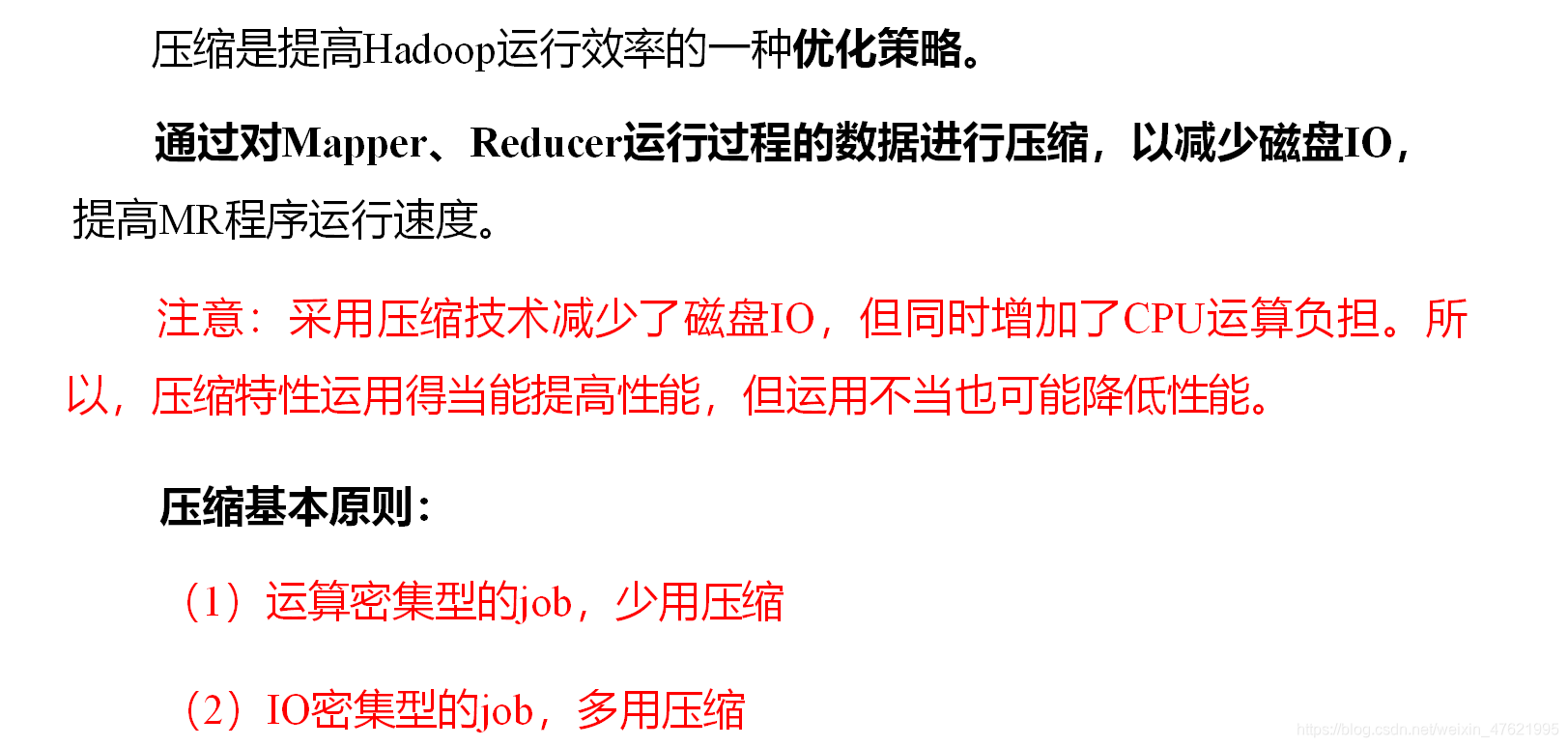

3 Choosing a compression format

3.1 Gzip compression

3.2 Bzip2 compression

3.3 LZO compression

3.4 Snappy compression

4 Choosing where to compress

Compression can be enabled at any stage of a MapReduce job.

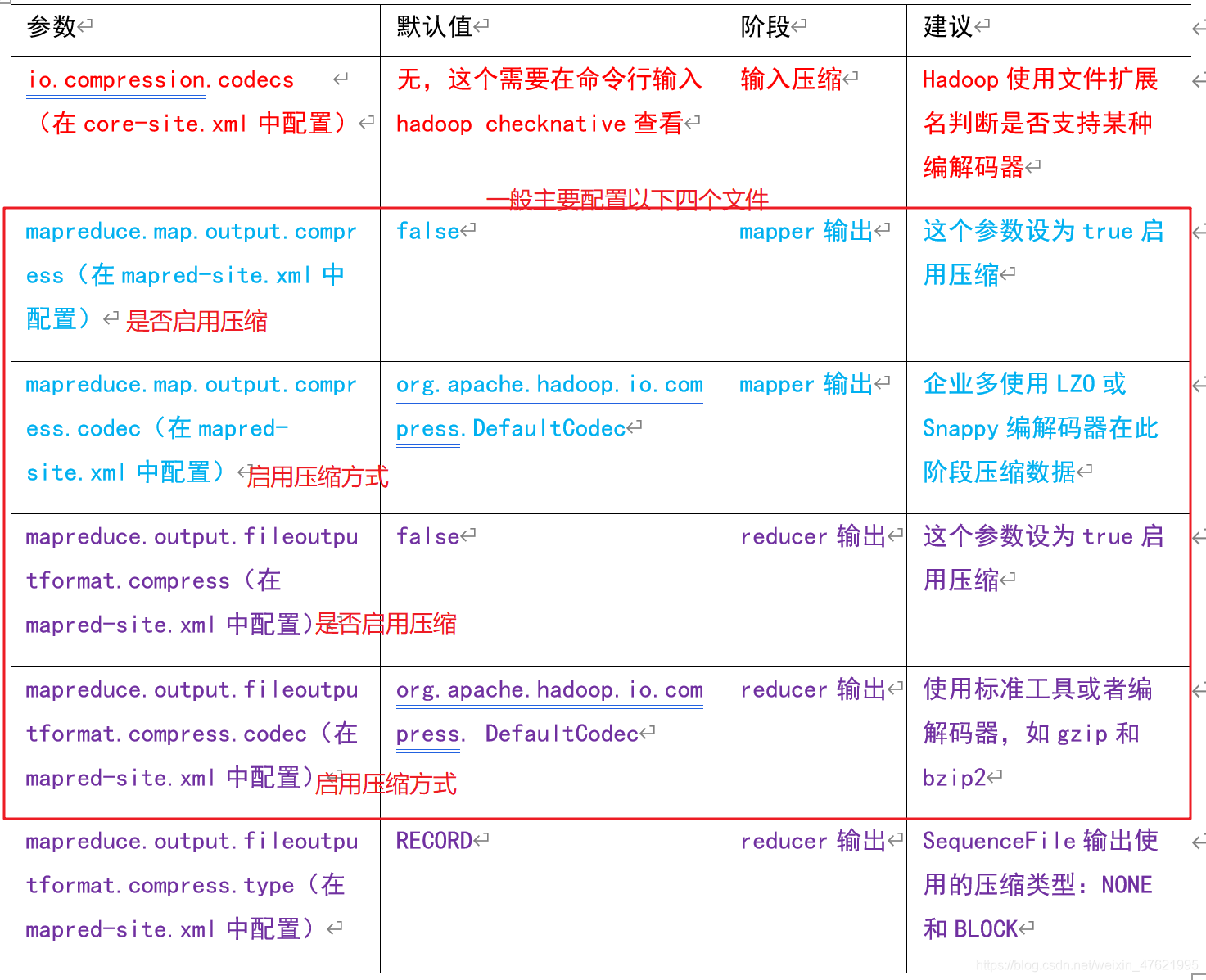

5 Compression parameter configuration

To check which compression formats the Hadoop cluster supports:

[atguigu@hadoop102 ~]$ hadoop checknative

2020-07-26 10:01:08,242 INFO bzip2.Bzip2Factory: Successfully loaded & initialized native-bzip2 library system-native

2020-07-26 10:01:08,248 INFO zlib.ZlibFactory: Successfully loaded & initialized native-zlib library

2020-07-26 10:01:08,263 WARN erasurecode.ErasureCodeNative: ISA-L support is not available in your platform... using builtin-java codec where applicable

Native library checking:

hadoop: true /opt/module/hadoop-3.1.3/lib/native/libhadoop.so.1.0.0

zlib: true /lib64/libz.so.1

zstd : true /lib64/libzstd.so.1

snappy: true /lib64/libsnappy.so.1

lz4: true revision:10301

bzip2: true /lib64/libbz2.so.1

openssl: true /lib64/libcrypto.so

ISA-L: false libhadoop was built without ISA-L support
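If this report needs to be checked from a script, its `name: true|false` lines are easy to parse. The sketch below is one hypothetical way to do that with plain JDK classes. The class name `CheckNativeParser` and the regular expression are illustrative, and the parser assumes the output format shown above.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Parses the "name: true|false ..." lines of a `hadoop checknative` report. */
class CheckNativeParser {
    // A library name, optional spaces, a colon, then true or false.
    private static final Pattern LINE =
            Pattern.compile("^([\\w-]+)\\s*:\\s*(true|false)\\b.*$");

    static Map<String, Boolean> parse(String output) {
        Map<String, Boolean> result = new LinkedHashMap<>();
        for (String line : output.split("\\R")) {
            Matcher m = LINE.matcher(line.trim());
            if (m.matches()) {
                result.put(m.group(1), Boolean.parseBoolean(m.group(2)));
            }
        }
        return result;
    }

    public static void main(String[] args) {
        String sample = String.join("\n",
                "Native library checking:",
                "zstd : true /lib64/libzstd.so.1",
                "snappy: true /lib64/libsnappy.so.1",
                "ISA-L: false libhadoop was built without ISA-L support");
        // Log lines and the header do not match the pattern and are skipped.
        System.out.println(parse(sample));
    }
}
```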

To enable compression in Hadoop, configure the following parameters:
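As a rough illustration, the sketch below collects the four job-level properties that the drivers in Section 6 set, and renders them in the `<property>` form used by `mapred-site.xml`. The property names are the ones used later in this article. The values are example choices, and the class `CompressionProperties` is a hypothetical helper written with plain JDK classes, not part of Hadoop.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Renders example compression settings as Hadoop-style XML properties. */
class CompressionProperties {

    /** Example values only; pick codecs that `hadoop checknative` reports as available. */
    static Map<String, String> example() {
        Map<String, String> props = new LinkedHashMap<>();
        // Map output (intermediate data written to disk and shuffled to reducers)
        props.put("mapreduce.map.output.compress", "true");
        props.put("mapreduce.map.output.compress.codec",
                "org.apache.hadoop.io.compress.SnappyCodec");
        // Reduce output (final job output)
        props.put("mapreduce.output.fileoutputformat.compress", "true");
        props.put("mapreduce.output.fileoutputformat.compress.codec",
                "org.apache.hadoop.io.compress.BZip2Codec");
        return props;
    }

    static String toXml(Map<String, String> props) {
        StringBuilder sb = new StringBuilder("<configuration>\n");
        for (Map.Entry<String, String> e : props.entrySet()) {
            sb.append("  <property>\n")
              .append("    <name>").append(e.getKey()).append("</name>\n")
              .append("    <value>").append(e.getValue()).append("</value>\n")
              .append("  </property>\n");
        }
        return sb.append("</configuration>\n").toString();
    }

    public static void main(String[] args) {
        System.out.print(toXml(example()));
    }
}
```

The same keys can also be set per job on a `Configuration` object, which is what the driver code in Section 6.2 does.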

6 Compression in practice

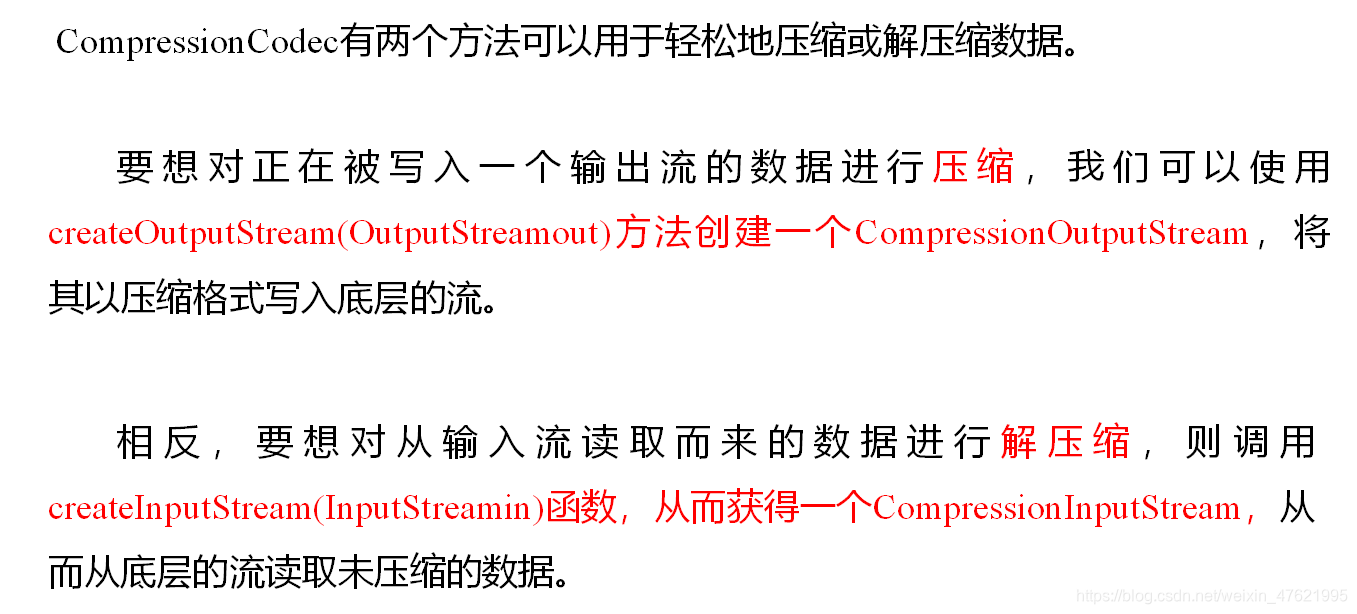

6.1 Compressing and decompressing data streams

Test the following compression formats:

DEFLATE org.apache.hadoop.io.compress.DefaultCodec

gzip org.apache.hadoop.io.compress.GzipCodec

bzip2 org.apache.hadoop.io.compress.BZip2Codec

package com.atguigu.mapreduce.compress;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.io.compress.CompressionCodec;
import org.apache.hadoop.io.compress.CompressionCodecFactory;
import org.apache.hadoop.io.compress.CompressionInputStream;
import org.apache.hadoop.io.compress.CompressionOutputStream;

import java.io.File;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;

public class TestCompress {

    public static void main(String[] args) throws IOException {
        compress("D:\\input\\inputcompression\\JaneEyre.txt",
                "org.apache.hadoop.io.compress.BZip2Codec");
        //decompress("D:\\input\\inputcompression\\JaneEyre.txt.bz2");
    }

    // Compress a file with the codec named by `method`
    private static void compress(String filename, String method) throws IOException {
        // 0 Look up the codec by name
        CompressionCodecFactory factory = new CompressionCodecFactory(new Configuration());
        CompressionCodec codec = factory.getCodecByName(method);
        if (codec == null) {
            System.out.println("cannot find codec " + method);
            return;
        }

        // 1 Open the input stream
        FileInputStream fis = new FileInputStream(new File(filename));

        // 2 Open the output stream
        // Plain file output stream; the file name gets the codec's extension appended
        FileOutputStream fos = new FileOutputStream(new File(filename + codec.getDefaultExtension()));
        // Wrap fos in a compressing output stream created by the codec
        CompressionOutputStream cos = codec.createOutputStream(fos);

        // 3 Copy the input stream to the compressing stream
        IOUtils.copyBytes(fis, cos, new Configuration());

        // 4 Release resources
        IOUtils.closeStream(cos);
        IOUtils.closeStream(fos);
        IOUtils.closeStream(fis);
    }

    // Decompress a file, choosing the codec from the file extension
    private static void decompress(String filename) throws IOException {
        // 0 Check that a codec exists for this file extension
        CompressionCodecFactory factory = new CompressionCodecFactory(new Configuration());
        CompressionCodec codec = factory.getCodec(new Path(filename));
        if (codec == null) {
            System.out.println("cannot find codec for file " + filename);
            return;
        }

        // 1 Open the input stream and wrap it in a decompressing stream
        FileInputStream fis = new FileInputStream(new File(filename));
        CompressionInputStream cis = codec.createInputStream(fis);

        // 2 Open the output stream
        FileOutputStream fos = new FileOutputStream(new File(filename + ".decodec"));

        // 3 Copy the decompressed bytes to the output file
        IOUtils.copyBytes(cis, fos, new Configuration());

        // 4 Release resources
        IOUtils.closeStream(fos);
        IOUtils.closeStream(cis);
        IOUtils.closeStream(fis);
    }
}
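A quick way to convince yourself that a compress/decompress pair is lossless is an in-memory round trip. The sketch below uses the JDK's `java.util.zip` gzip streams as a stand-in for a Hadoop codec, so it runs without a cluster. It is not the Hadoop API used above; it only follows the same wrap-the-stream-then-copy pattern. The class name `GzipRoundTrip` is hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

/** In-memory gzip round trip using only JDK classes. */
class GzipRoundTrip {

    static byte[] compress(byte[] data) {
        ByteArrayOutputStream bos = new ByteArrayOutputStream();
        // Closing the gzip stream flushes the trailer, so close before reading bos.
        try (GZIPOutputStream gos = new GZIPOutputStream(bos)) {
            gos.write(data);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bos.toByteArray();
    }

    static byte[] decompress(byte[] compressed) {
        ByteArrayOutputStream bos = new ByteArrayOutputStream();
        try (GZIPInputStream gis = new GZIPInputStream(new ByteArrayInputStream(compressed))) {
            byte[] buffer = new byte[4096];
            int n;
            while ((n = gis.read(buffer)) != -1) {
                bos.write(buffer, 0, n);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bos.toByteArray();
    }

    public static void main(String[] args) {
        StringBuilder text = new StringBuilder();
        for (int i = 0; i < 1000; i++) {
            text.append("hadoop compression round trip\n");
        }
        byte[] original = text.toString().getBytes(StandardCharsets.UTF_8);
        byte[] packed = compress(original);
        byte[] restored = decompress(packed);
        System.out.println("original bytes:   " + original.length);
        System.out.println("compressed bytes: " + packed.length);
        System.out.println("round trip ok:    " + Arrays.equals(original, restored));
    }
}
```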

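6.2 Compressing map output

Even if the input and output files of your MapReduce job are uncompressed, you can still compress the intermediate output of the map tasks. That output is written to disk and transferred over the network to the reduce nodes, so compressing it can improve performance considerably. Only two properties need to be set; the code below shows how.

1) The Hadoop source provided with this course supports the following compression codecs: BZip2Codec and DefaultCodec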

package com.atguigu.mapreduce.compress;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.io.compress.BZip2Codec;
import org.apache.hadoop.io.compress.CompressionCodec;
import org.apache.hadoop.io.compress.DefaultCodec; // needed only if option 1 below is uncommented
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class WordCountDriver {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

        // 1 Get the job object
        Configuration conf = new Configuration();

        // Option 1 (commented out): typed setters
        // // Enable map output compression
        // conf.setBoolean("mapreduce.map.output.compress", true);
        // // Set the map output codec
        // conf.setClass("mapreduce.map.output.compress.codec", DefaultCodec.class, CompressionCodec.class);

        // Option 2: set the same properties as strings
        // Enable map output compression
        conf.set("mapreduce.map.output.compress", "true");
        //conf.set("mapreduce.map.output.compress.codec","org.apache.hadoop.io.compress.BZip2Codec");

        // Enable reduce output compression and choose its codec
        conf.set("mapreduce.output.fileoutputformat.compress", "true");
        conf.set("mapreduce.output.fileoutputformat.compress.codec", "org.apache.hadoop.io.compress.BZip2Codec");

        Job job = Job.getInstance(conf);

        // 2 Set the driver class used to locate the job jar
        job.setJarByClass(WordCountDriver.class);

        // 3 Register the mapper and reducer
        job.setMapperClass(WordCountMapper.class);
        job.setReducerClass(WordCountReducer.class);

        // 4 Set the key/value types of the map output
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);

        // 5 Set the key/value types of the final job output
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        // 6 Set the input and output paths
        FileInputFormat.setInputPaths(job, new Path("D:\\input\\inputcompress2"));
        FileOutputFormat.setOutputPath(job, new Path("D:\\hadoop\\compress5"));

        // // Enable reduce output compression
        // FileOutputFormat.setCompressOutput(job, true);
        // // Set the compression codec
        // FileOutputFormat.setOutputCompressorClass(job, BZip2Codec.class);

        // 7 Submit the job
        boolean b = job.waitForCompletion(true);
        System.exit(b ? 0 : 1);
    }
}

2) The Mapper is unchanged

package com.atguigu.mapreduce.compress;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

    Text k = new Text();
    IntWritable v = new IntWritable(1);

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

        // 1 Read one line
        String line = value.toString();

        // 2 Split it into words
        String[] words = line.split(" ");

        // 3 Emit (word, 1) for each word
        for (String word : words) {
            k.set(word);
            context.write(k, v);
        }
    }
}

3) The Reducer is unchanged

package com.atguigu.mapreduce.compress;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

    IntWritable v = new IntWritable();

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values,
            Context context) throws IOException, InterruptedException {

        int sum = 0;

        // 1 Sum the counts for this key
        for (IntWritable value : values) {
            sum += value.get();
        }
        v.set(sum);

        // 2 Emit (word, total)
        context.write(key, v);
    }
}

6.3 Compressing reduce output

This example builds on the WordCount case.

1) Modify the driver

package com.atguigu.mapreduce.compress;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.io.compress.BZip2Codec;
import org.apache.hadoop.io.compress.DefaultCodec;
import org.apache.hadoop.io.compress.GzipCodec;
import org.apache.hadoop.io.compress.Lz4Codec;
import org.apache.hadoop.io.compress.SnappyCodec;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class WordCountDriver {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

        Configuration configuration = new Configuration();

        Job job = Job.getInstance(configuration);

        job.setJarByClass(WordCountDriver.class);

        job.setMapperClass(WordCountMapper.class);
        job.setReducerClass(WordCountReducer.class);

        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);

        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // Enable reduce output compression
        FileOutputFormat.setCompressOutput(job, true);

        // Set the compression codec
        FileOutputFormat.setOutputCompressorClass(job, BZip2Codec.class);
        // FileOutputFormat.setOutputCompressorClass(job, GzipCodec.class);
        // FileOutputFormat.setOutputCompressorClass(job, DefaultCodec.class);

        boolean result = job.waitForCompletion(true);

        // Exit with 0 on success and 1 on failure
        System.exit(result ? 0 : 1);
    }
}

2) The Mapper is unchanged

package com.atguigu.mapreduce.compress;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

    Text k = new Text();
    IntWritable v = new IntWritable(1);

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

        // 1 Read one line
        String line = value.toString();

        // 2 Split it into words
        String[] words = line.split(" ");

        // 3 Emit (word, 1) for each word
        for (String word : words) {
            k.set(word);
            context.write(k, v);
        }
    }
}

3) The Reducer is unchanged

package com.atguigu.mapreduce.compress;

import java.io.IOException;

import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

    IntWritable v = new IntWritable();

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values,
            Context context) throws IOException, InterruptedException {

        int sum = 0;

        // 1 Sum the counts for this key
        for (IntWritable value : values) {
            sum += value.get();
        }
        v.set(sum);

        // 2 Emit (word, total)
        context.write(key, v);
    }
}
