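Environment preparation

- Two machines running CentOS 7.x (x86_64)

- Hardware: at least 2 GB RAM, 2 CPUs, and a 40 GB disk

- Internet access for pulling images

- Swap disabled

IP plan

master1 192.168.4.11

master2 192.168.4.22

VIP 192.168.4.100

Configure /etc/hosts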

[root@master1 ~]# cat >> /etc/hosts << EOF

> 192.168.4.11 master1

> 192.168.4.22 master2

> 192.168.4.33 nova

> EOF
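Running the heredoc above a second time appends the same entries again. A minimal sketch of an idempotent alternative follows; the helper name add_host_entry and its optional third argument (the hosts file path, defaulting to /etc/hosts) are assumptions made for illustration only.

```shell
# Append "IP NAME" to a hosts file only if NAME is not already listed.
# add_host_entry is a hypothetical helper, not part of any package.
add_host_entry() {
    ip="$1"; name="$2"; hosts_file="${3:-/etc/hosts}"
    # Look for NAME as a whole whitespace-delimited field after the address column.
    if ! awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file"; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}
```

On each node this could be called as add_host_entry 192.168.4.11 master1 and add_host_entry 192.168.4.22 master2.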

Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

Disable SELinux

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config # permanent

setenforce 0 # temporary

Disable swap

swapoff -a # temporary

sed -ri 's/.*swap.*/#&/' /etc/fstab # permanent
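Since kubelet refuses to start with swap enabled by default, it can be worth confirming that both the temporary and the permanent step took effect. The sketch below is one way to do that; the function names are hypothetical, and the optional file arguments exist only so the checks can be tried on sample files.

```shell
# Succeed when the swaps table (default: /proc/swaps) lists no active device.
# The first line of that table is a column header, so it is skipped.
swap_is_off() {
    table="${1:-/proc/swaps}"
    [ -z "$(tail -n +2 "$table")" ]
}

# Print fstab lines that would still enable swap at boot (uncommented swap entries).
pending_swap_entries() {
    fstab="${1:-/etc/fstab}"
    grep -E '^[^#]*[[:space:]]swap[[:space:]]' "$fstab" || true
}
```

Calling swap_is_off with no argument inspects the running system, and an empty result from pending_swap_entries suggests that swap will stay off after a reboot.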

Pass bridged IPv4 traffic to the iptables chains

[root@master1 ~]# vim /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

[root@master1 ~]# modprobe br_netfilter

[root@master1 ~]# sysctl --system
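The same file can be written without an interactive editor, which helps when the step has to be repeated on master2. This is a sketch only: write_k8s_sysctl and sysctl_file_value are hypothetical helper names, and the optional directory argument is an assumption added so the function can be tried against a scratch directory.

```shell
# Write the three kernel settings to a sysctl drop-in file.
# The directory defaults to /etc/sysctl.d; the argument is an illustrative override.
write_k8s_sysctl() {
    conf_dir="${1:-/etc/sysctl.d}"
    cat > "$conf_dir/k8s.conf" << 'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}

# Print the value stored for one key in a sysctl-style file.
sysctl_file_value() {
    awk -F ' *= *' -v k="$2" '$1 == k { print $2 }' "$1"
}
```

As root, write_k8s_sysctl followed by modprobe br_netfilter and sysctl --system reproduces the steps above. The bridge keys only exist once br_netfilter is loaded, which is why the module is loaded before the settings are applied.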

Set up time synchronization

[root@master1 ~]# yum install -y chrony

[root@master1 ~]# vim /etc/chrony.conf

server ntp.aliyun.com iburst

bindacqaddress 0.0.0.0

allow 0/0

local stratum 10

[root@master1 ~]# chronyc sources -v

[root@master2 ~]# vim /etc/chrony.conf

server 192.168.4.11 iburst

[root@master2 ~]# chronyc sources -v

Configure online yum repositories

NetEase (163) yum repository

wget http://mirrors.163.com/.help/CentOS7-Base-163.repo

Aliyun yum repository

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

Configure keepalived on the master nodes

Install keepalived

[root@master1 ~]# yum -y install keepalived

[root@master2 ~]# yum -y install keepalived

Configure keepalived on master1 and master2

[root@master1 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id k8s

}

vrrp_script check_haproxy {

script "killall -0 haproxy"

interval 3

weight -2

fall 5

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface eth1

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.4.100

}

track_script {

check_haproxy

}

}

[root@master2 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id k8s

}

vrrp_script check_haproxy {

script "killall -0 haproxy"

interval 3

weight -2

fall 5

rise 2

}

vrrp_instance VI_1 {

state BACKUP

interface eth1

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.4.100

}

track_script {

check_haproxy

}

}
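With a negative weight, keepalived lowers a node's priority by that amount while the tracked script is failing. The arithmetic can be sketched as below, using the priorities (100 and 90) and the weight (-2) from the two files above; the function names are hypothetical and the -20 value is only an illustrative alternative, not something the original configuration uses.

```shell
# Effective VRRP priority while a tracked script with a negative weight is failing.
effective_priority() {
    echo $(( $1 + $2 ))
}

# Report whether the backup would outrank the master once the master's check fails.
# Arguments: master priority, backup priority, script weight.
would_fail_over() {
    master_prio=$1; backup_prio=$2; weight=$3
    if [ "$(effective_priority "$master_prio" "$weight")" -lt "$backup_prio" ]; then
        echo yes
    else
        echo no
    fi
}

would_fail_over 100 90 -2     # values from the configuration above, prints: no
would_fail_over 100 90 -20    # hypothetical larger penalty, prints: yes
```

Under these assumptions, a failed haproxy check on master1 leaves it at priority 98, still above master2's 90, so the VIP would move only if master1 or its keepalived goes down entirely. A weight whose magnitude exceeds the priority gap (10 here) would let the haproxy check alone trigger a failover.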

Enable at boot and check the service status

[root@master1 ~]# systemctl enable keepalived.service --now

[root@master2 ~]# systemctl enable keepalived.service --now

[root@master1 ~]# systemctl status keepalived.service

[root@master2 ~]# systemctl status keepalived.service

The output should show Active: active (running)

[root@master1 ~]# ip a s eth1

...

inet 192.168.4.11/24 brd 192.168.4.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet 192.168.4.100/32 scope global eth1

....

The VIP 192.168.4.100 is now present on master1.

Configure haproxy on all nodes

Install and configure haproxy; the configuration is the same on master1 and master2

[root@master1 ~]# yum install -y haproxy

[root@master1 ~]# cat /etc/haproxy/haproxy.cfg

#---------------------------------------------------------------------

# Example configuration for a possible web application. See the

# full configuration options online.

#

# http://haproxy.1wt.eu/download/1.4/doc/configuration.txt

#

#---------------------------------------------------------------------

#---------------------------------------------------------------------

# Global settings

#---------------------------------------------------------------------

global

# to have these messages end up in /var/log/haproxy.log you will

# need to:

#

# 1) configure syslog to accept network log events. This is done

# by adding the '-r' option to the SYSLOGD_OPTIONS in

# /etc/sysconfig/syslog

#

# 2) configure local2 events to go to the /var/log/haproxy.log

# file. A line like the following can be added to

# /etc/sysconfig/syslog

#

# local2.* /var/log/haproxy.log

#

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

#---------------------------------------------------------------------

# k8s apiserver frontend which proxies to the backends

#---------------------------------------------------------------------

frontend k8s-apiserver

mode tcp

bind *:16443

option tcplog

default_backend k8s-apiserver

#---------------------------------------------------------------------

# haproxy message

#---------------------------------------------------------------------

listen stats

bind *:1080

stats auth admin:admin

stats refresh 30s

stats realm HAProxy\ Statistics

stats uri /stats

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

backend k8s-apiserver

mode tcp

balance roundrobin

server master01.k8s.io 192.168.4.11:6443 check

server master02.k8s.io 192.168.4.22:6443 check
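Before starting the service, it may help to confirm that the backend entries match the IP plan. The sketch below lists them with awk; list_backend_servers is a hypothetical helper name, and the optional path argument is an assumption that defaults to the file shown above.

```shell
# Print "name address:port" for every server line in a haproxy configuration file.
list_backend_servers() {
    awk '$1 == "server" { print $2, $3 }' "${1:-/etc/haproxy/haproxy.cfg}"
}
```

For the configuration above this should list master01.k8s.io at 192.168.4.11:6443 and master02.k8s.io at 192.168.4.22:6443. The file's syntax can also be checked with haproxy -c -f /etc/haproxy/haproxy.cfg.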

Start haproxy, enable it at boot, and check the ports

Do the same on both machines

[root@master1 ~]# systemctl enable haproxy.service --now

[root@master1 ~]# systemctl status haproxy.service

The output should show Active: active (running)

[root@master1 ~]# ss -ntulp | grep haproxy

udp UNCONN 0 0 *:60441 *:* users:(("haproxy",pid=74028,fd=6),("haproxy",pid=74027,fd=6))

tcp LISTEN 0 128 *:1080 *:* users:(("haproxy",pid=74028,fd=7))

tcp LISTEN 0 128 *:16443 *:* users:(("haproxy",pid=74028,fd=5))

Install docker, kubeadm, kubelet, kubectl, and ipvs

Configure the docker and kubernetes yum repositories

[root@master1 ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

[root@master1 ~]# cat /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

Do the same on master2

Install ipvsadm, docker, kubectl, kubeadm, and kubelet

[root@master1 ~]# yum install -y ipset ipvsadm

[root@master2 ~]# yum install -y ipset ipvsadm

[root@master1 ~]# yum -y install docker-ce-18.09.1 docker-ce-cli-18.09.1 containerd.io

[root@master1 ~]# yum list docker-ce --showduplicates | sort -r

[root@master2 ~]# yum -y install docker-ce-18.09.1 docker-ce-cli-18.09.1 containerd.io

[root@master2 ~]# yum list docker-ce --showduplicates | sort -r

[root@master1 ~]# mkdir -p /etc/docker

Use only one of the two registry mirrors below.

[root@master1 ~]# vim /etc/docker/daemon.json    # option 1: NetEase (163) mirror

{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://hub-mirror.c.163.com"]
}

[root@master1 ~]# vim /etc/docker/daemon.json    # option 2: Aliyun mirror

{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://zu9nxqmf.mirror.aliyuncs.com"]
}
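Because the two variants differ only in the mirror URL, the file can also be generated from a small template. This is a sketch under that assumption; render_daemon_json is a hypothetical helper whose single argument is the mirror URL.

```shell
# Print a daemon.json that sets the systemd cgroup driver and one registry mirror.
# render_daemon_json is a hypothetical helper; $1 is the mirror URL.
render_daemon_json() {
    cat << EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["$1"]
}
EOF
}
```

For example, render_daemon_json https://hub-mirror.c.163.com > /etc/docker/daemon.json writes the first variant. After docker is restarted, docker info should report systemd as the cgroup driver, matching the cgroupDriver that kubelet is configured with.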

[root@master1 ~]# systemctl daemon-reload

[root@master1 ~]# systemctl restart docker

[root@master1 ~]# docker --version

Docker version 19.03.13, build 4484c46d9d

[root@master2 ~]# docker --version

Docker version 19.03.13, build 4484c46d9d

[root@master1 ~]# yum install -y kubelet-1.19.0 kubeadm-1.19.0 kubectl-1.19.0 --disableexcludes=kubernetes

[root@master2 ~]# yum install -y kubelet-1.19.0 kubeadm-1.19.0 kubectl-1.19.0 --disableexcludes=kubernetes

[root@master1 ~]# systemctl enable kubelet && systemctl start kubelet

[root@master2 ~]# systemctl enable kubelet && systemctl start kubelet

Set up tab completion

[root@master1 ~]# kubectl completion bash >/etc/bash_completion.d/kubectl

[root@master1 ~]# kubeadm completion bash >/etc/bash_completion.d/kubeadm

[root@master2 ~]# kubectl completion bash >/etc/bash_completion.d/kubectl

[root@master2 ~]# kubeadm completion bash >/etc/bash_completion.d/kubeadm

Create the kubeadm configuration file (method 1)

[root@master1 ~]# mkdir init; cd init

[root@master1 init]# cat kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: v1.19.0
apiServer:
  certSANs:
  - "192.168.4.100"
controlPlaneEndpoint: "192.168.4.100:6443"
imageRepository: registry.aliyuncs.com/google_containers
networking:
  dnsDomain: cluster.local
  podSubnet: "10.244.0.0/16"
  serviceSubnet: 10.96.0.0/12
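The endpoint address, port, and version are the values most likely to change between environments, so the file can be rendered from parameters. The sketch below assumes a hypothetical helper named render_kubeadm_config; every other field is copied from the file above.

```shell
# Print a ClusterConfiguration for the given endpoint host, port, and version.
# render_kubeadm_config is a hypothetical helper; fixed fields mirror the file above.
render_kubeadm_config() {
    vip="$1"; port="$2"; version="$3"
    cat << EOF
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
kubernetesVersion: $version
apiServer:
  certSANs:
  - "$vip"
controlPlaneEndpoint: "$vip:$port"
imageRepository: registry.aliyuncs.com/google_containers
networking:
  dnsDomain: cluster.local
  podSubnet: "10.244.0.0/16"
  serviceSubnet: 10.96.0.0/12
EOF
}
```

For example, render_kubeadm_config 192.168.4.100 6443 v1.19.0 > kubeadm-config.yaml reproduces the file, which can then be passed to kubeadm with --config kubeadm-config.yaml. Note that port 6443 on the VIP reaches the kube-apiserver of whichever node currently holds the VIP, while the haproxy frontend configured earlier listens on 16443; passing 16443 instead would route API traffic through haproxy.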

Initialize (do a dry run first and fix whatever errors it reports)

[root@master1 init]# kubeadm init --dry-run    # fix any reported errors before the real run

W1130 08:57:38.728697 19315 configset.go:348] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[init] Using Kubernetes version: v1.19.4

[preflight] Running pre-flight checks

[preflight] Would pull the required images (like 'kubeadm config images pull')

[certs] Using certificateDir folder "/etc/kubernetes/tmp/kubeadm-init-dryrun569943309"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master1] and IPs [10.96.0.1 192.168.1.134]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master1] and IPs [192.168.1.134 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master1] and IPs [192.168.1.134 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes/tmp/kubeadm-init-dryrun569943309"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/etc/kubernetes/tmp/kubeadm-init-dryrun569943309/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/etc/kubernetes/tmp/kubeadm-init-dryrun569943309/config.yaml"

[control-plane] Using manifest folder "/etc/kubernetes/tmp/kubeadm-init-dryrun569943309"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[dryrun] Would ensure that "/var/lib/etcd" directory is present

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/tmp/kubeadm-init-dryrun569943309"

[dryrun] Wrote certificates, kubeconfig files and control plane manifests to the "/etc/kubernetes/tmp/kubeadm-init-dryrun569943309" directory

[dryrun] The certificates or kubeconfig files would not be printed due to their sensitive nature

[dryrun] Please examine the "/etc/kubernetes/tmp/kubeadm-init-dryrun569943309" directory for details about what would be written

[dryrun] Would write file "/etc/kubernetes/manifests/kube-apiserver.yaml" with content:

apiVersion: v1

kind: Pod

metadata:

annotations:

kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 192.168.1.134:6443

creationTimestamp: null

labels:

component: kube-apiserver

tier: control-plane

name: kube-apiserver

namespace: kube-system

spec:

containers:

- command:

- kube-apiserver

- --advertise-address=192.168.1.134

- --allow-privileged=true

- --authorization-mode=Node,RBAC

- --client-ca-file=/etc/kubernetes/pki/ca.crt

- --enable-admission-plugins=NodeRestriction

- --enable-bootstrap-token-auth=true

- --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt

- --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt

- --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key

- --etcd-servers=https://127.0.0.1:2379

- --insecure-port=0

- --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt

- --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key

- --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname

- --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt

- --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key

- --requestheader-allowed-names=front-proxy-client

- --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt

- --requestheader-extra-headers-prefix=X-Remote-Extra-

- --requestheader-group-headers=X-Remote-Group

- --requestheader-username-headers=X-Remote-User

- --secure-port=6443

- --service-account-key-file=/etc/kubernetes/pki/sa.pub

- --service-cluster-ip-range=10.96.0.0/12

- --tls-cert-file=/etc/kubernetes/pki/apiserver.crt

- --tls-private-key-file=/etc/kubernetes/pki/apiserver.key

image: k8s.gcr.io/kube-apiserver:v1.19.4

imagePullPolicy: IfNotPresent

livenessProbe:

failureThreshold: 8

httpGet:

host: 192.168.1.134

path: /livez

port: 6443

scheme: HTTPS

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 15

name: kube-apiserver

readinessProbe:

failureThreshold: 3

httpGet:

host: 192.168.1.134

path: /readyz

port: 6443

scheme: HTTPS

periodSeconds: 1

timeoutSeconds: 15

resources:

requests:

cpu: 250m

startupProbe:

failureThreshold: 24

httpGet:

host: 192.168.1.134

path: /livez

port: 6443

scheme: HTTPS

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 15

volumeMounts:

- mountPath: /etc/ssl/certs

name: ca-certs

readOnly: true

- mountPath: /etc/pki

name: etc-pki

readOnly: true

- mountPath: /etc/kubernetes/pki

name: k8s-certs

readOnly: true

hostNetwork: true

priorityClassName: system-node-critical

volumes:

- hostPath:

path: /etc/ssl/certs

type: DirectoryOrCreate

name: ca-certs

- hostPath:

path: /etc/pki

type: DirectoryOrCreate

name: etc-pki

- hostPath:

path: /etc/kubernetes/pki

type: DirectoryOrCreate

name: k8s-certs

status: {}

[dryrun] Would write file "/etc/kubernetes/manifests/kube-controller-manager.yaml" with content:

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

component: kube-controller-manager

tier: control-plane

name: kube-controller-manager

namespace: kube-system

spec:

containers:

- command:

- kube-controller-manager

- --authentication-kubeconfig=/etc/kubernetes/controller-manager.conf

- --authorization-kubeconfig=/etc/kubernetes/controller-manager.conf

- --bind-address=127.0.0.1

- --client-ca-file=/etc/kubernetes/pki/ca.crt

- --cluster-name=kubernetes

- --cluster-signing-cert-file=/etc/kubernetes/pki/ca.crt

- --cluster-signing-key-file=/etc/kubernetes/pki/ca.key

- --controllers=*,bootstrapsigner,tokencleaner

- --kubeconfig=/etc/kubernetes/controller-manager.conf

- --leader-elect=true

- --port=0

- --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt

- --root-ca-file=/etc/kubernetes/pki/ca.crt

- --service-account-private-key-file=/etc/kubernetes/pki/sa.key

- --use-service-account-credentials=true

image: k8s.gcr.io/kube-controller-manager:v1.19.4

imagePullPolicy: IfNotPresent

livenessProbe:

failureThreshold: 8

httpGet:

host: 127.0.0.1

path: /healthz

port: 10257

scheme: HTTPS

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 15

name: kube-controller-manager

resources:

requests:

cpu: 200m

startupProbe:

failureThreshold: 24

httpGet:

host: 127.0.0.1

path: /healthz

port: 10257

scheme: HTTPS

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 15

volumeMounts:

- mountPath: /etc/ssl/certs

name: ca-certs

readOnly: true

- mountPath: /etc/pki

name: etc-pki

readOnly: true

- mountPath: /usr/libexec/kubernetes/kubelet-plugins/volume/exec

name: flexvolume-dir

- mountPath: /etc/kubernetes/pki

name: k8s-certs

readOnly: true

- mountPath: /etc/kubernetes/controller-manager.conf

name: kubeconfig

readOnly: true

hostNetwork: true

priorityClassName: system-node-critical

volumes:

- hostPath:

path: /etc/ssl/certs

type: DirectoryOrCreate

name: ca-certs

- hostPath:

path: /etc/pki

type: DirectoryOrCreate

name: etc-pki

- hostPath:

path: /usr/libexec/kubernetes/kubelet-plugins/volume/exec

type: DirectoryOrCreate

name: flexvolume-dir

- hostPath:

path: /etc/kubernetes/pki

type: DirectoryOrCreate

name: k8s-certs

- hostPath:

path: /etc/kubernetes/controller-manager.conf

type: FileOrCreate

name: kubeconfig

status: {}

[dryrun] Would write file "/etc/kubernetes/manifests/kube-scheduler.yaml" with content:

apiVersion: v1

kind: Pod

metadata:

creationTimestamp: null

labels:

component: kube-scheduler

tier: control-plane

name: kube-scheduler

namespace: kube-system

spec:

containers:

- command:

- kube-scheduler

- --authentication-kubeconfig=/etc/kubernetes/scheduler.conf

- --authorization-kubeconfig=/etc/kubernetes/scheduler.conf

- --bind-address=127.0.0.1

- --kubeconfig=/etc/kubernetes/scheduler.conf

- --leader-elect=true

- --port=0

image: k8s.gcr.io/kube-scheduler:v1.19.4

imagePullPolicy: IfNotPresent

livenessProbe:

failureThreshold: 8

httpGet:

host: 127.0.0.1

path: /healthz

port: 10259

scheme: HTTPS

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 15

name: kube-scheduler

resources:

requests:

cpu: 100m

startupProbe:

failureThreshold: 24

httpGet:

host: 127.0.0.1

path: /healthz

port: 10259

scheme: HTTPS

initialDelaySeconds: 10

periodSeconds: 10

timeoutSeconds: 15

volumeMounts:

- mountPath: /etc/kubernetes/scheduler.conf

name: kubeconfig

readOnly: true

hostNetwork: true

priorityClassName: system-node-critical

volumes:

- hostPath:

path: /etc/kubernetes/scheduler.conf

type: FileOrCreate

name: kubeconfig

status: {}

[dryrun] Would write file "/var/lib/kubelet/config.yaml" with content:

apiVersion: kubelet.config.k8s.io/v1beta1

authentication:

anonymous:

enabled: false

webhook:

cacheTTL: 0s

enabled: true

x509:

clientCAFile: /etc/kubernetes/pki/ca.crt

authorization:

mode: Webhook

webhook:

cacheAuthorizedTTL: 0s

cacheUnauthorizedTTL: 0s

cgroupDriver: systemd

clusterDNS:

- 10.96.0.10

clusterDomain: cluster.local

cpuManagerReconcilePeriod: 0s

evictionPressureTransitionPeriod: 0s

fileCheckFrequency: 0s

healthzBindAddress: 127.0.0.1

healthzPort: 10248

httpCheckFrequency: 0s

imageMinimumGCAge: 0s

kind: KubeletConfiguration

logging: {}

nodeStatusReportFrequency: 0s

nodeStatusUpdateFrequency: 0s

rotateCertificates: true

runtimeRequestTimeout: 0s

staticPodPath: /etc/kubernetes/manifests

streamingConnectionIdleTimeout: 0s

syncFrequency: 0s

volumeStatsAggPeriod: 0s

[dryrun] Would write file "/var/lib/kubelet/kubeadm-flags.env" with content:

KUBELET_KUBEADM_ARGS="--network-plugin=cni --pod-infra-container-image=k8s.gcr.io/pause:3.2"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/tmp/kubeadm-init-dryrun569943309". This can take up to 4m0s

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[dryrun] Would perform action CREATE on resource "configmaps" in API group "core/v1"

[dryrun] Attached object:

apiVersion: v1

data:

ClusterConfiguration: |

apiServer:

extraArgs:

authorization-mode: Node,RBAC

timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns:

type: CoreDNS

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: k8s.gcr.io

kind: ClusterConfiguration

kubernetesVersion: v1.19.4

networking:

dnsDomain: cluster.local

serviceSubnet: 10.96.0.0/12

scheduler: {}

ClusterStatus: |

apiEndpoints:

master1:

advertiseAddress: 192.168.1.134

bindPort: 6443

apiVersion: kubeadm.k8s.io/v1beta2

kind: ClusterStatus

kind: ConfigMap

metadata:

creationTimestamp: null

name: kubeadm-config

namespace: kube-system

[dryrun] Would perform action CREATE on resource "roles" in API group "rbac.authorization.k8s.io/v1"

[dryrun] Attached object:

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

creationTimestamp: null

name: kubeadm:nodes-kubeadm-config

namespace: kube-system

rules:

- apiGroups:

- ""

resourceNames:

- kubeadm-config

resources:

- configmaps

verbs:

- get

[dryrun] Would perform action CREATE on resource "rolebindings" in API group "rbac.authorization.k8s.io/v1"

[dryrun] Attached object:

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

creationTimestamp: null

name: kubeadm:nodes-kubeadm-config

namespace: kube-system

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubeadm:nodes-kubeadm-config

subjects:

- kind: Group

name: system:bootstrappers:kubeadm:default-node-token

- kind: Group

name: system:nodes

[kubelet] Creating a ConfigMap "kubelet-config-1.19" in namespace kube-system with the configuration for the kubelets in the cluster

[dryrun] Would perform action CREATE on resource "configmaps" in API group "core/v1"

[dryrun] Attached object:

apiVersion: v1

data:

kubelet: |

apiVersion: kubelet.config.k8s.io/v1beta1

authentication:

anonymous:

enabled: false

webhook:

cacheTTL: 0s

enabled: true

x509:

clientCAFile: /etc/kubernetes/pki/ca.crt

authorization:

mode: Webhook

webhook:

cacheAuthorizedTTL: 0s

cacheUnauthorizedTTL: 0s

cgroupDriver: systemd

clusterDNS:

- 10.96.0.10

clusterDomain: cluster.local

cpuManagerReconcilePeriod: 0s

evictionPressureTransitionPeriod: 0s

fileCheckFrequency: 0s

healthzBindAddress: 127.0.0.1

healthzPort: 10248

httpCheckFrequency: 0s

imageMinimumGCAge: 0s

kind: KubeletConfiguration

logging: {}

nodeStatusReportFrequency: 0s

nodeStatusUpdateFrequency: 0s

rotateCertificates: true

runtimeRequestTimeout: 0s

staticPodPath: /etc/kubernetes/manifests

streamingConnectionIdleTimeout: 0s

syncFrequency: 0s

volumeStatsAggPeriod: 0s

kind: ConfigMap

metadata:

annotations:

kubeadm.kubernetes.io/component-config.hash: sha256:c7c1c183c541e235b7922b2a5799a4a919c49faea7b315559c3b28d1642db368

creationTimestamp: null

name: kubelet-config-1.19

namespace: kube-system

[dryrun] Would perform action CREATE on resource "roles" in API group "rbac.authorization.k8s.io/v1"

[dryrun] Attached object:

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

creationTimestamp: null

name: kubeadm:kubelet-config-1.19

namespace: kube-system

rules:

- apiGroups:

- ""

resourceNames:

- kubelet-config-1.19

resources:

- configmaps

verbs:

- get

[dryrun] Would perform action CREATE on resource "rolebindings" in API group "rbac.authorization.k8s.io/v1"

[dryrun] Attached object:

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

creationTimestamp: null

name: kubeadm:kubelet-config-1.19

namespace: kube-system

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubeadm:kubelet-config-1.19

subjects:

- kind: Group

name: system:nodes

- kind: Group

name: system:bootstrappers:kubeadm:default-node-token

[dryrun] Would perform action GET on resource "nodes" in API group "core/v1"

[dryrun] Resource name: "master1"

[dryrun] Would perform action PATCH on resource "nodes" in API group "core/v1"

[dryrun] Resource name: "master1"

[dryrun] Attached patch:

{"metadata":{"annotations":{"kubeadm.alpha.kubernetes.io/cri-socket":"/var/run/dockershim.sock"}}}

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master1 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node master1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[dryrun] Would perform action GET on resource "nodes" in API group "core/v1"

[dryrun] Resource name: "master1"

[dryrun] Would perform action PATCH on resource "nodes" in API group "core/v1"

[dryrun] Resource name: "master1"

[dryrun] Attached patch:

{"metadata":{"labels":{"node-role.kubernetes.io/master":""}},"spec":{"taints":[{"effect":"NoSchedule","key":"node-role.kubernetes.io/master"}]}}

[bootstrap-token] Using token: fk7c5q.2r7lllv42ap70fc2

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[dryrun] Would perform action GET on resource "secrets" in API group "core/v1"

[dryrun] Resource name: "bootstrap-token-fk7c5q"

[dryrun] Would perform action CREATE on resource "secrets" in API group "core/v1"

[dryrun] Attached object:

apiVersion: v1

data:

auth-extra-groups: c3lzdGVtOmJvb3RzdHJhcHBlcnM6a3ViZWFkbTpkZWZhdWx0LW5vZGUtdG9rZW4=

description: VGhlIGRlZmF1bHQgYm9vdHN0cmFwIHRva2VuIGdlbmVyYXRlZCBieSAna3ViZWFkbSBpbml0Jy4=

expiration: MjAyMC0xMi0wMVQwODo1Nzo0My0wNTowMA==

token-id: Zms3YzVx

token-secret: MnI3bGxsdjQyYXA3MGZjMg==

usage-bootstrap-authentication: dHJ1ZQ==

usage-bootstrap-signing: dHJ1ZQ==

kind: Secret

metadata:

creationTimestamp: null

name: bootstrap-token-fk7c5q

namespace: kube-system

type: bootstrap.kubernetes.io/token

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[dryrun] Would perform action CREATE on resource "clusterroles" in API group "rbac.authorization.k8s.io/v1"

[dryrun] Attached object:

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

creationTimestamp: null

name: kubeadm:get-nodes

namespace: kube-system

rules:

- apiGroups:

- ""

resources:

- nodes

verbs:

- get

[dryrun] Would perform action CREATE on resource "clusterrolebindings" in API group "rbac.authorization.k8s.io/v1"

[dryrun] Attached object:

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

creationTimestamp: null

name: kubeadm:get-nodes

namespace: kube-system

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: kubeadm:get-nodes

subjects:

- kind: Group

name: system:bootstrappers:kubeadm:default-node-token

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[dryrun] Would perform action CREATE on resource "clusterrolebindings" in API group "rbac.authorization.k8s.io/v1"

[dryrun] Attached object:

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

creationTimestamp: null

name: kubeadm:kubelet-bootstrap

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:node-bootstrapper

subjects:

- kind: Group

name: system:bootstrappers:kubeadm:default-node-token

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[dryrun] Would perform action CREATE on resource "clusterrolebindings" in API group "rbac.authorization.k8s.io/v1"

[dryrun] Attached object:

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

creationTimestamp: null

name: kubeadm:node-autoapprove-bootstrap

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:certificates.k8s.io:certificatesigningrequests:nodeclient

subjects:

- kind: Group

name: system:bootstrappers:kubeadm:default-node-token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[dryrun] Would perform action CREATE on resource "clusterrolebindings" in API group "rbac.authorization.k8s.io/v1"

[dryrun] Attached object:

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

creationTimestamp: null

name: kubeadm:node-autoapprove-certificate-rotation

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient

subjects:

- kind: Group

name: system:nodes

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[dryrun] Would perform action CREATE on resource "configmaps" in API group "core/v1"

[dryrun] Attached object:

apiVersion: v1

data:

kubeconfig: |

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM1ekNDQWMrZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJd01URXpNREV6TlRjek9Wb1hEVE13TVRFeU9ERXpOVGN6T1Zvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBS1FoCjBTRkdmc1lqRlFTeWowR3dITUFheU1kU1RlcWVCbzI3UFN4YVVKMU0vamtZQTJ3R1F4c2tDVzFQTjFmVDEyaHQKNzdvNjl4L1JIUUs5eHBJdVIxL2NzbGhTaVpEVW9MNkFXUisvc0theHJvcUVLUFo3YUlEazB3cCs4aGx5dmQweApTSVlCNFhlY2J0b3VSOVdPTC9xdHUrQ2lOYktJdVR2bjViS2JQdHR2dzRXVlhnaDNtdkFvUGlCZSs2b25IV1VlCm1rNG9NSGJPZTlmV1RtMktLSGFNV3p1cG8xcTlSWkdITkxuTWNTeVpsQ1JWNTdnbjZZYjBJZU1vWWxZaW9tb1kKUWx0UzBLRVMwRmVCNXhqUGs4eXZpd2hPYi84cGp4TFJzanVucnBIUlFqRmEzY25DcHpBR0c5UUxPV1NqVG5TZwpBKzlsejNBSmhvdjZrcTBKNjFzQ0F3RUFBYU5DTUVBd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZOSHYyUWZWc1QvUUhFdXMrLzNwMkJWZzdNMHpNQTBHQ1NxR1NJYjMKRFFFQkN3VUFBNElCQVFDZkpNUkl3SngvZTkzM3hlTmhSVFZ0VDYzN3AwQmhYdXJpMXdZYTVJMjdvNEV0bFRPNgpiSlJuM2wvSUpRWVB4elZwZzRwZDN6dHVwU3RranBFbitwdjFWa3BtdWRRdnQ1MjFIandQYVZ6aHJzcVJBYnRkCnJZaG1mZ1Z1UlRGMW9hS0hNbTFWVVlYVWlySHVtbVpIRk51cG8rYm1LcHZuRzMrVi9kcTVaMmlxdi8yMWVTcjAKSmI3Zzh3Nm1ndDkwWERwbE5PeUo1RnNnbWxDa1lUc2pYdmsvbmdoRlNtd3J4OFNWeTR5enVUekJKMHFBY2YxUQpIUm9NQ1VmanZsRzZTZ0RkYjgwR0hhMDNEbjJ0Ym85WmsxOThKR0VvQXZBUnNVa0VBTCtnOGhyTUZ6ckk0K1liCk1ZbllDQklUUngyY1Vqamp3T1NvRXVyVFFrQWZqakMwZlVPRwotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://192.168.1.134:6443

name: ""

contexts: null

current-context: ""

kind: Config

preferences: {}

users: null

kind: ConfigMap

metadata:

creationTimestamp: null

name: cluster-info

namespace: kube-public

[dryrun] Would perform action CREATE on resource "roles" in API group "rbac.authorization.k8s.io/v1"

[dryrun] Attached object:

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

creationTimestamp: null

name: kubeadm:bootstrap-signer-clusterinfo

namespace: kube-public

rules:

- apiGroups:

- ""

resourceNames:

- cluster-info

resources:

- configmaps

verbs:

- get

[dryrun] Would perform action CREATE on resource "rolebindings" in API group "rbac.authorization.k8s.io/v1"

[dryrun] Attached object:

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

creationTimestamp: null

name: kubeadm:bootstrap-signer-clusterinfo

namespace: kube-public

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubeadm:bootstrap-signer-clusterinfo

subjects:

- kind: User

name: system:anonymous

[dryrun] Would perform action LIST on resource "deployments" in API group "apps/v1"

[dryrun] Would perform action GET on resource "configmaps" in API group "core/v1"

[dryrun] Resource name: "kube-dns"

[dryrun] Would perform action GET on resource "configmaps" in API group "core/v1"

[dryrun] Resource name: "coredns"

[dryrun] Would perform action LIST on resource "pods" in API group "core/v1"

[dryrun] Would perform action CREATE on resource "configmaps" in API group "core/v1"

[dryrun] Attached object:

apiVersion: v1

data:

Corefile: |

.:53 {

errors

health {

lameduck 5s

}

ready

kubernetes cluster.local in-addr.arpa ip6.arpa {

pods insecure

fallthrough in-addr.arpa ip6.arpa

ttl 30

}

prometheus :9153

forward . /etc/resolv.conf {

max_concurrent 1000

}

cache 30

loop

reload

loadbalance

}

kind: ConfigMap

metadata:

creationTimestamp: null

name: coredns

namespace: kube-system

[dryrun] Would perform action CREATE on resource "clusterroles" in API group "rbac.authorization.k8s.io/v1"

[dryrun] Attached object:

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

creationTimestamp: null

name: system:coredns

rules:

- apiGroups:

- ""

resources:

- endpoints

- services

- pods

- namespaces

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes

verbs:

- get

[dryrun] Would perform action CREATE on resource "clusterrolebindings" in API group "rbac.authorization.k8s.io/v1"

[dryrun] Attached object:

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

creationTimestamp: null

name: system:coredns

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:coredns

subjects:

- kind: ServiceAccount

name: coredns

namespace: kube-system

[dryrun] Would perform action CREATE on resource "serviceaccounts" in API group "core/v1"

[dryrun] Attached object:

apiVersion: v1

kind: ServiceAccount

metadata:

creationTimestamp: null

name: coredns

namespace: kube-system

[dryrun] Would perform action CREATE on resource "deployments" in API group "apps/v1"

[dryrun] Attached object:

apiVersion: apps/v1

kind: Deployment

metadata:

creationTimestamp: null

labels:

k8s-app: kube-dns

name: coredns

namespace: kube-system

spec:

replicas: 2

selector:

matchLabels:

k8s-app: kube-dns

strategy:

rollingUpdate:

maxUnavailable: 1

type: RollingUpdate

template:

metadata:

creationTimestamp: null

labels:

k8s-app: kube-dns

spec:

containers:

- args:

- -conf

- /etc/coredns/Corefile

image: k8s.gcr.io/coredns:1.7.0

imagePullPolicy: IfNotPresent

livenessProbe:

failureThreshold: 5

httpGet:

path: /health

port: 8080

scheme: HTTP

initialDelaySeconds: 60

successThreshold: 1

timeoutSeconds: 5

name: coredns

ports:

- containerPort: 53

name: dns

protocol: UDP

- containerPort: 53

name: dns-tcp

protocol: TCP

- containerPort: 9153

name: metrics

protocol: TCP

readinessProbe:

httpGet:

path: /ready

port: 8181

scheme: HTTP

resources:

limits:

memory: 170Mi

requests:

cpu: 100m

memory: 70Mi

securityContext:

allowPrivilegeEscalation: false

capabilities:

add:

- NET_BIND_SERVICE

drop:

- all

readOnlyRootFilesystem: true

volumeMounts:

- mountPath: /etc/coredns

name: config-volume

readOnly: true

dnsPolicy: Default

nodeSelector:

kubernetes.io/os: linux

priorityClassName: system-cluster-critical

serviceAccountName: coredns

tolerations:

- key: CriticalAddonsOnly

operator: Exists

- effect: NoSchedule

key: node-role.kubernetes.io/master

volumes:

- configMap:

items:

- key: Corefile

path: Corefile

name: coredns

name: config-volume

status: {}

[dryrun] Would perform action CREATE on resource "services" in API group "core/v1"

[dryrun] Attached object:

apiVersion: v1

kind: Service

metadata:

annotations:

prometheus.io/port: "9153"

prometheus.io/scrape: "true"

creationTimestamp: null

labels:

k8s-app: kube-dns

kubernetes.io/cluster-service: "true"

kubernetes.io/name: KubeDNS

name: kube-dns

namespace: kube-system

resourceVersion: "0"

spec:

clusterIP: 10.96.0.10

ports:

- name: dns

port: 53

protocol: UDP

targetPort: 53

- name: dns-tcp

port: 53

protocol: TCP

targetPort: 53

- name: metrics

port: 9153

protocol: TCP

targetPort: 9153

selector:

k8s-app: kube-dns

status:

loadBalancer: {}

[addons] Applied essential addon: CoreDNS

[dryrun] Would perform action CREATE on resource "serviceaccounts" in API group "core/v1"

[dryrun] Attached object:

apiVersion: v1

kind: ServiceAccount

metadata:

creationTimestamp: null

name: kube-proxy

namespace: kube-system

[dryrun] Would perform action CREATE on resource "configmaps" in API group "core/v1"

[dryrun] Attached object:

apiVersion: v1

data:

config.conf: |-

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: 0.0.0.0

bindAddressHardFail: false

clientConnection:

acceptContentTypes: ""

burst: 0

contentType: ""

kubeconfig: /var/lib/kube-proxy/kubeconfig.conf

qps: 0

clusterCIDR: ""

configSyncPeriod: 0s

conntrack:

maxPerCore: null

min: null

tcpCloseWaitTimeout: null

tcpEstablishedTimeout: null

detectLocalMode: ""

enableProfiling: false

healthzBindAddress: ""

hostnameOverride: ""

iptables:

masqueradeAll: false

masqueradeBit: null

minSyncPeriod: 0s

syncPeriod: 0s

ipvs:

excludeCIDRs: null

minSyncPeriod: 0s

scheduler: ""

strictARP: false

syncPeriod: 0s

tcpFinTimeout: 0s

tcpTimeout: 0s

udpTimeout: 0s

kind: KubeProxyConfiguration

metricsBindAddress: ""

mode: ""

nodePortAddresses: null

oomScoreAdj: null

portRange: ""

showHiddenMetricsForVersion: ""

udpIdleTimeout: 0s

winkernel:

enableDSR: false

networkName: ""

sourceVip: ""

kubeconfig.conf: |-

apiVersion: v1

kind: Config

clusters:

- cluster:

certificate-authority: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt

server: https://192.168.1.134:6443

name: default

contexts:

- context:

cluster: default

namespace: default

user: default

name: default

current-context: default

users:

- name: default

user:

tokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token

kind: ConfigMap

metadata:

annotations:

kubeadm.kubernetes.io/component-config.hash: sha256:7207ee17464c98fe6bd8e6992f7746970132893226c7f55701c2e11e6e8e957a

creationTimestamp: null

labels:

app: kube-proxy

name: kube-proxy

namespace: kube-system

[dryrun] Would perform action CREATE on resource "daemonsets" in API group "apps/v1"

[dryrun] Attached object:

apiVersion: apps/v1

kind: DaemonSet

metadata:

creationTimestamp: null

labels:

k8s-app: kube-proxy

name: kube-proxy

namespace: kube-system

spec:

selector:

matchLabels:

k8s-app: kube-proxy

template:

metadata:

creationTimestamp: null

labels:

k8s-app: kube-proxy

spec:

containers:

- command:

- /usr/local/bin/kube-proxy

- --config=/var/lib/kube-proxy/config.conf

- --hostname-override=$(NODE_NAME)

env:

- name: NODE_NAME

valueFrom:

fieldRef:

fieldPath: spec.nodeName

image: k8s.gcr.io/kube-proxy:v1.19.4

imagePullPolicy: IfNotPresent

name: kube-proxy

resources: {}

securityContext:

privileged: true

volumeMounts:

- mountPath: /var/lib/kube-proxy

name: kube-proxy

- mountPath: /run/xtables.lock

name: xtables-lock

- mountPath: /lib/modules

name: lib-modules

readOnly: true

hostNetwork: true

nodeSelector:

kubernetes.io/os: linux

priorityClassName: system-node-critical

serviceAccountName: kube-proxy

tolerations:

- key: CriticalAddonsOnly

operator: Exists

- operator: Exists

volumes:

- configMap:

name: kube-proxy

name: kube-proxy

- hostPath:

path: /run/xtables.lock

type: FileOrCreate

name: xtables-lock

- hostPath:

path: /lib/modules

name: lib-modules

updateStrategy:

type: RollingUpdate

status:

currentNumberScheduled: 0

desiredNumberScheduled: 0

numberMisscheduled: 0

numberReady: 0

[dryrun] Would perform action CREATE on resource "clusterrolebindings" in API group "rbac.authorization.k8s.io/v1"

[dryrun] Attached object:

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

creationTimestamp: null

name: kubeadm:node-proxier

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:node-proxier

subjects:

- kind: ServiceAccount

name: kube-proxy

namespace: kube-system

[dryrun] Would perform action CREATE on resource "roles" in API group "rbac.authorization.k8s.io/v1"

[dryrun] Attached object:

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

creationTimestamp: null

name: kube-proxy

namespace: kube-system

rules:

- apiGroups:

- ""

resourceNames:

- kube-proxy

resources:

- configmaps

verbs:

- get

[dryrun] Would perform action CREATE on resource "rolebindings" in API group "rbac.authorization.k8s.io/v1"

[dryrun] Attached object:

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

creationTimestamp: null

name: kube-proxy

namespace: kube-system

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kube-proxy

subjects:

- kind: Group

name: system:bootstrappers:kubeadm:default-node-token

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/tmp/kubeadm-init-dryrun569943309/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.134:6443 --token fk7c5q.2r7lllv42ap70fc2 \

--discovery-token-ca-cert-hash sha256:78deac4c392ca90d2ac6416e4fba52e50030741de1eb98b42344438ddd67d69f

[root@master1 init]#

Initialization (method 2)

[root@master1 k8s]# cat kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.4.11
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: master1
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.19.0
controlPlaneEndpoint: 192.168.4.11
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"

Initialization (method 3):

kubeadm init \

--apiserver-advertise-address=192.168.4.11 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.19.0 \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16 \

--ignore-preflight-errors=all

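--apiserver-advertise-address # Address the API server advertises to the cluster; use the IP of the master's physical NIC

--image-repository # Image registry to pull from; here the Alibaba Cloud mirror

--kubernetes-version # Kubernetes version

--service-cidr # Virtual network used inside the cluster (the Cluster IP range)

--pod-network-cidr # IP range assigned to Pods

--ignore-preflight-errors=all # Ignore errors reported by the preflight checks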
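The flags above can be collected into a small helper that prints the resulting command, so it can be reviewed before it is run. This is only a sketch: the function name build_init_cmd and the environment variable names are made up for illustration, and the defaults are simply the values used in this article. It leaves out --ignore-preflight-errors=all on the assumption that you would rather see preflight failures than hide them.

```shell
# Hypothetical helper: print a "kubeadm init" command built from variables.
# Defaults are the values used in this article; override them via the environment.
build_init_cmd() {
    local addr="${ADVERTISE_ADDR:-192.168.4.11}"
    local repo="${IMAGE_REPO:-registry.aliyuncs.com/google_containers}"
    local version="${K8S_VERSION:-v1.19.0}"
    local svc_cidr="${SERVICE_CIDR:-10.96.0.0/12}"
    local pod_cidr="${POD_CIDR:-10.244.0.0/16}"

    # Only print the command; nothing is executed here.
    printf 'kubeadm init --apiserver-advertise-address=%s --image-repository %s --kubernetes-version %s --service-cidr=%s --pod-network-cidr=%s\n' \
        "$addr" "$repo" "$version" "$svc_cidr" "$pod_cidr"
}
```

Calling build_init_cmd prints the command with the article's values; POD_CIDR=10.1.0.0/16 build_init_cmd prints it with a different Pod range.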

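Recovery method 1:

If initialization fails, run

kubeadm reset

to roll the node back, then initialize again

Recovery method 2:

1. Remove all worker nodes

kubectl delete node master1

2. On every worker node, delete the working directory and reset kubeadm

rm -rf /etc/kubernetes/*

kubeadm reset

3. On the master node, delete the working directories and reset kubeadm

rm -rf /etc/kubernetes/*

rm -rf ~/.kube/*

rm -rf /var/lib/etcd/*

kubeadm reset -f

4. Run kubeadm init again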
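The master-node cleanup in step 3 can be wrapped in a script that prints what it would do before anything is deleted. This is a sketch, not a tested recovery tool: the helper names run and reset_master and the DRY_RUN variable are invented here. It runs kubeadm reset before removing the directories, which is a choice of this sketch rather than the order listed above.

```shell
# Hypothetical helper: reset a control-plane node so "kubeadm init" can be rerun.
# DRY_RUN defaults to 1, so commands are only printed. Set DRY_RUN=0 to run them.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $1"
    else
        sh -c "$1"
    fi
}

reset_master() {
    # Assumption of this sketch: reset first, then remove whatever is left over.
    run 'kubeadm reset -f' || return 1
    run 'rm -rf /etc/kubernetes/*'
    run 'rm -rf "$HOME"/.kube/*'
    run 'rm -rf /var/lib/etcd/*'
}
```

Running reset_master as-is only lists the four commands; DRY_RUN=0 reset_master executes them as root on the node being reset.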

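The actual initialization

Generate the init configuration file, then pipe the init output through tee so that it is also saved to a log file

[root@master1 init]# kubeadm config print init-defaults > kubeadm-config.yaml # generate the init configuration file

[root@master1 init]# kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log

Run the join command printed by init on master1; the --control-plane flag joins the node to the cluster as a control-plane (master) node

Command for a master to join the cluster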

kubeadm join 192.168.4.100:6443 --token vk662r.h5m3lpfd3pk5lfwn \

--discovery-token-ca-cert-hash sha256:640838c4afd7dea679c00f1553bb51d6c74eeda5467ddd3dd591174cd7c2f46c \

--control-plane --certificate-key cb2de0e442b3932d451a1e2d7895a4e07f5cb43cf184d436dd9ec8487034c521

Command for a worker node to join the cluster

kubeadm join 192.168.4.100:6443 --token vk662r.h5m3lpfd3pk5lfwn \

--discovery-token-ca-cert-hash sha256:640838c4afd7dea679c00f1553bb51d6c74eeda5467ddd3dd591174cd7c2f46c
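Because the init output was saved with tee, the join commands can be recovered from kubeadm-init.log later instead of being copied from the terminal. The function below is a sketch with a made-up name (extract_join_commands); it assumes each join command starts with "kubeadm join" and is continued with trailing backslashes, as in the output shown above.

```shell
# Hypothetical helper: print every "kubeadm join ..." command found in a saved
# init log, joining backslash-continued lines into a single line each.
extract_join_commands() {
    awk '
        /^[ \t]*kubeadm join/ { collecting = 1; cmd = "" }
        collecting {
            line = $0
            sub(/^[ \t]+/, "", line)            # drop leading indentation
            cont = sub(/[ \t]*\\$/, "", line)   # 1 if the line ended with a backslash
            cmd = (cmd == "" ? line : cmd " " line)
            if (!cont) { print cmd; collecting = 0 }
        }
    ' "$1"
}
```

For example, extract_join_commands kubeadm-init.log prints one line per join command; the one containing --control-plane is for masters.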

Commands to run after initialization succeeds

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

A STATUS of Ready shows that master1 is working

[root@master1 init]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1 Ready master 5m v1.19.0

Troubleshooting

[root@master1 ]# kubectl get pods -n kube-system -o wide

[root@master1 ]# kubectl describe pod -n kube-system <pod-name>

[root@master1 ]# kubectl -n kube-system logs <pod-name>
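When the pod list is long, it helps to filter it down to the pods that need attention before running describe or logs on them. The filter below is a sketch with an invented name (not_running_pods); it assumes the default column layout of kubectl get pods for a single namespace, where STATUS is the third column.

```shell
# Hypothetical helper: read "kubectl get pods -n <namespace>" output on stdin
# and print the name and status of every pod that is not Running or Completed.
not_running_pods() {
    awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1, $3 }'
}
```

Typical use would be kubectl get pods -n kube-system | not_running_pods, followed by describe or logs on each name it prints.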

If kubectl get nodes reports NotReady, start troubleshooting (here the cause is that flannel has not been installed yet)

[root@master1 init]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1 NotReady master 2m v1.19.0

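Check the component statuses (cs). If scheduler and controller-manager are reported as Unhealthy, remove the --port=0 flag from their manifests as described below. The output here shows the healthy state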

[root@master1 flannel]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

If they are unhealthy, open the files below and edit them

[root@master1 manifests]# pwd

/etc/kubernetes/manifests

[root@master1 manifests]# ls

etcd.yaml kube-apiserver.yaml kube-controller-manager.yaml kube-scheduler.yaml

[root@master1 manifests]# vim kube-controller-manager.yaml

Delete the line containing --port=0

[root@master1 manifests]# vim kube-scheduler.yaml

Delete --port=0 here as well

[root@master1 manifests]# systemctl restart kubelet # restart the service (cs then reports healthy)
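The same edit can be made without opening an editor. The function below is a sketch (the name remove_port_zero and its two arguments are invented here): it deletes only a list item that is exactly "- --port=0" and first saves a copy of the manifest. The copy is written to a separate backup directory rather than next to the manifest, on the assumption that the kubelet may try to load any extra file it finds in /etc/kubernetes/manifests.

```shell
# Hypothetical helper: remove the "- --port=0" argument from a static pod manifest.
# $1 = manifest path, $2 = backup directory outside the manifests directory
remove_port_zero() {
    local manifest="$1" backup_dir="$2" tmp rc
    mkdir -p "$backup_dir" || return 1
    cp -p "$manifest" "$backup_dir/" || return 1
    tmp="$(mktemp)" || return 1
    # Drop only the list item that is exactly "- --port=0".
    sed '/^[[:space:]]*-[[:space:]]*--port=0[[:space:]]*$/d' "$manifest" > "$tmp" \
        && cat "$tmp" > "$manifest"
    rc=$?
    rm -f "$tmp"
    return "$rc"
}
```

For example: remove_port_zero /etc/kubernetes/manifests/kube-scheduler.yaml /root/manifests-backup, repeated for kube-controller-manager.yaml, then restart the kubelet as above.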

Installing and configuring the network plugin

[root@master1 ~]# mkdir flannel

[root@master1 ~]# cd flannel

[root@master1 flannel]# wget -c https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

[root@master1 flannel]# ls

kube-flannel.yml

[root@master1 flannel]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

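The image referenced by the manifest cannot be pulled from here, so the flannel pod is stuck in Init:ImagePullBackOff and CoreDNS stays Pending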

[root@master1 flannel]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-6d56c8448f-8df92 0/1 Pending 0 22m

coredns-6d56c8448f-t466t 0/1 Pending 0 22m

etcd-master1 1/1 Running 0 22m

kube-apiserver-master1 1/1 Running 0 22m

kube-controller-manager-master1 1/1 Running 0 22m

kube-flannel-ds-b2wph 0/1 Init:ImagePullBackOff 0 56s

kube-proxy-f9lxl 1/1 Running 0 22m

kube-scheduler-master1 1/1 Running 0 22m
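With a pod in Init:ImagePullBackOff, a useful first step is to see exactly which images the manifest asks for. The function below is a sketch with a made-up name (list_images); it assumes every image is declared on its own "image:" line, as in a typical Kubernetes manifest.

```shell
# Hypothetical helper: print the distinct container images referenced by a manifest.
list_images() {
    awk '
        $1 == "image:"              { print $2 }   # "image: repo/name:tag"
        $1 == "-" && $2 == "image:" { print $3 }   # "- image: repo/name:tag"
    ' "$1" | sort -u
}
```

Running list_images kube-flannel.yml shows what each node has to be able to pull; each printed image can then be tried by hand with docker pull.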

Open kube-flannel.yml and check the image it references

Download the image from https://github.com/coreos/flannel/releases

Then re-run kubectl apply -f kube-flannel.yml. If it still reports a problem, find the flannel resource definitions in kube-flannel.yml, copy them into a new file, and apply that file instead

kubectl apply -f a.yml

[root@master1 flannel]# cat a.yml

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay-mirror.qiniu.com/coreos/flannel:v0.13.1-rc1
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay-mirror.qiniu.com/coreos/flannel:v0.13.1-rc1
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
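The image fields in a.yml point at quay-mirror.qiniu.com instead of the original registry. Rather than editing each image line by hand, the registry host can be swapped with sed. This is a sketch: the function name use_mirror is invented, and it assumes the downloaded kube-flannel.yml references quay.io/coreos/flannel, which should be checked against the file you actually have.

```shell
# Hypothetical helper: print a manifest with the registry host of its images replaced.
# $1 = manifest path, $2 = original registry host, $3 = mirror registry host
use_mirror() {
    local manifest="$1" mirror="$3" src
    # Escape dots so the original registry name is matched literally by sed.
    src="$(printf '%s' "$2" | sed 's/\./\\./g')"
    sed "s#image: ${src}/#image: ${mirror}/#" "$manifest"
}
```

For example, use_mirror kube-flannel.yml quay.io quay-mirror.qiniu.com > a.yml writes the rewritten manifest to a.yml and leaves the downloaded file untouched.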

[root@master1 flannel]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-6d56c8448f-8df92 1/1 Running 5 43h

coredns-6d56c8448f-t466t 1/1 Running 5 43h

etcd-master1 1/1 Running 8 43h

etcd-master2 1/1 Running 0 19h

kube-apiserver-master1 1/1 Running 9 43h

kube-apiserver-master2 1/1 Running 6 19h

kube-controller-manager-master1 1/1 Running 8 20h

kube-controller-manager-master2 1/1 Running 7 19h

kube-flannel-ds-7rd8n 1/1 Running 2 19h

kube-flannel-ds-amd64-sg6qf 1/1 Running 2 20h

kube-flannel-ds-bv6xd 1/1 Running 0 20h

kube-proxy-dzhc2 1/1 Running 0 19h

kube-proxy-f9lxl 1/1 Running 6 43h

kube-scheduler-master1 1/1 Running 7 20h

Joining master2

Join master2 using another method: copy the certificates over from master1 by hand

[root@master2 ~]# mkdir -p /etc/kubernetes/pki/etcd

[root@master1 ~]# scp /etc/kubernetes/admin.conf root@192.168.4.22:/etc/kubernetes

[root@master1 ~]# scp /etc/kubernetes/pki/{ca.*,sa.*,front-proxy-ca.*} root@192.168.4.22:/etc/kubernetes/pki

[root@master1 ~]# scp /etc/kubernetes/pki/etcd/ca.* root@192.168.4.22:/etc/kubernetes/pki/etcd
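The globs above (ca.*, sa.*, front-proxy-ca.*) can also be spelled out file by file, which makes it easier to see what is being shared between the masters. The function below is a sketch with an invented name (cert_copy_commands). The file list is an assumption based on the default kubeadm layout under /etc/kubernetes/pki, and the function only prints the commands.

```shell
# Hypothetical helper: print the commands that copy the shared CA material
# from this master to another control-plane node. Nothing is executed.
# $1 = address of the target master
cert_copy_commands() {
    local target="$1" pki=/etc/kubernetes/pki f
    echo "ssh root@${target} mkdir -p ${pki}/etcd"
    # Assumed file names: the defaults kubeadm creates for a stacked etcd setup.
    for f in ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key etcd/ca.crt etcd/ca.key; do
        echo "scp ${pki}/${f} root@${target}:${pki}/${f}"
    done
}
```

cert_copy_commands 192.168.4.22 prints nine commands for review; piping the output to sh on master1 would run them.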

--control-plane joins master2 to the cluster as a control-plane node

[root@master2 ~]# kubeadm join 192.168.4.100:16443 --token 31p03i.fene5hpbo0qvkq35 --discovery-token-ca-cert-hash sha256:640838c4afd7dea679c00f1553bb51d6c74eeda5467ddd3dd591174cd7c2f46c --control-plane

[root@master1 flannel]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-6d56c8448f-8df92 1/1 Running 5 44h

kube-system coredns-6d56c8448f-t466t 1/1 Running 5 44h

kube-system etcd-master1 1/1 Running 8 44h

kube-system etcd-master2 1/1 Running 0 19h

kube-system kube-apiserver-master1 1/1 Running 9 44h

kube-system kube-apiserver-master2 1/1 Running 6 19h

kube-system kube-controller-manager-master1 1/1 Running 8 20h

kube-system kube-controller-manager-master2 1/1 Running 7 19h

kube-system kube-flannel-ds-7rd8n 1/1 Running 2 19h

kube-system kube-flannel-ds-amd64-sg6qf 1/1 Running 2 20h

kube-system kube-flannel-ds-bv6xd 1/1 Running 0 20h

kube-system kube-proxy-dzhc2 1/1 Running 0 19h

kube-system kube-proxy-f9lxl 1/1 Running 6 44h

kube-system kube-scheduler-master1 1/1 Running 7 20h

kube-system kube-scheduler-master2 1/1 Running 4 19h

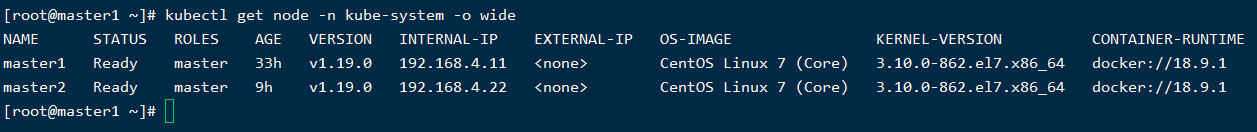

[root@master1 ~]# kubectl get node -n kube-system -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master1 Ready master 24h v1.19.0 192.168.4.11 <none> CentOS Linux 7 (Core) 3.10.0-862.el7.x86_64 docker://18.9.1

master2 NotReady master 21m v1.19.0 192.168.4.22 <none> CentOS Linux 7 (Core) 3.10.0-862.el7.x86_64 docker://18.9.1

The dual-master setup is complete

Create a token that never expires (the default token is valid for 24 hours)

[root@master ~]# kubeadm token create --ttl=0 --print-join-command

[root@master ~]# kubeadm token list

Compute the CA certificate hash used with --discovery-token-ca-cert-hash

[root@master ~]# openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt |openssl rsa -pubin -outform der |openssl dgst -sha256 -hex
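The openssl pipeline above prints the digest with a "(stdin)= " style prefix, which has to be removed before the value can be used in a join command. The function below is a sketch with a made-up name (ca_cert_hash) that wraps the same pipeline and strips the prefix; it assumes the CA uses an RSA key, as in the command above.

```shell
# Hypothetical helper: print the SHA-256 hash of a CA certificate's public key,
# in the bare hex form expected after "sha256:".
# $1 = path to the CA certificate, e.g. /etc/kubernetes/pki/ca.crt
ca_cert_hash() {
    openssl x509 -pubkey -noout -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'   # keep only the hex digest after the last space
}
```

The result can be passed to a join command as --discovery-token-ca-cert-hash "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)".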

[root@master1 ~]# kubectl get namespace

NAME STATUS AGE

default Active 43h

kube-node-lease Active 43h

kube-public Active 43h

kube-system Active 43h
