I. Overall Architecture of a Kafka and ZooKeeper Distributed Message Queue System

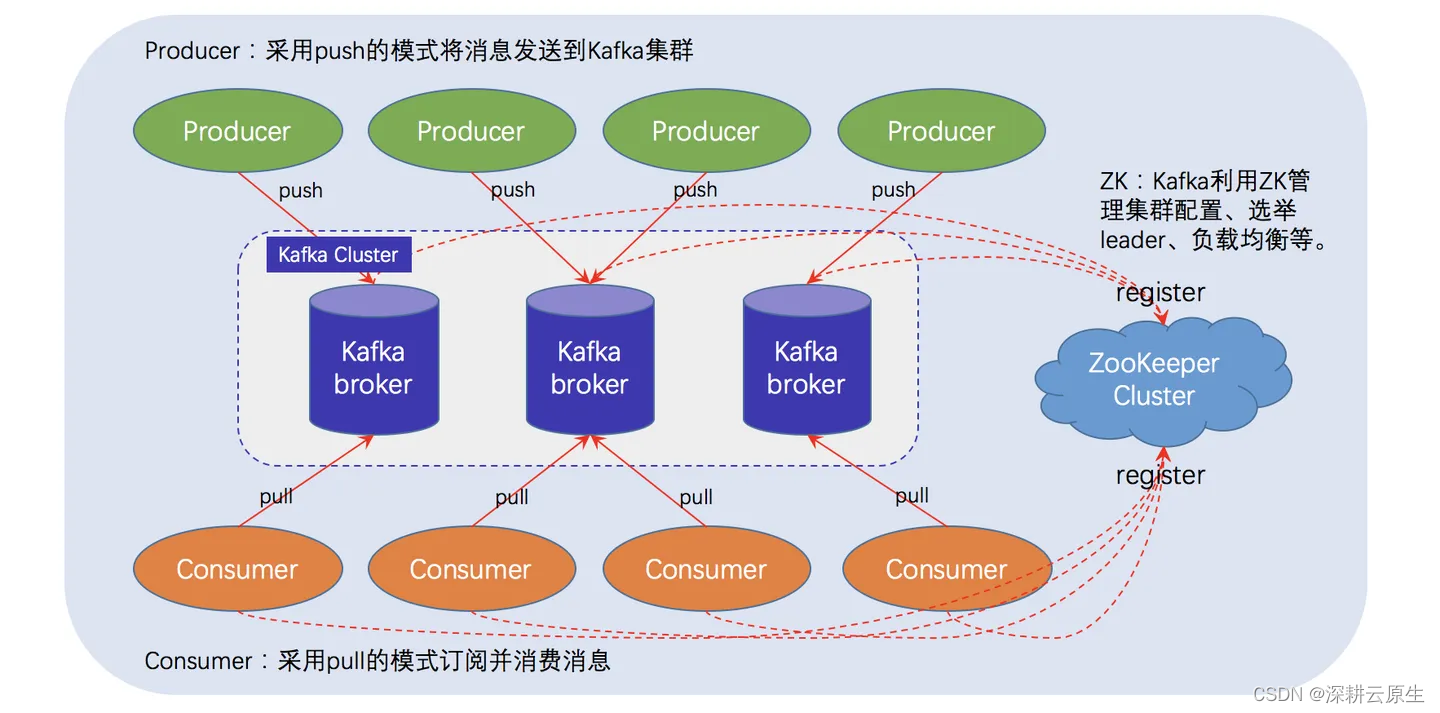

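A typical Kafka architecture consists of several Producers (message producers), several Brokers (the servers that act as Kafka nodes), several Consumers (organized into Consumer Groups), and a ZooKeeper cluster.

In this ZooKeeper-based mode, Kafka uses ZooKeeper to manage cluster configuration and to support Leader election. Older consumer clients also relied on ZooKeeper to Rebalance (redistribute load among consumers) when a Consumer Group changed; since Kafka 0.9, rebalancing is coordinated by the Brokers themselves. Producers publish messages to Brokers in Push mode, and Consumers subscribe to and consume messages from Brokers in Pull mode.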

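Two concepts are central to a Kafka node: Topic and Partition. The other terms used in a Kafka architecture are listed here as well.

- Producer: the message sender. It pushes messages to the Brokers (servers) of the Kafka cluster.
- Broker: a Kafka cluster is made up of multiple Kafka instances (servers), and each instance is one Broker.
- Topic: the category under which messages pushed to the cluster are filed. A Topic is a purely logical concept aimed at Producers and Consumers: a Producer only needs to know which Topic to push to, and a Consumer only needs to know which Topics it subscribes to.
- Partition: each Topic is divided into multiple Partitions, which are the physical shards. For load balancing, the Partitions of one Topic are spread across several Brokers in the cluster. For reliability, the number of copies of each Partition is set through Kafka's Replicas mechanism. In the architecture diagram above, each Partition has two replicas.
- Consumer: pulls messages from the Brokers of the Kafka cluster and consumes them.
- Consumer Group: in the High-Level Consumer API, every Consumer belongs to a Consumer Group. A message is consumed by only one Consumer within a given Consumer Group, but it can be consumed by several Consumer Groups.
- Replicas: the copies of a Partition, which keep the Partition highly available.
- Leader: one of the roles among the Replicas. Producers and Consumers interact only with the Leader.
- Follower: the other role among the Replicas. A Follower copies data from the Leader, and if the Leader fails, a new Leader is elected from its Followers to keep serving requests.
- Controller: one server in the Kafka cluster that carries out Leader Election and the various failover tasks.
- ZooKeeper: Kafka stores cluster metadata and similar information in ZooKeeper, as described later in this article.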
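To make the Consumer Group rule concrete, here is a minimal shell sketch of how the partitions of one Topic might be divided among the Consumers of a single group. It is an illustration only: `assign_partitions` is a hypothetical helper, and the modulo rule is a simplification, since Kafka's real assignment strategies are more involved. The point it shows is that each partition ends up with exactly one Consumer in the group.

```shell
#!/bin/sh
# Simplified illustration, not Kafka's actual assignor:
# partition p is handed to consumer (p mod number-of-consumers).
assign_partitions() {
  partitions=$1   # number of partitions in the topic
  consumers=$2    # number of consumers in one Consumer Group
  p=0
  while [ "$p" -lt "$partitions" ]; do
    echo "partition-$p -> consumer-$((p % consumers))"
    p=$((p + 1))
  done
}

# Six partitions shared by a group of three consumers.
assign_partitions 6 3
```

A second Consumer Group would get its own, independent assignment over the same six partitions, which is why the same message can be read by several groups.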

That covers the overall architecture of a Kafka and ZooKeeper message queue system. Now for the hands-on part!

II. Deploying nfs-provisioner for Dynamic PV Provisioning (StorageClass)

III. Cluster Deployment

# Operating system
# CentOS Linux release 7.9.2009 (Core)
lsb_release -a

# Kernel version
# 3.10.0-1160.90.1.el7.x86_64
uname -a

# k8s version: 1.21
# ZooKeeper version: 3.4.10
# Kafka version: 3.5.2

(I) ZooKeeper on k8s Deployment

Reference link on the official Kubernetes site

zookeeper.yaml

apiVersion: v1
kind: Service
metadata:
  name: zk-hs
  labels:
    app: zk
spec:
  ports:
  - port: 2888
    name: server
  - port: 3888
    name: leader-election
  clusterIP: None
  selector:
    app: zk
---
apiVersion: v1
kind: Service
metadata:
  name: zk-cs
  labels:
    app: zk
spec:
  ports:
  - port: 2181
    targetPort: 2181
    name: client
  selector:
    app: zk
---
# policy/v1 is available from k8s 1.21; policy/v1beta1 is deprecated there.
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: zk-pdb
spec:
  selector:
    matchLabels:
      app: zk
  # With 3 servers, at most 1 may be down if a quorum (2 of 3) is to survive.
  maxUnavailable: 1
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: zk
spec:
  selector:
    matchLabels:
      app: zk
  serviceName: zk-hs
  replicas: 3
  updateStrategy:
    type: RollingUpdate
  podManagementPolicy: OrderedReady
  template:
    metadata:
      labels:
        app: zk
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: "app"
                    operator: In
                    values:
                    - zk
              topologyKey: "kubernetes.io/hostname"
      containers:
      - name: kubernetes-zookeeper
        imagePullPolicy: IfNotPresent
        image: "zhaoguanghui6/kubernetes-zookeeper:1.0-3.4.10"
        resources:
          requests:
            memory: "0.5Gi"
            cpu: "0.5"
        ports:
        - containerPort: 2181
          name: client
        - containerPort: 2888
          name: server
        - containerPort: 3888
          name: leader-election
        command:
        - sh
        - -c
        - "start-zookeeper \
          --servers=3 \
          --data_dir=/var/lib/zookeeper/data \
          --data_log_dir=/var/lib/zookeeper/data/log \
          --conf_dir=/opt/zookeeper/conf \
          --client_port=2181 \
          --election_port=3888 \
          --server_port=2888 \
          --tick_time=2000 \
          --init_limit=10 \
          --sync_limit=5 \
          --heap=512M \
          --max_client_cnxns=60 \
          --snap_retain_count=3 \
          --purge_interval=12 \
          --max_session_timeout=40000 \
          --min_session_timeout=4000 \
          --log_level=INFO"
        readinessProbe:
          exec:
            command:
            - sh
            - -c
            - "zookeeper-ready 2181"
          initialDelaySeconds: 10
          timeoutSeconds: 5
        livenessProbe:
          exec:
            command:
            - sh
            - -c
            - "zookeeper-ready 2181"
          initialDelaySeconds: 10
          timeoutSeconds: 5
        volumeMounts:
        - name: datadir
          mountPath: /var/lib/zookeeper
      securityContext:
        runAsUser: 1000
        fsGroup: 1000
  volumeClaimTemplates:
  - metadata:
      name: datadir
    spec:
      storageClassName: nfs-client
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 1Gi
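The PodDisruptionBudget in zookeeper.yaml caps how many zk pods may be taken down voluntarily at the same time (for example during a node drain). ZooKeeper keeps serving only while a strict majority of the ensemble is running, so it is worth checking that cap against the ensemble size. The sketch below does the arithmetic; `max_tolerated_failures` is a hypothetical helper name, not part of any tool.

```shell
#!/bin/sh
# Largest number of ensemble members that can be down while a strict
# majority (quorum) of the servers is still running.
max_tolerated_failures() {
  servers=$1
  echo $(( (servers - 1) / 2 ))
}

for n in 3 5 7; do
  echo "ensemble of $n: quorum survives up to $(max_tolerated_failures "$n") unavailable"
done
```

For the three-replica StatefulSet used here the result is 1, so a `maxUnavailable` larger than 1 would allow a disruption that takes the ensemble below quorum.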

(II) Kafka on k8s Deployment

1. Build the image

Dockerfile

FROM openjdk:8-jdk

ENV KAFKA_HOME=/opt/kafka

RUN curl --silent --output /tmp/kafka_2.12-3.5.2.tgz https://mirrors.tuna.tsinghua.edu.cn/apache/kafka/3.5.2/kafka_2.12-3.5.2.tgz && tar --directory /opt -xzf /tmp/kafka_2.12-3.5.2.tgz && rm /tmp/kafka_2.12-3.5.2.tgz

RUN ln -s /opt/kafka_2.12-3.5.2 ${KAFKA_HOME}

WORKDIR $KAFKA_HOME

EXPOSE 9092

2. Create the Services

svc.yaml

apiVersion: v1
kind: Service
metadata:
  namespace: kafka
  name: kafka-hs
  labels:
    app: kafka
spec:
  clusterIP: None
  ports:
  - name: server
    port: 9092
    targetPort: 9092
  selector:
    app: kafka
---
apiVersion: v1
kind: Service
metadata:
  namespace: kafka
  name: kafka-cs-0
  labels:
    app: kafka
spec:
  ports:
  - port: 9092
    targetPort: 9092
    nodePort: 30127
    name: client
  type: NodePort
  selector:
    statefulset.kubernetes.io/pod-name: kafka-0
---
apiVersion: v1
kind: Service
metadata:
  namespace: kafka
  name: kafka-cs-1
  labels:
    app: kafka
spec:
  ports:
  - port: 9092
    targetPort: 9092
    nodePort: 30128
    name: client
  type: NodePort
  selector:
    statefulset.kubernetes.io/pod-name: kafka-1
---
apiVersion: v1
kind: Service
metadata:
  namespace: kafka
  name: kafka-cs-2
  labels:
    app: kafka
spec:
  ports:
  - port: 9092
    targetPort: 9092
    nodePort: 30129
    name: client
  type: NodePort
  selector:
    statefulset.kubernetes.io/pod-name: kafka-2
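The three kafka-cs-N Services differ only in their index, so they can also be generated instead of copied by hand. The following is a minimal sketch under the naming used in svc.yaml (Service kafka-cs-N, pod kafka-N, nodePort 30127 + N); `kafka_client_service` is a hypothetical helper written for this illustration.

```shell
#!/bin/sh
# Print one NodePort Service manifest for broker pod kafka-<index>.
# Assumes the svc.yaml conventions: kafka-cs-<index>, nodePort = base + index.
kafka_client_service() {
  index=$1
  base_port=${2:-30127}
  cat <<EOF
---
apiVersion: v1
kind: Service
metadata:
  namespace: kafka
  name: kafka-cs-$index
  labels:
    app: kafka
spec:
  type: NodePort
  ports:
  - name: client
    port: 9092
    targetPort: 9092
    nodePort: $((base_port + index))
  selector:
    statefulset.kubernetes.io/pod-name: kafka-$index
EOF
}

# Emit the manifests for brokers 0, 1 and 2.
for i in 0 1 2; do
  kafka_client_service "$i"
done
```

The output can be reviewed first and then piped to `kubectl apply -f -`. Selecting on the `statefulset.kubernetes.io/pod-name` label is what pins each NodePort to exactly one broker.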

3. Create the StatefulSet to deploy Kafka

Get the ZooKeeper addresses

for i in 0 1 2; do kubectl exec zk-$i -- hostname -f; done
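The hostnames printed by that loop follow the StatefulSet pattern pod-name.headless-service.namespace.svc.cluster-domain, so the value for `zookeeper.connect` can be assembled rather than typed out. This is a small sketch that assumes the names from zookeeper.yaml (StatefulSet zk, headless Service zk-hs, namespace default) and the default cluster domain cluster.local; `zk_connect` is a hypothetical helper.

```shell
#!/bin/sh
# Build a zookeeper.connect value from StatefulSet naming conventions:
# <statefulset>-<ordinal>.<headless-service>.<namespace>.svc.cluster.local:<port>
# (cluster.local is the default cluster domain; adjust if yours differs).
zk_connect() {
  name=$1
  service=$2
  namespace=$3
  replicas=$4
  port=${5:-2181}
  out=""
  i=0
  while [ "$i" -lt "$replicas" ]; do
    host="$name-$i.$service.$namespace.svc.cluster.local:$port"
    out="${out:+$out,}$host"   # add a comma only between entries
    i=$((i + 1))
  done
  echo "$out"
}

zk_connect zk zk-hs default 3
```

Each ordinal should appear exactly once in the result. Listing the same server several times gives the broker only one ZooKeeper endpoint to fall back on.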

kafka.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: kafka
  namespace: kafka
spec:
  serviceName: kafka-hs
  replicas: 3
  selector:
    matchLabels:
      app: kafka
  template:
    metadata:
      labels:
        app: kafka
    spec:
      containers:
      - name: kafka
        imagePullPolicy: IfNotPresent
        image: zhaoguanghui6/kubernetes-kafka:v3.5.2
        resources:
          requests:
            memory: "2Gi"
            cpu: 1000m
        ports:
        - containerPort: 9092
          name: server
        command:
        - sh
        - -c
        - "exec /opt/kafka/bin/kafka-server-start.sh /opt/kafka/config/server.properties \
          --override broker.id=${HOSTNAME##*-} \
          --override listeners=PLAINTEXT://:9092 \
          --override advertised.listeners=PLAINTEXT://10.10.101.114:$((${HOSTNAME##*-}+30127)) \
          --override zookeeper.connect=zk-0.zk-hs.default.svc.cluster.local:2181,zk-1.zk-hs.default.svc.cluster.local:2181,zk-2.zk-hs.default.svc.cluster.local:2181 \
          --override log.dir=/var/lib/kafka/ \
          --override auto.create.topics.enable=true \
          --override auto.leader.rebalance.enable=true \
          --override background.threads=10 \
          --override compression.type=producer \
          --override delete.topic.enable=true \
          --override leader.imbalance.check.interval.seconds=300 \
          --override leader.imbalance.per.broker.percentage=10 \
          --override log.flush.interval.messages=9223372036854775807 \
          --override log.flush.offset.checkpoint.interval.ms=60000 \
          --override log.flush.scheduler.interval.ms=9223372036854775807 \
          --override log.retention.bytes=-1 \
          --override log.retention.hours=168 \
          --override log.roll.hours=168 \
          --override log.roll.jitter.hours=0 \
          --override log.segment.bytes=1073741824 \
          --override log.segment.delete.delay.ms=60000 \
          --override message.max.bytes=1000012 \
          --override min.insync.replicas=1 \
          --override num.io.threads=8 \
          --override num.network.threads=3 \
          --override num.recovery.threads.per.data.dir=1 \
          --override num.replica.fetchers=1 \
          --override offset.metadata.max.bytes=4096 \
          --override offsets.commit.required.acks=-1 \
          --override offsets.commit.timeout.ms=5000 \
          --override offsets.load.buffer.size=5242880 \
          --override offsets.retention.check.interval.ms=600000 \
          --override offsets.retention.minutes=1440 \
          --override offsets.topic.compression.codec=0 \
          --override offsets.topic.num.partitions=50 \
          --override offsets.topic.replication.factor=3 \
          --override offsets.topic.segment.bytes=104857600 \
          --override queued.max.requests=500 \
          --override quota.consumer.default=9223372036854775807 \
          --override quota.producer.default=9223372036854775807 \
          --override replica.fetch.min.bytes=1 \
          --override replica.fetch.wait.max.ms=500 \
          --override replica.high.watermark.checkpoint.interval.ms=5000 \
          --override replica.lag.time.max.ms=10000 \
          --override replica.socket.receive.buffer.bytes=65536 \
          --override replica.socket.timeout.ms=30000 \
          --override request.timeout.ms=30000 \
          --override socket.receive.buffer.bytes=102400 \
          --override socket.request.max.bytes=104857600 \
          --override socket.send.buffer.bytes=102400 \
          --override unclean.leader.election.enable=true \
          --override zookeeper.session.timeout.ms=6000 \
          --override zookeeper.set.acl=false \
          --override broker.id.generation.enable=true \
          --override connections.max.idle.ms=600000 \
          --override controlled.shutdown.enable=true \
          --override controlled.shutdown.max.retries=3 \
          --override controlled.shutdown.retry.backoff.ms=5000 \
          --override controller.socket.timeout.ms=30000 \
          --override default.replication.factor=1 \
          --override fetch.purgatory.purge.interval.requests=1000 \
          --override group.max.session.timeout.ms=300000 \
          --override group.min.session.timeout.ms=6000 \
          --override log.cleaner.backoff.ms=15000 \
          --override log.cleaner.dedupe.buffer.size=134217728 \
          --override log.cleaner.delete.retention.ms=86400000 \
          --override log.cleaner.enable=true \
          --override log.cleaner.io.buffer.load.factor=0.9 \
          --override log.cleaner.io.buffer.size=524288 \
          --override log.cleaner.io.max.bytes.per.second=1.7976931348623157E308 \
          --override log.cleaner.min.cleanable.ratio=0.5 \
          --override log.cleaner.min.compaction.lag.ms=0 \
          --override log.cleaner.threads=1 \
          --override log.cleanup.policy=delete \
          --override log.index.interval.bytes=4096 \
          --override log.index.size.max.bytes=10485760 \
          --override log.message.timestamp.difference.max.ms=9223372036854775807 \
          --override log.message.timestamp.type=CreateTime \
          --override log.preallocate=false \
          --override log.retention.check.interval.ms=300000 \
          --override max.connections.per.ip=2147483647 \
          --override num.partitions=1 \
          --override producer.purgatory.purge.interval.requests=1000 \
          --override replica.fetch.backoff.ms=1000 \
          --override replica.fetch.max.bytes=1048576 \
          --override replica.fetch.response.max.bytes=10485760 \
          --override reserved.broker.max.id=1000"
        env:
        - name: KAFKA_HEAP_OPTS
          value: "-Xmx2G -Xms2G"
        - name: KAFKA_OPTS
          value: "-Dlogging.level=INFO"
        volumeMounts:
        - name: datadir
          mountPath: /var/lib/kafka
  volumeClaimTemplates:
  - metadata:
      name: datadir
    spec:
      accessModes: [ "ReadWriteMany" ]
      resources:
        requests:
          storage: 1Gi
      storageClassName: "nfs-client"
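Two expansions in the start command of kafka.yaml do the per-broker work: `${HOSTNAME##*-}` strips everything up to the last hyphen of the pod name to obtain the ordinal, and the arithmetic expansion adds that ordinal to 30127 to pick the matching NodePort. The sketch below reproduces both outside the pod so the mapping can be checked; `broker_id` and `advertised_port` are hypothetical helper names, and the pod names are passed in as arguments instead of being read from `HOSTNAME`.

```shell
#!/bin/sh
# Derive the broker id from a StatefulSet pod name such as "kafka-2".
broker_id() {
  pod=$1
  echo "${pod##*-}"   # remove the longest prefix ending in "-"
}

# Derive the advertised NodePort: base port plus the pod ordinal.
advertised_port() {
  pod=$1
  base_port=${2:-30127}
  echo $(( ${pod##*-} + base_port ))
}

for pod in kafka-0 kafka-1 kafka-2; do
  echo "$pod: broker.id=$(broker_id "$pod") advertised port=$(advertised_port "$pod")"
done
```

The ports produced this way have to line up with the `nodePort` values of the kafka-cs-N Services, otherwise external clients are sent to an address that does not reach the broker that advertised it.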

You can also add scheduling rules, health checks, and a security context:

# Pod level (under spec.template.spec)
affinity:
  podAntiAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
            - key: "app"
              operator: In
              values:
              - kafka
        topologyKey: "kubernetes.io/hostname"
  podAffinity:
    preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 1
        podAffinityTerm:
          labelSelector:
            matchExpressions:
              - key: "app"
                operator: In
                values:
                - zk
          topologyKey: "kubernetes.io/hostname"
terminationGracePeriodSeconds: 300

# Container level (under the kafka container)
readinessProbe:
  tcpSocket:
    port: 9092
  timeoutSeconds: 5
  periodSeconds: 5
  initialDelaySeconds: 40
livenessProbe:
  exec:
    command:
    - sh
    - -c
    # Must target the port the broker listens on (9092 in kafka.yaml).
    - "/opt/kafka/bin/kafka-broker-api-versions.sh --bootstrap-server=localhost:9092"
  timeoutSeconds: 5
  periodSeconds: 5
  initialDelaySeconds: 70

# Pod level (under spec.template.spec)
securityContext:
  runAsUser: 1000
  fsGroup: 1000

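This took some effort to put together, so please follow along! More updates are on the way.

If you have questions or suggestions, leave them in the comments.

This article walks through deploying Kafka and ZooKeeper on a Kubernetes cluster: dynamic PV provisioning with nfs-provisioner, the ZooKeeper deployment, and then building the Kafka image, creating the Services, and deploying the StatefulSet. ZooKeeper manages the Kafka cluster configuration, and the setup gives the cluster high availability and external connectivity.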
