1. Split the dataset

```python
import os
import random
from shutil import copy2
```

Step 1 is left as in the original script. You only need to fill in your own paths `file_path` and `new_file_path`.

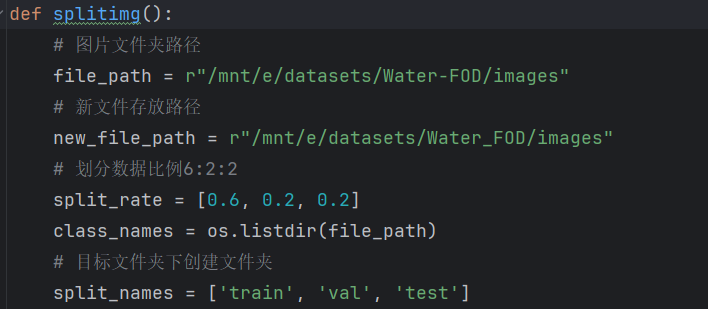

```python
def splitimg():
    # Folder that contains all images
    file_path = r"/mnt/e/datasets/Water-FOD/images"
    # Destination root; train/val/test subfolders are created here
    new_file_path = r"/mnt/e/datasets/Water_FOD/images"
    # Split ratio 6:2:2 (train:val:test)
    split_rate = [0.6, 0.2, 0.2]
    split_names = ['train', 'val', 'test']

    current_all_data = os.listdir(file_path)
    print(current_all_data)  # ['00000.jpg', '00001.jpg', '00002.jpg', ...]

    # Create new_file_path/train, val and test if they do not exist yet
    for split_name in split_names:
        os.makedirs(os.path.join(new_file_path, split_name), exist_ok=True)

    # Shuffle the image indices once and split them by ratio.
    # The folder holds the images directly (no per-class subfolders), so a single pass is enough;
    # looping over every file name here would try to move already-moved files.
    current_data_length = len(current_all_data)  # number of images in the folder
    current_data_index_list = list(range(current_data_length))
    random.shuffle(current_data_index_list)

    train_stop_flag = current_data_length * split_rate[0]
    val_stop_flag = current_data_length * (split_rate[0] + split_rate[1])

    current_idx = 0
    train_num = 0
    val_num = 0
    test_num = 0

    # Move each image into its split folder
    for i in current_data_index_list:
        src_img_path = os.path.join(file_path, current_all_data[i])
        if current_idx < train_stop_flag:
            split_name = 'train'
            train_num += 1
        elif current_idx < val_stop_flag:
            split_name = 'val'
            val_num += 1
        else:
            split_name = 'test'
            test_num += 1
        newpath = os.path.join(new_file_path, split_name, current_all_data[i])
        # Moves the file; use copy2(src_img_path, newpath) instead to keep the originals
        os.rename(src_img_path, newpath)
        current_idx += 1

    print("Done!", train_num, val_num, test_num)
```

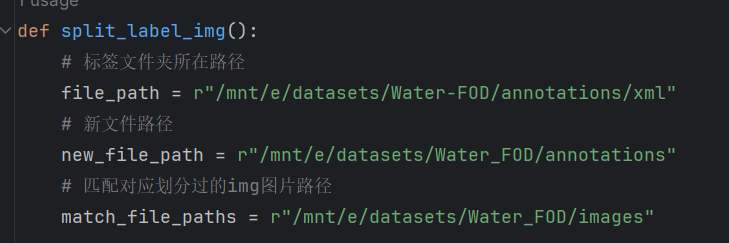

Step 2 has been upgraded. You only need to fill in the paths `file_path`, `new_file_path` and `match_file_paths`.

```python
def split_label_img():
    # Folder that contains all label files
    file_path = r"/mnt/e/datasets/Water-FOD/annotations/xml"
    # Destination root for the split labels
    new_file_path = r"/mnt/e/datasets/Water_FOD/annotations"
    # Root of the already-split images (contains train/val/test)
    match_file_paths = r"/mnt/e/datasets/Water_FOD/images"

    match_names = os.listdir(match_file_paths)  # ['train', 'val', 'test']

    for match_name in match_names:
        # Create new_file_path/train_labels, val_labels, test_labels
        split_path = os.path.join(new_file_path, match_name + '_labels')
        os.makedirs(split_path, exist_ok=True)

        match_img = os.path.join(match_file_paths, match_name)  # e.g. .../Water_FOD/images/train
        for img in os.listdir(match_img):
            firstname = os.path.splitext(img)[0]  # image name without extension
            label_name = firstname + '.xml'  # label file of this image; change the suffix for your format
            label_path = os.path.join(file_path, label_name)
            if not os.path.exists(label_path):
                print(f"Warning: label {label_name} not found")
                continue
            os.rename(label_path, os.path.join(split_path, label_name))

    print("Done!")


if __name__ == '__main__':
    splitimg()
    split_label_img()
```

The main change: set the label file extension (`.xml` above) to the one used by the annotations you want to split.
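Note that `splitimg` calls `random.shuffle` without a seed, so every run produces a different split. A minimal sketch of a reproducible alternative, assuming you are fine with a fixed seed and integer split sizes. `split_indices` is a hypothetical helper and not part of the script above:

```python
import random


def split_indices(n, rates=(0.6, 0.2, 0.2), seed=0):
    """Return (train, val, test) index lists for n items; same seed -> same split."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)  # private RNG, leaves the global random state alone
    n_train = int(n * rates[0])
    n_val = int(n * rates[1])
    # Everything left over goes to test, so no item is lost to rounding
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


train, val, test = split_indices(10)
print(len(train), len(val), len(test))  # 6 2 2
```

Indexing `current_all_data` with these three lists would replace the shuffle-and-compare loop in `splitimg`.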

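2. Randomly delete a given number of images together with their annotations. Back up the original dataset first, because deleted files cannot be recovered.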

```python
import os
import random
import argparse
import sys


def delete_files(num, img_dir, xml_dir):
    # Collect the base names (no extension) that have both an image and an XML file
    img_bases = {os.path.splitext(f)[0] for f in os.listdir(img_dir) if f.endswith('.jpg')}
    xml_bases = {os.path.splitext(f)[0] for f in os.listdir(xml_dir) if f.endswith('.xml')}
    common_bases = img_bases & xml_bases

    # Check that enough pairs exist
    if len(common_bases) < num:
        print(f"Error: only {len(common_bases)} pairs exist, cannot delete {num}")
        return

    # Randomly pick the pairs to delete.
    # random.sample() no longer accepts a set (Python 3.11+), so turn it into a sorted list first.
    to_delete = random.sample(sorted(common_bases), num)

    # Ask for confirmation
    proceed = input(f"About to delete {num} pairs of files. Continue? (y/n): ")
    if proceed.lower() != 'y':
        print("Cancelled")
        return

    # Delete
    deleted_count = 0
    for base in to_delete:
        img_path = os.path.join(img_dir, f"{base}.jpg")
        xml_path = os.path.join(xml_dir, f"{base}.xml")
        try:
            os.remove(img_path)
            os.remove(xml_path)
            deleted_count += 1
        except OSError as e:
            print(f"Failed to delete {base}: {e}")

    print(f"Deleted {deleted_count}/{num} pairs")


if __name__ == "__main__":
    parser = argparse.ArgumentParser(description="Randomly delete a given number of image/XML annotation pairs")
    parser.add_argument('--num', type=int, required=True, help='number of pairs to delete')
    parser.add_argument('--img_dir', default='/mnt/e/datasets/SODA-D/Images/Images', help='image directory')
    parser.add_argument('--xml_dir', default='/mnt/e/datasets/SODA-D/lables/val', help='XML directory')
    args = parser.parse_args()

    # Make sure both directories exist
    if not os.path.isdir(args.img_dir):
        print(f"Image directory does not exist: {args.img_dir}")
        sys.exit(1)
    if not os.path.isdir(args.xml_dir):
        print(f"XML directory does not exist: {args.xml_dir}")
        sys.exit(1)

    delete_files(args.num, args.img_dir, args.xml_dir)
```

In the code, change the image and annotation extensions (`.jpg`, `.xml`) to match your dataset.
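Because the deletion cannot be undone, you may want to preview the candidate pairs first. A minimal dry-run sketch under the same `.jpg`/`.xml` assumption. `preview_pairs` and its `seed` parameter are hypothetical additions, and the function only lists names without removing anything:

```python
import os
import random


def preview_pairs(img_dir, xml_dir, num, seed=None):
    """Return the base names a random pick of `num` pairs would select; no file is touched."""
    img_bases = {os.path.splitext(f)[0] for f in os.listdir(img_dir) if f.endswith('.jpg')}
    xml_bases = {os.path.splitext(f)[0] for f in os.listdir(xml_dir) if f.endswith('.xml')}
    common = sorted(img_bases & xml_bases)  # a sorted list is a valid population for random.sample
    if len(common) < num:
        raise ValueError(f"only {len(common)} pairs available, asked for {num}")
    return sorted(random.Random(seed).sample(common, num))
```

`delete_files` draws its own unseeded sample, so this shows which pairs are available and what a pick looks like. It does not predict the exact files that will be removed.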

--------------------------------------------------------------------------------------------------------------------------------

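Common object detection annotation formats

COCO format

Structure: a single JSON file stores the annotations of all images, including categories, bounding boxes (`bbox`, written as `[x, y, width, height]` with (x, y) the top-left corner), segmentation masks, and more.

Use case: large-scale object detection and instance segmentation; supports multiple labels and complex annotations.

VOC format

Structure: one XML file per image, containing the object classes, bounding boxes (top-left and bottom-right corner coordinates) and image metadata.

Use case: traditional object detection; widely supported.

YOLO format

Structure: one TXT file per image; each line holds the class and the normalized box center and size (e.g. `class x_center y_center width height`).

Features: lightweight, well suited to training real-time detectors.

DOTA format

Structure: one TXT file per image with support for rotated boxes (OBB), written as polygon vertex coordinates or rotated-box parameters.

Use case: detecting tilted objects in aerial images or other complex scenes.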
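To make the COCO, VOC and YOLO box conventions concrete, here is a minimal sketch converting one box between them. It assumes 0-based pixel coordinates and ignores the ±1 pixel conventions some tools apply. The function names are hypothetical helpers, not part of any library:

```python
def coco_to_voc(bbox):
    """COCO [x, y, w, h] (top-left + size) -> VOC [xmin, ymin, xmax, ymax]."""
    x, y, w, h = bbox
    return [x, y, x + w, y + h]


def voc_to_coco(box):
    """VOC [xmin, ymin, xmax, ymax] -> COCO [x, y, w, h]."""
    xmin, ymin, xmax, ymax = box
    return [xmin, ymin, xmax - xmin, ymax - ymin]


def voc_to_yolo(box, img_w, img_h):
    """VOC corners -> YOLO (x_center, y_center, width, height), normalized by the image size."""
    xmin, ymin, xmax, ymax = box
    return ((xmin + xmax) / 2 / img_w,
            (ymin + ymax) / 2 / img_h,
            (xmax - xmin) / img_w,
            (ymax - ymin) / img_h)


box = coco_to_voc([100, 50, 200, 100])
print(box)                         # [100, 50, 300, 150]
print(voc_to_yolo(box, 400, 200))  # (0.5, 0.5, 0.5, 0.5)
```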

--------------------------------------------------------------------------------------------------------------------------------

3. Convert JSON (COCO) to XML (VOC)


```python
import json
import os
import xml.etree.ElementTree as ET
from xml.dom import minidom


def json_to_voc_xml(json_path, output_dir):
    # Create the output directory
    os.makedirs(output_dir, exist_ok=True)

    # Load the COCO JSON
    with open(json_path, 'r', encoding='utf-8') as f:
        data = json.load(f)

    # Lookup tables: image id -> image info, category id -> category name
    images = {img["id"]: img for img in data["images"]}
    categories = {cat["id"]: cat["name"] for cat in data["categories"]}

    # Group the annotations by image id
    annotations = {}
    for ann in data["annotations"]:
        annotations.setdefault(ann["image_id"], []).append(ann)

    # Write one XML file per image
    for img_id, img_info in images.items():
        root = ET.Element("annotation")

        # Basic information
        ET.SubElement(root, "folder").text = "images"
        ET.SubElement(root, "filename").text = img_info["file_name"]
        ET.SubElement(root, "path").text = ""  # fill in the real path if needed

        # Image size
        size = ET.SubElement(root, "size")
        ET.SubElement(size, "width").text = str(img_info["width"])
        ET.SubElement(size, "height").text = str(img_info["height"])
        ET.SubElement(size, "depth").text = "3"  # assumes RGB images

        # One <object> per annotation
        for ann in annotations.get(img_id, []):
            obj = ET.SubElement(root, "object")
            ET.SubElement(obj, "name").text = categories[ann["category_id"]]
            ET.SubElement(obj, "pose").text = "Unspecified"
            ET.SubElement(obj, "truncated").text = "0"
            ET.SubElement(obj, "difficult").text = "0"

            # Convert the bbox from COCO (x, y, w, h) to VOC (xmin, ymin, xmax, ymax)
            x, y, w, h = ann["bbox"]
            bndbox = ET.SubElement(obj, "bndbox")
            ET.SubElement(bndbox, "xmin").text = str(int(x))
            ET.SubElement(bndbox, "ymin").text = str(int(y))
            ET.SubElement(bndbox, "xmax").text = str(int(x + w))
            ET.SubElement(bndbox, "ymax").text = str(int(y + h))

        # Pretty-print the XML
        xml_str = ET.tostring(root, encoding="utf-8")
        pretty_xml = minidom.parseString(xml_str).toprettyxml(indent="  ", encoding="utf-8").decode("utf-8")

        # Save as <image name>.xml; basename() drops any subfolder contained in file_name
        xml_filename = os.path.splitext(os.path.basename(img_info["file_name"]))[0] + ".xml"
        with open(os.path.join(output_dir, xml_filename), "w", encoding="utf-8") as f:
            f.write(pretty_xml)


if __name__ == "__main__":
    # Example usage: change the paths for your dataset
    json_to_voc_xml(
        json_path="/mnt/e/datasets/SODA-D/Annotations/val.json",  # input COCO JSON file
        output_dir="/mnt/e/datasets/SODA-D/val"  # output directory for the XML files
    )
```

4. Move the matching XML files according to the split image folders

```python
import os
import shutil

# Dataset paths
dataset_path = "/mnt/e/datasets/water_trash"  # original dataset (all images and all XML files)
split_root = "/mnt/e/datasets/water_trash1"   # dataset whose images are already split

annotations_path = os.path.join(dataset_path, "Annotations")

train_path = os.path.join(split_root, "images", "train")
val_path = os.path.join(split_root, "images", "val")
test_path = os.path.join(split_root, "images", "test")

train_anno_path = os.path.join(split_root, "Annotations", "train")
val_anno_path = os.path.join(split_root, "Annotations", "val")
test_anno_path = os.path.join(split_root, "Annotations", "test")

# Create the target directories
for path in (train_anno_path, val_anno_path, test_anno_path):
    os.makedirs(path, exist_ok=True)


def move_annotations(image_folder, target_annotation_folder):
    """Move the XML annotation of every image in image_folder into target_annotation_folder."""
    for img_file in os.listdir(image_folder):
        if img_file.lower().endswith((".jpg", ".png")):
            xml_file = os.path.splitext(img_file)[0] + ".xml"
            xml_source_path = os.path.join(annotations_path, xml_file)
            xml_target_path = os.path.join(target_annotation_folder, xml_file)
            if os.path.exists(xml_source_path):
                shutil.move(xml_source_path, xml_target_path)
            else:
                print(f"Warning: Annotation file {xml_file} not found!")


# Split the XML files
move_annotations(train_path, train_anno_path)
move_annotations(val_path, val_anno_path)
move_annotations(test_path, test_anno_path)

print("XML files split complete!")
```
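`move_annotations` only prints a warning when a label is missing, so it can be worth checking each split afterwards. A minimal sketch, assuming the same `.jpg`/`.png` images and `.xml` labels. `find_unpaired` is a hypothetical helper:

```python
import os

IMG_EXTS = (".jpg", ".png")


def find_unpaired(image_folder, annotation_folder):
    """Return (images without an XML, XMLs without an image) as sorted lists of base names."""
    img_bases = {os.path.splitext(f)[0] for f in os.listdir(image_folder)
                 if f.lower().endswith(IMG_EXTS)}
    xml_bases = {os.path.splitext(f)[0] for f in os.listdir(annotation_folder)
                 if f.lower().endswith(".xml")}
    return sorted(img_bases - xml_bases), sorted(xml_bases - img_bases)
```

For example, `missing, orphans = find_unpaired(train_path, train_anno_path)`. Two empty lists mean every training image has exactly one matching label.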

5. Convert XML (VOC) to JSON (COCO)

```python
import os
import json
import xml.etree.ElementTree as ET
from tqdm import tqdm


def read_xml(xml_root):
    '''
    :param xml_root: path of a .xml file
    :return: dict with 'cat': ['cat1', ...], 'bboxes': [[x1, y1, x2, y2], ...],
             'box_wh': [[w, h], ...], 'img_whd': [w, h, d]
    '''
    dict_info = {'cat': [], 'bboxes': [], 'box_wh': [], 'img_whd': []}
    if os.path.splitext(xml_root)[-1] != '.xml':
        return dict_info  # not an XML file

    tree = ET.parse(xml_root)  # parse the XML file
    root = tree.getroot()  # root node
    whd = root.find('size')
    dict_info['img_whd'] = [whd.find('width').text, whd.find('height').text, whd.find('depth').text]

    for obj in root.findall('object'):  # every <object> node under the root
        cat = str(obj.find('name').text)  # class name
        bbox = obj.find('bndbox')
        x1, y1, x2, y2 = [int(float(bbox.find(k).text)) for k in ('xmin', 'ymin', 'xmax', 'ymax')]
        dict_info['cat'].append(cat)
        dict_info['bboxes'].append([x1, y1, x2, y2])
        dict_info['box_wh'].append([x2 - x1 + 1, y2 - y1 + 1])
    return dict_info


def get_path_name(file_path, format='.jpg'):
    # Recursively collect every file under file_path that ends with `format`
    obj_path_lst = [os.path.join(root, file) for root, _, files in os.walk(file_path)
                    for file in files if file.endswith(format)]
    obj_name_lst = [os.path.basename(p) for p in obj_path_lst]
    return obj_path_lst, obj_name_lst


def xml2cocojson(xml_root, out_dir=None, assign_label=None, json_name=None, img_root=None):
    '''
    :param xml_root: folder containing the XML files (searched recursively)
    :param out_dir: folder where the JSON file is saved
    :param assign_label: list of training classes, e.g. ['pedes', 'bus']; if None, classes are collected from the XML files
    :param json_name: name of the saved JSON file
    :param img_root: image folder laid out like xml_root; if given, width and height are read from the images
    :return: None; writes a COCO-format JSON file
    '''
    xml_root_lst, xml_names_lst = get_path_name(xml_root, format='.xml')
    json_name = json_name if json_name is not None else 'coco_data_format.json'
    out_dir = out_dir if out_dir else 'out_dir'
    os.makedirs(out_dir, exist_ok=True)
    out_dir_json = os.path.join(out_dir, json_name)

    # If img_root is given, map image names to their paths
    img_paths = {}
    if img_root:
        import cv2
        img_root_lst, img_name_lst = get_path_name(img_root, format='.jpg')
        img_paths = dict(zip(img_name_lst, img_root_lst))

    json_dict = {"images": [], "type": "instances", "annotations": [], "categories": []}
    image_id = 10000000
    annotation_id = 10000000
    label_lst = list(assign_label) if assign_label else []
    info = {'valid_img': 0, 'invalid_img': 0}

    for i, xml_path in tqdm(enumerate(xml_root_lst), total=len(xml_root_lst)):
        xml_info = read_xml(xml_path)
        cat_lst = xml_info['cat']  # class names read from <name>
        if len(cat_lst) < 1:
            info['invalid_img'] += 1  # skip images without objects
            continue

        img_w, img_h = int(xml_info['img_whd'][0]), int(xml_info['img_whd'][1])
        img_name = os.path.splitext(xml_names_lst[i])[0] + '.jpg'
        if img_name in img_paths:  # take the real size from the image itself
            img = cv2.imread(img_paths[img_name])
            img_h, img_w = img.shape[:2]  # shape is (height, width, channels)

        image_id += 1
        json_dict['images'].append({'file_name': img_name, 'height': img_h, 'width': img_w, 'id': image_id})
        info['valid_img'] += 1

        for cat, b in zip(cat_lst, xml_info['bboxes']):
            if cat not in label_lst:
                if assign_label:
                    continue  # class not in the given list: skip this object
                label_lst.append(cat)  # no list given: add new classes as they appear
            obj_width, obj_height = b[2] - b[0], b[3] - b[1]
            category_id = label_lst.index(cat) + 1  # ids start at 1 (COCO style); 0-based also works
            annotation_id += 1
            json_dict['annotations'].append({
                'area': obj_width * obj_height, 'iscrowd': 0, 'image_id': image_id,
                'bbox': [b[0], b[1], obj_width, obj_height],
                'category_id': category_id, 'id': annotation_id, 'ignore': 0,
                'segmentation': []})

    for cid, cate in enumerate(label_lst):  # ids start at 1 here, matching category_id above
        json_dict['categories'].append({'supercategory': 'FWW', 'id': cid + 1, 'name': cate})

    with open(out_dir_json, 'w', encoding='utf-8') as f:
        json.dump(json_dict, f, indent=4)  # indent: spaces per nesting level
    print('saving json path:{}\n info:{}\ncategory list: {}'.format(out_dir_json, info, label_lst))


if __name__ == '__main__':
    root = '/mnt/e/datasets/SODA-D/lables/test'
    cat_lst = None
    xml2cocojson(root, assign_label=cat_lst)
```
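Before training on the generated file (by default `out_dir/coco_data_format.json`), a quick consistency check can catch problems such as boxes that fall outside their image. A minimal sketch. `check_coco` is a hypothetical helper, and it only checks the fields written by `xml2cocojson`:

```python
def check_coco(coco):
    """Return a list of problems found in a COCO-style dict (empty list = nothing found)."""
    problems = []
    images = {img['id']: img for img in coco['images']}
    cat_ids = {cat['id'] for cat in coco['categories']}
    for ann in coco['annotations']:
        img = images.get(ann['image_id'])
        if img is None:
            problems.append(f"annotation {ann['id']}: unknown image_id {ann['image_id']}")
            continue
        if ann['category_id'] not in cat_ids:
            problems.append(f"annotation {ann['id']}: unknown category_id {ann['category_id']}")
        x, y, w, h = ann['bbox']
        # COCO bbox is [x, y, width, height]; it must be non-empty and lie inside the image
        if w <= 0 or h <= 0 or x < 0 or y < 0 or x + w > img['width'] or y + h > img['height']:
            problems.append(f"annotation {ann['id']}: bbox {ann['bbox']} outside "
                            f"{img['width']}x{img['height']} image")
    return problems
```

Load the file with `json.load` and pass the resulting dict to `check_coco`. An empty list means none of these checks failed.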

6. Convert XML to TXT (YOLO)

```python
# -*- coding: utf-8 -*-
import os
import xml.etree.ElementTree as ET

import cv2
import numpy as np

# Class list; a class id is its index here. Left empty, classes are added in the order
# they are first seen, so ids can differ between runs on different splits.
# Pre-fill it (e.g. ['person', 'car']) to keep the mapping fixed.
classes = []


def convert(size, box):
    # size = (image width, image height); box = (xmin, xmax, ymin, ymax)
    dw = 1. / size[0]
    dh = 1. / size[1]
    # The -1 assumes 1-based pixel coordinates as in Pascal VOC; drop it for 0-based boxes
    x = (box[0] + box[1]) / 2.0 - 1
    y = (box[2] + box[3]) / 2.0 - 1
    w = box[1] - box[0]
    h = box[3] - box[2]
    # Normalize by the image size
    return (x * dw, y * dh, w * dw, h * dh)


def convert_annotation(xml_path, xml_name, img_dir, txt_dir, postfix):
    base = os.path.splitext(xml_name)[0]
    txtfile = os.path.join(txt_dir, base + '.txt')
    root = ET.parse(xml_path).getroot()

    # Read the image to get its real size; np.fromfile + imdecode also copes with non-ASCII paths on Windows
    img_file = os.path.join(img_dir, '{}.{}'.format(base, postfix))
    img = cv2.imdecode(np.fromfile(img_file, np.uint8), cv2.IMREAD_COLOR)
    h, w = img.shape[:2]

    res = []
    for obj in root.iter('object'):
        cls = obj.find('name').text
        if cls not in classes:
            classes.append(cls)
        cls_id = classes.index(cls)
        xmlbox = obj.find('bndbox')
        b = (float(xmlbox.find('xmin').text), float(xmlbox.find('xmax').text),
             float(xmlbox.find('ymin').text), float(xmlbox.find('ymax').text))
        bb = convert((w, h), b)
        res.append(str(cls_id) + " " + " ".join(str(a) for a in bb))

    # Images without objects get no .txt file
    if res:
        with open(txtfile, 'w', encoding='utf-8') as f:
            f.write('\n'.join(res))


if __name__ == "__main__":
    postfix = 'jpg'  # image extension
    imgpath = r'datasets/COCO2017-mini/images/val2017'  # image folder
    xmlpath = r'datasets/COCO2017-mini/annotations/val2017'  # XML folder
    txtpath = r'datasets/COCO2017-mini/annotations/val_txt'  # output folder for the txt files
    os.makedirs(txtpath, exist_ok=True)

    error_file_list = []
    for xml_name in os.listdir(xmlpath):
        path = os.path.join(xmlpath, xml_name)
        if not xml_name.lower().endswith('.xml'):
            print(f'file {xml_name} is not xml format.')
            continue
        try:
            convert_annotation(path, xml_name, imgpath, txtpath, postfix)
            print(f'file {xml_name} convert success.')
        except Exception as e:
            print(f'file {xml_name} convert error.')
            print(f'error message:\n{e}')
            error_file_list.append(xml_name)
    print(f'files that failed to convert:\n{error_file_list}')
    print(f'Dataset Classes:{classes}')
```
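Because `classes` grows in the order names are first seen, converting train and val in separate runs can give the same class different ids. One way around this is to scan all XML folders first and fix a sorted class list. The sketch below assumes every label uses the VOC `<object><name>` layout. `collect_classes` is a hypothetical helper:

```python
import os
import xml.etree.ElementTree as ET


def collect_classes(*xml_dirs):
    """Return the sorted set of <object><name> values found in all .xml files of xml_dirs."""
    names = set()
    for xml_dir in xml_dirs:
        for fname in os.listdir(xml_dir):
            if fname.lower().endswith('.xml'):
                root = ET.parse(os.path.join(xml_dir, fname)).getroot()
                names.update(obj.find('name').text for obj in root.iter('object'))
    return sorted(names)
```

Assigning `classes = collect_classes(train_xml_dir, val_xml_dir)` before the conversion loop (both folder names are placeholders) keeps the ids identical across splits. This works because `convert_annotation` only appends names that are not already in the list.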
