1 Deploying a Ceph cluster

https://github.com/ceph/ceph

# Brief deployment walkthrough

http://docs.ceph.org.cn/install/manual-deployment/

# Release history

https://docs.ceph.com/en/latest/releases/index.html

# Operating systems supported by Ceph 15 (Octopus)

https://docs.ceph.com/en/latest/releases/octopus/

# Operating systems supported by Ceph 16 (Pacific)

https://docs.ceph.com/en/latest/releases/pacific/

1.1 Deployment methods

# ceph-ansible (Python)

https://github.com/ceph/ceph-ansible

# ceph-salt(python)

https://github.com/ceph/ceph-salt

# ceph-container(shell)

https://github.com/ceph/ceph-container

# ceph-chef(Ruby)

https://github.com/ceph/ceph-chef

# cephadm (the deployment tool the Ceph project added in Ceph 15)

https://docs.ceph.com/en/latest/cephadm/

# ceph-deploy(python)

https://github.com/ceph/ceph-deploy

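# ceph-deploy is a command-line tool maintained by the Ceph project for deploying Ceph clusters. It works over SSH, running shell commands with sudo privileges

# together with some Python scripts, to deploy, manage, and maintain a Ceph cluster.

# ceph-deploy only deploys and manages the cluster itself; clients that need to access Ceph must install the client tools separately.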

1.2 Server preparation

# Hardware recommendations

http://docs.ceph.org.cn/start/hardware-recommendations/

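1. Four servers act as the cluster's OSD storage servers. Each has two networks: the public network carries client traffic, and the cluster network carries cluster management and data synchronization traffic. Each server has three or more data disks.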

172.18.60.16 / 192.168.60.16

172.18.60.17 / 192.168.60.17

172.18.60.18 / 192.168.60.18

172.18.60.19 / 192.168.60.19

# Disk layout on each storage server

# /dev/sdb /dev/sdc /dev/sdd /dev/sde, 100G each

root@ceph-node1:~# fdisk -l

Disk /dev/loop0: 89.1 MiB, 93417472 bytes, 182456 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sda: 200 GiB, 214748364800 bytes, 419430400 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: gpt

Disk identifier: 9EB58D83-B2F8-48D9-8E82-9B08002F3C59

Device Start End Sectors Size Type

/dev/sda1 2048 4095 2048 1M BIOS boot

/dev/sda2 4096 209719295 209715200 100G Linux filesystem

/dev/sda3 209719296 213913599 4194304 2G Linux filesystem

/dev/sda4 213913600 218107903 4194304 2G Linux swap

/dev/sda5 218107904 322965503 104857600 50G Linux filesystem

Disk /dev/sdb: 100 GiB, 107374182400 bytes, 209715200 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdc: 100 GiB, 107374182400 bytes, 209715200 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdd: 100 GiB, 107374182400 bytes, 209715200 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sde: 100 GiB, 107374182400 bytes, 209715200 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

root@ceph-node1:~#

2. Three servers act as the cluster's Mon (monitor) servers. Each must be able to reach the cluster network.

172.18.60.11 / 192.168.60.11

172.18.60.12 / 192.168.60.12

172.18.60.13 / 192.168.60.13

3. Two ceph-mgr manager servers, both able to reach the cluster network.

172.18.60.14 / 192.168.60.14

172.18.60.15 / 192.168.60.15

4. One server is the deployment host where ceph-deploy is installed; it can also share a machine with another role such as ceph-mgr.

172.18.60.10 / 192.168.60.10

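5. Create a regular user that can run privileged commands through sudo, and configure hostname resolution. The deployment requires every host to have a distinct hostname. On CentOS, also disable the firewall and SELinux on every server.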

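| Role | Public network address (client access) | Cluster network address (management and data sync) | Disks | OS |
|---|---|---|---|---|
| ceph-deploy | 172.18.60.10 | 192.168.60.10 | No special requirement | Ubuntu 18.04 |
| ceph-mon1 | 172.18.60.11 | 192.168.60.11 | No special requirement | Ubuntu 18.04 |
| ceph-mon2 | 172.18.60.12 | 192.168.60.12 | No special requirement | Ubuntu 18.04 |
| ceph-mon3 | 172.18.60.13 | 192.168.60.13 | No special requirement | Ubuntu 18.04 |
| ceph-mgr1 | 172.18.60.14 | 192.168.60.14 | No special requirement | Ubuntu 18.04 |
| ceph-mgr2 | 172.18.60.15 | 192.168.60.15 | No special requirement | Ubuntu 18.04 |
| ceph-node1 | 172.18.60.16 | 192.168.60.16 | Four added disks, not mounted | Ubuntu 18.04 |
| ceph-node2 | 172.18.60.17 | 192.168.60.17 | Four added disks, not mounted | Ubuntu 18.04 |
| ceph-node3 | 172.18.60.18 | 192.168.60.18 | Four added disks, not mounted | Ubuntu 18.04 |
| ceph-node4 | 172.18.60.19 | 192.168.60.19 | Four added disks, not mounted | Ubuntu 18.04 |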

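1.3 System environment preparation

- Time synchronization

- Disable SELinux and the firewall

- Configure hostname resolution, either locally or through DNS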

1.4 Deploying the RADOS cluster

# Alibaba Cloud mirror

https://mirrors.aliyun.com/ceph/

# NetEase (163) mirror

http://mirrors.163.com/ceph/

# Tsinghua University (TUNA) mirror

https://mirrors.tuna.tsinghua.edu.cn/ceph/

1.4.1 Repository preparation

Configure the Ceph package repository on every node:

Import the release key:

wget -q -O- 'https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc' | sudo apt-key add -

CentOS 7.x

yum -y install https://mirrors.aliyun.com/ceph/rpm-octopus/el7/noarch/ceph-release-1-1.el7.noarch.rpm

ll /etc/yum.repos.d/

Configure the EPEL repository on every node

yum install epel-release -y

ll /etc/yum.repos.d/

Ubuntu 18.04.x

cat /etc/apt/sources.list

# Source (deb-src) mirrors are commented out by default to speed up apt update; uncomment them if needed

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-updates main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-updates main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-backports main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-backports main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-security main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-security main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic main

echo "deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic main" | sudo tee -a /etc/apt/sources.list

Run it on every node with a loop:

for ip in $(seq 0 9); do
  echo $ip
  ssh root@172.18.60.1$ip "echo 'deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic main' >> /etc/apt/sources.list"
done
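Running the loop above twice would add the repository line twice. A minimal sketch of an idempotent append follows; `append_once` is a hypothetical helper name, not part of any Ceph tooling, and the demo writes to a scratch file rather than to /etc/apt/sources.list.

```shell
#!/usr/bin/env bash
# append_once FILE LINE
# Append LINE to FILE only when that exact line is not already present.
# (Hypothetical helper for illustration.)
append_once() {
    local file="$1"
    local line="$2"
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Demo on a scratch file so nothing under /etc is touched.
demo_list=$(mktemp)
repo_line='deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic main'
append_once "$demo_list" "$repo_line"
append_once "$demo_list" "$repo_line"   # second call is a no-op
grep -cxF -- "$repo_line" "$demo_list"  # prints 1
```

On a real node the same check could guard the `>> /etc/apt/sources.list` redirection inside the ssh command.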

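1.4.2 Creating the song user

Deploy and run the Ceph cluster as a dedicated regular user; all it needs is to run privileged commands through sudo non-interactively. Newer versions of ceph-deploy accept any user that can run sudo, root included, but a regular user such as ceph, cephuser, or cephadmin is still recommended for managing the cluster.

Create the song user on the ceph-deploy node and on every storage, mon, and mgr node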

# Ubuntu

groupadd -r -g 2021 song && useradd -r -m -s /bin/bash -u 2021 -g 2021 song

echo song:123456 | chpasswd

# CentOS

groupadd song -g 2020 && useradd -u 2020 -g 2020 song && echo "123456" | passwd --stdin song

On every server, allow the song user to run privileged commands through sudo

echo "song ALL=(ALL) NOPASSWD:ALL" >> /etc/sudoers

# Run on all nodes in one loop

for ip in $(seq 0 9);do echo $ip;ssh root@172.18.60.1$ip "echo 'song ALL=(ALL) NOPASSWD:ALL' >> /etc/sudoers";done
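Appending to /etc/sudoers directly works, but a syntax slip there can lock sudo out. A common alternative is a drop-in file, sketched below under the assumption that the host's sudoers includes /etc/sudoers.d (the default on stock Ubuntu 18.04 and CentOS 7). `write_sudoers_dropin` is a hypothetical helper, and the demo targets a scratch directory.

```shell
#!/usr/bin/env bash
# write_sudoers_dropin DIR USER
# Write a passwordless-sudo rule for USER into DIR/USER with mode 0440.
# (Hypothetical helper for illustration.)
write_sudoers_dropin() {
    local dir="$1"
    local user="$2"
    printf '%s ALL=(ALL) NOPASSWD:ALL\n' "$user" > "$dir/$user"
    chmod 0440 "$dir/$user"
}

# Demo in a scratch directory instead of /etc/sudoers.d.
demo_dir=$(mktemp -d)
write_sudoers_dropin "$demo_dir" song
cat "$demo_dir/song"

# On a real host, run as root with DIR=/etc/sudoers.d and then check the
# syntax before logging out:
#   visudo -cf /etc/sudoers.d/song
```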

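Configuring passwordless SSH login

On the ceph-deploy node, allow non-interactive login to every ceph node/mon/mgr node: generate a key pair on the ceph-deploy node, then distribute the public key to each managed node: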

[root@ceph-deploy ~]# ssh-keygen

[root@ceph-deploy ~]# ssh-copy-id song@172.18.60.10

[root@ceph-deploy ~]# ssh-copy-id song@172.18.60.11

[root@ceph-deploy ~]# ssh-copy-id song@172.18.60.12

[root@ceph-deploy ~]# ssh-copy-id song@172.18.60.13

[root@ceph-deploy ~]# ssh-copy-id song@172.18.60.14

[root@ceph-deploy ~]# ssh-copy-id song@172.18.60.15

[root@ceph-deploy ~]# ssh-copy-id song@172.18.60.16

[root@ceph-deploy ~]# ssh-copy-id song@172.18.60.17

[root@ceph-deploy ~]# ssh-copy-id song@172.18.60.18

[root@ceph-deploy ~]# ssh-copy-id song@172.18.60.19

[root@ceph-deploy ~]# ssh-copy-id root@172.18.60.10

[root@ceph-deploy ~]# ssh-copy-id root@172.18.60.11

[root@ceph-deploy ~]# ssh-copy-id root@172.18.60.12

[root@ceph-deploy ~]# ssh-copy-id root@172.18.60.13

[root@ceph-deploy ~]# ssh-copy-id root@172.18.60.14

[root@ceph-deploy ~]# ssh-copy-id root@172.18.60.15

[root@ceph-deploy ~]# ssh-copy-id root@172.18.60.16

[root@ceph-deploy ~]# ssh-copy-id root@172.18.60.17

[root@ceph-deploy ~]# ssh-copy-id root@172.18.60.18

[root@ceph-deploy ~]# ssh-copy-id root@172.18.60.19

root@ceph-deploy:~# su - song

song@ceph-deploy:~$ ssh-keygen

song@ceph-deploy:~$ ssh-copy-id song@172.18.60.10

song@ceph-deploy:~$ ssh-copy-id song@172.18.60.11

song@ceph-deploy:~$ ssh-copy-id song@172.18.60.12

song@ceph-deploy:~$ ssh-copy-id song@172.18.60.13

song@ceph-deploy:~$ ssh-copy-id song@172.18.60.14

song@ceph-deploy:~$ ssh-copy-id song@172.18.60.15

song@ceph-deploy:~$ ssh-copy-id song@172.18.60.16

song@ceph-deploy:~$ ssh-copy-id song@172.18.60.17

song@ceph-deploy:~$ ssh-copy-id song@172.18.60.18

song@ceph-deploy:~$ ssh-copy-id song@172.18.60.19

song@ceph-deploy:~$ ssh-copy-id root@172.18.60.10

song@ceph-deploy:~$ ssh-copy-id root@172.18.60.11

song@ceph-deploy:~$ ssh-copy-id root@172.18.60.12

song@ceph-deploy:~$ ssh-copy-id root@172.18.60.13

song@ceph-deploy:~$ ssh-copy-id root@172.18.60.14

song@ceph-deploy:~$ ssh-copy-id root@172.18.60.15

song@ceph-deploy:~$ ssh-copy-id root@172.18.60.16

song@ceph-deploy:~$ ssh-copy-id root@172.18.60.17

song@ceph-deploy:~$ ssh-copy-id root@172.18.60.18

song@ceph-deploy:~$ ssh-copy-id root@172.18.60.19
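The per-host commands above follow a regular pattern, so the target list can be generated rather than typed. This is only a sketch: `list_targets` is a hypothetical helper that prints the same user@address pairs as the transcript, and the actual key distribution is left as a commented loop because it prompts for passwords.

```shell
#!/usr/bin/env bash
# list_targets
# Print every user@address pair used above: song and root on
# 172.18.60.10 through 172.18.60.19. (Hypothetical helper.)
list_targets() {
    local user i
    for user in song root; do
        for i in $(seq 0 9); do
            printf '%s@172.18.60.1%s\n' "$user" "$i"
        done
    done
}

list_targets

# To distribute the current user's public key (asks for each password once):
#   for t in $(list_targets); do ssh-copy-id "$t"; done
```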

# Test

for ip in `seq 0 9`;do echo $ip;ssh song@172.18.60.1$ip "hostname -I;hostname";done

0

172.18.60.10 192.168.60.10

ceph-deploy

1

172.18.60.11 192.168.60.11

ceph-mon1

2

172.18.60.12 192.168.60.12

ceph-mon2

3

172.18.60.13 192.168.60.13

ceph-mon3

4

172.18.60.14 192.168.60.14

ceph-mgr1

5

172.18.60.15 192.168.60.15

ceph-mgr2

6

172.18.60.16 192.168.60.16

ceph-node1

7

172.18.60.17 192.168.60.17

ceph-node2

8

172.18.60.18 192.168.60.18

ceph-node3

9

172.18.60.19 192.168.60.19

ceph-node4

[root@ceph-deploy ~]#

# Test 2

for ip in `seq 0 9`;do echo $ip;ssh root@172.18.60.1$ip "hostname -I;hostname";done

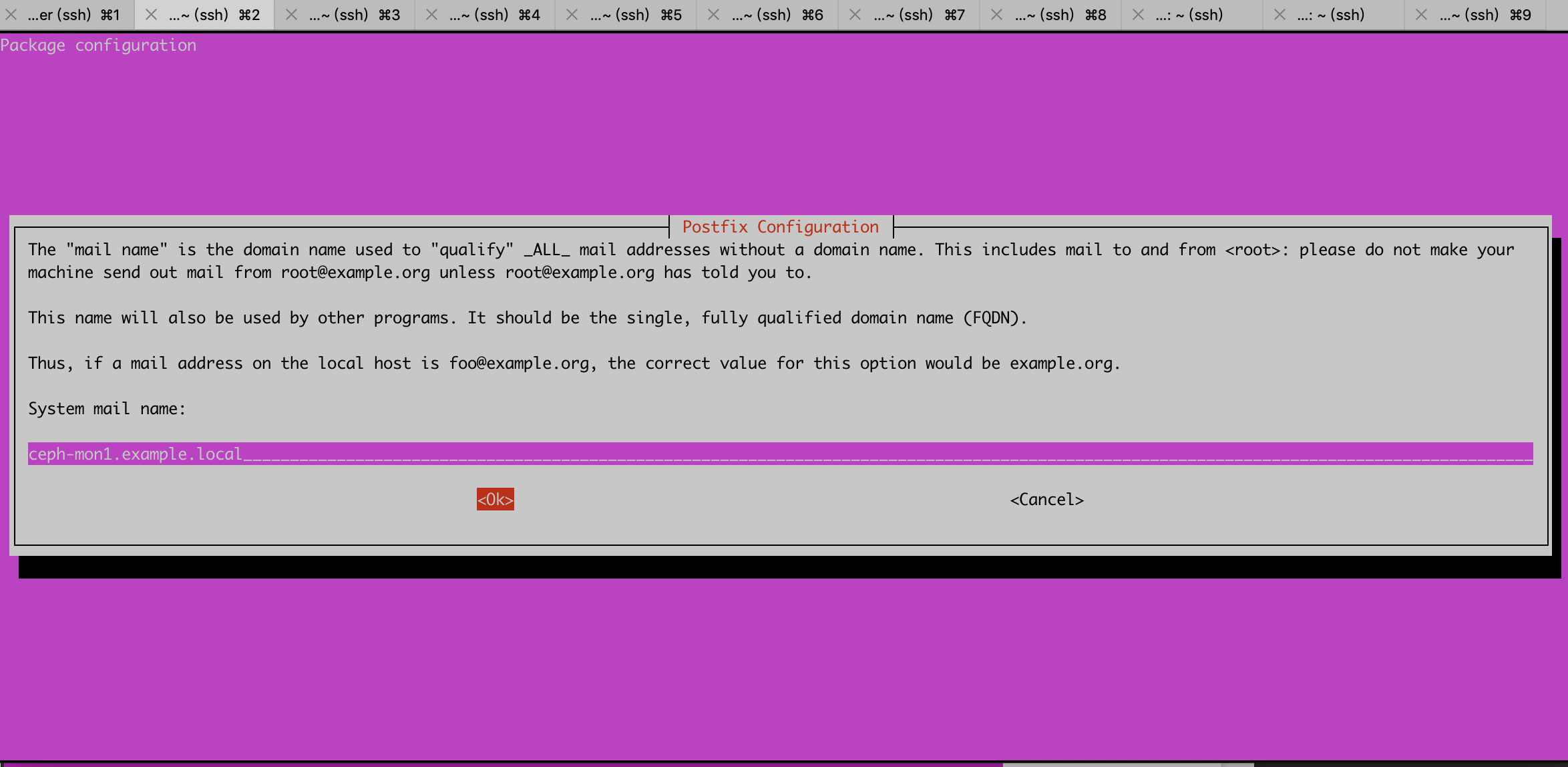

1.4.3 Configuring hostname resolution (run on every machine)

cat >> /etc/hosts <<EOF

172.18.60.10 ceph-deploy.example.local ceph-deploy

172.18.60.11 ceph-mon1.example.local ceph-mon1

172.18.60.12 ceph-mon2.example.local ceph-mon2

172.18.60.13 ceph-mon3.example.local ceph-mon3

172.18.60.14 ceph-mgr1.example.local ceph-mgr1

172.18.60.15 ceph-mgr2.example.local ceph-mgr2

172.18.60.16 ceph-node1.example.local ceph-node1

172.18.60.17 ceph-node2.example.local ceph-node2

172.18.60.18 ceph-node3.example.local ceph-node3

172.18.60.19 ceph-node4.example.local ceph-node4

EOF
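The ten entries above can also be generated from the role names, which avoids copy-and-paste slips when the list changes. A minimal sketch, assuming the same address scheme and the example.local domain used above; `render_hosts` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# render_hosts
# Print the /etc/hosts entries shown above, one per host, in address order.
# (Hypothetical helper for illustration.)
render_hosts() {
    local i=0
    local name
    for name in ceph-deploy ceph-mon1 ceph-mon2 ceph-mon3 ceph-mgr1 ceph-mgr2 \
                ceph-node1 ceph-node2 ceph-node3 ceph-node4; do
        printf '172.18.60.1%d %s.example.local %s\n' "$i" "$name" "$name"
        i=$((i + 1))
    done
}

render_hosts

# To append only the missing entries, run as root on each machine:
#   render_hosts | while IFS= read -r l; do
#       grep -qxF -- "$l" /etc/hosts || echo "$l" >> /etc/hosts
#   done
```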

1.4.4 Installing the Ceph deployment tool

Install ceph-deploy on the deployment server

# Ubuntu:

[root@ceph-deploy ~]# apt-cache madison ceph-deploy

ceph-deploy | 2.0.1 | https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic/main amd64 Packages

ceph-deploy | 1.5.38-0ubuntu1 | https://mirrors.tuna.tsinghua.edu.cn/ubuntu bionic/universe amd64 Packages

[root@ceph-deploy ~]# apt install ceph-deploy=2.0.1

# CentOS

[ceph@ceph-deploy ~]$ sudo yum install ceph-deploy python-setuptools python2-subprocess3

1.4.5 Initializing the mon node

Initialize the mon node from the admin node

[root@ceph-deploy ~]# su - song

song@ceph-deploy:~$ mkdir ceph-cluster # holds this cluster's initial configuration

song@ceph-deploy:~$ cd ceph-cluster/

song@ceph-deploy:~/ceph-cluster$

Documentation

# http://docs.ceph.org.cn/man/8/ceph-deploy/#id3

song@ceph-deploy:~/ceph-cluster$ ceph-deploy --help

usage: ceph-deploy [-h] [-v | -q] [--version] [--username USERNAME]

[--overwrite-conf] [--ceph-conf CEPH_CONF]

COMMAND ...

Easy Ceph deployment

-^-

/ \

|O o| ceph-deploy v2.0.1

).-.(

'/|||\`

| '|` |

'|`

Full documentation can be found at: http://ceph.com/ceph-deploy/docs

optional arguments:

-h, --help show this help message and exit

-v, --verbose be more verbose

-q, --quiet be less verbose

--version the current installed version of ceph-deploy

--username USERNAME the username to connect to the remote host

--overwrite-conf overwrite an existing conf file on remote host (if

present)

--ceph-conf CEPH_CONF

use (or reuse) a given ceph.conf file

commands:

COMMAND description

new Start deploying a new cluster, and write a

CLUSTER.conf and keyring for it.

install Install Ceph packages on remote hosts.

rgw Ceph RGW daemon management

mgr Ceph MGR daemon management

mon Ceph MON Daemon management

mds Ceph MDS daemon management

gatherkeys Gather authentication keys for provisioning new nodes.

disk Manage disks on a remote host.

osd Prepare a data disk on remote host.

admin Push configuration and client.admin key to a remote

host.

repo Repo definition management

config Copy ceph.conf to/from remote host(s)

uninstall Remove Ceph packages from remote hosts.

purge Remove Ceph packages from remote hosts and purge all

data.

purgedata Purge (delete, destroy, discard, shred) any Ceph data

from /var/lib/ceph

calamari Install and configure Calamari nodes. Assumes that a

repository with Calamari packages is already

configured. Refer to the docs for examples

(http://ceph.com/ceph-deploy/docs/conf.html)

forgetkeys Remove authentication keys from the local directory.

pkg Manage packages on remote hosts.

See 'ceph-deploy <command> --help' for help on a specific command

song@ceph-deploy:~/ceph-cluster$

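new: start deploying a new Ceph storage cluster and generate the CLUSTER.conf cluster configuration file and the keyring authentication file.

install: install the Ceph packages on remote hosts; --release selects the version to install.

rgw: manage the RGW daemon (RADOSGW, the object storage gateway).

mgr: manage the MGR daemon (ceph-mgr, the Ceph Manager Daemon).

mds: manage the MDS daemon (Ceph Metadata Server).

mon: manage the MON daemon (ceph-mon, the Ceph monitor).

gatherkeys: gather the authentication keys used to provision new nodes; these keys are needed when a new MON/OSD/MDS joins.

disk: manage disks on a remote host.

osd: prepare a data disk on a remote host, that is, add the given disk on the given host to the cluster as an OSD.

repo: manage repository definitions on remote hosts.

admin: push the cluster configuration file and the client.admin keyring to remote hosts.

config: push ceph.conf to remote hosts, or copy it back from them.

uninstall: remove the Ceph packages from remote hosts.

purgedata: delete Ceph data from /var/lib/ceph; this also removes the contents of /etc/ceph.

purge: remove the packages and all data from remote hosts.

forgetkeys: remove all authentication keyrings from the local host, including the client.admin, monitor, and bootstrap keyrings.

pkg: manage packages on remote hosts.

calamari: install and configure a Calamari web node; Calamari is a web-based monitoring platform.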

The mon node is initialized as follows

On Ubuntu, Python 2 has to be installed separately on every server

[root@ceph-deploy ~]# for ip in `seq 0 9`;do echo $ip;ssh root@172.18.60.1$ip "apt -y install python2.7;ln -sv /usr/bin/python2.7 /usr/bin/python2";done

[root@ceph-deploy ~]# for ip in `seq 0 9`;do echo $ip;ssh root@172.18.60.1$ip "python2 --version";done

0

Python 2.7.17

1

Python 2.7.17

2

Python 2.7.17

3

Python 2.7.17

4

Python 2.7.17

5

Python 2.7.17

6

Python 2.7.17

7

Python 2.7.17

8

Python 2.7.17

9

Python 2.7.17

[root@ceph-deploy ~]#

[root@ceph-deploy ~]# su - song

song@ceph-deploy:~$ cd ceph-cluster/

song@ceph-deploy:~/ceph-cluster$ ceph-deploy new --cluster-network 192.168.60.0/24 --public-network 172.18.0.0/16 ceph-mon1.example.local

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/song/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy new --cluster-network 192.168.60.0/24 --public-network 172.18.0.0/16 ceph-mon1.example.local

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f8b14af9dc0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] ssh_copykey : True

[ceph_deploy.cli][INFO ] mon : ['ceph-mon1.example.local']

[ceph_deploy.cli][INFO ] func : <function new at 0x7f8b11ef5ad0>

[ceph_deploy.cli][INFO ] public_network : 172.18.0.0/16

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] cluster_network : 192.168.60.0/24

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] fsid : None

[ceph_deploy.new][DEBUG ] Creating new cluster named ceph

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[ceph-mon1.example.local][DEBUG ] connected to host: ceph-deploy

[ceph-mon1.example.local][INFO ] Running command: ssh -CT -o BatchMode=yes ceph-mon1.example.local

[ceph_deploy.new][WARNIN] could not connect via SSH

[ceph_deploy.new][INFO ] creating a passwordless id_rsa.pub key file

[ceph_deploy.new][DEBUG ] connected to host: ceph-deploy

[ceph_deploy.new][INFO ] Running command: ssh-keygen -t rsa -N -f /home/song/.ssh/id_rsa

[ceph_deploy.new][DEBUG ] Generating public/private rsa key pair.

[ceph_deploy.new][DEBUG ] Your identification has been saved in /home/song/.ssh/id_rsa.

[ceph_deploy.new][DEBUG ] Your public key has been saved in /home/song/.ssh/id_rsa.pub.

[ceph_deploy.new][DEBUG ] The key fingerprint is:

[ceph_deploy.new][DEBUG ] SHA256:a2rxljuFjOq+T5kEFP8L4EwMIMXWeNQgCacqcSUFqOs song@ceph-deploy

[ceph_deploy.new][DEBUG ] The key's randomart image is:

[ceph_deploy.new][DEBUG ] +---[RSA 2048]----+

[ceph_deploy.new][DEBUG ] |+*BBB+ |

[ceph_deploy.new][DEBUG ] |oo=Bo.. |

[ceph_deploy.new][DEBUG ] |+...= . |

[ceph_deploy.new][DEBUG ] |oo + o . |

[ceph_deploy.new][DEBUG ] |o. o ooS. |

[ceph_deploy.new][DEBUG ] |o .o+oo. |

[ceph_deploy.new][DEBUG ] |. .+o+o |

[ceph_deploy.new][DEBUG ] | E ...o= |

[ceph_deploy.new][DEBUG ] | o++o..o |

[ceph_deploy.new][DEBUG ] +----[SHA256]-----+

[ceph_deploy.new][INFO ] will connect again with password prompt

The authenticity of host 'ceph-mon1.example.local (172.18.60.11)' can't be established.

ECDSA key fingerprint is SHA256:Iev+S4q6mDUmvCcZh1qvnu0xJeZzax5DFo/0klcSjjA.

Are you sure you want to continue connecting (yes/no)? yes # type yes

The mon node also needs an address on the cluster network; otherwise initialization fails with:

[ceph-mon1.example.local][DEBUG ] IP addresses found: [u'172.18.60.11']

[ceph_deploy][ERROR] RuntimeError: subnet (192.168.60.0/24) is not valid for any of the ips found [u'172.18.60.11']

Verifying the initialization

song@ceph-deploy:~/ceph-cluster$ ll

total 28

drwxrwxr-x 2 song song 4096 Aug 21 09:29 ./

drwxr-xr-x 6 song song 4096 Aug 21 08:56 ../

-rw-rw-r-- 1 song song 264 Aug 21 09:29 ceph.conf # generated configuration file

-rw-rw-r-- 1 song song 11204 Aug 21 09:29 ceph-deploy-ceph.log # initialization log

-rw------- 1 song song 73 Aug 21 09:29 ceph.mon.keyring # keyring used for authentication between mon nodes

song@ceph-deploy:~/ceph-cluster$ cat ceph.conf

[global]

fsid = 1e185a05-7e53-40f4-a618-5abc802d5ae7 # the cluster ID

public_network = 172.18.0.0/16

cluster_network = 192.168.60.0/24

mon_initial_members = ceph-mon1 # more mon nodes can be added as a comma-separated list

mon_host = 172.18.60.11

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

song@ceph-deploy:~/ceph-cluster$
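When scripting later steps it can help to read single values such as mon_host or fsid back out of the generated ceph.conf. The sketch below is a deliberately simple awk-based reader, not Ceph's own parser: `conf_get` is a hypothetical helper, it ignores section names, expects underscore-style keys as in the listing above, and strips a trailing `# ...` annotation. The demo file repeats the values shown above.

```shell
#!/usr/bin/env bash
# conf_get FILE KEY
# Print the value of the first "KEY = value" line in FILE, with any trailing
# "# comment" removed. (Hypothetical helper; values that themselves contain
# "=" are not handled.)
conf_get() {
    local file="$1"
    local key="$2"
    awk -F'[ \t]*=[ \t]*' -v k="$key" '
        $1 == k { v = $2; sub(/[ \t]*#.*$/, "", v); print v; exit }
    ' "$file"
}

# Demo on a scratch copy of the configuration shown above.
demo_conf=$(mktemp)
cat > "$demo_conf" <<'EOF'
[global]
fsid = 1e185a05-7e53-40f4-a618-5abc802d5ae7 # the cluster ID
public_network = 172.18.0.0/16
cluster_network = 192.168.60.0/24
mon_initial_members = ceph-mon1
mon_host = 172.18.60.11
EOF

conf_get "$demo_conf" mon_host   # prints 172.18.60.11
conf_get "$demo_conf" fsid       # prints 1e185a05-7e53-40f4-a618-5abc802d5ae7
```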

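1.4.6 Initializing the Ceph storage nodes

Initializing a storage node amounts to installing the ceph and ceph-radosgw packages on it. With the default upstream repository the initialization can time out because of network conditions, so point each storage node at a mirror inside China, such as Alibaba Cloud or Tsinghua:

1.4.6.1 Changing the Ceph mirror

Configure the Tsinghua Ceph mirror on every node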

1.4.6.1.1 ceph-mimic (13)

vim /etc/yum.repos.d/ceph.repo

[Ceph]

name=Ceph packages for $basearch

baseurl=https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-mimic/el7/$basearch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc

[Ceph-noarch]

name=Ceph noarch packages

baseurl=https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-mimic/el7/noarch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc

[ceph-source]

name=Ceph source packages

baseurl=https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-mimic/el7/SRPMS

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc

1.4.6.1.2 ceph-nautilus (14)

vim /etc/yum.repos.d/ceph.repo

[Ceph]

name=Ceph packages for $basearch

baseurl=https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-nautilus/el7/$basearch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc

[Ceph-noarch]

name=Ceph noarch packages

baseurl=https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-nautilus/el7/noarch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc

[ceph-source]

name=Ceph source packages

baseurl=https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-nautilus/el7/SRPMS

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc

1.4.6.1.3 ceph-octopus (15)

Ubuntu 18.04:

cat /etc/apt/sources.list

# Source (deb-src) mirrors are commented out by default to speed up apt update; uncomment them if needed

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-updates main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-updates main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-backports main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-backports main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-security main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-security main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-octopus bionic main

1.4.6.1.4 ceph-pacific (16)

Ubuntu 18.04

cat /etc/apt/sources.list

# Source (deb-src) mirrors are commented out by default to speed up apt update; uncomment them if needed

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-updates main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-updates main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-backports main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-backports main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-security main restricted universe multiverse

# deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ bionic-security main restricted universe multiverse

deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic main

1.4.6.2 Changing the EPEL mirror

https://mirrors.tuna.tsinghua.edu.cn/help/epel/

# yum install epel-release -y

# sed -e 's!^metalink=!#metalink=!g' \

-e 's!^#baseurl=!baseurl=!g' \

-e 's!//download\.fedoraproject\.org/pub!//mirrors.tuna.tsinghua.edu.cn!g' \

-e 's!http://mirrors\.tuna!https://mirrors.tuna!g' \

-i /etc/yum.repos.d/epel.repo /etc/yum.repos.d/epel-testing.repo

# yum makecache fast
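To see what the four sed expressions above do before running them in place, they can be applied to a small sample on standard input. This is a sketch: `rewrite_epel` is a hypothetical wrapper around the same expressions without `-i`, and the sample lines only imitate the shape of a stock epel.repo; they are not copied from a real file.

```shell
#!/usr/bin/env bash
# rewrite_epel
# Apply the same four substitutions as above to stdin, writing to stdout.
# (Hypothetical wrapper for illustration; no file is modified.)
rewrite_epel() {
    sed -e 's!^metalink=!#metalink=!g' \
        -e 's!^#baseurl=!baseurl=!g' \
        -e 's!//download\.fedoraproject\.org/pub!//mirrors.tuna.tsinghua.edu.cn!g' \
        -e 's!http://mirrors\.tuna!https://mirrors.tuna!g'
}

# Sample input shaped like an epel.repo section (assumed, for illustration).
rewrite_epel <<'EOF'
[epel]
name=Extra Packages for Enterprise Linux 7 - $basearch
#baseurl=http://download.fedoraproject.org/pub/epel/7/$basearch
metalink=https://mirrors.fedoraproject.org/metalink?repo=epel-7&arch=$basearch
EOF
```

In the output the metalink line is commented out and the baseurl line is active and points at the Tsinghua mirror over https.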

Initializing the node servers

song@ceph-deploy:~/ceph-cluster$ ceph-deploy install --no-adjust-repos --nogpgcheck ceph-node1 ceph-node2 ceph-node3 ceph-node4

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/song/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy install --no-adjust-repos --nogpgcheck ceph-node1 ceph-node2 ceph-node3 ceph-node4

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] testing : None

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f8051997be0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] dev_commit : None

[ceph_deploy.cli][INFO ] install_mds : False

[ceph_deploy.cli][INFO ] stable : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] adjust_repos : False

[ceph_deploy.cli][INFO ] func : <function install at 0x7f8052249a50>

[ceph_deploy.cli][INFO ] install_mgr : False

[ceph_deploy.cli][INFO ] install_all : False

[ceph_deploy.cli][INFO ] repo : False

[ceph_deploy.cli][INFO ] host : ['ceph-node1', 'ceph-node2', 'ceph-node3', 'ceph-node4']

[ceph_deploy.cli][INFO ] install_rgw : False

[ceph_deploy.cli][INFO ] install_tests : False

[ceph_deploy.cli][INFO ] repo_url : None

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] install_osd : False

[ceph_deploy.cli][INFO ] version_kind : stable

[ceph_deploy.cli][INFO ] install_common : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] dev : master

[ceph_deploy.cli][INFO ] nogpgcheck : True

[ceph_deploy.cli][INFO ] local_mirror : None

[ceph_deploy.cli][INFO ] release : None

[ceph_deploy.cli][INFO ] install_mon : False

[ceph_deploy.cli][INFO ] gpg_url : None

[ceph_deploy.install][DEBUG ] Installing stable version mimic on cluster ceph hosts ceph-node1 ceph-node2 ceph-node3 ceph-node4

[ceph_deploy.install][DEBUG ] Detecting platform for host ceph-node1 ...

The authenticity of host 'ceph-node1 (172.18.60.16)' can't be established.

ECDSA key fingerprint is SHA256:Iev+S4q6mDUmvCcZh1qvnu0xJeZzax5DFo/0klcSjjA.

Are you sure you want to continue connecting (yes/no)? yes # type yes

... (output omitted) ...

[ceph_deploy.install][DEBUG ] Detecting platform for host ceph-node2 ...

The authenticity of host 'ceph-node2 (172.18.60.17)' can't be established.

ECDSA key fingerprint is SHA256:Iev+S4q6mDUmvCcZh1qvnu0xJeZzax5DFo/0klcSjjA.

Are you sure you want to continue connecting (yes/no)? yes # type yes

... (output omitted) ...

[ceph_deploy.install][DEBUG ] Detecting platform for host ceph-node3 ...

The authenticity of host 'ceph-node3 (172.18.60.18)' can't be established.

ECDSA key fingerprint is SHA256:Iev+S4q6mDUmvCcZh1qvnu0xJeZzax5DFo/0klcSjjA.

Are you sure you want to continue connecting (yes/no)?yes # type yes

... (output omitted) ...

[ceph_deploy.install][DEBUG ] Detecting platform for host ceph-node4 ...

The authenticity of host 'ceph-node4 (172.18.60.19)' can't be established.

ECDSA key fingerprint is SHA256:Iev+S4q6mDUmvCcZh1qvnu0xJeZzax5DFo/0klcSjjA.

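Are you sure you want to continue connecting (yes/no)?yes # type yes

This process goes through the specified ceph node servers serially, one at a time, setting up the EPEL and Ceph repositories on each and then installing ceph and ceph-radosgw

Initialization complete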

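1.4.7 Configuring the mon nodes and generating and distributing keys

Install the ceph-mon component on each mon node, then initialize the mon nodes. Mon nodes can be scaled out later for high availability.

Installing ceph-mon on Ubuntu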

root@ceph-mon1:~# apt install ceph-mon

root@ceph-mon2:~# apt install ceph-mon

root@ceph-mon3:~# apt install ceph-mon

# Installing ceph-mon on CentOS

[root@ceph-mon1 ~]# yum install ceph-mon -y

[root@ceph-deploy ~]# su - song

song@ceph-deploy:~$ cd ceph-cluster/

song@ceph-deploy:~/ceph-cluster$ pwd

/home/song/ceph-cluster

song@ceph-deploy:~/ceph-cluster$ ceph-deploy mon create-initial

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/song/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mon create-initial

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create-initial

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f4f181ccfa0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mon at 0x7f4f181afad0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] keyrings : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mon][DEBUG ] Deploying mon, cluster ceph hosts ceph-mon1

[ceph_deploy.mon][DEBUG ] detecting platform for host ceph-mon1 ...

The authenticity of host 'ceph-mon1 (172.18.60.11)' can't be established.

ECDSA key fingerprint is SHA256:Iev+S4q6mDUmvCcZh1qvnu0xJeZzax5DFo/0klcSjjA.

Are you sure you want to continue connecting (yes/no)? yes # type yes

... (output omitted) ...

[ceph_deploy.gatherkeys][INFO ] Storing ceph.client.admin.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mds.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-mgr.keyring

[ceph_deploy.gatherkeys][INFO ] keyring 'ceph.mon.keyring' already exists

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-osd.keyring

[ceph_deploy.gatherkeys][INFO ] Storing ceph.bootstrap-rgw.keyring

[ceph_deploy.gatherkeys][INFO ] Destroy temp directory /tmp/tmpTx3xIX

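1.4.8 Verifying the mon node

Verify that the ceph-mon service has been installed and started automatically on the designated mon node. The initialization directory on the ceph-deploy node will also contain the bootstrap keyring files for the mds, mgr, osd, and rgw services. These keyrings carry the highest privileges on the cluster, so keep them safe.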

song@ceph-mon1:~$ ps -ef | grep ceph-mon

ceph 21764 1 0 10:33 ? 00:00:00 /usr/bin/ceph-mon -f --cluster ceph --id ceph-mon1 --setuser ceph --setgroup ceph

song 22386 21365 0 10:37 pts/0 00:00:00 grep --color=auto ceph-mon

song@ceph-mon1:~$

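1.4.9 Distributing the admin key

From the ceph-deploy node, copy the configuration file and the admin key to every node that needs to run ceph management commands. This removes the need to pass the ceph-mon address and the ceph.client.admin.keyring file on every ceph command. Each ceph-mon node also needs a synchronized copy of the cluster configuration and authentication files.

To manage the cluster from the ceph-deploy node

[root@ceph-deploy ~]# apt -y install ceph-common # install the Ceph common components first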

root@ceph-node1:~# apt -y install ceph-common

root@ceph-node2:~# apt -y install ceph-common

root@ceph-node3:~# apt -y install ceph-common

root@ceph-node4:~# apt -y install ceph-common

song@ceph-deploy:~/ceph-cluster$ ceph-deploy admin ceph-node1 ceph-node2 ceph-node3 ceph-node4

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/song/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy admin ceph-node1 ceph-node2 ceph-node3 ceph-node4

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f5092ae5140>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] client : ['ceph-node1', 'ceph-node2', 'ceph-node3', 'ceph-node4']

[ceph_deploy.cli][INFO ] func : <function admin at 0x7f50933e6a50>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-node1

[ceph-node1][DEBUG ] connection detected need for sudo

[ceph-node1][DEBUG ] connected to host: ceph-node1

[ceph-node1][DEBUG ] detect platform information from remote host

[ceph-node1][DEBUG ] detect machine type

[ceph-node1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-node2

[ceph-node2][DEBUG ] connection detected need for sudo

[ceph-node2][DEBUG ] connected to host: ceph-node2

[ceph-node2][DEBUG ] detect platform information from remote host

[ceph-node2][DEBUG ] detect machine type

[ceph-node2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-node3

[ceph-node3][DEBUG ] connection detected need for sudo

[ceph-node3][DEBUG ] connected to host: ceph-node3

[ceph-node3][DEBUG ] detect platform information from remote host

[ceph-node3][DEBUG ] detect machine type

[ceph-node3][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-node4

[ceph-node4][DEBUG ] connection detected need for sudo

[ceph-node4][DEBUG ] connected to host: ceph-node4

[ceph-node4][DEBUG ] detect platform information from remote host

[ceph-node4][DEBUG ] detect machine type

[ceph-node4][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

song@ceph-deploy:~/ceph-cluster$

1.4.10 Verifying the key on the Ceph nodes

Check the key files on a node server

root@ceph-node1:~# su - song

song@ceph-node1:~$ ll /etc/ceph/

total 20

drwxr-xr-x 2 root root 4096 Aug 21 10:39 ./

drwxr-xr-x 93 root root 4096 Aug 21 09:50 ../

-rw------- 1 root root 151 Aug 21 10:39 ceph.client.admin.keyring

-rw-r--r-- 1 root root 264 Aug 21 10:39 ceph.conf

-rw-r--r-- 1 root root 92 Jul 8 14:17 rbdmap

-rw------- 1 root root 0 Aug 21 10:39 tmp7vjtaV

song@ceph-node1:~$

For security, the keyring file is owned by user root and group root by default. For the song user to run ceph commands as well, grant it access to the file:

root@ceph-node1:~# setfacl -m u:song:rw /etc/ceph/ceph.client.admin.keyring

root@ceph-node2:~# setfacl -m u:song:rw /etc/ceph/ceph.client.admin.keyring

root@ceph-node3:~# setfacl -m u:song:rw /etc/ceph/ceph.client.admin.keyring

root@ceph-node4:~# setfacl -m u:song:rw /etc/ceph/ceph.client.admin.keyring

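1.4.11 Configuring the manager nodes

Ceph Luminous and later releases have manager nodes; earlier releases do not

1.4.12 Deploying the ceph-mgr node

The mgr node needs to read the Ceph configuration, that is, the files under /etc/ceph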

song@ceph-deploy:~/ceph-cluster$ ceph-deploy mgr --help

usage: ceph-deploy mgr [-h] {create} ...

Ceph MGR daemon management

positional arguments:

{create}

create Deploy Ceph MGR on remote host(s)

optional arguments:

-h, --help show this help message and exit

song@ceph-deploy:~/ceph-cluster$

Initializing the ceph-mgr node

# Install on Ubuntu

root@ceph-mgr1:~# apt -y install ceph-mgr

# Install with yum

[root@ceph-mgr1 ~]# yum -y install ceph-mgr

song@ceph-deploy:~/ceph-cluster$ ceph-deploy mgr create ceph-mgr1

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/song/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mgr create ceph-mgr1

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] mgr : [('ceph-mgr1', 'ceph-mgr1')]

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7facda357be0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mgr at 0x7facda7b6150>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mgr][DEBUG ] Deploying mgr, cluster ceph hosts ceph-mgr1:ceph-mgr1

The authenticity of host 'ceph-mgr1 (172.18.60.14)' can't be established.

ECDSA key fingerprint is SHA256:Iev+S4q6mDUmvCcZh1qvnu0xJeZzax5DFo/0klcSjjA.

Are you sure you want to continue connecting (yes/no)? yes # type yes

Warning: Permanently added 'ceph-mgr1' (ECDSA) to the list of known hosts.

[ceph-mgr1][DEBUG ] connection detected need for sudo

[ceph-mgr1][DEBUG ] connected to host: ceph-mgr1

[ceph-mgr1][DEBUG ] detect platform information from remote host

[ceph-mgr1][DEBUG ] detect machine type

[ceph_deploy.mgr][INFO ] Distro info: Ubuntu 18.04 bionic

[ceph_deploy.mgr][DEBUG ] remote host will use systemd

[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to ceph-mgr1

[ceph-mgr1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-mgr1][WARNIN] mgr keyring does not exist yet, creating one

[ceph-mgr1][DEBUG ] create a keyring file

[ceph-mgr1][DEBUG ] create path recursively if it doesn't exist

[ceph-mgr1][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.ceph-mgr1 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-ceph-mgr1/keyring

[ceph-mgr1][INFO ] Running command: sudo systemctl enable ceph-mgr@ceph-mgr1

[ceph-mgr1][WARNIN] Created symlink /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@ceph-mgr1.service → /lib/systemd/system/ceph-mgr@.service.

[ceph-mgr1][INFO ] Running command: sudo systemctl start ceph-mgr@ceph-mgr1

[ceph-mgr1][INFO ] Running command: sudo systemctl enable ceph.target

song@ceph-deploy:~/ceph-cluster$

1.4.13 Verify the ceph-mgr node

root@ceph-mgr1:~# ps -ef | grep ceph

ceph 18954 1 0 10:53 ? 00:00:00 /usr/bin/ceph-mgr -f --cluster ceph --id ceph-mgr1 --setuser ceph --setgroup ceph

root 19034 14150 0 10:54 pts/0 00:00:00 grep --color=auto ceph

root@ceph-mgr1:~#

1.4.14 Manage the Ceph cluster from the ceph-deploy node

Set up the environment on the ceph-deploy node so that ceph management commands can be run from it later.

# Ubuntu

[root@ceph-deploy ~]# apt -y install ceph-common

# CentOS

[root@ceph-deploy ~]# yum -y install ceph-common

[root@ceph-deploy ~]# su - song

song@ceph-deploy:~$ cd ceph-cluster/

song@ceph-deploy:~/ceph-cluster$ ceph-deploy admin ceph-deploy # push the admin keyring and config to this node itself

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/song/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy admin ceph-deploy

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f2d28cf0140>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] client : ['ceph-deploy']

[ceph_deploy.cli][INFO ] func : <function admin at 0x7f2d295f1a50>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-deploy

[ceph-deploy][DEBUG ] connection detected need for sudo

[ceph-deploy][DEBUG ] connected to host: ceph-deploy

[ceph-deploy][DEBUG ] detect platform information from remote host

[ceph-deploy][DEBUG ] detect machine type

[ceph-deploy][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

song@ceph-deploy:~/ceph-cluster$

1.4.15 Test the ceph command

# Grant access to the keyring

[root@ceph-deploy ~]# setfacl -m u:song:rw /etc/ceph/ceph.client.admin.keyring

[root@ceph-deploy ~]# su - song

song@ceph-deploy:~$ ceph -s

cluster:

id: 1e185a05-7e53-40f4-a618-5abc802d5ae7

health: HEALTH_WARN

client is using insecure global_id reclaim

mon is allowing insecure global_id reclaim # insecure global_id reclaim needs to be disabled

OSD count 0 < osd_pool_default_size 3 # the cluster has fewer than 3 OSDs

services:

mon: 1 daemons, quorum ceph-mon1 (age 27m)

mgr: ceph-mgr1(active, since 7m)

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

song@ceph-deploy:~$ ceph config set mon auth_allow_insecure_global_id_reclaim false

song@ceph-deploy:~$ ceph versions

{

"mon": {

"ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)": 1

},

"mgr": {

"ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)": 1

},

"osd": {},

"mds": {},

"overall": {

"ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)": 2

}

}

song@ceph-deploy:~$

song@ceph-deploy:~$ ceph -s

cluster:

id: 1e185a05-7e53-40f4-a618-5abc802d5ae7

health: HEALTH_WARN

OSD count 0 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum ceph-mon1 (age 45m)

mgr: ceph-mgr1(active, since 6m)

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

song@ceph-deploy:~$
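The second ceph -s above no longer shows the global_id warnings. The setting itself can also be checked directly. A minimal sketch, assuming that ceph config get prints the plain value (false) for this option; global_id_reclaim_disabled and the CEPH override variable are hypothetical names introduced here so the function can be exercised without a cluster.

```shell
#!/bin/bash
# Return success if the mons no longer allow insecure global_id reclaim.
# CEPH can be overridden for testing; by default the real ceph CLI is used.
global_id_reclaim_disabled() {
    local value
    value=$("${CEPH:-ceph}" config get mon auth_allow_insecure_global_id_reclaim) || return 1
    [ "$value" = "false" ]
}

# Usage (needs a reachable cluster and a readable admin keyring):
#   global_id_reclaim_disabled && echo "insecure global_id reclaim is off"
```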

1.4.16 Prepare the OSD nodes

Install the runtime environment on the OSD nodes:

# Before zapping the disks, install the basic Ceph runtime on each node from the deploy node

song@ceph-deploy:~$ ceph-deploy install --release pacific ceph-node1

song@ceph-deploy:~$ ceph-deploy install --release pacific ceph-node2

song@ceph-deploy:~$ ceph-deploy install --release pacific ceph-node3

song@ceph-deploy:~$ ceph-deploy install --release pacific ceph-node4

Optionally, remove packages that are no longer needed on the nodes

root@ceph-node1:~# apt autoremove

root@ceph-node2:~# apt autoremove

root@ceph-node3:~# apt autoremove

root@ceph-node4:~# apt autoremove

List the disks on a Ceph node

# List the disks of a remote storage node (must be run from the ceph-cluster directory)

song@ceph-deploy:~/ceph-cluster$ ceph-deploy disk list ceph-node1

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/song/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy disk list ceph-node1

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : list

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f2b262d6f50>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] host : ['ceph-node1']

[ceph_deploy.cli][INFO ] func : <function disk at 0x7f2b262b22d0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph-node1][DEBUG ] connection detected need for sudo

[ceph-node1][DEBUG ] connected to host: ceph-node1

[ceph-node1][DEBUG ] detect platform information from remote host

[ceph-node1][DEBUG ] detect machine type

[ceph-node1][DEBUG ] find the location of an executable

[ceph-node1][INFO ] Running command: sudo fdisk -l

[ceph-node1][INFO ] Disk /dev/loop0: 89.1 MiB, 93417472 bytes, 182456 sectors

[ceph-node1][INFO ] Disk /dev/loop1: 99.4 MiB, 104210432 bytes, 203536 sectors

[ceph-node1][INFO ] Disk /dev/sda: 200 GiB, 214748364800 bytes, 419430400 sectors

[ceph-node1][INFO ] Disk /dev/sdb: 100 GiB, 107374182400 bytes, 209715200 sectors

[ceph-node1][INFO ] Disk /dev/sdc: 100 GiB, 107374182400 bytes, 209715200 sectors

[ceph-node1][INFO ] Disk /dev/sdd: 100 GiB, 107374182400 bytes, 209715200 sectors

[ceph-node1][INFO ] Disk /dev/sde: 100 GiB, 107374182400 bytes, 209715200 sectors

song@ceph-deploy:~/ceph-cluster$

Use ceph-deploy disk zap to wipe the Ceph data disks on each node

The data disks on storage nodes ceph-node1, ceph-node2, ceph-node3 and ceph-node4 are wiped as follows:

song@ceph-deploy:~/ceph-cluster$ cat diskZap.sh

#!/bin/bash
# Zap the four data disks on each of the four storage nodes.
diskName="sdb sdc sdd sde"
for num in $(seq 1 4)
do
    for disk in $diskName
    do
        ceph-deploy disk zap ceph-node$num /dev/$disk
    done
done

song@ceph-deploy:~/ceph-cluster$
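Zapping destroys whatever is on the disk, so it can help to print the commands before running them. A minimal sketch of a dry-run variant of the script above; the RUN switch and the zap_all function are hypothetical additions, while the node and disk names are the ones used in this walkthrough.

```shell
#!/bin/bash
# Dry-run variant of diskZap.sh: prints each zap command unless RUN=1 is set.
nodes="ceph-node1 ceph-node2 ceph-node3 ceph-node4"
disks="sdb sdc sdd sde"

zap_all() {
    local node disk
    for node in $nodes; do
        for disk in $disks; do
            if [ "${RUN:-0}" = "1" ]; then
                # Real run: wipes the disk on the remote node.
                ceph-deploy disk zap "$node" "/dev/$disk"
            else
                # Dry run: only show what would be executed.
                echo "ceph-deploy disk zap $node /dev/$disk"
            fi
        done
    done
}

zap_all
```

Run it once without RUN to check the 16 node/disk pairs, then with RUN=1 from the ceph-cluster directory.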

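1.4.17 Add OSDs

How the data is classified and stored:

- Data: the object data stored by Ceph

- Block (block-db): the RocksDB data, i.e. the metadata

- block-wal: the write-ahead log (WAL) of the database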

song@ceph-deploy:~/ceph-cluster$ ceph-deploy osd --help

usage: ceph-deploy osd [-h] {list,create} ...

Create OSDs from a data disk on a remote host:

ceph-deploy osd create {node} --data /path/to/device

For bluestore, optional devices can be used::

ceph-deploy osd create {node} --data /path/to/data --block-db /path/to/db-device

ceph-deploy osd create {node} --data /path/to/data --block-wal /path/to/wal-device

ceph-deploy osd create {node} --data /path/to/data --block-db /path/to/db-device --block-wal /path/to/wal-device

For filestore, the journal must be specified, as well as the objectstore::

ceph-deploy osd create {node} --filestore --data /path/to/data --journal /path/to/journal # filestore: path to the data and to the filesystem journal

For data devices, it can be an existing logical volume in the format of:

vg/lv, or a device. For other OSD components like wal, db, and journal, it

can be logical volume (in vg/lv format) or it must be a GPT partition.

positional arguments:

{list,create}

list List OSD info from remote host(s)

create Create new Ceph OSD daemon by preparing and activating a

device

optional arguments:

-h, --help show this help message and exit

song@ceph-deploy:~/ceph-cluster$

Add the OSDs

song@ceph-deploy:~/ceph-cluster$ cat osdCreate.sh

#!/bin/bash
# Create one OSD per data disk on each of the four storage nodes.
diskName="sdb sdc sdd sde"
for num in $(seq 1 4)
do
    for disk in $diskName
    do
        ceph-deploy osd create ceph-node$num --data /dev/$disk
    done
done

song@ceph-deploy:~/ceph-cluster$
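The script above puts data, RocksDB and WAL on the same disk. The help text also lists --block-db and --block-wal for placing them on separate devices. A minimal sketch that builds such a command line; osd_create_cmd is a hypothetical helper, and the NVMe partition names in the example are placeholders, not devices from this environment.

```shell
#!/bin/bash
# Build a `ceph-deploy osd create` command line. The db and wal arguments
# are optional; pass an empty string to skip one of them.
osd_create_cmd() {
    local node=$1 data=$2 db=${3:-} wal=${4:-}
    local cmd="ceph-deploy osd create $node --data $data"
    if [ -n "$db" ]; then
        cmd="$cmd --block-db $db"
    fi
    if [ -n "$wal" ]; then
        cmd="$cmd --block-wal $wal"
    fi
    echo "$cmd"
}

# Data only, as in osdCreate.sh.
osd_create_cmd ceph-node1 /dev/sdb
# Data on sdb, RocksDB and WAL on placeholder NVMe partitions.
osd_create_cmd ceph-node1 /dev/sdb /dev/nvme0n1p1 /dev/nvme0n1p2
```

The function only prints the command, so the result can be checked before it is run from the ceph-cluster directory.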

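1.4.18 Enable the OSD services at boot

The OSD services are enabled at boot by default. After the nodes have been added, you can reboot a node to test whether its OSDs start automatically.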

song@ceph-deploy:~/ceph-cluster$ ceph -s

cluster:

id: 1e185a05-7e53-40f4-a618-5abc802d5ae7

health: HEALTH_OK

services:

mon: 1 daemons, quorum ceph-mon1 (age 97m)

mgr: ceph-mgr1(active, since 57m)

osd: 16 osds: 16 up (since 6m), 16 in (since 6m)

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 109 MiB used, 1.6 TiB / 1.6 TiB avail

pgs: 1 active+clean

song@ceph-deploy:~/ceph-cluster$

ceph-node1

root@ceph-node1:~# ps -ef | grep osd | grep -v grep

ceph 24468 1 0 12:00 ? 00:00:05 /usr/bin/ceph-osd -f --cluster ceph --id 1 --setuser ceph --setgroup ceph

ceph 26216 1 0 12:00 ? 00:00:05 /usr/bin/ceph-osd -f --cluster ceph --id 2 --setuser ceph --setgroup ceph

ceph 27948 1 0 12:00 ? 00:00:05 /usr/bin/ceph-osd -f --cluster ceph --id 3 --setuser ceph --setgroup ceph

ceph 29686 1 0 12:00 ? 00:00:05 /usr/bin/ceph-osd -f --cluster ceph --id 4 --setuser ceph --setgroup ceph

root@ceph-node1:~#

root@ceph-node1:~# systemctl enable ceph-osd@1 ceph-osd@2 ceph-osd@3 ceph-osd@4

root@ceph-node1:~# systemctl status ceph-osd@1 ceph-osd@2 ceph-osd@3 ceph-osd@4

ceph-node2

root@ceph-node2:~# ps -ef | grep osd | grep -v grep

ceph 24309 1 0 11:59 ? 00:00:05 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph

ceph 26027 1 0 12:01 ? 00:00:05 /usr/bin/ceph-osd -f --cluster ceph --id 5 --setuser ceph --setgroup ceph

ceph 28053 1 0 12:01 ? 00:00:05 /usr/bin/ceph-osd -f --cluster ceph --id 6 --setuser ceph --setgroup ceph

ceph 29789 1 0 12:02 ? 00:00:05 /usr/bin/ceph-osd -f --cluster ceph --id 7 --setuser ceph --setgroup ceph

root@ceph-node2:~#

root@ceph-node2:~# systemctl enable ceph-osd@0 ceph-osd@5 ceph-osd@6 ceph-osd@7

Created symlink /etc/systemd/system/ceph-osd.target.wants/ceph-osd@0.service → /lib/systemd/system/ceph-osd@.service.

Created symlink /etc/systemd/system/ceph-osd.target.wants/ceph-osd@5.service → /lib/systemd/system/ceph-osd@.service.

Created symlink /etc/systemd/system/ceph-osd.target.wants/ceph-osd@6.service → /lib/systemd/system/ceph-osd@.service.

Created symlink /etc/systemd/system/ceph-osd.target.wants/ceph-osd@7.service → /lib/systemd/system/ceph-osd@.service.

root@ceph-node2:~# systemctl status ceph-osd@0 ceph-osd@5 ceph-osd@6 ceph-osd@7

ceph-node3

root@ceph-node3:~# ps -ef | grep osd | grep -v grep

ceph 23892 1 0 12:02 ? 00:00:05 /usr/bin/ceph-osd -f --cluster ceph --id 8 --setuser ceph --setgroup ceph

ceph 25646 1 0 12:02 ? 00:00:05 /usr/bin/ceph-osd -f --cluster ceph --id 9 --setuser ceph --setgroup ceph

ceph 27393 1 0 12:03 ? 00:00:05 /usr/bin/ceph-osd -f --cluster ceph --id 10 --setuser ceph --setgroup ceph

ceph 29126 1 0 12:03 ? 00:00:04 /usr/bin/ceph-osd -f --cluster ceph --id 11 --setuser ceph --setgroup ceph

root@ceph-node3:~#

root@ceph-node3:~# systemctl enable ceph-osd@8 ceph-osd@9 ceph-osd@10 ceph-osd@11

Created symlink /etc/systemd/system/ceph-osd.target.wants/ceph-osd@8.service → /lib/systemd/system/ceph-osd@.service.

Created symlink /etc/systemd/system/ceph-osd.target.wants/ceph-osd@9.service → /lib/systemd/system/ceph-osd@.service.

Created symlink /etc/systemd/system/ceph-osd.target.wants/ceph-osd@10.service → /lib/systemd/system/ceph-osd@.service.

Created symlink /etc/systemd/system/ceph-osd.target.wants/ceph-osd@11.service → /lib/systemd/system/ceph-osd@.service.

root@ceph-node3:~# systemctl status ceph-osd@8 ceph-osd@9 ceph-osd@10 ceph-osd@11

ceph-node4

root@ceph-node4:~# ps -ef | grep osd | grep -v grep

ceph 4567 1 0 12:03 ? 00:00:04 /usr/bin/ceph-osd -f --cluster ceph --id 12 --setuser ceph --setgroup ceph

ceph 6318 1 0 12:04 ? 00:00:04 /usr/bin/ceph-osd -f --cluster ceph --id 13 --setuser ceph --setgroup ceph

ceph 8054 1 0 12:04 ? 00:00:04 /usr/bin/ceph-osd -f --cluster ceph --id 14 --setuser ceph --setgroup ceph

ceph 9797 1 0 12:04 ? 00:00:03 /usr/bin/ceph-osd -f --cluster ceph --id 15 --setuser ceph --setgroup ceph

root@ceph-node4:~#

root@ceph-node4:~# systemctl enable ceph-osd@12 ceph-osd@13 ceph-osd@14 ceph-osd@15

Created symlink /etc/systemd/system/ceph-osd.target.wants/ceph-osd@12.service → /lib/systemd/system/ceph-osd@.service.

Created symlink /etc/systemd/system/ceph-osd.target.wants/ceph-osd@13.service → /lib/systemd/system/ceph-osd@.service.

Created symlink /etc/systemd/system/ceph-osd.target.wants/ceph-osd@14.service → /lib/systemd/system/ceph-osd@.service.

Created symlink /etc/systemd/system/ceph-osd.target.wants/ceph-osd@15.service → /lib/systemd/system/ceph-osd@.service.

root@ceph-node4:~# systemctl status ceph-osd@12 ceph-osd@13 ceph-osd@14 ceph-osd@15
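The OSD ids in the commands above were copied by hand from the ps output of each node. A minimal sketch that derives the unit names instead; osd_units_from_ps is a hypothetical helper that reads ps -ef output on stdin and relies on the --id argument visible in the process lines.

```shell
#!/bin/bash
# Read `ps -ef` output on stdin and print one systemd unit name per
# running ceph-osd process (hypothetical helper).
osd_units_from_ps() {
    awk '/ceph-osd/ {
        for (i = 1; i < NF; i++)
            if ($i == "--id") print "ceph-osd@" $(i + 1)
    }'
}

# Example with two process lines taken from ceph-node1.
sample='ceph 24468 1 0 12:00 ? 00:00:05 /usr/bin/ceph-osd -f --cluster ceph --id 1 --setuser ceph --setgroup ceph
ceph 26216 1 0 12:00 ? 00:00:05 /usr/bin/ceph-osd -f --cluster ceph --id 2 --setuser ceph --setgroup ceph'

printf '%s\n' "$sample" | osd_units_from_ps
```

On a node, ps -ef | osd_units_from_ps | xargs systemctl enable would enable every OSD that is currently running there.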

1.4.19 Verify the Ceph cluster

song@ceph-deploy:~/ceph-cluster$ ceph health

HEALTH_OK

song@ceph-deploy:~/ceph-cluster$ ceph -s

cluster:

id: 1e185a05-7e53-40f4-a618-5abc802d5ae7

health: HEALTH_OK

services:

mon: 1 daemons, quorum ceph-mon1 (age 110m)

mgr: ceph-mgr1(active, since 70m)

osd: 16 osds: 16 up (since 19m), 16 in (since 19m)

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 109 MiB used, 1.6 TiB / 1.6 TiB avail

pgs: 1 active+clean

song@ceph-deploy:~/ceph-cluster$

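1.4.20 Remove an OSD from RADOS

In a Ceph cluster, an OSD is a dedicated daemon process on a node that corresponds to one physical disk device. When an OSD device fails, or an administrator needs to remove a specific OSD for management reasons, the related daemon must be stopped first and the OSD removed afterwards. For Luminous and later releases, the commands for taking the OSD out, stopping it and removing it are:

1. Take the device out: ceph osd out {osd-num}

2. Stop the process: sudo systemctl stop ceph-osd@{osd-num}

3. Remove the device: ceph osd purge {id} --yes-i-really-mean-it

If configuration entries for that OSD exist in the ceph.conf file, the administrator must delete them manually after removing the OSD.

For releases before Luminous, however, the administrator has to run the following steps manually, in order, to remove an OSD device:

1. Remove the device from the CRUSH map: ceph osd crush remove {name}

2. Remove the OSD's authentication key: ceph auth del osd.{osd-num}

3. Finally, remove the OSD device: ceph osd rm {osd-num}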
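The order of the Luminous-and-later steps matters, so it can be useful to print them for a given OSD before touching the cluster. A minimal sketch; osd_removal_plan is a hypothetical helper that only echoes the three commands listed above and executes nothing.

```shell
#!/bin/bash
# Print the Luminous-and-later removal sequence for one OSD id, in order.
# Dry run only: review the output, then run the commands by hand.
osd_removal_plan() {
    local id=$1
    echo "ceph osd out $id"                           # run on an admin node
    echo "sudo systemctl stop ceph-osd@$id"           # run on the node hosting the OSD
    echo "ceph osd purge $id --yes-i-really-mean-it"  # run on an admin node
}

osd_removal_plan 7
```

The id 7 is only an example value; the stop command has to be run on the node where that OSD lives.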

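1.4.21 Test uploading and downloading data

To store or retrieve data, a client must first connect to a pool in the RADOS cluster; the object is then located by the relevant CRUSH rule based on its name. To test the cluster's data access, first create a test pool named mypool with 32 PGs.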

song@ceph-deploy:~/ceph-cluster$ ceph -h

song@ceph-deploy:~/ceph-cluster$ rados -h

Create a pool

# 32 PGs and 32 PGPs

song@ceph-deploy:~/ceph-cluster$ ceph osd pool create mypool 32 32

pool 'mypool' created

song@ceph-deploy:~/ceph-cluster$

# Verify the PG and PGP combinations

song@ceph-deploy:~/ceph-cluster$ ceph pg ls-by-pool mypool | awk '{print $1,$2,$15}'

PG OBJECTS ACTING

2.0 0 [3,10,6]p3

2.1 0 [14,0,8]p14

2.2 0 [3,6,14]p3

2.3 0 [14,2,9]p14

2.4 0 [5,10,15]p5

2.5 0 [8,0,1]p8

2.6 0 [6,8,14]p6

2.7 0 [3,13,6]p3

2.8 0 [12,9,0]p12

2.9 0 [5,4,14]p5

2.a 0 [11,5,15]p11

2.b 0 [8,3,12]p8

2.c 0 [11,5,1]p11

2.d 0 [9,13,7]p9

2.e 0 [6,9,13]p6

2.f 0 [8,13,4]p8

2.10 0 [15,8,0]p15

2.11 0 [15,3,7]p15

2.12 0 [10,7,2]p10

2.13 0 [15,4,6]p15

2.14 0 [2,9,12]p2

2.15 0 [14,0,8]p14

2.16 0 [2,11,12]p2

2.17 0 [1,10,6]p1

2.18 0 [13,1,11]p13

2.19 0 [6,4,8]p6

2.1a 0 [3,8,7]p3

2.1b 0 [11,2,13]p11

2.1c 0 [10,1,6]p10

2.1d 0 [15,10,3]p15

2.1e 0 [5,10,15]p5

2.1f 0 [0,3,8]p0

* NOTE: afterwards

song@ceph-deploy:~/ceph-cluster$
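In the ACTING column, the list in brackets is the set of OSDs holding the PG and the number after p is the primary. A minimal sketch that counts how many PGs each OSD is primary for, reading the three-column output above on stdin; primary_counts is a hypothetical helper.

```shell
#!/bin/bash
# Count primaries per OSD from lines such as "2.0 0 [3,10,6]p3".
# Header and NOTE lines are skipped because their third field has no "]pN".
primary_counts() {
    awk '$3 ~ /\]p[0-9]+$/ {
        sub(/.*\]p/, "", $3)
        count[$3]++
    }
    END { for (osd in count) print osd, count[osd] }' | sort -n
}

# Example with the first three PGs of the listing above.
sample='PG OBJECTS ACTING
2.0 0 [3,10,6]p3
2.1 0 [14,0,8]p14
2.2 0 [3,6,14]p3'

printf '%s\n' "$sample" | primary_counts
```

Each output line is an OSD id followed by its number of primary PGs; a roughly even spread is what CRUSH aims for.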

song@ceph-deploy:~/ceph-cluster$ ceph osd pool ls

device_health_metrics

mypool

song@ceph-deploy:~/ceph-cluster$ rados lspools

device_health_metrics

mypool

song@ceph-deploy:~/ceph-cluster$

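This Ceph environment does not yet have block storage or a file system deployed on it, nor any object storage client, but the rados command can access Ceph's object store directly:

Upload a file

# Upload /var/log/syslog to mypool with the object id msg1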

song@ceph-deploy:~/ceph-cluster$ sudo rados put msg1 /var/log/syslog --pool=mypool

List the objects

song@ceph-deploy:~/ceph-cluster$ rados ls --pool=mypool

msg1

song@ceph-deploy:~/ceph-cluster$

Object information

The ceph osd map command shows where a data object is located in a pool:

song@ceph-deploy:~/ceph-cluster$ ceph osd map mypool msg1

osdmap e92 pool 'mypool' (2) object 'msg1' -> pg 2.c833d430 (2.10) -> up ([15,8,0], p15) acting ([15,8,0], p15)

song@ceph-deploy:~/ceph-cluster$

Example

ceph@ceph-deploy:/home/ceph/ceph-cluster$ ceph osd map mypool msg1

osdmap e114 pool 'mypool' (2) object 'msg1' -> pg 2.c833d430 (2.10) -> up ([15,13,0], p15) acting ([15,13,0], p15)

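This shows that the object is stored in the pool with id 2. c833d430 is the hash of the object name, and 2.10 means the PG with id 10 in the pool with id 2. The up set is OSDs 15, 13 and 0, the primary OSD is 15, and the acting set is also OSDs 15, 13 and 0. Three OSDs mean that the data is kept as 3 replicas; which OSDs hold them is computed by Ceph's CRUSH algorithm.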
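The step from the hash c833d430 to PG 2.10 can be reproduced by hand. A minimal sketch, assuming pg_num is a power of two (32 for mypool), in which case the PG index is the hash masked with pg_num - 1; pg_index_from_hash is a hypothetical helper, and for other pg_num values Ceph uses a different modulo rule that this sketch does not cover.

```shell
#!/bin/bash
# Map an object-name hash to its PG index, assuming pg_num is a power of two.
# Both the input hash and the result are hex, as `ceph osd map` shows them.
pg_index_from_hash() {
    local hash=$1 pg_num=$2
    printf '%x\n' $(( 0x$hash & (pg_num - 1) ))
}

pg_index_from_hash c833d430 32   # prints 10
```

The result 10 is hexadecimal (16 in decimal), which matches the 2.10 shown by ceph osd map for msg1.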

Download a file

song@ceph-deploy:~/ceph-cluster$ sudo rados get msg1 --pool=mypool /opt/my.txt

song@ceph-deploy:~/ceph-cluster$ ll /opt/

total 1000

drwxr-xr-x 2 root root 4096 Aug 21 12:34 ./

drwxr-xr-x 25 root root 4096 May 30 01:29 ../

-rw-r--r-- 1 root root 1013470 Aug 21 12:34 my.txt

# Verify the downloaded file:

song@ceph-deploy:~/ceph-cluster$ head /opt/my.txt

Sep 11 09:25:51 ubuntu-1804 kernel: [ 0.000000] Linux version 4.15.0-143-generic (buildd@lcy01-amd64-001) (gcc version 7.5.0 (Ubuntu 7.5.0-3ubuntu1~18.04)) #147-Ubuntu SMP Wed Apr 14 16:10:11 UTC 2021 (Ubuntu 4.15.0-143.147-generic 4.15.18)

Sep 11 09:25:51 ubuntu-1804 kernel: [ 0.000000] Command line: BOOT_IMAGE=/vmlinuz-4.15.0-143-generic root=UUID=15d0f5cc-21c8-43e0-ae92-5320da72b3c9 ro maybe-ubiquity

Sep 11 09:25:51 ubuntu-1804 kernel: [ 0.000000] KERNEL supported cpus:

Sep 11 09:25:51 ubuntu-1804 kernel: [ 0.000000] Intel GenuineIntel

Sep 11 09:25:51 ubuntu-1804 kernel: [ 0.000000] AMD AuthenticAMD

Sep 11 09:25:51 ubuntu-1804 kernel: [ 0.000000] Centaur CentaurHauls

Sep 11 09:25:51 ubuntu-1804 kernel: [ 0.000000] Disabled fast string operations

Sep 11 09:25:51 ubuntu-1804 kernel: [ 0.000000] x86/fpu: Supporting XSAVE feature 0x001: 'x87 floating point registers'

Sep 11 09:25:51 ubuntu-1804 kernel: [ 0.000000] x86/fpu: Supporting XSAVE feature 0x002: 'SSE registers'

Sep 11 09:25:51 ubuntu-1804 kernel: [ 0.000000] x86/fpu: Supporting XSAVE feature 0x004: 'AVX registers'

song@ceph-deploy:~/ceph-cluster$

Overwrite the object

song@ceph-deploy:~/ceph-cluster$ sudo rados put msg1 /etc/passwd --pool=mypool

song@ceph-deploy:~/ceph-cluster$ sudo rados get msg1 --pool=mypool /opt/2.txt

# Verify the downloaded file:

song@ceph-deploy:~/ceph-cluster$ tail /opt/2.txt

_apt:x:104:65534::/nonexistent:/usr/sbin/nologin

lxd:x:105:65534::/var/lib/lxd/:/bin/false

uuidd:x:106:110::/run/uuidd:/usr/sbin/nologin

dnsmasq:x:107:65534:dnsmasq,,,:/var/lib/misc:/usr/sbin/nologin

landscape:x:108:112::/var/lib/landscape:/usr/sbin/nologin

pollinate:x:109:1::/var/cache/pollinate:/bin/false

sshd:x:110:65534::/run/sshd:/usr/sbin/nologin

long:x:1000:1000:long:/home/long:/bin/bash

song:x:2021:2021::/home/song:/bin/bash

ceph:x:64045:64045:Ceph storage service:/var/lib/ceph:/usr/sbin/nologin

song@ceph-deploy:~/ceph-cluster$

Delete the object

song@ceph-deploy:~/ceph-cluster$ sudo rados rm msg1 --pool=mypool

song@ceph-deploy:~/ceph-cluster$ rados ls --pool=mypool

song@ceph-deploy:~/ceph-cluster$
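The put and get steps above were checked by eye with head and tail. A minimal sketch of an automatic check that uploads a file, downloads it again and compares the two copies; rados_roundtrip and the RADOS override variable are hypothetical names, and the function leaves the object in the pool, so remove it with rados rm afterwards if it is not needed.

```shell
#!/bin/bash
# Upload a file, download it again and compare the two copies byte for byte.
# RADOS can be overridden for testing; by default the real rados CLI is used.
rados_roundtrip() {
    local pool=$1 obj=$2 src=$3 tmp rc
    tmp=$(mktemp) || return 1
    "${RADOS:-rados}" put "$obj" "$src" --pool="$pool" &&
        "${RADOS:-rados}" get "$obj" "$tmp" --pool="$pool" &&
        cmp -s "$src" "$tmp"
    rc=$?
    rm -f "$tmp"
    return "$rc"
}

# Usage (needs a reachable cluster and a readable admin keyring):
#   rados_roundtrip mypool msg1 /etc/passwd && echo "round trip OK"
```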

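1.5 Scale out the Ceph cluster for high availability

This mainly means adding more mon and mgr nodes to the cluster so that it is highly available.

1.5.1 Add ceph-mon nodes

ceph-mon natively supports leader election among its nodes for high availability; the number of mon nodes is usually odd.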
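The reason for an odd count is that the monitors need a strict majority to form a quorum, so an even count adds a node without adding fault tolerance. A minimal sketch of that arithmetic; the two function names are hypothetical.

```shell
#!/bin/bash
# A quorum needs more than half of the monitors.
mon_quorum_size()        { echo $(( $1 / 2 + 1 )); }
# Monitors that may fail while a quorum can still be formed.
mon_failures_tolerated() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 2 3 4 5; do
    echo "mons=$n quorum=$(mon_quorum_size "$n") tolerated_failures=$(mon_failures_tolerated "$n")"
done
```

Three and four monitors both tolerate one failure, while five tolerate two, which is why this walkthrough goes from one monitor to three.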

# Ubuntu

root@ceph-mon2:~# apt install ceph-mon

root@ceph-mon3:~# apt install ceph-mon

# CentOS

[root@ceph-mon2 ~]# yum install ceph-common ceph-mon

[root@ceph-mon3 ~]# yum install ceph-common ceph-mon

ceph-mon2

root@ceph-deploy:~# su - song

song@ceph-deploy:~$ cd ceph-cluster/

song@ceph-deploy:~/ceph-cluster$ ceph-deploy mon add ceph-mon2

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/song/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mon add ceph-mon2

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : add

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f692ef73fa0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] mon : ['ceph-mon2']

[ceph_deploy.cli][INFO ] func : <function mon at 0x7f692ef56ad0>

[ceph_deploy.cli][INFO ] address : None

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mon][INFO ] ensuring configuration of new mon host: ceph-mon2

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-mon2

The authenticity of host 'ceph-mon2 (172.18.60.12)' can't be established.

ECDSA key fingerprint is SHA256:Iev+S4q6mDUmvCcZh1qvnu0xJeZzax5DFo/0klcSjjA.

Are you sure you want to continue connecting (yes/no)? yes # type yes

... output omitted ...

[ceph-mon2][DEBUG ] "name": "ceph-mon2",

[ceph-mon2][DEBUG ] "outside_quorum": [],

[ceph-mon2][DEBUG ] "quorum": [],

[ceph-mon2][DEBUG ] "rank": -1,

[ceph-mon2][DEBUG ] "state": "probing",

[ceph-mon2][DEBUG ] "stretch_mode": false,

[ceph-mon2][DEBUG ] "sync_provider": []

[ceph-mon2][DEBUG ] }

[ceph-mon2][DEBUG ] ********************************************************************************

[ceph-mon2][INFO ] monitor: mon.ceph-mon2 is currently at the state of probing

song@ceph-deploy:~/ceph-cluster$

ceph-mon3

song@ceph-deploy:~/ceph-cluster$ ceph-deploy mon add ceph-mon3

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/song/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mon add ceph-mon3

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : add

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f4146a00fa0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] mon : ['ceph-mon3']

[ceph_deploy.cli][INFO ] func : <function mon at 0x7f41469e3ad0>

[ceph_deploy.cli][INFO ] address : None

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mon][INFO ] ensuring configuration of new mon host: ceph-mon3

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-mon3

The authenticity of host 'ceph-mon3 (172.18.60.13)' can't be established.

ECDSA key fingerprint is SHA256:Iev+S4q6mDUmvCcZh1qvnu0xJeZzax5DFo/0klcSjjA.

Are you sure you want to continue connecting (yes/no)? yes # type yes

... output omitted ...

[ceph-mon3][DEBUG ] "name": "ceph-mon3",

[ceph-mon3][DEBUG ] "outside_quorum": [],

[ceph-mon3][DEBUG ] "quorum": [],

[ceph-mon3][DEBUG ] "rank": -1,

[ceph-mon3][DEBUG ] "state": "probing",

[ceph-mon3][DEBUG ] "stretch_mode": false,

[ceph-mon3][DEBUG ] "sync_provider": []

[ceph-mon3][DEBUG ] }

[ceph-mon3][DEBUG ] ********************************************************************************

[ceph-mon3][INFO ] monitor: mon.ceph-mon3 is currently at the state of probing

song@ceph-deploy:~/ceph-cluster$

1.5.2 Verify the ceph-mon status

song@ceph-deploy:~/ceph-cluster$ ceph quorum_status

song@ceph-deploy:~/ceph-cluster$ ceph quorum_status --format json-pretty

{

"election_epoch": 14,

"quorum": [

0,

1,

2

],

"quorum_names": [

"ceph-mon1",

"ceph-mon2",

"ceph-mon3"

],

"quorum_leader_name": "ceph-mon1", # the current leader

"quorum_age": 198,

"features": {

"quorum_con": "4540138297136906239",

"quorum_mon": [

"kraken",

"luminous",

"mimic",

"osdmap-prune",

"nautilus",

"octopus",

"pacific",

"elector-pinging"

]

},

"monmap": {

"epoch": 3,

"fsid": "1e185a05-7e53-40f4-a618-5abc802d5ae7",

"modified": "2021-08-24T02:37:51.373006Z",

"created": "2021-08-21T10:33:54.391920Z",

"min_mon_release": 16,

"min_mon_release_name": "pacific",

"election_strategy": 1,

"disallowed_leaders: ": "",

"stretch_mode": false,

"features": {

"persistent": [

"kraken",

"luminous",

"mimic",

"osdmap-prune",

"nautilus",

"octopus",

"pacific",

"elector-pinging"

],

"optional": []

},

"mons": [

{

"rank": 0, # rank of this node

"name": "ceph-mon1", # name of this node

"public_addrs": {

"addrvec": [

{

"type": "v2",

"addr": "172.18.60.11:3300",

"nonce": 0

},

{

"type": "v1",

"addr": "172.18.60.11:6789",

"nonce": 0

}

]

},

"addr": "172.18.60.11:6789/0", # listening address

"public_addr": "172.18.60.11:6789/0", # same as above

"priority": 0,

"weight": 0,

"crush_location": "{}"

},

{

"rank": 1,

"name": "ceph-mon2",

"public_addrs": {

"addrvec": [

{

"type": "v2",

"addr": "172.18.60.12:3300",

"nonce": 0

},

{

"type": "v1",

"addr": "172.18.60.12:6789",

"nonce": 0

}

]

},

"addr": "172.18.60.12:6789/0",

"public_addr": "172.18.60.12:6789/0",

"priority": 0,

"weight": 0,

"crush_location": "{}"

},

{

"rank": 2,

"name": "ceph-mon3",

"public_addrs": {

"addrvec": [

{

"type": "v2",

"addr": "172.18.60.13:3300",

"nonce": 0

},

{

"type": "v1",

"addr": "172.18.60.13:6789",

"nonce": 0

}

]

},

"addr": "172.18.60.13:6789/0",

"public_addr": "172.18.60.13:6789/0",

"priority": 0,

"weight": 0,

"crush_location": "{}"

}

]

}

}

song@ceph-deploy:~/ceph-cluster$
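When only the leader is of interest, it can be pulled out of the JSON without reading the whole document. A minimal sketch that uses sed, so no JSON tool is required; quorum_leader is a hypothetical helper, and it assumes the quorum_leader_name key appears on one line as in the json-pretty output above.

```shell
#!/bin/bash
# Print the leader name from `ceph quorum_status --format json-pretty`
# output read on stdin.
quorum_leader() {
    sed -n 's/.*"quorum_leader_name": *"\([^"]*\)".*/\1/p'
}

# Example with one line of the output shown above.
printf '%s\n' '"quorum_leader_name": "ceph-mon1",' | quorum_leader
```

Against a live cluster this would be ceph quorum_status --format json-pretty | quorum_leader.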

Verify the Ceph cluster status

song@ceph-deploy:~/ceph-cluster$ ceph -s

cluster:

id: 1e185a05-7e53-40f4-a618-5abc802d5ae7

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-mon1,ceph-mon2,ceph-mon3 (age 6m)

mgr: ceph-mgr1(active, since 28m)

osd: 16 osds: 16 up (since 26m), 16 in (since 2d)

data:

pools: 2 pools, 33 pgs

objects: 0 objects, 0 B

usage: 120 MiB used, 1.6 TiB / 1.6 TiB avail

pgs: 33 active+clean

song@ceph-deploy:~/ceph-cluster$

1.5.3 Add mgr nodes

# Ubuntu

root@ceph-mgr2:~# apt -y install ceph-mgr

# CentOS

[root@ceph-mgr2 ~]# yum install ceph-mgr -y

Add the node

root@ceph-deploy:~# su - song

song@ceph-deploy:~$ cd ceph-cluster/

song@ceph-deploy:~/ceph-cluster$ ceph-deploy mgr create ceph-mgr2

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/song/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mgr create ceph-mgr2

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] mgr : [('ceph-mgr2', 'ceph-mgr2')]

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7ff6cf2b1be0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mgr at 0x7ff6cf710150>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mgr][DEBUG ] Deploying mgr, cluster ceph hosts ceph-mgr2:ceph-mgr2

The authenticity of host 'ceph-mgr2 (172.18.60.15)' can't be established.

ECDSA key fingerprint is SHA256:Iev+S4q6mDUmvCcZh1qvnu0xJeZzax5DFo/0klcSjjA.

Are you sure you want to continue connecting (yes/no)? yes # type yes

Warning: Permanently added 'ceph-mgr2' (ECDSA) to the list of known hosts.

[ceph-mgr2][DEBUG ] connection detected need for sudo

[ceph-mgr2][DEBUG ] connected to host: ceph-mgr2

[ceph-mgr2][DEBUG ] detect platform information from remote host

[ceph-mgr2][DEBUG ] detect machine type

[ceph_deploy.mgr][INFO ] Distro info: Ubuntu 18.04 bionic

[ceph_deploy.mgr][DEBUG ] remote host will use systemd

[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to ceph-mgr2

[ceph-mgr2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph-mgr2][WARNIN] mgr keyring does not exist yet, creating one

[ceph-mgr2][DEBUG ] create a keyring file

[ceph-mgr2][DEBUG ] create path recursively if it doesn't exist

[ceph-mgr2][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.ceph-mgr2 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-ceph-mgr2/keyring

[ceph-mgr2][INFO ] Running command: sudo systemctl enable ceph-mgr@ceph-mgr2

[ceph-mgr2][WARNIN] Created symlink /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@ceph-mgr2.service → /lib/systemd/system/ceph-mgr@.service.

[ceph-mgr2][INFO ] Running command: sudo systemctl start ceph-mgr@ceph-mgr2

[ceph-mgr2][INFO ] Running command: sudo systemctl enable ceph.target

song@ceph-deploy:~/ceph-cluster$

Sync the configuration files to the ceph-mgr2 node

song@ceph-deploy:~/ceph-cluster$ ceph-deploy admin ceph-mgr2

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/song/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy admin ceph-mgr2

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f64411f4140>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] client : ['ceph-mgr2']

[ceph_deploy.cli][INFO ] func : <function admin at 0x7f6441af5a50>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to ceph-mgr2

[ceph-mgr2][DEBUG ] connection detected need for sudo

[ceph-mgr2][DEBUG ] connected to host: ceph-mgr2

[ceph-mgr2][DEBUG ] detect platform information from remote host

[ceph-mgr2][DEBUG ] detect machine type

[ceph-mgr2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

song@ceph-deploy:~/ceph-cluster$

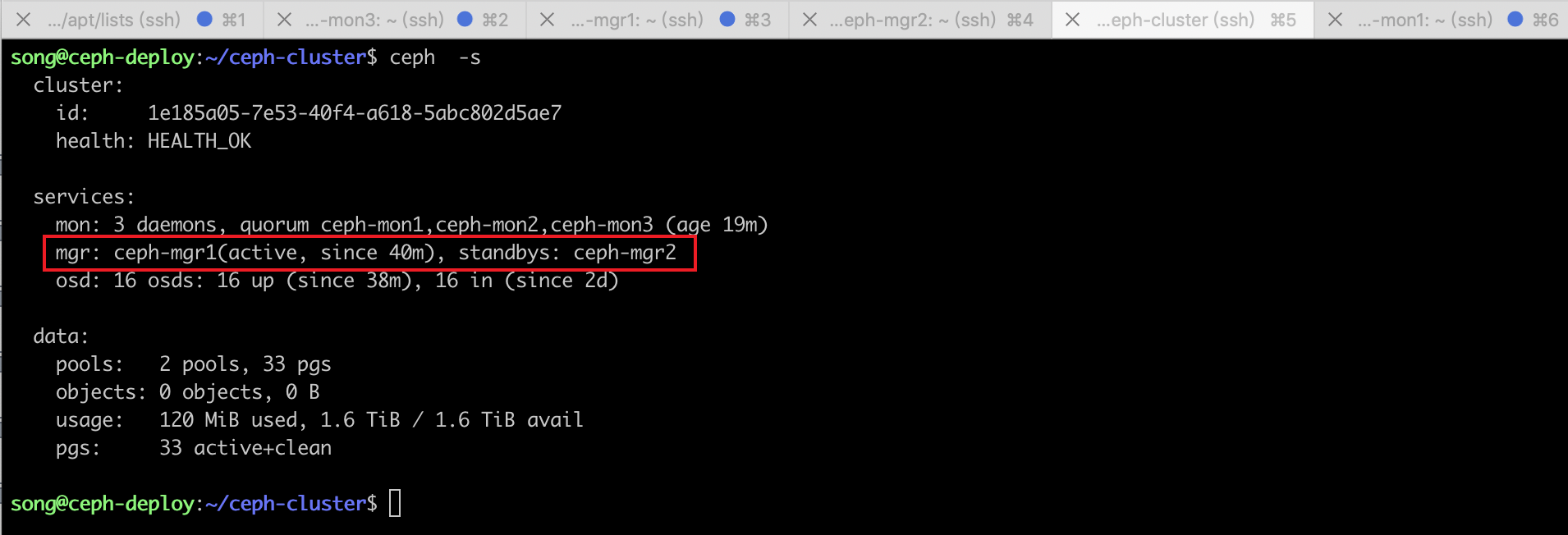

1.5.4 Verify the mgr node status

song@ceph-deploy:~/ceph-cluster$ ceph -s

cluster:

id: 1e185a05-7e53-40f4-a618-5abc802d5ae7

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-mon1,ceph-mon2,ceph-mon3 (age 19m)

mgr: ceph-mgr1(active, since 40m), standbys: ceph-mgr2

osd: 16 osds: 16 up (since 38m), 16 in (since 2d)

data:

pools: 2 pools, 33 pgs

objects: 0 objects, 0 B

usage: 120 MiB used, 1.6 TiB / 1.6 TiB avail

pgs: 33 active+clean

song@ceph-deploy:~/ceph-cluster$
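The mgr line in the output above is the one that shows whether the new node has joined as a standby. A minimal sketch that extracts the active and standby managers from ceph -s output on stdin; mgr_roles is a hypothetical helper that assumes the line layout shown above.

```shell
#!/bin/bash
# Print the active mgr and the standby list from `ceph -s` output on stdin.
mgr_roles() {
    awk '$1 == "mgr:" {
        active = $2
        sub(/\(.*/, "", active)     # drop the "(active, ..." suffix
        standbys = "none"
        if (match($0, /standbys: /))
            standbys = substr($0, RSTART + RLENGTH)
        print "active=" active " standbys=" standbys
    }'
}

echo '    mgr: ceph-mgr1(active, since 40m), standbys: ceph-mgr2' | mgr_roles
```

Before ceph-mgr2 was added, the same helper would report standbys=none for the mgr line.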
