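Enterprises generally prefer binary deployments: when a component fails, you only need to stop that component, check its logs, and fix the problem.

Note: each machine needs at least 2 CPU cores and more than 3 GB of memory.

1. Preparation

1.1 Installing Docker

Prerequisite: Docker must already be installed and configured. See this article:

最牛docker安装,解决一切pull,配置等问题 (CSDN blog)

Set up the IP addresses and hostnames for master, node1, and node2: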

[root@k8s-master ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.2.110 k8s-master

192.168.2.111 k8s-node1

192.168.2.112 k8s-node2

1.2 Disabling the firewall and SELinux (same on master, node1, and node2)

[root@k8s-master ~]# cat /etc/sysconfig/selinux

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of three values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

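SELINUXTYPE=targeted

1.3 Disabling swap for Kubernetes

Since version 1.8, Kubernetes requires system swap to be turned off; otherwise kubelet will not start under its default configuration. Option 1: lift the restriction with the kubelet startup flag --fail-swap-on=false. Option 2: turn off system swap.

Check swap: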

[root@k8s-master ~]# free -m

total used free shared buff/cache available

Mem: 2888 323 2240 11 324 2345

Swap: 1535 0 1535

First, turn it off temporarily:

[root@k8s-master ~]# swapoff -a

[root@k8s-master ~]# free -m

total used free shared buff/cache available

Mem: 2888 323 2240 11 324 2345

Swap: 0 0 0

Then turn it off permanently:

Comment out this line in /etc/fstab: #/dev/mapper/centos-swap swap swap defaults 0 0

[root@k8s-master ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Fri Oct 25 23:06:02 2024

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=2e1501e9-31f0-42d4-af81-a31b2c6931d0 /boot xfs defaults 0 0

#/dev/mapper/centos-swap swap swap defaults 0 0
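The manual edit above can also be scripted. The following is a minimal sketch, not part of the original procedure: the function name comment_out_swap is hypothetical, and it assumes swap entries look like the one shown above, with "swap" as a whitespace-separated field. It keeps a backup copy and leaves lines that are already commented untouched.

```shell
#!/bin/bash
# Comment out every active swap entry in an fstab-style file.
# Defaults to /etc/fstab; pass another path to try it on a copy first.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  # Keep a backup, then prefix '#' to uncommented lines that contain
  # "swap" as a whitespace-delimited field.
  cp "$fstab" "$fstab.bak" &&
    sed -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab.bak" > "$fstab"
}
```

Running comment_out_swap with no argument edits /etc/fstab; combined with swapoff -a, swap should then stay off after a reboot.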

2. Deploying Kubernetes with kubeadm

2.1 Install kubeadm, kubelet, and kubectl on all nodes:

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

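EOF

Start the installation:

I use version 1.19 here. If you run yum install -y kubelet kubeadm kubectl directly, yum installs the latest available version. Afterwards you can check the installed versions (grep -E enables extended regular expressions, so | means "or"):

rpm -qa | grep -E "kubelet|kubeadm|kubectl"

To remove them:

yum -y remove $(rpm -qa | grep -E "kubelet|kubeadm|kubectl")

Install the specific version: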

yum install -y kubelet-1.19.1-0.x86_64 kubeadm-1.19.1-0.x86_64 kubectl-1.19.1-0.x86_64 ipvsadm

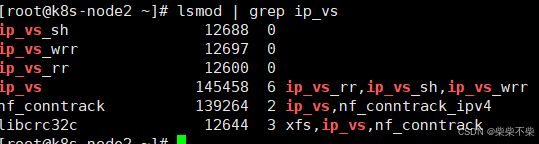

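# ipvsadm is a tool for managing the IP Virtual Server (IPVS) module in the Linux kernel.

2.2 Loading the ipvs kernel modules

The modules must be loaded again after every reboot (you can put the command in /etc/rc.local so they load automatically at boot).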

modprobe ip_vs && modprobe ip_vs_rr && modprobe ip_vs_wrr && modprobe ip_vs_sh && modprobe nf_conntrack_ipv4 && modprobe br_netfilter

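# Required on master, node1, and node2

If the command returns without errors, the modules loaded successfully; lsmod | grep ip_vs lists them.

To load them at boot, put the modprobe command above into /etc/rc.local.

Make the file executable: chmod +x /etc/rc.local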
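Adding the modprobe line to /etc/rc.local by hand on three machines makes it easy to add it twice. The sketch below is one way to make that step repeatable; append_once and MODPROBE_LINE are hypothetical names introduced only for this illustration.

```shell
#!/bin/bash
# The same module list that is loaded manually above.
MODPROBE_LINE='modprobe ip_vs && modprobe ip_vs_rr && modprobe ip_vs_wrr && modprobe ip_vs_sh && modprobe nf_conntrack_ipv4 && modprobe br_netfilter'

# Append a line to a file only if that exact line is not already present.
append_once() {
  local file="$1" line="$2"
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

On each node this could be used as append_once /etc/rc.local "$MODPROBE_LINE" followed by chmod +x /etc/rc.local; running it again leaves the file unchanged.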

2.3 Configuring forwarding-related kernel parameters (including IPv6); skipping this may cause errors

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

vm.swappiness=0

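EOF

Apply the configuration:

sysctl --system    # applies every sysctl configuration file

# Or apply only this file:

sysctl -p /etc/sysctl.d/k8s.conf

Check that the configured modules and parameters have all taken effect (every node must go through the steps above).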
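One way to confirm that the settings are live is to compare each entry of /etc/sysctl.d/k8s.conf with the value the kernel reports. This is a rough sketch under that assumption; check_sysctl_conf is a hypothetical helper, and it relies only on sysctl -n, which prints the current value of a key.

```shell
#!/bin/bash
# Compare every "key = value" line of a sysctl config file with the live value.
# Returns non-zero if any key differs or is missing.
check_sysctl_conf() {
  local conf="${1:-/etc/sysctl.d/k8s.conf}" key want have status=0
  while IFS='=' read -r key want; do
    # Strip surrounding whitespace from both sides of the '='.
    key=$(printf '%s' "$key" | tr -d '[:space:]')
    want=$(printf '%s' "$want" | tr -d '[:space:]')
    # Skip blank lines and comments.
    case "$key" in ''|'#'*) continue ;; esac
    have=$(sysctl -n "$key" 2>/dev/null)
    if [ "$have" = "$want" ]; then
      echo "OK       $key = $have"
    else
      echo "MISMATCH $key: want $want, have ${have:-unset}"
      status=1
    fi
  done < "$conf"
  return "$status"
}
```

A MISMATCH on the net.bridge.* keys usually means br_netfilter is not loaded, since those keys only exist once that module is present.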

2.4 Configure and start kubelet (all nodes)

Configure the pause image that kubelet uses.

Get Docker's cgroup driver:

[root@k8s-master ~]# DOCKER_CGROUPS=$(docker info | grep "Cgroup Driver" | awk '{print $3}')

[root@k8s-master ~]# echo $DOCKER_CGROUPS

cgroupfs

You can also skip the step above and write the configuration directly:

cat >/etc/sysconfig/kubelet <<EOF

KUBELET_EXTRA_ARGS="--cgroup-driver=cgroupfs --pod-infra-container-image=k8s.gcr.io/pause:3.2"

EOF

[root@k8s-node2 ~]# cat /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--cgroup-driver=cgroupfs --pod-infra-container-image=k8s.gcr.io/pause:3.2"
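The hard-coded cgroupfs value only works when Docker really uses that driver. As a sketch of how the detected value could be fed into the file instead, the two helpers below (hypothetical names) reuse the same docker info pipeline shown earlier and print the line for /etc/sysconfig/kubelet.

```shell
#!/bin/bash
# Read the cgroup driver that Docker reports, e.g. cgroupfs or systemd.
detect_docker_cgroup_driver() {
  docker info 2>/dev/null | grep "Cgroup Driver" | awk '{print $3}'
}

# Print the KUBELET_EXTRA_ARGS line for a given cgroup driver.
render_kubelet_args() {
  local driver="$1"
  printf 'KUBELET_EXTRA_ARGS="--cgroup-driver=%s --pod-infra-container-image=k8s.gcr.io/pause:3.2"\n' "$driver"
}
```

For example, render_kubelet_args "$(detect_docker_cgroup_driver)" > /etc/sysconfig/kubelet would keep kubelet and Docker on the same driver on every node.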

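# Reload the systemd unit files

systemctl daemon-reload

# Enable at boot and start

# systemctl enable kubelet && systemctl restart kubelet

kubelet will report errors at this point, because the cluster has not yet been initialized on the master.

3. Initializing the master node

Initialize with kubeadm init.

You can check the running containers with docker ps first.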

kubeadm init --kubernetes-version=v1.19.1 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=192.168.2.110 --ignore-preflight-errors=Swap

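If initialization fails and you want to retry, reset first with kubeadm reset.

The init command failed for me, so I wrote a script to pull the images manually. Note: if the image versions you are missing differ from mine, adjust the script accordingly.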

[root@k8s-master ~]# ls

anaconda-ks.cfg

[root@k8s-master ~]# touch dockerpull.sh

# A script that pulls the images automatically

[root@k8s-master ~]# vim dockerpull.sh

[root@k8s-master ~]# cat dockerpull.sh

#!/bin/bash

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.19.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.19.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.19.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.19.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2

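Make sure Docker is running before you execute the script.

3.1 Pulling the required images manually

Run the script:

bash dockerpull.sh # invoking it through bash needs no execute permission

Then copy the file to node1 and node2 and run it there as well:

scp dockerpull.sh k8s-node1:/root/

scp dockerpull.sh k8s-node2:/root/

3.2 Tagging (renaming) the images

After the download, every image pulled from Aliyun must be re-tagged in the form k8s.gcr.io/kube-controller-manager:v1.19.1.

The docker tag commands below give the Kubernetes component images pulled from the Aliyun registry their new tags: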

[root@k8s-master ~]# touch dockertag.sh

[root@k8s-master ~]# vim dockertag.sh

#!/bin/bash

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.19.1 k8s.gcr.io/kube-controller-manager:v1.19.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.19.1 k8s.gcr.io/kube-proxy:v1.19.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.19.1 k8s.gcr.io/kube-apiserver:v1.19.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.19.1 k8s.gcr.io/kube-scheduler:v1.19.1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0 k8s.gcr.io/coredns:1.7.0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0 k8s.gcr.io/etcd:3.4.13-0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2 k8s.gcr.io/pause:3.2

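# While writing the script in vim, :set list reveals stray whitespace that could cause errors

After tagging on the master, tag the images on node1 and node2 as well: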

[root@k8s-master ~]# scp dockertag.sh k8s-node1:/root/

root@k8s-node1's password:

dockertag.sh 100% 801 924.4KB/s 00:00

[root@k8s-master ~]# scp dockertag.sh k8s-node2:/root/

root@k8s-node2's password:

dockertag.sh 100% 801 987.7KB/s 00:00

[root@k8s-node1 ~]# bash dockertag.sh

[root@k8s-node2 ~]# bash dockertag.sh
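Because dockerpull.sh and dockertag.sh repeat the same image list, the two steps can be folded into one loop. The following is only a sketch of that idea: the variable names MIRROR, TARGET, and IMAGES and the function pull_and_tag are illustrative, while the image names and versions are the ones used in the two scripts above.

```shell
#!/bin/bash
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers
TARGET=k8s.gcr.io
# Image list for v1.19.1; adjust it if kubeadm asks for other versions.
IMAGES="
kube-controller-manager:v1.19.1
kube-proxy:v1.19.1
kube-apiserver:v1.19.1
kube-scheduler:v1.19.1
coredns:1.7.0
etcd:3.4.13-0
pause:3.2
"

# Pull each image from the mirror, then tag it with the k8s.gcr.io name.
# Stops at the first failure so a missing image is noticed immediately.
pull_and_tag() {
  local image
  for image in $IMAGES; do
    docker pull "$MIRROR/$image" || return 1
    docker tag "$MIRROR/$image" "$TARGET/$image" || return 1
  done
}
```

Calling pull_and_tag on each machine would take the place of running the two scripts separately, and only one list has to be edited when versions change.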

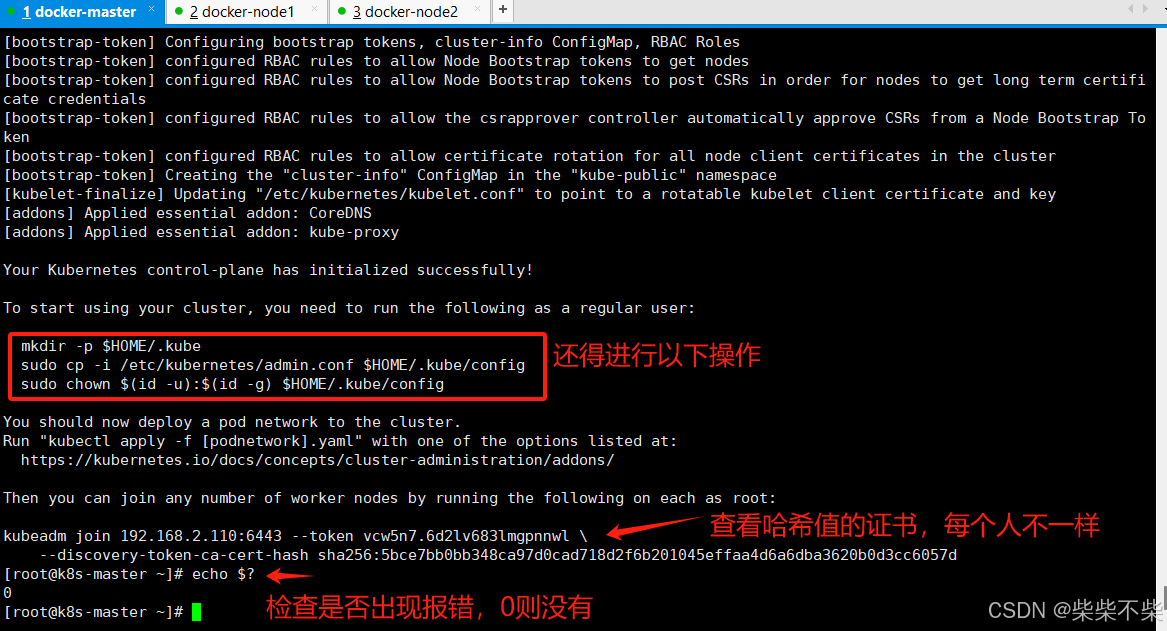

3.3 Run the initialization command on the master again:

kubeadm init --kubernetes-version=v1.19.1 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=192.168.2.110 --ignore-preflight-errors=Swap

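# On success, the output ends with a kubeadm join command. Its --discovery-token-ca-cert-hash value is the SHA-256 hash of the public key in the control-plane node's certificate authority (CA) certificate; it is used to discover the control plane securely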

kubeadm join 192.168.2.110:6443 --token vcw5n7.6d2lv683lmgpnnwl --discovery-token-ca-cert-hash sha256:5bce7bb0bb348ca97d0cad718d2f6b201045effaa4d6a6dba3620b0d3cc6057d

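Save this command; you will need it later.

3.4 Post-initialization steps

Next, run the commands that the init output asks for (circled in red in the screenshot):

# Uses the current user's home directory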

[root@k8s-master ~]# mkdir -p $HOME/.kube

[root@k8s-master ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# id -u and id -g print the current user's UID and GID (root here)

[root@k8s-master ~]# chown $(id -u):$(id -g) $HOME/.kube/config

List the nodes:

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady master 10m v1.19.1

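The node shows NotReady because the network plugin has not been installed yet; it becomes Ready once the plugin is in place, as covered below.

List the pods in the kube-system namespace. Pending is normal for coredns here, because the network plugin has not been deployed yet.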

[root@k8s-master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-f9fd979d6-h2z87 0/1 Pending 0 12m

coredns-f9fd979d6-txrm6 0/1 Pending 0 12m

etcd-k8s-master 1/1 Running 0 12m

kube-apiserver-k8s-master 1/1 Running 0 12m

kube-controller-manager-k8s-master 1/1 Running 0 12m

kube-proxy-hgzpx 1/1 Running 0 12m

kube-scheduler-k8s-master 1/1 Running 0 12m

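# -n selects the namespace to operate in

4. Deploying the network plugin: flannel (in a binary deployment it is installed on the node machines)

Use this configuration file for the network plugin: kube-flannelv1.19.1.yaml

# Create kube-flannelv1.19.1.yaml and paste in the content below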

[root@k8s-master ~]# touch kube-flannelv1.19.1.yaml

[root@k8s-master ~]# vim kube-flannelv1.19.1.yaml

[root@k8s-master ~]# cat kube-flannelv1.19.1.yaml

---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["get"]
- apiGroups: [""]
  resources: ["nodes"]
  verbs: ["list", "watch"]
- apiGroups: [""]
  resources: ["nodes/status"]
  verbs: ["patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      - key: node.kubernetes.io/not-ready
        operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.20.0
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.20.0
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        - --iface=ens32
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock

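          type: FileOrCreate

One line must be changed to match your own network interface: the --iface=ens32 argument. Mine is ens32; in vim you can jump to it by searching with / (forward slash).

Go back to the file above and change the interface name.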
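If you would rather not edit the manifest in vim, the interface name can be patched with sed. This is a hedged sketch: default_iface and set_flannel_iface are hypothetical helpers, and default_iface assumes that the interface carrying the default route is the one flannel should use, which may not hold on multi-homed hosts.

```shell
#!/bin/bash
# Print the interface used by the default route, e.g. ens32.
default_iface() {
  ip -o -4 route show to default |
    awk '{for (i = 1; i < NF; i++) if ($i == "dev") {print $(i + 1); exit}}'
}

# Rewrite the "- --iface=..." argument in a flannel manifest, keeping a backup.
set_flannel_iface() {
  local manifest="$1" iface="$2"
  cp "$manifest" "$manifest.bak" &&
    sed -E "s/^([[:space:]]*- --iface=).*/\1$iface/" "$manifest.bak" > "$manifest"
}
```

For example, set_flannel_iface kube-flannelv1.19.1.yaml "$(default_iface)" would update the file before kubectl apply is run.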

The installation also needs these additional images:

docker pull docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0

docker pull docker.io/rancher/mirrored-flannelcni-flannel:v0.20.0

Check that they are present:

docker images | grep flannel

[root@k8s-master ~]# kubectl apply -f kube-flannelv1.19.1.yaml

[root@k8s-master ~]# kubectl get pod -n kube-system

[root@k8s-master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-f9fd979d6-h2z87 1/1 Running 0 73m

coredns-f9fd979d6-txrm6 1/1 Running 0 73m

etcd-k8s-master 1/1 Running 0 74m

kube-apiserver-k8s-master 1/1 Running 0 74m

kube-controller-manager-k8s-master 1/1 Running 0 74m

kube-flannel-ds-s97sk 1/1 Running 0 18m

kube-proxy-hgzpx 1/1 Running 0 73m

kube-scheduler-k8s-master 1/1 Running 0 74m

[root@k8s-master ~]# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-f9fd979d6-h2z87 1/1 Running 0 78m 10.244.0.3 k8s-master <none> <none>

coredns-f9fd979d6-txrm6 1/1 Running 0 78m 10.244.0.2 k8s-master <none> <none>

etcd-k8s-master 1/1 Running 0 78m 192.168.2.110 k8s-master <none> <none>

kube-apiserver-k8s-master 1/1 Running 0 78m 192.168.2.110 k8s-master <none> <none>

kube-controller-manager-k8s-master 1/1 Running 0 78m 192.168.2.110 k8s-master <none> <none>

kube-flannel-ds-s97sk 1/1 Running 0 22m 192.168.2.110 k8s-master <none> <none>

kube-proxy-hgzpx 1/1 Running 0 78m 192.168.2.110 k8s-master <none> <none>

kube-scheduler-k8s-master 1/1 Running 0 78m 192.168.2.110 k8s-master <none> <none>

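The -o wide output shows which node each pod was scheduled to.

Next, check whether kubelet is running; it was fine for me.

The worker nodes are still not running at this point; they have to be joined to the cluster. If the join reports an error, enable IP forwarding:

# sysctl -w net.ipv4.ip_forward=1

Run the following on every worker node. This command is the one returned after the master was initialized successfully (the one containing the CA certificate hash mentioned above):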

kubeadm join 192.168.2.110:6443 --token vcw5n7.6d2lv683lmgpnnwl --discovery-token-ca-cert-hash sha256:5bce7bb0bb348ca97d0cad718d2f6b201045effaa4d6a6dba3620b0d3cc6057d
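If the join command was not saved, or its token has expired (bootstrap tokens are valid for 24 hours by default), running kubeadm token create --print-join-command on the master prints a fresh one. The hash itself can also be recomputed from the CA certificate; the sketch below wraps the openssl pipeline from the kubeadm documentation in a function whose name, ca_cert_hash, is hypothetical. It assumes an RSA CA key, which is what kubeadm generates by default.

```shell
#!/bin/bash
# Print the SHA-256 hash of the CA certificate's public key, i.e. the value
# that follows "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local ca_cert="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca_cert" |
    openssl rsa -pubin -outform der 2>/dev/null |
    openssl dgst -sha256 -hex |
    sed 's/^.* //'
}
```

On the master, echo "sha256:$(ca_cert_hash)" should match the value printed by kubeadm init.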

Then check from the master; every node should be Ready:

[root@k8s-master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 3h18m v1.19.1

k8s-node1 Ready <none> 12m v1.19.1

k8s-node2 Ready <none> 11m v1.19.1

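5. Testing the cluster

Kubernetes is a container orchestration platform: it can pull images over the network and run them as containers.

5.1 Create a pod

This command pulls the nginx image:

kubectl create deployment nginx --image=nginx

5.2 Check the status; Running means the pod has started successfully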

[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-6799fc88d8-2nkzp 1/1 Running 0 4m37s

5.3 Create a Service (svc)

The port has to be exposed so that clients outside the cluster can reach it:

[root@k8s-master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

5.4 Check the external port (for example with kubectl get svc)

5.5 Access the service: http://192.168.2.111:30022/
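The NodePort is assigned by the cluster, so 30022 above is specific to this run. A small sketch for reading the port and building the URL is shown below; nginx_url is a hypothetical helper, and it assumes the Service is named nginx in the default namespace, as created by the kubectl expose command above.

```shell
#!/bin/bash
# Build the external URL of the nginx Service for a given node IP.
nginx_url() {
  local node_ip="$1" port
  # jsonpath extracts the NodePort of the first port in the Service spec.
  port=$(kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  printf 'http://%s:%s/\n' "$node_ip" "$port"
}
```

For example, curl -I "$(nginx_url 192.168.2.111)" should return an HTTP response from nginx; any node IP works, because a NodePort is opened on every node.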

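The end.

Still to do: deploying the Dashboard application. Dashboard is the official UI for basic management of Kubernetes resources. I will write it up when I have time.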
