目录

2.1,先在web1上进行安装,并且将步骤记录好,写成一键安装nginx的脚本

3.1,在web1和web2上nginx.conf的配置均如下

3.2,新建www.sc.com和www.feng.com网站对应的存放网页的文件夹 ,在创建index.html首页文件,和404.html错误页面

3.4,将web1创建的sc.com和feng.com里的index.html 404.html 和50x.html传给web2服务器

4.7,在nginx.conf主配文件里添加配置,在刷新服务

5.2,在lb1和lb2负载均衡器上安装keepalived软件包,并设置开机启动

5.3,将内网的lb1和lb2都配置好IP地址和网关(192.168.152.2)

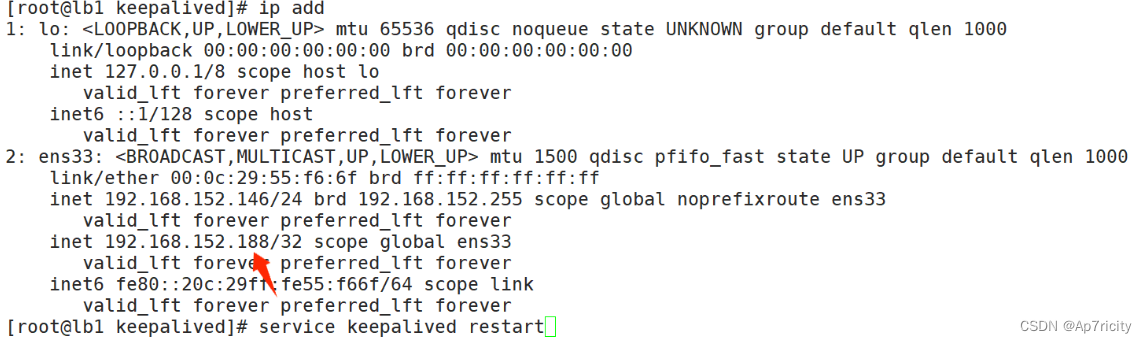

5.4,在2台lb负载均衡器上配置keepalived实现双vip配置

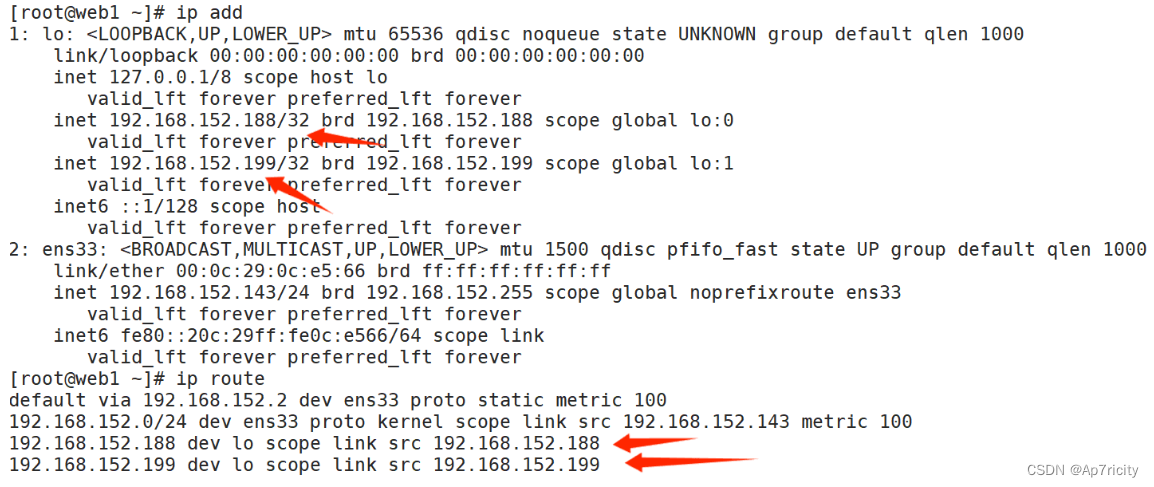

5.9,在后端的real server上进行配置,配置vip,用脚本实现

5.10,查看负载均衡的效果,在windows机器上访问负载均衡器的vip地址

6.1,在综合服务虚拟机安装nfs服务并启动nfs服务且设置开机启动,web1,和web2上也需要安装nfs去挂载共享目录

6.3,设置共享目录/web和复制web1上的html文件夹到综合服务器上来

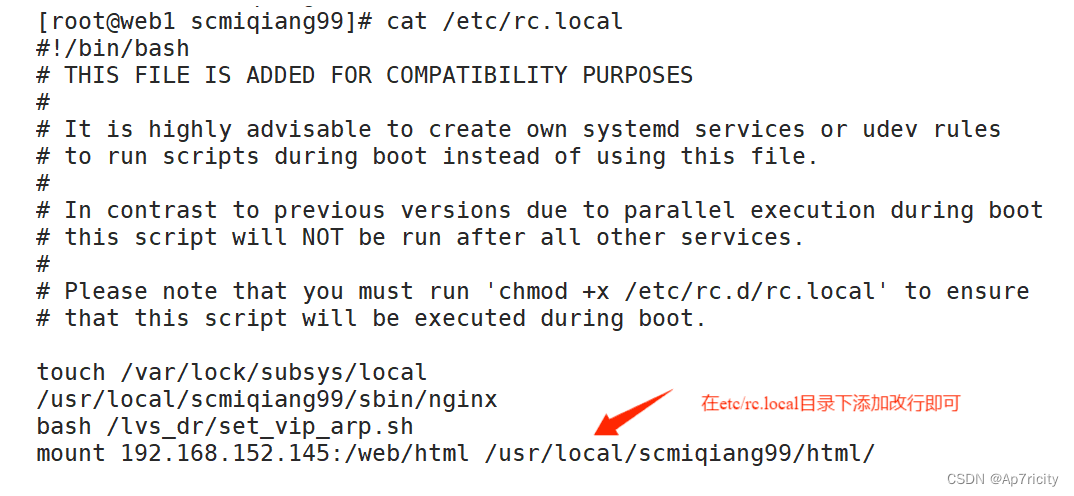

6.5,在web1和web2上都挂载综合服务器上共享的目录到html目录下

7.2,进入ansible的配置文件目录定义host主机清单

7.3,在ansible服务器和其他服务器之间建立单向信任关系的免密通道

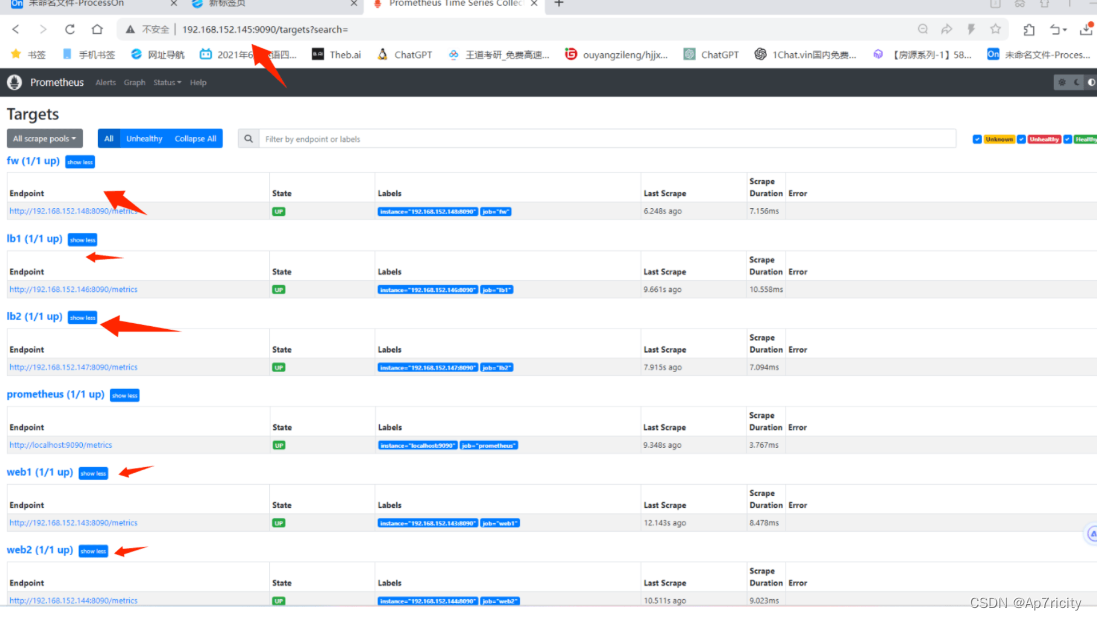

8.4,把prometheus做成一个服务来管理,方便维护和使用,并启动prometheus

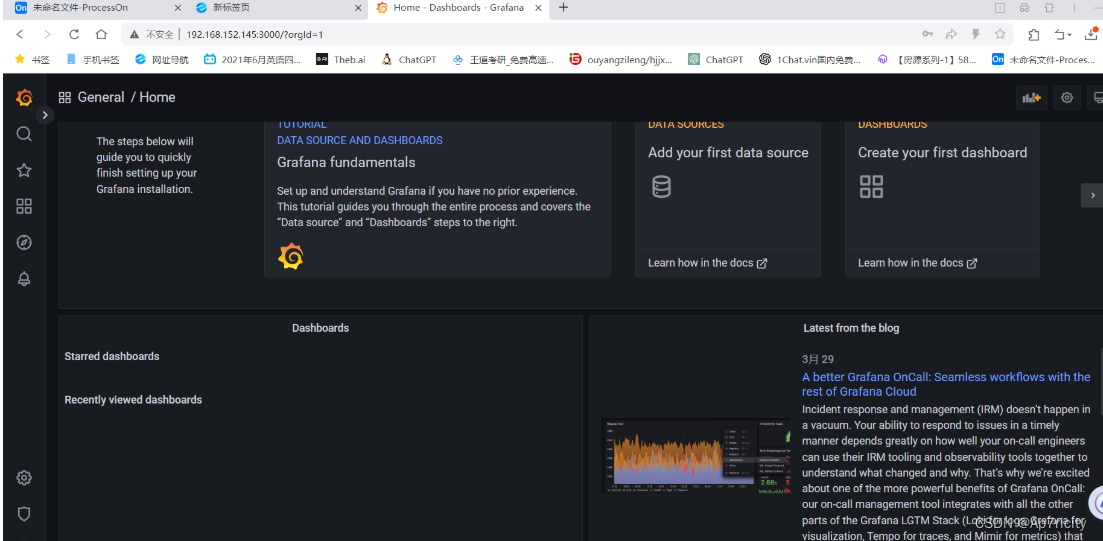

8.9,安装grafana出图软件,启动grafana并设置开机启动

8.11,登录,在浏览器里登录 http://192.168.152.145:3000 默认的用户名和密码是 用户名admin 密码admin

9.1,将web集群里的web1和web2,lb1和lb2上进行tcp wrappers 的配置,只允许堡垒机ssh过来,其他机器的访问拒绝

9.3,在防火墙服务器上编写脚本,实现snat和dnat功能,并且开启路由功能

9.5,将整个web集群里的服务器的网关设置为防火墙服务器的LAN口的ip

一.项目介绍:

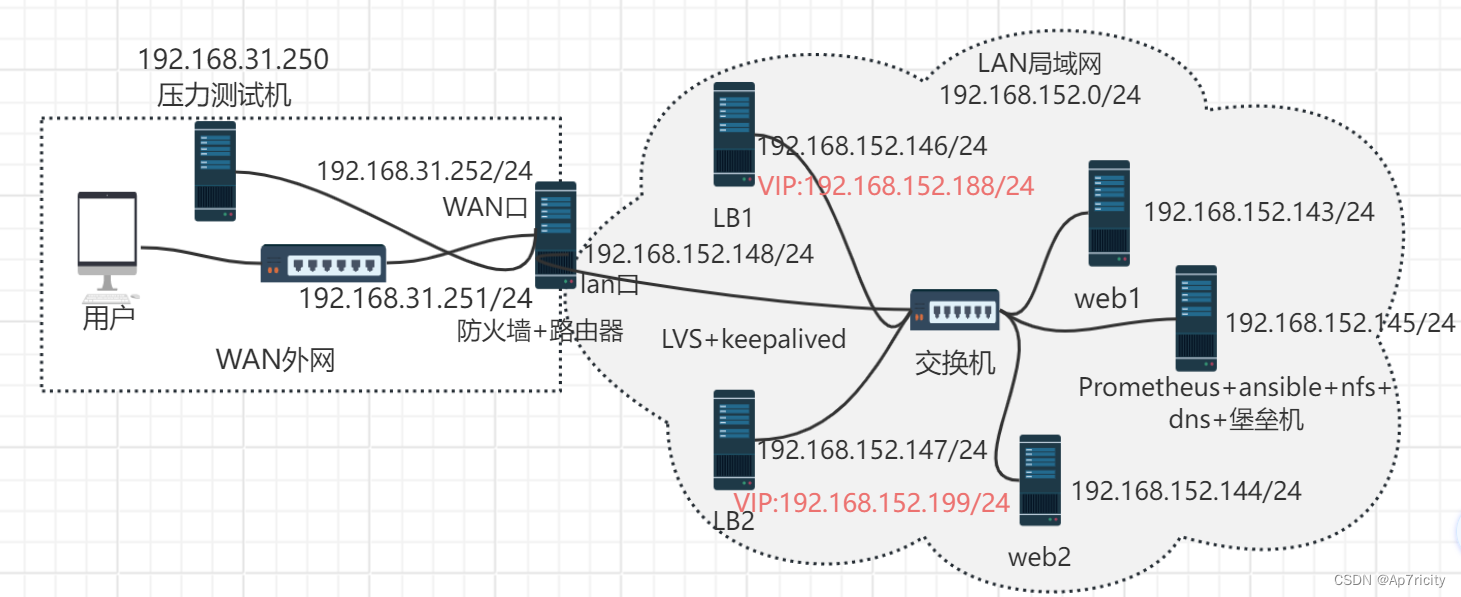

1.拓扑图

2,详细介绍

2,详细介绍

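Project name: a dual-VIP, highly available web cluster based on LVS + keepalived + nginx

Project environment: CentOS 7.9, Prometheus 2.43, keepalived 1.35, Grafana, nginx 1.25.2, etc.

Project description: this project simulates the requirements of an enterprise web project. The goal is a highly available, high-performance web cluster: LVS provides load balancing, keepalived provides high availability, and nginx serves the web content on the back end. An internal Prometheus monitoring system is deployed alongside, and ansible is used to automate operations across the whole cluster.

Project steps:

1. Plan the topology of the whole project, draw the topology diagram with a drawing tool, and plan the IP addresses.

2. Install 6 Linux virtual machines, initialize their networking (static IP addresses), set their hostnames, and deploy the nginx web service on two of them.

3. Deploy nginx on the first web server by compiling it from source, and write a one-click nginx installation script so that nginx can be installed on the second web server by running it.

4. Use name-based virtual hosts to configure two virtual hosts, one for each of the 2 websites.

5. Install the vts module (virtual host traffic status) on both web servers to monitor nginx traffic and see how the servers are being accessed, that is, their load.

6. Configure LVS (DR mode) + keepalived to give the cluster high availability and load balancing.

7. Install an NFS server to keep the data on the web servers consistent.

8. Install ansible, define the host inventory, and set up passwordless SSH to the other servers for batch automation.

9. Install Prometheus, install node_exporter on every node in the cluster, then install Grafana to visualize the monitoring data.

10. Deploy a firewall server to publish the internal web servers and the bastion host.

11. Run an ab stress test.

Project takeaways:

1. I gained some understanding of high performance and high availability, and of system performance metrics.

2. I gained an understanding of monitoring. Monitoring is a foundation of operations work: it exposes problems promptly so that they can be fixed.

3. I formed an overall picture of where the cluster's bottlenecks are under stress testing, and recognized the importance of system resources for performance.

4. I gained some understanding of LVS load balancing (layer-4 load balancing).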

II. Preparation

1. IP address plan

| Hostname | IP address |
| --- | --- |
| web1 | 192.168.152.143 |
| web2 | 192.168.152.144 |
| Prometheus + ansible + NFS + DNS + bastion host | 192.168.152.145 |
| LB1 | 192.168.152.146 |
| LB2 | 192.168.152.147 |
| Firewall + router | 192.168.152.148 |
| Stress-test machine | 192.168.31.250 |

2. Configuration preparation

Change the hostname, and disable the firewall and SELinux on all 7 servers.

2.1 Change the hostname

[root@localhost ~]# hostnamectl set-hostname web1

[root@localhost ~]# su

2.2 Disable firewalld

[root@web1 network-scripts]# service firewalld stop

Redirecting to /bin/systemctl stop firewalld.service

[root@web1 network-scripts]# systemctl disable firewalld

2.3 Disable SELinux

[root@web1 network-scripts]# setenforce 0

[root@web1 network-scripts]# vi /etc/selinux/config

# Change SELINUX=permissive to SELINUX=disabled

III. Project steps

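1. Initial network configuration

1.1 Configuration on the 5 virtual machines other than the firewall and the stress-test machine

1.1 Configure a static IP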

[root@web1 ~]# cd /etc/sysconfig/network-scripts/

[root@web1 network-scripts]# vi ifcfg-ens33

[root@web1 network-scripts]# cat ifcfg-ens33

BOOTPROTO="none"

NAME="ens33"

UUID="ed6e7033-6d6c-407f-83db-4c73195969ec"

DEVICE="ens33"

ONBOOT="yes"

IPADDR=192.168.152.143

PREFIX=24

GATEWAY=192.168.152.2

DNS1=114.114.114.114

1.2 Restart the network service

[root@web1 network-scripts]# service network restart

Restarting network (via systemctl): [ OK ]

1.2 Configuration of the firewall server

[root@web-firewalld network-scripts]# cat ifcfg-ens33

BOOTPROTO="none"

NAME="ens33"

UUID="ed6e7033-6d6c-407f-83db-4c73195969ec"

DEVICE="ens33"

ONBOOT="yes"

IPADDR=192.168.152.148

PREFIX=24

#GATEWAY=192.168.152.2

#DNS1=114.114.114.114

[root@web-firewalld network-scripts]# init 0

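Note: after shutting down, add a second network adapter, because the firewall server needs two NICs: one LAN port and one WAN port.

The LAN port uses NAT mode.

The WAN port uses bridged mode.

1.3 Assign a fixed IP address to the WAN port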

[root@web-firewalld ~]# cd /etc/sysconfig/network-scripts/

[root@web-firewalld network-scripts]# cp ifcfg-ens33 ifcfg-ens36

[root@web-firewalld network-scripts]# vi ifcfg-ens36

[root@web-firewalld network-scripts]# cat ifcfg-ens36

BOOTPROTO="none"

NAME="ens36"

DEVICE="ens36"

ONBOOT="yes"

IPADDR=192.168.31.252

PREFIX=24

GATEWAY=192.168.31.1

DNS1=114.114.114.114

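Because the WAN port uses bridged mode, its address must be in the same subnet as the router of the local environment. Here the subnet is 192.168.31.0/24 and the gateway is 192.168.31.1.

# Restart the network service again

[root@web-firewalld network-scripts]# service network restart

Restarting network (via systemctl): [ OK ]

Ping Baidu to check that the firewall server can reach the Internet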

[root@web-firewalld network-scripts]# ping www.baidu.com

PING www.a.shifen.com (183.2.172.42) 56(84) bytes of data.

64 bytes from 183.2.172.42 (183.2.172.42): icmp_seq=1 ttl=52 time=24.2 ms

64 bytes from 183.2.172.42 (183.2.172.42): icmp_seq=3 ttl=52 time=265 ms

^Z

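The output above shows that the firewall server can reach the Internet.

2. Compile and install nginx on the web servers

2.1 Install on web1 first, record the steps, and turn them into a one-click nginx installation script

[root@web1 ~]# mkdir nginx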

[root@web1 ~]# cd nginx

[root@web1 nginx]# ls

[root@web1 nginx]#

# Install the build tools and dependencies

[root@web1 nginx]# yum install epel-release -y

[root@web1 nginx]# yum install zlib zlib-devel openssl openssl-devel pcre pcre-devel gcc gcc-c++ autoconf automake make psmisc net-tools lsof vim wget -y

# Download the nginx source package

[root@web1 nginx]# curl -O http://nginx.org/download/nginx-1.25.2.tar.gz

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 1186k 100 1186k 0 0 577k 0 0:00:02 0:00:02 --:--:-- 577k

[root@web1 nginx]# ls

nginx-1.25.2.tar.gz

# Extract the source package

[root@web1 nginx]# tar xf nginx-1.25.2.tar.gz

[root@web1 nginx]# ls

nginx-1.25.2 nginx-1.25.2.tar.gz

# Enter the extracted source directory

[root@web1 nginx]# cd nginx-1.25.2

[root@web1 nginx-1.25.2]#

# Configure the build

[root@web1 nginx-1.25.2]# ./configure --prefix=/usr/local/scmiqiang99 --user=lilin --group=lilin --with-http_ssl_module --with-threads --with-http_v2_module --with-http_stub_status_module --with-stream --with-http_gunzip_module

# Compile

[root@web1 nginx-1.25.2]# make -j 2

# Install

[root@web1 nginx-1.25.2]# make install

# After nginx is compiled and installed, enter the installation directory

[root@web1 nginx-1.25.2]# cd /usr/local/scmiqiang99/

[root@web1 scmiqiang99]# ls

conf html logs sbin

# Update PATH to include the directory holding the compiled nginx binary

[root@web1 scmiqiang99]# PATH=/usr/local/scmiqiang99/sbin/:$PATH

[root@web1 scmiqiang99]# echo 'PATH=/usr/local/scmiqiang99/sbin/:$PATH' >>/etc/profile

# Look up the path of nginx to verify the PATH change; if it is found, the change worked

[root@web1 scmiqiang99]# su

[root@web1 scmiqiang99]# which nginx

/usr/local/scmiqiang99/sbin/nginx

# Create the user lilin, matching the --user value passed to configure

[root@web1 scmiqiang99]# useradd lilin

# Start nginx

[root@web1 scmiqiang99]# nginx

# Check that nginx started: the second and third lines below (the master and worker processes) indicate success

[root@web1 scmiqiang99]# ps aux|grep nginx

root 15732 0.0 0.1 126420 1820 pts/1 S+ 17:47 0:00 vi onekey_install_nginx_2024.sh

root 19041 0.0 0.1 46236 1168 ? Ss 19:58 0:00 nginx: master process nginx

lilin 19042 0.0 0.1 46696 1920 ? S 19:58 0:00 nginx: worker process

root 19044 0.0 0.0 112824 976 pts/0 R+ 19:58 0:00 grep --color=auto nginx

# Start nginx at boot

[root@web1 scmiqiang99]# echo "/usr/local/scmiqiang99/sbin/nginx" >>/etc/rc.local

[root@web1 scmiqiang99]# chmod +x /etc/rc.d/rc.local

# Copy the finished nginx installation script to web2 with scp, then verify that the script works

[root@web1 nginx]# ls

nginx-1.25.2 nginx-1.25.2.tar.gz onekey_install_nginx_2024.sh

[root@web1 nginx]# scp onekey_install_nginx_2024.sh 192.168.152.144:/root

2.2 Script contents

#!/bin/bash

# Install the required packages

yum install epel-release -y

yum install zlib zlib-devel openssl openssl-devel pcre pcre-devel gcc gcc-c++ autoconf automake make psmisc net-tools lsof vim wget -y

# Create the miqiang user if it does not exist yet

id miqiang || useradd miqiang

# Create the /nginx directory (-p: no error if it already exists)

mkdir -p /nginx

cd /nginx

# Download the nginx source package

curl -O http://nginx.org/download/nginx-1.25.2.tar.gz

# Extract the source package

tar xf nginx-1.25.2.tar.gz

# Enter the extracted directory

cd nginx-1.25.2

# Configure the build

./configure --prefix=/usr/local/scmiqiang99 --user=miqiang --group=miqiang --with-http_ssl_module --with-threads --with-http_v2_module --with-http_stub_status_module --with-stream --with-http_gunzip_module

if (( $? != 0 ));then

echo "configure failed; a required dependency is probably missing"

exit 1

fi

# Compile

make -j 2

# Install

make install

# Update PATH

PATH=/usr/local/scmiqiang99/sbin/:$PATH

echo 'PATH=/usr/local/scmiqiang99/sbin/:$PATH' >>/etc/profile

# Start nginx

nginx

# Start nginx at boot

echo "/usr/local/scmiqiang99/sbin/nginx" >>/etc/rc.local

chmod +x /etc/rc.d/rc.local

2.3 Run the script on web2

[root@web2 ~]# ls

anaconda-ks.cfg onekey_install_nginx_2024.sh

Run the script in the current shell

[root@web2 ~]# source onekey_install_nginx_2024.sh

Check whether the nginx processes started: the first and second lines below indicate success

[root@web2 nginx-1.25.2]# ps aux|grep nginx

root 18994 0.0 0.1 46236 1164 ? Ss 20:19 0:00 nginx: master process nginx

miqiang 18995 0.0 0.1 46696 1916 ? S 20:19 0:00 nginx: worker process

root 18998 0.0 0.0 112824 972 pts/0 R+ 20:19 0:00 grep --color=auto nginx

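# This shows that the script is correct

2.4 Verify that nginx started successfully on both virtual machines

3. Configuring name-based virtual hosts

3.1 nginx.conf is configured as follows on both web1 and web2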

[root@web1 conf]# cat nginx.conf

#user nobody;

worker_processes 1;

#error_log logs/error.log;

error_log logs/error.log notice;

#error_log logs/error.log info;

#pid logs/nginx.pid;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log logs/access.log main;

sendfile on;

#tcp_nopush on;

#keepalive_timeout 0;

keepalive_timeout 65;

gzip on;

The key parts are the following two server blocks

# Configuration for the www.sc.com site

server {

listen 80;

server_name www.sc.com;

access_log logs/sc.com.access.log main;

location / {

root html/sc.com;

index index.html index.htm;

}

error_page 404 /404.html;

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

}

# Configuration for the www.feng.com site

server {

listen 80;

server_name www.feng.com;

access_log logs/feng.com.access.log main;

location / {

root html/feng.com;

index index.html index.htm;

}

error_page 404 /404.html;

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

}

}

3.2 Create the document directories for www.sc.com and www.feng.com, then create the index.html home page and the 404.html error page

[root@web1 html]# pwd

/usr/local/scmiqiang99/html

[root@web1 html]# ls

50x.html index.html

[root@web1 html]# mkdir sc.com feng.com

[root@web1 html]# ls

50x.html feng.com index.html sc.com

# Enter the sc.com directory and create index.html and 404.html

[root@web1 html]# cd sc.com

[root@web1 sc.com]# ls

[root@web1 sc.com]# vim index.html

[root@web1 sc.com]# vim 404.html

# Create the 50x.html error page

[root@web1 sc.com]# vim 50x.html

# Enter feng.com and create index.html, 404.html, and 50x.html

[root@web1 sc.com]# cd ..

[root@web1 html]# cd feng.com/

[root@web1 feng.com]# ls

[root@web1 feng.com]# vim index.html

[root@web1 feng.com]# vim 404.html

[root@web1 feng.com]# vim 50x.html

# First check the modified nginx.conf for errors

[root@web1 feng.com]# nginx -t

nginx: the configuration file /usr/local/scmiqiang99/conf/nginx.conf syntax is ok

nginx: configuration file /usr/local/scmiqiang99/conf/nginx.conf test is successful

# Reload nginx

[root@web1 feng.com]# nginx -s reload

3.3 Test access to web1 from another machine

Test from lb2

[root@lb2 ~]# vi /etc/hosts

[root@lb2 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

# Add the following records for name resolution

192.168.152.143 www.sc.com

192.168.152.143 www.feng.com

# Test results:

[root@lb2 ~]# curl www.feng.com

welcome to feng.com

[root@lb2 ~]# curl www.sc.com

welcom to sc.com

# Test the 404 error page

[root@lb2 ~]# curl www.feng.com/lihao

404 error to feng.com

[root@lb2 ~]# curl www.sc.com/lihao

404 error changsha sc.com
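Editing /etc/hosts on the test machine works, but the same check can be made without touching that file. The following is a minimal sketch, assuming curl is installed on the test host: its --resolve option pins a hostname to a given IP for a single request. The helper name vhost_check_cmd is hypothetical, and it only prints the command so that it can be reviewed before being run.

```shell
#!/bin/bash
# Hypothetical helper: print a curl command that requests a virtual host
# from a specific back-end IP without editing /etc/hosts.
vhost_check_cmd() {
    local host="$1" ip="$2" path="${3:-/}"
    # --resolve HOST:PORT:ADDR makes curl use ADDR for HOST on that port
    printf 'curl -s --resolve %s:80:%s http://%s%s\n' "$host" "$ip" "$host" "$path"
}

# Print the checks for both sites on web1 (192.168.152.143 in the IP plan)
for site in www.sc.com www.feng.com; do
    vhost_check_cmd "$site" 192.168.152.143
done
```

Piping the printed lines to bash runs the requests; passing a third argument such as /lihao exercises the 404 page.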

3.4 Copy the index.html, 404.html, and 50x.html files created on web1 under sc.com and feng.com to the web2 server

[root@web1 scmiqiang99]# scp -r html/* 192.168.152.144:/usr/local/scmiqiang99/html/

3.5 Go back to the web2 server and reload nginx

[root@web2 conf]# nginx -t

nginx: the configuration file /usr/local/scmiqiang99/conf/nginx.conf syntax is ok

nginx: configuration file /usr/local/scmiqiang99/conf/nginx.conf test is successful

[root@web2 conf]# nginx -s reload

3.6 Test web2 from the lb1 server

[root@lb1 ~]# vi /etc/hosts

[root@lb1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.152.144 www.sc.com

192.168.152.144 www.feng.com

Access test

[root@lb1 ~]# curl www.sc.com

welcom to sc.com

[root@lb1 ~]# curl www.feng.com

welcome to feng.com

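Error-page test

[root@lb1 ~]# curl www.feng.com/hello

404 error to feng.com

[root@lb1 ~]# curl www.sc.com/hello

404 error changsha sc.com

4. Install the VTS module to monitor nginx traffic

Perform the following steps on both web1 and web2

4.1 Upload nginx-module-vts-master.zip to the /nginx directory with Xftp

4.2 Preparation before extracting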

[root@web2 nginx]# pwd

/nginx

[root@web2 nginx]# ls

nginx-1.25.2 nginx-1.25.2.tar.gz nginx-module-vts-master.zip

# Remove the old nginx source directory

[root@web2 nginx]# rm -rf nginx-1.25.2

[root@web2 nginx]# ls

nginx-1.25.2.tar.gz nginx-module-vts-master.zip

# Extract a fresh copy of the nginx source

[root@web2 nginx]# tar xf nginx-1.25.2.tar.gz

[root@web2 nginx]# ls

nginx-1.25.2 nginx-1.25.2.tar.gz nginx-module-vts-master.zip

4.3 Extract the module archive

[root@web2 nginx]# unzip nginx-module-vts-master.zip

[root@web2 nginx]# ls

nginx-1.25.2.tar.gz nginx-module-vts-master nginx-module-vts-master.zip

4.4 Build a new nginx binary that includes the VTS module

# Configure the build

[root@web2 nginx-1.25.2]# ./configure --prefix=/usr/local/scmiqiang99 --user=miqiang --group=miqiang --with-http_ssl_module --with-threads --with-http_v2_module --with-http_stub_status_module --with-stream --with-http_gunzip_module --add-module=/nginx/nginx-module-vts-master

# Compile

[root@web2 nginx-1.25.2]# make -j 2

After configure, run make only. Because nginx is already installed, do not run make install here, otherwise nginx would be installed again over the existing installation. Instead, copy the newly built nginx binary to /usr/local/scmiqiang99/sbin and switch to it with a hot upgrade.

4.5 Hot upgrade:

# Rename the original binary to nginx.old

[root@web2 objs]# cd /usr/local/scmiqiang99/sbin/

[root@web2 sbin]# ls

nginx

[root@web2 sbin]# mv nginx nginx.old

# Then copy the new nginx binary into place

[root@web2 sbin]# cd /nginx/nginx-1.25.2/objs/

[root@web2 objs]# ls

addon Makefile nginx.8 ngx_auto_headers.h ngx_modules.o

autoconf.err nginx ngx_auto_config.h ngx_modules.c src

[root@web2 objs]# cp nginx /usr/local/scmiqiang99/sbin/

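# Send signal 12 (SIGUSR2) to the old nginx master process so that it starts a new master from the new binary; old and new processes then run side by side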

[root@web2 objs]# ps aux|grep nginx

root 946 0.0 0.2 47020 2180 ? Ss 14:49 0:00 nginx: master process /usr/local/scmiqiang99/sbin/nginx

miqiang 4616 0.0 0.2 47020 2356 ? S 20:24 0:00 nginx: worker process

root 8162 0.0 0.0 112824 976 pts/0 R+ 21:44 0:00 grep --color=auto nginx

[root@web2 objs]# kill -12 946

[root@web2 objs]# ps aux|grep nginx

root 946 0.0 0.2 47020 2180 ? Ss 14:49 0:00 nginx: master process /usr/local/scmiqiang99/sbin/nginx

miqiang 4616 0.0 0.2 47020 2356 ? S 20:24 0:00 nginx: worker process

root 8163 0.0 0.3 46504 3528 ? S 21:44 0:00 nginx: master process /usr/local/scmiqiang99/sbin/nginx

miqiang 8164 0.0 0.2 46952 2036 ? S 21:44 0:00 nginx: worker process

root 8166 0.0 0.0 112824 972 pts/0 R+ 21:44 0:00 grep --color=auto nginx

# Send signal 3 (SIGQUIT) to the old master to shut down the old master and worker processes

[root@web2 objs]# kill -3 946

[root@web2 objs]# ps aux|grep nginx

root 8163 0.0 0.3 46504 3528 ? S 21:44 0:00 nginx: master process /usr/local/scmiqiang99/sbin/nginx

miqiang 8164 0.0 0.2 46952 2036 ? S 21:44 0:00 nginx: worker process

root 8169 0.0 0.0 112824 976 pts/0 R+ 21:48 0:00 grep --color=auto nginx
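The two kill commands above can also be written with signal names, which reads more clearly than the numbers 12 and 3. The following is a sketch only: the run and hot_upgrade helpers and the DRY_RUN switch are hypothetical names introduced for illustration, and with the default DRY_RUN=1 the commands are printed rather than executed.

```shell
#!/bin/bash
# Sketch of the hot-upgrade sequence using signal names instead of numbers.
# With DRY_RUN=1 (the default here) the commands are only printed.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

hot_upgrade() {
    local old_master_pid="$1"
    # USR2: the old master starts a new master from the replaced binary
    run kill -USR2 "$old_master_pid"
    # QUIT: the old master and its workers shut down gracefully
    run kill -QUIT "$old_master_pid"
}

# 946 is the old master PID from the ps output above
hot_upgrade 946
```

In a real run it is worth checking with ps that the new master and worker are up between the two signals, as the transcript above does.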

4.6 Check the build options of the new nginx to confirm that the vts module is present

[root@web2 objs]# nginx -V

nginx version: nginx/1.25.2

built by gcc 4.8.5 20150623 (Red Hat 4.8.5-44) (GCC)

built with OpenSSL 1.0.2k-fips 26 Jan 2017

TLS SNI support enabled

configure arguments: --prefix=/usr/local/scmiqiang99 --user=miqiang --group=miqiang --with-http_ssl_module --with-threads --with-http_v2_module --with-http_stub_status_module --with-stream --with-http_gunzip_module --add-module=/nginx/nginx-module-vts-master

The presence of --add-module=/nginx/nginx-module-vts-master shows that the vts module is built in.

4.7 Add the VTS configuration to the main nginx.conf file, then reload the service

[root@web2 scmiqiang99]# cd conf

[root@web2 conf]# vim nginx.conf

[root@web2 conf]# nginx -t

nginx: the configuration file /usr/local/scmiqiang99/conf/nginx.conf syntax is ok

nginx: configuration file /usr/local/scmiqiang99/conf/nginx.conf test is successful

[root@web2 conf]# nginx -s reload

Changes to the http block:

Changes to the server blocks (add the following to both sites):
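The actual configuration lines are not reproduced in this write-up. As an assumption, a typical nginx-module-vts setup declares a shared zone in the http block and a display location in each server block; the /status path matches the URL used for verification in step 4.8. The sketch below only prints those fragments; the function names are hypothetical.

```shell
#!/bin/bash
# Print the nginx-module-vts directives that are typically added.
# This is an assumed configuration, not the author's actual file.
vts_http_snippet() {
    cat <<'EOF'
# inside the http { } block
vhost_traffic_status_zone;
EOF
}

vts_server_snippet() {
    cat <<'EOF'
# inside each server { } block
location /status {
    vhost_traffic_status_display;
    vhost_traffic_status_display_format html;
}
EOF
}

vts_http_snippet
vts_server_snippet
```

After pasting the fragments into nginx.conf, nginx -t followed by nginx -s reload applies them, as in the transcript above.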


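4.8 Verify: browse to www.sc.com/status from a Windows machine; both sites show traffic statistics

5. Implementing LVS + keepalived

5.1 ipvsadm is the command-line tool used to pass rules to LVS

# Install the ipvsadm package on the lb1 load balancer

[root@lb1 conf]# yum install ipvsadm -y

# Check that it works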

[root@lb1 conf]# ipvsadm -l

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

# Install the ipvsadm package on the lb2 load balancer

[root@lb2 network-scripts]# yum install ipvsadm -y

5.2 Install the keepalived package on the lb1 and lb2 load balancers and enable it at boot

[root@lb1 ~]# yum install keepalived -y

[root@lb1 keepalived]# systemctl enable keepalived

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

[root@lb2 ~]# yum install keepalived -y

[root@lb2 keepalived]# systemctl enable keepalived

Created symlink from /etc/systemd/system/multi-user.target.wants/keepalived.service to /usr/lib/systemd/system/keepalived.service.

5.3 Configure the IP address and gateway (192.168.152.2) on the internal lb1 and lb2

# On the lb1 load balancer:

[root@lb1 network-scripts]# cat ifcfg-ens33

BOOTPROTO="none"

NAME="ens33"

DEVICE="ens33"

ONBOOT="yes"

IPADDR=192.168.152.146

PREFIX=24

GATEWAY=192.168.152.2

DNS1=114.114.114.114

[root@lb1 network-scripts]# ip route

default via 192.168.152.2 dev ens33 proto static metric 100

192.168.152.0/24 dev ens33 proto kernel scope link src 192.168.152.146 metric 100

[root@lb1 network-scripts]#

# On the lb2 load balancer:

[root@lb2 network-scripts]# cat ifcfg-ens33

BOOTPROTO="none"

NAME="ens33"

DEVICE="ens33"

ONBOOT="yes"

IPADDR=192.168.152.147

PREFIX=24

GATEWAY=192.168.152.2

DNS1=114.114.114.114

[root@lb2 network-scripts]# ip route

default via 192.168.152.2 dev ens33 proto static metric 100

192.168.152.0/24 dev ens33 proto kernel scope link src 192.168.152.147 metric 100

5.4 Configure keepalived on both load balancers for a dual-VIP setup

On lb1:

[root@lb1 keepalived]# pwd

/etc/keepalived

[root@lb1 keepalived]# cat keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

# vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 88

priority 120

advert_int 1

authentication {

auth_type PASS

auth_pass 111123

}

virtual_ipaddress {

192.168.152.188

}

}

vrrp_instance VI_2 {

state BACKUP

interface ens33

virtual_router_id 90

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 111123

}

virtual_ipaddress {

192.168.152.199

}

}

[root@lb1 keepalived]# service keepalived restart

On lb2:

[root@lb2 keepalived]# pwd

/etc/keepalived

[root@lb2 keepalived]# cat keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc

smtp_server 192.168.200.1

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

# vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 88

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 111123

}

virtual_ipaddress {

192.168.152.188

}

}

vrrp_instance VI_2 {

state MASTER

interface ens33

virtual_router_id 90

priority 120

advert_int 1

authentication {

auth_type PASS

auth_pass 111123

}

virtual_ipaddress {

192.168.152.199

}

}

[root@lb2 keepalived]# service keepalived restart

5.5 Check the VIPs

lb1:

lb2:

5.6 Test VIP failover

Stop keepalived on lb2 to simulate a failure of the master

[root@lb2 keepalived]# service keepalived stop

Redirecting to /bin/systemctl stop keepalived.service

Then check the VIPs on lb1: the VIP has moved over successfully.

5.7 Configure the load-balancing policy on the DR directors

Run the following script on both lb1 and lb2:

[root@lb1 keepalived]# cat lvs_dr.sh

#!/bin/bash

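# Enable IP forwarding on the director

echo 1 > /proc/sys/net/ipv4/ip_forward

# Flush all rules in the nat table

iptables -F -t nat

# Delete all user-defined chains in the nat table

iptables -X -t nat

# Clear the existing LVS rules

/usr/sbin/ipvsadm -C

# Add the LVS virtual services

/usr/sbin/ipvsadm -A -t 192.168.152.188:80 -s rr

/usr/sbin/ipvsadm -A -t 192.168.152.199:80 -s rr

# -g selects DR mode; -w sets the real server's weight to 1; -r specifies the back-end real server; -t specifies the VIP; -a adds a real server to a virtual service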

/usr/sbin/ipvsadm -a -t 192.168.152.188:80 -r 192.168.152.143:80 -g -w 1

/usr/sbin/ipvsadm -a -t 192.168.152.188:80 -r 192.168.152.144:80 -g -w 1

/usr/sbin/ipvsadm -a -t 192.168.152.199:80 -r 192.168.152.143:80 -g -w 1

/usr/sbin/ipvsadm -a -t 192.168.152.199:80 -r 192.168.152.144:80 -g -w 1
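The six ipvsadm lines above follow a regular pattern: one virtual service per VIP, and every real server added to each of them. A minimal sketch of generating the same rules from two lists is shown below; the variable and function names are hypothetical, and the commands are printed rather than executed so that they can be reviewed first.

```shell
#!/bin/bash
# Generate the same ipvsadm rules from two lists instead of repeating lines.
# The commands are printed, not executed, so they can be reviewed first.
VIPS="192.168.152.188 192.168.152.199"
REAL_SERVERS="192.168.152.143 192.168.152.144"

gen_lvs_rules() {
    local vip rs
    for vip in $VIPS; do
        # -A adds a virtual service, -s rr selects round-robin scheduling
        echo "/usr/sbin/ipvsadm -A -t ${vip}:80 -s rr"
        for rs in $REAL_SERVERS; do
            # -a adds a real server, -g selects DR mode, -w sets the weight
            echo "/usr/sbin/ipvsadm -a -t ${vip}:80 -r ${rs}:80 -g -w 1"
        done
    done
}

gen_lvs_rules
```

Adding a third web server then only requires extending REAL_SERVERS; piping the output to bash as root applies the rules.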

# Save the ipvs rules on both lb1 and lb2 so that they can be loaded automatically at boot

[root@lb1 keepalived]# ipvsadm -S >/etc/sysconfig/ipvsadm

[root@lb2 keepalived]# ipvsadm -S >/etc/sysconfig/ipvsadm

# Enable the ipvsadm service at boot on both lb1 and lb2; it loads the rules saved above

[root@lb1 keepalived]# systemctl enable ipvsadm

Created symlink from /etc/systemd/system/multi-user.target.wants/ipvsadm.service to /usr/lib/systemd/system/ipvsadm.service.

[root@lb2 keepalived]# systemctl enable ipvsadm

Created symlink from /etc/systemd/system/multi-user.target.wants/ipvsadm.service to /usr/lib/systemd/system/ipvsadm.service.

5.8 Check the result

lb1:

[root@lb1 keepalived]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.152.188:80 rr

-> 192.168.152.143:80 Route 1 0 0

-> 192.168.152.144:80 Route 1 0 0

TCP 192.168.152.199:80 rr

-> 192.168.152.143:80 Route 1 0 0

-> 192.168.152.144:80 Route 1 0 0

lb2:

[root@lb2 keepalived]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.152.188:80 rr

-> 192.168.152.143:80 Route 1 0 0

-> 192.168.152.144:80 Route 1 0 0

TCP 192.168.152.199:80 rr

-> 192.168.152.143:80 Route 1 0 0

-> 192.168.152.144:80 Route 1 0 0

5.9 Configure the VIPs on the back-end real servers with a script

Put the following script on web1 and web2 and run it to configure the VIPs:

[root@web1 ~]# cat set_vip_arp.sh

#!/bin/bash

# Configure the VIPs on the lo (loopback) interface

/usr/sbin/ifconfig lo:0 192.168.152.188 netmask 255.255.255.255 broadcast 192.168.152.188 up

/usr/sbin/ifconfig lo:1 192.168.152.199 netmask 255.255.255.255 broadcast 192.168.152.199 up

# Add host routes for 192.168.152.188 and 192.168.152.199 via lo:0 and lo:1

/sbin/route add -host 192.168.152.188 dev lo:0

/sbin/route add -host 192.168.152.199 dev lo:1
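The script is named set_vip_arp.sh, but the listing above contains no ARP settings. In LVS-DR setups the real servers usually also suppress ARP replies for the VIP so that only the director answers for it. The sketch below prints the sysctl commands commonly used for that; it is an assumption about what the full script may contain, not a copy of it, and the function name is hypothetical.

```shell
#!/bin/bash
# Print the ARP-related kernel settings commonly used on LVS-DR real servers.
# Assumption: these are not shown in the script above; review before applying.
gen_arp_settings() {
    local iface
    for iface in lo all; do
        # arp_ignore=1: reply only if the target IP is on the receiving interface
        echo "sysctl -w net.ipv4.conf.${iface}.arp_ignore=1"
        # arp_announce=2: always use the best local address as the ARP source
        echo "sysctl -w net.ipv4.conf.${iface}.arp_announce=2"
    done
}

gen_arp_settings
```

Because the VIPs sit on lo, arp_ignore=1 keeps the real servers from answering ARP requests for them on ens33.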

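After running it, check the IP addresses and routes:

5.10 Check the load-balancing result by browsing to the load balancers' VIP addresses from a Windows machine

6. Configuring and using the NFS server

6.1 Install and start the NFS service on the general-services VM and enable it at boot; web1 and web2 also need the NFS package in order to mount the shared directory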

[root@service ~]# yum install nfs-utils -y

[root@service ~]# service nfs restart

Redirecting to /bin/systemctl restart nfs.service

[root@service ~]# systemctl enable nfs

Created symlink from /etc/systemd/system/multi-user.target.wants/nfs-server.service to /usr/lib/systemd/system/nfs-server.service.

Install NFS on web1 and web2

[root@web1 ~]# yum install nfs-utils -y

[root@web2 ~]# yum install nfs-utils -y

6.2 Define the shared directory

On the general-services server:

[root@service ~]# vim /etc/exports

[root@service ~]# cat /etc/exports

/web/html 192.168.152.0/24(rw,sync,all_squash)

# Re-export the shared directories

[root@service ~]# exportfs -rv

exporting 192.168.152.0/24:/web/html

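exportfs: Failed to stat /web: No such file or directory

6.3 Create the shared directory /web and copy the html directory from web1 to the general-services server

On web1, scp the html directory under /usr/local/scmiqiang99/ to /web on the general-services server

# On the general-services server: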

[root@service ~]# mkdir /web

[root@service ~]# cd /web

# On web1:

[root@web1 ~]# cd /usr/local/scmiqiang99/

[root@web1 scmiqiang99]# scp -r html 192.168.152.145:/web

Set the ownership of /web/html so that NFS clients can read and write it

[root@service web]# chown nfsnobody:nfsnobody html/

[root@service web]# ll

total 0

drwxr-xr-x 4 nfsnobody nfsnobody 70 Mar 31 20:13 html

6.4 Refresh NFS

[root@service web]# exportfs -rv

exporting 192.168.152.0/24:/web/html

[root@service web]# service nfs restart

Redirecting to /bin/systemctl restart nfs.service

6.5 On web1 and web2, mount the directory shared by the general-services server onto the html directory

#web1:

[root@web1 scmiqiang99]# mount 192.168.152.145:/web/html /usr/local/scmiqiang99/html/

#web2:

[root@web2 scmiqiang99]# mount 192.168.152.145:/web/html /usr/local/scmiqiang99/html/

6.6 Set up automatic mounting at boot on web1 and web2
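The commands for this step are not shown. One common way to mount an NFS share at boot is an /etc/fstab entry; the sketch below prints such a line for the share used above. The nfs_fstab_line helper is hypothetical, and the mount options (defaults,_netdev) are an assumption rather than something taken from the original setup.

```shell
#!/bin/bash
# Print an /etc/fstab line for the NFS share. _netdev delays the mount
# until the network is up. The options are an assumption, not from the source.
nfs_fstab_line() {
    local server="$1" export_dir="$2" mount_point="$3"
    printf '%s:%s %s nfs defaults,_netdev 0 0\n' "$server" "$export_dir" "$mount_point"
}

nfs_fstab_line 192.168.152.145 /web/html /usr/local/scmiqiang99/html
```

Appending the printed line to /etc/fstab on web1 and web2 and running mount -a is one way to check the entry before rebooting.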

7. Configuring and using the ansible server

7.1 Install ansible on the general-services server

[root@service /]# yum install epel-release -y

[root@service /]# yum install ansible -y

7.2 Go to the ansible configuration directory and define the hosts inventory

[root@service /]# cd /etc/ansible

[root@service ansible]# ls

ansible.cfg hosts roles

[root@service ansible]# vim hosts

Add the IP address of each server to the hosts file
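The inventory itself is not reproduced here. Later commands in this write-up target the groups web and lb, so the file must define at least those two. The sketch below prints one possible inventory in INI format using the addresses from the IP plan; the firewall group name is hypothetical, as is the gen_inventory helper.

```shell
#!/bin/bash
# Print an example Ansible inventory in INI format.
# Groups "web" and "lb" are used by later commands in this write-up;
# the "firewall" group name is hypothetical.
gen_inventory() {
    cat <<'EOF'
[web]
192.168.152.143
192.168.152.144

[lb]
192.168.152.146
192.168.152.147

[firewall]
192.168.152.148
EOF
}

gen_inventory
```

Grouping hosts this way lets a command such as ansible web -m ping address only the web servers.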

7.3 Set up one-way trust (passwordless SSH) from the ansible server to the other servers

# Generate a key pair

[root@service ansible]# ssh-keygen -t rsa

# Upload the public key to the other servers and verify that passwordless login works

# Copy to web1

[root@service ansible]# ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.152.143

# Test whether passwordless login works

[root@service ansible]# ssh 'root@192.168.152.143'

Last login: Sun Mar 31 19:38:21 2024 from 192.168.152.1

[root@web1 ~]# # The prompt shows the remote server's hostname, so the login succeeded

# Copy to web2

[root@service ansible]# ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.152.144

...

[root@service ansible]# ssh 'root@192.168.152.144'

Last login: Sun Mar 31 19:38:22 2024 from 192.168.152.1

[root@web2 ~]#

# Copy to lb1

[root@service ansible]# ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.152.146

...

[root@service ansible]# ssh 'root@192.168.152.146'

Last login: Sun Mar 31 19:38:17 2024 from 192.168.152.1

[root@lb1 ~]#

# Copy to lb2

[root@service ansible]# ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.152.147

...

[root@service ansible]# ssh 'root@192.168.152.147'

Last login: Sun Mar 31 19:38:21 2024 from 192.168.152.1

[root@lb2 ~]#

# Copy to the firewall server

[root@service ansible]# ssh-copy-id -i /root/.ssh/id_rsa.pub root@192.168.152.148

...

[root@service ansible]# ssh 'root@192.168.152.148'

Last login: Sun Mar 31 21:09:04 2024 from 192.168.152.1

[root@web-firewalld ~]#

8. Install Prometheus and the exporter

8.1 Upload the downloaded packages to the Linux server

8.2 Extract the package and rename the directory

[root@service prom]# tar -xf prometheus-2.43.0.linux-amd64.tar.gz

[root@service prom]# ls

grafana-enterprise-9.1.2-1.x86_64.rpm prometheus-2.43.0.linux-amd64

node_exporter-1.4.0-rc.0.linux-amd64.tar.gz prometheus-2.43.0.linux-amd64.tar.gz

[root@service prom]# mv prometheus-2.43.0.linux-amd64 prometheus

[root@service prom]# ls

grafana-enterprise-9.1.2-1.x86_64.rpm prometheus

node_exporter-1.4.0-rc.0.linux-amd64.tar.gz prometheus-2.43.0.linux-amd64.tar.gz

8.3 Update the PATH environment variable

[root@service prom]# PATH=/prom/prometheus:$PATH

[root@service prom]# echo 'PATH=/prom/prometheus:$PATH'

PATH=/prom/prometheus:$PATH

[root@service prom]# echo 'PATH=/prom/prometheus:$PATH' >>/etc/profile

# The following output shows that the change worked

[root@service prom]# which prometheus

/prom/prometheus/prometheus

8.4 Turn Prometheus into a systemd service so that it is easier to manage and maintain, then start it

[root@service prom]# vim /usr/lib/systemd/system/prometheus.service

[root@service prom]# cat /usr/lib/systemd/system/prometheus.service

[Unit]

Description=prometheus

[Service]

ExecStart=/prom/prometheus/prometheus --config.file=/prom/prometheus/prometheus.yml

ExecReload=/bin/kill -HUP $MAINPID

KillMode=process

Restart=on-failure

[Install]

WantedBy=multi-user.target

# Reload the systemd unit files

[root@service prom]# systemctl daemon-reload

# Start Prometheus

[root@service prom]# systemctl start prometheus

[root@service prom]# ps aux|grep prometheus

root 3093 0.6 2.1 798956 40896 ? Ssl 21:55 0:00 /prom/prometheus/prometheus --config.file=/prom/prometheus/prometheus.yml

root 3100 0.0 0.0 112824 976 pts/0 R+ 21:55 0:00 grep --color=auto prometheus

# Enable at boot

[root@service prom]# systemctl enable prometheus

Created symlink from /etc/systemd/system/multi-user.target.wants/prometheus.service to /usr/lib/systemd/system/prometheus.service.

8.5 Access the Prometheus web server

8.6 Install the exporter on the other machines

# Copy node_exporter to all servers

[root@service prom]# ansible all -m copy -a 'src=node_exporter-1.4.0-rc.0.linux-amd64.tar.gz dest=/root/'

# On the general-services server, write a script that installs node_exporter on the other machines

[root@service prom]# cat install_node_exporter.sh

#!/bin/bash

tar xf node_exporter-1.4.0-rc.0.linux-amd64.tar.gz -C /

cd /

mv node_exporter-1.4.0-rc.0.linux-amd64/ node_exporter

cd node_exporter/

PATH=/node_exporter/:$PATH

echo 'PATH=/node_exporter/:$PATH' >>/etc/profile

# Generate the node_exporter.service unit file (quoting 'EOF' keeps the shell from expanding $MAINPID)

cat >/usr/lib/systemd/system/node_exporter.service <<'EOF'

[Unit]

Description=node_exporter

[Service]

ExecStart=/node_exporter/node_exporter --web.listen-address 0.0.0.0:8090

ExecReload=/bin/kill -HUP $MAINPID

KillMode=process

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

# Enable at boot and start the service

systemctl enable node_exporter

systemctl start node_exporter

# Run the installation from ansible on the general-services server

[root@service prom]# ansible all -m script -a "/prom/install_node_exporter.sh"

8.7 Add the monitored servers on the Prometheus server
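The edited prometheus.yml is not shown. Monitored hosts are normally added as jobs under scrape_configs, each pointing at the exporter's address; node_exporter was started on port 8090 above. The sketch below prints such job entries. The job names and the gen_scrape_job helper are hypothetical, chosen only for illustration.

```shell
#!/bin/bash
# Print a scrape_configs job entry for prometheus.yml.
# Port 8090 matches the --web.listen-address used for node_exporter above;
# the job names are hypothetical.
gen_scrape_job() {
    local name="$1" target="$2"
    printf '  - job_name: "%s"\n' "$name"
    printf '    static_configs:\n'
    printf '      - targets: ["%s:8090"]\n' "$target"
}

gen_scrape_job web1 192.168.152.143
gen_scrape_job web2 192.168.152.144
gen_scrape_job lb1 192.168.152.146
gen_scrape_job lb2 192.168.152.147
```

The printed entries belong under the existing scrape_configs: key in /prom/prometheus/prometheus.yml, keeping the two-space indentation.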

8.8 Restart the Prometheus service and check the result

[root@service prometheus]# service prometheus restart

Redirecting to /bin/systemctl restart prometheus.service

8.9 Install Grafana for visualization, start it, and enable it at boot

[root@service prom]# yum install grafana-enterprise-9.1.2-1.x86_64.rpm -y

[root@service prom]# systemctl start grafana-server

[root@service prom]# systemctl enable grafana-server

Created symlink from /etc/systemd/system/multi-user.target.wants/grafana-server.service to /usr/lib/systemd/system/grafana-server.service.

8.10 Check whether Grafana is running

[root@service prom]# ps aux|grep grafana

grafana 2242 0.5 4.0 1194500 75736 ? Ssl 19:41 0:00 /usr/sbin/grafana-server --config=/etc/grafana/grafana.ini --pidfile=/var/run/grafana/grafana-server.pid --packaging=rpm cfg:default.paths.logs=/var/log/grafana cfg:default.paths.data=/var/lib/grafana cfg:default.paths.plugins=/var/lib/grafana/plugins cfg:default.paths.provisioning=/etc/grafana/provisioning

root 2271 0.0 0.0 112824 976 pts/0 R+ 19:43 0:00 grep --color=auto grafana

[root@service prom]# netstat -anplut|grep grafana

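tcp6 0 0 :::3000 :::* LISTEN 2242/grafana-server

8.11 Log in: open http://192.168.152.145:3000 in a browser. The default username is admin and the default password is admin.

9. Configuring the bastion host, firewall, and router

9.1 Configure TCP wrappers on web1, web2, lb1, and lb2 so that only the bastion host may connect over ssh and all other machines are denied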

[root@service prom]# cat set_tcp_wrappers.sh

#!/bin/bash

# Write /etc/hosts.allow so that sshd accepts the bastion host (192.168.152.145) and 192.168.152.1

echo 'sshd:192.168.152.145' >>/etc/hosts.allow

echo 'sshd:192.168.152.1' >>/etc/hosts.allow

# Deny sshd access from all other machines

echo 'sshd:ALL' >>/etc/hosts.deny

# Apply the TCP wrappers configuration to web1 and web2

[root@service prom]# ansible web -m script -a "/prom/set_tcp_wrappers.sh"

# Apply the TCP wrappers configuration to lb1 and lb2

[root@service prom]# ansible lb -m script -a "/prom/set_tcp_wrappers.sh"

9.2 Test whether the bastion host can still connect; it can

[root@service prom]# ssh root@192.168.152.143

Last login: Mon Apr 1 20:21:14 2024 from 192.168.152.145

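[root@web1 ~]# exit

9.3 On the firewall server, write a script that implements SNAT and DNAT, and enable routing

Enable routing

[root@web-firewalld ~]# vim /etc/sysctl.conf

# Add the following line

net.ipv4.ip_forward = 1

# Make the kernel load the setting to enable routing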

[root@web-firewalld ~]# sysctl -p

net.ipv4.ip_forward = 1

[root@web-firewalld ~]# cat set_fw_snat_dnat.sh

#!/bin/bash

# Enable routing

echo 1 >/proc/sys/net/ipv4/ip_forward

# Clear the existing firewall rules

iptables=/usr/sbin/iptables

$iptables -F

$iptables -t nat -F

# Set up SNAT

$iptables -t nat -A POSTROUTING -s 192.168.152.0/24 -o ens36 -j MASQUERADE

# Set up DNAT: two external IPs map to the 2 internal VIPs, which publishes the VIPs

$iptables -t nat -A PREROUTING -d 192.168.31.252 -i ens36 -p tcp --dport 80 -j DNAT --to-destination 192.168.152.188

$iptables -t nat -A PREROUTING -d 192.168.31.251 -i ens36 -p tcp --dport 80 -j DNAT --to-destination 192.168.152.199

# Publish the bastion host

$iptables -t nat -A PREROUTING -d 192.168.31.252 -i ens36 -p tcp --dport 2233 -j DNAT --to-destination 192.168.152.145:22

[root@web-firewalld ~]# bash set_fw_snat_dnat.sh

9.4 Check the result

[root@web-firewalld ~]# iptables -L -t nat -n

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

DNAT tcp -- 0.0.0.0/0 192.168.31.252 tcp dpt:80 to:192.168.152.188

DNAT tcp -- 0.0.0.0/0 192.168.31.251 tcp dpt:80 to:192.168.152.199

DNAT tcp -- 0.0.0.0/0 192.168.31.252 tcp dpt:2233 to:192.168.152.145:22

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

MASQUERADE all -- 192.168.152.0/24 0.0.0.0/0

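# Restore the rules at boot

[root@web-firewalld ~]# iptables-save >/etc/sysconfig/iptables_rules

[root@web-firewalld ~]# vim /etc/rc.local

# Add the following line

iptables-restore </etc/sysconfig/iptables_rules

[root@web-firewalld ~]# chmod +x /etc/rc.d/rc.local

9.5 Set the gateway of every server in the web cluster to the IP of the firewall server's LAN port

# web1 is used as the example; change every other machine (except the firewall server) in the same way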

vim /etc/sysconfig/network-scripts/ifcfg-ens33

[root@web1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33

BOOTPROTO="none"

NAME="ens33"

UUID="ed6e7033-6d6c-407f-83db-4c73195969ec"

DEVICE="ens33"

ONBOOT="yes"

IPADDR=192.168.152.143

PREFIX=24

GATEWAY=192.168.152.148

DNS1=114.114.114.114

[root@web1 ~]# service network restart

9.6 Check the routing table and test Internet access (SNAT)

[root@lb2 ~]# ping www.baidu.com

PING www.a.shifen.com (183.2.172.42) 56(84) bytes of data.

64 bytes from 183.2.172.42 (183.2.172.42): icmp_seq=1 ttl=51 time=22.5 ms

64 bytes from 183.2.172.42 (183.2.172.42): icmp_seq=2 ttl=51 time=20.8 ms

64 bytes from 183.2.172.42 (183.2.172.42): icmp_seq=3 ttl=51 time=21.3 ms

^Z

[1]+ Stopped ping www.baidu.com

[root@lb2 ~]# ip route

default via 192.168.152.148 dev ens33 proto static metric 100

192.168.152.0/24 dev ens33 proto kernel scope link src 192.168.152.147 metric 100

9.7 Configure two IP addresses on ens36 of the firewall

[root@web-firewalld network-scripts]# pwd

/etc/sysconfig/network-scripts

[root@web-firewalld network-scripts]# cat ifcfg-ens36

BOOTPROTO="none"

NAME="ens36"

DEVICE="ens36"

ONBOOT="yes"

IPADDR1=192.168.31.252

IPADDR2=192.168.31.251

PREFIX=24

GATEWAY=192.168.31.1

DNS1=114.114.114.114

[root@web-firewalld network-scripts]# service network restart

9.8 Test DNAT

From the outermost client machine, test whether DNAT publishes the internal web service

10. Stress test

10.1 Install ab and run the test

[root@sc-slave ~]# yum install httpd-tools -y

[root@sc-slave ~]# ab -n 10000 -c 1000 http://192.168.31.251/index.html

This is ApacheBench, Version 2.3 <$Revision: 1430300 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking 192.168.31.251 (be patient)

Completed 1000 requests

Completed 2000 requests

Completed 3000 requests

Completed 4000 requests

Completed 5000 requests

Completed 6000 requests

Completed 7000 requests

Completed 8000 requests

Completed 9000 requests

Completed 10000 requests

Finished 10000 requests

Server Software: nginx

Server Hostname: 192.168.31.251

Server Port: 80

Document Path: /index.html

Document Length: 21 bytes

Concurrency Level: 1000

Time taken for tests: 1.352 seconds

Complete requests: 10000

Failed requests: 128

(Connect: 0, Receive: 0, Length: 64, Exceptions: 64)

Write errors: 0

Total transferred: 2434320 bytes

HTML transferred: 208656 bytes

Requests per second: 7396.90 [#/sec] (mean)

Time per request: 135.192 [ms] (mean)

Time per request: 0.135 [ms] (mean, across all concurrent requests)

Transfer rate: 1758.44 [Kbytes/sec] received

Connection Times (ms)

min mean[+/-sd] median max

Connect: 0 78 262.3 0 1021

Processing: 8 27 38.3 15 420

Waiting: 0 27 38.3 15 419

Total: 13 105 288.4 16 1219

Percentage of the requests served within a certain time (ms)

50% 16

66% 16

75% 18

80% 34

90% 88

95% 1037

98% 1209

99% 1214

100% 1219 (longest request)
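The summary above reports 128 failed requests out of 10000. A short sketch for turning those two numbers into a failure percentage is shown below; the fail_rate helper is a hypothetical name, and awk is used only for the floating-point division.

```shell
#!/bin/bash
# Compute the failed-request percentage from ab's summary numbers.
fail_rate() {
    local failed="$1" total="$2"
    awk -v f="$failed" -v t="$total" 'BEGIN { printf "%.2f\n", f / t * 100 }'
}

# Values from the ab report above: prints 1.28
fail_rate 128 10000
```

At a concurrency of 1000, roughly 1.28% of the requests failed, which is consistent with the long tail in the percentile table (95% of requests took up to 1037 ms).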

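IV. Project summary

1. Problems encountered:

1. Split-brain occurred while setting up the dual VIPs.

2. With a single LVS load balancer, load balancing did not work.

3. LVS load balancing did not work at first.

2. Project takeaways:

1. I gained some understanding of high performance and high availability, and of system performance metrics.

2. I gained an understanding of monitoring. Monitoring is a foundation of operations work: it exposes problems promptly so that they can be fixed.

3. I gradually came to understand the concept of a cluster.

4. I formed an overall picture of where the cluster's bottlenecks are under stress testing.

5. I gained some understanding of LVS load balancing (layer-4 load balancing).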
