```scala
package org.apache.spark.rdd

import java.io.{IOException, ObjectOutputStream}

import scala.reflect.ClassTag

import org.apache.spark.{Partition, TaskContext}
import org.apache.spark.util.Utils

private[spark] class CoalescedRDD[T: ClassTag](
    @transient var prev: RDD[T],
    maxPartitions: Int,
    balanceSlack: Double = 0.10)
  extends RDD[T](prev.context, Nil) { // Nil since we implement getDependencies

  override def getPartitions: Array[Partition] = {
    val pc = new PartitionCoalescer(maxPartitions, prev, balanceSlack)

    // Each PartitionGroup returned by the coalescer becomes one CoalescedRDDPartition
    pc.run().zipWithIndex.map {
      case (pg, i) =>
        val ids = pg.arr.map(_.index).toArray
        new CoalescedRDDPartition(i, prev, ids, pg.prefLoc)
    }
  }

  override def compute(partition: Partition, context: TaskContext): Iterator[T] = {
    // Read the grouped parent partitions one after another and chain their iterators
    partition.asInstanceOf[CoalescedRDDPartition].parents.iterator.flatMap { parentPartition =>
      firstParent[T].iterator(parentPartition, context)
    }
  }

  // ……
}

/**
 * Class that captures a coalesced RDD by essentially keeping track of parent partitions
 * @param index of this coalesced partition
 * @param rdd which it belongs to
 * @param parentsIndices list of indices in the parent that have been coalesced into this partition,
 *                       i.e. which partitions of the parent RDD make up this partition of the CoalescedRDD
 * @param preferredLocation the preferred location for this partition
 */
private[spark] case class CoalescedRDDPartition(
    index: Int,
    @transient rdd: RDD[_],
    parentsIndices: Array[Int],
    @transient preferredLocation: Option[String] = None) extends Partition {
  var parents: Seq[Partition] = parentsIndices.map(rdd.partitions(_))

  @throws(classOf[IOException])
  private def writeObject(oos: ObjectOutputStream): Unit = Utils.tryOrIOException {
    // Update the reference to parent partition at the time of task serialization
    parents = parentsIndices.map(rdd.partitions(_))
    oos.defaultWriteObject()
  }

  /**
   * Computes the fraction of the parents' partitions containing preferredLocation within
   * their getPreferredLocs.
   * @return locality of this coalesced partition between 0 and 1
   */
  def localFraction: Double = {
    val loc = parents.count { p =>
      val parentPreferredLocations = rdd.context.getPreferredLocs(rdd, p.index).map(_.host)
      preferredLocation.exists(parentPreferredLocations.contains)
    }
    if (parents.size == 0) 0.0 else (loc.toDouble / parents.size.toDouble)
  }
}
```
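To make `CoalescedRDD.compute` and `CoalescedRDDPartition.localFraction` concrete without a Spark cluster, here is a minimal, self-contained Scala model. It is a sketch under stated assumptions, not Spark's code: parent partitions are plain in-memory `Seq`s, the preferred hosts of each parent partition are supplied as a `Map` from parent index to host names (standing in for `getPreferredLocs`), and the names `SimpleCoalescedPartition` and `CoalesceModel` are hypothetical. `compute` chains the parent iterators with `flatMap`, as the Spark method does, and `localFraction` counts the parents whose preferred hosts contain this partition's preferred location.

```scala
// Hypothetical, Spark-free model of CoalescedRDDPartition and CoalescedRDD.compute.
final case class SimpleCoalescedPartition(
    index: Int,
    parentsIndices: Array[Int],
    preferredLocation: Option[String] = None)

object CoalesceModel {
  // Mirrors CoalescedRDD.compute: read each grouped parent partition in order
  // and lazily concatenate their iterators.
  def compute[T](parent: IndexedSeq[Seq[T]], p: SimpleCoalescedPartition): Iterator[T] =
    p.parentsIndices.iterator.flatMap(i => parent(i).iterator)

  // Mirrors localFraction: share of parent partitions whose preferred hosts
  // include this partition's preferred location (assumed host map, not getPreferredLocs).
  def localFraction(p: SimpleCoalescedPartition, parentHosts: Map[Int, Seq[String]]): Double = {
    val loc = p.parentsIndices.count { i =>
      val hosts = parentHosts.getOrElse(i, Seq.empty)
      p.preferredLocation.exists(pref => hosts.contains(pref))
    }
    if (p.parentsIndices.isEmpty) 0.0 else loc.toDouble / p.parentsIndices.length
  }

  def main(args: Array[String]): Unit = {
    val parent = Vector(Seq(1, 2), Seq(3), Seq(4, 5), Seq(6))
    val p0 = SimpleCoalescedPartition(0, Array(0, 1), Some("host-a"))
    val p1 = SimpleCoalescedPartition(1, Array(2, 3), Some("host-b"))
    val hosts = Map(0 -> Seq("host-a"), 1 -> Seq("host-c"), 2 -> Seq("host-b"), 3 -> Seq("host-b"))

    println(compute(parent, p0).toList) // List(1, 2, 3)
    println(compute(parent, p1).toList) // List(4, 5, 6)
    println(localFraction(p0, hosts))   // 0.5
    println(localFraction(p1, hosts))   // 1.0
  }
}
```

Because `compute` only concatenates iterators, a coalesced partition never re-partitions records; it simply reads several existing parent partitions in sequence.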

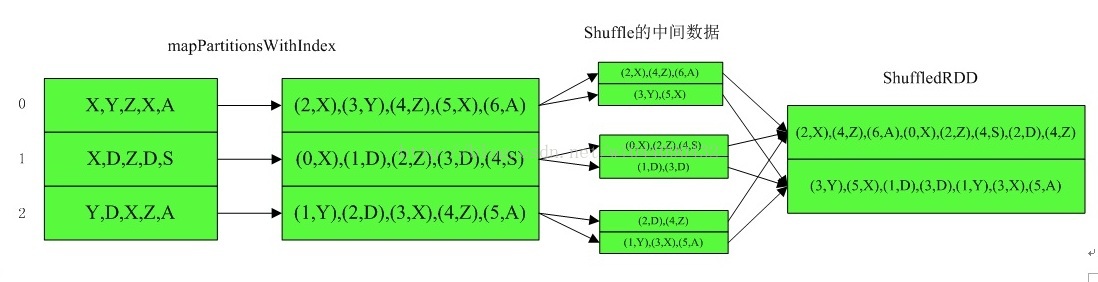

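This article explains in detail how Spark's coalesce operator works, including how it reduces the number of partitions, whether or not it performs a shuffle, and how CoalescedRDD computes its partitions. It then covers other key operators such as repartition, sample, and takeSample, and discusses how they are used in data processing.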
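As a rough illustration of how coalesce can reduce the partition count without a shuffle: as far as I can tell from Spark's source, when the parent RDD reports no preferred locations, the default coalescer simply cuts the parent partitions into contiguous index ranges, one range per output partition, and it never produces more partitions than the parent has. The sketch below models only that no-locality case with a hypothetical `splitByRange` function; it ignores the locality-aware grouping and the `balanceSlack` parameter that the real `PartitionCoalescer` uses when locations are known.

```scala
// Hypothetical model (not Spark's code) of how coalesce(n, shuffle = false) groups
// parent partitions when the parent RDD reports no preferred locations.
object RangeCoalesce {
  /** Returns, for each output partition, the indices of the parent partitions it reads. */
  def splitByRange(numParents: Int, maxPartitions: Int): Seq[Seq[Int]] = {
    require(maxPartitions > 0, "maxPartitions must be positive")
    if (maxPartitions >= numParents) {
      // Without a shuffle the partition count cannot grow: one parent per group.
      (0 until numParents).map(i => Seq(i))
    } else {
      (0 until maxPartitions).map { i =>
        // Long arithmetic avoids Int overflow for large partition counts.
        val rangeStart = ((i.toLong * numParents) / maxPartitions).toInt
        val rangeEnd = (((i.toLong + 1) * numParents) / maxPartitions).toInt
        rangeStart until rangeEnd
      }
    }
  }

  def main(args: Array[String]): Unit = {
    println(splitByRange(10, 3).map(_.toList).toList) // List(List(0, 1, 2), List(3, 4, 5), List(6, 7, 8, 9))
    println(splitByRange(2, 5).map(_.toList).toList)  // List(List(0), List(1))
  }
}
```

Every parent index lands in exactly one group and group sizes differ by at most one, so each output partition just reads a few existing parent partitions and no shuffle is needed.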
