Contents

1. The EFAK web UI is blank or unreachable after startup

2. On the EFAK UI page Node > Kafka, memory, CPU, and Version are displayed abnormally

3. Log error: Telnet [localhost:8085] has crash, please check it

4. Log error: ERROR - JMX service url[xxxx:9999] create has error,msg is java.lang.NullPointerException

5. Log error: Get kafka os memory from jmx has error, msg is null

6. Log error: Get topic size from jmx has error, msg is null

7. Log error: java.rmi.ConnectException: Connection refused to host: xxxx

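Environment

- Kafka 2.4.1 (any version from 1.1 up should work; I'm not sure about older ones)

- EFAK 3.0.1, the latest release on the official site at the time of writing (formerly named kafka-eagle)

- EFAK official site: http://www.kafka-eagle.org/

- JDK 8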

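First impressions

Deployment process

There are plenty of guides online, so I'll just summarize here. (Unless your environment happens to be identical to the one in someone else's blog, you will very likely hit a wall and things won't run smoothly.)

To start with, kafka-eagle's monitoring is fairly comprehensive, but its documentation is incomplete, perhaps because the project keeps being upgraded (I was reading the docs for v3.0.1, the latest version at the time of writing).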

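Official Linux deployment process

Go to the DOCS section of the official site and work through the Install on Linux steps. If everything goes smoothly (you open the web UI and all metrics are being monitored normally), you don't need to read any further.

I won't type out the deployment commands here; just follow the official docs. Below is my EFAK configuration file (I run a single EFAK node, and Kafka is also a single node).

Key points in the configuration file:

- The ZooKeeper setting must use the same path as the ZooKeeper connection configured in Kafka (i.e. the path after IP:port, if any).

- efak.zk.cluster.alias lists the cluster aliases; every subsequent cluster setting then only needs to be configured under the matching alias prefix (e.g. cluster1.zk.list).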
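Both rules are easy to get wrong by hand, so here is a minimal Java sketch that checks them. It loads the properties, reports any alias from efak.zk.cluster.alias that has no matching `<alias>.zk.list` entry, and compares the ZooKeeper chroot path in EFAK's list with the one in Kafka's `zookeeper.connect`. The class and method names (`EfakConfigCheck`, `missingZkLists`, `sameChroot`) are hypothetical helpers, not part of EFAK, and the sketch assumes aliases are comma-separated, as in EFAK's configuration template.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

/** Hypothetical sanity checks for an EFAK system-config.properties file (not EFAK code). */
class EfakConfigCheck {

    /** Returns the aliases listed in efak.zk.cluster.alias that have no "<alias>.zk.list" entry. */
    static List<String> missingZkLists(Properties props) {
        List<String> missing = new ArrayList<>();
        String aliases = props.getProperty("efak.zk.cluster.alias", "");
        for (String raw : aliases.split(",")) {
            String alias = raw.trim();
            if (alias.isEmpty()) {
                continue;
            }
            String zkList = props.getProperty(alias + ".zk.list", "").trim();
            if (zkList.isEmpty()) {
                missing.add(alias);
            }
        }
        return missing;
    }

    /** Extracts the chroot path ("/kafka" in "a:2181,b:2181/kafka"), or "" if there is none. */
    static String chrootOf(String zkConnect) {
        int slash = zkConnect.indexOf('/');
        return slash < 0 ? "" : zkConnect.substring(slash);
    }

    /** True if EFAK's zk.list points at the same chroot path as Kafka's zookeeper.connect. */
    static boolean sameChroot(String efakZkList, String kafkaZookeeperConnect) {
        return chrootOf(efakZkList.trim()).equals(chrootOf(kafkaZookeeperConnect.trim()));
    }

    public static void main(String[] args) throws IOException {
        Properties props = new Properties();
        props.load(new StringReader(
                "efak.zk.cluster.alias=cluster1,cluster2\n"
              + "cluster1.zk.list=xdn10:2181\n"
              + "#cluster2.zk.list=xdn10:2181,xdn11:2181,xdn12:2181\n"));
        System.out.println(missingZkLists(props));                        // [cluster2]
        System.out.println(sameChroot("xdn10:2181", "xdn10:2181/kafka")); // false
    }
}
```

In the usage example, cluster2 is declared as an alias but its zk.list line is commented out, so it is reported as missing. An EFAK list without a path compared against a Kafka connection string ending in `/kafka` is the path mismatch the first bullet warns about.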

Add environment variables

Preferably set them in /etc/profile:

$ vi /etc/profile

export KE_HOME=/data/soft/new/efak

export PATH=$PATH:$KE_HOME/bin

$ source /etc/profile

Edit the configuration file

Edit config/system-config.properties:

######################################

# multi zookeeper & kafka cluster list

# Settings prefixed with 'kafka.eagle.' will be deprecated, use 'efak.' instead

######################################

efak.zk.cluster.alias=cluster1

cluster1.zk.list=xdn10:2181

#cluster2.zk.list=xdn10:2181,xdn11:2181,xdn12:2181

######################################

# zookeeper enable acl

######################################

cluster1.zk.acl.enable=false

cluster1.zk.acl.schema=digest

cluster1.zk.acl.username=test

cluster1.zk.acl.password=test123

######################################

# broker size online list

######################################

cluster1.efak.broker.size=20

######################################

# zk client thread limit

######################################

kafka.zk.limit.size=16

######################################

# EFAK webui port

######################################

efak.webui.port=18048

######################################

# EFAK enable distributed

######################################

efak.distributed.enable=false

efak.cluster.mode.status=master

efak.worknode.master.host=localhost

efak.worknode.port=8085

######################################

# kafka jmx acl and ssl authenticate

######################################

cluster1.efak.jmx.acl=false

cluster1.efak.jmx.user=keadmin

cluster1.efak.jmx.password=keadmin123

cluster1.efak.jmx.ssl=false

cluster1.efak.jmx.truststore.location=/data/ssl/certificates/kafka.truststore

cluster1.efak.jmx.truststore.password=ke123456

######################################

# kafka offset storage

######################################

cluster1.efak.offset.storage=kafka

#cluster2.efak.offset.storage=zk

######################################

# kafka jmx uri

######################################

cluster1.efak.jmx.uri=service:jmx:rmi:///jndi/rmi://%s/jmxrmi

######################################

# kafka metrics, 15 days by default

######################################

efak.metrics.charts=true

efak.metrics.retain=7

######################################

# kafka sql topic records max

######################################

efak.sql.topic.records.max=5000

efak.sql.topic.preview.records.max=10

######################################

# delete kafka topic token

######################################

efak.topic.token=keadmin

######################################

# kafka sasl authenticate

######################################

cluster1.efak.sasl.enable=false

cluster1.efak.sasl.protocol=SASL_PLAINTEXT

cluster1.efak.sasl.mechanism=SCRAM-SHA-256

cluster1.efak.sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required username="kafka" password="kafka-eagle";

cluster1.efak.sasl.client.id=

cluster1.efak.blacklist.topics=

cluster1.efak.sasl.cgroup.enable=false

cluster1.efak.sasl.cgroup.topics=

cluster2.efak.sasl.enable=false

cluster2.efak.sasl.protocol=SASL_PLAINTEXT

cluster2.efak.sasl.mechanism=PLAIN

cluster2.efak.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="kafka" password="kafka-eagle";

cluster2.efak.sasl.client.id=

cluster2.efak.blacklist.topics=

cluster2.efak.sasl.cgroup.enable=false

cluster2.efak.sasl.cgroup.topics=

######################################

# kafka ssl authenticate

######################################

cluster3.efak.ssl.enable=false

cluster3.efak.ssl.protocol=SSL

cluster3.efak.ssl.truststore.location=

cluster3.efak.ssl.truststore.password=

cluster3.efak.ssl.keystore.location=

cluster3.efak.ssl.keystore.password=

cluster3.efak.ssl.key.password=

cluster3.efak.ssl.endpoint.identification.algorithm=https

cluster3.efak.blacklist.topics=

cluster3.efak.ssl.cgroup.enable=false

cluster3.efak.ssl.cgroup.topics=

######################################

# kafka sqlite jdbc driver address

######################################

#efak.driver=org.sqlite.JDBC

#efak.url=jdbc:sqlite:/hadoop/kafka-eagle/db/ke.db

#efak.username=root

#efak.password=www.kafka-eagle.org

######################################

# kafka mysql jdbc driver address

######################################

efak.driver=com.mysql.cj.jdbc.Driver

#efak.url=jdbc:mysql://127.0.0.1:3306/ke?useUnicode=true&characterEncoding=UTF-8&zeroDateTimeBehavior=convertToNull

efak.url=jdbc:mysql://rm-wz9976qu64y5dzypjno.mysql.rds.aliyuncs.com/ke?useUnicode=true&characterEncoding=UTF-8&useSSL=false&serverTimezone=Asia/Shanghai&allowMultiQueries=true

efak.username=1111

efak.password=1111

Notes:

1. ZooKeeper connection address

######################################
# multi zookeeper & kafka cluster list
# Settings prefixed with 'kafka.eagle.' will be deprecated, use 'efak.' instead
######################################
efak.zk.cluster.alias=cluster1
cluster1.zk.list=xdn10:2181
#cluster2.zk.list=xdn10:2181,xdn11:2181,xdn12:2181

2. Database connection

######################################
# kafka sqlite jdbc driver address
######################################
#efak.driver=org.sqlite.JDBC
#efak.url=jdbc:sqlite:/hadoop/kafka-eagle/db/ke.db
#efak.username=root
#efak.password=www.kafka-eagle.org
######################################
# kafka mysql jdbc driver address
######################################
efak.driver=com.mysql.cj.jdbc.Driver
#efak.url=jdbc:mysql://127.0.0.1:3306/ke?useUnicode=true&characterEncoding=UTF-8&zeroDateTimeBehavior=convertToNull
efak.url=jdbc:mysql://rm-wz9976qu64y5dzypjno.mysql.rds.aliyuncs.com/ke?useUnicode=true&characterEncoding=UTF-8&useSSL=false&serverTimezone=Asia/Shanghai&allowMultiQueries=true
efak.username=1111
efak.password=1111

If you use MySQL, you need to create a database named ke. It can be empty, or you can create the tables in advance. For the DDL, see the appendix "MySQL table creation statements for the ke database" at the end.

Those are the places in config/system-config.properties that need attention.

Adjust startup parameters

To tune the JVM startup parameters, edit bin/ke.sh:

## Adjust the maximum/minimum heap sizes in KE_JAVA_OPTS as needed, for example:

export KE_JAVA_OPTS="-server -Xmx512m -Xms512m -XX:MaxGCPauseMillis=20 -XX:+UseG1GC -XX:MetaspaceSize=128m -XX:InitiatingHeapOccupancyPercent=35 -XX:G1HeapRegionSize=16M -XX:MinMetaspaceFreeRatio=50 -XX:MaxMetaspaceFreeRatio=80"

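Enable JMX on the Kafka server

There are four key settings here: enabling JMX, setting the JMX remote port, setting the IP the JMX service is bound to (the address handed to remote clients), and setting the JMX remote RMI port.

They correspond, respectively, to: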

-Dcom.sun.management.jmxremote

-Dcom.sun.management.jmxremote.port=9999

-Djava.rmi.server.hostname=x.x.x.x

-Dcom.sun.management.jmxremote.rmi.port=9998

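Most blogs only set the first two because their networks are fairly open. If every port between your servers is reachable, you can leave out -Dcom.sun.management.jmxremote.rmi.port=9998. When it is not set, the RMI server listens on a random port. That works on an open network but is usually blocked by firewalls and security groups.

- The IP does not have to be specifically internal or external; it just has to be reachable from the client (the EFAK host).

- The port numbers are not fixed; these are only examples.

- Some blogs or articles instead set the port in the kafka-server start script as JMX_PORT=9999. That has the same effect and works too.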
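To check from the EFAK host whether a broker's JMX endpoint is actually reachable, a small Java client can fill in the same URI template EFAK uses (cluster1.efak.jmx.uri=service:jmx:rmi:///jndi/rmi://%s/jmxrmi) and read a few attributes. This is a sketch, not EFAK's code. The class name `JmxProbe` is hypothetical, and it reads the standard `java.lang:type=OperatingSystem` MBean that every JVM exposes rather than any Kafka-specific MBean. The remote connection needs both the JMX port (9999) and the RMI port (9998) to be reachable. If this probe fails from the EFAK host, EFAK's own JMX calls will most likely fail too.

```java
import java.io.IOException;
import java.lang.management.ManagementFactory;
import java.net.MalformedURLException;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.management.JMException;
import javax.management.MBeanServerConnection;
import javax.management.ObjectName;
import javax.management.remote.JMXConnector;
import javax.management.remote.JMXConnectorFactory;
import javax.management.remote.JMXServiceURL;

/** Hypothetical JMX reachability probe modeled on EFAK's cluster1.efak.jmx.uri template. */
class JmxProbe {

    static final String URI_TEMPLATE = "service:jmx:rmi:///jndi/rmi://%s/jmxrmi";

    /** Fills the template with "host:port", e.g. "10.0.0.5:9999". */
    static JMXServiceURL serviceUrl(String hostAndPort) throws MalformedURLException {
        return new JMXServiceURL(String.format(URI_TEMPLATE, hostAndPort));
    }

    /** Reads a few standard OperatingSystem attributes through any MBean server connection. */
    static Map<String, Object> readOsInfo(MBeanServerConnection conn) throws IOException, JMException {
        ObjectName os = new ObjectName("java.lang:type=OperatingSystem");
        Map<String, Object> info = new LinkedHashMap<>();
        for (String attr : new String[] {"Name", "AvailableProcessors", "SystemLoadAverage"}) {
            info.put(attr, conn.getAttribute(os, attr));
        }
        return info;
    }

    /** Connects to a remote JMX port (registry + RMI port) and prints the OS attributes. */
    static void probe(String hostAndPort) throws IOException, JMException {
        try (JMXConnector connector = JMXConnectorFactory.connect(serviceUrl(hostAndPort))) {
            System.out.println(hostAndPort + " -> " + readOsInfo(connector.getMBeanServerConnection()));
        }
    }

    public static void main(String[] args) throws Exception {
        if (args.length > 0) {
            probe(args[0]); // e.g. java JmxProbe 10.0.0.5:9999
        } else {
            // No target given: read this JVM's own MBeans instead.
            System.out.println(readOsInfo(ManagementFactory.getPlatformMBeanServer()));
        }
    }
}
```

`readOsInfo` takes an `MBeanServerConnection` so the same code works against a remote broker or the local platform MBean server. That makes it easy to separate "my code is wrong" from "the broker is unreachable".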

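How to configure

The JMX settings go in kafka-run-class.sh. Some blogs put them in kafka-server-start.sh instead, which also works. I put them in kafka-run-class.sh to keep everything in one place.

Edit kafka-run-class.sh

(1) Find the "JMX settings" section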

# JMX settings

if [ -z "$KAFKA_JMX_OPTS" ]; then

KAFKA_JMX_OPTS="-Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.local.only=false -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Djava.rmi.server.hostname=x.x.x.x"

fi

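Setting -Djava.rmi.server.hostname=x.x.x.x matters especially when Kafka runs in Docker. Without it, the JMX service is not tied to the specified IP: clients are handed an address they cannot reach, so it cannot be connected to from outside.

(2) Find the "JMX port to use" section

The commented-out lines at the top are the original configuration. The modified logic first checks whether the process being launched is the Kafka server. (A side note: we are editing kafka-run-class.sh, but starting the Kafka server and running the command-line tools both go through this file. If you are comfortable with shell, one look at the Kafka server start script makes this clear.) Only for the Kafka server are those parameters set. Otherwise, when you operate Kafka from the command line, you get a "port already in use" error, because the command-line tool would also try to start a JMX service on the same port.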

# JMX port to use

#if [ $JMX_PORT ]; then

# KAFKA_JMX_OPTS="$KAFKA_JMX_OPTS -Dcom.sun.management.jmxremote.port=$JMX_PORT "

#fi

JMX_PORT=9999

JMX_RMI_PORT=9998

ISKAFKASERVER="false"

if [[ "$*" =~ "kafka.Kafka" ]]; then

ISKAFKASERVER="true"

fi

if [ $JMX_PORT ] && [ "true" == "$ISKAFKASERVER" ]; then

KAFKA_JMX_OPTS="$KAFKA_JMX_OPTS -Dcom.sun.management.jmxremote.port=$JMX_PORT -Dcom.sun.management.jmxremote.rmi.port=$JMX_RMI_PORT "

echo set KAFKA_JMX_PORT:$KAFKA_JMX_OPTS

fi

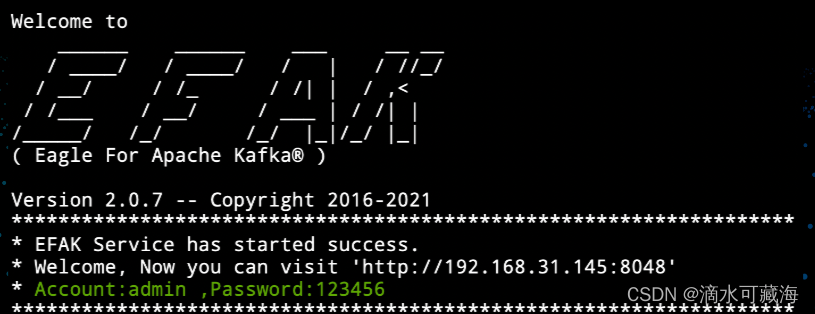

Start and stop

# start

./ke.sh start

# stop

./ke.sh stop

# restart

./ke.sh restart

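Access URL

http://x.x.x.x:8048

(8048 is EFAK's default web UI port; use whatever efak.webui.port is set to, which is 18048 in the configuration above.)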

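Network check

Every IP and port mentioned above must be mutually reachable, meaning telnet to each one must connect. It doesn't matter if ping fails: some servers block ping, which is normal.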
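If telnet isn't installed, the same "can this port be reached" check takes a few lines of Java. This sketch opens a plain TCP connection to each host:port, which is roughly what a successful telnet connect tells you. The class name `PortCheck`, the 3-second timeout, and the example addresses are arbitrary choices. For JMX, check both the JMX port (9999) and the RMI port (9998), since a connection needs both.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Hypothetical telnet-style TCP reachability check. */
class PortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean reachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Refused, timed out, or unknown host: all count as "not reachable".
            return false;
        }
    }

    public static void main(String[] args) {
        // Usage (placeholder addresses): java PortCheck 10.0.0.5:2181 10.0.0.5:9999 10.0.0.5:9998 10.0.0.6:3306
        for (String target : args) {
            int colon = target.lastIndexOf(':');
            String host = target.substring(0, colon);
            int port = Integer.parseInt(target.substring(colon + 1));
            System.out.println(target + (reachable(host, port, 3000) ? " OK" : " UNREACHABLE"));
        }
    }
}
```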

Check the firewall

1) Check the firewall status; active (running) means it is enabled:

systemctl status firewalld

2) List the open ports:

firewall-cmd --list-all

3) Open a port (3306 here as an example):

firewall-cmd --zone=public --add-port=3306/tcp --permanent

4) After opening a port, reload the firewall:

firewall-cmd --reload

5) Basic firewalld commands

Start: systemctl start firewalld

Stop: systemctl stop firewalld

Status: systemctl status firewalld

Disable at boot: systemctl disable firewalld

Enable at boot: systemctl enable firewalld

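Configure security groups

If you are using a cloud server, configure its security group to open the corresponding ports.

Note that internal IPs must be opened separately as well. Allowing access from the public network does not mean the internal network is allowed too.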

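Common problems

1. The EFAK web UI is blank or unreachable after startup

Cause: the table schema was not created

If you use SQLite, you probably won't run into this problem.

If your MySQL JDBC URL is in IP:port form, like jdbc:mysql://127.0.0.1:3306/ke, and you have no time zone issues, you most likely won't hit it either.

My guess is that the original author usually tests against SQLite or a local MySQL, where everything goes smoothly. But with MySQL hosted on RDS or another cloud service, startup breaks. Frankly, you won't even know the service is broken, because nothing relevant is logged.

First, here is what the service does at startup (I spent some time reading its source):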

@Component

public class StartupListener implements ApplicationContextAware {

private static ApplicationContext applicationContext;

@Override

public void setApplicationContext(ApplicationContext arg0) throws BeansException {

ContextSchema context = new ContextSchema();

context.start();

}

public static Object getBean(String beanName) {

if (applicationContext == null) {

applicationContext = ContextLoader.getCurrentWebApplicationContext();

}

return applicationContext.getBean(beanName);

}

public static <T> T getBean(String beanName, Class<T> clazz) {

return clazz.cast(getBean(beanName));

}

class ContextSchema extends Thread {

public void run() {

String jdbc = SystemConfigUtils.getProperty("efak.driver");

if (JConstants.MYSQL_DRIVER_V5.equals(jdbc) || JConstants.MYSQL_DRIVER_V8.equals(jdbc)) {

MySqlRecordSchema.schema();

} else if (JConstants.SQLITE_DRIVER.equals(jdbc)) {

SqliteRecordSchema.schema();

}

initKafkaMetaData();

}

private void initKafkaMetaData() {

KafkaCacheUtils.initKafkaMetaData();

}

}

}

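- Create the database and its tables, skipping anything that already exists: MySqlRecordSchema.schema();

- Initialize Kafka metadata, read from ZooKeeper: initKafkaMetaData();

- (Not shown in the code above) Initialize the scheduled jobs. They use Quartz and come in two kinds. Metric collection is configured to run once a minute. Metric cleanup runs daily at 1:00 and deletes metric data older than the retention period set in the configuration file (15 days by default). Since metrics are collected every minute and stored in the database, the database would blow up without cleanup. [Note: the schedules are not configurable; they are hard-coded in the Quartz configuration file.]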
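The sketch below illustrates the timing the cleanup bullet describes. It computes the next 1:00 run and the cutoff before which metric rows would be deleted, given a retention setting such as efak.metrics.retain=7. It is not EFAK's Quartz job. The class and method names are hypothetical, and the sketch assumes rows are compared by a millisecond timestamp (the ke_metrics and ke_logsize tables in the appendix do have a `timespan` column, but how EFAK actually filters is not shown here).

```java
import java.time.Duration;
import java.time.Instant;
import java.time.LocalTime;
import java.time.ZoneId;
import java.time.ZonedDateTime;

/** Hypothetical model of a daily metrics-retention cleanup (not EFAK's Quartz job). */
class RetentionWindow {

    /** Next time a daily job fires at the given local time (e.g. 01:00), strictly after now. */
    static ZonedDateTime nextRun(ZonedDateTime now, LocalTime at) {
        ZonedDateTime candidate = now.with(at);
        return candidate.isAfter(now) ? candidate : candidate.plusDays(1).with(at);
    }

    /** Epoch millis before which rows would be deleted for a retention of retainDays. */
    static long cutoffMillis(Instant runTime, int retainDays) {
        return runTime.minus(Duration.ofDays(retainDays)).toEpochMilli();
    }

    public static void main(String[] args) {
        ZonedDateTime now = ZonedDateTime.of(2022, 3, 10, 14, 30, 0, 0, ZoneId.of("Asia/Shanghai"));
        ZonedDateTime run = nextRun(now, LocalTime.of(1, 0));
        System.out.println(run); // 2022-03-11T01:00+08:00[Asia/Shanghai]
        System.out.println(Instant.ofEpochMilli(cutoffMillis(run.toInstant(), 7))); // 2022-03-03T17:00:00Z
    }
}
```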

So where is the pitfall?

When creating the database and tables, it takes the JDBC URL apart. Suppose our original configuration is:

efak.driver=com.mysql.cj.jdbc.Driver

efak.url=jdbc:mysql://127.0.0.1:3306/ke?useUnicode=true&characterEncoding=UTF-8&zeroDateTimeBehavior=convertToNull&useSSL=false&serverTimezone=Asia/Shanghai

efak.username=root

efak.password=123456

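It splits the URL, extracts the host and port, and throws away the long list of parameters that follows. This only happens in the step that auto-creates the database tables; the regular EFAK monitoring service does not do this. Presumably the point is to be able to create the ke database itself automatically as well.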
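To see what a safer split would look like, here is a hypothetical Java sketch (not EFAK's code) that parses a single-host MySQL JDBC URL with `java.net.URI`. Unlike a naive host:port split, it falls back to MySQL's default port 3306 when the URL only has a domain name, as with RDS endpoints. It also keeps the query string, so parameters such as serverTimezone and useSSL survive when a server-level URL (without the ke database) is built for the create-database step. The class `MysqlUrlParts` and its methods are illustrations, and multi-host URLs are out of scope.

```java
import java.net.URI;

/** Hypothetical parser for single-host MySQL JDBC URLs: jdbc:mysql://host[:port]/db[?params]. */
class MysqlUrlParts {
    static final int DEFAULT_PORT = 3306;

    final String host;
    final int port;
    final String database;
    final String query; // null when the URL has no parameters

    private MysqlUrlParts(String host, int port, String database, String query) {
        this.host = host;
        this.port = port;
        this.database = database;
        this.query = query;
    }

    static MysqlUrlParts parse(String jdbcUrl) {
        if (!jdbcUrl.startsWith("jdbc:mysql://")) {
            throw new IllegalArgumentException("not a MySQL JDBC URL: " + jdbcUrl);
        }
        // Strip "jdbc:" so the rest ("mysql://...") is an ordinary hierarchical URI.
        URI uri = URI.create(jdbcUrl.substring("jdbc:".length()));
        if (uri.getHost() == null) {
            throw new IllegalArgumentException("cannot parse host from: " + jdbcUrl);
        }
        int port = uri.getPort() == -1 ? DEFAULT_PORT : uri.getPort(); // domain-only URLs have no port
        String path = uri.getPath() == null ? "" : uri.getPath();
        String db = path.startsWith("/") ? path.substring(1) : path;
        return new MysqlUrlParts(uri.getHost(), port, db, uri.getRawQuery());
    }

    /** Server-level URL (no database) that keeps every original connection parameter. */
    String serverUrl() {
        String base = "jdbc:mysql://" + host + ":" + port + "/";
        return query == null ? base : base + "?" + query;
    }

    public static void main(String[] args) {
        MysqlUrlParts p = parse("jdbc:mysql://example.mysql.rds.aliyuncs.com/ke"
                + "?useUnicode=true&characterEncoding=UTF-8&useSSL=false&serverTimezone=Asia/Shanghai");
        System.out.println(p.host + " " + p.port + " " + p.database);
        // example.mysql.rds.aliyuncs.com 3306 ke
        System.out.println(p.serverUrl());
        // jdbc:mysql://example.mysql.rds.aliyuncs.com:3306/?useUnicode=true&characterEncoding=UTF-8&useSSL=false&serverTimezone=Asia/Shanghai
    }
}
```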

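This process causes several problems:

1. Some MySQL versions complain about the time zone and refuse the connection: without the serverTimezone parameter, the connection fails. All the other connection parameters are dropped along with it, so a MySQL server with SSL enabled is even less likely to accept the connection.

In some environments you don't even get an error for this. The work runs in an asynchronous anonymous thread, and the exception is swallowed. I only found it by running the source code.

2. As the source above shows, if MySQL initialization fails, the line initKafkaMetaData(); is never executed. Every subsequent JMX RMI call and every Kafka read then fails.

Of course, there are setups where all of this runs fine, especially locally.

Fix

1. My recommendation: create the database and tables manually.

Making the automatic creation work requires changing quite a lot. You also have to handle JDBC URLs in domain-name form, which have no port, so the port-parsing code would need changing too. I tried modifying it and there were many changes. Although it ran locally, some of the built-in SQL statements still failed on RDS (in my case, the ALTER statements that create indexes), so in the end I ran them by hand.

How do you run them manually? All of its SQL is hard-coded in the source. The v3.0.1 table initialization statements are at the end of this article.

2. Wrap the following code from the source in a try-catch: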

try {

if (JConstants.MYSQL_DRIVER_V5.equals(jdbc) || JConstants.MYSQL_DRIVER_V8.equals(jdbc)) {

MySqlRecordSchema.schema();

} else if (JConstants.SQLITE_DRIVER.equals(jdbc)) {

SqliteRecordSchema.schema();

}

}catch (Exception e){

LoggerUtils.print(StartupListener.class).info("db schema init error, efak.driver:{}", jdbc, e);

}
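The effect of that try-catch can be reproduced outside EFAK with a small stand-alone model. In this hypothetical sketch, the two `Runnable` steps stand in for MySqlRecordSchema.schema() and initKafkaMetaData(), and the class name `SchemaInitGuard` is made up. Without the guard, an exception in the first step ends the thread's `run()`, so the second step never executes. That matches the behavior described above.

```java
import java.util.concurrent.atomic.AtomicBoolean;

/** Hypothetical model of StartupListener.ContextSchema with and without the try-catch guard. */
class SchemaInitGuard {

    /** Runs schemaStep then metadataStep on a new thread, optionally guarding schemaStep. */
    static void runStartup(Runnable schemaStep, Runnable metadataStep, boolean guarded)
            throws InterruptedException {
        Thread t = new Thread(() -> {
            if (guarded) {
                try {
                    schemaStep.run();
                } catch (RuntimeException e) {
                    // Log and carry on, as the patched code does.
                    System.err.println("db schema init error: " + e);
                }
            } else {
                schemaStep.run(); // an exception here aborts run(), skipping metadataStep
            }
            metadataStep.run();
        });
        // Keep the demo quiet; without this, the default handler prints the stack trace to stderr.
        t.setUncaughtExceptionHandler((thread, e) -> { });
        t.start();
        t.join();
    }

    /** Returns whether the metadata step ran when the schema step throws. */
    static boolean metadataRanAfterFailure(boolean guarded) throws InterruptedException {
        AtomicBoolean ran = new AtomicBoolean(false);
        Runnable failingSchema = () -> {
            throw new IllegalStateException("cannot connect to MySQL");
        };
        runStartup(failingSchema, () -> ran.set(true), guarded);
        return ran.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("unguarded: " + metadataRanAfterFailure(false)); // unguarded: false
        System.out.println("guarded:   " + metadataRanAfterFailure(true));  // guarded:   true
    }
}
```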

3. Rebuild the package

If the build fails, or complains about something like "source 1.5", add the following to the top-level pom file:

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<java.version>1.8</java.version>

</properties>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<configuration>

<source>${java.version}</source>

<target>${java.version}</target>

<encoding>${project.build.sourceEncoding}</encoding>

</configuration>

</plugin>

</plugins>

</build>

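It is best to build with tests skipped, i.e. run the command from build.sh: mvn clean package -DskipTests. Otherwise the build tries to connect to ZooKeeper and Kafka, because there are many test classes.

After packaging, copy ke.war from the web module's target directory into the deployment's kms/webapps directory (here /usr/local/efak/kms/webapps), replacing the existing file.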

2. On the EFAK UI page Node > Kafka, memory, CPU, and Version are displayed abnormally

- Kafka version is “-” or JMX Port is “-1” maybe kafka broker jmxport disable.

- The memory and cpu is null. may be the kafka broker down or blocked.

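These are the web UI's hints. Most likely JMX is not enabled or not reachable; the EFAK service log will show a failed or refused connection to xxx. The cause is therefore either the Kafka JMX configuration or the network, so go through the deployment points above.

3. Log error: Telnet [localhost:8085] has crash, please check it

This means EFAK's distributed-mode configuration is enabled but EFAK is not actually deployed in distributed mode, so this port is unreachable. It only concerns communication between EFAK's own distributed nodes. With a single-node deployment you can ignore it, since it does not affect Kafka monitoring (or dig into the code if you're curious).

On my side the error keeps appearing even with efak.distributed.enable=false, so the code probably has a bug. You can ignore it for now.

4. Log error: ERROR - JMX service url[xxxx:9999] create has error,msg is java.lang.NullPointerException

The remote JMX connection fails. Check the JMX configuration on the Kafka side and network connectivity, following the deployment points above.

5. Log error: Get kafka os memory from jmx has error, msg is null

Same cause as item 4: the remote JMX connection fails. Check the Kafka-side JMX configuration and the network.

6. Log error: Get topic size from jmx has error, msg is null

Same cause as item 4: the remote JMX connection fails. Check the Kafka-side JMX configuration and the network.

7. Log error: java.rmi.ConnectException: Connection refused to host: xxxx

The remote JMX connection fails. If xxxx is not the address you configured, it was taken from the RMI stub, so check -Djava.rmi.server.hostname. If it is, check that both the JMX port and the RMI port (-Dcom.sun.management.jmxremote.rmi.port) are reachable, following the deployment points above.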

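Appendix: MySQL table creation statements for the ke database

Remember to create a database named ke first.

All of the statements below can be found in the source code. They appear to have been exported from MySQL 8. The utf8mb4_0900_ai_ci collation only exists in MySQL 8.0 and later, so on MySQL 5.7 replace it with a collation such as utf8mb4_general_ci. Also, the view at the end is created with DEFINER = `root`@`localhost`. If your account lacks the privilege to set a different definer (common on managed RDS), remove that clause.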

SET NAMES utf8mb4;

SET FOREIGN_KEY_CHECKS = 0;

-- ----------------------------

-- Table structure for ke_alarm_clusters

-- ----------------------------

DROP TABLE IF EXISTS `ke_alarm_clusters`;

CREATE TABLE `ke_alarm_clusters` (

`id` bigint(0) NOT NULL AUTO_INCREMENT,

`type` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`cluster` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`server` text CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL,

`alarm_group` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`alarm_times` int(0) NULL DEFAULT NULL,

`alarm_max_times` int(0) NULL DEFAULT NULL,

`alarm_level` varchar(4) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`is_normal` varchar(2) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT 'Y',

`is_enable` varchar(2) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT 'Y',

`created` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`modify` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

PRIMARY KEY (`id`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Table structure for ke_alarm_config

-- ----------------------------

DROP TABLE IF EXISTS `ke_alarm_config`;

CREATE TABLE `ke_alarm_config` (

`cluster` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

`alarm_group` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

`alarm_type` varchar(16) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`alarm_url` text CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL,

`http_method` varchar(16) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`alarm_address` text CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL,

`created` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`modify` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

PRIMARY KEY (`cluster`, `alarm_group`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Table structure for ke_alarm_consumer

-- ----------------------------

DROP TABLE IF EXISTS `ke_alarm_consumer`;

CREATE TABLE `ke_alarm_consumer` (

`id` bigint(0) NOT NULL AUTO_INCREMENT,

`cluster` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`group` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`topic` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`lag` bigint(0) NULL DEFAULT NULL,

`alarm_group` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`alarm_times` int(0) NULL DEFAULT NULL,

`alarm_max_times` int(0) NULL DEFAULT NULL,

`alarm_level` varchar(4) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`is_normal` varchar(2) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT 'Y',

`is_enable` varchar(2) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT 'Y',

`created` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`modify` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

PRIMARY KEY (`id`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Table structure for ke_alarm_crontab

-- ----------------------------

DROP TABLE IF EXISTS `ke_alarm_crontab`;

CREATE TABLE `ke_alarm_crontab` (

`id` bigint(0) NOT NULL,

`type` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

`crontab` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`is_enable` varchar(2) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT 'Y',

`created` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`modify` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

PRIMARY KEY (`id`, `type`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Table structure for ke_connect_config

-- ----------------------------

DROP TABLE IF EXISTS `ke_connect_config`;

CREATE TABLE `ke_connect_config` (

`id` bigint(0) NOT NULL AUTO_INCREMENT,

`cluster` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`connect_uri` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`version` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`alive` varchar(16) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`created` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`modify` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

PRIMARY KEY (`id`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Table structure for ke_consumer_bscreen_press

-- ----------------------------

DROP TABLE IF EXISTS `ke_consumer_bscreen_press`;

CREATE TABLE `ke_consumer_bscreen_press` (

`id` bigint unsigned NOT NULL,

`cluster` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`group` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`topic` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`logsize` bigint(0) NULL DEFAULT NULL,

`difflogsize` bigint(0) NULL DEFAULT NULL,

`offsets` bigint(0) NULL DEFAULT NULL,

`diffoffsets` bigint(0) NULL DEFAULT NULL,

`lag` bigint(0) NULL DEFAULT NULL,

`timespan` bigint(0) NULL DEFAULT NULL,

`tm` varchar(16) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

PRIMARY KEY (`id`) USING BTREE,

INDEX `idx_timespan`(`timespan`) USING BTREE,

INDEX `idx_tm_cluster_diffoffsets`(`tm`, `cluster`, `diffoffsets`) USING BTREE

) ENGINE = InnoDB AUTO_INCREMENT = 1 CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Table structure for ke_consumer_group

-- ----------------------------

DROP TABLE IF EXISTS `ke_consumer_group`;

CREATE TABLE `ke_consumer_group` (

`cluster` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

`group` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

`topic` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

`status` int(0) NULL DEFAULT NULL,

PRIMARY KEY (`cluster`, `group`, `topic`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Table structure for ke_consumer_group_summary

-- ----------------------------

DROP TABLE IF EXISTS `ke_consumer_group_summary`;

CREATE TABLE `ke_consumer_group_summary` (

`cluster` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

`group` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

`topic_number` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

`coordinator` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`active_topic` int(0) NULL DEFAULT NULL,

`active_thread_total` int(0) NULL DEFAULT NULL,

PRIMARY KEY (`cluster`, `group`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Table structure for ke_logsize

-- ----------------------------

DROP TABLE IF EXISTS `ke_logsize`;

CREATE TABLE `ke_logsize` (

`cluster` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`topic` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`logsize` bigint(0) NULL DEFAULT NULL,

`diffval` bigint(0) NULL DEFAULT NULL,

`timespan` bigint(0) NULL DEFAULT NULL,

`tm` varchar(16) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

INDEX `idx_timespan`(`timespan`) USING BTREE,

INDEX `idx_tm_topic`(`tm`, `topic`) USING BTREE,

INDEX `idx_tm_cluster_diffval`(`tm`, `cluster`, `diffval`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Table structure for ke_metrics

-- ----------------------------

DROP TABLE IF EXISTS `ke_metrics`;

CREATE TABLE `ke_metrics` (

`cluster` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`broker` text CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL,

`type` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`key` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`value` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`timespan` bigint(0) NULL DEFAULT NULL,

`tm` varchar(16) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

INDEX `idx_tm_cluster_all`(`cluster`, `type`, `key`, `timespan`, `tm`) USING BTREE,

INDEX `idx_tm_cluster_key`(`cluster`, `type`, `key`, `tm`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Table structure for ke_metrics_offline

-- ----------------------------

DROP TABLE IF EXISTS `ke_metrics_offline`;

CREATE TABLE `ke_metrics_offline` (

`cluster` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

`key` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

`one` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`mean` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`five` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`fifteen` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

PRIMARY KEY (`cluster`, `key`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Table structure for ke_p_role

-- ----------------------------

DROP TABLE IF EXISTS `ke_p_role`;

CREATE TABLE `ke_p_role` (

`id` bigint(0) NOT NULL AUTO_INCREMENT,

`name` varchar(64) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL COMMENT 'role name',

`seq` tinyint(0) NOT NULL COMMENT 'rank',

`description` varchar(128) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL COMMENT 'role describe',

PRIMARY KEY (`id`) USING BTREE

) ENGINE = InnoDB AUTO_INCREMENT = 4 CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Records of ke_p_role

-- ----------------------------

INSERT INTO `ke_p_role` VALUES (1, 'Administrator', 1, 'Have all permissions');

INSERT INTO `ke_p_role` VALUES (2, 'Devs', 2, 'Own add or delete');

INSERT INTO `ke_p_role` VALUES (3, 'Tourist', 3, 'Only viewer');

-- ----------------------------

-- Table structure for ke_resources

-- ----------------------------

DROP TABLE IF EXISTS `ke_resources`;

CREATE TABLE `ke_resources` (

`resource_id` bigint(0) NOT NULL AUTO_INCREMENT,

`name` varchar(255) CHARACTER SET utf8 COLLATE utf8_general_ci NOT NULL COMMENT 'resource name',

`url` varchar(255) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

`parent_id` int(0) NOT NULL,

PRIMARY KEY (`resource_id`) USING BTREE

) ENGINE = InnoDB AUTO_INCREMENT = 25 CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Records of ke_resources

-- ----------------------------

INSERT INTO `ke_resources` VALUES (1, 'System', '/system', -1);

INSERT INTO `ke_resources` VALUES (2, 'User', '/system/user', 1);

INSERT INTO `ke_resources` VALUES (3, 'Role', '/system/role', 1);

INSERT INTO `ke_resources` VALUES (4, 'Resource', '/system/resource', 1);

INSERT INTO `ke_resources` VALUES (5, 'Notice', '/system/notice', 1);

INSERT INTO `ke_resources` VALUES (6, 'Topic', '/topic', -1);

INSERT INTO `ke_resources` VALUES (7, 'Message', '/topic/message', 6);

INSERT INTO `ke_resources` VALUES (8, 'Create', '/topic/create', 6);

INSERT INTO `ke_resources` VALUES (9, 'Alarm', '/alarm', -1);

INSERT INTO `ke_resources` VALUES (10, 'Add', '/alarm/add', 9);

INSERT INTO `ke_resources` VALUES (11, 'Modify', '/alarm/modify', 9);

INSERT INTO `ke_resources` VALUES (12, 'Cluster', '/cluster', -1);

INSERT INTO `ke_resources` VALUES (13, 'ZkCli', '/cluster/zkcli', 12);

INSERT INTO `ke_resources` VALUES (14, 'UserDelete', '/system/user/delete', 1);

INSERT INTO `ke_resources` VALUES (15, 'UserModify', '/system/user/modify', 1);

INSERT INTO `ke_resources` VALUES (16, 'Mock', '/topic/mock', 6);

INSERT INTO `ke_resources` VALUES (18, 'Create', '/alarm/create', 9);

INSERT INTO `ke_resources` VALUES (19, 'History', '/alarm/history', 9);

INSERT INTO `ke_resources` VALUES (20, 'Manager', '/topic/manager', 6);

INSERT INTO `ke_resources` VALUES (21, 'PasswdReset', '/system/user/reset', 1);

INSERT INTO `ke_resources` VALUES (22, 'Config', '/alarm/config', 9);

INSERT INTO `ke_resources` VALUES (23, 'List', '/alarm/list', 9);

INSERT INTO `ke_resources` VALUES (24, 'Hub', '/topic/hub', 6);

-- ----------------------------

-- Table structure for ke_role_resource

-- ----------------------------

DROP TABLE IF EXISTS `ke_role_resource`;

CREATE TABLE `ke_role_resource` (

`id` bigint(0) NOT NULL AUTO_INCREMENT,

`role_id` int(0) NOT NULL,

`resource_id` int(0) NOT NULL,

PRIMARY KEY (`id`) USING BTREE

) ENGINE = InnoDB AUTO_INCREMENT = 26 CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Records of ke_role_resource

-- ----------------------------

INSERT INTO `ke_role_resource` VALUES (1, 1, 1);

INSERT INTO `ke_role_resource` VALUES (2, 1, 2);

INSERT INTO `ke_role_resource` VALUES (3, 1, 3);

INSERT INTO `ke_role_resource` VALUES (4, 1, 4);

INSERT INTO `ke_role_resource` VALUES (5, 1, 5);

INSERT INTO `ke_role_resource` VALUES (6, 1, 7);

INSERT INTO `ke_role_resource` VALUES (7, 1, 8);

INSERT INTO `ke_role_resource` VALUES (8, 1, 10);

INSERT INTO `ke_role_resource` VALUES (9, 1, 11);

INSERT INTO `ke_role_resource` VALUES (10, 1, 13);

INSERT INTO `ke_role_resource` VALUES (11, 2, 7);

INSERT INTO `ke_role_resource` VALUES (12, 2, 8);

INSERT INTO `ke_role_resource` VALUES (13, 2, 13);

INSERT INTO `ke_role_resource` VALUES (14, 2, 10);

INSERT INTO `ke_role_resource` VALUES (15, 2, 11);

INSERT INTO `ke_role_resource` VALUES (16, 1, 14);

INSERT INTO `ke_role_resource` VALUES (17, 1, 15);

INSERT INTO `ke_role_resource` VALUES (18, 1, 16);

INSERT INTO `ke_role_resource` VALUES (19, 1, 18);

INSERT INTO `ke_role_resource` VALUES (20, 1, 19);

INSERT INTO `ke_role_resource` VALUES (21, 1, 20);

INSERT INTO `ke_role_resource` VALUES (22, 1, 21);

INSERT INTO `ke_role_resource` VALUES (23, 1, 22);

INSERT INTO `ke_role_resource` VALUES (24, 1, 23);

INSERT INTO `ke_role_resource` VALUES (25, 1, 24);

-- ----------------------------

-- Table structure for ke_sql_history

-- ----------------------------

DROP TABLE IF EXISTS `ke_sql_history`;

CREATE TABLE `ke_sql_history` (

`id` bigint(0) NOT NULL AUTO_INCREMENT,

`cluster` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`username` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`host` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`ksql` text CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL,

`status` varchar(16) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`spend_time` bigint(0) NULL DEFAULT NULL,

`created` varchar(32) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

`tm` varchar(16) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NULL DEFAULT NULL,

PRIMARY KEY (`id`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Table structure for ke_topic_rank

-- ----------------------------

DROP TABLE IF EXISTS `ke_topic_rank`;

CREATE TABLE `ke_topic_rank` (

`cluster` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

`topic` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

`tkey` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

`tvalue` bigint(0) NULL DEFAULT NULL,

PRIMARY KEY (`cluster`, `topic`, `tkey`) USING BTREE

) ENGINE = InnoDB CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Table structure for ke_user_role

-- ----------------------------

DROP TABLE IF EXISTS `ke_user_role`;

CREATE TABLE `ke_user_role` (

`id` bigint(0) NOT NULL AUTO_INCREMENT,

`user_id` int(0) NOT NULL,

`role_id` tinyint(0) NOT NULL,

PRIMARY KEY (`id`) USING BTREE

) ENGINE = InnoDB AUTO_INCREMENT = 2 CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Records of ke_user_role

-- ----------------------------

INSERT INTO `ke_user_role` VALUES (1, 1, 1);

-- ----------------------------

-- Table structure for ke_users

-- ----------------------------

DROP TABLE IF EXISTS `ke_users`;

CREATE TABLE `ke_users` (

`id` bigint(0) NOT NULL AUTO_INCREMENT,

`rtxno` int(0) NOT NULL,

`username` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

`password` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

`email` varchar(64) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

`realname` varchar(128) CHARACTER SET utf8mb4 COLLATE utf8mb4_0900_ai_ci NOT NULL,

PRIMARY KEY (`id`) USING BTREE

) ENGINE = InnoDB AUTO_INCREMENT = 2 CHARACTER SET = utf8mb4 COLLATE = utf8mb4_0900_ai_ci ROW_FORMAT = Dynamic;

-- ----------------------------

-- Records of ke_users

-- ----------------------------

INSERT INTO `ke_users` VALUES (1, 1000, 'admin', '123456', 'admin@email.com', 'Administrator');

-- ----------------------------

-- View structure for ke_topic_consumer_group_summary_view

-- ----------------------------

DROP VIEW IF EXISTS `ke_topic_consumer_group_summary_view`;

CREATE ALGORITHM = UNDEFINED DEFINER = `root`@`localhost` SQL SECURITY DEFINER VIEW `ke_topic_consumer_group_summary_view` AS select `ke_consumer_group`.`cluster` AS `cluster`,`ke_consumer_group`.`topic` AS `topic`,count(distinct `ke_consumer_group`.`group`) AS `group_number`,count(distinct `ke_consumer_group`.`group`) AS `active_group` from `ke_consumer_group` where (`ke_consumer_group`.`status` = 0) group by `ke_consumer_group`.`cluster`,`ke_consumer_group`.`topic`;

SET FOREIGN_KEY_CHECKS = 1;
