利用sklearn中的load_breast_cancer数据集进行对数几率回归分类

先导入一下数据,再把矩阵调整成按列存储每个样本的形式

cancer = datasets.load_breast_cancer()

X,y = cancer['data'], cancer['target']

X = StandardScaler().fit_transform(X)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=.3, random_state=42)

m = X_train.shape[0]

m_test = X_test.shape[0]

n = X_train.shape[1]

X_train = np.array(X_train)

x = np.concatenate((X_train,np.ones((m,1),dtype=X_train.dtype)), axis=1).T

beta = np.zeros((n+1,1))

y_train.reshape(1,m)

定义sigmoid函数 S ( z ) = 1 1 + e − z S(z)=\frac{1}{1+e^{-z}} S(z)=1+e−z1

def Si(z):

return 1/(1+np.exp(-z))

强制确定牛顿迭代法的迭代深度,再进行迭代。老师的课件上给出的是累加的形式,但是一阶导可以用矩阵的形式表达,二阶导就是一个向量乘自己的转置,可以把 p 1 ( 1 − p 1 ) p_1(1-p_1) p1(1−p1)看作是每一项的系数,不妨把 p 1 , i p_{1,i} p1,i升为一个对角矩阵,然后再利用矩阵实现。

Max_Ite = 50

for t in range(Max_Ite):

p1 = Si(np.dot(beta.T, x))

grad = np.dot(x,(p1-y_train).T)

gg2 = np.dot(x, np.dot(np.diag((p1-p1*p1).reshape(m,)),x.T))

beta = beta - np.linalg.solve(gg2,grad)

#print("beta=", beta.T)

最后可以把 β = < ω , b > \beta=<\omega, b> β=<ω,b>打出来

利用对率回归进行预测,并检测准确性

y_pred = np.empty_like(y_test)

X_test = np.concatenate((X_test,np.ones((m_test,1),dtype=X_test.dtype)), axis=1).T

l = Si(np.dot(beta.T, X_test)).flatten()

for i in range(m_test):

if l[i]>0.5:

y_pred[i] = 1

else:

y_pred[i] = 0

print('precision: ', accuracy_score(y_test, y_pred))

但是神奇的是运行的中间结果爆掉了,算出来的 β \beta β不收敛。这组数据的大小是

print("shape=", n, m)

shape= 30 398

然后我就把每个数据第26个特征起以后的属性都不要了,也就是:

n = 25

X_train = X_train[:,0:n]

X_test = X_test[:,0:n]

最终得到的结果也还行:

precision: 0.9532163742690059

以下是完整代码:

#!/usr/bin/env python

# coding: utf-8

import numpy as np

from sklearn import datasets, linear_model

from sklearn.metrics import accuracy_score

from sklearn.preprocessing import StandardScaler

from sklearn.model_selection import train_test_split

# import data

cancer = datasets.load_breast_cancer()

X,y = cancer['data'], cancer['target']

X = StandardScaler().fit_transform(X)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=.3, random_state=42)

n = 25

X_train = X_train[:,0:n]

X_test = X_test[:,0:n]

m = X_train.shape[0]

m_test = X_test.shape[0]

n = X_train.shape[1]

X_train = np.array(X_train)

x = np.concatenate((X_train,np.ones((m,1),dtype=X_train.dtype)), axis=1).T

beta = np.zeros((n+1,1))

y_train.reshape(1,m)

#print("shape=", n, m)

def Si(z):

return 1/(1+np.exp(-z))

Max_Ite = 50

for t in range(Max_Ite):

p1 = Si(np.dot(beta.T, x))

grad = np.dot(x,(p1-y_train).T)

gg2 = np.dot(x, np.dot(np.diag((p1-p1*p1).reshape(m,)),x.T))

beta = beta - np.linalg.solve(gg2,grad)

#print("beta=", beta.T)

y_pred = np.empty_like(y_test)

X_test = np.concatenate((X_test,np.ones((m_test,1),dtype=X_test.dtype)), axis=1).T

l = Si(np.dot(beta.T, X_test)).flatten()

for i in range(m_test):

if l[i]>0.5:

y_pred[i] = 1

else:

y_pred[i] = 0

print('precision: ', accuracy_score(y_test, y_pred))

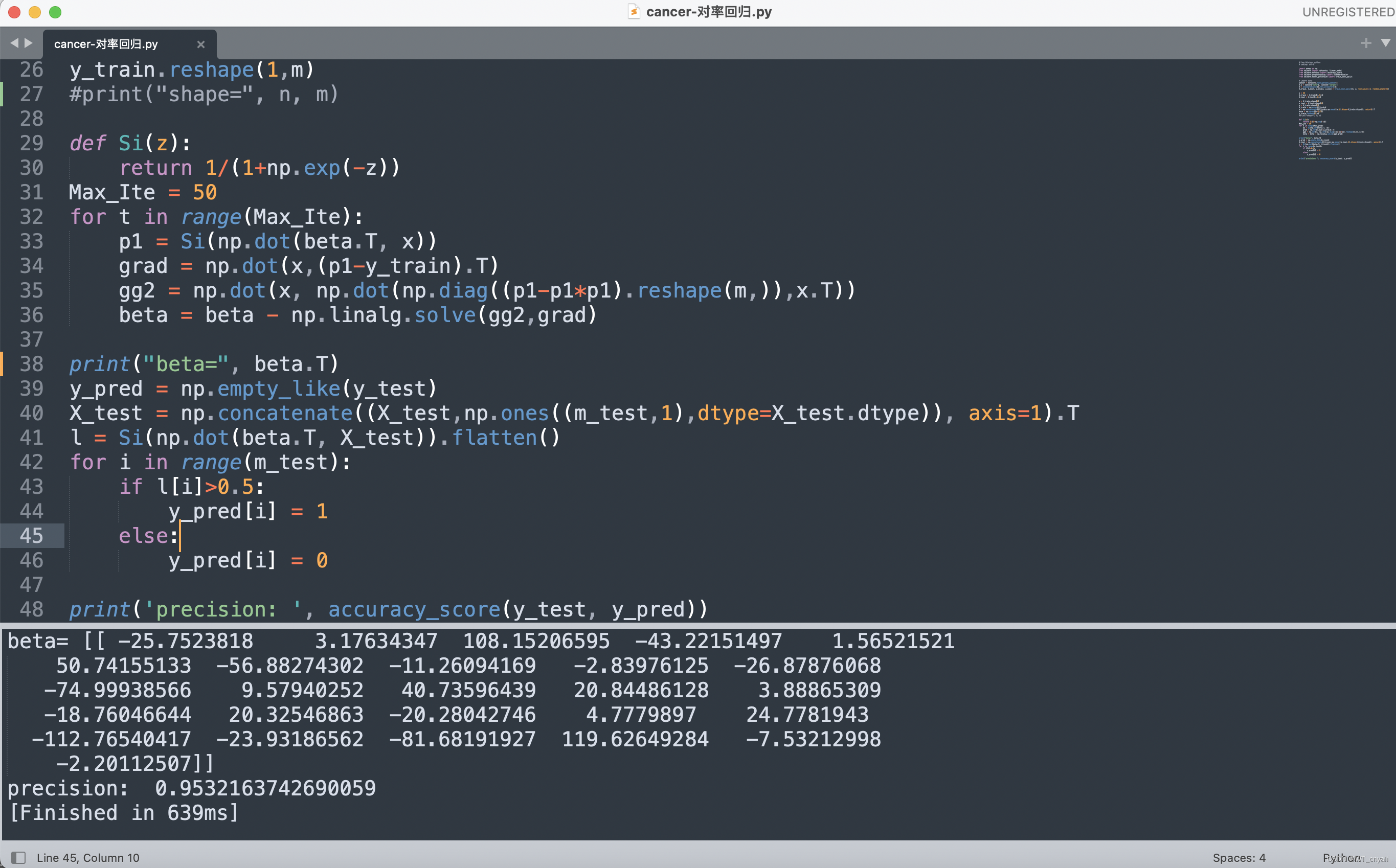

输出的 β \beta β和准确度为

beta= [[ -25.7523818 3.17634347 108.15206595 -43.22151497 1.56521521

50.74155133 -56.88274302 -11.26094169 -2.83976125 -26.87876068

-74.99938566 9.57940252 40.73596439 20.84486128 3.88865309

-18.76046644 20.32546863 -20.28042746 4.7779897 24.7781943

-112.76540417 -23.93186562 -81.68191927 119.62649284 -7.53212998

-2.20112507]]

precision: 0.9532163742690059

截图如下

1万+

1万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?