1. Important basic functions

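dir(): lists what a package or object contains (its submodules, functions and attributes)

help(): shows the documentation of a function or object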
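A minimal sketch of the usual exploration pattern, using torch.cuda as the package to inspect (any package works the same way): dir() narrows things down to a function, then help() explains it.

```python
import torch

# dir() lists the names available inside a package or object
names = dir(torch.cuda)
print("is_available" in names)

# help() prints the docstring of a single function
help(torch.cuda.is_available)
```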

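Dataset: provides a way to get each data sample and its corresponding label

DataLoader: packs the data into the form the network needs later (batches, optionally shuffled)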
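The sketch below shows how the two pieces fit together. ToyDataset is a hypothetical in-memory dataset made up for illustration (a real image dataset would instead read files from disk, for example taking each label from the folder name). A Dataset subclass only needs __getitem__ and __len__; DataLoader then groups the samples into batches.

```python
import torch
from torch.utils.data import Dataset, DataLoader

class ToyDataset(Dataset):
    """Hypothetical dataset: sample i is a 3-value feature vector, its label is i % 2."""

    def __init__(self, n=10):
        self.features = torch.arange(n * 3, dtype=torch.float32).reshape(n, 3)
        self.labels = torch.arange(n) % 2

    def __getitem__(self, idx):
        # return one (data, label) pair
        return self.features[idx], self.labels[idx]

    def __len__(self):
        return len(self.labels)

dataset = ToyDataset(10)
x, y = dataset[3]  # a single sample and its label
loader = DataLoader(dataset, batch_size=4, shuffle=False)
batches = [(xb.shape, yb.shape) for xb, yb in loader]  # 10 samples -> batches of 4, 4, 2
print(batches)
```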

2. TensorBoard

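SummaryWriter("logs"): creates a "logs" folder under the current working directory, where the event files are written

When an image is converted from PIL to NumPy, the array has shape (H, W, C), while add_image() expects (C, H, W) by default, so you must tell add_image() what each dimension means with dataformats='HWC'.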

from torch.utils.tensorboard import SummaryWriter
import numpy as np
from PIL import Image

writer = SummaryWriter("logs")
img_path = "D:\\Data\\hymenoptera_data\\train\\ants\\0013035.jpg"
img_PIL = Image.open(img_path)
img_array = np.array(img_PIL)  # NumPy array in (H, W, C) layout
writer.add_image("test", img_array, 1, dataformats='HWC')  # tag, image, global_step
for i in range(100):
    writer.add_scalar("y=4x", 4 * i, i)  # tag, y value, x value (global_step)
writer.close()

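Run tensorboard --logdir logs in a terminal, then open the URL it prints (by default http://localhost:6006/) to view the curves; use --port to choose a different port.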
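To see the layout issue without the ant photo, this sketch uses a random HWC array as a stand-in and logs it twice into a temporary directory: once with dataformats='HWC', and once after transposing it to the default CHW layout. The image size and tags are arbitrary choices for illustration.

```python
import tempfile

import numpy as np
from torch.utils.tensorboard import SummaryWriter

# Random 48x64 RGB image in HWC layout, like np.array(PIL_image) would return
img_hwc = np.random.randint(0, 256, size=(48, 64, 3), dtype=np.uint8)
# Move the channel axis to the front to get CHW, the default layout of add_image
img_chw = img_hwc.transpose(2, 0, 1)

log_dir = tempfile.mkdtemp()
writer = SummaryWriter(log_dir)
writer.add_image("hwc", img_hwc, 0, dataformats="HWC")  # layout must be declared
writer.add_image("chw", img_chw, 0)                     # default dataformats="CHW"
writer.close()
print(img_hwc.shape, img_chw.shape)
```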

3. transforms

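How transforms is structured and used: transforms works like a toolbox. You create a concrete tool by instantiating one of its classes (e.g. transforms.ToTensor()), then pass an image through that object to get the transformed result. It is intended for image data.

When using it, pay attention to the input and output types, read the official documentation, and check which arguments each method needs. When you are unsure of a type, use print(), print(type(...)), or step through the code in a debugger.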

from PIL import Image
from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms

img_path = "D:\\Data\\hymenoptera_data\\train\\ants\\0013035.jpg"
img = Image.open(img_path)
writer = SummaryWriter("logs1")

# ToTensor: PIL image (values 0-255) -> float tensor (C, H, W) with values 0-1
trans_ten = transforms.ToTensor()
tensor_img = trans_ten(img)
writer.add_image("tensor_img", tensor_img)

# Normalize: output = (input - mean) / std per channel; here [0, 1] becomes [-1, 1]
trans_norm = transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
img_norm = trans_norm(tensor_img)
writer.add_image("Normalize", img_norm, 0)

# Resize to an exact (height, width)
trans_resize = transforms.Resize((512, 512))
img_resize = trans_resize(img)  # PIL -> Resize -> PIL (torchvision >= 0.8 also accepts tensors)
img_resize = trans_ten(img_resize)  # PIL -> tensor
writer.add_image("Resize", img_resize, 0)

# Compose, Resize - 2: an int scales the shorter side to 512 and keeps the aspect ratio
trans_resize_2 = transforms.Resize(512)
trans_compose = transforms.Compose([trans_resize_2, trans_ten])  # applied in list order
img_resize_2 = trans_compose(img)
writer.add_image("Resize", img_resize_2, 1)

# RandomCrop: a random 512x512 patch on every call (the image must be at least 512x512)
trans_random = transforms.RandomCrop(512)
trans_compose_2 = transforms.Compose([trans_random, trans_ten])
for i in range(10):
    img_crop = trans_compose_2(img)
    writer.add_image("RandomCrop", img_crop, i)
writer.close()

4. Using torchvision datasets together with transforms (dataset)

import torchvision
from torch.utils.tensorboard import SummaryWriter

dataset_transforms = torchvision.transforms.Compose([
    torchvision.transforms.ToTensor()
])
train_set = torchvision.datasets.CIFAR10(root="./dataset", train=True, transform=dataset_transforms, download=True)
test_set = torchvision.datasets.CIFAR10(root="./dataset", train=False, transform=dataset_transforms, download=True)

# print(test_set[0])
# img, target = test_set[0]
# print(img)
# print(target)
# print(test_set.classes[target])
# img.show()  # only works without the ToTensor transform, i.e. while img is still a PIL image

writer = SummaryWriter("logs")
for i in range(10):
    img, target = test_set[i]  # each item is an (image, class index) pair
    writer.add_image("test_set", img, i)
writer.close()

5. Using DataLoader

import torchvision.datasets
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

test_data = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor())
# batch_size: samples per batch; shuffle: new order every epoch; drop_last: discard the final incomplete batch
test_loader = DataLoader(dataset=test_data, batch_size=24, shuffle=True, num_workers=0, drop_last=False)

img, target = test_data[0]
print(img.shape)  # torch.Size([3, 32, 32])
print(target)

writer = SummaryWriter("logs1")
for epoch in range(2):
    step = 0
    for data in test_loader:
        imgs, targets = data  # imgs: (24, 3, 32, 32), targets: (24,)
        # print(imgs.shape)
        # print(targets)
        writer.add_images("Epoch: {}".format(epoch), imgs, step)  # add_images logs a whole batch
        step += 1
writer.close()

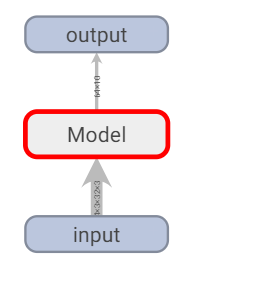
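The drop_last flag matters because the CIFAR10 test set has 10000 images and 10000 = 416 × 24 + 16. A sketch with a dummy TensorDataset of the same size shows the resulting number of batches:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# 10000 dummy samples, the same count as the CIFAR10 test split
data = TensorDataset(torch.arange(10000))
keep = DataLoader(data, batch_size=24, drop_last=False)  # keeps the short final batch
drop = DataLoader(data, batch_size=24, drop_last=True)   # discards it

last_batch = list(keep)[-1][0]  # each batch is a list holding one tensor
print(len(keep), len(drop), last_batch.shape)
```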

6. A simple nn.Module example

import torch
from torch import nn

class Model(nn.Module):
    def __init__(self):  # in PyCharm this can be generated with Code → Generate → ...
        super().__init__()

    def forward(self, input):
        output = input + 1
        return output

model = Model()
x = torch.tensor(1)
output = model(x)  # calling the instance runs __call__, which calls forward()
print(output)
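model(x) and model.forward(x) return the same value here, but calling the instance is the idiomatic form because nn.Module.__call__ also runs any registered hooks around forward. A small sketch with a forward hook makes the difference visible (AddOne is a stand-in for the Model class above):

```python
import torch
from torch import nn

class AddOne(nn.Module):
    def forward(self, x):
        return x + 1

model = AddOne()
calls = []
# A forward hook only fires when the module is invoked through __call__, i.e. model(x)
model.register_forward_hook(lambda module, inp, out: calls.append(out.item()))

x = torch.tensor(1)
via_call = model(x)             # __call__ -> forward, hook runs
via_forward = model.forward(x)  # forward only, hook is skipped
print(via_call, via_forward, calls)
```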

7. A simple convolution operation

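import torch
import torch.nn.functional as F

# float32: conv2d on integer (Long) tensors raises an error in many PyTorch versions
input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)
kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]], dtype=torch.float32)

# input: (batch, channels, height, width); kernel: (out_channels, in_channels, kH, kW)
input = torch.reshape(input, (1, 1, 5, 5))
kernel = torch.reshape(kernel, (1, 1, 3, 3))
print(input.shape)
print(kernel.shape)

output = F.conv2d(input, kernel, stride=1)  # 3x3 output
print(output)
output2 = F.conv2d(input, kernel, stride=2)  # 2x2 output
print(output2)
output3 = F.conv2d(input, kernel, stride=1, padding=1)  # zero padding of 1 keeps 5x5
print(output3)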
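The output sizes follow H_out = floor((H_in + 2·padding - kernel_size) / stride) + 1 (with dilation 1), which gives 3×3, 2×2 and 5×5 for the three calls above. The sketch checks that formula and re-implements the unpadded case with explicit loops. Note that conv2d in PyTorch is a cross-correlation (the kernel is not flipped); conv_out_size and naive_conv2d are helpers written only for this example.

```python
import torch
import torch.nn.functional as F

def conv_out_size(n, k, stride=1, padding=0):
    # floor((n + 2*padding - k) / stride) + 1, assuming dilation = 1
    return (n + 2 * padding - k) // stride + 1

def naive_conv2d(x, w, stride=1):
    """Cross-correlation of one 2-D map x with one 2-D kernel w, no padding."""
    kh, kw = w.shape
    oh = conv_out_size(x.shape[0], kh, stride)
    ow = conv_out_size(x.shape[1], kw, stride)
    out = torch.zeros(oh, ow)
    for i in range(oh):
        for j in range(ow):
            # element-wise product of the window and the kernel, then sum
            patch = x[i * stride:i * stride + kh, j * stride:j * stride + kw]
            out[i, j] = (patch * w).sum()
    return out

x = torch.tensor([[1, 2, 0, 3, 1], [0, 1, 2, 3, 1], [1, 2, 1, 0, 0],
                  [5, 2, 3, 1, 1], [2, 1, 0, 1, 1]], dtype=torch.float32)
w = torch.tensor([[1, 2, 1], [0, 1, 0], [2, 1, 0]], dtype=torch.float32)

for stride in (1, 2):
    ref = F.conv2d(x.reshape(1, 1, 5, 5), w.reshape(1, 1, 3, 3), stride=stride)[0, 0]
    print(stride, torch.allclose(ref, naive_conv2d(x, w, stride)))
```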

8. Applying 2-D convolution (Conv2d)

import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=64)

class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x

model = Model()
print(model)

writer = SummaryWriter("logs")
step = 0
for data in dataloader:
    imgs, targets = data
    output = model(imgs)  # model(imgs) goes through __call__, which calls forward
    # torch.Size([64, 3, 32, 32])
    print(imgs.shape)
    # torch.Size([64, 6, 30, 30])
    # print(output.shape)
    writer.add_images("input", imgs, step)
    # TensorBoard can only display 1- or 3-channel images:
    # torch.Size([64, 6, 30, 30]) -> [xxx, 3, 30, 30]
    output = torch.reshape(output, (-1, 3, 30, 30))
    print(output.shape)
    writer.add_images("output", output, step)
    step = step + 1
writer.close()
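The shape bookkeeping from the loop above, reproduced on a random batch: a 3×3 kernel without padding shrinks 32 to 30, and regrouping 6 channels into sets of 3 doubles the batch dimension (64 × 6 / 3 = 128). The reshape only serves visualization; it does not turn the feature maps into real RGB images.

```python
import torch
from torch.nn import Conv2d

conv = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)
imgs = torch.rand(64, 3, 32, 32)  # random stand-in for one CIFAR10 batch
out = conv(imgs)                  # (32 - 3) / 1 + 1 = 30 -> (64, 6, 30, 30)
as_rgb = torch.reshape(out, (-1, 3, 30, 30))  # 64 * 6 / 3 = 128 "images"
print(out.shape, as_rgb.shape)
```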

9. Max pooling: keeps the main features of the image while reducing the amount of data

import torch
import torchvision
from torch import nn
from torch.nn import MaxPool2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=64)

# input = torch.tensor([[1, 2, 0, 3, 1],
#                       [0, 1, 2, 3, 1],
#                       [1, 2, 1, 0, 0],
#                       [5, 2, 3, 1, 1],
#                       [2, 1, 0, 1, 1]], dtype=torch.float32)
# input = torch.reshape(input, (-1, 1, 5, 5))
# print(input.shape)

class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        # stride defaults to kernel_size; ceil_mode=True keeps partial windows at the border
        self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=True)

    def forward(self, input):
        output = self.maxpool1(input)
        return output

model = Model()
writer = SummaryWriter("logs1")
step = 0
for data in dataloader:
    imgs, targets = data
    writer.add_images("imgs", imgs, step)
    output = model(imgs)
    writer.add_images("output", output, step)  # no reshape needed: pooling keeps the number of channels
    step += 1
writer.close()
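Running the pooling layer on the commented-out 5×5 test input shows what ceil_mode does. With kernel_size=3 the stride is also 3, so only one full window fits; ceil_mode=True additionally keeps the partial windows at the right and bottom edges. The input is float32, like the commented-out tensor.

```python
import torch
from torch.nn import MaxPool2d

x = torch.tensor([[1, 2, 0, 3, 1],
                  [0, 1, 2, 3, 1],
                  [1, 2, 1, 0, 0],
                  [5, 2, 3, 1, 1],
                  [2, 1, 0, 1, 1]], dtype=torch.float32).reshape(1, 1, 5, 5)

pool_ceil = MaxPool2d(kernel_size=3, ceil_mode=True)    # partial windows kept -> 2x2
pool_floor = MaxPool2d(kernel_size=3, ceil_mode=False)  # full windows only -> 1x1
print(pool_ceil(x))
print(pool_floor(x))
```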

10. Non-linear activations: ReLU and Sigmoid

import torch
from torch import nn
from torch.nn import ReLU, Sigmoid
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

input = torch.tensor([[1, -0.5],
                      [-1, 3]])
input = torch.reshape(input, (-1, 1, 2, 2))
print(input.shape)

dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=64)

class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.relu1 = ReLU()
        self.sigmoid = Sigmoid()

    def forward(self, input):
        output = self.sigmoid(input)  # replace with self.relu1(input) to try ReLU
        return output

model = Model()
# output = model(input)
# print(output)

writer = SummaryWriter("logs1")
step = 0
for data in dataloader:
    imgs, targets = data
    writer.add_images("imgs", imgs, step)
    output = model(imgs)
    writer.add_images("output", output, step)
    step += 1
writer.close()
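Applied to the small 2×2 input defined above, the two activations behave as follows: ReLU computes max(0, x) and zeroes the negative entries, while Sigmoid computes 1 / (1 + e^(-x)) and squashes every value into (0, 1). This is also why the Sigmoid output is the one logged for CIFAR10: tensors from ToTensor are already non-negative, so ReLU would leave them unchanged.

```python
import torch
from torch.nn import ReLU, Sigmoid

x = torch.tensor([[1, -0.5],
                  [-1, 3]]).reshape(-1, 1, 2, 2)
relu_out = ReLU()(x)             # max(0, x): negative values become 0
sig_out = Sigmoid()(x)           # 1 / (1 + exp(-x)): values squashed into (0, 1)
manual_sig = 1 / (1 + torch.exp(-x))
print(relu_out)
print(sig_out)
```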

11. Linear layers

import torch
from torch import nn
from torch.nn import ReLU, Sigmoid, Linear
import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(), download=True)
# drop_last=True: the final batch has only 16 images (10000 = 156 * 64 + 16),
# which would not match the 196608 input features of linear1
dataloader = DataLoader(dataset, batch_size=64, drop_last=True)

class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        self.linear1 = Linear(196608, 10)  # in_features = 64 * 3 * 32 * 32, out_features = 10

    def forward(self, input):
        output = self.linear1(input)
        return output

model = Model()
for data in dataloader:
    imgs, targets = data
    print(imgs.shape)  # torch.Size([64, 3, 32, 32])
    # output = torch.reshape(imgs, (1, 1, 1, -1))
    output = torch.flatten(imgs)  # flattens the whole batch into one vector: torch.Size([196608])
    print(output.shape)
    output = model(output)
    print(output.shape)  # torch.Size([10])

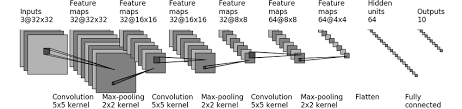
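torch.flatten(imgs) merges the whole batch into one vector, so the model above produces a single 10-value output per batch, and its 196608 input size depends on the batch size. A more typical sketch keeps the batch axis with start_dim=1 (nn.Flatten() does the same by default) and uses Linear(3 * 32 * 32, 10), giving one score vector per image:

```python
import torch
from torch import nn

imgs = torch.rand(64, 3, 32, 32)               # random stand-in for one CIFAR10 batch
whole = torch.flatten(imgs)                    # everything in one vector: (196608,)
per_sample = torch.flatten(imgs, start_dim=1)  # batch axis kept: (64, 3072)
classifier = nn.Linear(3 * 32 * 32, 10)
logits = classifier(per_sample)                # (64, 10): one score vector per image
print(whole.shape, per_sample.shape, logits.shape)
```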

12. Using Sequential (similar to Compose)

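A 1×1 convolution mixes the channels at each pixel independently, which is equivalent to applying the same fully connected layer at every spatial position; replacing fully connected layers with convolutions in this way is what gives a fully convolutional network. In the model below, Flatten reshapes the feature maps into one vector per image before the fully connected (Linear) layers. In practice, activation functions and BN (batch normalization) layers are often inserted between these layers; the model below omits them.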
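The equivalence between a 1×1 convolution and a per-pixel fully connected layer can be checked numerically. This sketch copies the weights of an arbitrary Conv2d(8, 4, kernel_size=1) into a Linear(8, 4) and applies the Linear layer to the channel axis of every pixel:

```python
import torch
from torch import nn

conv1x1 = nn.Conv2d(in_channels=8, out_channels=4, kernel_size=1)
linear = nn.Linear(8, 4)
# Copy the conv weights (shape 4x8x1x1) and bias into the linear layer (shape 4x8)
with torch.no_grad():
    linear.weight.copy_(conv1x1.weight.view(4, 8))
    linear.bias.copy_(conv1x1.bias)

x = torch.randn(2, 8, 5, 5)  # (N, C, H, W)
conv_out = conv1x1(x)        # (2, 4, 5, 5)
# Move channels last so Linear acts on C at every pixel, then move them back
lin_out = linear(x.permute(0, 2, 3, 1)).permute(0, 3, 1, 2)
print(torch.allclose(conv_out, lin_out, atol=1e-6))
```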

(Cifar model structure)

import torch
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.tensorboard import SummaryWriter

class Model(nn.Module):
    def __init__(self):
        super(Model, self).__init__()
        # Equivalent layer-by-layer version, replaced by the Sequential below:
        # self.conv1 = Conv2d(3, 32, 5, padding=2)
        # self.maxpool1 = MaxPool2d(2)
        # self.conv2 = Conv2d(32, 32, 5, padding=2)
        # self.maxpool2 = MaxPool2d(2)
        # self.conv3 = Conv2d(32, 64, 5, padding=2)
        # self.maxpool3 = MaxPool2d(2)
        # self.flatten = Flatten()
        # self.linear1 = Linear(1024, 64)
        # self.linear2 = Linear(64, 10)  # 10 classes; the predicted class is the one with the highest score
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        # x = self.conv1(x)
        # x = self.maxpool1(x)
        # x = self.conv2(x)
        # x = self.maxpool2(x)
        # x = self.conv3(x)
        # x = self.maxpool3(x)
        # x = self.flatten(x)
        # x = self.linear1(x)
        # x = self.linear2(x)
        x = self.model1(x)
        return x

model = Model()
print(model)

# Check the network structure with a dummy batch
input = torch.ones((64, 3, 32, 32))
output = model(input)
print(output.shape)  # torch.Size([64, 10])

writer = SummaryWriter("logs2")
writer.add_graph(model, input)  # shown under the GRAPHS tab in TensorBoard
writer.close()
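Why Linear(1024, 64)? With kernel_size=5 and padding=2 each convolution keeps the spatial size ((32 + 4 - 5) / 1 + 1 = 32), and each MaxPool2d(2) halves it: 32 → 16 → 8 → 4, so Flatten yields 64 × 4 × 4 = 1024 values per image. Because Sequential is iterable, the shapes can be traced layer by layer; this sketch rebuilds the same layer stack outside the Model class:

```python
import torch
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential

# Same layer stack as model1 above
net = Sequential(
    Conv2d(3, 32, 5, padding=2), MaxPool2d(2),
    Conv2d(32, 32, 5, padding=2), MaxPool2d(2),
    Conv2d(32, 64, 5, padding=2), MaxPool2d(2),
    Flatten(), Linear(1024, 64), Linear(64, 10),
)

x = torch.ones((64, 3, 32, 32))
shapes = []
for layer in net:  # run each layer by hand and record the output shape
    x = layer(x)
    shapes.append(tuple(x.shape))
    print(type(layer).__name__, tuple(x.shape))
```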

The resulting graph can be viewed in TensorBoard (tensorboard --logdir logs2); double-click a node to expand it.
