JSON数据样式

{"address":"中国.江苏.徐州","area":4300.21,"classess":[{"classesName":"地下矿山瓦斯检测实验室","num":10}],"level":"211","schoolName":"中国矿业大学","teachers":[{"name":"张院士","year":50},{"name":"王院士","year":60}]}

{"address":"中国.江苏.南京","area":1000.21,"classess":[{"classesName":"园林设计","num":20}],"level":"双一流","schoolName":"南京林业大学","teachers":[{"name":"张院士","year":50},{"name":"王院士","year":60}]}

数组 用schemaClass,schemaTeachers两个结构块去拆

import nj.yb.etllog.JdbcUtils

import org.apache.parquet.format.IntType

import org.apache.spark.rdd.RDD

import org.apache.spark.sql.functions.{explode, from_json, get_json_object}

import org.apache.spark.sql.types.{ArrayType, IntegerType, StringType, StructField, StructType}

import org.apache.spark.{SparkConf, SparkContext}

import org.apache.spark.sql.{DataFrame, SparkSession}

object SchoolJson {

def main(args: Array[String]): Unit = {

val conf: SparkConf = new SparkConf().setAppName("schooljson")

.setMaster("local[*]")

val spark: SparkSession = SparkSession.builder().config(conf)

.config("hive.metastore.uris", "thrift://192.168.78.143:9083")

.enableHiveSupport()

.getOrCreate()

val sc: SparkContext = spark.sparkContext

import spark.implicits._

val optionRDD: RDD[String] = sc.textFile("in/school.txt")

// optionRDD.collect()foreach(println)

val schooljson: DataFrame = optionRDD.toDF("school")

val schoolDF: DataFrame = schooljson.select(

get_json_object($"school", "$.address").as("address"),

get_json_object($"school", "$.classess").as("classess"),

get_json_object($"school", "$.level").as("level"),

get_json_object($"school", "$.teachers").as("teachers")

)

println("------------schoolDF--------------")

schoolDF.show(false)

val schemaClass: ArrayType = ArrayType(

StructType(

Array(

StructField("classesName", StringType),

StructField("num", IntegerType)

)

)

)

val schemaTeachers: ArrayType = ArrayType(

StructType(

Array(

StructField("name", StringType),

StructField("year", IntegerType)

)

)

)

// from_json($"et", schema).alias("events")

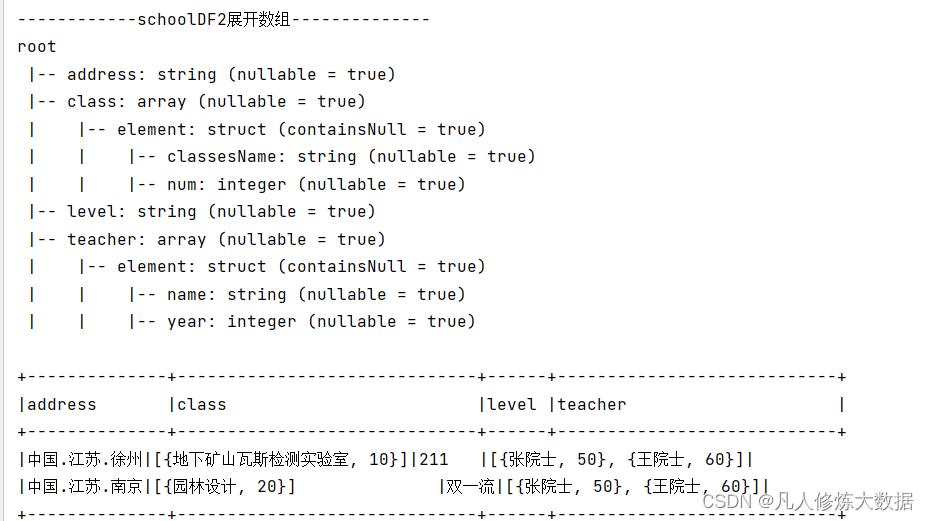

println("------------schoolDF2展开数组--------------")

val schoolDF2: DataFrame = schoolDF.select(

$"address",

from_json($"classess", schemaClass).as("class"),

$"level",

from_json($"teachers", schemaTeachers).as("teacher")

)

schoolDF2.printSchema()

schoolDF2.show(false)

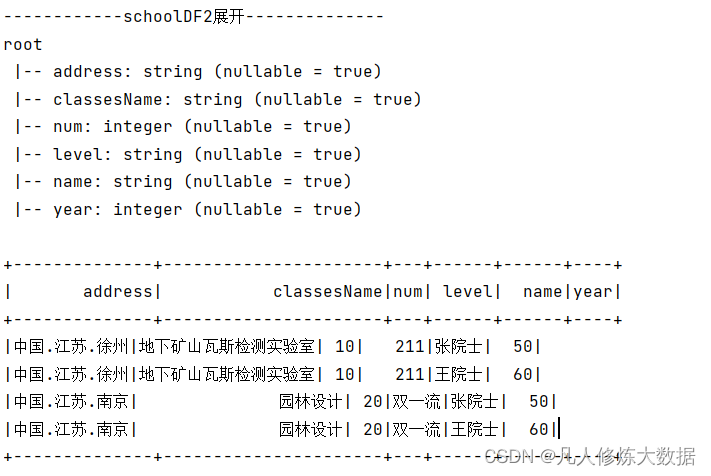

val schoolDF3: DataFrame = schoolDF2.withColumn("class", explode($"class"))

.withColumn("teacher", explode($"teacher")).select(

$"address",

$"class.*",

$"level",

$"teacher.*"

)

println("------------schoolDF2展开--------------")

schoolDF3.printSchema()

schoolDF3.show( )

JdbcUtils.dfToMysql(schoolDF3, "stu")

}

}

JdbcUtils如下 (连接数据库 hive配置)

import org.apache.spark.sql.{DataFrame, SaveMode, SparkSession}

import java.util.Properties

object JdbcUtils {

val url = "jdbc:mysql://192.168.78.141:3306/etclog"

val driver = "com.mysql.cj.jdbc.Driver"

val user = "root"

val password = "root"

val table_full_log: String = "full_log"//数据库命

private val prop = new Properties()

prop.setProperty("user", user)

prop.setProperty("password", password)

prop.setProperty("driver", driver)

/**

*

* @param df source DataFrame

* @param table target TableName

* @param op 0: Append 1:Overwrite

*/

def dfToMysql(df: DataFrame, table: String, op: Int = 1): Unit = {

if (op == 0) {

df.write.mode(SaveMode.Append).jdbc(url, table, prop)

} else if (op == 1) {

df.write.mode(SaveMode.Overwrite).jdbc(url, table, prop)

}

}

def dfToHive(df: DataFrame, table: String, op: Int = 1): Unit = {

if (op == 0) {

df.write.mode(SaveMode.Append).saveAsTable(table)

} else if (op == 1) {

df.write.mode(SaveMode.Overwrite).saveAsTable(table)

}

}

def dfToParquet(df: DataFrame, outPath: String, op: Int = 1): Unit = {

if (op == 0) {

df.write.mode(SaveMode.Append).parquet(outPath)

} else if (op == 1) {

df.write.mode(SaveMode.Overwrite).parquet(outPath)

}

}

def getDFfromMysql(spark: SparkSession, table: String): DataFrame = {

val frame: DataFrame = spark.read.jdbc(url, table, prop)

frame

}

def getDFfromHive(spark: SparkSession, table: String): DataFrame = {

val frame: DataFrame = spark.sql("select * from " + table)

frame

}

def getDFfromParquet(spark: SparkSession, path: String): DataFrame = {

val frame: DataFrame = spark.read.parquet(path)

frame

}

}

写入成功

436

436

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?