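After the application calls CameraManager#openCamera and receives the opened camera device, it calls createCaptureSession to create the camera streams and configure them. In createCaptureSession, the application's surfaces are first wrapped in OutputConfiguration objects, which can be passed across binder. createCaptureSessionInternal is then called to carry out the rest of the configuration.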

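createCaptureSessionInternal does three things:

1) It checks the camera state and whether a session already exists. If one does, the old session is shut down so that the new one can replace it.
2) It creates and configures the camera streams through configureStreamsChecked.
3) Depending on the isConstrainedHighSpeed flag, it creates one of two CameraCaptureSession implementations: CameraConstrainedHighSpeedCaptureSessionImpl for high-speed sessions, or CameraCaptureSessionImpl otherwise. The session constructor then reports the session object and its state through different callbacks, depending on whether stream configuration succeeded (onConfigured on success, onConfigureFailed otherwise).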

/frameworks/base/core/java/android/hardware/camera2/impl/CameraDeviceImpl.java

/frameworks/base/core/java/android/hardware/camera2/impl/CameraCaptureSessionImpl.java
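The three steps above can be condensed into a small model. This is a sketch under simplifying assumptions, and it is written in C++ to match the native code later in this article, even though the real createCaptureSessionInternal is Java. DeviceModel, SessionModel and the configureStreams callback are hypothetical names. The stream configuration step is reduced to a boolean, and shutting down the previous session is reduced to a counter.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <stdexcept>

// Hypothetical model of CameraDeviceImpl#createCaptureSessionInternal (real code is Java).
enum class SessionKind { Regular, ConstrainedHighSpeed };
enum class SessionCallback { OnConfigured, OnConfigureFailed };

struct SessionModel {
    SessionKind kind;
    SessionCallback reported;  // which StateCallback method the constructor would fire
};

struct DeviceModel {
    bool cameraClosed = false;
    int replacedSessions = 0;  // how many earlier sessions were shut down and replaced
    std::unique_ptr<SessionModel> currentSession;

    // configureStreams stands in for configureStreamsChecked and returns
    // whether stream configuration succeeded.
    SessionModel& createCaptureSession(bool isConstrainedHighSpeed,
                                       const std::function<bool()>& configureStreams) {
        // 1) Check the device state and retire an existing session.
        if (cameraClosed) {
            throw std::runtime_error("camera device is closed");
        }
        if (currentSession) {
            ++replacedSessions;
        }

        // 2) Create and configure the streams.
        const bool configureSuccess = configureStreams();

        // 3) Choose the session type; the "constructor" records which
        //    callback it would fire based on the configuration result.
        const SessionKind kind = isConstrainedHighSpeed
                ? SessionKind::ConstrainedHighSpeed
                : SessionKind::Regular;
        const SessionCallback cb = configureSuccess
                ? SessionCallback::OnConfigured
                : SessionCallback::OnConfigureFailed;
        currentSession = std::make_unique<SessionModel>(SessionModel{kind, cb});
        return *currentSession;
    }
};
```

The model shows the ordering. The session object is created even when configuration fails. The application learns the outcome only through the callback selected in the session constructor.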

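Next, we look at the configureStreamsChecked flow. This flow resets the pending capture requests. It then recreates and reconfigures the camera streams (input and output) from the surfaces the application passed in. It breaks down into four steps:

1) Compare the locally cached configuration with the requested one to find which streams must be removed.
2) Stop and flush the pending capture requests in the lower layers, in preparation for reconfiguring the streams.
3) Update the streams based on the result of step 1.
4) Call endConfigure to finish configuring the streams.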

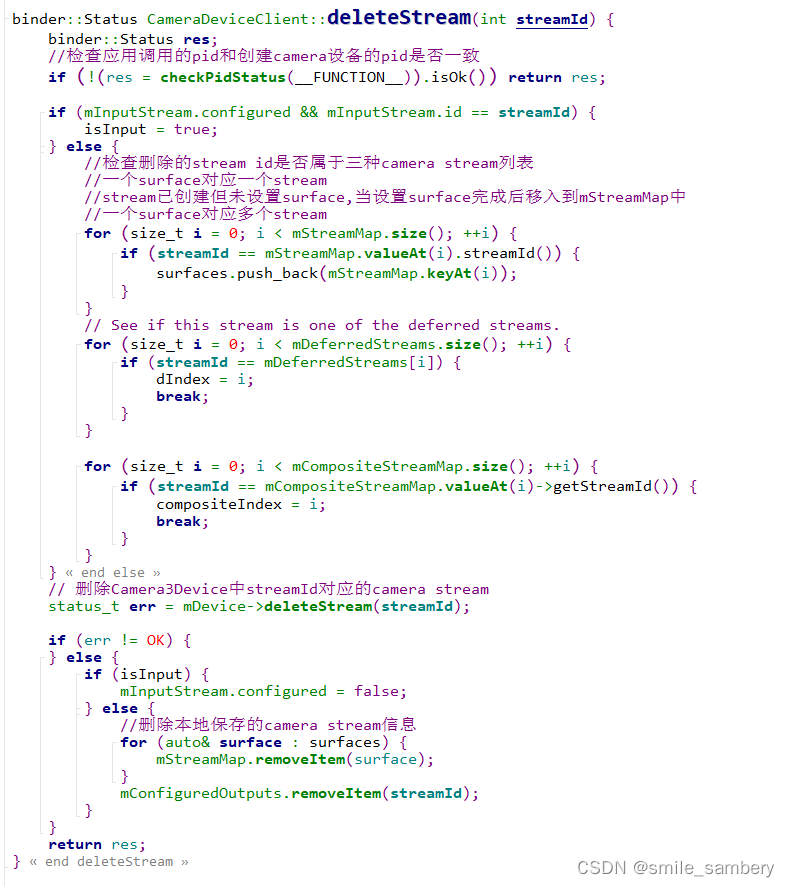

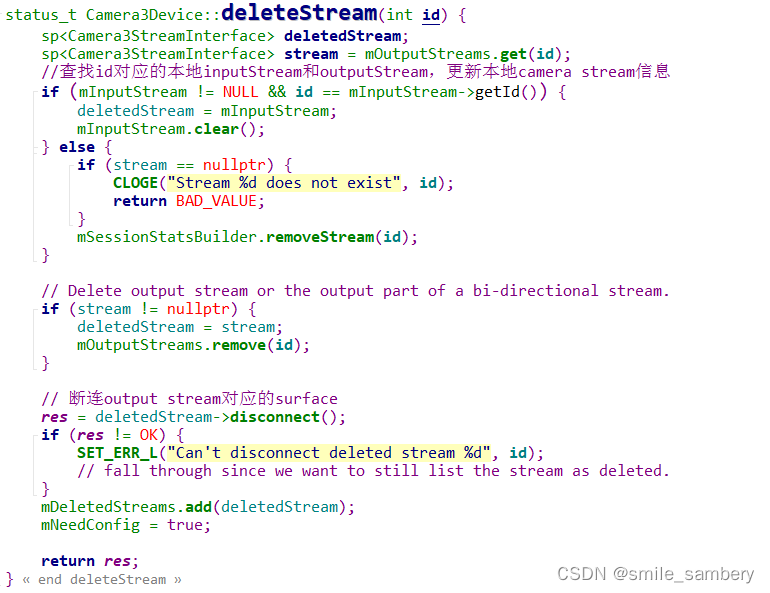
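Step 1 is essentially a set difference between the streams that are already configured and the outputs requested now. The sketch below models it with hypothetical types. OutputConfig is just a surface name here, and diffStreams and StreamDiff are illustrative names, not framework APIs. It also omits special cases that the real Java method handles, such as deferred output configurations.

```cpp
#include <cassert>
#include <map>
#include <set>
#include <string>
#include <vector>

// Hypothetical stand-in for OutputConfiguration: a surface name.
using OutputConfig = std::string;

struct StreamDiff {
    std::vector<int> toDelete;           // stream ids that are no longer requested
    std::vector<OutputConfig> toCreate;  // requested outputs that have no stream yet
};

// configured: stream id -> output currently configured on the device.
// requested:  outputs passed to the new createCaptureSession call.
StreamDiff diffStreams(const std::map<int, OutputConfig>& configured,
                       const std::vector<OutputConfig>& requested) {
    const std::set<OutputConfig> wanted(requested.begin(), requested.end());
    std::set<OutputConfig> addSet = wanted;  // outputs that still need a stream
    StreamDiff diff;

    for (const auto& [streamId, config] : configured) {
        if (wanted.count(config) == 0) {
            diff.toDelete.push_back(streamId);  // no longer requested
        } else {
            addSet.erase(config);  // already has a stream; keep it
        }
    }
    // Preserve the application's order; erase() also prevents duplicates.
    for (const auto& out : requested) {
        if (addSet.erase(out) > 0) {
            diff.toCreate.push_back(out);
        }
    }
    return diff;
}
```

In step 3, the diff would be applied by calling deleteStream for each id in toDelete before calling createStream for each entry in toCreate. Deleting first releases the resources held by old streams before new ones are requested.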

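Next, we look at the deleteStream part of the stream update. mRemoteDevice makes a binder call into CameraDeviceClient#deleteStream, which does two things:

1) It updates the locally cached stream information and removes the stream that corresponds to streamId.
2) It calls Camera3Device#deleteStream. This method looks up the stream with that id (deletedStream) in its local stream list, removes its local record, and calls deletedStream#disconnect. The call eventually reaches Camera3OutputStream#disconnectLocked, which disconnects the stream from its surface.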

/frameworks/av/service/camera/libcameraservice/api2/CameraDeviceClient.cpp

/frameworks/av/service/camera/libcameraservice/device3/Camera3Device.cpp

/frameworks/av/service/camera/libcameraservice/device3/Camera3OutputStream.cpp

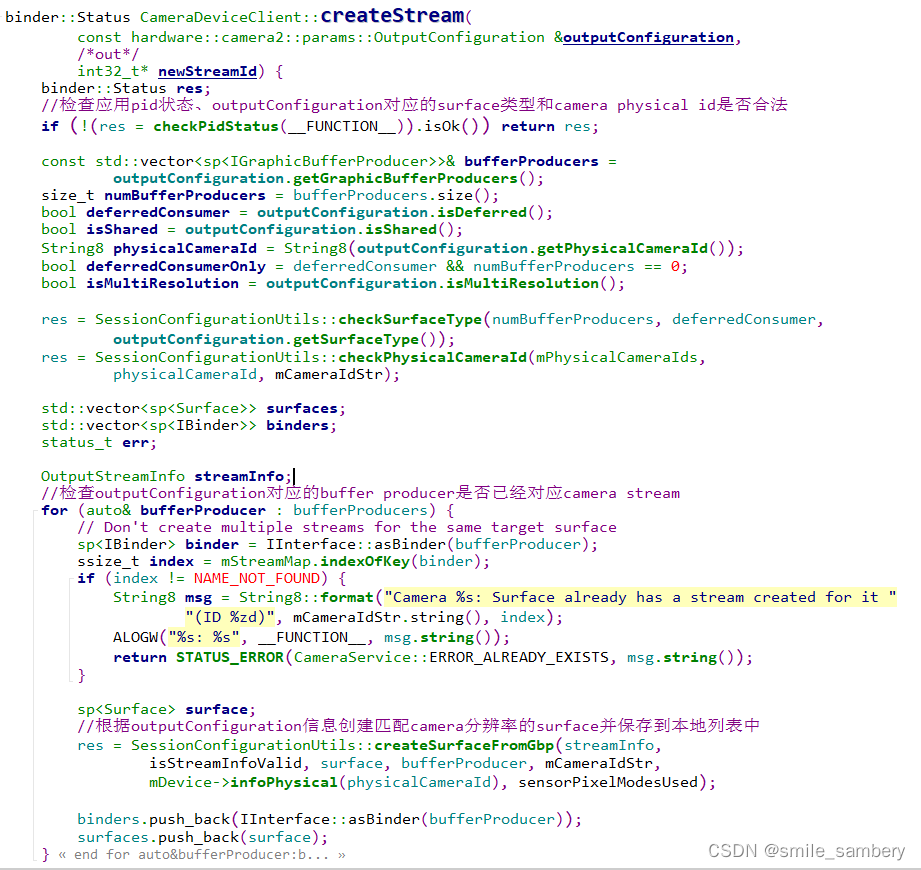

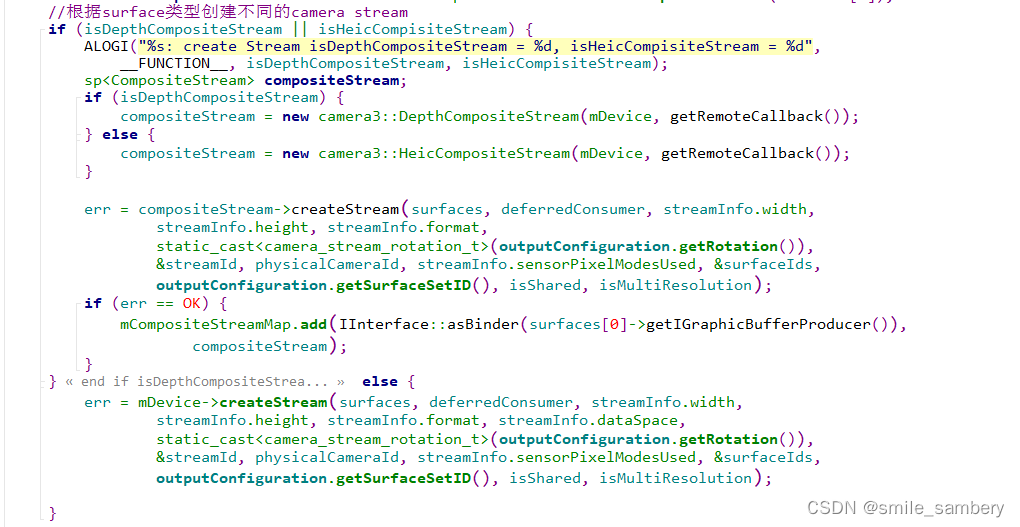

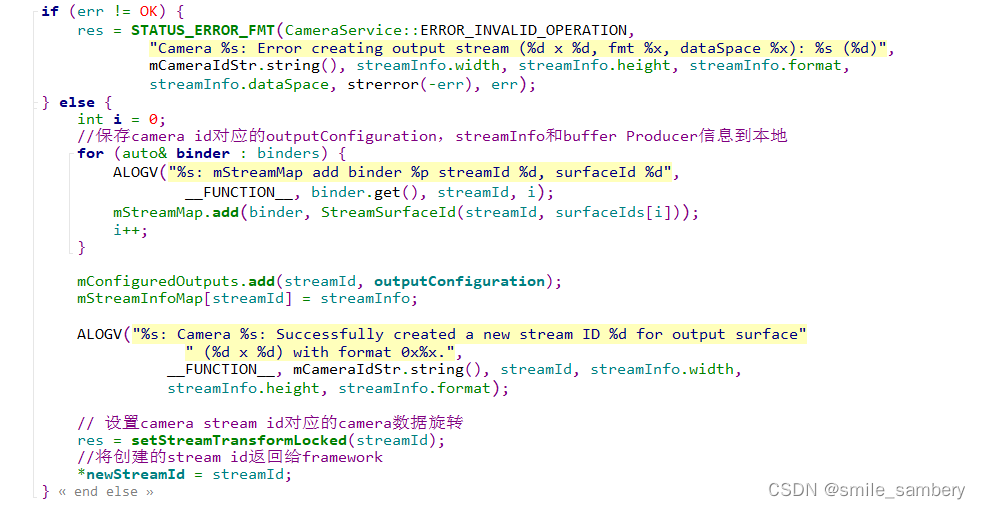

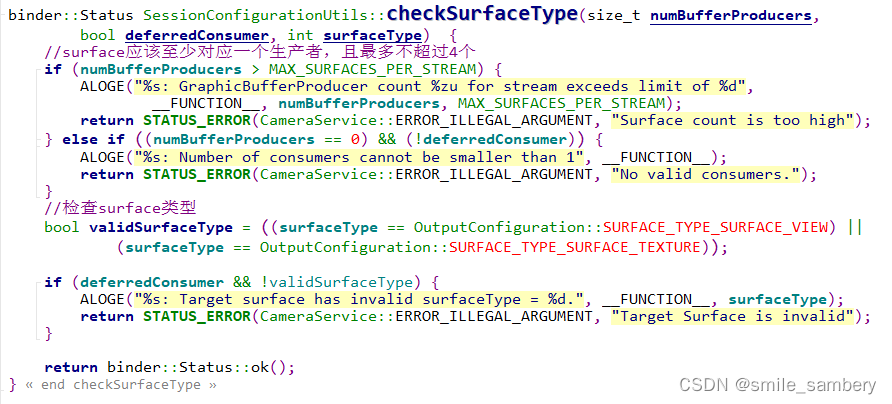

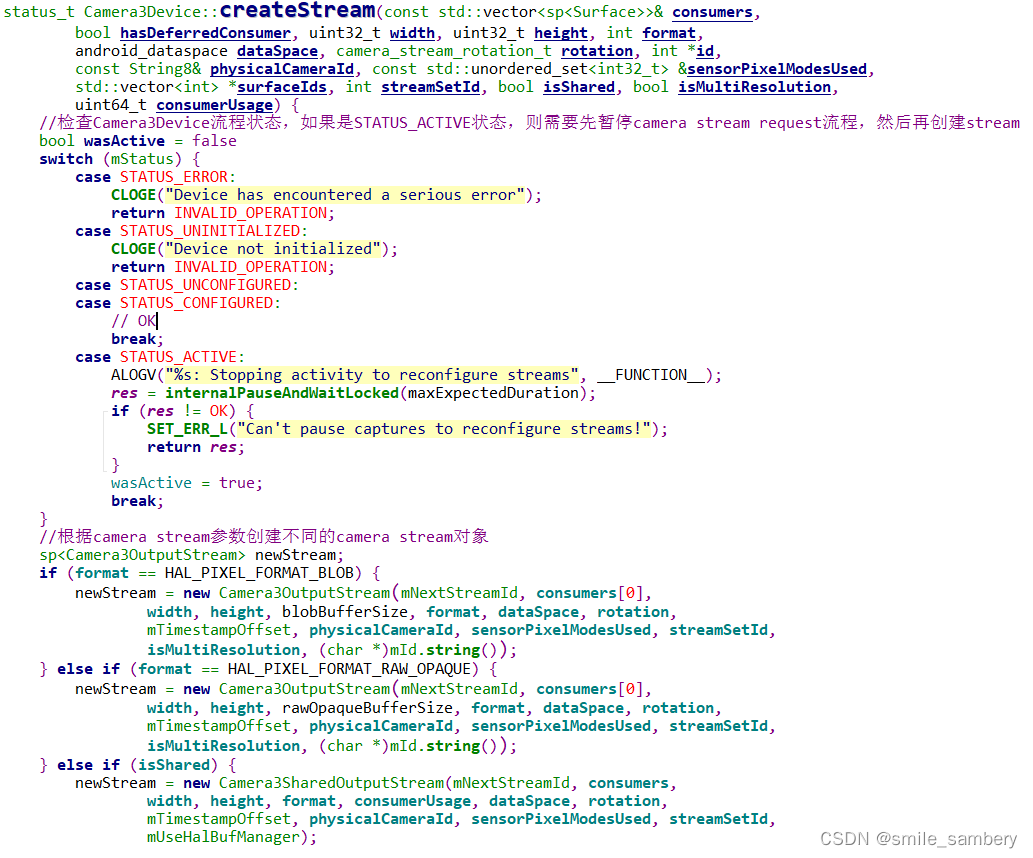
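The two layers touched by deleteStream can be sketched as follows. All type and member names here are hypothetical stand-ins for CameraDeviceClient and Camera3Device. Three details are assumptions of this model:

- The client caches are cleared only after the device-level delete succeeds.
- Deleted streams are kept in a list until the next configuration.
- A flag marks that reconfiguration is needed.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical model of an output stream and its consumer surface.
struct OutputStreamModel {
    int id;
    std::string surface;
    bool connected = true;

    // Stands in for disconnect() -> Camera3OutputStream#disconnectLocked:
    // release the surface so another stream can use it.
    void disconnect() { connected = false; }
};

// Stands in for Camera3Device.
struct Camera3DeviceModel {
    std::map<int, OutputStreamModel> outputStreams;
    std::vector<OutputStreamModel> deletedStreams;  // kept until the next configure (modeled)
    bool needConfig = false;

    bool deleteStream(int id) {
        auto it = outputStreams.find(id);
        if (it == outputStreams.end()) {
            return false;  // unknown stream id
        }
        OutputStreamModel deletedStream = it->second;
        outputStreams.erase(it);     // drop the local record
        deletedStream.disconnect();  // free the surface
        deletedStreams.push_back(deletedStream);
        needConfig = true;           // the stream set changed; reconfigure later
        return true;
    }
};

// Stands in for CameraDeviceClient and its client-side caches.
struct ClientModel {
    Camera3DeviceModel device;
    std::map<std::string, int> streamMap;          // surface -> stream id
    std::map<int, std::string> configuredOutputs;  // stream id -> surface

    bool deleteStream(int streamId) {
        auto it = configuredOutputs.find(streamId);
        if (it == configuredOutputs.end()) {
            return false;
        }
        if (!device.deleteStream(streamId)) {
            return false;
        }
        // Update the client-side caches only after the device-level delete succeeded.
        streamMap.erase(it->second);
        configuredOutputs.erase(it);
        return true;
    }
};
```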

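Next, we look at the createStream part of the stream update. mRemoteDevice makes a binder call into CameraDeviceClient#createStream, which does four things:

1) It checks the calling application's pid status, the constraints on how surfaces map to streams, and the validity of the physical camera id.
2) For each buffer producer in the outputConfiguration argument, it checks whether a stream has already been created for that producer. It then calls createSurfaceFromGbp to create the camera surface that best matches the outputConfiguration parameters and keeps that surface locally.
3) It checks the properties of the stream to be created and calls Camera3Device#createStream to create the stream. It then records the outputConfiguration and the created surfaces under the new stream id.
4) It calls setStreamTransformLocked to apply the stream's orientation transform, and returns the stream id to the framework.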

/frameworks/av/service/camera/libcameraservice/api2/CameraDeviceClient.cpp

/frameworks/av/service/camera/libcameraservice/utils/SessionConfigurationUtils.cpp

/frameworks/av/service/camera/libcameraservice/utils/SessionConfigurationUtils.cpp
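One way createSurfaceFromGbp can produce a "best match" is by rounding. When the consumer can accept a flexible size, the requested dimensions may be rounded to a nearby size that the camera supports. The sketch below picks the supported size with the smallest squared Euclidean distance and prefers an exact match. Size and roundToNearestSupported are hypothetical names. The AOSP helper for this (roundBufferDimensionNearest in SessionConfigurationUtils) also filters by pixel format and applies further limits, which are not modeled here.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>
#include <vector>

struct Size {
    int32_t width;
    int32_t height;
};

// Squared Euclidean distance; comparing squares avoids a sqrt.
int64_t distSquare(const Size& a, const Size& b) {
    const int64_t dw = int64_t{a.width} - b.width;
    const int64_t dh = int64_t{a.height} - b.height;
    return dw * dw + dh * dh;
}

// Returns the supported size closest to the request (an exact match wins),
// or std::nullopt when no sizes are supported.
std::optional<Size> roundToNearestSupported(const Size& requested,
                                            const std::vector<Size>& supported) {
    std::optional<Size> best;
    for (const auto& s : supported) {
        if (s.width == requested.width && s.height == requested.height) {
            return s;
        }
        if (!best || distSquare(s, requested) < distSquare(*best, requested)) {
            best = s;
        }
    }
    return best;
}
```

For example, with supported sizes 1920x1080, 1280x720 and 640x480, a request for 1300x700 rounds to 1280x720.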

/frameworks/av/service/camera/libcameraservice/device3/Camera3Device.cpp

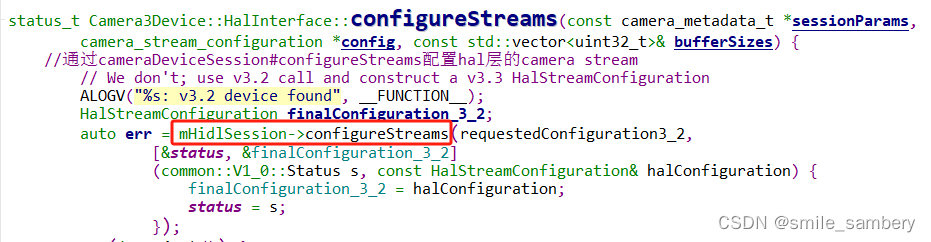

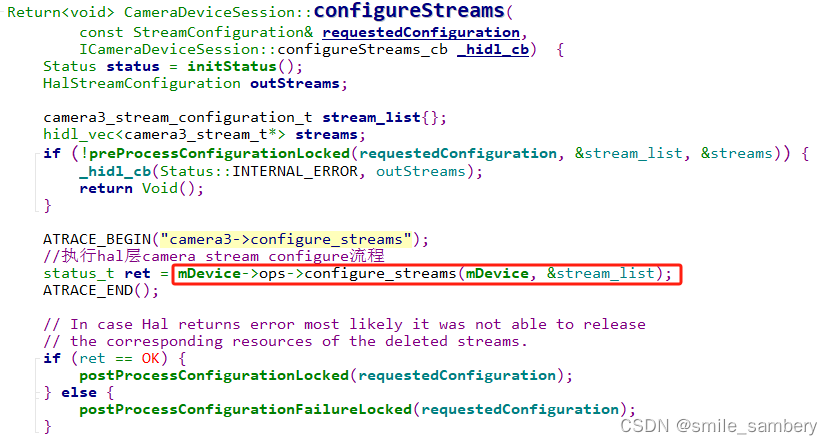

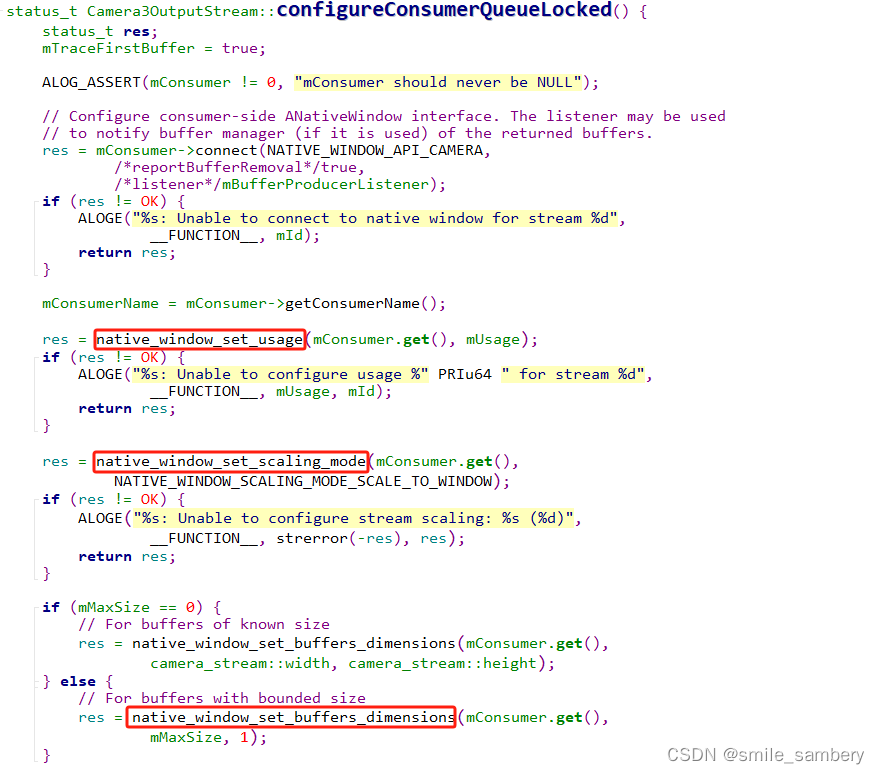
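The bookkeeping in steps 1 to 3 can be modeled with two maps, in the same spirit as the deleteStream sketch. This is a hypothetical sketch. CreateStreamModel and OutputConfigModel are illustrative names. The surfaces-per-stream limit of 4 is an assumed value. Stream ids are simply allocated in increasing order. The pid check and the orientation transform of step 4 are not modeled.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <optional>
#include <set>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for OutputConfiguration.
struct OutputConfigModel {
    std::vector<std::string> surfaces;  // stand-ins for buffer producers
    std::string physicalCameraId;       // empty means the logical camera
};

class CreateStreamModel {
public:
    explicit CreateStreamModel(std::set<std::string> physicalIds)
        : physicalIds_(std::move(physicalIds)) {}

    // Returns the new stream id, or std::nullopt if a check fails.
    std::optional<int> createStream(const OutputConfigModel& config) {
        // 1) Surface/stream constraints and physical camera id.
        if (config.surfaces.empty() || config.surfaces.size() > kMaxSurfacesPerStream) {
            return std::nullopt;
        }
        if (!config.physicalCameraId.empty() &&
            physicalIds_.count(config.physicalCameraId) == 0) {
            return std::nullopt;
        }
        // 2) A surface may back only one stream.
        for (const auto& surface : config.surfaces) {
            if (streamMap.count(surface) > 0) {
                return std::nullopt;
            }
        }
        // 3) "Create" the stream and record it under its id.
        const int streamId = nextStreamId_++;
        for (const auto& surface : config.surfaces) {
            streamMap[surface] = streamId;
        }
        configuredOutputs[streamId] = config;
        return streamId;
    }

    std::map<std::string, int> streamMap;                // surface -> stream id
    std::map<int, OutputConfigModel> configuredOutputs;  // stream id -> config

private:
    static constexpr std::size_t kMaxSurfacesPerStream = 4;  // hypothetical limit
    std::set<std::string> physicalIds_;
    int nextStreamId_ = 0;
};
```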

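Once the streams have been created, mRemoteDevice#endConfigure makes a binder call into CameraDeviceClient#endConfigure. This ends up in Camera3Device#configureStreams, which configures the streams.

configureStreams calls filterParamsAndConfigureLocked. That method filters the incoming session parameters, keeping only the keys the device advertises as session keys (ANDROID_REQUEST_AVAILABLE_SESSION_KEYS). It then calls configureStreamsLocked to do the actual configuration, which proceeds as follows:

1) It pauses Camera3Device's PreparerThread.
2) It calls startConfiguration on the input and output streams to obtain the streams that have already been created, and fills in the camera_stream_configuration parameters.
3) It calls mInterface#configureStreams, which configures the streams in the HAL through the previously created cameraDeviceSession.
4) It calls finishConfiguration on the input and output streams. This eventually reaches Camera3OutputStream#configureConsumerQueueLocked, which initializes the surface-related parameters of each stream.
5) It resumes the PreparerThread, which loops waiting for streams in mPendingStreams and allocates their buffers.

This completes the createCaptureSession flow. The framework has created the CameraCaptureSession, and the native and HAL layers have created and configured the streams. The PreparerThread is running and waiting for further initialization work.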

/frameworks/av/service/camera/libcameraservice/device3/Camera3Device.cpp

/frameworks/av/service/camera/libcameraservice/device3/Camera3Device.cpp

/hardware/interfaces/camera/device/3.2/default/CameraDeviceSession.cpp

/frameworks/av/service/camera/libcameraservice/device3/Camera3OutputStream.cpp

/frameworks/av/service/camera/libcameraservice/device3/Camera3Device.cpp
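The order of operations in configureStreamsLocked can be captured in a trace-recording sketch:

- The preparer is paused.
- Each stream is started.
- The HAL is configured.
- Each stream is finished.
- The preparer is resumed.

StreamModel, PreparerPauseGuard and configureStreamsLockedModel are hypothetical names, and halConfigure stands in for mInterface->configureStreams. Error handling in the real method is more involved than the single "cancel" step modeled here.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <vector>

// Records the order of operations so it can be inspected.
using Trace = std::vector<std::string>;

// Hypothetical stand-in for an input/output stream.
struct StreamModel {
    int id;
    bool configured = false;
};

// Pauses the (modeled) PreparerThread on entry and resumes it on every exit path.
class PreparerPauseGuard {
public:
    explicit PreparerPauseGuard(Trace& trace) : trace_(trace) {
        trace_.push_back("preparer.pause");
    }
    ~PreparerPauseGuard() { trace_.push_back("preparer.resume"); }
    PreparerPauseGuard(const PreparerPauseGuard&) = delete;
    PreparerPauseGuard& operator=(const PreparerPauseGuard&) = delete;

private:
    Trace& trace_;
};

// halConfigure receives the number of streams in the configuration it would be handed.
bool configureStreamsLockedModel(std::vector<StreamModel>& streams,
                                 const std::function<bool(std::size_t)>& halConfigure,
                                 Trace& trace) {
    PreparerPauseGuard guard(trace);
    for (const auto& s : streams) {
        trace.push_back("startConfiguration " + std::to_string(s.id));
    }
    trace.push_back("hal.configureStreams");
    if (!halConfigure(streams.size())) {
        trace.push_back("cancelConfiguration");
        return false;
    }
    for (auto& s : streams) {
        // In the real code, finishConfiguration leads to
        // Camera3OutputStream#configureConsumerQueueLocked for output streams.
        s.configured = true;
        trace.push_back("finishConfiguration " + std::to_string(s.id));
    }
    return true;
}
```

Using a scope guard for the pause/resume pair is a design choice of this sketch. It keeps the modeled preparer from staying paused when the HAL call fails. The sketch makes no claim about how each error path in Camera3Device handles the PreparerThread.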
