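Hardware

Required dependencies

KubeKey can install Kubernetes and KubeSphere together. The dependencies that must be present may differ between Kubernetes versions. Use the list below to check whether any of them need to be installed on the nodes in advance.

If socat and conntrack are not installed, KubeKey reports the following errors at run time: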

10:59:52 CST [ERRO] iZ8vb0i5txp0l737b52v40Z: conntrack is required.

10:59:52 CST [ERRO] iZ8vb0i5txp0l737b52v40Z: socat is required.

Install conntrack first:

yum install conntrack-tools-1.4.4-7.el7.x86_64

Verify the installation:

conntrack --version

conntrack v1.4.4 (conntrack-tools)

Then install socat:

yum install socat

socat -V

Check whether ebtables is installed:

ebtables -V

Check whether ipset is installed:

ipset -V

ipset v6.38, protocol version: 6
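The four checks above can be combined into a single pass. The following is a minimal sketch, not part of KubeKey: the function name `missing_tools` is made up for illustration, and it relies only on the shell builtin `command -v`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every command name from the arguments
# that cannot be found on PATH.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}
```

Running `missing_tools socat conntrack ebtables ipset` on a node prints nothing when all four tools are present; any name it prints still has to be installed.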

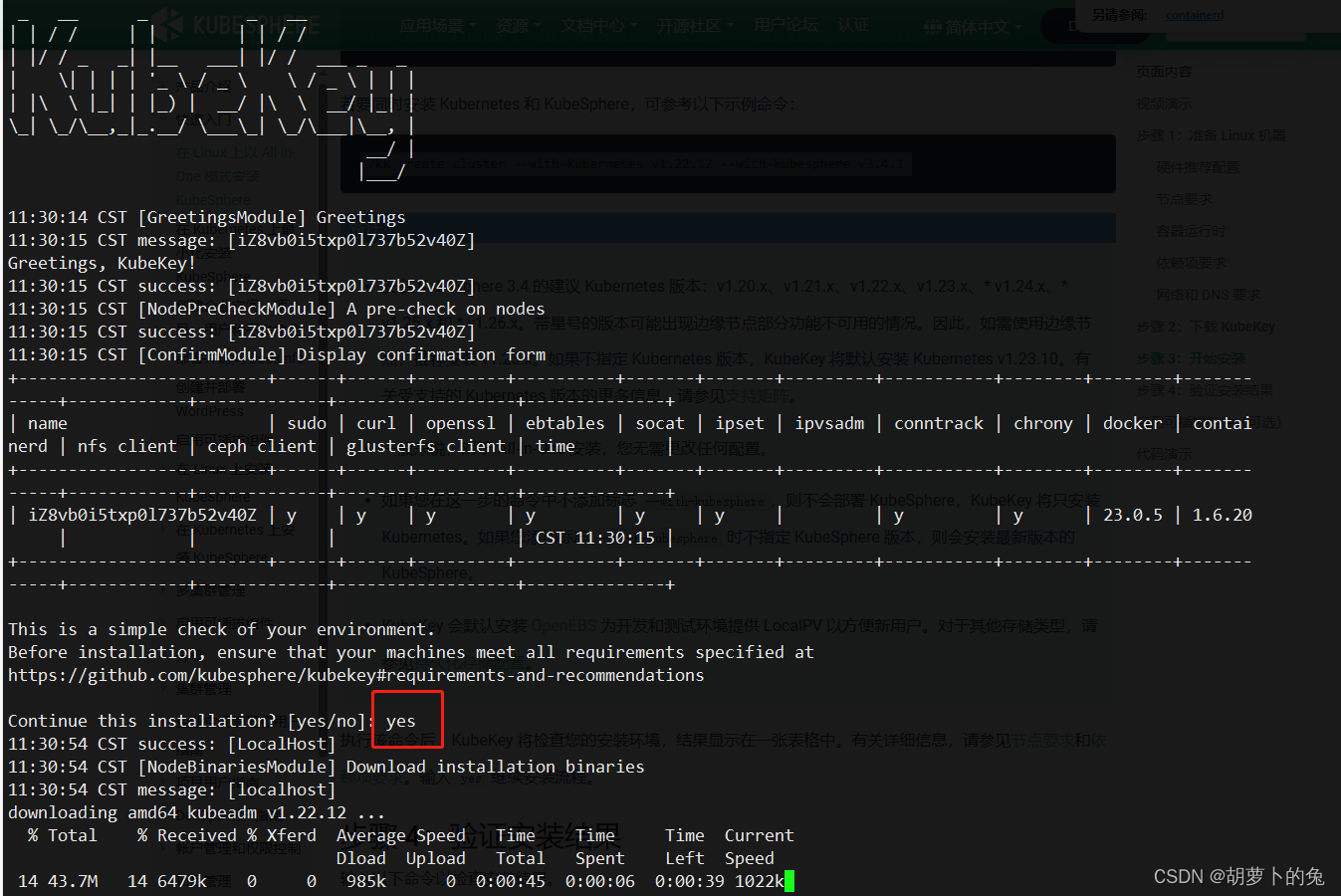

Install Kubernetes and KubeSphere with KubeKey

./kk create cluster --with-kubernetes v1.22.12 --with-kubesphere v3.4.1

Enter yes to confirm.

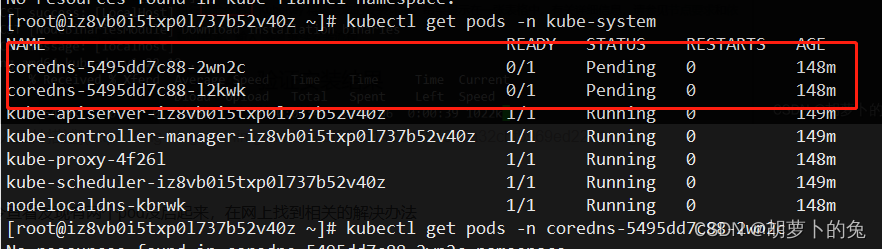

Listing the pods with the following command shows that two pods failed to start:

kubectl get pods -n kube-system

The coredns pods may fail to start because flannel is not installed:

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/a70459be0084506e4ec919aa1c114638878db11b/Documentation/kube-flannel.yml

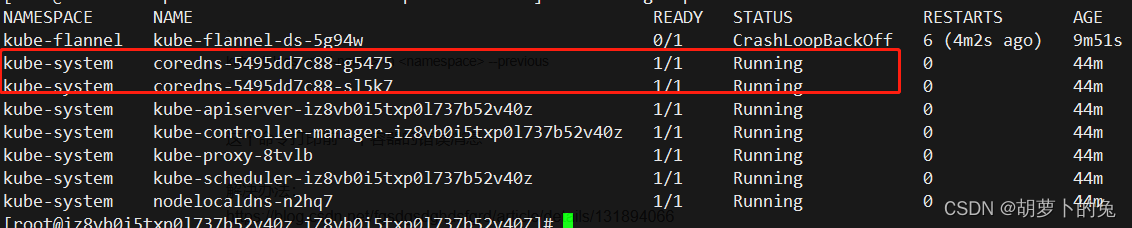

flannel is installed successfully.

Check the error logs

# View the kubelet.service logs

journalctl -f -u kubelet.service

networkPlugin cni failed to set up pod \"coredns-5495dd7c88-sl5k7_kube-system\" network: loadFlannelSubnetEnv failed: open /run/flannel/subnet.env: no such file or directory"

The configuration file /run/flannel/subnet.env does not exist.

Create /run/flannel/subnet.env on the affected node:

vim /run/flannel/subnet.env

Write the following content to the file:

```shell
FLANNEL_NETWORK=10.244.0.0/16
FLANNEL_SUBNET=10.244.0.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true
```
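Creating the file can also be scripted. This is a sketch under assumptions: the helper name `write_subnet_env` and its optional directory argument are invented here, and the values are the ones listed above. On a healthy node flanneld writes this file itself, so a hand-written copy is only a stopgap.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write subnet.env into the given directory
# (defaults to /run/flannel), creating the directory if needed.
write_subnet_env() {
  local dir=${1:-/run/flannel}
  mkdir -p "$dir"
  cat > "$dir/subnet.env" <<'EOF'
FLANNEL_NETWORK=10.244.0.0/16
FLANNEL_SUBNET=10.244.0.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true
EOF
}
```

Calling `write_subnet_env` without arguments as root writes /run/flannel/subnet.env; passing a scratch directory is a safe way to try it out first.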

Restart the coredns pods:

```shell
kubectl delete pod coredns-xxx-xxxx -n kube-system
```

In this cluster, that means deleting these two pods:

kubectl delete pod coredns-5495dd7c88-2wn2c -n kube-system

kubectl delete pod coredns-5495dd7c88-l2kwk -n kube-system

Check the result:

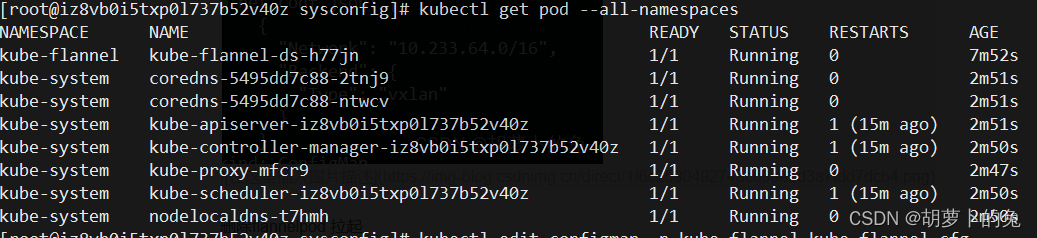

# List the pods

kubectl get pod --all-namespaces

kubectl get pod -A
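To pick out only the pods that still need attention, the listing can be filtered. A small sketch, assuming the default column layout of `kubectl get pod -A` (NAMESPACE, NAME, READY, STATUS, ...); the function name `unhealthy_pods` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pod -A` output on stdin and print
# "namespace/name status" for every pod that is neither Running nor Completed.
unhealthy_pods() {
  awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}
```

On a cluster it would be used as `kubectl get pod -A | unhealthy_pods`.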

The pod details show that coredns has started,

but flannel has not.

Check its logs:

kubectl logs -n kube-flannel kube-flannel-ds-m555p

main.go:332] Error registering network: failed to acquire lease: subnet "10.244.0.0/16" specified in the flannel net config doesn't contain "10.233.64.0/24" PodCIDR of the "iz8vb0i5txp0l737b52v40z" node

W1227 07:45:51.955429 1 reflector.go:347] github.com/flannel-io/flannel/pkg/subnet/kube/kube.go:459: watch of *v1.Node ended with: an error on the server ("unable to decode an event from the watch stream: context canceled") has prevented the request from succeeding

I1227 07:45:51.955456 1 main.go:520] Stopping shutdownHandler

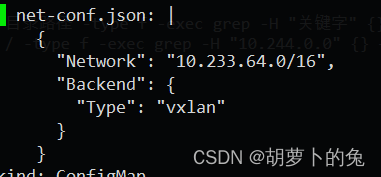

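The error above means that flannel is configured with the network 10.244.0.0/16, while the PodCIDR that the cluster assigned to this node is 10.233.64.0/24, which lies outside that network.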
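The containment check behind this error can be reproduced with plain shell arithmetic. This is an illustrative sketch, not flannel's own code; the function names `ip_to_int` and `cidr_contains` are made up here.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  # Word-split the address on dots into four positional parameters.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# cidr_contains NETWORK/LEN SUBNET/LEN
# Succeeds when the subnet lies entirely inside the network.
cidr_contains() {
  local net=${1%/*} net_len=${1#*/} sub=${2%/*} sub_len=${2#*/} mask
  [ "$sub_len" -ge "$net_len" ] || return 1
  mask=$(( (0xFFFFFFFF << (32 - net_len)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$net") & mask )) -eq $(( $(ip_to_int "$sub") & mask )) ]
}
```

With the values from the log, `cidr_contains 10.244.0.0/16 10.233.64.0/24` fails, which is the condition flannel reports.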

So the flannel configuration has to be changed to match the cluster.

View and edit the flannel ConfigMap:

kubectl edit configmap -n kube-flannel kube-flannel-cfg

Then delete the flannel pod so that it is recreated with the new configuration.
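The edit amounts to changing the "Network" value in the ConfigMap's net-conf.json. A hedged sketch of doing that non-interactively follows; the helper name `set_flannel_network` is hypothetical, and 10.233.64.0/18 is only a placeholder for the cluster's real Pod CIDR (check it with `kubectl cluster-info dump | grep -m 1 cluster-cidr`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite the "Network" value of a flannel net-conf.json
# document read from stdin and print the result to stdout.
set_flannel_network() {
  sed -E 's#("Network"[[:space:]]*:[[:space:]]*)"[^"]*"#\1"'"$1"'"#'
}

# Assumed usage on a live cluster (not run here):
#   kubectl -n kube-flannel get configmap kube-flannel-cfg -o yaml \
#     | set_flannel_network 10.233.64.0/18 \
#     | kubectl apply -f -
#   kubectl -n kube-flannel delete pod kube-flannel-ds-m555p
```

Deleting the pod lets the DaemonSet recreate it, and the new pod reads the updated ConfigMap.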

Deploy the dashboard

Deploy:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

Generate a token

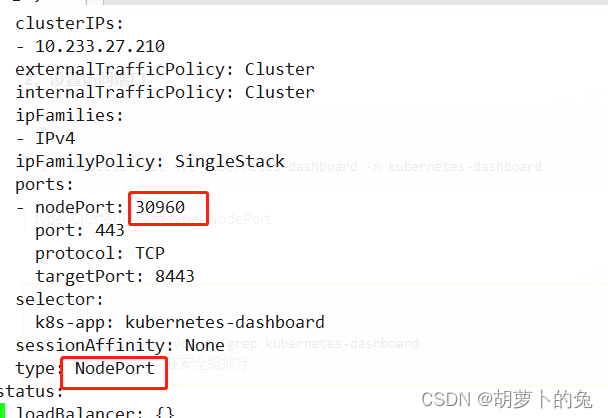

Edit the dashboard Service definition:

kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

Notes:

1. Change type: ClusterIP to type: NodePort.

2. Allow port 30960 through the firewall.
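The same change can be made without opening an editor. The sketch below is an assumption-laden example: `node_port` is a made-up helper that extracts the node port from the PORT(S) column of `kubectl get svc`, and the commented commands show how the Service might be patched and inspected.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the node port from a PORT(S) value
# such as 443:30960/TCP.
node_port() {
  printf '%s\n' "$1" | sed -E 's#^[0-9]+:([0-9]+)/.*$#\1#'
}

# Assumed usage on a live cluster (not run here):
#   kubectl -n kubernetes-dashboard patch svc kubernetes-dashboard \
#     -p '{"spec":{"type":"NodePort"}}'
#   kubectl -n kubernetes-dashboard get svc kubernetes-dashboard
```

The port printed by `node_port` is the one that has to be opened in the firewall; in this walkthrough it was 30960.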

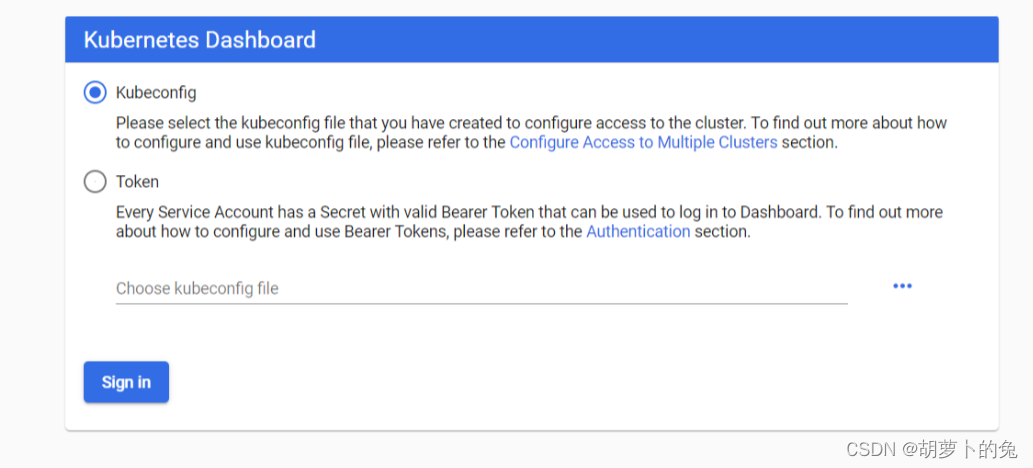

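The browser blocks the page.

Click a blank area of the page and type thisisunsafe on the keyboard; the page then opens normally.

The login page appears.

Generate a token on the command line: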

kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')

Paste the generated token into the input box to log in.
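The describe output contains more than the token itself. A minimal sketch for isolating it, assuming the usual `token:` line in `kubectl describe secret` output; the function name `extract_token` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl describe secret` output on stdin
# and print only the value of the token field.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}
```

Piping the describe command shown above into `extract_token` leaves just the string to paste into the login form.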

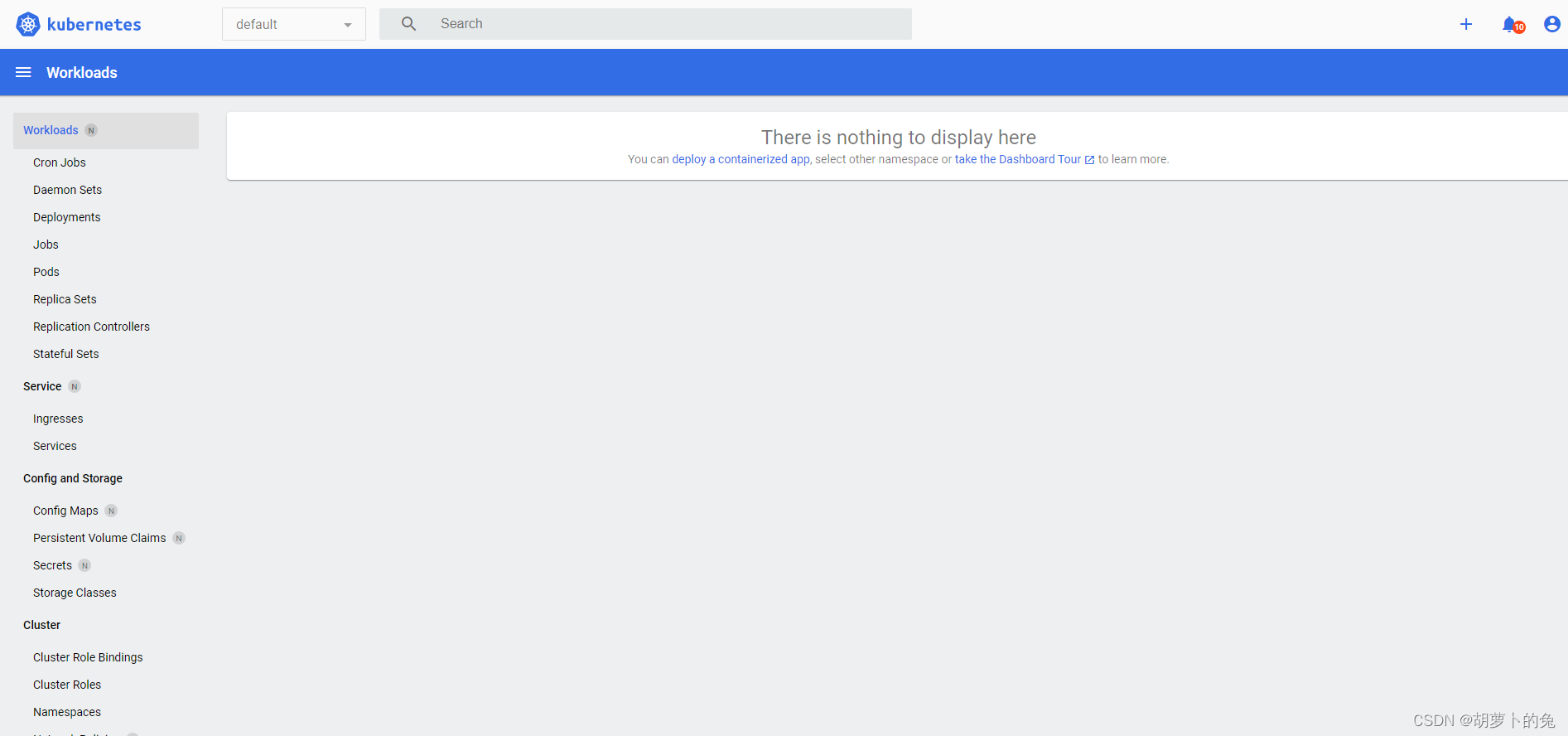

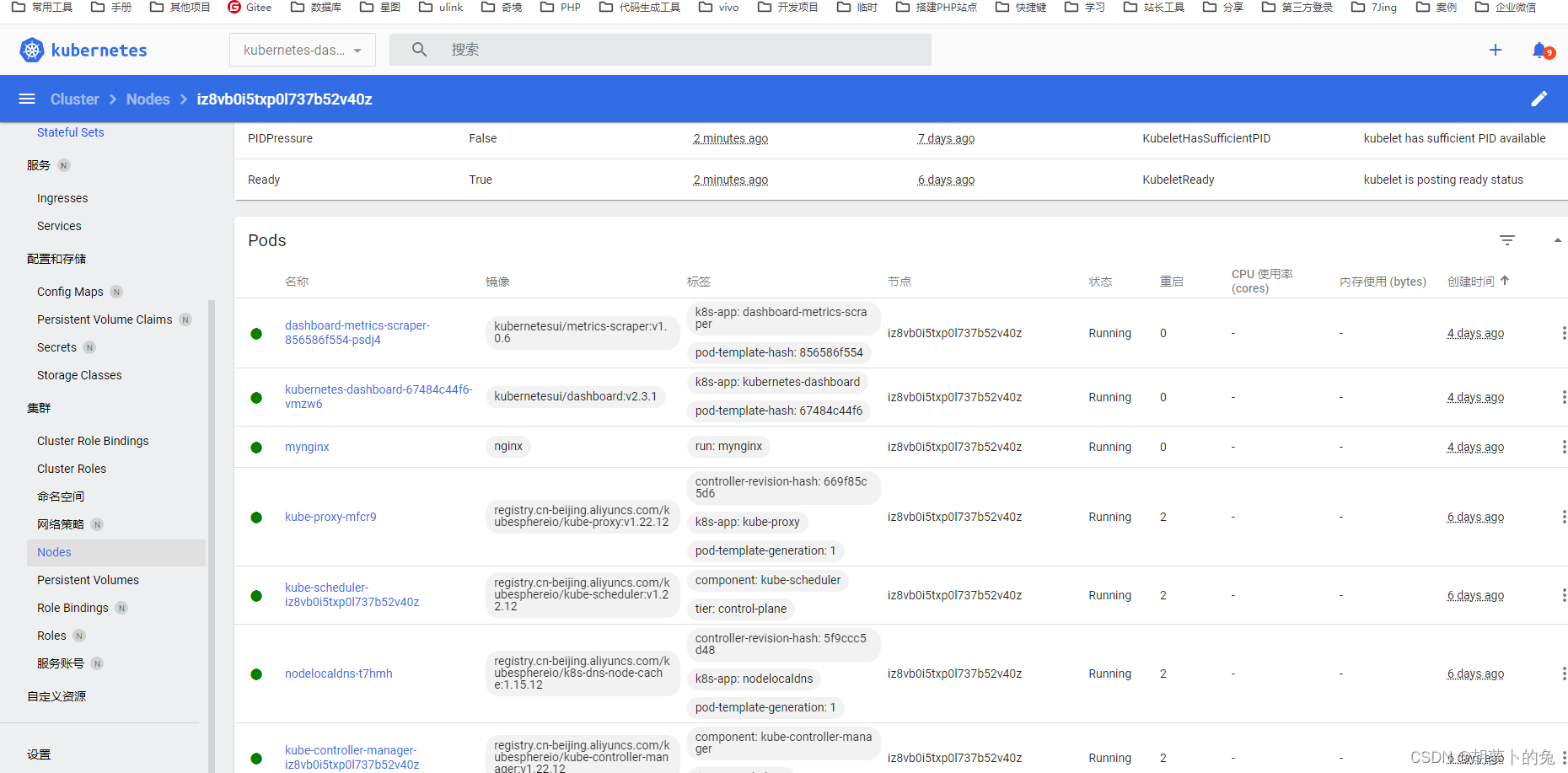

Console page

https://segmentfault.com/a/1190000041999289

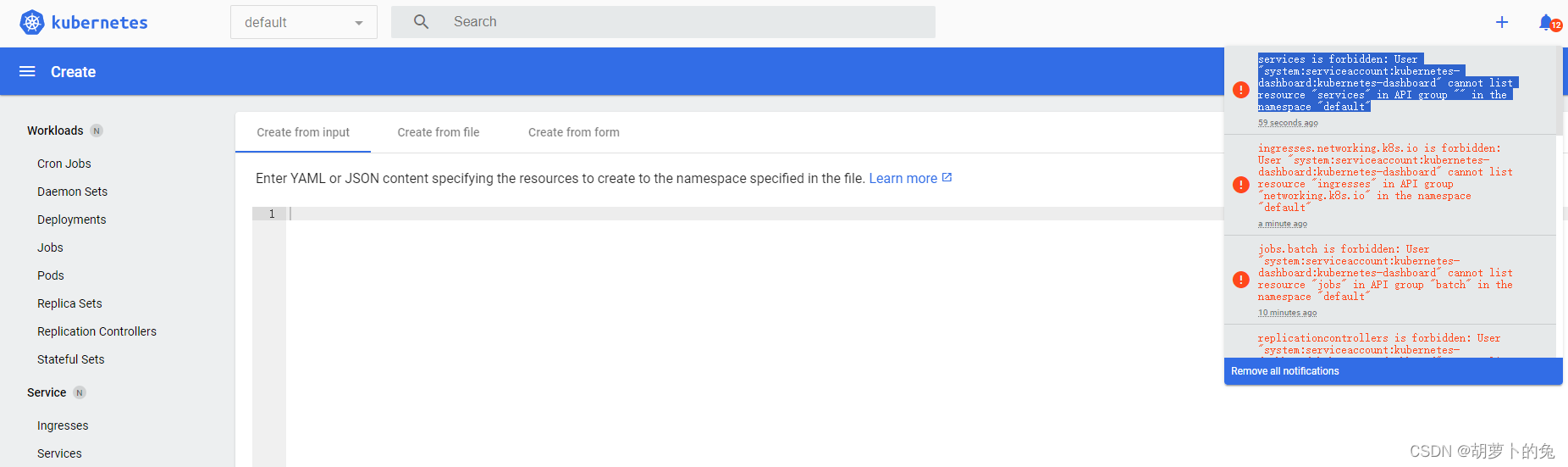

问题

首次登录,右上角报错

这是 rbac 权限问题。

此时 dashboard 还不能正常使用,dashboard 是空的,比如命名空间。此时还不能获取到集群的信息:

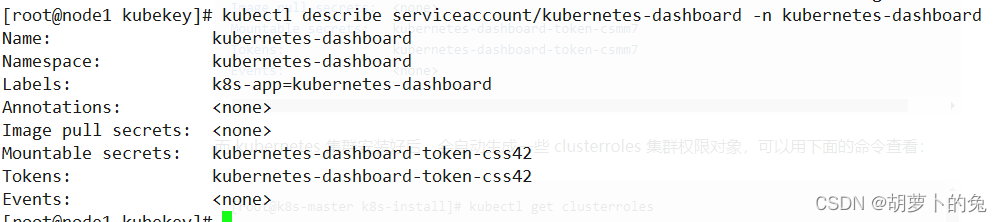

解决办法:

先创建一个账号,再创建一个有全部权限的 clusterroles,将二者用 clusterrolebinding 绑定起来。

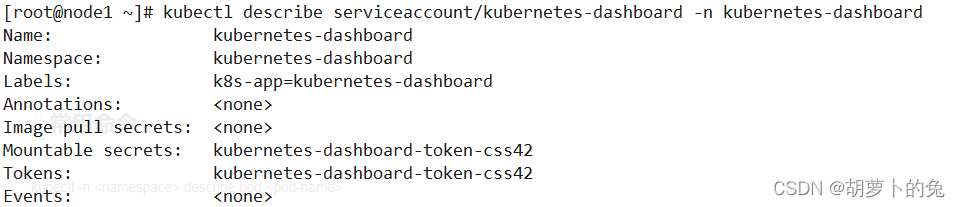

Applying the YAML file already created a service account named kubernetes-dashboard. View its details:

kubectl describe serviceaccount/kubernetes-dashboard -n kubernetes-dashboard

Once a Kubernetes cluster is installed, it automatically generates a set of ClusterRole objects. List them with:

kubectl get clusterroles

Output:

NAME CREATED AT

admin 2023-12-26T03:42:54Z

cluster-admin 2023-12-26T03:42:54Z

edit 2023-12-26T03:42:54Z

flannel 2023-12-27T02:57:13Z

kubeadm:get-nodes 2023-12-26T03:42:57Z

kubernetes-dashboard 2023-12-29T08:37:38Z

system:aggregate-to-admin 2023-12-26T03:42:54Z

system:aggregate-to-edit 2023-12-26T03:42:54Z

system:aggregate-to-view 2023-12-26T03:42:54Z

system:auth-delegator 2023-12-26T03:42:54Z

system:basic-user 2023-12-26T03:42:54Z

system:certificates.k8s.io:certificatesigningrequests:nodeclient 2023-12-26T03:42:54Z

system:certificates.k8s.io:certificatesigningrequests:selfnodeclient 2023-12-26T03:42:54Z

system:certificates.k8s.io:kube-apiserver-client-approver 2023-12-26T03:42:55Z

system:certificates.k8s.io:kube-apiserver-client-kubelet-approver 2023-12-26T03:42:55Z

system:certificates.k8s.io:kubelet-serving-approver 2023-12-26T03:42:54Z

system:certificates.k8s.io:legacy-unknown-approver 2023-12-26T03:42:54Z

system:controller:attachdetach-controller 2023-12-26T03:42:55Z

system:controller:certificate-controller 2023-12-26T03:42:55Z

system:controller:clusterrole-aggregation-controller 2023-12-26T03:42:55Z

system:controller:cronjob-controller 2023-12-26T03:42:55Z

system:controller:daemon-set-controller 2023-12-26T03:42:55Z

system:controller:deployment-controller 2023-12-26T03:42:55Z

system:controller:disruption-controller 2023-12-26T03:42:55Z

system:controller:endpoint-controller 2023-12-26T03:42:55Z

system:controller:endpointslice-controller 2023-12-26T03:42:55Z

system:controller:endpointslicemirroring-controller 2023-12-26T03:42:55Z

system:controller:ephemeral-volume-controller 2023-12-26T03:42:55Z

system:controller:expand-controller 2023-12-26T03:42:55Z

system:controller:generic-garbage-collector 2023-12-26T03:42:55Z

system:controller:horizontal-pod-autoscaler 2023-12-26T03:42:55Z

system:controller:job-controller 2023-12-26T03:42:55Z

system:controller:namespace-controller 2023-12-26T03:42:55Z

system:controller:node-controller 2023-12-26T03:42:55Z

system:controller:persistent-volume-binder 2023-12-26T03:42:55Z

system:controller:pod-garbage-collector 2023-12-26T03:42:55Z

system:controller:pv-protection-controller 2023-12-26T03:42:55Z

system:controller:pvc-protection-controller 2023-12-26T03:42:55Z

system:controller:replicaset-controller 2023-12-26T03:42:55Z

system:controller:replication-controller 2023-12-26T03:42:55Z

system:controller:resourcequota-controller 2023-12-26T03:42:55Z

system:controller:root-ca-cert-publisher 2023-12-26T03:42:55Z

system:controller:route-controller 2023-12-26T03:42:55Z

system:controller:service-account-controller 2023-12-26T03:42:55Z

system:controller:service-controller 2023-12-26T03:42:55Z

system:controller:statefulset-controller 2023-12-26T03:42:55Z

system:controller:ttl-after-finished-controller 2023-12-26T03:42:55Z

system:controller:ttl-controller 2023-12-26T03:42:55Z

system:coredns 2023-12-26T03:42:58Z

system:discovery 2023-12-26T03:42:54Z

system:heapster 2023-12-26T03:42:54Z

system:kube-aggregator 2023-12-26T03:42:54Z

system:kube-controller-manager 2023-12-26T03:42:54Z

system:kube-dns 2023-12-26T03:42:54Z

system:kube-scheduler 2023-12-26T03:42:55Z

system:kubelet-api-admin 2023-12-26T03:42:54Z

system:monitoring 2023-12-26T03:42:54Z

system:node 2023-12-26T03:42:54Z

system:node-bootstrapper 2023-12-26T03:42:54Z

system:node-problem-detector 2023-12-26T03:42:54Z

system:node-proxier 2023-12-26T03:42:55Z

system:persistent-volume-provisioner 2023-12-26T03:42:54Z

system:public-info-viewer 2023-12-26T03:42:54Z

system:service-account-issuer-discovery 2023-12-26T03:42:55Z

system:volume-scheduler 2023-12-26T03:42:54Z

view 2023-12-26T03:42:54Z

Look at the cluster administrator role cluster-admin in detail; the * means all permissions on all resources:

kubectl describe clusterroles cluster-admin

Check the service account again:

kubectl describe serviceaccount/kubernetes-dashboard -n kubernetes-dashboard

Bind the dashboard account

Bind the kubernetes-dashboard service account to the cluster-admin ClusterRole.

vim kubernetes-dashboard-ClusterRoleBinding.yaml

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
```

Create the binding between the kubernetes-dashboard service account and the cluster-admin ClusterRole:

```shell
kubectl create -f kubernetes-dashboard-ClusterRoleBinding.yaml
```

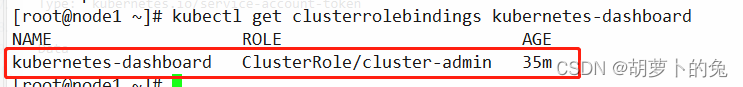

Verify that the binding succeeded:

kubectl get clusterrolebindings kubernetes-dashboard

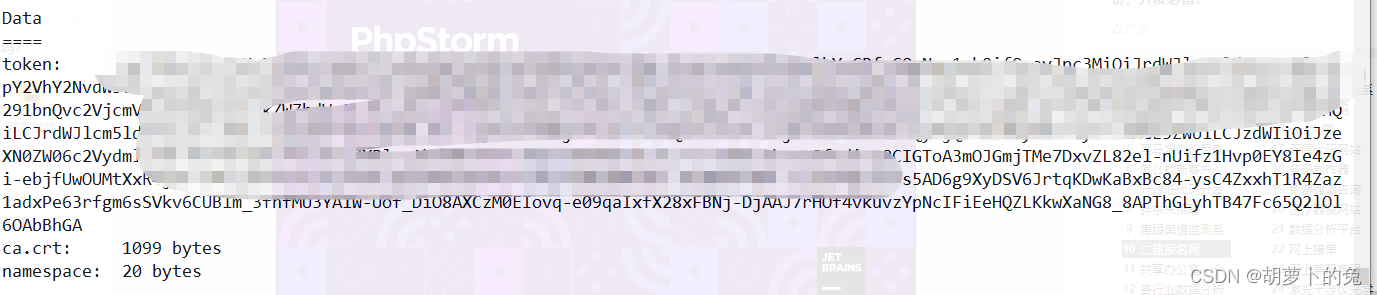

Generate the token again:

kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-token | awk '{print $1}') |grep token|tail -1

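Install ingress

Note:

On April 3, 2023, the old image registry k8s.gcr.io was frozen; images for Kubernetes and its related subprojects are no longer pushed to it.

The registry.k8s.io registry replaces it.

Download: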

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.47.0/deploy/static/provider/baremetal/deploy.yaml

# Change the image

vi deploy.yaml

# Set the value of image to:

registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/ingress-nginx-controller:v0.46.0
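The image swap can also be done with a filter instead of a manual edit. This is a sketch only: `replace_image` is a hypothetical helper, and the k8s.gcr.io prefix in the usage comment is an assumption about what the downloaded deploy.yaml contains, so check the file before relying on it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite every "image:" line whose value starts with OLD.
# Reads a manifest on stdin and writes the result to stdout.
replace_image() {
  awk -v old="$1" -v new="$2" '
    $1 == "image:" && index($2, old) == 1 { sub(/image:.*/, "image: " new) }
    { print }
  '
}

# Assumed usage (not run here):
#   replace_image k8s.gcr.io/ingress-nginx/controller \
#     registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/ingress-nginx-controller:v0.46.0 \
#     < deploy.yaml > deploy.patched.yaml
#   kubectl apply -f deploy.patched.yaml
```

Writing to a separate file keeps the downloaded manifest intact, so the change is easy to review with diff before applying it.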

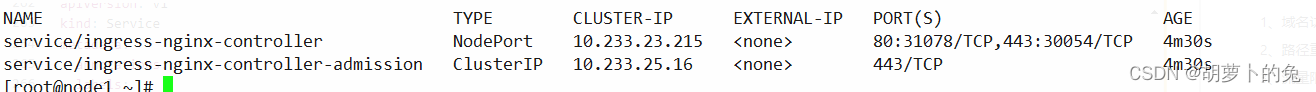

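After applying deploy.yaml with kubectl apply -f deploy.yaml, check the deployment result:

kubectl get pod,svc -n ingress-nginx

If the download fails, use the backup copy of the file below.

If an image is no longer available, look for an equivalent in the Alibaba Cloud image registry.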

apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
---
# Source: ingress-nginx/templates/controller-serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
automountServiceAccountToken: true
---
# Source: ingress-nginx/templates/controller-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
data:
---
# Source: ingress-nginx/templates/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io # k8s 1.14+
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - extensions
      - networking.k8s.io # k8s 1.14+
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io # k8s 1.14+
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
---
# Source: ingress-nginx/templates/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/controller-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - configmaps
      - pods
      - secrets
      - endpoints
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io # k8s 1.14+
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
      - networking.k8s.io # k8s 1.14+
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io # k8s 1.14+
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - configmaps
    resourceNames:
      - ingress-controller-leader-nginx
    verbs:
      - get
      - update
  - apiGroups:
      - ''
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
---
# Source: ingress-nginx/templates/controller-rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/controller-service-webhook.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  type: ClusterIP
  ports:
    - name: https-webhook
      port: 443
      targetPort: webhook
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---
# Source: ingress-nginx/templates/controller-service.yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: http
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---
# Source: ingress-nginx/templates/controller-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/component: controller
  revisionHistoryLimit: 10
  minReadySeconds: 0
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/component: controller
    spec:
      dnsPolicy: ClusterFirst
      containers:
        - name: controller
          # Image value taken from the "Change the image" step earlier in this post.
          image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/ingress-nginx-controller:v0.46.0
          imagePullPolicy: IfNotPresent
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --ingress-class=nginx
            - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
            - --validating-webhook=:8443
            - --validating-webhook-certificate=/usr/local/certificates/cert
            - --validating-webhook-key=/usr/local/certificates/key
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 101
            allowPrivilegeEscalation: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: LD_PRELOAD
              value: /usr/local/lib/libmimalloc.so
          livenessProbe:
            failureThreshold: 5
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
            - name: webhook
              containerPort: 8443
              protocol: TCP
          volumeMounts:
            - name: webhook-cert
              mountPath: /usr/local/certificates/
              readOnly: true
          resources:
            requests:
              cpu: 100m
              memory: 90Mi
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
        - name: webhook-cert
          secret:
            secretName: ingress-nginx-admission
---
# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml
# before changing this value, check the required kubernetes version
# https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  name: ingress-nginx-admission
webhooks:
  - name: validate.nginx.ingress.kubernetes.io
    matchPolicy: Equivalent
    rules:
      - apiGroups:
          - networking.k8s.io
        apiVersions:
          - v1beta1
        operations:
          - CREATE
          - UPDATE
        resources:
          - ingresses
    failurePolicy: Fail
    sideEffects: None
    admissionReviewVersions:
      - v1
      - v1beta1
    clientConfig:
      service:
        namespace: ingress-nginx
        name: ingress-nginx-controller-admission
        path: /networking/v1beta1/ingresses
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - admissionregistration.k8s.io
    resources:
      - validatingwebhookconfigurations
    verbs:
      - get
      - update
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - secrets
    verbs:
      - get
      - create
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-create
  annotations:
    helm.sh/hook: pre-install,pre-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
spec:
  template:
    metadata:
      name: ingress-nginx-admission-create
      labels:
        helm.sh/chart: ingress-nginx-3.33.0
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 0.47.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: create
          image: docker.io/jettech/kube-webhook-certgen:v1.5.1
          imagePullPolicy: IfNotPresent
          args:
            - create
            - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
            - --namespace=$(POD_NAMESPACE)
            - --secret-name=ingress-nginx-admission
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-patch
  annotations:
    helm.sh/hook: post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-3.33.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.47.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  namespace: ingress-nginx
spec:
  template:
    metadata:
      name: ingress-nginx-admission-patch
      labels:
        helm.sh/chart: ingress-nginx-3.33.0
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 0.47.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: patch
          image: docker.io/jettech/kube-webhook-certgen:v1.5.1
          imagePullPolicy: IfNotPresent
          args:
            - patch
            - --webhook-name=ingress-nginx-admission
            - --namespace=$(POD_NAMESPACE)
            - --patch-mutating=false
            - --secret-name=ingress-nginx-admission
            - --patch-failure-policy=Fail
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000

Common commands

kubectl -n <namespace> describe pod <pod-name>

kubectl -n kube-system describe pod coredns-5495dd7c88-bhs7w

You can also view the logs:

kubectl logs <pod-name> -n <namespace> --previous

This command prints the log output of the previous container instance.

# List the pods

kubectl get pod --all-namespaces

kubectl get pod -A

# Delete all pods in the namespace

kubectl delete pods --all -n kube-system

List tainted nodes:

kubectl get no -o yaml | grep taint -A 5

View the details of a pod in a namespace:

kubectl -n kube-system describe pod coredns-5495dd7c88-2pspf

View the kubelet.service logs:

journalctl -f -u kubelet.service

View the cluster's Pod CIDR:

kubectl cluster-info dump | grep -m 1 cluster-cidr

Get the flannel configuration:

kubectl get configmap -n kube-flannel kube-flannel-cfg -o yaml

View cluster information:

kubectl cluster-info

kubectl cluster-info dump

View and edit the flannel ConfigMap:

kubectl edit configmap -n kube-flannel kube-flannel-cfg

Search:

find / -name "*flannel.yml"

find <directory> -type f -exec grep -H "<keyword>" {} +

find / -type f -exec grep -H "10.244.0.0" {} +

find / -type f -exec grep -H "10.244.0.0" {} +

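Problems

Kubernetes troubleshooting: The connection to the server localhost:8080 was refused - did you specify the right host or port?

Symptom:

After installing the Kubernetes master (manager) node, kubectl get nodes always fails:

kubectl get nodes

The connection to the server localhost:8080 was refused - did you specify the right host or port?

Cause

The Kubernetes master was not bound to this machine when the cluster was initialized: kubectl has no kubeconfig to read, so it falls back to localhost:8080 and cannot find the API server.

Solution

Setting an environment variable on this machine solves the problem.

Steps

Step 1: set the environment variable

The details depend on your environment; this records how to set the variable on Linux.

Option 1: edit the file

vim /etc/profile

Add the new environment variable at the bottom: `export KUBECONFIG=/etc/kubernetes/admin.conf`

Option 2: append to the file directly

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile

Step 2: apply the change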

source /etc/profile
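Running the echo command from option 2 more than once leaves duplicate lines in /etc/profile. A small sketch of an idempotent variant follows; the helper name `ensure_line` is hypothetical and not part of any Kubernetes tooling.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append LINE to FILE only if FILE does not
# already contain exactly that line.
ensure_line() {
  local line=$1 file=$2
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Assumed usage (as root):
#   ensure_line 'export KUBECONFIG=/etc/kubernetes/admin.conf' /etc/profile
```

Because the check matches the whole line literally, repeated runs leave the file unchanged.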

Error from server (AlreadyExists): error when creating "kubernetes-dashboard-ClusterRoleBinding.yaml": clusterrolebindings.rbac.authorization.k8s.io "kubernetes-dashboard" already exists

Apply the resource definition to the Kubernetes cluster:

kubectl apply -f kubernetes-dashboard-ClusterRoleBinding.yaml

kubectl apply -f kubernetes-dashboard.yaml is a command that deploys resources to a Kubernetes cluster. Its parts are:

kubectl: the Kubernetes command-line tool, used to interact with the cluster.

apply: a kubectl subcommand that applies a resource definition to the cluster. If the resource already exists, apply updates it to match the definition; if it does not exist, apply creates it.

-f or --filename: specifies the path of the file that contains the resource definitions, here kubernetes-dashboard.yaml. The file should contain YAML descriptions of one or more resources.

In short, kubectl apply -f kubernetes-dashboard.yaml creates or updates cluster resources from the definitions in kubernetes-dashboard.yaml.

If kubectl create reports the AlreadyExists error above, delete the resources defined in kubernetes-dashboard-ClusterRoleBinding.yaml and then create them again:

kubectl delete -f kubernetes-dashboard-ClusterRoleBinding.yaml


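kubectl auto-completion

1. Install:

sudo yum install bash-completion

2. Find where it was installed:

whereis bash-completion

bash-completion: /usr/share/bash-completion

3. Load the Bash auto-completion script:

source /usr/share/bash-completion/bash_completion

4. Reload the shell configuration: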

source ~/.bashrc
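The steps above enable Bash completion in general; kubectl's own completions still have to be loaded. The fragment below is a sketch of lines that could be added to ~/.bashrc, assuming bash-completion is installed at the path shown above. `kubectl completion bash` is the subcommand kubectl provides for this purpose; the guards are an addition so the file stays harmless on machines without kubectl.

```shell
# ~/.bashrc fragment (sketch): load bash-completion, then kubectl's completions.
if [ -f /usr/share/bash-completion/bash_completion ]; then
  source /usr/share/bash-completion/bash_completion
fi
if command -v kubectl >/dev/null 2>&1; then
  source <(kubectl completion bash)
fi
```

After `source ~/.bashrc`, typing `kubectl get po` followed by Tab should complete resource names.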
