Flume Overview

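1) Flume is a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large volumes of log data. It is usually deployed on Linux, and the bin/flume-ng launcher used below is a shell script.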

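2) Flume has a simple, flexible architecture based on streaming data flows. To use it, you configure the three components of an agent (source, channel, and sink) in a configuration file and then start the agent with a single command.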

3) In a typical real-time pipeline, Flume and Kafka collect the data, Spark or Storm process it in real time, and Impala serves interactive queries.

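4) flume-ng usage template. Here --conf points to the directory holding flume-env.sh and log4j.properties, --conf-file is the agent's configuration file, and Agent-Name must match the prefix of the properties in that file (a2 in the examples below):

    bin/flume-ng agent --conf conf --name Agent-Name --conf-file Agent-File

Flume monitors a log file in real time and extracts the data to HDFS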
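Items 2 and 4 depend on a naming contract. Every key in the configuration file starts with the agent name passed to --name. Each source lists the channel(s) it writes to, and each sink names exactly one channel it reads from. The Python sketch below checks that contract for a plain key = value file. The helpers parse_properties and check_agent are hypothetical names used for illustration and are not part of Flume. The parser deliberately ignores Java-properties escapes and line continuations.

```python
"""Minimal sketch: check the source -> channel -> sink wiring of one Flume agent.

Assumption: a simple `key = value` properties text like the ones in this post;
Java-properties escapes and line continuations are NOT handled.
"""


def parse_properties(text: str) -> dict[str, str]:
    """Parse `key = value` lines, skipping blank lines and `#`/`!` comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def check_agent(text: str, agent: str) -> list[str]:
    """Return wiring problems for `agent` (the value given to --name); [] means OK."""
    props = parse_properties(text)
    # Component names declared by the agent, e.g. a2.sources = r2
    names = {kind: props.get(f"{agent}.{kind}", "").split()
             for kind in ("sources", "channels", "sinks")}
    problems = []
    for kind, declared in names.items():
        if not declared:
            problems.append(f"no {kind} declared for agent '{agent}'")
    channels = set(names["channels"])
    # Every declared component needs a type (catches typos such as `typ`).
    for kind in ("sources", "channels", "sinks"):
        for name in names[kind]:
            if f"{agent}.{kind}.{name}.type" not in props:
                problems.append(f"{kind[:-1]} '{name}' has no type")
    # A source may write to several channels ...
    for src in names["sources"]:
        bound = props.get(f"{agent}.sources.{src}.channels", "").split()
        if not bound:
            problems.append(f"source '{src}' is not bound to any channel")
        for ch in bound:
            if ch not in channels:
                problems.append(f"source '{src}' uses undeclared channel '{ch}'")
    # ... but a sink reads from exactly one channel.
    for sink in names["sinks"]:
        ch = props.get(f"{agent}.sinks.{sink}.channel", "")
        if ch not in channels:
            problems.append(f"sink '{sink}' uses undeclared channel '{ch}'")
    return problems
```

The checker reports a misspelt key such as a2.sources.r2.typ as a source with no type. It reports a sink bound to a channel name that was never declared as an undeclared channel.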

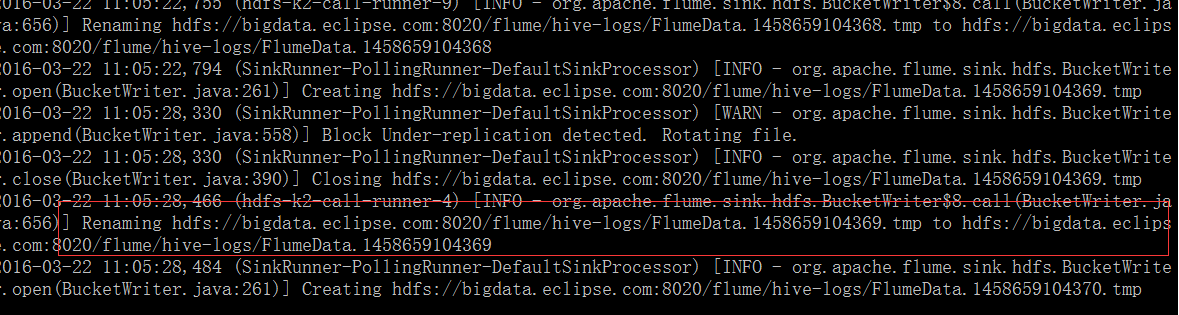
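In step 1 below, the exec source runs tail -F and turns each line the command prints into an event. The Python sketch that follows imitates the tail -F part in a simplified, poll-based way. It reopens the file by name on every poll and starts over when the file has been rotated or truncated.

FileFollower is a hypothetical class, not a Flume or coreutils API. Unlike real tail, it starts at the current end of the file instead of first printing the last 10 lines. The Flume user guide also warns that the exec source cannot guarantee delivery: if an event cannot be put into the channel, that data is lost.

```python
"""Simplified, poll-based sketch of `tail -F` semantics (follow a file by name).

Assumptions: newline-terminated text records; rotation is detected by an inode
change and truncation by the file shrinking. Real `tail -F` differs in detail.
"""
import os


class FileFollower:
    """Reopen the file by name on every poll and return newly appended lines."""

    def __init__(self, path: str, from_end: bool = True):
        self.path = path
        self.inode = None      # inode of the file we are currently following
        self.offset = 0        # bytes already consumed from that file
        self.pending = b""     # trailing partial line, kept until its newline arrives
        if from_end and os.path.exists(path):
            st = os.stat(path)
            self.inode, self.offset = st.st_ino, st.st_size

    def poll(self) -> list[str]:
        """Return complete lines appended since the previous poll."""
        try:
            st = os.stat(self.path)
        except FileNotFoundError:
            return []  # file missing (e.g. mid-rotation): try again on the next poll
        if st.st_ino != self.inode or st.st_size < self.offset:
            # A new file took this name (rotation) or the file was truncated:
            # start reading it from the beginning.
            self.inode, self.offset, self.pending = st.st_ino, 0, b""
        with open(self.path, "rb") as f:
            f.seek(self.offset)
            data = f.read()
        self.offset += len(data)
        chunks = (self.pending + data).split(b"\n")
        self.pending = chunks.pop()  # last chunk has no newline yet (or is b"")
        return [c.decode("utf-8", errors="replace") for c in chunks]
```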

1) The flume-tail-conf.properties configuration file is as follows:

    a2.sources = r2
    a2.channels = c2
    a2.sinks = k2
    ### define sources
    # exec source: runs the command below and turns each output line into an event
    a2.sources.r2.type = exec
    a2.sources.r2.command = tail -F /opt/app/cdh5.3.6/hive-0.13.1-cdh5.3.6/logs/hive.log
    a2.sources.r2.shell = /bin/bash -c
    ### define channels
    # memory channel: buffers up to 1000 events, at most 100 per transaction
    a2.channels.c2.type = memory
    a2.channels.c2.capacity = 1000
    a2.channels.c2.transactionCapacity = 100
    ### define sinks
    a2.sinks.k2.type = hdfs
    a2.sinks.k2.hdfs.path = hdfs://bigdata.eclipse.com:8020/flume/hive-logs
    a2.sinks.k2.hdfs.fileType = DataStream
    a2.sinks.k2.hdfs.writeFormat = Text
    a2.sinks.k2.hdfs.batchSize = 10
    ### bind the sources and sinks to the channels
    a2.sources.r2.channels = c2
    a2.sinks.k2.channel = c2

2) Run the monitoring command:

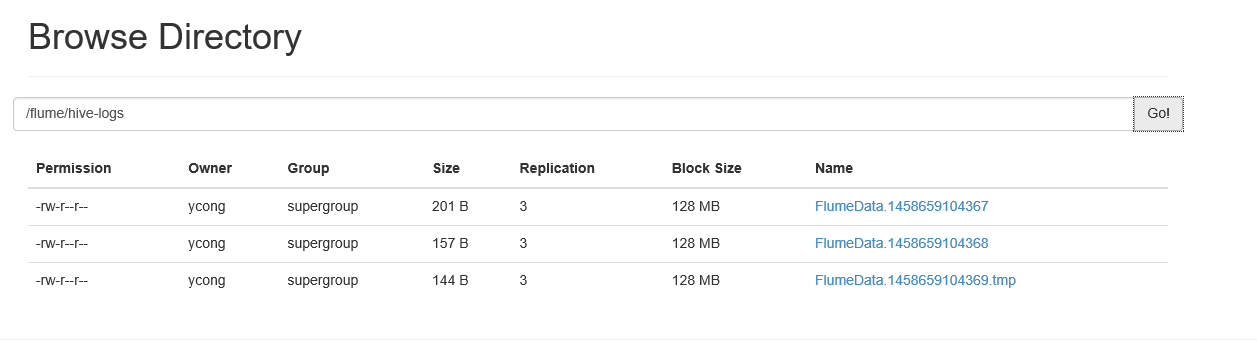

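    bin/flume-ng agent --conf conf --name a2 --conf-file conf/flume-tail-conf.properties -Dflume.root.logger=INFO,console

The -Dflume.root.logger=INFO,console option sends the agent's INFO-level log to the console, so startup errors show up immediately.

3) Check the results. The collected Hive log lines are written to files under hdfs://bigdata.eclipse.com:8020/flume/hive-logs.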
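The memory channel in step 1 is a bounded in-memory buffer between the exec source and the HDFS sink. The toy Python model below shows how the three numbers in flume-tail-conf.properties interact. ToyMemoryChannel is hypothetical and much simpler than Flume's MemoryChannel.

- capacity=1000 caps the number of buffered events.
- transactionCapacity=100 caps the number of events per put or take transaction.
- The sink's hdfs.batchSize=10 is modeled as the number of events the sink takes per transaction. The Flume user guide describes hdfs.batchSize as the number of events written to a file before it is flushed to HDFS.

```python
"""Toy model (not Flume code) of a memory channel between a source and a sink."""
from collections import deque


class ToyMemoryChannel:
    """Bounded FIFO buffer with all-or-nothing, size-limited transactions."""

    def __init__(self, capacity: int, transaction_capacity: int):
        self.capacity = capacity                          # like a2.channels.c2.capacity
        self.transaction_capacity = transaction_capacity  # like ...c2.transactionCapacity
        self.queue = deque()

    def put_batch(self, events: list) -> bool:
        """Source side: commit a whole batch of events, or add none of them."""
        if len(events) > self.transaction_capacity:
            # Too many events for one transaction; the put fails outright.
            raise ValueError("batch larger than transactionCapacity")
        if len(self.queue) + len(events) > self.capacity:
            return False  # channel full: nothing is added, the source must retry
        self.queue.extend(events)
        return True

    def take_batch(self, max_events: int) -> list:
        """Sink side: take up to max_events, never more than transactionCapacity."""
        n = min(max_events, self.transaction_capacity, len(self.queue))
        return [self.queue.popleft() for _ in range(n)]


SINK_BATCH_SIZE = 10  # modeled on a2.sinks.k2.hdfs.batchSize
```

A full channel refuses new events, and a memory channel trades durability for speed: events still buffered are lost if the agent process dies. The file channel in the next example persists events to disk instead.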

Flume monitors a log directory in real time and extracts the data to HDFS

1) The flume-spooling-conf.properties configuration file is as follows:

    a2.sources = r2
    a2.channels = c2
    a2.sinks = k2
    ### define sources
    # spooldir source: skips files still being written (*.tmp) and renames
    # fully ingested files by appending the .delete suffix
    a2.sources.r2.type = spooldir
    a2.sources.r2.spoolDir = /opt/datas/spooling
    a2.sources.r2.ignorePattern = ^(.)*\\.tmp$
    a2.sources.r2.fileSuffix = .delete
    ### define channels
    # file channel: events are persisted to disk under these directories
    a2.channels.c2.type = file
    a2.channels.c2.checkpointDir = /opt/app/cdh5.3.6/flume-1.5.0-cdh5.3.6/spoolinglogs/ckpdir
    a2.channels.c2.dataDirs = /opt/app/cdh5.3.6/flume-1.5.0-cdh5.3.6/spoolinglogs/datadir
    ### define sinks
    a2.sinks.k2.type = hdfs
    a2.sinks.k2.hdfs.path = hdfs://bigdata.eclipse.com:8020/flume/spooling-logs
    a2.sinks.k2.hdfs.fileType = DataStream
    a2.sinks.k2.hdfs.writeFormat = Text
    a2.sinks.k2.hdfs.batchSize = 10
    ### bind the sources and sinks to the channels
    a2.sources.r2.channels = c2
    a2.sinks.k2.channel = c2

2) Run the command:

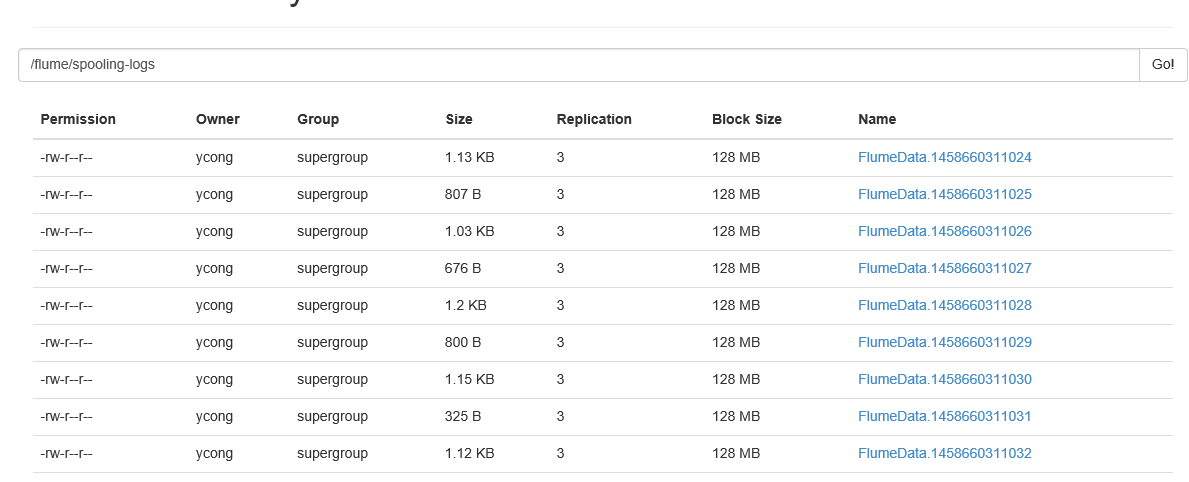

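    bin/flume-ng agent --conf conf --name a2 --conf-file conf/flume-spooling-conf.properties -Dflume.root.logger=INFO,console

3) The results are as follows. The contents of files dropped into /opt/datas/spooling are written under hdfs://bigdata.eclipse.com:8020/flume/spooling-logs, and each fully ingested local file is renamed with the .delete suffix.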
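The Flume user guide requires files in the spooling directory to be complete and uniquely named. If a file is written to after being placed there, or a file name is reused, Flume logs an error and stops processing. A common pattern is to write the file under a name that matches ignorePattern (here *.tmp) and rename it once it is complete.

The Python sketch below does that. publish_to_spool is a hypothetical helper, and it assumes spoolDir already exists. Because the staging .tmp file is created inside spoolDir itself, os.replace is an atomic rename on POSIX.

Note also that fileSuffix = .delete only renames ingested files (for example a.log becomes a.log.delete). Deleting them is controlled by the separate deletePolicy property (never by default, or immediate).

```python
"""Sketch: publish a complete, uniquely named file into the spooling directory."""
import os
import re
import uuid

# Same regex as ignorePattern in flume-spooling-conf.properties; the properties
# file writes "\\." because properties escaping turns it into "\." when loaded.
IGNORE_PATTERN = re.compile(r"^(.)*\.tmp$")


def publish_to_spool(spool_dir: str, lines: list, name: str = "") -> str:
    """Write lines to <name>.tmp inside spool_dir, then rename it to <name>.

    While the name ends in .tmp the spooldir source ignores the file; the
    rename makes the finished file appear under its final name in one step.
    """
    # The spooldir source needs unique file names, so default to a UUID-based one.
    name = name or f"events-{uuid.uuid4().hex}.log"
    final_path = os.path.join(spool_dir, name)
    tmp_path = final_path + ".tmp"
    with open(tmp_path, "w", encoding="utf-8") as f:
        f.writelines(line + "\n" for line in lines)
        f.flush()
        os.fsync(f.fileno())  # make sure the data is on disk before publishing
    os.replace(tmp_path, final_path)  # atomic rename within the same directory
    return final_path
```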

Flume: extracting data from a monitored directory in real time
