Redis Internals: Setting Up a Cluster

Introduction

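Redis first supported clustering through master-replica replication: if the master went down, a replica had to be promoted to master by hand. Sentinel mode was later introduced for high availability. Sentinel monitors the master and its replicas and automatically promotes a replica when the master fails. It still cannot scale out dynamically, because all writes and all data still go to a single master. Redis 3.x therefore introduced Cluster mode.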

One master, one replica

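This is the most basic replication model: the master handles write requests and the replica handles read requests. A common choice is RDB persistence on the master and AOF persistence on the replica. The minimal configs below only set the port and the replication source: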

- Master configuration (6379.conf)

# If port 0 is specified Redis will not listen on a TCP socket.

port 6379

- Replica configuration (6389.conf)

# If port 0 is specified Redis will not listen on a TCP socket.

port 6389

# replicaof <masterip> <masterport>

replicaof 127.0.0.1 6379

- Start both instances

./src/redis-server 6379.conf

./src/redis-server 6389.conf

- Connect to the replica with redis-cli -p <port>. Replicas are read-only (replica-read-only yes is the default), so writes are rejected:

ZBMAC-2f32839f6:redis yangyanping$ ./src/redis-cli -p 6389

127.0.0.1:6389> get name

"tom"

127.0.0.1:6389> set name jack

(error) READONLY You can't write against a read only replica.

127.0.0.1:6389>

- Check the replication info on the master

127.0.0.1:6379> info

# Replication

role:master

connected_slaves:1

slave1:ip=127.0.0.1,port=6389,state=online,offset=686,lag=0

master_replid:578fe96cb7542fd63b107b8d61465318388b146b

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:686

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:686
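For monitoring, the # Replication section of INFO can be parsed as key:value lines. The sketch below assumes the text looks exactly as redis-cli prints it. It computes how many bytes each replica trails master_repl_offset. `parse_replication_info` and `replica_lag_bytes` are hypothetical helper names and are not part of any Redis client.

```python
def parse_replication_info(text: str) -> dict:
    """Parse the '# Replication' section of INFO into a dict.

    slaveN entries ('ip=...,port=...,state=...,offset=...,lag=...') are split
    further into a nested dict of their comma-separated fields.
    """
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):  # skip blanks and section headers
            continue
        key, _, value = line.partition(":")   # split on the first ':' only
        if key.startswith("slave") and key[5:].isdigit():
            info[key] = dict(item.split("=", 1) for item in value.split(","))
        else:
            info[key] = value
    return info


def replica_lag_bytes(info: dict) -> dict:
    """Bytes each replica is behind the master's replication offset."""
    master_offset = int(info["master_repl_offset"])
    return {
        f"{r['ip']}:{r['port']}": master_offset - int(r["offset"])
        for r in info.values()
        if isinstance(r, dict)
    }


sample = """# Replication
role:master
connected_slaves:1
slave0:ip=127.0.0.1,port=6389,state=online,offset=238,lag=1
master_repl_offset:238
repl_backlog_size:1048576
"""

info = parse_replication_info(sample)
print(info["role"], info["connected_slaves"])  # master 1
print(replica_lag_bytes(info))                 # {'127.0.0.1:6389': 0}
```

A lag of 0 bytes means the replica has applied everything the master has written so far.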

One master, multiple replicas

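A master can have multiple replicas, but each replica has exactly one master. This layout suits read-heavy, write-light workloads: the replicas share the read load and raise overall throughput: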

- Replica configuration (6399.conf)

# If port 0 is specified Redis will not listen on a TCP socket.

port 6399

# replicaof <masterip> <masterport>

replicaof 127.0.0.1 6379

- Start all three instances

./src/redis-server 6379.conf

./src/redis-server 6389.conf

./src/redis-server 6399.conf

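- Check the replication info on the master. With both replicas attached, connected_slaves should read 2; the capture below was taken while only 6389 was connected.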

# Replication

role:master

connected_slaves:1

slave0:ip=127.0.0.1,port=6389,state=online,offset=238,lag=1

master_replid:8150351eeee5569dabf93616e6a285dbd1d8d4ad

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:238

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:238

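Adding a replica at runtime

A running instance can also be attached to a master on the fly with SLAVEOF. The command was renamed REPLICAOF in Redis 5.0, and SLAVEOF still works as an alias. Afterwards, info on 6389 reports role:slave: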

127.0.0.1:6389> slaveof 127.0.0.1 6379

127.0.0.1:6389> info

# Replication

role:slave

master_host:127.0.0.1

master_port:6379

master_link_status:up

master_last_io_seconds_ago:8

master_sync_in_progress:0

slave_repl_offset:266

slave_priority:100

slave_read_only:1

connected_slaves:0

master_replid:8150351eeee5569dabf93616e6a285dbd1d8d4ad

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:266

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:266

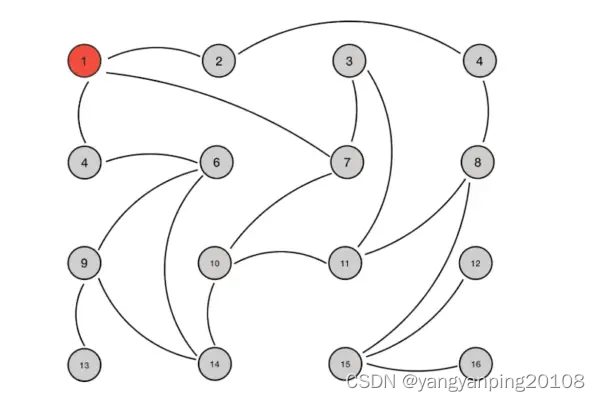

Tree-shaped (cascading) replication

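One master with many replicas balances the read load, but the master's replication load grows linearly with the number of replicas. A tree-shaped topology spreads that load. Some replicas replicate from another replica instead of from the master (for example, a replica whose config contains replicaof 127.0.0.1 6389), so the master only has to feed its direct replicas.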

How replication works

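Master-replica replication roughly falls into three phases: connection setup (the preparation phase), data synchronization, and command propagation.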

Once replicaof has been executed on the replica, replication proceeds as follows:

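- Save the master's info: once replicaof is configured, the replica stores the master's address.
- Establish the socket connection: a periodic task on the replica discovers the master and tries to connect to it.
- Send PING: the replica periodically sends PING to the master, which answers PONG. If the master does not answer PONG, or times out because it is blocked, the replica drops the connection and pings the master again on the next run of the periodic task.
- Authenticate: if the master has ACLs enabled or requirepass set, the replica must be configured with masteruser and masterauth to connect successfully.
- Synchronize the dataset: the first connection triggers a full synchronization.
- Replicate commands continuously: after the full sync, the master keeps streaming write commands to the replica (incremental sync).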

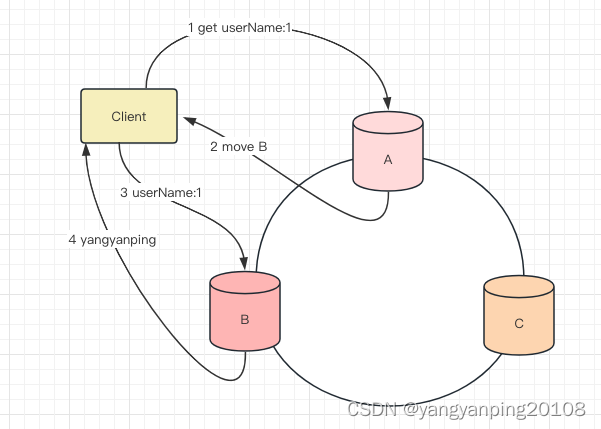
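The sync step does not always need a full copy. Whether it does depends on the PSYNC request the replica sends and on what is still in the master's replication backlog. The request carries the replica's last known replication ID and the next offset it needs. The backlog state shows up in INFO as master_replid, repl_backlog_first_byte_offset and repl_backlog_histlen.

The sketch below is a simplified model of the master's decision, not Redis's actual code, which performs more checks. `psync_reply` is a hypothetical name.

```python
def psync_reply(req_replid: str, req_offset: int, replid: str, replid2: str,
                second_repl_offset: int, backlog_first_byte: int,
                backlog_histlen: int) -> str:
    """Return 'CONTINUE' (partial resync) or 'FULLRESYNC'.

    req_replid/req_offset model 'PSYNC <replid> <offset>' sent by the replica;
    a replica syncing for the first time sends 'PSYNC ? -1'.
    """
    # The replica must share our history: the current replication ID, or the
    # previous one (replid2) as long as it does not ask past the switch point.
    same_history = req_replid == replid or (
        req_replid == replid2 and req_offset <= second_repl_offset
    )
    if not same_history:
        return "FULLRESYNC"
    # The requested bytes must still be inside the backlog window.
    backlog_end = backlog_first_byte + backlog_histlen
    if req_offset < backlog_first_byte or req_offset > backlog_end:
        return "FULLRESYNC"
    return "CONTINUE"


REPLID = "578fe96cb7542fd63b107b8d61465318388b146b"
ZERO = "0" * 40
# A replica that applied up to offset 686 asks for byte 687 (backlog: 1..687).
print(psync_reply(REPLID, 687, REPLID, ZERO, -1, 1, 686))  # CONTINUE
print(psync_reply("?", -1, REPLID, ZERO, -1, 1, 686))      # FULLRESYNC
```

This is why the repl_backlog_size setting matters. A replica that is disconnected for longer than the backlog can cover falls back to a full resynchronization.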

Gossip

The Gossip protocol is also called the Epidemic Protocol, and it goes by several other names, such as the "rumor algorithm" and the "epidemic spreading algorithm".

Let's walk through a concrete example to see how a message spreads via Gossip from start to finish.

To keep things clear, we first fix a few assumptions:

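1. Gossip spreads messages periodically; here the period is 1 second.
2. An infected node picks k random neighbors (the fan-out) to pass the message to. Here the fan-out is 3, so a node sends to at most 3 nodes each time.
3. Each time, a node only picks nodes it has not sent the message to yet.
4. A node that received the message never sends it back to its sender: if A -> B, then B does not send it to A.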

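Note: Gossip is asynchronous. A sender does not check whether the recipient got the message and does not wait for a response; whether or not the message arrived, it keeps sending to its neighbors every second. Asynchrony is its strength, and message redundancy is its weakness.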

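Each node in the cluster picks the peers it talks to according to certain rules. A node may know all other nodes or only some of them. As long as these nodes can communicate with each other, they eventually converge to a consistent state.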
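The four rules above can be simulated directly. The sketch below assumes every node knows every other node, which is a simplification. It counts how many rounds it takes until all nodes have the message and how many messages were sent. Every message beyond n - 1 is a redundant delivery, which is the cost noted above. `gossip` is a hypothetical function, not Redis's cluster-bus logic.

```python
import random


def gossip(n_nodes: int, fanout: int = 3, seed: int = 0):
    """Simulate rules 1-4 on n_nodes nodes that all know each other.

    Returns (rounds, messages): 1-second rounds until every node has the
    message, and the total number of messages sent.
    """
    rng = random.Random(seed)
    sender_of = {0: None}                       # node 0 starts "infected"
    sent_to = {i: set() for i in range(n_nodes)}
    rounds = messages = 0
    while len(sender_of) < n_nodes:
        rounds += 1                             # rule 1: one round per second
        newly_infected = {}
        for node, sender in list(sender_of.items()):
            # rules 3 and 4: skip already-contacted nodes and our own sender
            candidates = [p for p in range(n_nodes)
                          if p != node and p != sender and p not in sent_to[node]]
            # rule 2: at most `fanout` random targets, no acknowledgements
            for peer in rng.sample(candidates, min(fanout, len(candidates))):
                sent_to[node].add(peer)
                messages += 1
                if peer not in sender_of and peer not in newly_infected:
                    newly_infected[peer] = node
        sender_of.update(newly_infected)        # they start gossiping next round
    return rounds, messages


rounds, messages = gossip(50)
print(f"{rounds} rounds, {messages} messages for 49 deliveries")
```

Because each infected node adds up to 3 new nodes per round, coverage grows roughly exponentially. The node count after r rounds is bounded by 4^r, so 50 nodes need at least 3 rounds.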

Redis Cluster design

Redis Cluster is decentralized. Every node stores data plus the state of the whole cluster, and every node is connected to every other node.

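Key characteristics:

- All Redis nodes are interconnected (PING-PONG mechanism) and talk to each other over an internal binary protocol that saves bandwidth and speeds up transfers.
- A node is only marked as failed once more than half of the master nodes in the cluster have detected it as unreachable.
- Clients connect directly to Redis nodes, with no proxy layer in between. A client does not need to connect to every node; connecting to any available node is enough.
- Redis Cluster maps all physical nodes onto the slots [0-16383] (not necessarily evenly) and maintains the node <-> slot <-> value mapping.
- The cluster pre-allocates 16384 slots (buckets). To place a key-value pair, it computes CRC16(key) mod 16384 to decide which slot the key goes into.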
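The slot formula is easy to check by hand. Redis Cluster uses the XMODEM variant of CRC16 (polynomial 0x1021, initial value 0). Because 16384 is a power of two, Redis computes the modulo as a bit mask (& 16383). The bit-by-bit sketch below uses illustrative function names and ignores hash tags, which are covered later. Its result for name matches the slot 5798 that CLUSTER KEYSLOT reports in the transcripts below.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16, polynomial 0x1021, initial value 0 (the XMODEM variant).

    Processes one bit at a time; real implementations use a lookup table.
    """
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """CRC16(key) mod 16384, without hash-tag handling."""
    return crc16_xmodem(key.encode("utf-8")) % 16384


print(hex(crc16_xmodem(b"123456789")))  # 0x31c3 (standard check value)
print(key_slot("name"))                  # 5798
```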

Redis Cluster nodes

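- Slot assignment

Suppose we have three masters, A, B and C. They can be three ports on one machine or three separate servers. If the 16384 hash slots are distributed among them, each node covers the following range:

Node A covers 0-5460;

Node B covers 5461-10922;

Node C covers 10923-16383.

- Looking up data

Suppose that, for a key named key, the hash-slot algorithm gives CRC16('key') mod 16384 = 6782. The key is then stored on B, because 6782 falls in 5461-10922. Likewise, when I connect to any of A, B or C to read 'key', the same computation is done and the request is redirected to node B to fetch the data.

- Adding a master

When a new node D is added, Redis Cluster moves a portion of the slots from the front of each existing node's range to D. I try this out in the hands-on part below. The result looks roughly like this:

Node A covers 1365-5460

Node B covers 6827-10922

Node C covers 12288-16383

Node D covers 0-1364, 5461-6826, 10923-12287

Removing a node works the same way: once its slots have been moved to other nodes, the node can be removed.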
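The arithmetic in this example can be checked with a small slot table. The sketch below assumes, as the example does, that D takes slots from the front of each existing range so that all four nodes end up with 4096 each. The flat list is purely illustrative and is not how Redis stores its slot map.

```python
def build_table(ranges: dict) -> list:
    """Expand {node: [(start, end), ...]} into a 16384-entry slot -> node list."""
    table = [None] * 16384
    for node, spans in ranges.items():
        for start, end in spans:
            for slot in range(start, end + 1):
                table[slot] = node
    return table


def counts(table: list) -> dict:
    """Number of slots per node, in order of first appearance."""
    result = {}
    for node in table:
        result[node] = result.get(node, 0) + 1
    return result


before = build_table({"A": [(0, 5460)], "B": [(5461, 10922)], "C": [(10923, 16383)]})

# Hand the front part of each existing range to the new node D
after = list(before)
for start, end in [(0, 1364), (5461, 6826), (10923, 12287)]:
    for slot in range(start, end + 1):
        after[slot] = "D"

print(counts(before))  # {'A': 5461, 'B': 5462, 'C': 5461}
print(counts(after))   # {'D': 4096, 'A': 4096, 'B': 4096, 'C': 4096}
print(before[6782], "->", after[6782])  # B -> D
```

Slot 6782 from the lookup example moves from B to D. Any client that cached the old owner will be redirected the next time it asks.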

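Request routing works as follows:

- The client hashes the key to a slot in the range 0-16383.
- The client looks up the node that owns that slot in its copy of the cluster's slot map.
- If the node the client is connected to owns the slot, it handles the request directly and returns the result.
- If it does not own the slot, it returns a MOVED error telling the client which node to resend the request to.
- The client uses the information in the MOVED error to resend the request to the correct node.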

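This way Redis Cluster routes every request to the node that owns its key. Because clients simply follow redirects, slot ownership can change underneath them. It changes automatically when a replica is promoted after its master fails, and through resharding when nodes are added or removed. This is what lets the cluster provide load balancing and high availability.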
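The routing steps can be modelled with an in-memory fake cluster. The sketch below assumes a client that caches a slot -> node map and updates it whenever a node replies with MOVED <slot> <host:port>. For brevity, the slot is passed in instead of being computed with CRC16, and ASK redirects (used while a slot is being migrated) are left out. `MovedError`, `FakeNode` and `SlotCachingClient` are hypothetical stand-ins, not a real client library. Real cluster clients typically also load the whole map up front with CLUSTER SLOTS.

```python
class MovedError(Exception):
    """Stands in for a node's 'MOVED <slot> <host:port>' error reply."""

    def __init__(self, slot: int, address: str):
        super().__init__(f"MOVED {slot} {address}")
        self.slot, self.address = slot, address


class FakeNode:
    """Serves only the slots it owns and answers MOVED for all others."""

    def __init__(self, address: str, owned: range, cluster: dict):
        self.address, self.owned, self.cluster = address, owned, cluster
        self.data = {}

    def execute(self, cmd: str, slot: int, key: str, value=None):
        if slot not in self.owned:
            # Every node knows the full slot map, so it can name the owner.
            owner = next(n.address for n in self.cluster.values() if slot in n.owned)
            raise MovedError(slot, owner)
        if cmd == "SET":
            self.data[key] = value
            return "OK"
        return self.data.get(key)  # GET


class SlotCachingClient:
    """Caches slot -> node and updates the cache from MOVED replies."""

    def __init__(self, cluster: dict, seed_address: str):
        self.cluster, self.seed = cluster, seed_address
        self.slot_cache = {}
        self.redirects = 0

    def call(self, cmd: str, slot: int, key: str, value=None):
        address = self.slot_cache.get(slot, self.seed)  # unknown slot: ask any node
        for _ in range(5):                              # bound the redirect chain
            try:
                return self.cluster[address].execute(cmd, slot, key, value)
            except MovedError as err:
                self.redirects += 1
                self.slot_cache[err.slot] = err.address  # remember the real owner
                address = err.address
        raise RuntimeError("too many redirects")


cluster = {}
for addr, owned in [("127.0.0.1:7000", range(0, 5461)),
                    ("127.0.0.1:7001", range(5461, 10923)),
                    ("127.0.0.1:7002", range(10923, 16384))]:
    cluster[addr] = FakeNode(addr, owned, cluster)

client = SlotCachingClient(cluster, "127.0.0.1:7000")
print(client.call("SET", 5798, "name", "yyp"))  # OK (after one MOVED to 7001)
print(client.call("GET", 5798, "name"))         # yyp (straight to 7001 via the cache)
print(client.redirects)                         # 1
```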

Master-replica mode in Redis Cluster

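To keep data highly available, Redis Cluster adds master-replica replication: each master has one or more replicas. The master serves reads and writes, while the replicas pull data from the master as backups. When a master fails, one of its replicas is elected to take its place, so the cluster keeps running.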

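In the example above, the cluster has three masters: A, B and C. If none of them has a replica and B fails, the whole cluster becomes unavailable, and even the slots on A and C can no longer be accessed. This is the behavior with the default setting cluster-require-full-coverage yes.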

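So when building the cluster, always give every master at least one replica. For example, if the cluster has masters A, B and C plus replicas A1, B1 and C1, the system keeps working correctly even if B fails.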

B1 replicates B, so the cluster promotes B1 to be the new master and keeps serving requests. When B comes back, it rejoins as a replica of B1.

Note, however, that if B and B1 fail at the same time, the cluster can no longer serve requests correctly.
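These failure scenarios can be expressed as a coverage check. A master's slots stay available as long as the master or at least one of its replicas is up, assuming that replica gets promoted. With cluster-require-full-coverage yes (the default), the cluster as a whole only serves queries while every slot is covered.

The sketch below is simplified and ignores failover timing and the majority vote among masters. The topology dict and `cluster_state` are illustrative names.

```python
def cluster_state(topology: dict, down: set) -> str:
    """topology: {master: {"slots": (start, end), "replicas": [...]}}."""
    covered = 0
    for master, info in topology.items():
        start, end = info["slots"]
        # Slots survive if the master is up or some replica can be promoted.
        alive = master not in down or any(r not in down for r in info["replicas"])
        if alive:
            covered += end - start + 1
    return "ok" if covered == 16384 else "fail"


topology = {
    "A": {"slots": (0, 5460), "replicas": ["A1"]},
    "B": {"slots": (5461, 10922), "replicas": ["B1"]},
    "C": {"slots": (10923, 16383), "replicas": ["C1"]},
}
print(cluster_state(topology, {"B"}))        # ok   (B1 takes over)
print(cluster_state(topology, {"B", "B1"}))  # fail (slots 5461-10922 are lost)

no_replicas = {m: {"slots": i["slots"], "replicas": []} for m, i in topology.items()}
print(cluster_state(no_replicas, {"B"}))     # fail
```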

Starting the cluster

- Configure and start the nodes

Copy redis.conf into six config files: 7000.conf, 7001.conf, 7002.conf, 7003.conf, 7004.conf and 7005.conf.

The relevant settings in 7000.conf:

# If port 0 is specified Redis will not listen on a TCP socket.

port 7000

# cluster node enable the cluster support uncommenting the following:

cluster-enabled yes

cluster-config-file nodes-7000.conf

# Most other internal time limits are multiple of the node timeout.

cluster-node-timeout 15000

# persistence mode (AOF)

appendonly yes

The relevant settings in 7001.conf (7002-7005 follow the same pattern, each with its own port and node file):

# If port 0 is specified Redis will not listen on a TCP socket.

port 7001

# cluster node enable the cluster support uncommenting the following:

# enable cluster mode

cluster-enabled yes

# overlapping cluster configuration file names.

cluster-config-file nodes-7001.conf

# Most other internal time limits are multiple of the node timeout.

# node timeout in milliseconds before a node is considered failing

cluster-node-timeout 15000

# persistence mode (AOF)

appendonly yes
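The six files differ only in the port and the node-file name, so they can also be generated. The sketch below writes minimal files containing just the directives shown above; it does not copy the rest of redis.conf. It also adds a per-node dir directive, which is my addition. The reason: if all nodes are started from the same working directory, their RDB/AOF files would otherwise overwrite each other. `write_cluster_configs` and the data-<port> layout are hypothetical.

```python
import tempfile
from pathlib import Path

TEMPLATE = """port {port}
cluster-enabled yes
cluster-config-file nodes-{port}.conf
cluster-node-timeout 15000
appendonly yes
dir "{data_dir}"
"""


def write_cluster_configs(directory, ports):
    """Write <port>.conf for each port into `directory`; return the file paths."""
    base = Path(directory).resolve()
    paths = []
    for port in ports:
        data_dir = base / f"data-{port}"
        data_dir.mkdir(parents=True, exist_ok=True)  # redis-server needs it to exist
        path = base / f"{port}.conf"
        path.write_text(TEMPLATE.format(port=port, data_dir=data_dir))
        paths.append(path)
    return paths


# Demo in a throwaway directory; use a permanent one for a real setup.
with tempfile.TemporaryDirectory() as tmp:
    for path in write_cluster_configs(tmp, range(7000, 7006)):
        print(path.name)
```

With a permanent directory, each node is then started as before, e.g. ./src/redis-server <dir>/7000.conf.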

Start the six nodes:

./src/redis-server 7000.conf

./src/redis-server 7001.conf

./src/redis-server 7002.conf

./src/redis-server 7003.conf

./src/redis-server 7004.conf

./src/redis-server 7005.conf

- Create the cluster

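Create the cluster with --cluster create. The --cluster-replicas 1 option creates one replica for every master; the other arguments are the addresses of the instances: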

ZBMAC-2f32839f6:src yangyanping$ ./redis-cli --cluster create 127.0.0.1:7000 127.0.0.1:7001 127.0.0.1:7002 127.0.0.1:7003 127.0.0.1:7004 127.0.0.1:7005 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 127.0.0.1:7003 to 127.0.0.1:7000

Adding replica 127.0.0.1:7004 to 127.0.0.1:7001

Adding replica 127.0.0.1:7005 to 127.0.0.1:7002

>>> Trying to optimize slaves allocation for anti-affinity

[WARNING] Some slaves are in the same host as their master

M: a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3 127.0.0.1:7000

slots:[0-5460] (5461 slots) master

M: bb0066474dc1e3dbe739dd07b11b92d18a824e3d 127.0.0.1:7001

slots:[5461-10922] (5462 slots) master

M: 189dfc4e575ed82809a8c564cf669065ba5f0323 127.0.0.1:7002

slots:[10923-16383] (5461 slots) master

S: d9679c584f284ab7b0c1bea1294f48b150ed8be8 127.0.0.1:7003

replicates a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3

S: c87fac94a13544d4a108122611011c6e2d385384 127.0.0.1:7004

replicates bb0066474dc1e3dbe739dd07b11b92d18a824e3d

S: 538aa48329926cb47d306b22cf88a8a13231cd91 127.0.0.1:7005

replicates 189dfc4e575ed82809a8c564cf669065ba5f0323

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

.....

>>> Performing Cluster Check (using node 127.0.0.1:7000)

M: a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3 127.0.0.1:7000

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 538aa48329926cb47d306b22cf88a8a13231cd91 127.0.0.1:7005

slots: (0 slots) slave

replicates 189dfc4e575ed82809a8c564cf669065ba5f0323

S: c87fac94a13544d4a108122611011c6e2d385384 127.0.0.1:7004

slots: (0 slots) slave

replicates bb0066474dc1e3dbe739dd07b11b92d18a824e3d

M: bb0066474dc1e3dbe739dd07b11b92d18a824e3d 127.0.0.1:7001

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

M: 189dfc4e575ed82809a8c564cf669065ba5f0323 127.0.0.1:7002

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: d9679c584f284ab7b0c1bea1294f48b150ed8be8 127.0.0.1:7003

slots: (0 slots) slave

replicates a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
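The split above (0-5460, 5461-10922, 10923-16383) comes from dividing 16384 by the number of masters and rounding each range boundary. The sketch below is modelled on my reading of redis-cli's allocation step, so treat the exact rounding as an assumption. It reproduces the ranges printed by --cluster create. `split_slots` is a hypothetical name.

```python
import math


def split_slots(masters: int, total: int = 16384) -> list:
    """Return [(first, last), ...] slot ranges, one per master."""
    per_node = total / masters
    ranges, first, cursor = [], 0, 0.0
    for i in range(masters):
        # Round half away from zero (like C's lround) for these positive values
        last = math.floor(cursor + per_node - 1 + 0.5)
        if i == masters - 1:
            last = total - 1  # the last master takes whatever is left
        ranges.append((first, last))
        first = last + 1
        cursor += per_node
    return ranges


print(split_slots(3))  # [(0, 5460), (5461, 10922), (10923, 16383)]
print(split_slots(4))  # [(0, 4095), (4096, 8191), (8192, 12287), (12288, 16383)]
```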

- Test reading and writing values

When connecting to a cluster, redis-cli needs the -c flag (cluster mode, which follows redirects): redis-cli -c -p <port>

By the cluster's key-to-slot mapping, name (slot 5798) belongs to node 7001 [5461-10922]. The output below shows redis-cli being redirected automatically from 7000 to 7001.

ZBMAC-2f32839f6:7001 yangyanping$ ./src/redis-cli -c -h 127.0.0.1 -p 7000

127.0.0.1:7000> set name yyp

-> Redirected to slot [5798] located at 127.0.0.1:7001

OK

127.0.0.1:7001> get name

"yyp"

- Read name again through node 7000

ZBMAC-2f32839f6:7001 yangyanping$ ./src/redis-cli -c -h 127.0.0.1 -p 7000

127.0.0.1:7000> get name

-> Redirected to slot [5798] located at 127.0.0.1:7001

"yyp"

127.0.0.1:7001>

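- Without -c, redis-cli does not follow redirects and simply returns the MOVED error. The capture below comes from a separate test cluster on ports 8001 and up. CLUSTER KEYSLOT confirms that name maps to slot 5798:

ZBMAC-2f32839f6:src yangyanping$ ./redis-cli -p 8001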

127.0.0.1:8001> set name yyp

(error) MOVED 5798 127.0.0.1:8002

127.0.0.1:8001> cluster keyslot name

(integer) 5798

- Check the cluster state

ZBMAC-2f32839f6:src yangyanping$ redis-cli --cluster check 127.0.0.1:7001

-bash: redis-cli: command not found

ZBMAC-2f32839f6:src yangyanping$ ./redis-cli --cluster check 127.0.0.1:7001

127.0.0.1:7001 (bb006647...) -> 1 keys | 5462 slots | 1 slaves.

127.0.0.1:7000 (a4b3e517...) -> 0 keys | 5461 slots | 1 slaves.

127.0.0.1:7002 (189dfc4e...) -> 0 keys | 5461 slots | 1 slaves.

[OK] 1 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 127.0.0.1:7001)

M: bb0066474dc1e3dbe739dd07b11b92d18a824e3d 127.0.0.1:7001

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: d9679c584f284ab7b0c1bea1294f48b150ed8be8 127.0.0.1:7003

slots: (0 slots) slave

replicates a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3

M: a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3 127.0.0.1:7000

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 189dfc4e575ed82809a8c564cf669065ba5f0323 127.0.0.1:7002

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: c87fac94a13544d4a108122611011c6e2d385384 127.0.0.1:7004

slots: (0 slots) slave

replicates bb0066474dc1e3dbe739dd07b11b92d18a824e3d

S: 538aa48329926cb47d306b22cf88a8a13231cd91 127.0.0.1:7005

slots: (0 slots) slave

replicates 189dfc4e575ed82809a8c564cf669065ba5f0323

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

ZBMAC-2f32839f6:src yangyanping$

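- Add a new master node. 7006 must already be running with cluster-enabled yes, configured like the other nodes. The first address is the new node; the second is any existing cluster node:

ZBMAC-2f32839f6:src yangyanping$ ./redis-cli --cluster add-node 127.0.0.1:7006 127.0.0.1:7000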

>>> Adding node 127.0.0.1:7006 to cluster 127.0.0.1:7000

>>> Performing Cluster Check (using node 127.0.0.1:7000)

M: a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3 127.0.0.1:7000

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 538aa48329926cb47d306b22cf88a8a13231cd91 127.0.0.1:7005

slots: (0 slots) slave

replicates 189dfc4e575ed82809a8c564cf669065ba5f0323

S: c87fac94a13544d4a108122611011c6e2d385384 127.0.0.1:7004

slots: (0 slots) slave

replicates bb0066474dc1e3dbe739dd07b11b92d18a824e3d

M: bb0066474dc1e3dbe739dd07b11b92d18a824e3d 127.0.0.1:7001

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

M: 189dfc4e575ed82809a8c564cf669065ba5f0323 127.0.0.1:7002

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: d9679c584f284ab7b0c1bea1294f48b150ed8be8 127.0.0.1:7003

slots: (0 slots) slave

replicates a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 127.0.0.1:7006 to make it join the cluster.

[OK] New node added correctly.

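- Add a replica node. With --cluster-slave and no --cluster-master-id, redis-cli picks the master itself; here it chose 7006, which had no replica yet: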

ZBMAC-2f32839f6:src yangyanping$ ./redis-cli --cluster add-node 127.0.0.1:7007 127.0.0.1:7000 --cluster-slave

>>> Adding node 127.0.0.1:7007 to cluster 127.0.0.1:7000

>>> Performing Cluster Check (using node 127.0.0.1:7000)

M: a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3 127.0.0.1:7000

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 538aa48329926cb47d306b22cf88a8a13231cd91 127.0.0.1:7005

slots: (0 slots) slave

replicates 189dfc4e575ed82809a8c564cf669065ba5f0323

S: c87fac94a13544d4a108122611011c6e2d385384 127.0.0.1:7004

slots: (0 slots) slave

replicates bb0066474dc1e3dbe739dd07b11b92d18a824e3d

M: bb0066474dc1e3dbe739dd07b11b92d18a824e3d 127.0.0.1:7001

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

M: 09dbf0d62d1d14b6b54ebcd67f3456a68ae590fc 127.0.0.1:7006

slots: (0 slots) master

M: 189dfc4e575ed82809a8c564cf669065ba5f0323 127.0.0.1:7002

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: d9679c584f284ab7b0c1bea1294f48b150ed8be8 127.0.0.1:7003

slots: (0 slots) slave

replicates a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

Automatically selected master 127.0.0.1:7006

>>> Send CLUSTER MEET to node 127.0.0.1:7007 to make it join the cluster.

Waiting for the cluster to join

......

>>> Configure node as replica of 127.0.0.1:7006.

[OK] New node added correctly.

- cluster nodes

127.0.0.1:7000> cluster nodes

ac1e10dff352c589be32a1f30d8b60908061b9ab :0@0 master,fail,noaddr - 1613616058156 1613616058054 0 disconnected

bb0066474dc1e3dbe739dd07b11b92d18a824e3d 127.0.0.1:7001@17001 master - 0 1613616183287 2 connected 5461-10922

d9679c584f284ab7b0c1bea1294f48b150ed8be8 127.0.0.1:7003@17003 slave a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3 0 1613616180250 4 connected

09dbf0d62d1d14b6b54ebcd67f3456a68ae590fc 127.0.0.1:7006@17006 master - 0 1613616184296 7 connected

538aa48329926cb47d306b22cf88a8a13231cd91 127.0.0.1:7005@17005 slave 189dfc4e575ed82809a8c564cf669065ba5f0323 0 1613616181262 6 connected

c87fac94a13544d4a108122611011c6e2d385384 127.0.0.1:7004@17004 slave bb0066474dc1e3dbe739dd07b11b92d18a824e3d 0 1613616182000 5 connected

a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3 127.0.0.1:7000@17000 myself,master - 0 1613616183000 1 connected 0-5460

75337fd01a908ba5771e18569aa9db715026e22b 127.0.0.1:7007@17007 slave 09dbf0d62d1d14b6b54ebcd67f3456a68ae590fc 0 1613616181000 7 connected

189dfc4e575ed82809a8c564cf669065ba5f0323 127.0.0.1:7002@17002 master - 0 1613616182275 3 connected 10923-16383

127.0.0.1:7000>
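Each CLUSTER NODES line has a fixed field order: id, ip:port@cport, flags, master id (- for masters), ping-sent, pong-recv, config-epoch, link-state, and then any slot ranges. The sketch below turns the output above into records, for example to see which masters own slots and which node replicates which. `parse_cluster_nodes` is a hypothetical helper.

```python
def parse_cluster_nodes(text: str) -> dict:
    """Map node id -> record for each line of CLUSTER NODES output."""
    nodes = {}
    for line in text.strip().splitlines():
        fields = line.split()
        if len(fields) < 8:
            continue  # skip prompts and blank lines
        node_id, addr, flags, master = fields[:4]
        nodes[node_id] = {
            "addr": addr.split("@")[0],   # drop the cluster-bus port
            "flags": flags.split(","),
            "master": None if master == "-" else master,
            "link": fields[7],
            "slots": fields[8:],          # e.g. ['0-5460']
        }
    return nodes


sample = """
ac1e10dff352c589be32a1f30d8b60908061b9ab :0@0 master,fail,noaddr - 1613616058156 1613616058054 0 disconnected
09dbf0d62d1d14b6b54ebcd67f3456a68ae590fc 127.0.0.1:7006@17006 master - 0 1613616184296 7 connected
a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3 127.0.0.1:7000@17000 myself,master - 0 1613616183000 1 connected 0-5460
75337fd01a908ba5771e18569aa9db715026e22b 127.0.0.1:7007@17007 slave 09dbf0d62d1d14b6b54ebcd67f3456a68ae590fc 0 1613616181000 7 connected
127.0.0.1:7000>
"""

nodes = parse_cluster_nodes(sample)
for node in nodes.values():
    if "master" in node["flags"] and "fail" not in node["flags"]:
        print(node["addr"], node["slots"] or "no slots")
    elif node["master"]:
        print(node["addr"], "replicates", nodes[node["master"]]["addr"])
# 127.0.0.1:7006 no slots
# 127.0.0.1:7000 ['0-5460']
# 127.0.0.1:7007 replicates 127.0.0.1:7006
```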

ZBMAC-2f32839f6:src yangyanping$ ./redis-cli --cluster check 127.0.0.1:7001

127.0.0.1:7001 (bb006647...) -> 0 keys | 5462 slots | 1 slaves.

127.0.0.1:7000 (a4b3e517...) -> 0 keys | 5461 slots | 1 slaves.

127.0.0.1:7002 (189dfc4e...) -> 0 keys | 5461 slots | 1 slaves.

127.0.0.1:7006 (09dbf0d6...) -> 0 keys | 0 slots | 1 slaves.

[OK] 0 keys in 4 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 127.0.0.1:7001)

M: bb0066474dc1e3dbe739dd07b11b92d18a824e3d 127.0.0.1:7001

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

M: a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3 127.0.0.1:7000

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 189dfc4e575ed82809a8c564cf669065ba5f0323 127.0.0.1:7002

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 538aa48329926cb47d306b22cf88a8a13231cd91 127.0.0.1:7005

slots: (0 slots) slave

replicates 189dfc4e575ed82809a8c564cf669065ba5f0323

S: 75337fd01a908ba5771e18569aa9db715026e22b 127.0.0.1:7007

slots: (0 slots) slave

replicates 09dbf0d62d1d14b6b54ebcd67f3456a68ae590fc

S: d9679c584f284ab7b0c1bea1294f48b150ed8be8 127.0.0.1:7003

slots: (0 slots) slave

replicates a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3

M: 09dbf0d62d1d14b6b54ebcd67f3456a68ae590fc 127.0.0.1:7006

slots: (0 slots) master

1 additional replica(s)

S: c87fac94a13544d4a108122611011c6e2d385384 127.0.0.1:7004

slots: (0 slots) slave

replicates bb0066474dc1e3dbe739dd07b11b92d18a824e3d

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

ZBMAC-2f32839f6:src yangyanping$

- Assign hash slots

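Note: a newly added master owns no hash slots, so it cannot store any data until slots are assigned to it. Keep the slot distribution reasonably even; an uneven distribution leaves data and load unbalanced across the masters.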

1. Reshard the hash slots:

./src/redis-cli --cluster reshard ip:port -a <password>

ZBMAC-2f32839f6:src yangyanping$ ./redis-cli --cluster reshard 127.0.0.1:7000

>>> Performing Cluster Check (using node 127.0.0.1:7000)

M: a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3 127.0.0.1:7000

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: bb0066474dc1e3dbe739dd07b11b92d18a824e3d 127.0.0.1:7001

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: d9679c584f284ab7b0c1bea1294f48b150ed8be8 127.0.0.1:7003

slots: (0 slots) slave

replicates a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3

M: 09dbf0d62d1d14b6b54ebcd67f3456a68ae590fc 127.0.0.1:7006

slots: (0 slots) master

1 additional replica(s)

S: 538aa48329926cb47d306b22cf88a8a13231cd91 127.0.0.1:7005

slots: (0 slots) slave

replicates 189dfc4e575ed82809a8c564cf669065ba5f0323

S: c87fac94a13544d4a108122611011c6e2d385384 127.0.0.1:7004

slots: (0 slots) slave

replicates bb0066474dc1e3dbe739dd07b11b92d18a824e3d

S: 75337fd01a908ba5771e18569aa9db715026e22b 127.0.0.1:7007

slots: (0 slots) slave

replicates 09dbf0d62d1d14b6b54ebcd67f3456a68ae590fc

M: 189dfc4e575ed82809a8c564cf669065ba5f0323 127.0.0.1:7002

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

2. Enter how many hash slots to move; here I move 1000:

How many slots do you want to move (from 1 to 16384)? 1000

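3. Enter the ID of the node that will receive the slots. In the check output above, master 7006 has 0 slots, so it is the target here. Any master can be chosen as the target.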

What is the receiving node ID? 09dbf0d62d1d14b6b54ebcd67f3456a68ae590fc

4. Choose where the slots come from; enter all:

With all, the slots given to the target are taken from all the other masters in the cluster, and redis-cli decides how many each one gives up.

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: all

Ready to move 1000 slots.

Source nodes:

M: a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3 127.0.0.1:7000

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: bb0066474dc1e3dbe739dd07b11b92d18a824e3d 127.0.0.1:7001

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

M: 189dfc4e575ed82809a8c564cf669065ba5f0323 127.0.0.1:7002

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

Destination node:

M: 09dbf0d62d1d14b6b54ebcd67f3456a68ae590fc 127.0.0.1:7006

slots: (0 slots) master

1 additional replica(s)

Resharding plan:

Moving slot 5461 from bb0066474dc1e3dbe739dd07b11b92d18a824e3d

Moving slot 5462 from bb0066474dc1e3dbe739dd07b11b92d18a824e3d

Moving slot 5463 from bb0066474dc1e3dbe739dd07b11b92d18a824e3d

Moving slot 5464 from bb0066474dc1e3dbe739dd07b11b92d18a824e3d

ZBMAC-2f32839f6:src yangyanping$ ./redis-cli --cluster check 127.0.0.1:7001

127.0.0.1:7001 (bb006647...) -> 0 keys | 5128 slots | 1 slaves.

127.0.0.1:7000 (a4b3e517...) -> 0 keys | 5128 slots | 1 slaves.

127.0.0.1:7002 (189dfc4e...) -> 0 keys | 5128 slots | 1 slaves.

127.0.0.1:7006 (09dbf0d6...) -> 0 keys | 1000 slots | 1 slaves.

[OK] 0 keys in 4 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 127.0.0.1:7001)

M: bb0066474dc1e3dbe739dd07b11b92d18a824e3d 127.0.0.1:7001

slots:[5795-10922] (5128 slots) master

1 additional replica(s)

M: a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3 127.0.0.1:7000

slots:[333-5460] (5128 slots) master

1 additional replica(s)

M: 189dfc4e575ed82809a8c564cf669065ba5f0323 127.0.0.1:7002

slots:[11256-16383] (5128 slots) master

1 additional replica(s)

S: 538aa48329926cb47d306b22cf88a8a13231cd91 127.0.0.1:7005

slots: (0 slots) slave

replicates 189dfc4e575ed82809a8c564cf669065ba5f0323

S: 75337fd01a908ba5771e18569aa9db715026e22b 127.0.0.1:7007

slots: (0 slots) slave

replicates 09dbf0d62d1d14b6b54ebcd67f3456a68ae590fc

S: d9679c584f284ab7b0c1bea1294f48b150ed8be8 127.0.0.1:7003

slots: (0 slots) slave

replicates a4b3e517ac2c46e23fa9d438c8f643c9a6d64ac3

M: 09dbf0d62d1d14b6b54ebcd67f3456a68ae590fc 127.0.0.1:7006

slots:[0-332],[5461-5794],[10923-11255] (1000 slots) master

1 additional replica(s)

S: c87fac94a13544d4a108122611011c6e2d385384 127.0.0.1:7004

slots: (0 slots) slave

replicates bb0066474dc1e3dbe739dd07b11b92d18a824e3d

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
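The slot counts reported by --cluster check can be cross-checked from the ranges themselves. The sketch below parses a slots:[...] line as printed above and adds up the slots. It also handles single-slot entries such as [5461], which the check output uses when a range has only one slot. `slot_count` is a hypothetical helper.

```python
import re


def slot_count(line: str) -> int:
    """Count slots in a line like 'slots:[0-332],[5461-5794],[10923-11255]'."""
    total = 0
    for start, end in re.findall(r"\[(\d+)(?:-(\d+))?\]", line):
        end = end or start  # '[5461]' is a single slot
        total += int(end) - int(start) + 1
    return total


print(slot_count("slots:[0-332],[5461-5794],[10923-11255] (1000 slots) master"))  # 1000
print(slot_count("slots:[333-5460] (5128 slots) master"))                         # 5128
print(3 * 5128 + 1000)                                                             # 16384
```

The three original masters now hold 5128 slots each, and 7006 holds 1000. Together that is all 16384 slots, matching "[OK] All 16384 slots covered".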

Hash Tags

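Sharding is essentially hashing: a hash function such as MD5 or SHA-1 (CRC16 in Redis Cluster) is applied to the key, and the hash value decides which machine the key goes to.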

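To place keys on the same machine, they need the same hash value, which normally means the same key. Changing the hash algorithm would also work, but is not simple.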

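But identical keys are not realistic, because each key serves a different purpose. For example, user:user1:name stores the user's name and user:user1:age stores the user's age; the two keys cannot have the same name.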

Look closely at user:user1:name and user:user1:age: the two keys share a common part, user1. Could we compute the hash from just that part?

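That is what a hash tag does: it lets only part of the key be used to compute the hash. When a key contains {...}, only the substring inside the braces is hashed. Keys sharing the same tag therefore land in the same slot and can be used together in multi-key commands such as MGET: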

127.0.0.1:8001> set user:{yyp}:name yangyanping

OK

127.0.0.1:8001> set user:{yyp}:age 28

OK

127.0.0.1:8001> set user:{yyp}:sex "男"

OK

127.0.0.1:8001> mget user:{yyp}:name user:{yyp}:age user:{yyp}:sex

1) "yangyanping"

2) "28"

3) "\xe7\x94\xb7"

127.0.0.1:8001>
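The rule for which part of the key gets hashed is simple to sketch. Following the hash-tag rules in the Redis Cluster specification, find the first {, then the first } after it. If at least one character lies between them, only that substring is hashed; otherwise the whole key is hashed. `hashed_part` and `same_slot_guaranteed` are hypothetical helper names.

```python
def hashed_part(key: str) -> str:
    """Return the part of the key that Redis Cluster feeds to CRC16."""
    start = key.find("{")
    if start == -1:
        return key                      # no '{': hash the whole key
    end = key.find("}", start + 1)
    if end == -1 or end == start + 1:
        return key                      # no closing '}' or empty '{}': whole key
    return key[start + 1:end]


def same_slot_guaranteed(*keys: str) -> bool:
    """True if all keys hash the same substring, so they must share a slot.

    Keys with different hashed parts can still collide by chance.
    """
    return len({hashed_part(k) for k in keys}) == 1


print(hashed_part("user:{yyp}:name"))  # yyp
print(hashed_part("foo{}{bar}"))       # foo{}{bar}
print(hashed_part("foo{{bar}}zap"))    # {bar
print(same_slot_guaranteed("user:{yyp}:name", "user:{yyp}:age", "user:{yyp}:sex"))  # True
```

Only the first {...} counts, which is why foo{}{bar} is hashed as a whole and foo{{bar}}zap hashes {bar.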

