Multimedia Programming: Camera Video Preview

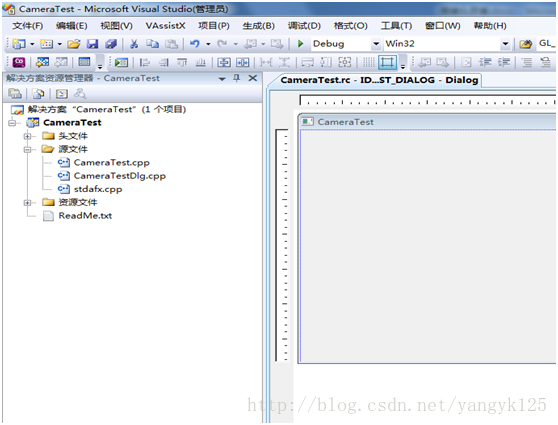

1. Create a new MFC project and choose a dialog-based application.

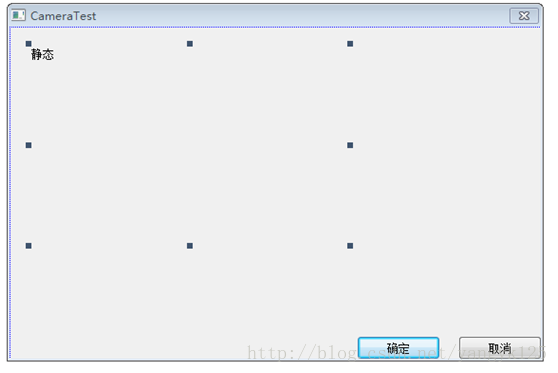

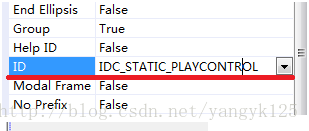

2. Add a static control.

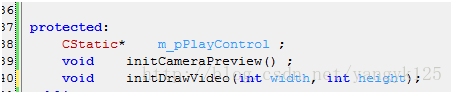

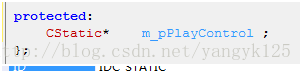

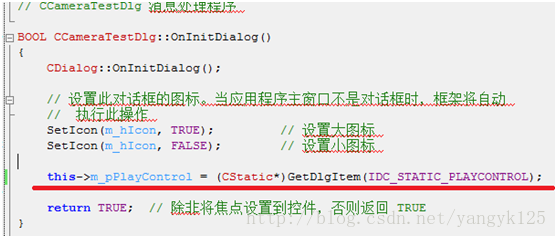

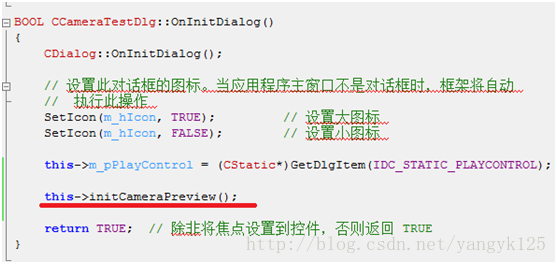

3. Change the control's ID and obtain a pointer to it in OnInitDialog.

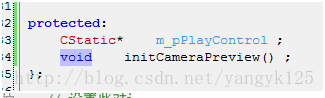

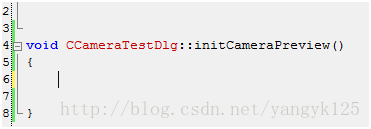

4. Add a member function and call it from OnInitDialog; the example code will go there.

5. Add the full set of header files

#include <qedit.h>

#include <dshow.h>

#include <dxtrans.h>

#pragma comment(lib,"strmiids.lib")

//DirectDraw

#include <ddraw.h>

#pragma comment(lib,"ddraw.lib")

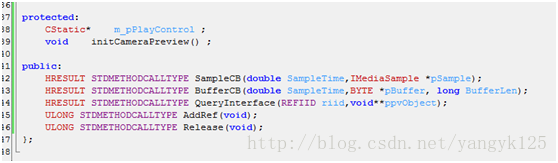

#pragma comment(lib,"dxguid.lib")并且让Dialog同时继承类型 ISampleGrabberCB。

6. Add the code that reads from the camera

The camera is read through the DirectShow component of the DirectX SDK.

The code to write is as follows:

void CCameraTestDlg::initCameraPreview()

{

HRESULT hr = CoInitialize(NULL);

if(FAILED(hr))

return ;

IBaseFilter* pCameraFilter = NULL ;

// Enumerate the system's video capture devices and load the first one.

CComPtr <IMoniker> pMoniker =NULL;

ULONG cFetched;

CComPtr <ICreateDevEnum> pDevEnum =NULL;

hr = CoCreateInstance (CLSID_SystemDeviceEnum, NULL, CLSCTX_INPROC,IID_ICreateDevEnum, (void **) &pDevEnum);

if (FAILED(hr))

return ;

CComPtr <IEnumMoniker> pClassEnum = NULL;

hr = pDevEnum->CreateClassEnumerator (CLSID_VideoInputDeviceCategory, &pClassEnum, 0);

if (FAILED(hr))

return ;

if (pClassEnum == NULL)

return ;

if (pClassEnum->Next (1, &pMoniker, &cFetched) == S_OK) // Next returns S_FALSE when no device was fetched

{

hr = pMoniker->BindToObject(0,0,IID_IBaseFilter, (void**)&pCameraFilter);

if (FAILED(hr))

return ;

}

if(pCameraFilter == NULL)

return ;

// Create the DirectShow filter graph

IGraphBuilder* pGraphBuilder = NULL ;

hr = CoCreateInstance (CLSID_FilterGraph, NULL, CLSCTX_INPROC,IID_IGraphBuilder, (void **) &pGraphBuilder);

if (FAILED(hr))

return ;

// Create the capture graph builder

ICaptureGraphBuilder2* pCaptureGraphBuilder = NULL ;

hr = CoCreateInstance (CLSID_CaptureGraphBuilder2 , NULL, CLSCTX_INPROC,IID_ICaptureGraphBuilder2, (void **) &pCaptureGraphBuilder);

if (FAILED(hr))

return ;

//Create sampleGrabber

IBaseFilter* pSampleGrabberFilter = NULL ;

hr = CoCreateInstance(CLSID_SampleGrabber, NULL, CLSCTX_INPROC,IID_IBaseFilter, (void**)&pSampleGrabberFilter);

if (FAILED(hr))

return ;

ISampleGrabber* pSampleGrabber = NULL ;

hr = pSampleGrabberFilter->QueryInterface(IID_ISampleGrabber, (void**)&pSampleGrabber);

if (FAILED(hr))

return ;

// Set the video format

AM_MEDIA_TYPE mt;

ZeroMemory(&mt, sizeof(AM_MEDIA_TYPE));

mt.majortype = MEDIATYPE_Video ;

mt.subtype = MEDIASUBTYPE_RGB32;

hr = pSampleGrabber->SetMediaType(&mt);

if (FAILED(hr))

return ;

hr = pSampleGrabber->SetOneShot(FALSE);

if (FAILED(hr))

return ;

hr = pSampleGrabber->SetBufferSamples(FALSE);

if (FAILED(hr))

return ;

hr = pSampleGrabber->SetCallback(this, 1);

if (FAILED(hr))

return ;

// Obtain interfaces for media control and Video Window

IMediaControl* pMediaControl = NULL ;

hr = pGraphBuilder->QueryInterface(IID_IMediaControl,(LPVOID *) &pMediaControl);

if (FAILED(hr))

return ;

// Attach the filter graph to the capture graph

hr = pCaptureGraphBuilder->SetFiltergraph(pGraphBuilder);

if (FAILED(hr))

return ;

// Add Capture filter to our graph.

hr = pGraphBuilder->AddFilter(pCameraFilter, L"Video Capture");

if (FAILED(hr))

return ;

hr = pGraphBuilder->AddFilter(pSampleGrabberFilter, L"Sample Grabber");

if (FAILED(hr))

return ;

// Connect the camera to the sample grabber

hr = pCaptureGraphBuilder->RenderStream(NULL,&MEDIATYPE_Video,pCameraFilter,NULL,pSampleGrabberFilter);

if (FAILED(hr))

return ;

// After connecting, get the media type of the source video

AM_MEDIA_TYPE amt;

hr = pSampleGrabber->GetConnectedMediaType(&amt);

if (FAILED(hr))

return ;

// Get the resolution of the captured frames

int width = 0 ;

int height = 0 ;

VIDEOINFOHEADER* pVideoHeader = reinterpret_cast<VIDEOINFOHEADER*>(amt.pbFormat);

if(pVideoHeader)

{

width = pVideoHeader->bmiHeader.biWidth ;

height= pVideoHeader->bmiHeader.biHeight ;

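// Free the format block that GetConnectedMediaType allocated (width and height were copied above).

if (amt.cbFormat != 0)

{

CoTaskMemFree((PVOID)amt.pbFormat);

amt.cbFormat = 0;

amt.pbFormat = NULL;

}

if (amt.pUnk != NULL)

{

amt.pUnk->Release();

amt.pUnk = NULL;

}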

}

else

return ;

// Frames only start arriving after Run is called.

pMediaControl->Run();

}

HRESULT CCameraTestDlg::SampleCB(double SampleTime,IMediaSample *pSample)

{

return E_FAIL;

}

HRESULT CCameraTestDlg::BufferCB(double SampleTime,BYTE *pBuffer, long BufferLen)

{

OutputDebugString(_T("BufferCB image data!\n"));

return S_OK ;

}

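HRESULT CCameraTestDlg::QueryInterface(REFIID riid,void** ppvObject)

{

// Hand out only the interfaces this object implements; the dialog itself is not reference-counted.

if (riid != IID_IUnknown && riid != IID_ISampleGrabberCB)

return E_NOINTERFACE ;

*ppvObject = static_cast<ISampleGrabberCB*>(this);

return S_OK ;

}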

ULONG CCameraTestDlg::AddRef(void)

{

return 0 ;

}

ULONG CCameraTestDlg::Release(void)

{

return 0 ;

}

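If you run the program now, OutputDebugString already prints continuously, which shows that frame data is arriving. No rendering has been added yet, so no image is visible.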

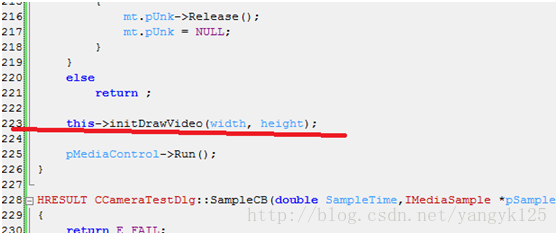
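One detail worth checking where the resolution is read: in a `BITMAPINFOHEADER`, `biHeight` for uncompressed RGB is positive for a bottom-up image and negative for a top-down one, so passing it on unchanged as a surface height can go wrong for top-down sources. Below is a minimal sketch of normalizing it, written in portable C++ so it can be tried outside the MFC project. `FrameGeometry` and `normalizeHeight` are hypothetical names introduced for illustration; they are not part of the original code.

```cpp
#include <cstdint>

// Hypothetical helper type: the frame size plus the row order in memory.
struct FrameGeometry {
    std::uint32_t width;
    std::uint32_t height;   // always non-negative
    bool bottomUp;          // true when the first row in memory is the bottom row
};

// biWidth and biHeight stand for the 32-bit signed fields of BITMAPINFOHEADER.
// For uncompressed RGB, biHeight > 0 means bottom-up and biHeight < 0 means top-down.
inline FrameGeometry normalizeHeight(std::int32_t biWidth, std::int32_t biHeight) {
    FrameGeometry g;
    g.width = static_cast<std::uint32_t>(biWidth);
    g.bottomUp = biHeight > 0;
    // Widen before negating so that the most negative 32-bit value does not overflow.
    const std::int64_t h = biHeight;
    g.height = static_cast<std::uint32_t>(h < 0 ? -h : h);
    return g;
}
```

In the dialog, the result of `normalizeHeight(pVideoHeader->bmiHeader.biWidth, pVideoHeader->bmiHeader.biHeight)` could supply the width and height that are later used to create the drawing surface, with `bottomUp` telling the renderer whether rows need to be flipped.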

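7. Add a video rendering initialization function, initDrawVideo(int width, int height),

and call it at the point where the camera has been initialized successfully and the resolution has been obtained.

Then add a rendering function:

void DisplayLine(LPBYTE pBuf,int nPixelBytes). The first parameter is the data buffer; the second is the number of bytes per pixel (the example passes 32/8).

The complete code follows: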

// CameraTestDlg.h : header file

//

#pragma once

//DirectShow

#include <qedit.h>

#include <dshow.h>

#include <dxtrans.h>

#pragma comment(lib,"strmiids.lib")

//DirectDraw

#include <ddraw.h>

#pragma comment(lib,"ddraw.lib")

#pragma comment(lib,"dxguid.lib")

// CCameraTestDlg dialog

class CCameraTestDlg : public CDialog

, public ISampleGrabberCB

{

// Construction

public:

CCameraTestDlg(CWnd* pParent = NULL); // standard constructor

// Dialog data

enum { IDD = IDD_CAMERATEST_DIALOG };

protected:

virtual void DoDataExchange(CDataExchange* pDX); // DDX/DDV support

// Implementation

protected:

HICON m_hIcon;

// Generated message map functions

virtual BOOL OnInitDialog();

afx_msg void OnPaint();

afx_msg HCURSOR OnQueryDragIcon();

DECLARE_MESSAGE_MAP()

protected:

CStatic* m_pPlayControl ;

LPDIRECTDRAW7 m_lpDDraw; // DirectDraw object pointer

LPDIRECTDRAWSURFACE7 m_lpDDSOverlay ; // DirectDraw off-screen surface pointer

DDSURFACEDESC2 m_stOverlayDdsd; // description of the off-screen surface

LPDIRECTDRAWSURFACE7 m_lpDDSPrimary; // DirectDraw primary surface pointer

DDSURFACEDESC2 m_stPrimaryDdsd; // description of the primary surface

LPDIRECTDRAWCLIPPER m_pDirectDrawCliper ; // DirectDraw clipper bound to the preview control

void initCameraPreview() ;

void initDrawVideo(int width, int height);

void DisplayLine(LPBYTE pBuf,int nPixelBytes);

public:

HRESULT STDMETHODCALLTYPE SampleCB(double SampleTime,IMediaSample *pSample);

HRESULT STDMETHODCALLTYPE BufferCB(double SampleTime,BYTE *pBuffer, long BufferLen);

HRESULT STDMETHODCALLTYPE QueryInterface(REFIID riid,void**ppvObject);

ULONG STDMETHODCALLTYPE AddRef(void);

ULONG STDMETHODCALLTYPE Release(void);

};

The implementation file is as follows:

// CameraTestDlg.cpp : implementation file

//

#include "stdafx.h"

#include "CameraTest.h"

#include "CameraTestDlg.h"

#ifdef _DEBUG

#define new DEBUG_NEW

#endif

// CCameraTestDlg dialog

CCameraTestDlg::CCameraTestDlg(CWnd* pParent /*=NULL*/)

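: CDialog(CCameraTestDlg::IDD, pParent)

// Start with null pointers so that nothing points at garbage before initialization.

, m_pPlayControl(NULL), m_lpDDraw(NULL), m_lpDDSOverlay(NULL)

, m_lpDDSPrimary(NULL), m_pDirectDrawCliper(NULL)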

{

m_hIcon = AfxGetApp()->LoadIcon(IDR_MAINFRAME);

}

void CCameraTestDlg::DoDataExchange(CDataExchange* pDX)

{

CDialog::DoDataExchange(pDX);

}

BEGIN_MESSAGE_MAP(CCameraTestDlg, CDialog)

ON_WM_PAINT()

ON_WM_QUERYDRAGICON()

//}}AFX_MSG_MAP

END_MESSAGE_MAP()

// CCameraTestDlg message handlers

BOOL CCameraTestDlg::OnInitDialog()

{

CDialog::OnInitDialog();

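// Set the icon for this dialog. The framework does this automatically

// when the application's main window is not a dialog.

SetIcon(m_hIcon, TRUE); // set the large icon

SetIcon(m_hIcon, FALSE); // set the small icon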

this->m_pPlayControl = (CStatic*)GetDlgItem(IDC_STATIC_PLAYCONTROL);

this->initCameraPreview();

return TRUE; // return TRUE unless you set the focus to a control

}

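// If you add a minimize button to the dialog, you need the code below

// to draw the icon. For MFC applications that use the document/view model,

// the framework does this automatically.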

void CCameraTestDlg::OnPaint()

{

if (IsIconic())

{

CPaintDC dc(this); // device context for painting

SendMessage(WM_ICONERASEBKGND, reinterpret_cast<WPARAM>(dc.GetSafeHdc()), 0);

// Center the icon in the client rectangle

int cxIcon = GetSystemMetrics(SM_CXICON);

int cyIcon = GetSystemMetrics(SM_CYICON);

CRect rect;

GetClientRect(&rect);

int x = (rect.Width() - cxIcon + 1) / 2;

int y = (rect.Height() - cyIcon + 1) / 2;

// Draw the icon

dc.DrawIcon(x, y, m_hIcon);

}

else

{

CDialog::OnPaint();

}

}

// The system calls this function to obtain the cursor to display

// while the user drags the minimized window.

HCURSOR CCameraTestDlg::OnQueryDragIcon()

{

return static_cast<HCURSOR>(m_hIcon);

}

void CCameraTestDlg::initCameraPreview()

{

HRESULT hr = CoInitialize(NULL);

if(FAILED(hr))

return ;

IBaseFilter* pCameraFilter = NULL ;

// Enumerate the system's video capture devices and load the first one.

CComPtr <IMoniker> pMoniker =NULL;

ULONG cFetched;

CComPtr <ICreateDevEnum> pDevEnum =NULL;

hr = CoCreateInstance (CLSID_SystemDeviceEnum, NULL, CLSCTX_INPROC,IID_ICreateDevEnum, (void **) &pDevEnum);

if (FAILED(hr))

return ;

CComPtr <IEnumMoniker> pClassEnum = NULL;

hr = pDevEnum->CreateClassEnumerator (CLSID_VideoInputDeviceCategory, &pClassEnum, 0);

if (FAILED(hr))

return ;

if (pClassEnum == NULL)

return ;

if (pClassEnum->Next (1, &pMoniker, &cFetched) == S_OK) // Next returns S_FALSE when no device was fetched

{

hr = pMoniker->BindToObject(0,0,IID_IBaseFilter, (void**)&pCameraFilter);

if (FAILED(hr))

return ;

}

if(pCameraFilter == NULL)

return ;

// Create the DirectShow filter graph

IGraphBuilder* pGraphBuilder = NULL ;

hr = CoCreateInstance (CLSID_FilterGraph, NULL, CLSCTX_INPROC,IID_IGraphBuilder, (void **) &pGraphBuilder);

if (FAILED(hr))

return ;

// Create the capture graph builder

ICaptureGraphBuilder2* pCaptureGraphBuilder = NULL ;

hr = CoCreateInstance (CLSID_CaptureGraphBuilder2 , NULL, CLSCTX_INPROC,IID_ICaptureGraphBuilder2, (void **) &pCaptureGraphBuilder);

if (FAILED(hr))

return ;

//Create sampleGrabber

IBaseFilter* pSampleGrabberFilter = NULL ;

hr = CoCreateInstance(CLSID_SampleGrabber, NULL, CLSCTX_INPROC,IID_IBaseFilter, (void**)&pSampleGrabberFilter);

if (FAILED(hr))

return ;

ISampleGrabber* pSampleGrabber = NULL ;

hr = pSampleGrabberFilter->QueryInterface(IID_ISampleGrabber, (void**)&pSampleGrabber);

if (FAILED(hr))

return ;

// Set the video format

AM_MEDIA_TYPE mt;

ZeroMemory(&mt, sizeof(AM_MEDIA_TYPE));

mt.majortype = MEDIATYPE_Video ;

mt.subtype = MEDIASUBTYPE_RGB32;

hr = pSampleGrabber->SetMediaType(&mt);

if (FAILED(hr))

return ;

hr = pSampleGrabber->SetOneShot(FALSE);

if (FAILED(hr))

return ;

hr = pSampleGrabber->SetBufferSamples(FALSE);

if (FAILED(hr))

return ;

hr = pSampleGrabber->SetCallback(this, 1);

if (FAILED(hr))

return ;

// Obtain interfaces for media control and Video Window

IMediaControl* pMediaControl = NULL ;

hr = pGraphBuilder->QueryInterface(IID_IMediaControl,(LPVOID *) &pMediaControl);

if (FAILED(hr))

return ;

// Attach the filter graph to the capture graph

hr = pCaptureGraphBuilder->SetFiltergraph(pGraphBuilder);

if (FAILED(hr))

return ;

// Add Capture filter to our graph.

hr = pGraphBuilder->AddFilter(pCameraFilter, L"Video Capture");

if (FAILED(hr))

return ;

hr = pGraphBuilder->AddFilter(pSampleGrabberFilter, L"Sample Grabber");

if (FAILED(hr))

return ;

// Connect the camera to the sample grabber

hr = pCaptureGraphBuilder->RenderStream(NULL,&MEDIATYPE_Video,pCameraFilter,NULL,pSampleGrabberFilter);

if (FAILED(hr))

return ;

// After connecting, get the media type of the source video

AM_MEDIA_TYPE amt;

hr = pSampleGrabber->GetConnectedMediaType(&amt);

if (FAILED(hr))

return ;

// Get the resolution of the captured frames

int width = 0 ;

int height = 0 ;

VIDEOINFOHEADER* pVideoHeader = reinterpret_cast<VIDEOINFOHEADER*>(amt.pbFormat);

if(pVideoHeader)

{

width = pVideoHeader->bmiHeader.biWidth ;

height= pVideoHeader->bmiHeader.biHeight ;

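// Free the format block that GetConnectedMediaType allocated (width and height were copied above).

if (amt.cbFormat != 0)

{

CoTaskMemFree((PVOID)amt.pbFormat);

amt.cbFormat = 0;

amt.pbFormat = NULL;

}

if (amt.pUnk != NULL)

{

amt.pUnk->Release();

amt.pUnk = NULL;

}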

}

else

return ;

this->initDrawVideo(width, height);

pMediaControl->Run();

}

HRESULT CCameraTestDlg::SampleCB(double SampleTime,IMediaSample *pSample)

{

return E_FAIL;

}

HRESULT CCameraTestDlg::BufferCB(double SampleTime,BYTE *pBuffer, long BufferLen)

{

this->DisplayLine(pBuffer, 32/8);

return S_OK ;

}

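HRESULT CCameraTestDlg::QueryInterface(REFIID riid,void** ppvObject)

{

// Hand out only the interfaces this object implements; the dialog itself is not reference-counted.

if (riid != IID_IUnknown && riid != IID_ISampleGrabberCB)

return E_NOINTERFACE ;

*ppvObject = static_cast<ISampleGrabberCB*>(this);

return S_OK ;

}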

ULONG CCameraTestDlg::AddRef(void)

{

return 0 ;

}

ULONG CCameraTestDlg::Release(void)

{

return 0 ;

}

void CCameraTestDlg::initDrawVideo(int width, int height)

{

HRESULT hr = DirectDrawCreateEx(NULL, (LPVOID*)&m_lpDDraw,IID_IDirectDraw7, NULL);

if (FAILED(hr))

return ;

// Set the cooperative level

hr = m_lpDDraw->SetCooperativeLevel(this->m_hWnd, DDSCL_NORMAL);

if (FAILED(hr))

return ;

// Create the primary surface

ZeroMemory(&m_stPrimaryDdsd, sizeof(DDSURFACEDESC2));

m_stPrimaryDdsd.dwSize = sizeof(DDSURFACEDESC2);

m_stPrimaryDdsd.dwFlags = DDSD_CAPS ;

m_stPrimaryDdsd.ddsCaps.dwCaps = DDSCAPS_PRIMARYSURFACE;

hr = m_lpDDraw->CreateSurface(&m_stPrimaryDdsd, &m_lpDDSPrimary, NULL);

if (FAILED(hr))

return ;

// Create the off-screen buffer surface

ZeroMemory(&m_stOverlayDdsd, sizeof(DDSURFACEDESC2));

m_stOverlayDdsd.dwSize = sizeof(DDSURFACEDESC2);

m_stOverlayDdsd.ddsCaps.dwCaps = DDSCAPS_VIDEOMEMORY | DDSCAPS_OFFSCREENPLAIN ;

m_stOverlayDdsd.dwFlags = DDSD_CAPS | DDSD_HEIGHT | DDSD_WIDTH | DDSD_PIXELFORMAT ;

m_stOverlayDdsd.dwWidth = width;

m_stOverlayDdsd.dwHeight = height;

struct DDPIXELFORMATDEF : public DDPIXELFORMAT

{

DDPIXELFORMATDEF(UINT dwFlags,

UINT dwFourCC = 0x0,

UINT dwUnionBitCount = 0x0,

UINT dwUnionBitMask1 = 0x0,UINT dwUnionBitMask2 = 0x0,

UINT dwUnionBitMask3 = 0x0,UINT dwUnionBitMask4 = 0x0)

{

__super::dwFlags = dwFlags ;

__super::dwFourCC = dwFourCC ;

__super::dwRGBBitCount = dwUnionBitCount ;

__super::dwRBitMask = dwUnionBitMask1 ;

__super::dwGBitMask = dwUnionBitMask2 ;

__super::dwBBitMask = dwUnionBitMask3 ;

__super::dwRGBAlphaBitMask = dwUnionBitMask4 ;

}

};

// The camera code above forces RGB32, so the surface uses RGB32 as well.

m_stOverlayDdsd.ddpfPixelFormat = DDPIXELFORMATDEF(DDPF_RGB,0x0,32,0x00FF0000,0x0000FF00,0x000000FF,0xFF000000) ;

m_stOverlayDdsd.ddpfPixelFormat.dwSize = sizeof(DDPIXELFORMAT);

hr = m_lpDDraw->CreateSurface(&m_stOverlayDdsd, &m_lpDDSOverlay, NULL);

if (FAILED(hr))

{

m_stOverlayDdsd.ddsCaps.dwCaps = DDSCAPS_SYSTEMMEMORY | DDSCAPS_OFFSCREENPLAIN ; // retry with a surface in system memory

hr = m_lpDDraw->CreateSurface(&m_stOverlayDdsd, &m_lpDDSOverlay, NULL);

if (FAILED(hr))

return ;

}

hr = m_lpDDraw->CreateClipper(0,&m_pDirectDrawCliper,NULL);

if (FAILED(hr))

return ;

hr = m_pDirectDrawCliper->SetHWnd(0,this->m_pPlayControl->m_hWnd);

if (FAILED(hr))

return ;

hr = m_lpDDSPrimary->SetClipper(m_pDirectDrawCliper);

if (FAILED(hr))

return ;

}

void CCameraTestDlg::DisplayLine(LPBYTE pBuf,int nPixelBytes)

{

HRESULT hr; // return value of the DirectDraw calls

hr = m_lpDDSOverlay->Lock(NULL,&m_stOverlayDdsd,DDLOCK_WAIT|DDLOCK_WRITEONLY,NULL);

if (FAILED(hr))

return ;

LPBYTE p_YUV_RGB = pBuf ;

int dwByteCountPerLine = m_stOverlayDdsd.dwWidth*nPixelBytes; //m_stOverlayDdsd.ddpfPixelFormat.dwRGBBitCount/8 ;

// The frame read from the camera is upside down. Correcting that is left to the reader; it can be done in the loop below.

LPBYTE lpSurface = (LPBYTE)m_stOverlayDdsd.lpSurface;

if(lpSurface)

{

for (int i=0;i<m_stOverlayDdsd.dwHeight;i++)

{

memcpy(lpSurface, p_YUV_RGB, dwByteCountPerLine);

p_YUV_RGB += dwByteCountPerLine;

lpSurface += m_stOverlayDdsd.lPitch;

}

}

hr = m_lpDDSOverlay->Unlock(NULL);

if (FAILED(hr))

return ;

RECT rect;

::GetClientRect(m_pPlayControl->m_hWnd,&rect);

POINT point = {0,0};

::ClientToScreen(m_pPlayControl->m_hWnd,&point);

rect.right = rect.right - rect.left + point.x;

rect.left = point.x;

rect.bottom = rect.bottom - rect.top + point.y;

rect.top = point.y;

hr = m_lpDDSPrimary->Blt(&rect, m_lpDDSOverlay, NULL, DDBLT_WAIT, NULL);

if (FAILED(hr))

return ;

}
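The comment in DisplayLine notes that camera frames arrive upside down and leaves the correction to the reader. The loop also copies from the callback buffer without checking how many bytes it holds. The following is a minimal sketch of a row copy that handles both, written in portable C++ so it can be tested outside the dialog. `copyFrameRows` and its parameters are hypothetical names for illustration, and the sketch assumes the source frame is tightly packed (no padding between rows).

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>

// Copies `height` rows of `rowBytes` bytes from a tightly packed source frame
// into a destination whose rows start `dstPitch` bytes apart.
// When `flip` is true, source row 0 lands in the last destination row.
// Returns false without copying if the source is too small or the pitch too narrow.
inline bool copyFrameRows(const std::uint8_t* src, std::size_t srcLen,
                          std::uint8_t* dst, std::size_t dstPitch,
                          std::size_t rowBytes, std::size_t height, bool flip) {
    if (src == nullptr || dst == nullptr) return false;
    if (dstPitch < rowBytes) return false;
    // Equivalent to rowBytes * height > srcLen, but cannot overflow.
    if (height != 0 && rowBytes > srcLen / height) return false;
    for (std::size_t y = 0; y < height; ++y) {
        const std::size_t dstRow = flip ? (height - 1 - y) : y;
        std::memcpy(dst + dstRow * dstPitch, src + y * rowBytes, rowBytes);
    }
    return true;
}
```

In DisplayLine this would roughly correspond to passing `pBuf` as `src`, the locked `lpSurface` as `dst`, `lPitch` as `dstPitch`, and `dwWidth * nPixelBytes` as `rowBytes`. The `BufferLen` value from BufferCB would have to be forwarded to serve as `srcLen`, which the original function signature does not do.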

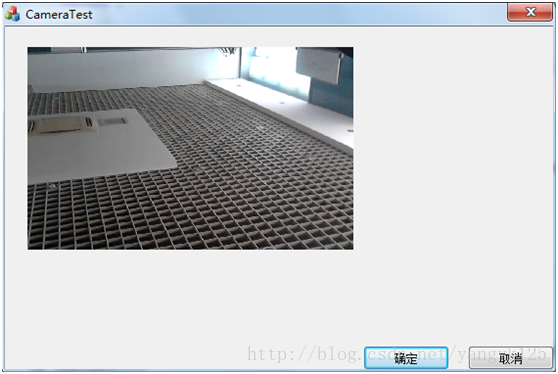

运行截图如下:

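Additional notes:

When DirectDraw creates a surface, video memory supports fewer pixel formats than system memory does. This example initializes the surface directly as RGB32, which graphics cards often do not support by default, whereas a software (system memory) surface does. For faster rendering, real projects usually use a pixel format that the graphics card supports, such as YUYV or NV12.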
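Besides hardware support, the YUV formats mentioned here also carry less data per frame than RGB32, which reduces the amount copied for every frame. The arithmetic can be sketched as follows; the function names are hypothetical, and the sketch assumes tightly packed frames with even width and height.

```cpp
#include <cstddef>

// Bytes needed for one tightly packed frame in each format.
// RGB32: 4 bytes per pixel.
// YUY2 (YUYV): packed 4:2:2, 2 bytes per pixel on average.
// NV12: 4:2:0, a full-resolution Y plane followed by an interleaved UV plane
//       of half that size, i.e. 1.5 bytes per pixel.
constexpr std::size_t rgb32FrameBytes(std::size_t w, std::size_t h) { return w * h * 4; }
constexpr std::size_t yuy2FrameBytes(std::size_t w, std::size_t h)  { return w * h * 2; }
constexpr std::size_t nv12FrameBytes(std::size_t w, std::size_t h)  { return w * h + (w * h) / 2; }
```

For a 640x480 frame this gives 1,228,800 bytes for RGB32, 614,400 bytes for YUY2, and 460,800 bytes for NV12.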
