I. Installation

Disable the firewall

systemctl stop firewalld.service

systemctl disable firewalld.service

Install Docker Engine

yum install -y yum-utils

yum-config-manager \
--add-repo \
https://download.docker.com/linux/centos/docker-ce.repo

yum install -y docker-ce docker-ce-cli containerd.io

systemctl start docker

systemctl enable docker

# Test

docker run hello-world

Registry mirror (download acceleration) in mainland China: see Alibaba Cloud's Container Registry service (aliyun.com)
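Here is a minimal sketch of the mirror setup. It assumes you have already obtained a personal accelerator address from the Alibaba Cloud console; the URL in the usage note is a hypothetical placeholder. Docker reads mirrors from the `registry-mirrors` key of `/etc/docker/daemon.json`. The helper name `write_mirror_config` is illustrative. It overwrites the file, so merge by hand if you already keep other settings there.

```shell
# Sketch: write a daemon.json that sends Docker Hub pulls through a registry mirror.
# write_mirror_config is a hypothetical helper; it OVERWRITES the target file.
write_mirror_config() {
  local path=$1 mirror=$2
  mkdir -p "$(dirname "$path")"
  cat > "$path" <<EOF
{
  "registry-mirrors": ["$mirror"]
}
EOF
}
```

For example, run `write_mirror_config /etc/docker/daemon.json https://<your-id>.mirror.aliyuncs.com`, then `systemctl daemon-reload` and `systemctl restart docker`. After that, `docker info` should list the address under Registry Mirrors.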

II. Image management

Search for an image

[root@localhost ~]# docker search ubuntu

Pull an image

[root@localhost ~]# docker pull ubuntu

List local images

[root@localhost ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

ubuntu latest 9873176a8ff5 2 weeks ago 72.7MB

hello-world latest d1165f221234 4 months ago 13.3kB

III. Basic container operations

List containers

[root@localhost ~]# docker ps [OPTIONS]

-a # all containers, including stopped ones

-q # show only container IDs

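Run a container

docker run [OPTIONS] IMAGE [COMMAND] [ARG...]

[OPTIONS]: options

-i: keep standard input open so the container can be used interactively

-t: allocate a pseudo-terminal attached to the container's standard input and output

-d: run the container in the background (detached)

-p hostPort:containerPort: publish a container port on the host, e.g. -p 80:80

-P: publish all ports declared with EXPOSE to random host ports

--rm: remove the container automatically when it exits

--name: give the container a name

IMAGE: the name of the image to run

COMMAND: the command to run when the container starts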

Start a container interactively

[root@localhost ~]# docker run -it ubuntu /bin/bash

root@31a59f39e04b:/# cat /etc/issue

Ubuntu 20.04.2 LTS \n \l

Start a container in the background (detached)

[root@localhost ~]# docker run -d --name myubuntu1 ubuntu /bin/sleep 3000

bace9e29e9d69c26e0baaac122cc20d06f83fb5742b64d3636493c7b2a3f6802

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

bace9e29e9d6 ubuntu "/bin/sleep 3000" 8 seconds ago Up 7 seconds myubuntu1

# The sleep process also shows up in the host's process list

[root@localhost ~]# ps aux|grep sleep |grep -v grep

root 2046 0.0 0.0 2500 376 ? Ss 15:48 0:00 /bin/sleep 3000

Run once and remove the container automatically

[root@localhost ~]# docker run --rm ubuntu /bin/echo Hello Docker

Hello Docker

Enter a running container

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

bace9e29e9d6 ubuntu "/bin/sleep 3000" 2 minutes ago Up 2 minutes myubuntu1

[root@localhost ~]# docker exec -it myubuntu1 /bin/bash

root@bace9e29e9d6:/# ps

PID TTY TIME CMD

7 pts/0 00:00:00 bash

15 pts/0 00:00:00 ps

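Exit a container

exit # in a shell started with docker run -it, this stops the container; in a docker exec session, it only leaves the shell and the container keeps running

Ctrl+P then Ctrl+Q # detach from the container without stopping it

Stop a container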

[root@localhost ~]# docker stop myubuntu1

myubuntu1

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

Restart a container

[root@localhost ~]# docker restart myubuntu1

myubuntu1

[root@localhost ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

bace9e29e9d6 ubuntu "/bin/sleep 3000" 5 minutes ago Up 6 seconds myubuntu1

Remove a stopped container

[root@localhost ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

bace9e29e9d6 ubuntu "/bin/sleep 3000" 6 minutes ago Up 34 seconds myubuntu1

31a59f39e04b ubuntu "/bin/bash" 8 minutes ago Exited (0) 7 minutes ago gracious_benz

[root@localhost ~]# docker rm gracious_benz

gracious_benz

[root@localhost ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

bace9e29e9d6 ubuntu "/bin/sleep 3000" 6 minutes ago Up 57 seconds myubuntu1

Force-remove a container

# A plain rm refuses to remove a running container

[root@localhost ~]# docker rm myubuntu1

Error response from daemon: You cannot remove a running container bace9e29e9d69c26e0baaac122cc20d06f83fb5742b64d3636493c7b2a3f6802. Stop the container before attempting removal or force remove

# Force removal: the running container is stopped and removed

[root@localhost ~]# docker rm -f myubuntu1

myubuntu1

# Remove all containers at once (running ones included, so use with care)

[root@localhost ~]# docker rm -f $(docker ps -a -q)

Inspect a container's metadata

[root@localhost ~]# docker run -d --name myubuntu1 ubuntu /bin/sleep 3000

2e34c8f69fb93e1f9cbce318a8cf1a0c71cd406c9aff5c88831ffe9a2718a0a2

# Print the container's full configuration and state as JSON

[root@localhost ~]# docker inspect myubuntu1

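View container logs

# Discard stdout and stderr on the terminal; Docker still records the output in the container's log

[root@localhost ~]# docker run hello-world >/dev/null 2>&1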

[root@localhost ~]# docker ps -a |grep hello

734d3222aee6 hello-world "/hello" 2 minutes ago Exited (0) 2 minutes ago zen_lederberg

[root@localhost ~]# docker logs zen_lederberg

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

1. The Docker client contacted the Docker daemon.

2. The Docker daemon pulled the "hello-world" image from the Docker Hub.

(amd64)

3. The Docker daemon created a new container from that image which runs the

executable that produces the output you are currently reading.

4. The Docker daemon streamed that output to the Docker client, which sent it

to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

https://hub.docker.com/

For more examples and ideas, visit:

https://docs.docker.com/get-started/

IV. Advanced container operations

Port mapping

[root@localhost ~]# docker run -p hostPort:containerPort IMAGE

[root@localhost ~]# docker pull nginx

[root@localhost ~]# docker run --name nginx-test -p 8080:80 -d nginx

243b39da1e6bb92c996875ff65cd794ffceeee9cef6d02b41482449195618830

[root@localhost ~]# curl 127.0.0.1:8080

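# You can also open http://<host IP>:8080 in a browser

Mount a data volume (a host directory, i.e. a bind mount)

[root@localhost ~]# docker run -v /host/dir:/container/dir IMAGE

Here we download Baidu's home page to the host and serve it as nginx's index page. The nginx-test container from the previous step still holds host port 8080. Remove it first with docker rm -f nginx-test; otherwise the docker run below fails because the port is already allocated.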

[root@localhost ~]# mkdir html

[root@localhost ~]# cd html/

[root@localhost html]# wget www.baidu.com -O index.html

[root@localhost html]# docker run --name nginx_baidu -p 8080:80 -v /root/html/:/usr/share/nginx/html -d nginx

de53e1e325a86b855b5ec52371527e43b665de8c21369e374f153f96f75f01ed

[root@localhost html]# curl 127.0.0.1:8080

Check from inside the container

[root@localhost html]# docker exec -it nginx_baidu /bin/bash

root@ec7833eecd18:/# cat /usr/share/nginx/html/index.html

Or check with docker inspect

[root@localhost html]# docker inspect nginx_baidu

...

"Mounts": [

{

"Type": "bind",

"Source": "/root/html",

"Destination": "/usr/share/nginx/html",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

}

],

...
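You do not have to scroll through the full JSON: `docker inspect` can print selected fields through a Go template passed with `-f`/`--format`. The sketch below uses two hypothetical helpers. `DOCKER` defaults to the real CLI and can be set to `echo` to print the command instead of running it.

```shell
# Sketch: pull single fields out of `docker inspect` with Go templates.
# DOCKER defaults to the real CLI; DOCKER=echo turns the helpers into a dry run.
DOCKER=${DOCKER:-docker}

# Print a container's mounts as JSON (the same data as the "Mounts" section).
container_mounts() {
  "$DOCKER" inspect -f '{{json .Mounts}}' "$1"
}

# Print one "<network> <IP>" line per network the container is attached to.
container_ips() {
  "$DOCKER" inspect -f '{{range $name, $net := .NetworkSettings.Networks}}{{$name}} {{$net.IPAddress}}{{"\n"}}{{end}}' "$1"
}
```

`container_mounts nginx_baidu` should print the same bind mount as the Mounts section above, with /root/html as the source.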

Pass environment variables

docker run -e KEY=VALUE ...

[root@localhost html]# docker run --name nginx_env -e MY_ENV1=aaaaaaaaa -e MY_ENV2=bbbbbbbbb nginx printenv

HOSTNAME=53a90f926b4d

HOME=/root

PKG_RELEASE=1~buster

NGINX_VERSION=1.21.1

MY_ENV1=aaaaaaaaa

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

MY_ENV2=bbbbbbbbb

NJS_VERSION=0.6.1

PWD=/

Install software inside a container

[root@localhost ~]# docker exec -it nginx_baidu /bin/bash

root@ec7833eecd18:/# apt update && apt install curl

V. Dockerfile instructions

1.FROM

The base image that the current image is built on

# Based on centos

FROM centos

2.MAINTAINER

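Name and email of the image maintainer (MAINTAINER is deprecated in current Docker versions; LABEL maintainer="..." is the recommended replacement)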

MAINTAINER yantao "yantao@qq.com"

3.RUN

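Runs a command at build time (docker build)

Note: each RUN instruction (like COPY and ADD) adds a new layer to the image, so many pointless layers bloat the image.

# Based on centos

FROM centos

# Install wget (-y is required: a build cannot answer yum's confirmation prompt)

RUN yum install -y wget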

RUN wget -O redis.tar.gz "http://download.redis.io/releases/redis-5.0.3.tar.gz"

RUN tar -xvf redis.tar.gz

# The above creates 3 layers. It can be condensed into:

FROM centos

RUN yum install -y wget \
    && wget -O redis.tar.gz "http://download.redis.io/releases/redis-5.0.3.tar.gz" \
    && tar -xvf redis.tar.gz

Chaining the commands with && in a single RUN, as above, creates only 1 layer.
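This sketch makes two refinements to the single-layer version:

- `-y` lets yum proceed without a confirmation prompt, which a build cannot answer.
- The archive is deleted in the same `RUN`, because a file removed in a later layer still takes up space in the layer that created it.

The helper `write_redis_dockerfile` is hypothetical. It uses a heredoc to write the same content you would otherwise type into the file by hand.

```shell
# Sketch: write a Dockerfile whose install/download/extract/cleanup steps share one RUN (one layer).
# write_redis_dockerfile is a hypothetical helper; the Redis URL is the one used above.
write_redis_dockerfile() {
  local dir=$1
  mkdir -p "$dir"
  cat > "$dir/Dockerfile" <<'EOF'
FROM centos
# -y answers yum's prompt; cleanup happens in the same RUN so it really shrinks the layer
RUN yum install -y wget \
    && wget -O redis.tar.gz "http://download.redis.io/releases/redis-5.0.3.tar.gz" \
    && tar -xvf redis.tar.gz \
    && rm redis.tar.gz \
    && yum clean all
EOF
}
```

Build it with `docker build -t redis-src <dir>` (the tag is hypothetical). `docker history redis-src` should then show a single layer for the RUN step. CentOS images have reached end of life, so their yum repositories may no longer resolve. The same layering pattern applies with apt-get on ubuntu.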

4.EXPOSE

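The ports the container listens on. EXPOSE is documentation only; ports are actually published with -p or -P at run time.

EXPOSE 80

EXPOSE 3306

5.WORKDIR

Sets the working directory for the RUN, CMD, ENTRYPOINT, COPY and ADD instructions that follow it, and for containers started from the image. If the directory does not exist, WORKDIR creates it.

WORKDIR <path>

WORKDIR /usr/share/nginx/html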

6.ENV

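Sets an environment variable. Once defined, it can be used by later instructions, and it is also set in containers started from the image.

ENV <key> <value>

ENV <key>=<value> # equivalent, and the preferred form

ENV JAVA_HOME /usr/local/jdk1.8

ENV APP vim

# Use the variable in a command at build time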

RUN yum install $APP -y

7.COPY

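Copies files or directories from the build context into the given path in the image.

COPY [--chown=<user>:<group>] <src>... <dest>

# Copy readme.txt into /usr/local/ in the image

COPY readme.txt /usr/local/readme.txt

[--chown=<user>:<group>]: optional; sets the owner and group of the copied files inside the image.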

8.ADD

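ADD uses the same syntax as COPY.

The advantage of ADD is that it automatically extracts local tar archives. The downside is that a tar archive cannot be copied without being extracted, and builds may become slower. COPY is generally preferred when no extraction is needed.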

9.VOLUME

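Declares mount points for data volumes. Volumes are usually specified at run time (docker run -v) rather than written into the Dockerfile.

VOLUME ["<path1>", "<path2>", ...]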

10.CMD

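Specifies the default command to run when a container starts. It has two properties:

1. If there are several CMD instructions, only the last one takes effect.

2. Any command given after the image name in docker run replaces CMD.

CMD ["<executable or command>","<param1>","<param2>",...]

CMD ["<param1>","<param2>",...] # this form provides default arguments for the program set by ENTRYPOINT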

11.ENTRYPOINT

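1. If there are several ENTRYPOINT instructions, only the last one takes effect.

2. It is not replaced by the command given after docker run (unless you pass --entrypoint); instead, those arguments are passed to it as parameters.

ENTRYPOINT ["<executable>","<param1>","<param2>",...]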
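Here is a minimal sketch of how the two combine. The exec-form ENTRYPOINT fixes the program, and CMD only supplies its default arguments. `write_echo_dockerfile` and the tag `echo-demo` are hypothetical names.

```shell
# Sketch: ENTRYPOINT fixes the program, CMD supplies overridable default arguments.
# write_echo_dockerfile and the tag echo-demo are hypothetical names for this demo.
write_echo_dockerfile() {
  local dir=$1
  mkdir -p "$dir"
  cat > "$dir/Dockerfile" <<'EOF'
FROM ubuntu
ENTRYPOINT ["echo"]
CMD ["hello"]
EOF
}
```

Build it with `docker build -t echo-demo <dir>`:

- `docker run --rm echo-demo` runs `echo hello` and prints `hello`.
- `docker run --rm echo-demo world` replaces only CMD and prints `world`.
- Replacing the program itself requires `docker run --entrypoint`.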

12.ONBUILD

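An ONBUILD instruction in image A's Dockerfile does nothing when A itself is built. When image B is built FROM A, the trigger runs right after B's FROM instruction, before B's own instructions.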

VI. Docker Hub

1. Log in

By default, docker login signs in to the official registry, Docker Hub (https://hub.docker.com)

[root@localhost ~]# docker login

Login with your Docker ID to push and pull images from Docker Hub. If you don't have a Docker ID, head over to https://hub.docker.com to create one.

Username: yantao

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

The credentials are stored here, base64-encoded but not encrypted:

[root@localhost ~]# cat .docker/config.json

{

"auths": {

"https://index.docker.io/v1/": {

"auth": "QWERTYUIOPASDFGHJK=="

}

}

}

[root@localhost ~]# echo "QWERTYUIOPASDFGHJK==" |base64 -d
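The `auth` value is just `username:password` encoded in base64, which is why docker login warns that it is stored unencrypted. This sketch extracts the user name. It uses `sed` rather than a JSON parser, so it assumes the one-line `"auth": "..."` layout shown above. The helper name `decode_docker_auth` is hypothetical.

```shell
# Sketch: decode the "auth" field of a Docker config.json.
# Assumes the simple layout shown above ("auth": "<base64>" on one line); not a JSON parser.
decode_docker_auth() {
  local config=$1 encoded
  # Take the first "auth" value found in the file.
  encoded=$(sed -n 's/.*"auth": *"\([^"]*\)".*/\1/p' "$config" | head -n 1)
  # The decoded form is "username:password"; print only the username part.
  printf '%s' "$encoded" | base64 -d | cut -d: -f1
}
```

Running `decode_docker_auth /root/.docker/config.json` prints the stored user name. A credential helper (see the link in the login warning) avoids keeping this value on disk at all.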

2. Build an image

[root@localhost ~]# vim Dockerfile

FROM ubuntu

MAINTAINER yantao "yantao@qq.com"

RUN apt-get update && apt-get install -y nginx

EXPOSE 80

[root@localhost ~]# docker build -t myubutu:v1 .

[root@localhost ~]#

[root@localhost ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

myubutu v1 9ab6687ba7f6 About a minute ago 162MB

3. Push

# First tag the image with your Docker Hub namespace (user name)

[root@localhost ~]# docker tag myubutu:v1 yantao/myubutu:v1

[root@localhost ~]# docker push yantao/myubutu:v1

The push refers to repository [docker.io/yantao/myubutu]

d120a77425e0: Pushed

7555a8182c42: Pushed

v1: digest: sha256:7f7a276b5010a5929eac3d16ae15b7e598dccde475b608a823d4383482f95f1d size: 741

VII. Networking

1: loopback interface: 127.0.0.1

2: host NIC (ens32): 192.168.236.132

3: docker0 bridge: 172.17.0.1

[root@localhost ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:cb:fe:6f brd ff:ff:ff:ff:ff:ff

inet 192.168.236.132/24 brd 192.168.236.255 scope global noprefixroute dynamic ens32

valid_lft 1337sec preferred_lft 1337sec

inet6 fe80::bbd5:f6b6:7f60:1969/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:87:54:fb:c6 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:87ff:fe54:fbc6/64 scope link

valid_lft forever preferred_lft forever

[root@localhost ~]# docker run -d -P --name tomcat01 tomcat

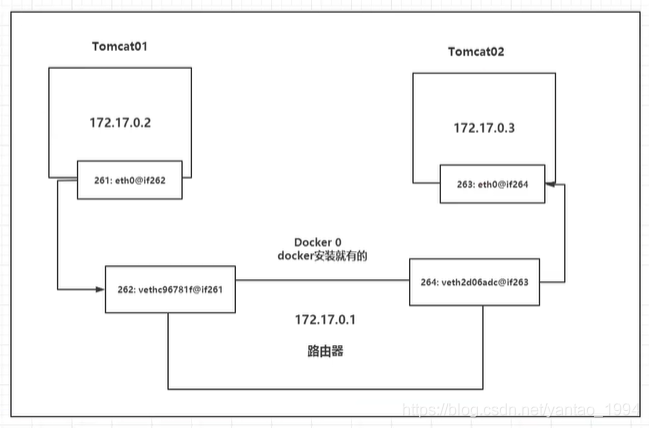

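# Check the container's network interfaces: at startup the container gets the interface 82: eth0@if83, with an IP address (172.17.0.2) assigned from docker0's subnet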

[root@localhost ~]# docker exec -it tomcat01 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

82: eth0@if83: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

# Pinging that address from the host works

[root@localhost ~]# ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.403 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.105 ms

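How it works

1. Each time a container starts, Docker assigns it an IP address. Installing Docker creates the docker0 interface, which works in bridge mode and connects containers using veth pairs.

Check the host's interfaces again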

[root@localhost ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:cb:fe:6f brd ff:ff:ff:ff:ff:ff

inet 192.168.236.132/24 brd 192.168.236.255 scope global noprefixroute dynamic ens32

valid_lft 1310sec preferred_lft 1310sec

inet6 fe80::bbd5:f6b6:7f60:1969/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:87:54:fb:c6 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:87ff:fe54:fbc6/64 scope link

valid_lft forever preferred_lft forever

83: veth2b31778@if82: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 1a:a9:91:ab:e0:c3 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::18a9:91ff:feab:e0c3/64 scope link

valid_lft forever preferred_lft forever

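A new interface has appeared: 83: veth2b31778@if82. It is the host end of a pair whose other end is eth0@if83 (interface 82) inside tomcat01.

2. Start a second container, and another interface appears: 85: veth3441fbb@if84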

[root@localhost ~]# docker run -d -P --name tomcat02 tomcat

aee685dc1ecbe4610e8591a925e76c9306346f78f029489f972f23baef86b926

[root@localhost ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:cb:fe:6f brd ff:ff:ff:ff:ff:ff

inet 192.168.236.132/24 brd 192.168.236.255 scope global noprefixroute dynamic ens32

valid_lft 1063sec preferred_lft 1063sec

inet6 fe80::bbd5:f6b6:7f60:1969/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:87:54:fb:c6 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:87ff:fe54:fbc6/64 scope link

valid_lft forever preferred_lft forever

83: veth2b31778@if82: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 1a:a9:91:ab:e0:c3 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::18a9:91ff:feab:e0c3/64 scope link

valid_lft forever preferred_lft forever

85: veth3441fbb@if84: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether c2:da:74:51:8e:30 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::c0da:74ff:fe51:8e30/64 scope link

valid_lft forever preferred_lft forever

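# Every new container adds a pair of interfaces.

# A veth pair is a pair of virtual network interfaces that always come in pairs: each end attaches to a network protocol stack, and the two ends are connected to each other.

# Because of this property, a veth pair acts as a bridge between virtual network devices.

# OpenStack and the connections between Docker containers both rely on veth pairs.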

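3. tomcat01 and tomcat02 can also ping each other by IP address; they communicate indirectly through docker0. On the default bridge, however, they cannot resolve each other's container names.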

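Summary

Docker uses Linux bridging: on the host, docker0 is the bridge for containers. All of Docker's network interfaces are virtual, and virtual interfaces forward traffic efficiently. When a container is removed, its veth pair disappears with it.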
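The `@ifNN` suffix names the other end of each pair:

- Inside tomcat01, `82: eth0@if83` points at host interface 83.
- On the host, `83: veth2b31778@if82` points back at 82.

The sketch below finds the host-side veth of a container. `veth_for_index` is a hypothetical helper that scans `ip` output for a given interface index.

```shell
# Sketch: print the name of the interface whose index is $1.
# Reads `ip -o link` (or `ip addr`) output on stdin; lines look like
# "83: veth2b31778@if82: <BROADCAST,...>".
veth_for_index() {
  awk -v want="$1" -F': ' '$1 == want { split($2, parts, "@"); print parts[1]; exit }'
}
```

For example, `ip -o link | veth_for_index "$(docker exec tomcat01 cat /sys/class/net/eth0/iflink)"` should print veth2b31778 in the setup above. Reading /sys avoids needing the ip tool inside the image.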

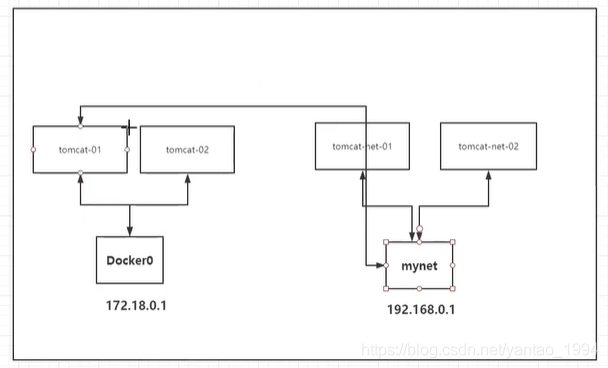

Custom networks

List all Docker networks

[root@localhost ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

fbf0e2b5c208 bridge bridge local

ad138fb4b1f5 host host local

f3622983e4ac none null local

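Network modes (drivers)

bridge: bridged networking (the default, docker0)

none: no networking configured

host: share the host's network stack

Test

# A plain docker run implicitly uses --net bridge, which is docker0, so the two commands below are equivalent (run only one of them, since both use the name tomcat01)

docker run -d -P --name tomcat01 tomcat

docker run -d -P --name tomcat01 --net bridge tomcat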

Create a custom network named mynet

[root@localhost ~]# docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet

0b83ece87b65f0406a91f57527650cf1126317115658e5682d8ce1a0d1ab0e34
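The subnet chosen for mynet, 192.168.0.0/16, also contains the host's own LAN: 192.168.236.0/24 on ens32, as shown in the `ip addr` output earlier. Overlapping ranges can cause routing conflicts, for instance if Docker gives a container an address already used by a LAN machine. It is worth checking before you pick a subnet.

Here is a pure-bash sketch of that check. Two ranges overlap exactly when their network bits agree under the shorter of the two prefixes. `ip_to_int` and `cidr_overlap` are hypothetical helper names.

```shell
# Sketch: check whether two IPv4 CIDR ranges overlap, using bash arithmetic only.
# Convert a dotted-quad address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeeds (exit status 0) if the two ranges share at least one address.
cidr_overlap() {
  local n1=${1%/*} p1=${1#*/} n2=${2%/*} p2=${2#*/}
  local p=$(( p1 < p2 ? p1 : p2 ))        # compare under the shorter prefix
  local mask=$(( p == 0 ? 0 : (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))
  local i1 i2
  i1=$(ip_to_int "$n1")
  i2=$(ip_to_int "$n2")
  (( (i1 & mask) == (i2 & mask) ))
}
```

For example, `cidr_overlap 192.168.0.0/16 192.168.236.0/24 && echo overlap` prints `overlap`. The 172.38.0.0/16 range used for Redis later on does not collide with the host LAN.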

List the networks again

[root@localhost ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

fbf0e2b5c208 bridge bridge local

ad138fb4b1f5 host host local

0b83ece87b65 mynet bridge local

f3622983e4ac none null local

Details of mynet

[root@localhost ~]# docker network inspect mynet

[

{

"Name": "mynet",

"Id": "0b83ece87b65f0406a91f57527650cf1126317115658e5682d8ce1a0d1ab0e34",

"Created": "2021-07-07T11:48:59.931114931+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {},

"Labels": {}

}

]

[root@localhost ~]# docker run -d -P --name tomcat-net-01 --net mynet tomcat

099a63ef597ab94b5f5924332660bbf58d9c2cfe9c57a178a57ab8eb6fbfa55e

[root@localhost ~]# docker run -d -P --name tomcat-net-02 --net mynet tomcat

3028d877a9703992f81126027647e4be5352241946f785726692905ba0cadf98

Inspect mynet again: two containers are now attached to it

[root@localhost ~]# docker network inspect mynet

[

{

"Name": "mynet",

"Id": "0b83ece87b65f0406a91f57527650cf1126317115658e5682d8ce1a0d1ab0e34",

"Created": "2021-07-07T11:48:59.931114931+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"099a63ef597ab94b5f5924332660bbf58d9c2cfe9c57a178a57ab8eb6fbfa55e": {

"Name": "tomcat-net-01",

"EndpointID": "f49f75964fb74012a884176a4dd7094866f79496c93ac59c01537251d0d4beba",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

},

"3028d877a9703992f81126027647e4be5352241946f785726692905ba0cadf98": {

"Name": "tomcat-net-02",

"EndpointID": "c085e591dfd13d8156e52cf4dc5f0699e620f82c722bc9f9d5aa7e690b058391",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

Now the containers can ping each other

[root@localhost ~]# docker exec -it tomcat-net-01 ping 192.168.0.3

PING 192.168.0.3 (192.168.0.3) 56(84) bytes of data.

64 bytes from 192.168.0.3: icmp_seq=1 ttl=64 time=0.281 ms

64 bytes from 192.168.0.3: icmp_seq=2 ttl=64 time=0.109 ms

# Or by container name

[root@localhost ~]# docker exec -it tomcat-net-01 ping tomcat-net-02

PING tomcat-net-02 (192.168.0.3) 56(84) bytes of data.

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.212 ms

64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.210 ms

# And in the other direction

[root@localhost ~]# docker exec -it tomcat-net-02 ping tomcat-net-01

PING tomcat-net-01 (192.168.0.2) 56(84) bytes of data.

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.165 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.095 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=3 ttl=64 time=0.072 ms

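On a custom network, Docker maintains the name-to-IP mapping for us, so containers can reach each other by name. This is the recommended way to network containers in everyday use.

Benefit: different clusters can use separate networks, for example one Redis cluster on network A and another cluster on network B. This keeps each cluster isolated and healthy.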

Connecting a container to another network

[root@localhost ~]# docker network connect NETWORK CONTAINER

Usage: docker network connect [OPTIONS] NETWORK CONTAINER

[root@localhost ~]# docker network connect mynet tomcat01

# Make sure tomcat01 is running, then inspect mynet again. tomcat01 now appears in it, so tomcat01 and tomcat-net-01 share a network and can communicate.

[root@localhost ~]# docker network inspect mynet

...

"Containers": {

"01cac03bd07fd0da2759c2b34a4e8ec43c65b213c883698094923922b2ac4a22": {

"Name": "tomcat01",

"EndpointID": "96523ed4e88ec44bb740e964194ec0b5fc8cdff1a978cda68f9947495271c84d",

"MacAddress": "02:42:c0:a8:00:04",

"IPv4Address": "192.168.0.4/16",

"IPv6Address": ""

},

"099a63ef597ab94b5f5924332660bbf58d9c2cfe9c57a178a57ab8eb6fbfa55e": {

"Name": "tomcat-net-01",

"EndpointID": "f49f75964fb74012a884176a4dd7094866f79496c93ac59c01537251d0d4beba",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

},

"3028d877a9703992f81126027647e4be5352241946f785726692905ba0cadf98": {

"Name": "tomcat-net-02",

"EndpointID": "c085e591dfd13d8156e52cf4dc5f0699e620f82c722bc9f9d5aa7e690b058391",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

}

},

...

[root@localhost ~]# docker exec -it tomcat01 ping tomcat-net-01

PING tomcat-net-01 (192.168.0.2) 56(84) bytes of data.

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=1.05 ms

64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.192 ms

Incidentally, from the container's point of view tomcat01 now belongs to two networks, bridge and mynet, with one IP address on each

[root@localhost ~]# docker inspect tomcat01

...

"Networks": {

"bridge": {

"IPAMConfig": null,

"NetworkID": "fbf0e2b5c208e834145b55f90d28de6da711ed1a9343a3e1de9d7a90b63921a4",

"EndpointID": "e08065eb5fc0de0cec5e5dd3eb02c5e741e6237c83fef9c0b8844635afbd9962",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.2",

},

"mynet": {

"IPAMConfig": {},

"Aliases": [

"01cac03bd07f"

],

"NetworkID": "0b83ece87b65f0406a91f57527650cf1126317115658e5682d8ce1a0d1ab0e34",

"EndpointID": "96523ed4e88ec44bb740e964194ec0b5fc8cdff1a978cda68f9947495271c84d",

"Gateway": "192.168.0.1",

"IPAddress": "192.168.0.4",

}

}

...

Hands-on

Deploying a Redis cluster

Create a dedicated network for Redis

[root@localhost ~]# docker network create redis --subnet 172.38.0.0/16

[root@localhost ~]# docker network ls|grep redis

996a8894654f redis bridge local

[root@localhost ~]# docker network inspect redis

[

{

"Name": "redis",

"Id": "996a8894654fe73475980d2d4291be49e3c0be5c9610319c01bd3c10f34dbbb6",

"Created": "2021-07-07T16:47:29.46130939+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "172.38.0.0/16"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {},

"Labels": {}

}

]

Generate the six Redis config files with a script

for port in $(seq 1 6); do
  mkdir -p /mydata/redis/node-${port}/conf
  touch /mydata/redis/node-${port}/conf/redis.conf
  cat << EOF >/mydata/redis/node-${port}/conf/redis.conf
port 6379
bind 0.0.0.0
cluster-enabled yes
cluster-config-file nodes.conf
cluster-node-timeout 5000
cluster-announce-ip 172.38.0.1${port}
cluster-announce-port 6379
cluster-announce-bus-port 16379
appendonly yes
EOF
done

[root@localhost ~]# ls /mydata/redis/

node-1 node-2 node-3 node-4 node-5 node-6

[root@localhost ~]# cat /mydata/redis/node-1/conf/redis.conf

port 6379

bind 0.0.0.0

cluster-enabled yes

cluster-config-file nodes.conf

cluster-node-timeout 5000

cluster-announce-ip 172.38.0.11

cluster-announce-port 6379

cluster-announce-bus-port 16379

appendonly yes

Start the six Redis nodes

#1

docker run -p 6371:6379 -p 16371:16379 --name redis-1 -v /mydata/redis/node-1/data:/data -v /mydata/redis/node-1/conf/redis.conf:/etc/redis/redis.conf -d --net redis --ip 172.38.0.11 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

#2

docker run -p 6372:6379 -p 16372:16379 --name redis-2 -v /mydata/redis/node-2/data:/data -v /mydata/redis/node-2/conf/redis.conf:/etc/redis/redis.conf -d --net redis --ip 172.38.0.12 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

#3

docker run -p 6373:6379 -p 16373:16379 --name redis-3 -v /mydata/redis/node-3/data:/data -v /mydata/redis/node-3/conf/redis.conf:/etc/redis/redis.conf -d --net redis --ip 172.38.0.13 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

#4

docker run -p 6374:6379 -p 16374:16379 --name redis-4 -v /mydata/redis/node-4/data:/data -v /mydata/redis/node-4/conf/redis.conf:/etc/redis/redis.conf -d --net redis --ip 172.38.0.14 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

#5

docker run -p 6375:6379 -p 16375:16379 --name redis-5 -v /mydata/redis/node-5/data:/data -v /mydata/redis/node-5/conf/redis.conf:/etc/redis/redis.conf -d --net redis --ip 172.38.0.15 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

#6

docker run -p 6376:6379 -p 16376:16379 --name redis-6 -v /mydata/redis/node-6/data:/data -v /mydata/redis/node-6/conf/redis.conf:/etc/redis/redis.conf -d --net redis --ip 172.38.0.16 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf
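The six commands above differ only in the node number, so a loop can generate them. The sketch below uses the same flags; only their order differs. `start_redis_node` and `start_all_redis_nodes` are hypothetical helpers, and port prefixes such as `637$n` assume single-digit node numbers. Setting `DOCKER=echo` prints the commands instead of running them.

```shell
# Sketch: generate the six redis-N containers from one function.
# DOCKER defaults to the real CLI; DOCKER=echo prints the commands (dry run).
DOCKER=${DOCKER:-docker}

# Start node $1 (1..6): host ports 637N/1637N, fixed IP 172.38.0.1N on the redis network.
start_redis_node() {
  local n=$1
  "$DOCKER" run -d --name "redis-$n" \
    -p "637$n:6379" -p "1637$n:16379" \
    -v "/mydata/redis/node-$n/data:/data" \
    -v "/mydata/redis/node-$n/conf/redis.conf:/etc/redis/redis.conf" \
    --net redis --ip "172.38.0.1$n" \
    redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf
}

start_all_redis_nodes() {
  local n
  for n in 1 2 3 4 5 6; do
    start_redis_node "$n"
  done
}
```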

[root@localhost ~]# docker ps |grep redis

0250ddfde7fc redis:5.0.9-alpine3.11 ... redis-6

d45d33d0b76e redis:5.0.9-alpine3.11 ... redis-5

f2115047a45d redis:5.0.9-alpine3.11 ... redis-4

52d720bde4bf redis:5.0.9-alpine3.11 ... redis-3

540db24971f7 redis:5.0.9-alpine3.11 ... redis-2

a2bab19d74ed redis:5.0.9-alpine3.11 ... redis-1

Create the cluster

[root@localhost ~]# docker exec -it redis-1 /bin/sh

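/data # redis-cli --cluster create 172.38.0.11:6379 172.38.0.12:6379 172.38.0.13:6379 172.38.0.14:6379 172.38.0.15:6379 172.38.0.16:6379 --cluster-replicas 1

# Note: the output below was captured with 172.38.0.13:6379 accidentally listed twice. That is why redis-cli reports 7 nodes and adds 172.38.0.13 as an "extra" replica. List each node only once, as above.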

>>> Performing hash slots allocation on 7 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 172.38.0.15:6379 to 172.38.0.11:6379

Adding replica 172.38.0.16:6379 to 172.38.0.12:6379

Adding replica 172.38.0.14:6379 to 172.38.0.13:6379

Adding extra replicas...

Adding replica 172.38.0.13:6379 to 172.38.0.11:6379

M: efd8a6f2628b532a709e25aa347a6b1194460c88 172.38.0.11:6379

slots:[0-5460] (5461 slots) master

M: c57daabcf9dbda7fc09466a36c32762f70eebe1d 172.38.0.12:6379

slots:[5461-10922] (5462 slots) master

M: 123737d8deb0c1f1c18f5d35b67daa2654fb278a 172.38.0.13:6379

slots:[10923-16383] (5461 slots) master

S: 123737d8deb0c1f1c18f5d35b67daa2654fb278a 172.38.0.13:6379

replicates efd8a6f2628b532a709e25aa347a6b1194460c88

S: 8761eea1ba0e71c49443c7894f92a28f11ee97c4 172.38.0.14:6379

replicates 123737d8deb0c1f1c18f5d35b67daa2654fb278a

S: 9954088fd2299f4da0a351c8591a8f26ba8328e1 172.38.0.15:6379

replicates efd8a6f2628b532a709e25aa347a6b1194460c88

S: 5f37a1014d16ca8ef16fd72343c447ee70252fee 172.38.0.16:6379

replicates c57daabcf9dbda7fc09466a36c32762f70eebe1d

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

...

>>> Performing Cluster Check (using node 172.38.0.11:6379)

M: efd8a6f2628b532a709e25aa347a6b1194460c88 172.38.0.11:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 5f37a1014d16ca8ef16fd72343c447ee70252fee 172.38.0.16:6379

slots: (0 slots) slave

replicates c57daabcf9dbda7fc09466a36c32762f70eebe1d

S: 8761eea1ba0e71c49443c7894f92a28f11ee97c4 172.38.0.14:6379

slots: (0 slots) slave

replicates 123737d8deb0c1f1c18f5d35b67daa2654fb278a

M: c57daabcf9dbda7fc09466a36c32762f70eebe1d 172.38.0.12:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

M: 123737d8deb0c1f1c18f5d35b67daa2654fb278a 172.38.0.13:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 9954088fd2299f4da0a351c8591a8f26ba8328e1 172.38.0.15:6379

slots: (0 slots) slave

replicates efd8a6f2628b532a709e25aa347a6b1194460c88

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

Connect to the cluster

/data # redis-cli -c

127.0.0.1:6379> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3 # 3 masters serving hash slots

cluster_current_epoch:7

cluster_my_epoch:1

cluster_stats_messages_ping_sent:202

cluster_stats_messages_pong_sent:200

cluster_stats_messages_sent:402

cluster_stats_messages_ping_received:195

cluster_stats_messages_pong_received:202

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:402

127.0.0.1:6379> cluster nodes # 3 masters, 3 replicas

5f37a1014d16ca8ef16fd72343c447ee70252fee 172.38.0.16:6379@16379 slave c57daabcf9dbda7fc09466a36c32762f70eebe1d 0 1625649853052 7 connected

efd8a6f2628b532a709e25aa347a6b1194460c88 172.38.0.11:6379@16379 myself,master - 0 1625649853000 1 connected 0-5460

8761eea1ba0e71c49443c7894f92a28f11ee97c4 172.38.0.14:6379@16379 slave 123737d8deb0c1f1c18f5d35b67daa2654fb278a 0 1625649853555 5 connected

c57daabcf9dbda7fc09466a36c32762f70eebe1d 172.38.0.12:6379@16379 master - 0 1625649853000 2 connected 5461-10922

123737d8deb0c1f1c18f5d35b67daa2654fb278a 172.38.0.13:6379@16379 master - 0 1625649853000 3 connected 10923-16383

9954088fd2299f4da0a351c8591a8f26ba8328e1 172.38.0.15:6379@16379 slave efd8a6f2628b532a709e25aa347a6b1194460c88 0 1625649853454 6 connected

127.0.0.1:6379>

Set a key
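A key's hash slot decides which master answers it. Redis Cluster computes CRC16(key) mod 16384, using the XMODEM variant of CRC16. When the key contains a non-empty `{...}` hash tag, only the tag is hashed.

Here is a pure-bash sketch of that rule, for ASCII keys only. `crc16_xmodem` and `key_slot` are hypothetical helper names. On a live cluster, `CLUSTER KEYSLOT <key>` returns the authoritative value.

```shell
# Sketch: compute the Redis Cluster hash slot of a key (ASCII keys only).
# Slot = CRC16-XMODEM(key) mod 16384; a non-empty {hash tag} is hashed instead of the whole key.
crc16_xmodem() {
  local s=$1 crc=0 i j c
  local LC_ALL=C
  for (( i = 0; i < ${#s}; i++ )); do
    printf -v c '%d' "'${s:i:1}"         # byte value of the i-th character
    crc=$(( crc ^ (c << 8) ))
    for (( j = 0; j < 8; j++ )); do      # polynomial 0x1021, MSB first, init 0
      if (( crc & 0x8000 )); then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
    done
  done
  echo "$crc"
}

key_slot() {
  local key=$1 rest tag
  if [[ $key == *'{'* ]]; then
    rest=${key#*\{}                      # text after the first '{'
    if [[ $rest == *'}'* ]]; then
      tag=${rest%%\}*}                   # text up to the first '}' after it
      if [[ -n $tag ]]; then             # an empty tag like "{}" is ignored
        key=$tag
      fi
    fi
  fi
  echo $(( $(crc16_xmodem "$key") % 16384 ))
}
```

`key_slot name` prints 5798, the same slot that redis-cli reports for `set name Bob`. Keys sharing a hash tag, such as `{user1000}.following` and `{user1000}.followers`, always land on the same slot.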

127.0.0.1:6379> set name Bob

-> Redirected to slot [5798] located at 172.38.0.12:6379 # served by the 2nd master (redis-2); if it fails, its replica (redis-6) should take over

OK

127.0.0.1:6379> get name

-> Redirected to slot [5798] located at 172.38.0.16:6379

"Bob"

Test high availability

# Stop redis-2

[root@localhost ~]# docker stop redis-2

# Connect to the cluster from redis-3 and check

[root@localhost ~]# docker exec -it redis-3 /bin/sh

/data # redis-cli -c

127.0.0.1:6379> get name # now served by redis-6 (172.38.0.16), the replica promoted to master

-> Redirected to slot [5798] located at 172.38.0.16:6379

"Bob"

Packaging a Spring Boot microservice as a Docker image (video walkthrough):

https://www.bilibili.com/video/BV1og4y1q7M4?p=39&spm_id_from=pageDriver

Deploying MySQL

[root@localhost ~]# docker pull mysql:5.7

[root@localhost ~]# docker run -d -p 3310:3306 -v /home/mysql/conf:/etc/mysql/conf.d -v /home/mysql/data:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=123456 --name mysql01 mysql:5.7
