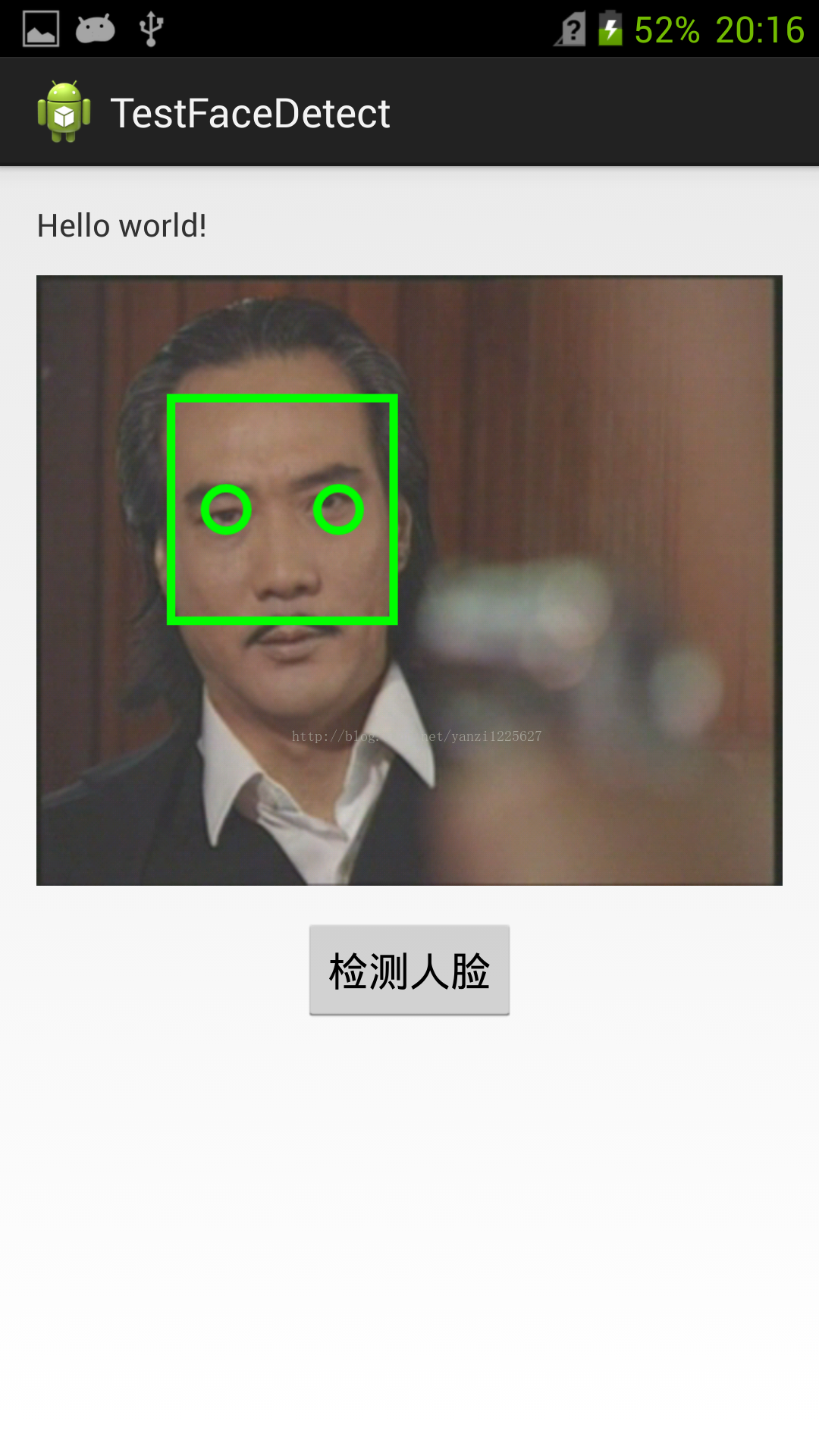

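What the demo does: it uses Android's built-in face detector (android.media.FaceDetector) to find faces in a picture and marks the eyes and the face region. Tapping the button starts detection; when it finishes, the annotated picture is shown in an ImageView.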

Part 1: the layout file activity_main.xml

    <RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"
        xmlns:tools="http://schemas.android.com/tools"
        android:id="@+id/layout_main"
        android:layout_width="match_parent"
        android:layout_height="match_parent"
        android:paddingBottom="@dimen/activity_vertical_margin"
        android:paddingLeft="@dimen/activity_horizontal_margin"
        android:paddingRight="@dimen/activity_horizontal_margin"
        android:paddingTop="@dimen/activity_vertical_margin"
        tools:context=".MainActivity" >

        <TextView
            android:id="@+id/textview_hello"
            android:layout_width="wrap_content"
            android:layout_height="wrap_content"
            android:text="@string/hello_world" />

        <ImageView
            android:id="@+id/imgview"
            android:layout_width="wrap_content"
            android:layout_height="wrap_content"
            android:layout_below="@id/textview_hello" />

        <Button
            android:id="@+id/btn_detect_face"
            android:layout_width="wrap_content"
            android:layout_height="wrap_content"
            android:layout_below="@id/imgview"
            android:layout_centerHorizontal="true"
            android:text="Detect face" />

    </RelativeLayout>

Note: the gap around the ImageView comes from these four padding attributes, which are set on the root RelativeLayout:

    android:paddingBottom="@dimen/activity_vertical_margin"
    android:paddingLeft="@dimen/activity_horizontal_margin"
    android:paddingRight="@dimen/activity_horizontal_margin"
    android:paddingTop="@dimen/activity_vertical_margin"

The two margin values referenced above are defined in dimens.xml:

    <resources>
        <!-- Default screen margins, per the Android Design guidelines. -->
        <dimen name="activity_horizontal_margin">16dp</dimen>
        <dimen name="activity_vertical_margin">16dp</dimen>
    </resources>

These are simply the project defaults and can be ignored.

Part 2: MainActivity.java

    package org.yanzi.testfacedetect;

    import org.yanzi.util.ImageUtil;
    import org.yanzi.util.MyToast;

    import android.app.Activity;
    import android.graphics.Bitmap;
    import android.graphics.Bitmap.Config;
    import android.graphics.BitmapFactory;
    import android.graphics.Canvas;
    import android.graphics.Color;
    import android.graphics.Paint;
    import android.graphics.Point;
    import android.graphics.PointF;
    import android.graphics.Rect;
    import android.media.FaceDetector;
    import android.media.FaceDetector.Face;
    import android.os.Bundle;
    import android.os.Handler;
    import android.os.Message;
    import android.util.DisplayMetrics;
    import android.util.Log;
    import android.view.Menu;
    import android.view.View;
    import android.view.View.OnClickListener;
    import android.view.ViewGroup;
    import android.view.ViewGroup.LayoutParams;
    import android.widget.Button;
    import android.widget.ImageView;
    import android.widget.ProgressBar;
    import android.widget.RelativeLayout;

    public class MainActivity extends Activity {
        static final String tag = "yan";
        ImageView imgView = null;
        FaceDetector faceDetector = null;
        FaceDetector.Face[] face;          // filled in by findFaces()
        Button detectFaceBtn = null;
        final int N_MAX = 2;               // maximum number of faces to look for
        ProgressBar progressBar = null;
        Bitmap srcImg = null;              // bitmap decoded from resources
        Bitmap srcFace = null;             // mutable RGB_565 copy handed to FaceDetector

        // Worker thread: runs detection off the UI thread and reports back through mainHandler.
        Thread checkFaceThread = new Thread(){
            @Override
            public void run() {
                Bitmap faceBitmap = detectFace();
                mainHandler.sendEmptyMessage(2);   // hide the progress bar
                Message m = new Message();
                m.what = 0;                        // deliver the annotated bitmap
                m.obj = faceBitmap;
                mainHandler.sendMessage(m);
            }
        };

        // Created on the UI thread, so every message is handled there.
        Handler mainHandler = new Handler(){
            @Override
            public void handleMessage(Message msg) {
                switch (msg.what){
                case 0:   // detection finished: show the annotated bitmap
                    Bitmap b = (Bitmap) msg.obj;
                    imgView.setImageBitmap(b);
                    MyToast.showToast(getApplicationContext(), "Detection finished");
                    break;
                case 1:   // detection is about to start: show the progress bar
                    showProcessBar();
                    break;
                case 2:   // worker is done: hide the progress bar, disable the button
                    progressBar.setVisibility(View.GONE);
                    detectFaceBtn.setClickable(false);
                    break;
                default:
                    break;
                }
            }
        };

        @Override
        protected void onCreate(Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            setContentView(R.layout.activity_main);
            initUI();
            initFaceDetect();
            detectFaceBtn.setOnClickListener(new OnClickListener() {
                @Override
                public void onClick(View v) {
                    // A Thread object can be started only once, so ignore further taps right away.
                    detectFaceBtn.setClickable(false);
                    mainHandler.sendEmptyMessage(1);
                    checkFaceThread.start();
                }
            });
        }

        @Override
        public boolean onCreateOptionsMenu(Menu menu) {
            // Inflate the menu; this adds items to the action bar if it is present.
            getMenuInflater().inflate(R.menu.main, menu);
            return true;
        }

        public void initUI(){
            detectFaceBtn = (Button)findViewById(R.id.btn_detect_face);
            imgView = (ImageView)findViewById(R.id.imgview);
            LayoutParams params = imgView.getLayoutParams();
            DisplayMetrics dm = getResources().getDisplayMetrics();
            int w_screen = dm.widthPixels;
            srcImg = BitmapFactory.decodeResource(getResources(), R.drawable.kunlong);
            int h = srcImg.getHeight();
            int w = srcImg.getWidth();
            float r = (float)h/(float)w;              // height-to-width ratio of the bitmap
            params.width = w_screen;                  // view is as wide as the screen
            params.height = (int)(params.width * r);  // height follows the bitmap's ratio
            imgView.setLayoutParams(params);
            imgView.setImageBitmap(srcImg);
        }

        public void initFaceDetect(){
            // FaceDetector only accepts RGB_565 bitmaps; "true" makes the copy mutable so it can be drawn on.
            this.srcFace = srcImg.copy(Config.RGB_565, true);
            int w = srcFace.getWidth();
            int h = srcFace.getHeight();
            Log.i(tag, "Image to analyse: w = " + w + ", h = " + h);
            faceDetector = new FaceDetector(w, h, N_MAX);
            face = new FaceDetector.Face[N_MAX];
        }

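        // Rejects detections whose rectangle covers too few pixels (likely false positives).
        public boolean checkFace(Rect rect){
            int w = rect.width();
            int h = rect.height();
            int s = w*h;
            Log.i(tag, "Face width w = " + w + ", height h = " + h + ", area s = " + s);
            if(s < 10000){
                Log.i(tag, "Invalid face, discarded.");
                return false;
            }
            else{
                Log.i(tag, "Valid face, kept.");
                return true;
            }
        }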

        public Bitmap detectFace(){
            int nFace = faceDetector.findFaces(srcFace, face);
            Log.i(tag, "Faces detected: n = " + nFace);
            for(int i=0; i<nFace; i++){
                Face f = face[i];
                PointF midPoint = new PointF();
                float dis = f.eyesDistance();   // distance between the two eyes
                f.getMidPoint(midPoint);        // midpoint between the eyes
                int dd = (int)(dis);
                // Eye positions, assuming the eyes are level (no head tilt).
                Point eyeLeft = new Point((int)(midPoint.x - dis/2), (int)midPoint.y);
                Point eyeRight = new Point((int)(midPoint.x + dis/2), (int)midPoint.y);
                // Square of side 2*dd centred on the midpoint, used as the face frame.
                Rect faceRect = new Rect((int)(midPoint.x - dd), (int)(midPoint.y - dd), (int)(midPoint.x + dd), (int)(midPoint.y + dd));
                Log.i(tag, "Left eye x = " + eyeLeft.x + ", y = " + eyeLeft.y);
                if(checkFace(faceRect)){
                    Canvas canvas = new Canvas(srcFace);
                    Paint p = new Paint();
                    p.setAntiAlias(true);
                    p.setStrokeWidth(8);
                    p.setStyle(Paint.Style.STROKE);
                    p.setColor(Color.GREEN);
                    canvas.drawCircle(eyeLeft.x, eyeLeft.y, 20, p);
                    canvas.drawCircle(eyeRight.x, eyeRight.y, 20, p);
                    canvas.drawRect(faceRect, p);
                }
            }
            // ImageUtil is the author's own helper (org.yanzi.util); it is not listed in this post.
            ImageUtil.saveJpeg(srcFace);
            Log.i(tag, "Saved");
            // Return the bitmap with the markers drawn on it.
            return srcFace;
        }

        public void showProcessBar(){
            RelativeLayout mainLayout = (RelativeLayout)findViewById(R.id.layout_main);
            progressBar = new ProgressBar(MainActivity.this, null, android.R.attr.progressBarStyleLargeInverse);
            RelativeLayout.LayoutParams params = new RelativeLayout.LayoutParams(ViewGroup.LayoutParams.WRAP_CONTENT, ViewGroup.LayoutParams.WRAP_CONTENT);
            params.addRule(RelativeLayout.ALIGN_PARENT_TOP, RelativeLayout.TRUE);
            params.addRule(RelativeLayout.CENTER_HORIZONTAL, RelativeLayout.TRUE);
            progressBar.setVisibility(View.VISIBLE);
            mainLayout.addView(progressBar, params);
        }
    }

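A few points about the code above:

1. initUI() sets up the UI, mainly the ImageView's aspect ratio. The ImageView's width is set to the screen width, and its height is derived from that width and the height-to-width ratio of srcImg. The Bitmap is then assigned to the ImageView. Once the ImageView has an explicit width and height, the Bitmap is scaled to fit automatically, so there is no need to scale the Bitmap itself.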
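The sizing arithmetic in initUI() can be checked in isolation. The following is a minimal plain-Java sketch, not part of the demo; the class and method names (ImageViewSizing, scaledHeight) are made up for illustration, and no Android classes are involved.

```java
// Hypothetical helper mirroring the arithmetic in initUI(); names are illustrative only.
final class ImageViewSizing {

    /** Height that keeps the bitmap's aspect ratio when the view is screenWidth pixels wide. */
    static int scaledHeight(int screenWidth, int bitmapWidth, int bitmapHeight) {
        if (bitmapWidth <= 0 || bitmapHeight <= 0) {
            throw new IllegalArgumentException("bitmap dimensions must be positive");
        }
        float ratio = (float) bitmapHeight / (float) bitmapWidth; // r = h / w, as in the demo
        return (int) (screenWidth * ratio);                       // truncated, as in the demo
    }

    public static void main(String[] args) {
        // A 640x480 picture shown on a 1080-pixel-wide screen.
        System.out.println(scaledHeight(1080, 640, 480));
    }
}
```

Because the view's ratio matches the bitmap's ratio, the picture fills the view without letterboxing.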

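2. initFaceDetect() initializes the variables that face detection needs. First the Bitmap is converted from its ARGB format to RGB_565. This is the format Android's built-in FaceDetector requires, so the conversion is mandatory:

    this.srcFace = srcImg.copy(Config.RGB_565, true);

Then these two fields are instantiated:

    FaceDetector faceDetector = null;
    FaceDetector.Face[] face;

    faceDetector = new FaceDetector(w, h, N_MAX);
    face = new FaceDetector.Face[N_MAX];

FaceDetector is the class that performs the detection, and face holds the information about each face it finds. N_MAX is the maximum number of faces allowed.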
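One detail the demo does not handle: as far as I recall, the FaceDetector constructor documentation states that the image width must be even. A minimal sketch of the arithmetic follows, assuming you would crop the bitmap to the returned width before constructing the detector (the cropping itself is not shown, and EvenWidth is a hypothetical name).

```java
// Hypothetical helper; only the width arithmetic is shown, not the bitmap cropping.
final class EvenWidth {

    /** Largest even width that is not greater than the given width. */
    static int evenWidth(int width) {
        if (width < 0) {
            throw new IllegalArgumentException("width must not be negative");
        }
        return width & ~1; // clear the lowest bit
    }

    public static void main(String[] args) {
        System.out.println(evenWidth(641));
    }
}
```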

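3. The actual detection happens in the custom method detectFace(). The core call is faceDetector.findFaces(srcFace, face). After it returns, Face f = face[i]; gives each detected face f, float dis = f.eyesDistance(); gives the distance between the two eyes, and f.getMidPoint(midPoint); gives the midpoint between the eyes (not the center of the whole face). The next two statements derive the left and right eye positions, on the assumption that the eyes are level:

    Point eyeLeft = new Point((int)(midPoint.x - dis/2), (int)midPoint.y);
    Point eyeRight = new Point((int)(midPoint.x + dis/2), (int)midPoint.y);

And this one builds the face rectangle:

    Rect faceRect = new Rect((int)(midPoint.x - dd), (int)(midPoint.y - dd), (int)(midPoint.x + dd), (int)(midPoint.y + dd));

Note that the four arguments of Rect are the x and y coordinates of the top-left corner followed by the x and y coordinates of the bottom-right corner.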
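To make the geometry concrete, here is a small plain-Java sketch of the same calculation with int arrays standing in for Point and Rect. FaceGeometry and its methods are illustrative names, not part of the demo or of the Android API.

```java
// Hypothetical stand-in for the Point/Rect arithmetic in detectFace(); no Android classes used.
final class FaceGeometry {

    /** Returns {leftEyeX, rightEyeX, eyeY}, assuming both eyes sit on the same horizontal line. */
    static int[] eyePositions(float midX, float midY, float eyesDistance) {
        return new int[] {
            (int) (midX - eyesDistance / 2),
            (int) (midX + eyesDistance / 2),
            (int) midY
        };
    }

    /** Returns {left, top, right, bottom} of a square with side 2 * eyesDistance centred on the midpoint. */
    static int[] faceRect(float midX, float midY, float eyesDistance) {
        int dd = (int) eyesDistance;
        return new int[] {
            (int) (midX - dd), (int) (midY - dd),
            (int) (midX + dd), (int) (midY + dd)
        };
    }

    public static void main(String[] args) {
        int[] r = faceRect(200f, 150f, 80f);
        System.out.println((r[2] - r[0]) + " x " + (r[3] - r[1]));
    }
}
```

With a midpoint of (200, 150) and an eye distance of 80, the eyes land at x = 160 and x = 240, and the frame is a 160 x 160 square.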

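4. In practice the detector produces false positives. The function checkFace(Rect rect) filters them: a face whose Rect covers too few pixels is treated as invalid. The threshold here is 10000 pixels; a reasonable value can be roughly estimated from the size of the whole image.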
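One way to estimate the threshold from the image size is to express it as a fraction of the image area instead of a fixed pixel count. This is only a sketch of that idea: FaceAreaFilter, isPlausibleFace, and the 0.02 fraction used below are assumptions chosen for illustration, not values taken from the demo.

```java
// Hypothetical variant of checkFace() with a threshold relative to the image area.
final class FaceAreaFilter {

    /** True if the face rectangle covers at least minAreaFraction of the image. */
    static boolean isPlausibleFace(int faceW, int faceH, int imgW, int imgH, double minAreaFraction) {
        long faceArea = (long) faceW * faceH;   // long avoids int overflow on large images
        long imageArea = (long) imgW * imgH;
        return faceArea >= minAreaFraction * imageArea;
    }

    public static void main(String[] args) {
        // 0.02 is an arbitrary illustrative fraction; tune it for your own pictures.
        System.out.println(isPlausibleFace(160, 160, 1000, 800, 0.02));
    }
}
```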

5. To let the user know that detection is in progress, a ProgressBar is added dynamically. The code:

    public void showProcessBar(){
        RelativeLayout mainLayout = (RelativeLayout)findViewById(R.id.layout_main);
        progressBar = new ProgressBar(MainActivity.this, null, android.R.attr.progressBarStyleLargeInverse);
        RelativeLayout.LayoutParams params = new RelativeLayout.LayoutParams(ViewGroup.LayoutParams.WRAP_CONTENT, ViewGroup.LayoutParams.WRAP_CONTENT);
        params.addRule(RelativeLayout.ALIGN_PARENT_TOP, RelativeLayout.TRUE);
        params.addRule(RelativeLayout.CENTER_HORIZONTAL, RelativeLayout.TRUE);
        progressBar.setVisibility(View.VISIBLE);
        mainLayout.addView(progressBar, params);
    }

In truth this ProgressBar does not look great, and a ProgressDialog would work better. The point here is only to show how to add a ProgressBar at runtime.

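6. The program uses the checkFaceThread thread to run detection and mainHandler to update the UI. The way the Thread is constructed deserves a mention: it is modeled on how the Camera source code opens the camera. If a thread only ever needs to run once, this anonymous-subclass style is the most concise choice. If the Thread needs to run again or be rebuilt after it finishes, I do not recommend it; a custom Thread class makes the program logic easier to control. A Thread object can only be started once, which is why the button is made unclickable after the thread finishes: calling start() on it again would crash the app.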
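The start-once restriction can be seen in plain Java, outside Android. The sketch below first shows that a second start() is rejected, then shows one possible alternative for work that must run repeatedly: an ExecutorService. That alternative is my own substitution for the custom Thread class suggested above, and the class and method names are hypothetical.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical demo class; not part of the face-detection project.
final class SingleUseThreadDemo {

    /** Returns true if starting an already finished Thread again throws IllegalThreadStateException. */
    static boolean secondStartFails() {
        Thread t = new Thread(() -> { /* one-shot work would go here */ });
        t.start();
        try {
            t.join();                       // wait until the first run has finished
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
        try {
            t.start();                      // second start on the same Thread object
            return false;
        } catch (IllegalThreadStateException e) {
            return true;
        }
    }

    /** Runs the same task several times on one background thread; returns the number of completed runs. */
    static int runRepeatedly(Runnable work, int times) {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        int completed = 0;
        try {
            for (int i = 0; i < times; i++) {
                executor.submit(work).get(); // block until this run is done
                completed++;
            }
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException("task did not complete", e);
        } finally {
            executor.shutdown();
        }
        return completed;
    }

    public static void main(String[] args) {
        System.out.println("second start rejected: " + secondStartFails());
        System.out.println("runs completed: " + runRepeatedly(() -> { }, 3));
    }
}
```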

The thread as declared in the demo:

    Thread checkFaceThread = new Thread(){
        @Override
        public void run() {
            Bitmap faceBitmap = detectFace();
            mainHandler.sendEmptyMessage(2);
            Message m = new Message();
            m.what = 0;
            m.obj = faceBitmap;
            mainHandler.sendMessage(m);
        }
    };

Original image:

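After detection:

One last point: in my tests, detection fails when the distance between the eyes is less than 100 pixels. If the static image is small and the phone's densityDpi is fairly high, a picture placed in the drawable-hdpi folder may yield no faces at all, while the same test picture placed in drawable-mdpi is detected normally. The reason is that the Bitmap is loaded at a different pixel size depending on which folder it is in.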
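The size difference follows from density scaling. As I understand it, BitmapFactory.decodeResource scales a bitmap by the device density divided by the density of the folder it came from. The sketch below reproduces only that arithmetic in plain Java; DensityScaling and scaledSize are hypothetical names, and the rounding is my approximation of the framework's behavior.

```java
// Hypothetical helper illustrating density scaling; not an Android API.
final class DensityScaling {
    static final int MDPI = 160;  // density of drawable-mdpi
    static final int HDPI = 240;  // density of drawable-hdpi

    /** Approximate pixel size after loading a resource from a folder of folderDensity on a device of deviceDensity. */
    static int scaledSize(int sizePx, int folderDensity, int deviceDensity) {
        return (int) (sizePx * (float) deviceDensity / folderDensity + 0.5f);
    }

    public static void main(String[] args) {
        int deviceDensity = 480; // an example high-density phone
        System.out.println("from mdpi: " + scaledSize(300, MDPI, deviceDensity));
        System.out.println("from hdpi: " + scaledSize(300, HDPI, deviceDensity));
    }
}
```

A 300-pixel-wide picture thus loads larger from drawable-mdpi than from drawable-hdpi on the same phone, so the eyes end up further apart in pixels. If you want the decoded size to be independent of the folder, placing the picture in drawable-nodpi or decoding with BitmapFactory.Options.inScaled set to false are options worth trying.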

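Follow-up posts will cover real-time face detection and face-frame drawing in the Camera preview, and then demos of blink detection and blink-triggered photo capture. Stay tuned. If you feel I put real effort into this blog, please give me your vote.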

CSDN 2013 Blog Star voting:

http://vote.blog.csdn.net/blogstaritem/blogstar2013/yanzi1225627

Download link for this post's demo:

http://download.csdn.net/detail/yanzi1225627/6783575
