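CPM (Convolutional Pose Machines) is the predecessor of OpenPose, CMU's open-source project. At the time of writing it ranks seventh in the single-person track of the MPII benchmark.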

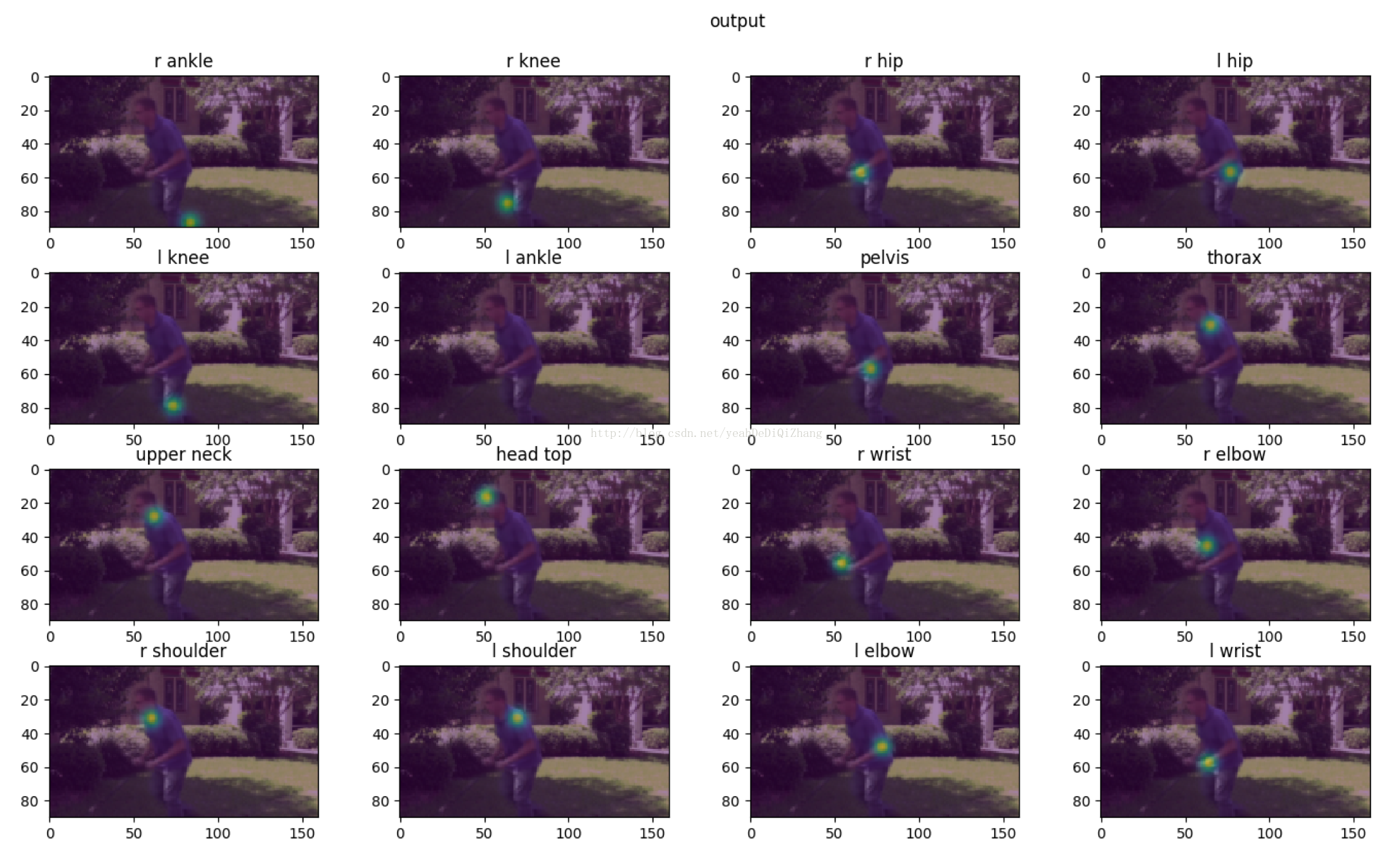

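Pose estimation can be treated as a fully convolutional network (FCN) task: the input is an image of a person, and the output is n heatmaps, one per joint, each giving the response for that joint.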
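
To make the heatmap output concrete, here is a minimal sketch of how joint positions could be read back from n heatmaps by taking the strongest response in each one. The helper name `decode_heatmaps` and the toy 3x3 grids are illustrative assumptions, not part of the original code.

```python
def decode_heatmaps(heatmaps):
    """Return one (row, col, score) tuple per joint heatmap.

    `heatmaps` is a list of 2-D grids (lists of rows), one grid per joint.
    """
    joints = []
    for grid in heatmaps:
        # Scan every cell and keep the location of the strongest response.
        score, row, col = max(
            (value, r, c)
            for r, line in enumerate(grid)
            for c, value in enumerate(line)
        )
        joints.append((row, col, score))
    return joints


# Two toy 3x3 heatmaps standing in for n = 2 joints (hypothetical values).
toy_heatmaps = [
    [[0.0, 0.1, 0.0],
     [0.2, 0.9, 0.1],
     [0.0, 0.1, 0.0]],
    [[0.0, 0.0, 0.7],
     [0.0, 0.1, 0.2],
     [0.0, 0.0, 0.0]],
]
print(decode_heatmaps(toy_heatmaps))
```

In a real pipeline the heatmaps are smaller than the input image, so the decoded coordinates would still need to be scaled back to image resolution.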

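The CPM network structure is shown in the figure below. X denotes the classic VGG-style feature extractor. At the end of each stage, a 1×1 convolution outputs the heatmaps, and a loss is computed against the labels (intermediate supervision).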
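
As a rough illustration of what the 1×1 convolution at the end of a stage does, the sketch below maps every pixel independently from its feature channels to heatmap channels. The function name `conv1x1` and all numbers are hypothetical; the real network uses `tf.nn.conv2d` with a 1×1 kernel.

```python
def conv1x1(feature_map, weights, bias):
    """Apply a 1x1 convolution to an H x W x C_in feature map.

    `weights` has shape C_in x C_out and `bias` has length C_out, so every
    pixel is mapped independently from C_in features to C_out heatmap values.
    """
    c_out = len(bias)
    return [
        [
            [
                sum(pixel[i] * weights[i][o] for i in range(len(pixel))) + bias[o]
                for o in range(c_out)
            ]
            for pixel in row
        ]
        for row in feature_map
    ]


# A 1 x 2 feature map with 3 channels, projected to 2 heatmap channels.
features = [[[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]]
weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
bias = [0.5, -0.5]
heatmaps = conv1x1(features, weights, bias)
```

The spatial size is unchanged; only the channel count goes from C_in to the number of joints.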

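CPM takes one extra input: a center map, which is a Gaussian response. CPM handles single-person pose estimation, so when an image contains several people the center map tells the network where the person currently being processed is located. With this design, CPM can also handle multi-person pose estimation in a top-down fashion. For example, the TensorFlow version published by psycharo first uses a person net at test time to predict the center position of every person in the image, and then predicts the pose for each center position.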
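
A center map of this kind could be generated roughly as follows. This is a sketch under assumptions: the helper name `make_center_map`, the 368x368 input size and `sigma=21.0` are illustrative choices, not values taken from this post.

```python
import math


def make_center_map(height, width, center_row, center_col, sigma=21.0):
    """Build a height x width grid with a Gaussian peak at the person center."""
    return [
        [
            math.exp(-((r - center_row) ** 2 + (c - center_col) ** 2)
                     / (2.0 * sigma ** 2))
            for c in range(width)
        ]
        for r in range(height)
    ]


# Assumed 368 x 368 input with the person centered in the image.
center_map = make_center_map(368, 368, center_row=184, center_col=184)
```

The response is 1.0 at the person's center and decays smoothly with distance, so a second person elsewhere in the image receives a much weaker hint.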

Implementing CPM in TensorFlow:

Step 1: implement the network.

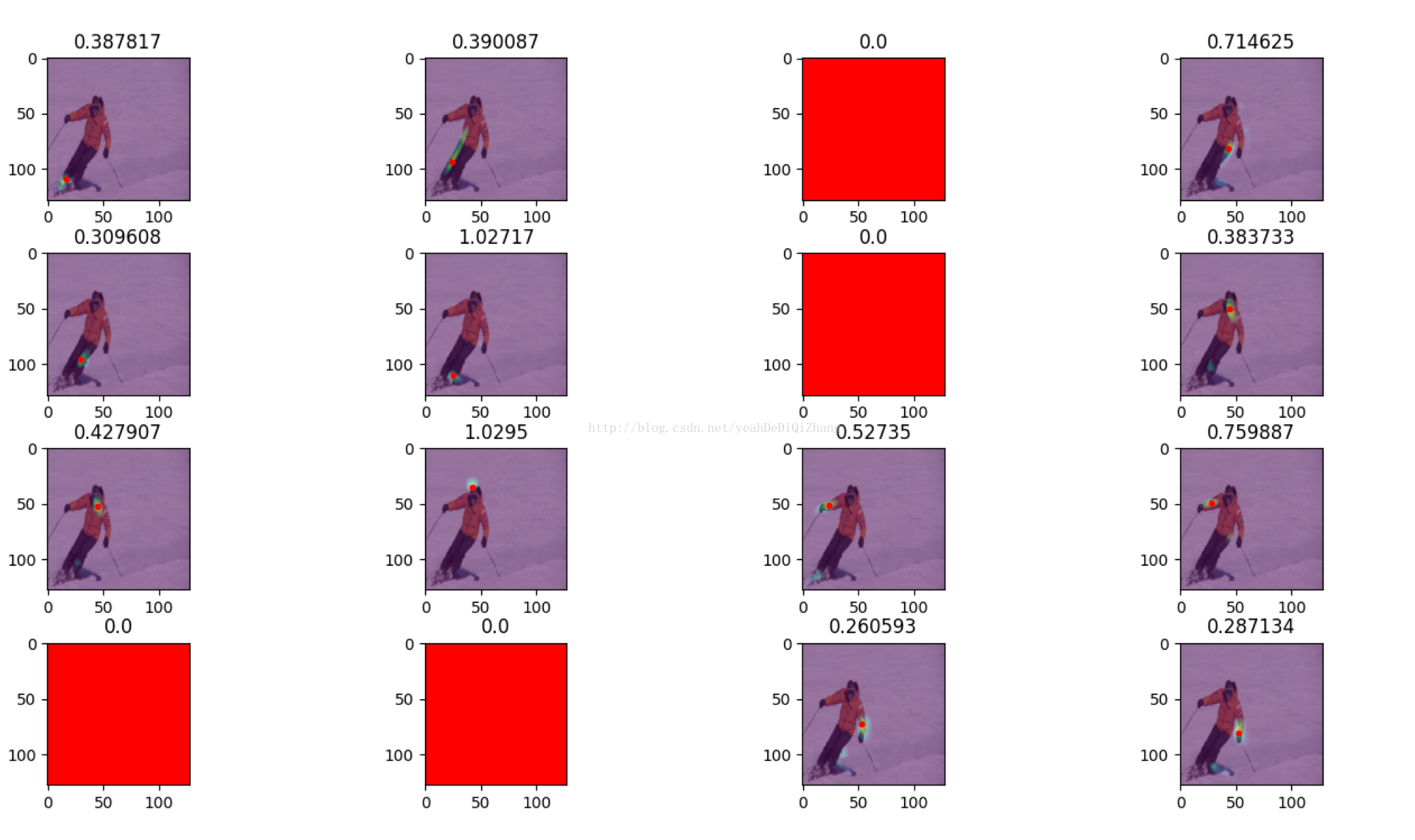

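First define the convolution layer. Note that the heatmap output at the end of each stage should not pass through a ReLU activation. Otherwise training becomes unstable (the original description calls this exploding gradients): a random channel of the output heatmap ends up all zeros, so that joint cannot be found. The figure shows this case, with red dots marking the position of each heatmap's maximum.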

    import tensorflow as tf  # TensorFlow 1.x API


    def conv2d(inputs, shape, strides, padding='SAME', stddev=0.005, activation_fn=True):
        # shape = [kernel_h, kernel_w, in_channels, out_channels]
        kernel = tf.Variable(tf.truncated_normal(shape, dtype=tf.float32, stddev=stddev))
        conv = tf.nn.conv2d(inputs, kernel, strides, padding=padding)
        bias = tf.Variable(tf.truncated_normal([shape[-1]], dtype=tf.float32, stddev=stddev))
        conv = tf.nn.bias_add(conv, bias)
        # Pass activation_fn=False for the final heatmap layer of each stage.
        if activation_fn:
            conv = tf.nn.relu(conv)
        return conv

Next, define the part shared by stages ≥ 2:

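    # Number of joints. The value 14 is inferred from the 47 input channels below:
    # 32 image-feature channels + joints_num heatmaps + 1 center-map channel.
    joints_num = 14


    def muti_conv2d(inputs):
        # Three 11x11 convolutions enlarge the receptive field.
        Mconv1_stage = conv2d(inputs, [11, 11, 47, 128], [1, 1, 1, 1])
        Mconv2_stage = conv2d(Mconv1_stage, [11, 11, 128, 128], [1, 1, 1, 1])
        Mconv3_stage = conv2d(Mconv2_stage, [11, 11, 128, 128], [1, 1, 1, 1])
        # Two 1x1 convolutions produce the heatmaps; the last one has no ReLU.
        Mconv4_stage = conv2d(Mconv3_stage, [1, 1, 128, 128], [1, 1, 1, 1])
        Mconv5_stage = conv2d(Mconv4_stage, [1, 1, 128, joints_num], [1, 1, 1, 1], activation_fn=False)
        return Mconv5_stage


    def cpm(images, center_map):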

        # Downsample the center map by 8 to match the feature-map resolution.
        pool_center_map = tf.nn.avg_pool(center_map, ksize=[1, 9, 9, 1], strides=[1, 8, 8, 1], padding='SAME')

        # stage 1
        conv1_stage1 = conv2d(images, [9, 9, 3, 128], [1, 1, 1, 1])
        pool1_stage1 = tf.nn.max_pool(conv1_stage1, [1, 3, 3, 1], [1, 2, 2, 1], padding='SAME')
        conv2_stage1 = conv2d(pool1_stage1, [9, 9, 128, 128], [1, 1, 1, 1])
        pool2_stage1 = tf.nn.max_pool(conv2_stage1, [1, 3, 3, 1], [1, 2, 2, 1], padding='SAME')
        conv3_stage1 = conv2d(pool2_stage1, [9, 9, 128, 128], [1, 1, 1, 1])
        pool3_stage1 = tf.nn.max_pool(conv3_stage1, [1, 3, 3, 1], [1, 2, 2, 1], padding='SAME')
        conv4_stage1 = conv2d(pool3_stage1, [5, 5, 128, 32], [1, 1, 1, 1])
        conv5_stage1 = conv2d(conv4_stage1, [9, 9, 32, 512], [1, 1, 1, 1])
        conv6_stage1 = conv2d(conv5_stage1, [1, 1, 512, 512], [1, 1, 1, 1])
        conv7_stage1 = conv2d(conv6_stage1, [1, 1, 512, joints_num], [1, 1, 1, 1], activation_fn=False)
        tf.add_to_collection('heatmaps', conv7_stage1)

        # stage 2
        conv1_stage2 = conv2d(images, [9, 9, 3, 128], [1, 1, 1, 1])
        pool1_stage2 = tf.nn.max_pool(conv1_stage2, [1, 3, 3, 1], [1, 2, 2, 1], padding='SAME')
        conv2_stage2 = conv2d(pool1_stage2, [9, 9, 128, 128], [1, 1, 1, 1])
        pool2_stage2 = tf.nn.max_pool(conv2_stage2, [1, 3, 3, 1], [1, 2, 2, 1], padding='SAME')
        conv3_stage2 = conv2d(pool2_stage2, [9, 9, 128, 128], [1, 1, 1, 1])
        pool3_stage2 = tf.nn.max_pool(conv3_stage2, [1, 3, 3, 1], [1, 2, 2, 1], padding='SAME')
        conv4_stage2 = conv2d(pool3_stage2, [5, 5, 128, 32], [1, 1, 1, 1])
        concat_stage2 = tf.concat(axis=3, values=[conv4_stage2, conv7_stage1, pool_center_map])
        Mconv_stage2 = muti_conv2d(concat_stage2)
        tf.add_to_collection('heatmaps', Mconv_stage2)

        # stage 3
        conv1_stage3 = conv2d(pool3_stage2, [5, 5, 128, 32], [1, 1, 1, 1])
        concat_stage3 = tf.concat(axis=3, values=[conv1_stage3, Mconv_stage2, pool_center_map])
        Mconv_stage3 = muti_conv2d(concat_stage3)
        tf.add_to_collection('heatmaps', Mconv_stage3)

        # stage 4
        conv1_stage4 = conv2d(pool3_stage2, [5, 5, 128, 32], [1, 1, 1, 1])
        concat_stage4 = tf.concat(axis=3, values=[conv1_stage4, Mconv_stage3, pool_center_map])
        Mconv_stage4 = muti_conv2d(concat_stage4)
        tf.add_to_collection('heatmaps', Mconv_stage4)

        # stage 5
        conv1_stage5 = conv2d(pool3_stage2, [5, 5, 128, 32], [1, 1, 1, 1])
        concat_stage5 = tf.concat(axis=3, values=[conv1_stage5, Mconv_stage4, pool_center_map])
        Mconv_stage5 = muti_conv2d(concat_stage5)
        tf.add_to_collection('heatmaps', Mconv_stage5)

        # stage 6
        conv1_stage6 = conv2d(pool3_stage2, [5, 5, 128, 32], [1, 1, 1, 1])
        concat_stage6 = tf.concat(axis=3, values=[conv1_stage6, Mconv_stage5, pool_center_map])
        Mconv_stage6 = muti_conv2d(concat_stage6)
        return Mconv_stage6
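
The shapes in the network above can be checked with a little arithmetic. The sketch below assumes a 368x368 input (not stated in the post) and uses the fact that a layer with `padding='SAME'` outputs `ceil(size / stride)` pixels per side. The helper names are hypothetical.

```python
import math


def same_out_size(size, stride):
    """Spatial size after a conv/pool layer with padding='SAME'."""
    return math.ceil(size / stride)


def feature_size(input_size, strides=(2, 2, 2)):
    """Size after the three stride-2 max-pool layers of the feature extractor."""
    for stride in strides:
        input_size = same_out_size(input_size, stride)
    return input_size


input_size = 368                        # assumed input resolution
pooled = feature_size(input_size)       # three stride-2 pools: 368 -> 184 -> 92 -> 46
center = same_out_size(input_size, 8)   # avg_pool on the center map with stride 8
joints_num = 14                         # implied by the 47 input channels
concat_channels = 32 + joints_num + 1   # image features + heatmaps + center map

print(pooled, center, concat_channels)
```

The pooled features and the pooled center map end up with the same spatial size, which is what lets `tf.concat` stack them along the channel axis. The channel count of 47 matches the first kernel in `muti_conv2d`.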

Step 2: define the loss.

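    # read_and_decode is the input-pipeline helper for your TFRecord files (not shown here).
    image_batch, heatmap_batch, center_map_batch = read_and_decode(['your tfrecord path'])
    output = cpm(image_batch, center_map_batch)
    # Loss on the final (stage 6) heatmaps.
    loss = tf.nn.l2_loss(heatmap_batch - output)
    # Intermediate supervision: losses on the stage 1-5 heatmaps collected in cpm().
    interm_loss = tf.reduce_sum(tf.stack([tf.nn.l2_loss(heatmap_batch - o) for o in tf.get_collection('heatmaps')]))
    total_loss = loss + interm_loss
    optimizer = tf.train.AdamOptimizer(1e-4).minimize(total_loss)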
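
To show what this loss adds up, here is a small pure-Python sketch of the same computation on toy data. `tf.nn.l2_loss(t)` returns half the sum of squares of `t`; the helper names and the flattened three-value "heatmaps" are illustrative assumptions.

```python
def l2_loss(target, prediction):
    """Half the sum of squared differences, like tf.nn.l2_loss(target - prediction)."""
    return sum((t - p) ** 2 for t, p in zip(target, prediction)) / 2.0


def total_loss(target, stage_outputs):
    """Sum the L2 loss over every stage output (intermediate and final)."""
    return sum(l2_loss(target, output) for output in stage_outputs)


# Flattened toy heatmaps: one target and the outputs of three stages.
target = [0.0, 1.0, 0.0]
stage_outputs = [
    [0.5, 0.5, 0.0],   # early stage, rough prediction
    [0.0, 0.5, 0.0],   # later stage, closer
    [0.0, 1.0, 0.0],   # final stage, exact
]
print(total_loss(target, stage_outputs))
```

Because every stage contributes its own term, the early stages receive a direct training signal instead of relying only on gradients propagated back from the last stage.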

References:

- http://blog.csdn.net/shenxiaolu1984/article/details/51094959

- http://blog.csdn.net/zimenglan_sysu/article/details/52077138

- http://blog.csdn.net/layumi1993/article/details/51854722

- http://hypjudy.github.io/2017/05/04/pose-estimation/
