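Comparing kubeadm and binary installation

A cluster installed with kubeadm does not make etcd highly available by default, so that has to be set up by hand. Its certificates are also valid for only one year by default, so the validity period has to be adjusted manually. Once those two points are dealt with, a kubeadm cluster is fairly stable. kubeadm runs the components in pods, which gives automatic failure recovery, but because so much is automated it is less helpful than a binary install for understanding each component. A binary install runs each service under systemd, which makes debugging easier, but it is less convenient to operate than kubeadm.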

Node plan

| IP address | Role |
| --- | --- |
| 192.168.167.128 | master1 |
| 192.168.167.129 | master2 + node1 |
| 192.168.167.130 | master3 + node2 |

Component ports

| Component | Default ports |
| --- | --- |
| API server | 8080 (HTTP), 6443 (HTTPS) |
| controller manager | 10252 |
| scheduler | 10251 |
| kubelet | 10250, 10255 (read-only) |
| etcd | 2379 (client access), 2380 (traffic between etcd cluster members) |
| Cluster DNS service | 53/UDP, 53/TCP |

Preparation

Add hosts entries and set the hostname

[root@master1 ~]#vim /etc/hosts

192.168.167.128 master1

192.168.167.129 master2

192.168.167.130 master3

192.168.167.129 node1

192.168.167.130 node2

[root@master1 ~]# vim /etc/hostname

master1

Disable the firewall and swap, and enable IP forwarding

systemctl stop firewalld && systemctl disable firewalld

echo "vm.swappiness = 0">> /etc/sysctl.conf

echo "net.ipv4.ip_forward=1" >> /etc/sysctl.conf

sysctl -p
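Setting vm.swappiness only discourages swapping; it does not turn swap off. The sketch below shows one way to switch swap off and keep it off after a reboot. It assumes the swap entry in /etc/fstab is an uncommented line with a whitespace-delimited `swap` field; the function name `disable_swap_in_fstab` is made up for this illustration.

```shell
#!/usr/bin/env bash
# Comment out every active swap entry in an fstab-style file.
# Usage: disable_swap_in_fstab /etc/fstab
disable_swap_in_fstab() {
    local fstab="$1"
    # Prefix uncommented lines that contain a "swap" field with '#'.
    # sed keeps a copy of the original file next to it with a .bak suffix.
    sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# On a real node, as root:
#   swapoff -a                          # turn swap off immediately
#   disable_swap_in_fstab /etc/fstab    # keep it off after the next reboot
```

Running the function against a copy of /etc/fstab first is a cheap way to check that the pattern only touches the intended line.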

Comment out the swap entry in /etc/fstab, then disable SELinux:

setenforce 0
vim /etc/selinux/config    # set SELINUX=disabled

Kernels older than 4.18 need to be upgraded.
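Before upgrading, it can help to check whether the running kernel really is older than 4.18. The following is a minimal sketch that compares version strings with `sort -V` (GNU coreutils); the helper names are illustrative and not part of any tool used in this article.

```shell
#!/usr/bin/env bash
# Return 0 (true) if version $1 is strictly older than version $2.
version_lt() {
    [ "$1" != "$2" ] &&
        [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# Strip the release suffix, e.g. 3.10.0-1160.el7.x86_64 -> 3.10.0
kernel_base_version() {
    printf '%s\n' "${1%%-*}"
}

# Return 0 if the given kernel release (default: uname -r) is older than 4.18.
kernel_needs_upgrade() {
    local release="${1:-$(uname -r)}"
    version_lt "$(kernel_base_version "$release")" "4.18"
}

if kernel_needs_upgrade; then
    echo "kernel $(uname -r) is older than 4.18: upgrade needed"
else
    echo "kernel $(uname -r) is 4.18 or newer"
fi
```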

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm

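# Install the latest mainline kernel:
yum --enablerepo=elrepo-kernel install kernel-ml kernel-ml-devel -y

# List the kernels available in the boot menu:
awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg

# Select the newest kernel; 0 is the index shown on the left of the list printed above
grub2-set-default 0

# Regenerate the grub configuration
grub2-mkconfig -o /boot/grub2/grub.cfg

# Reboot
reboot

Deployment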

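Overview

In a production environment the Master must be highly available and access to it must be secured. At a minimum this means:

1. Run kube-apiserver, kube-controller-manager, and kube-scheduler as multiple instances on at least 3 Master nodes.

2. Enable CA-based HTTPS on the Master.

3. Deploy etcd as a cluster of at least 3 nodes.

4. Enable CA-based HTTPS on the etcd cluster.

5. Enable the RBAC authorization mode on the Master.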

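Creating the CA root certificate

If your organization runs a central certificate authority, use the CA certificate it issues. If not, you can issue a self-signed CA certificate to complete the security setup.

The certificates for both etcd and Kubernetes are signed by a CA root certificate. This article uses one CA root certificate for both Kubernetes and etcd.

CA certificates can be created with tools such as openssl, easyrsa, or cfssl. With openssl, creating a CA root certificate produces a private key file, ca.key, and a certificate file, ca.crt. The steps in this article use cfssl, which produces the equivalent files ca-key.pem and ca.pem.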
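For reference, an openssl-based root CA can be sketched as follows. This is an illustration under assumptions, not the procedure used in the rest of this article: the output directory, the common name, and the function name `make_root_ca` are placeholders, and the default validity of 1825 days simply mirrors the 43800h expiry used in the cfssl configuration.

```shell
#!/usr/bin/env bash
# Create a self-signed CA: a private key (ca.key) and a certificate (ca.crt).
# $1 = output directory, $2 = CA common name, $3 = validity in days (optional)
make_root_ca() {
    local dir="$1" cn="$2" days="${3:-1825}"
    mkdir -p "$dir"
    # 2048-bit RSA private key for the CA
    openssl genrsa -out "$dir/ca.key" 2048
    # Self-signed X.509 certificate for that key
    openssl req -x509 -new -key "$dir/ca.key" \
        -subj "/CN=$cn" -days "$days" -out "$dir/ca.crt"
}

# Example (hypothetical path and name):
#   make_root_ca /root/pki my-root-ca
#   openssl x509 -in /root/pki/ca.crt -noout -subject -dates
```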

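I. Deploying a secure, highly available etcd cluster

etcd is the primary datastore of a Kubernetes cluster, so it has to be installed and started before any of the Kubernetes services. This article uses etcd-v3.4.13-linux-amd64.tar.gz.

1. Download the etcd binaries and configure the systemd service

Download the release from the Releases page of the etcd-io/etcd repository on GitHub, or from the newbe mirror for faster downloads inside China.

Unpacking the archive yields the etcd and etcdctl binaries; copy them to /usr/bin.

Then run etcd as a systemd service by creating the unit file /usr/lib/systemd/system/etcd.service, for example: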

[Unit]

Description=etcd key-values store

Documentation=https://github.com/etcd-io/etcd

After=network.target

[Service]

EnvironmentFile=/etc/etcd/etcd.conf

ExecStart=/usr/bin/etcd

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

2. Create the etcd CA certificates

1 Download the cfssl tools

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/local/bin/cfssl
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/local/bin/cfssljson
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -O /usr/local/bin/cfssl-certinfo
chmod +x /usr/local/bin/cfssl*

2 Create the CA root certificate

mkdir /etc/ssl/etcd

Generate the default configuration file and certificate signing request file:

cfssl print-defaults config > ca-config.json
cfssl print-defaults csr > ca-csr.json

Edit the CA configuration file (ca-config.json):

{
  "signing": {
    "default": {
      "expiry": "43800h"
    },
    "profiles": {
      "server": {
        "expiry": "43800h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth"
        ]
      },
      "client": {
        "expiry": "43800h",
        "usages": [
          "signing",
          "key encipherment",
          "client auth"
        ]
      },
      "kubernetes": {
        "expiry": "43800h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      },
      "etcd": {
        "expiry": "43800h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}

Edit the CA signing request file (ca-csr.json):

[root@master3 pki]# cat ca-csr.json

{
  "CN": "etcd",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Guangdong",
      "L": "Guangzhou",
      "O": "etcd",
      "OU": "System"
    }
  ]
}

Generate the CA certificate:

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

This command creates three files in the current directory: ca.csr, ca-key.pem, and ca.pem.

3 Create the etcd certificate

Create the etcd certificate signing request, etcd-csr.json:

[root@master3 pki]# cat etcd-csr.json

{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "192.168.167.128",
    "192.168.167.129",
    "192.168.167.130"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Guangdong",
      "L": "Guangzhou",
      "O": "etcd",
      "OU": "System"
    }
  ]
}

The three 192.168.167.x addresses in hosts are the three etcd nodes. They are listed together because all nodes share one certificate.

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=etcd etcd-csr.json | cfssljson -bare etcd

This produces etcd.pem and etcd-key.pem, which all three nodes share.

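Appendix: meaning of the Subject fields in a digital certificate

The subject of a typical digital certificate contains the following fields:

Common Name (CN): for an SSL certificate, usually the site's domain name; for a code-signing certificate, the name of the applying organization; for a client certificate, the name of the applicant.

Organization Name (O): the organization or company name. For SSL and code-signing certificates this is the applying organization; for an organizational client certificate it is the organization the applicant belongs to.

Organizational Unit Name (OU): the department within the organization.

Location of the applying organization:

Locality (L): the city.

State or Province (ST): the state or province.

Country (C): the two-letter country code only, for example CN for China.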

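3. etcd configuration parameters

Next, configure the 3 etcd nodes. An etcd node can be configured through startup flags, environment variables, or a configuration file. This example uses environment variables written to /etc/etcd/etcd.conf, which the systemd service reads. A sample /etc/etcd/etcd.conf follows; on each host, change the node name and the IP addresses to that host's own values: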

ETCD_NAME=etcd1
ETCD_DATA_DIR=/data/etcd
ETCD_CERT_FILE=/etc/ssl/etcd/etcd.pem
ETCD_KEY_FILE=/etc/ssl/etcd/etcd-key.pem
ETCD_TRUSTED_CA_FILE=/etc/ssl/etcd/ca.pem
ETCD_CLIENT_CERT_AUTH=true
ETCD_LISTEN_CLIENT_URLS=https://192.168.167.128:2379
ETCD_ADVERTISE_CLIENT_URLS=https://192.168.167.128:2379
ETCD_PEER_CERT_FILE=/etc/ssl/etcd/etcd.pem
ETCD_PEER_KEY_FILE=/etc/ssl/etcd/etcd-key.pem
ETCD_PEER_TRUSTED_CA_FILE=/etc/ssl/etcd/ca.pem
ETCD_PEER_CLIENT_CERT_AUTH=true
ETCD_LISTEN_PEER_URLS=https://192.168.167.128:2380
ETCD_INITIAL_ADVERTISE_PEER_URLS=https://192.168.167.128:2380
ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster
ETCD_INITIAL_CLUSTER="etcd1=https://192.168.167.128:2380,etcd2=https://192.168.167.129:2380,etcd3=https://192.168.167.130:2380"
# Use "new" when bootstrapping the cluster; change it to "existing" afterwards
ETCD_INITIAL_CLUSTER_STATE=new
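Because only the member name and the host IP differ between the three nodes, the file can be generated rather than edited by hand. The sketch below is one way to do that; `render_etcd_conf` is a hypothetical helper, and the certificate paths, data directory, and member list are copied from the sample above.

```shell
#!/usr/bin/env bash
# Print an etcd.conf for one member. $1 = member name, $2 = that member's IP.
# The member list in ETCD_INITIAL_CLUSTER is fixed to the three nodes of this article.
render_etcd_conf() {
    local name="$1" ip="$2"
    local certs=/etc/ssl/etcd
    cat <<EOF
ETCD_NAME=${name}
ETCD_DATA_DIR=/data/etcd
ETCD_CERT_FILE=${certs}/etcd.pem
ETCD_KEY_FILE=${certs}/etcd-key.pem
ETCD_TRUSTED_CA_FILE=${certs}/ca.pem
ETCD_CLIENT_CERT_AUTH=true
ETCD_LISTEN_CLIENT_URLS=https://${ip}:2379
ETCD_ADVERTISE_CLIENT_URLS=https://${ip}:2379
ETCD_PEER_CERT_FILE=${certs}/etcd.pem
ETCD_PEER_KEY_FILE=${certs}/etcd-key.pem
ETCD_PEER_TRUSTED_CA_FILE=${certs}/ca.pem
ETCD_PEER_CLIENT_CERT_AUTH=true
ETCD_LISTEN_PEER_URLS=https://${ip}:2380
ETCD_INITIAL_ADVERTISE_PEER_URLS=https://${ip}:2380
ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster
ETCD_INITIAL_CLUSTER="etcd1=https://192.168.167.128:2380,etcd2=https://192.168.167.129:2380,etcd3=https://192.168.167.130:2380"
ETCD_INITIAL_CLUSTER_STATE=new
EOF
}

# Example, run on the second node:
#   render_etcd_conf etcd2 192.168.167.129 > /etc/etcd/etcd.conf
```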

4. Start the etcd cluster

With the systemd configuration in place, start the etcd service on each of the 3 hosts and enable it at boot:

# systemctl restart etcd && systemctl enable etcd

Then use the etcdctl command-line client, passing the CA certificate together with the client certificate and key, to run etcdctl endpoint health against the cluster and verify that it is healthy:

etcdctl --cacert=/etc/ssl/etcd/ca.pem --cert=/etc/ssl/etcd/etcd.pem --key=/etc/ssl/etcd/etcd-key.pem --endpoints=https://192.168.167.128:2379,https://192.168.167.129:2379,https://192.168.167.130:2379 endpoint health

endpoint status --cluster can be used for the same check.

member list shows the member IDs.

To replace a member, remove it with member remove <id>, delete its data directory, then add it back with member add <name> --peer-urls=https://xxxx:2380.
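Typing the TLS flags and the endpoint list for every call gets tedious. A small wrapper, sketched below, keeps them in one place; `etcd_endpoints` and `etcdctl_tls` are names invented for this example, and the certificate directory and IP addresses are the ones used in this article.

```shell
#!/usr/bin/env bash
ETCD_CERT_DIR=/etc/ssl/etcd
ETCD_IPS="192.168.167.128 192.168.167.129 192.168.167.130"

# Turn a list of IPs into a comma-separated list of client URLs.
etcd_endpoints() {
    local ip urls=""
    for ip in "$@"; do
        urls="${urls:+$urls,}https://${ip}:2379"
    done
    printf '%s\n' "$urls"
}

# Run etcdctl (v3 API) with the TLS flags and endpoints filled in.
etcdctl_tls() {
    # $ETCD_IPS is left unquoted on purpose so it splits into one argument per IP.
    ETCDCTL_API=3 etcdctl \
        --cacert="$ETCD_CERT_DIR/ca.pem" \
        --cert="$ETCD_CERT_DIR/etcd.pem" \
        --key="$ETCD_CERT_DIR/etcd-key.pem" \
        --endpoints="$(etcd_endpoints $ETCD_IPS)" \
        "$@"
}

# Examples:
#   etcdctl_tls endpoint health
#   etcdctl_tls endpoint status --cluster
#   etcdctl_tls member list
```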

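II. Deploying a secure, highly available Kubernetes Master cluster

First download the binaries for each component from the official Kubernetes GitHub repository (https://github.com/kubernetes/kubernetes): on the Releases page find the version you need, click its CHANGELOG link, and follow it to the prebuilt Server binaries and Node binaries.

The services deployed on a Kubernetes Master node are etcd, kube-apiserver, kube-controller-manager, and kube-scheduler.

The services deployed on a worker node are docker, kubelet, and kube-proxy.

Copy the Kubernetes binaries to /usr/bin, then create a systemd unit file for each service under /usr/lib/systemd/system. That completes the software installation.

1. Deploy the kube-apiserver service

Set up the CA-signed certificate that kube-apiserver needs. Prepare the request file used to generate the x509 v3 certificate (a master_ssl.cnf file when using openssl; with cfssl, the JSON request below). 192.168.167.139 is the VIP that will front the apiservers later.

# Create the certificate request file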

[root@master1 etcd]# cat apiserver.json

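{
  "CN": "k8s-apiserver",
  "hosts": [
    "127.0.0.1",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local",
    "192.168.167.128",
    "192.168.167.129",
    "192.168.167.130",
    "192.168.167.139",
    "master1",
    "master2",
    "master3"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "k8s",
      "OU": "system"
    }
  ]
}

The kubernetes* entries are the DNS names through which the apiserver is reached from inside the cluster and should always be present. The IP addresses are the trusted addresses you choose yourself, here the three masters plus the VIP. JSON does not allow comments, so these notes must not be placed inside the file.

Generate the certificate:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes apiserver.json | cfssljson -bare apiserver

Distribute the certificate files apiserver.pem and apiserver-key.pem to the other nodes.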

Create the systemd unit for kube-apiserver shown below, create the log directory, then start and enable the service:

mkdir -p /var/log/kubernetes && systemctl start kube-apiserver && systemctl enable kube-apiserver

[root@master1 kubernetes]# vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/bin/kube-apiserver \
  --log-dir=/var/log/kubernetes \
  --v=2 \
  --logtostderr=false \
  --allow-privileged=true \
  --bind-address=0.0.0.0 \
  --secure-port=6443 \
  --insecure-port=0 \
  --service-cluster-ip-range=10.0.0.0/16 \
  --service-node-port-range=30000-32767 \
  --etcd-servers=https://192.168.167.128:2379,https://192.168.167.129:2379,https://192.168.167.130:2379 \
  --etcd-cafile=/etc/ssl/etcd/ca.pem \
  --etcd-certfile=/etc/ssl/etcd/etcd.pem \
  --etcd-keyfile=/etc/ssl/etcd/etcd-key.pem \
  --client-ca-file=/etc/ssl/etcd/ca.pem \
  --tls-cert-file=/etc/ssl/etcd/apiserver.pem \
  --tls-private-key-file=/etc/ssl/etcd/apiserver-key.pem \
  --kubelet-client-certificate=/etc/ssl/etcd/apiserver.pem \
  --kubelet-client-key=/etc/ssl/etcd/apiserver-key.pem
Restart=on-failure
RestartSec=10s
LimitNOFILE=65535

[Install]
WantedBy=multi-user.target

# The parameters are explained in the figure below:

2 Create the client CA certificate

kube-controller-manager, kube-scheduler, kubelet, and kube-proxy all connect to kube-apiserver as clients, so a CA-signed client certificate has to be created for them.

[root@master1 etcd]# vim /etc/ssl/etcd/admin-csr.json

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:masters",

"OU": "system"

}

]

}

生成证书命令,并分发到其他机器

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

#创建客户端连接 kube-apiserve 服务所需的 kubeconfig 配置文件,并分发

本节为 kube -controller-manager 、 kube-scheduler 、 kubelet 和 kube-proxy 服务统一创建

一 个 kubeconfig 文件作为连接 kube-apiserver 服务的配置文件,后续也作为 kubectl 命令 行

工具连接 kube-apiserver 服务的配置文件 。

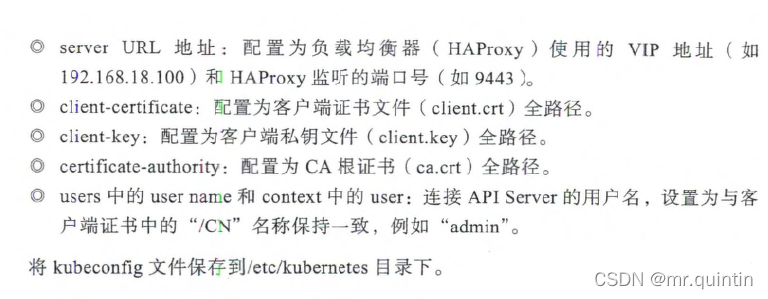

在 Kubecon fig 文件中主要设置访问 kube- api server 的 URL 地址及所需 CA 证书等的相

关参数:

[root@master1 etcd]# vim /etc/kubernetes/kubeconfig

apiVersion: v1
kind: Config
clusters:
- name: default
  cluster:
    server: https://192.168.167.139:9443
    certificate-authority: /etc/ssl/etcd/ca.pem
users:
- name: admin
  user:
    client-certificate: /etc/ssl/etcd/admin.pem
    client-key: /etc/ssl/etcd/admin-key.pem
contexts:
- context:
    cluster: default
    user: admin
  name: default
current-context: default

The settings are explained in the figure below:
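The same file can also be produced with `kubectl config` subcommands instead of being written by hand, which avoids YAML indentation mistakes. The following is a sketch under the assumptions of this article (cluster, user, and context names `default`/`admin`, certificates under /etc/ssl/etcd); `write_kubeconfig` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Write a kubeconfig equivalent to the hand-written file, using kubectl itself.
# $1 = output path, $2 = API server URL, $3 = directory holding the certificates
write_kubeconfig() {
    local out="$1" server="$2" certs="$3"
    # Cluster entry: API server URL and the CA that signed its certificate
    kubectl config set-cluster default \
        --server="$server" \
        --certificate-authority="$certs/ca.pem" \
        --kubeconfig="$out"
    # User entry: the admin client certificate and key
    kubectl config set-credentials admin \
        --client-certificate="$certs/admin.pem" \
        --client-key="$certs/admin-key.pem" \
        --kubeconfig="$out"
    # Context tying the cluster and the user together, then select it
    kubectl config set-context default \
        --cluster=default --user=admin \
        --kubeconfig="$out"
    kubectl config use-context default --kubeconfig="$out"
}

# Example:
#   write_kubeconfig /etc/kubernetes/kubeconfig https://192.168.167.139:9443 /etc/ssl/etcd
```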

3 Deploy the kube-controller-manager service

Create the unit file below, distribute it to the other masters, then start and enable the service: systemctl start kube-controller-manager && systemctl enable kube-controller-manager

[root@master1 log]# vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/bin/kube-controller-manager \
  --log-dir=/var/log/kubernetes \
  --v=2 \
  --logtostderr=false \
  --kubeconfig=/etc/kubernetes/kubeconfig \
  --leader-elect=true \
  --service-cluster-ip-range=10.0.0.0/16 \
  --service-account-private-key-file=/etc/ssl/etcd/apiserver-key.pem \
  --root-ca-file=/etc/ssl/etcd/ca.pem
Restart=on-failure
RestartSec=10s
LimitNOFILE=65535

[Install]
WantedBy=multi-user.target

4 Deploy kube-scheduler

Create the unit file below, distribute it to the other machines, then start and enable the service: systemctl start kube-scheduler.service && systemctl enable kube-scheduler.service

[root@master1 system]# vim kube-scheduler.service

[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/bin/kube-scheduler \
  --log-dir=/var/log/kubernetes \
  --v=2 \
  --logtostderr=false \
  --kubeconfig=/etc/kubernetes/kubeconfig \
  --leader-elect=true
Restart=on-failure
RestartSec=10s
LimitNOFILE=65535

[Install]
WantedBy=multi-user.target

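5 High-availability architecture for the apiserver

nginx sits in front of the apiservers. Remember to make it start automatically, for example with a cron entry that restarts it whenever it is not running:

crontab -e

* * * * * if [ "$(ps -ef | grep nginx | grep -v grep | wc -l)" -lt 1 ]; then cd /data/nginx/ && ./nginx; fi

Install keepalived and enable it at boot:

yum install keepalived -y
systemctl enable keepalived.service

keepalived configuration file: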

[root@master1 nginx]# vim /etc/keepalived/keepalived.conf

############## MASTER configuration ##############
! master Configuration File for keepalived
vrrp_script check_nginx {
    script "/data/check_nginx.sh"
}

vrrp_instance VI_1 {
    state MASTER
    interface ens33          # host network interface
    virtual_router_id 51     # VRRP router ID, unique per instance
    priority 100             # priority; the backup server uses 90
    advert_int 1             # VRRP advertisement interval, 1 second by default
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.167.139/24
    }
    track_script {
        check_nginx
    }
}

############## BACKUP configuration ##############
! backup Configuration File for keepalived
vrrp_script check_nginx {
    script "/data/check_nginx.sh"
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    virtual_router_id 51
    priority 90
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.167.139/24
    }
    track_script {
        check_nginx
    }
}

To verify, run systemctl stop keepalived on the MASTER and check that the VIP moves to the BACKUP.
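The keepalived configuration refers to /data/check_nginx.sh, whose contents are not given. keepalived treats exit status 0 from a tracked script as healthy and any other status as failed, so a minimal version only has to report whether nginx is running. The sketch below is an assumption about what such a script could look like, not the author's script; it relies on `pgrep` from procps.

```shell
#!/usr/bin/env bash
# Sketch of a health check for keepalived's vrrp_script.

# Return 0 if at least one process with exactly this name exists.
process_running() {
    pgrep -x "$1" >/dev/null 2>&1
}

# Return 0 while nginx is running, 1 otherwise.
check_nginx() {
    if process_running nginx; then
        return 0
    fi
    return 1
}

# When saved as /data/check_nginx.sh, finish the script with:
#   check_nginx
#   exit $?
```

Make the script executable (chmod +x /data/check_nginx.sh), otherwise keepalived cannot run it.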

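III. Deploying the Node services

Each Node needs Docker, kubelet, and kube-proxy. After the Node has joined the Kubernetes cluster, management add-ons such as the CNI network plugin and the DNS plugin also have to be deployed.

1 Deploy the kubelet service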

[root@master2 system]# vim kubelet.service

[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/bin/kubelet \
  --log-dir=/var/log/kubernetes \
  --v=2 \
  --logtostderr=false \
  --kubeconfig=/etc/kubernetes/kubeconfig \
  --config=/etc/kubernetes/kubelet.config \
  --hostname-override=192.168.167.129 \
  --network-plugin=cni
Restart=on-failure
RestartSec=10s
LimitNOFILE=65535

[Install]
WantedBy=multi-user.target

A sample kubelet.config configuration file:

[root@master2 system]# vim /etc/kubernetes/kubelet.config

kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
cgroupDriver: cgroupfs
clusterDNS: [ "192.168.167.100" ]
clusterDomain: cluster.local
authentication:
  anonymous:
    enabled: true

systemctl start kubelet.service && systemctl enable kubelet.service

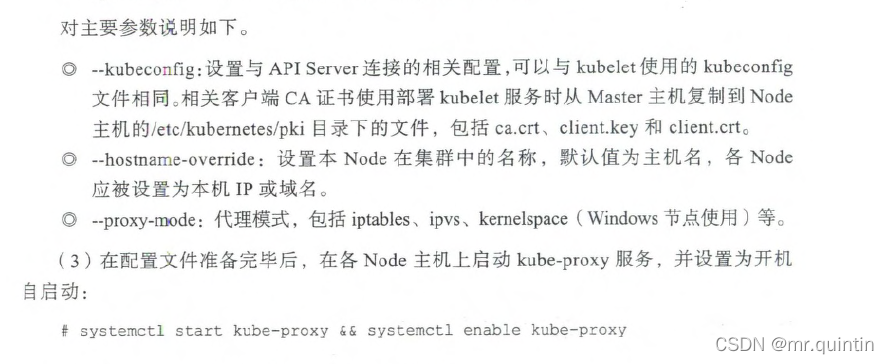

2 Deploy the kube-proxy service

[root@master2 system]# vim /usr/lib/systemd/system/kube-proxy.service

[Unit]
Description=Kubernetes Kube-Proxy
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/bin/kube-proxy \
  --log-dir=/var/log/kubernetes \
  --v=2 \
  --logtostderr=false \
  --kubeconfig=/etc/kubernetes/kubeconfig \
  --hostname-override=192.168.167.129 \
  --proxy-mode=ipvs
Restart=on-failure
RestartSec=10s
LimitNOFILE=65535

[Install]
WantedBy=multi-user.target

systemctl start kube-proxy.service && systemctl enable kube-proxy.service

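3 Verify the Nodes from a Master with kubectl

kubectl --kubeconfig=/etc/kubernetes/kubeconfig get nodes

# Tip: with a symlink, ln -s /etc/kubernetes/kubeconfig ~/.kube/config, the --kubeconfig flag is no longer needed

Each Node shows the status "NotReady". This is because no CNI network plugin has been deployed yet, so container networking cannot be set up.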
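To watch the nodes move from NotReady to Ready once the network plugin is installed, the node list can be filtered. The sketch below is a small illustrative helper, `not_ready_nodes` (a made-up name), that reads the output of `kubectl get nodes --no-headers` and prints the nodes whose STATUS column does not start with Ready.

```shell
#!/usr/bin/env bash
# Read `kubectl get nodes --no-headers` output on stdin and print the names
# of nodes whose STATUS column (second field) does not start with "Ready".
not_ready_nodes() {
    awk '$2 !~ /^Ready/ { print $1 }'
}

# Example:
#   kubectl --kubeconfig=/etc/kubernetes/kubeconfig get nodes --no-headers | not_ready_nodes
```

An empty result means every node reports Ready.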

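As with a cluster created by kubeadm, a CNI plugin such as Calico can be deployed in a single step. See the next section for details.

Note: besides CA certificate authentication, Kubernetes also provides a simple authentication method based on HTTP tokens.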

IV. Deploying the Calico network plugin

# Download the Calico manifest
wget https://docs.projectcalico.org/manifests/calico-etcd.yaml

After editing for this cluster, the manifest looks like this:

---
# Source: calico/templates/calico-etcd-secrets.yaml
# The following contains k8s Secrets for use with a TLS enabled etcd cluster.
# For information on populating Secrets, see http://kubernetes.io/docs/user-guide/secrets/
apiVersion: v1
kind: Secret
type: Opaque
metadata:
  name: calico-etcd-secrets
  namespace: kube-system

data:

# Populate the following with etcd TLS configuration if desired, but leave blank if

# not using TLS for etcd.

# The keys below should be uncommented and the values populated with the base64

# encoded contents of each file that would be associated with the TLS data.

# Example command for encoding a file contents: cat <file> | base64 -w 0

# etcd-key: null

# etcd-cert: null

# etcd-ca: null

etcd-key: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb3dJQkFBS0NBUUVBMmd0cG01emhNVUxBMzJnc2RLUlhTUXBjL25jeEZXU0RzWkJMWXI1dlNzSzg1Yk15CmN3NnNVVFFMNVJPOGRXTGZxcHJFSmovcHl2bURPdGI3WXQ2NTJjUGpMMS90b0VxVDJIUjdlbGtxOEVIVDgwM3EKRFh1bkl3M3ZrYXpsZUR5dDArN2toYXUrekR6UCtjZXlXTjR6L01WdUJoSTVlYnAxRlBQOVFqemI0M3Y2dTdKbAorOERmK2ZZb05YNVZscDFpL1R2MGVjeit6Y0o1cGR2cUtlU2g5a3ZaNmtEQjQzbUR3NVV2T0wzRyt4dDVOWklFClhlUFB1NzQvWjBJcWcyYW90NGtyLzcwYktwTUI0WXhvQU1iK0lDcHJPREk1WVgwdWdHTnRMRjQ5aGloUHlVS2IKVENZeHp3RitDejJKTkVYeWdldTBlVW4vaDBSbFFsUytBT1hFNlFJREFRQUJBb0lCQUdmOG4xNEdZR05VMlpUeQpJcGx5TXVwemxjd0ozZys4d3cyd3FqTXFiUHN6aWxEbHVENmxGY3NZVGovdXZLY2pBMVppdnY4YTVnM1dGeDY5Cm5tQVZwbjdUYkFxUTdrdk9wVm5LbTRUSzk2NWpSb2kzZE5ML1VNTm9Cd1ByZC9oeXY5ZmVDNEF3V3lzZUtYV1AKSm1BNWdJT2hTSXArc0loOFF4QStHRjg1bFFpVW01S2pRdEljVS9OdEpnZ2VxRkE1RHo1TzhKMW1tSXlndlV2bgpOQysyK3BkQW0zYm1NVnNTRGRhaFI1d09ocm1LSmt3N0xxRGFLeW1oekFVT21BcVhyZm1STml3cGwzVmZGMUVzCmpPU2REQUNXZmgvcTcyY3hkSWE1dkkrdDQyc25hRHNuUThBcXo5S3ZTMUkra1p1dm1HanA4NEo5M2JqTVpVNnEKS0NwajhURUNnWUVBNUpSNmxuQ0UyZGk0d3FmTUgxZGRUL2R5L3NCTGRKN1lqUTQyTUhzR1pjd3E1TUJ0ZENQdApOdkRKSjNuMEl5NFFJRktTMDFaeTV2Szl1OXFvNzVXbHpYamxqQ2hGMXpxSktIRWZtb21IWEVDUHV2bmFUcE5ECmJFR3pwYnVVaHI2cVNXOXJubDdINTlHZkVUSHh6M0VLYk1VaVpBNmMyaEcxUG5OMWp5QmZodFVDZ1lFQTlETm0KSUJSenJkNTExOW8xT2dRSlorUTU2eFVHN1BIYnBmL3ZjTmdNcnBINkZDdFBpOUxFcTA0ODUzaWdCbFdiMVJJaApYVDM4Q1VkOXZHa0JScFVoNHdwS2VxTHc0Q3JMUWpoeFZ2WXA3aDJKeHV1UlhUUXhVeHl6aVVjczhZR1Q5dXBECkhUUUVQN1RXclBQeS8wSXZ3R2xQNW1yWVpEUGdpSTRuUXpmZWQ4VUNnWUJWWlRNM0tPbzVDTUpkMXBwbnl4Zk4KWEVEVjg3azg1R3M2cDJpUFRDYmp1UmI5UnZhZFFSN0tPOEtxd09ENGs2anFxbTY0RGIvM2tCQmFNaUtTLzNGbQpCaGliK0IrL2ZOcnBCUG90bmR1NEYxYWJIK1R6SGdrK1ZIMXRHVnN2eStPS2M5bmMzTVFLVTBIK0JvNjBWU0x6CjUrSWxSMkJLQi91U1RVaEtKR29MMFFLQmdBNGw4RFBKbTQ0bVJtT1VJK05SbW8zblhpZXRsekloTFIybi8ySmwKVWc3SExGc0F5MklKZXhXRnVlZWJTSUkxY1lyZHV3c2c3VHBJWnhPRWJldDk5bkdtQmZ5bTloZTJ3d0g2TFM3aApvanBHZ2RZQmpmRjlkYUlnRnBMTWllRVFKZGQzYVI0SEJudithVFdxQ0prYlo1TFpSeVROYWU0Y1IxVUNEMnBJCmtWNFZBb0dCQUt3SUtNMGFPUDMxUlp6bTREWWdST1
gxbHg4SzRCUEEzb2tlbjVmTVVVT1JBaGdLaUpaeVJJU0gKU3k3Um8wTUViN3kwUmhOMXhxbGJNTXJ4T09ncEhrWjJxcmlMcXY2SFdYOTZPb291MEtoUW9QcE5ZbmRBaFE5NQpGa0VTVDlESnFmNXQzTkh2UGZIRko4eDdaTDI1RktTbExseTBlOXNuVWQ4Q2Zhd0dzTHlKCi0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

etcd-cert: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUQrakNDQXVLZ0F3SUJBZ0lVWURhSzdFaEVYSDA4Nk5RZlhLczQ4MDRXbW9vd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pERUxNQWtHQTFVRUJoTUNRMDR4RWpBUUJnTlZCQWdUQ1VkMVlXNW5aRzl1WnpFU01CQUdBMVVFQnhNSgpSM1ZoYm1kNmFHOTFNUTB3Q3dZRFZRUUtFd1JsZEdOa01ROHdEUVlEVlFRTEV3WlRlWE4wWlcweERUQUxCZ05WCkJBTVRCR1YwWTJRd0hoY05Nakl3TXpFNU1Ea3pPREF3V2hjTk1qY3dNekU0TURrek9EQXdXakJrTVFzd0NRWUQKVlFRR0V3SkRUakVTTUJBR0ExVUVDQk1KUjNWaGJtZGtiMjVuTVJJd0VBWURWUVFIRXdsSGRXRnVaM3BvYjNVeApEVEFMQmdOVkJBb1RCR1YwWTJReER6QU5CZ05WQkFzVEJsTjVjM1JsYlRFTk1Bc0dBMVVFQXhNRVpYUmpaRENDCkFTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTm9MYVp1YzRURkN3TjlvTEhTa1Ywa0sKWFA1M01SVmtnN0dRUzJLK2IwckN2T1d6TW5NT3JGRTBDK1VUdkhWaTM2cWF4Q1kvNmNyNWd6clcrMkxldWRuRAo0eTlmN2FCS2s5aDBlM3BaS3ZCQjAvTk42ZzE3cHlNTjc1R3M1WGc4cmRQdTVJV3J2c3c4ei9uSHNsamVNL3pGCmJnWVNPWG02ZFJUei9VSTgyK043K3J1eVpmdkEzL24yS0RWK1ZaYWRZdjA3OUhuTS9zM0NlYVhiNmlua29mWkwKMmVwQXdlTjVnOE9WTHppOXh2c2JlVFdTQkYzano3dStQMmRDS29ObXFMZUpLLys5R3lxVEFlR01hQURHL2lBcQphemd5T1dGOUxvQmpiU3hlUFlZb1Q4bENtMHdtTWM4QmZnczlpVFJGOG9IcnRIbEovNGRFWlVKVXZnRGx4T2tDCkF3RUFBYU9Cb3pDQm9EQU9CZ05WSFE4QkFmOEVCQU1DQmFBd0hRWURWUjBsQkJZd0ZBWUlLd1lCQlFVSEF3RUcKQ0NzR0FRVUZCd01DTUF3R0ExVWRFd0VCL3dRQ01BQXdIUVlEVlIwT0JCWUVGRUlYekNpZGNLUWJaZCtKWW9tZQo0L2pKUDRpYU1COEdBMVVkSXdRWU1CYUFGSUxBSVdaTEZwZmp2ZC9odlJlYzIyR2xDUHROTUNFR0ExVWRFUVFhCk1CaUhCSDhBQUFHSEJNQ29wNENIQk1Db3A0R0hCTUNvcDRJd0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFKc3gKRGdMczdBeUg3OWlyalFqRit5aXl0U3ZEMjlSTTV0WFRuTy9uNDdXRmhVMjE5WEhxWi84L0Vkc0c3TCs4RzhVdwpwdEY4ckpHK2ZibnEyTXhRQzVWTHcycUo1WHZ0dHZSZDZoOGtJSVptc2owSlFsSGdvVzhmbkR4c1duZ0ErVDdtCjlZOGZYc3NkTGxvdjZ3WWZNd2ZwV2x4SVFTbUkrQ1JuaXVGZkZGbkhUbFdNL0NpNHZuemVBWWxUTlduaHBaMW0KWXhHTEQrN0E4UEg1dzkya2MzNWVBZGo2dWthaWhib1UwVUF0MUhZSlhJU2phZ2NhREFDbGZZREIxeUdaRFNUdwpvSE1IYjdRVjBoVWxobU1MYjg1TzRpTXdLZUxuMThuWHRVblZjWTVqUjZja2xWbFdPa0F6NXFqOVJ0YlhjckI1Cno5VmZrRDE1eTlzTGc2cVp1cDg9Ci0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

etcd-ca: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUR2RENDQXFTZ0F3SUJBZ0lVZVA0akhUUkVScDl1ZkVLZEsvUkNGK3hLbklrd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pERUxNQWtHQTFVRUJoTUNRMDR4RWpBUUJnTlZCQWdUQ1VkMVlXNW5aRzl1WnpFU01CQUdBMVVFQnhNSgpSM1ZoYm1kNmFHOTFNUTB3Q3dZRFZRUUtFd1JsZEdOa01ROHdEUVlEVlFRTEV3WlRlWE4wWlcweERUQUxCZ05WCkJBTVRCR1YwWTJRd0hoY05Nakl3TXpFNU1Ea3pNREF3V2hjTk1qY3dNekU0TURrek1EQXdXakJrTVFzd0NRWUQKVlFRR0V3SkRUakVTTUJBR0ExVUVDQk1KUjNWaGJtZGtiMjVuTVJJd0VBWURWUVFIRXdsSGRXRnVaM3BvYjNVeApEVEFMQmdOVkJBb1RCR1YwWTJReER6QU5CZ05WQkFzVEJsTjVjM1JsYlRFTk1Bc0dBMVVFQXhNRVpYUmpaRENDCkFTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTUZ6a3JITDF3TU1QcFJ1UEVuajZZbUcKZHRVUEdKMWxhNVJmZVo5ZGEraXFwZTRUQ3ZWMWxTMVppNXBiK2ZPZzFHYUNaQlc5THJlTEcrOHY3NVJZNHR3ZQoyejVzVDdNNGYwdllLdDBWQk8xejJlMU5oODFvejFTY2UraTVyczdEc2paQjV6SG15UWNwWUJlNjFrSXNlMEUzCkFSeHM2dUtCWEd3c0IwcS9PcHhoV2pmcFd1M1BCeDkxclhCWVpOdFBDLzd5SXUraEVCWkhMOGNxditrR2NFYVQKMkR1OEFLZjI4MFZudzBhcm9JQ1pEdEsvUVVwNDBZS1ZoQjNyNlRMaUNsNHJ5YzV6aFBDSkJxdmVXemsyWEpRQgo1NjVrSnZLUFV2M08vdUxxeER1bW00SU5xWDlNcE8wUWErNHA5Y1cyclVRdTk4WjdhWnQ0SGhUYlJQbE5PQUVDCkF3RUFBYU5tTUdRd0RnWURWUjBQQVFIL0JBUURBZ0VHTUJJR0ExVWRFd0VCL3dRSU1BWUJBZjhDQVFJd0hRWUQKVlIwT0JCWUVGSUxBSVdaTEZwZmp2ZC9odlJlYzIyR2xDUHROTUI4R0ExVWRJd1FZTUJhQUZJTEFJV1pMRnBmagp2ZC9odlJlYzIyR2xDUHROTUEwR0NTcUdTSWIzRFFFQkN3VUFBNElCQVFDcGRYb1RtOW9UM1dlcXBEQmVkVk94CnZuSTJiQnhENGJRTWdHb0hNNzQyKzIweG0vdzdvd002VXFSbDRoTlJHMzhPeTlSakQ2d3Y3NUMwT3IvNm5LOTIKeHdTQTlzL21qdnVKbEJ0N0N5SkV6TC80RVFlR0p1UXdpWHVFM09kUnhhVVlpNEIwb3BBR2JWODhzVFVLelhKRwpoYm12REEwOUJaME54dS9kaHRqUktEL0JOV3BiZE9rOWJZZ3hpdGpkQmJTdU9hUDR6ZWxzVy9sRGlMSkRoL2swCk1SNHNhTllsUU9zTUhXTlpyamVrRitQd3YxaU9MdjV6Z3VhUDJoT242d2JHMG8rWktBaVhmK0NkZXdEZ1E3VmQKZmR0YmV0K3h4VVhaRDNRWE9ObHpjbG1ZdncydS9Rc3VzT1BUYy9MWFRVcnhiSnozSzNDUlB0bnVMT05Tak1QbwotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

---

# Source: calico/templates/calico-config.yaml
# This ConfigMap is used to configure a self-hosted Calico installation.
kind: ConfigMap
apiVersion: v1
metadata:
  name: calico-config
  namespace: kube-system
data:
  # Configure this with the location of your etcd cluster.
  etcd_endpoints: "https://192.168.167.128:2379,https://192.168.167.129:2379,https://192.168.167.130:2379"
  # If you're using TLS enabled etcd uncomment the following.
  # You must also populate the Secret below with these files.
  etcd_key: /calico-secrets/etcd-key
  etcd_cert: /calico-secrets/etcd-cert
  etcd_ca: /calico-secrets/etcd-ca
  # Typha is disabled.
  typha_service_name: "none"
  # Configure the backend to use.
  calico_backend: "bird"
  # Configure the MTU to use
  veth_mtu: "1440"
  # The CNI network configuration to install on each node. The special
  # values in this config will be automatically populated.
  cni_network_config: |-
    {
      "name": "k8s-pod-network",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "calico",
          "log_level": "info",
          "etcd_endpoints": "__ETCD_ENDPOINTS__",
          "etcd_key_file": "__ETCD_KEY_FILE__",
          "etcd_cert_file": "__ETCD_CERT_FILE__",
          "etcd_ca_cert_file": "__ETCD_CA_CERT_FILE__",
          "mtu": __CNI_MTU__,
          "ipam": {
            "type": "calico-ipam"
          },
          "policy": {
            "type": "k8s"
          },
          "kubernetes": {
            "kubeconfig": "__KUBECONFIG_FILEPATH__"
          }
        },
        {
          "type": "portmap",
          "snat": true,
          "capabilities": {"portMappings": true}
        }
      ]
    }

---

# Source: calico/templates/rbac.yaml
# Include a clusterrole for the kube-controllers component,
# and bind it to the calico-kube-controllers serviceaccount.
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: calico-kube-controllers
rules:
  # Pods are monitored for changing labels.
  # The node controller monitors Kubernetes nodes.
  # Namespace and serviceaccount labels are used for policy.
  - apiGroups: [""]
    resources:
      - pods
      - nodes
      - namespaces
      - serviceaccounts
    verbs:
      - watch
      - list
  # Watch for changes to Kubernetes NetworkPolicies.
  - apiGroups: ["networking.k8s.io"]
    resources:
      - networkpolicies
    verbs:
      - watch
      - list
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: calico-kube-controllers
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: calico-kube-controllers
subjects:
- kind: ServiceAccount
  name: calico-kube-controllers
  namespace: kube-system
---
# Include a clusterrole for the calico-node DaemonSet,
# and bind it to the calico-node serviceaccount.
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: calico-node
rules:
  # The CNI plugin needs to get pods, nodes, and namespaces.
  - apiGroups: [""]
    resources:
      - pods
      - nodes
      - namespaces
    verbs:
      - get
  - apiGroups: [""]
    resources:
      - endpoints
      - services
    verbs:
      # Used to discover service IPs for advertisement.
      - watch
      - list
  - apiGroups: [""]
    resources:
      - nodes/status
    verbs:
      # Needed for clearing NodeNetworkUnavailable flag.
      - patch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: calico-node
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: calico-node
subjects:
- kind: ServiceAccount
  name: calico-node
  namespace: kube-system

---

# Source: calico/templates/calico-node.yaml
# This manifest installs the calico-node container, as well
# as the CNI plugins and network config on
# each master and worker node in a Kubernetes cluster.
kind: DaemonSet
apiVersion: apps/v1
metadata:
  name: calico-node
  namespace: kube-system
  labels:
    k8s-app: calico-node
spec:
  selector:
    matchLabels:
      k8s-app: calico-node
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  template:
    metadata:
      labels:
        k8s-app: calico-node
      annotations:
        # This, along with the CriticalAddonsOnly toleration below,
        # marks the pod as a critical add-on, ensuring it gets
        # priority scheduling and that its resources are reserved
        # if it ever gets evicted.
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      nodeSelector:
        beta.kubernetes.io/os: linux
      hostNetwork: true
      tolerations:
        # Make sure calico-node gets scheduled on all nodes.
        - effect: NoSchedule
          operator: Exists
        # Mark the pod as a critical add-on for rescheduling.
        - key: CriticalAddonsOnly
          operator: Exists
        - effect: NoExecute
          operator: Exists
      serviceAccountName: calico-node
      # Minimize downtime during a rolling upgrade or deletion; tell Kubernetes to do a "force
      # deletion": https://kubernetes.io/docs/concepts/workloads/pods/pod/#termination-of-pods.
      terminationGracePeriodSeconds: 0
      priorityClassName: system-node-critical
      initContainers:
        # This container installs the CNI binaries
        # and CNI network config file on each node.
        - name: install-cni
          image: calico/cni:v3.11.3
          command: ["/install-cni.sh"]
          env:
            # Name of the CNI config file to create.
            - name: CNI_CONF_NAME
              value: "10-calico.conflist"
            # The CNI network config to install on each node.
            - name: CNI_NETWORK_CONFIG
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: cni_network_config
            # The location of the etcd cluster.
            - name: ETCD_ENDPOINTS
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_endpoints
            # CNI MTU Config variable
            - name: CNI_MTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            # Prevents the container from sleeping forever.
            - name: SLEEP
              value: "false"
          volumeMounts:
            - mountPath: /host/opt/cni/bin
              name: cni-bin-dir
            - mountPath: /host/etc/cni/net.d
              name: cni-net-dir
            - mountPath: /calico-secrets
              name: etcd-certs
          securityContext:
            privileged: true
        # Adds a Flex Volume Driver that creates a per-pod Unix Domain Socket to allow Dikastes
        # to communicate with Felix over the Policy Sync API.
        - name: flexvol-driver
          image: calico/pod2daemon-flexvol:v3.11.3
          volumeMounts:
            - name: flexvol-driver-host
              mountPath: /host/driver
          securityContext:
            privileged: true
      containers:
        # Runs calico-node container on each Kubernetes node. This
        # container programs network policy and routes on each
        # host.
        - name: calico-node
          image: calico/node:v3.11.3
          env:
            # The location of the etcd cluster.
            - name: ETCD_ENDPOINTS
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_endpoints
            # Location of the CA certificate for etcd.
            - name: ETCD_CA_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_ca
            # Location of the client key for etcd.
            - name: ETCD_KEY_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_key
            # Location of the client certificate for etcd.
            - name: ETCD_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_cert
            # Set noderef for node controller.
            - name: CALICO_K8S_NODE_REF
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            # Choose the backend to use.
            - name: CALICO_NETWORKING_BACKEND
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: calico_backend
            # Cluster type to identify the deployment type
            - name: CLUSTER_TYPE
              value: "k8s,bgp"
            # Auto-detect the BGP IP address.
            - name: IP
              value: "autodetect"
            # Enable IPIP
            - name: CALICO_IPV4POOL_IPIP
              value: "Always"
            # Set MTU for tunnel device used if ipip is enabled
            - name: FELIX_IPINIPMTU
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: veth_mtu
            # The default IPv4 pool to create on startup if none exists. Pod IPs will be
            # chosen from this range. Changing this value after installation will have
            # no effect. This should fall within `--cluster-cidr`.
            - name: CALICO_IPV4POOL_CIDR
              value: "10.0.0.0/16"
            # Disable file logging so `kubectl logs` works.
            - name: CALICO_DISABLE_FILE_LOGGING
              value: "true"
            # Set Felix endpoint to host default action to ACCEPT.
            - name: FELIX_DEFAULTENDPOINTTOHOSTACTION
              value: "ACCEPT"
            # Disable IPv6 on Kubernetes.
            - name: FELIX_IPV6SUPPORT
              value: "false"
            # Set Felix logging to "info"
            - name: FELIX_LOGSEVERITYSCREEN
              value: "info"
            - name: FELIX_HEALTHENABLED
              value: "true"
          securityContext:
            privileged: true
          resources:
            requests:
              cpu: 250m
          livenessProbe:
            exec:
              command:
                - /bin/calico-node
                - -felix-live
                - -bird-live
            periodSeconds: 10
            initialDelaySeconds: 10
            failureThreshold: 6
          readinessProbe:
            exec:
              command:
                - /bin/calico-node
                - -felix-ready
                - -bird-ready
            periodSeconds: 10
          volumeMounts:
            - mountPath: /lib/modules
              name: lib-modules
              readOnly: true
            - mountPath: /run/xtables.lock
              name: xtables-lock
              readOnly: false
            - mountPath: /var/run/calico
              name: var-run-calico
              readOnly: false
            - mountPath: /var/lib/calico
              name: var-lib-calico
              readOnly: false
            - mountPath: /calico-secrets
              name: etcd-certs
            - name: policysync
              mountPath: /var/run/nodeagent
      volumes:
        # Used by calico-node.
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: var-run-calico
          hostPath:
            path: /var/run/calico
        - name: var-lib-calico
          hostPath:
            path: /var/lib/calico
        - name: xtables-lock
          hostPath:
            path: /run/xtables.lock
            type: FileOrCreate
        # Used to install CNI.
        - name: cni-bin-dir
          hostPath:
            path: /opt/cni/bin
        - name: cni-net-dir
          hostPath:
            path: /etc/cni/net.d
        # Mount in the etcd TLS secrets with mode 400.
        # See https://kubernetes.io/docs/concepts/configuration/secret/
        - name: etcd-certs
          secret:
            secretName: calico-etcd-secrets
            defaultMode: 0440
        # Used to create per-pod Unix Domain Sockets
        - name: policysync
          hostPath:
            type: DirectoryOrCreate
            path: /var/run/nodeagent
        # Used to install Flex Volume Driver
        - name: flexvol-driver-host
          hostPath:
            type: DirectoryOrCreate
            path: /usr/libexec/kubernetes/kubelet-plugins/volume/exec/nodeagent~uds

---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: calico-node
  namespace: kube-system

---

# Source: calico/templates/calico-kube-controllers.yaml
# See https://github.com/projectcalico/kube-controllers
apiVersion: apps/v1
kind: Deployment
metadata:
  name: calico-kube-controllers
  namespace: kube-system
  labels:
    k8s-app: calico-kube-controllers
spec:
  # The controllers can only have a single active instance.
  replicas: 1
  selector:
    matchLabels:
      k8s-app: calico-kube-controllers
  strategy:
    type: Recreate
  template:
    metadata:
      name: calico-kube-controllers
      namespace: kube-system
      labels:
        k8s-app: calico-kube-controllers
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      nodeSelector:
        beta.kubernetes.io/os: linux
      tolerations:
        # Mark the pod as a critical add-on for rescheduling.
        - key: CriticalAddonsOnly
          operator: Exists
        - key: node-role.kubernetes.io/master
        - key: node.kubernetes.io/not-ready
          effect: NoSchedule
        - key: node.kubernetes.io/unreachable
          effect: NoSchedule
      serviceAccountName: calico-kube-controllers
      priorityClassName: system-cluster-critical
      # The controllers must run in the host network namespace so that
      # it isn't governed by policy that would prevent it from working.
      hostNetwork: true
      containers:
        - name: calico-kube-controllers
          image: calico/kube-controllers:v3.11.3
          env:
            # The location of the etcd cluster.
            - name: ETCD_ENDPOINTS
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_endpoints
            # Location of the CA certificate for etcd.
            - name: ETCD_CA_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_ca
            # Location of the client key for etcd.
            - name: ETCD_KEY_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_key
            # Location of the client certificate for etcd.
            - name: ETCD_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_cert
            # Choose which controllers to run.
            - name: ENABLED_CONTROLLERS
              value: policy,namespace,serviceaccount,workloadendpoint,node
          volumeMounts:
            # Mount in the etcd TLS secrets.
            - mountPath: /calico-secrets
              name: etcd-certs
          readinessProbe:
            exec:
              command:
              - /usr/bin/check-status
              - -r
      volumes:
        # Mount in the etcd TLS secrets with mode 400.
        # See https://kubernetes.io/docs/concepts/configuration/secret/
        - name: etcd-certs
          #hostPath:
          #  path: /etc/ssl/etcd
          secret:
            secretName: calico-etcd-secrets
            defaultMode: 0400

---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: calico-kube-controllers
  namespace: kube-system

---

# Source: calico/templates/calico-typha.yaml

---

# Source: calico/templates/configure-canal.yaml

---

# Source: calico/templates/kdd-crds.yaml

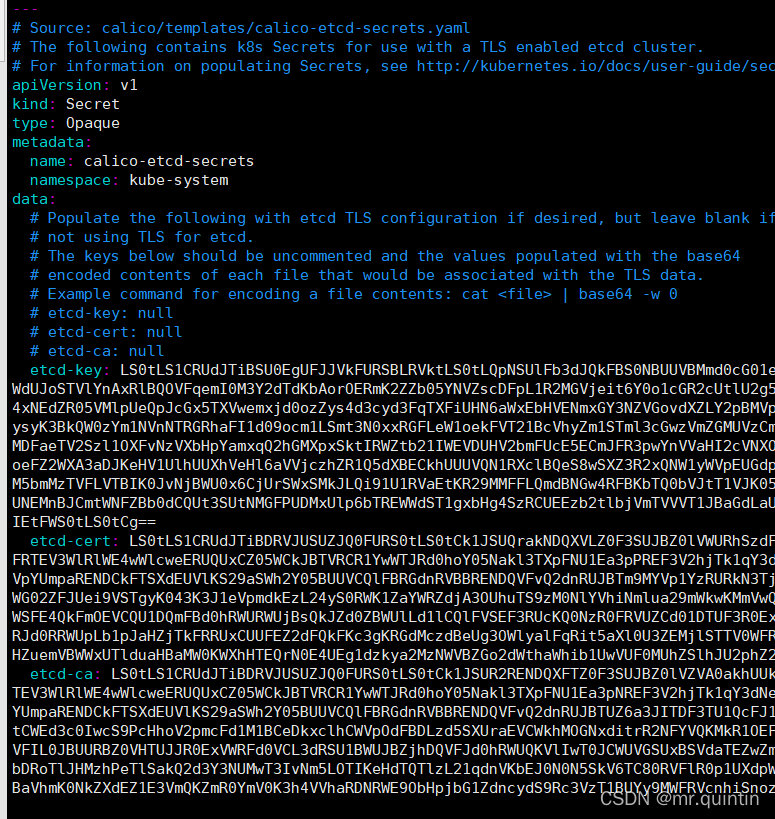

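The manifest has to be changed in several places, described one by one below.

1. The values under data in the Secret are the certificate files, base64-encoded with the following command. Paste the output in as-is.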

cat /etc/ssl/etcd/etcd.pem | base64 -w 0

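2. In the ConfigMap, specify the certificates. The leading directory is the path inside the pod, and many later entries reference it, so leave it unchanged; the real certificates are mounted onto that path later. Only the certificate file names need to be changed here.

In the DaemonSet and Deployment specs, add a volume mount that maps the certificates onto the /calico-secrets path mentioned above.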
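For step 1 above, the encoding can be scripted so that every certificate is converted the same way. The following is a minimal sketch, not the author's procedure: the function names are hypothetical, and the key names and file paths in the usage comment (other than /etc/ssl/etcd/etcd.pem) are assumptions that must be adjusted to the actual Secret and certificate directory.

```shell
#!/usr/bin/env bash
# Print the single-line base64 encoding of one file (GNU base64, -w 0 = no wrapping).
encode_for_secret() {
  base64 -w 0 < "$1"
}

# Print one indented "key: value" line per key=path argument,
# ready to paste under data: in the Secret.
render_secret_data() {
  local pair key path
  for pair in "$@"; do
    key=${pair%%=*}    # text before the first '='
    path=${pair#*=}    # text after the first '='
    printf '  %s: %s\n' "$key" "$(encode_for_secret "$path")"
  done
}

# Example call (key names and the ca/key paths are assumptions):
# render_secret_data etcd-cert=/etc/ssl/etcd/etcd.pem \
#                    etcd-key=/etc/ssl/etcd/etcd-key.pem \
#                    etcd-ca=/etc/ssl/etcd/ca.pem
```

Passing the files as key=path pairs keeps the mapping between Secret keys and certificate files visible in one place.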

That completes the configuration changes. Now apply the manifest:

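kubectl apply -f calico-etcd.yml

I ran into two problems. The first, in short: starting the calico-kube-controllers pod requires the pause container (the pod's infrastructure container), but the pause image could not be pulled from the official k8s registry. The workaround is to docker pull it from a mirror and tag it with the expected name. This has to be done on every node.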

docker pull registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0

docker tag registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0 k8s.gcr.io/pause:3.2
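Because the pull and tag have to be repeated on every node, a small loop can save some typing. This is only a sketch under assumptions that are not in the original: the helper names are hypothetical, and it presumes passwordless ssh to each node. With DRY_RUN=1 it prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Build the pull-and-retag command line for one image pair.
retag_cmd() {
  local src=$1 dst=$2
  printf 'docker pull %s && docker tag %s %s' "$src" "$src" "$dst"
}

# Run the command on each listed node over ssh.
# With DRY_RUN=1, only print what would be executed.
retag_on_nodes() {
  local src=$1 dst=$2 node
  shift 2
  for node in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      printf 'ssh %s "%s"\n' "$node" "$(retag_cmd "$src" "$dst")"
    else
      ssh "$node" "$(retag_cmd "$src" "$dst")"
    fi
  done
}

# Example call (node names taken from the hosts file; ssh access is an assumption):
# DRY_RUN=1 retag_on_nodes \
#   registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0 \
#   k8s.gcr.io/pause:3.2 node1 node2
```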

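The second problem: calico-node has to be forwarded through the Calico container network to the apiserver VIP 192.168.167.139, which means it connects to the in-cluster address 10.0.0.1. That address was not included when the apiserver certificate was created, so the certificate is not trusted for it and the request is rejected, as the log below shows. The fix is to add this address to the certificate request file, regenerate the certificate, and distribute it to every node.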

E0401 07:28:56.811389 1 reflector.go:123] pkg/mod/k8s.io/client-go@v0.0.0-20191114101535-6c5935290e33/tools/cache/reflector.go:96: Failed to list *v1.Namespace: Get https://10.0.0.1:443/api/v1/namespaces?limit=500&resourceVersion=0: x509: certificate is valid for 127.0.0.1, 192.168.167.128, 192.168.167.129, 192.168.167.130, 192.168.167.139, not 10.0.0.1

# The certificate creation command was covered earlier, so it is not explained again here

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes apiserver-crs.json | cfssljson -bare apiserver
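Before distributing the regenerated certificate, it may be worth confirming that 10.0.0.1 really appears among its subject alternative names. The helper below is a sketch with a hypothetical name: it reads the text output of openssl x509 on standard input and checks for an exact IP entry. The file name apiserver.pem assumes the output naming of the cfssljson -bare apiserver command above.

```shell
#!/usr/bin/env bash
# Succeed if the certificate text on stdin lists the given IP as a SAN entry.
# Dots are escaped and the match is anchored at the end of the entry,
# so 10.0.0.1 does not match 10.0.0.10.
san_has_ip() {
  local ip_re=${1//./\\.}
  grep -Eq "IP Address:${ip_re}(,|[[:space:]]*$)"
}

# Example call:
# openssl x509 -in apiserver.pem -noout -text | san_has_ip 10.0.0.1 \
#   && echo "10.0.0.1 is covered" || echo "10.0.0.1 is missing"
```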

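At this point the Calico plugin is installed and the nodes report the correct status.

PS: if you see 401 authentication errors or similar, delete the Secret and the ConfigMap and try again.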

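V Deploy the cluster DNS

For the Kubernetes cluster to work properly, we also need to deploy a DNS service. CoreDNS is the recommended choice.

1 Deploy the CoreDNS service

Deploying CoreDNS requires creating three resource objects: one ConfigMap, one Deployment, and one Service. In a cluster with RBAC enabled, you can also create a ServiceAccount, ClusterRole, and ClusterRoleBinding to control the permissions of the CoreDNS container.

The YAML for the Deployment, the Service, and the related objects is shown below.

In the ConfigMap (the Corefile), change this parameter:

kubernetes cluster.local 10.0.0.0/16 # must match the network segment configured for Calico

In the Service, change this parameter: clusterIP: 10.0.136.136 # must match the DNS address specified in the kubelet configuration file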

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
# Create the service account used by the Pod. Because a namespace is specified here, the Deployment must specify the same one

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
# Define a cluster role named system:coredns. The system: prefix is conventionally used for system components, to distinguish what the role is for

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
# Bind the service account to the role

---

apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes cluster.local 10.0.0.0/16 {
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
          max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
# Define the configuration file so the Pod can use it and it is easy to modify and extend. The STUBDOMAINS placeholder next to the final brace in the official template must be removed; otherwise the Pod fails to start and reports an unknown parameter

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. Default is 1.
  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      nodeSelector:
        beta.kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                  - key: k8s-app
                    operator: In
                    values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      containers:
      - name: coredns
        image: coredns/coredns:1.8.0
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
            - key: Corefile
              path: Corefile
# Create the Deployment

---

apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.0.136.136
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP
# Create the Service, expose the ports, and specify the cluster IP

2 Verification

Verify by creating a pod and a service:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      name: nginx
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.13-alpine
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 80

---

apiVersion: v1
kind: Service
metadata:
  name: nginx-service-nodeport
spec:
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
      nodePort: 30025
  type: NodePort
  selector:
    name: nginx

Resolve the service's domain name from inside a running pod. If a concrete address is returned, DNS is working correctly.
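One way to run that lookup is from a throwaway pod. The sketch below is an illustration rather than the author's exact procedure: the function names, the pod name dns-test, and the busybox:1.28 image are assumptions. The namespace defaults to default because the nginx Service above sets none, and cluster.local is the domain from the Corefile.

```shell
#!/usr/bin/env bash
# Build the fully qualified name of a Service: <name>.<namespace>.svc.<cluster domain>.
svc_fqdn() {
  local name=$1 ns=${2:-default} domain=${3:-cluster.local}
  printf '%s.%s.svc.%s\n' "$name" "$ns" "$domain"
}

# Start a temporary pod, resolve the Service name with nslookup,
# and remove the pod when the command finishes.
dns_check() {
  kubectl run dns-test --rm -i --restart=Never --image=busybox:1.28 \
    -- nslookup "$(svc_fqdn "$@")"
}

# Example call:
# dns_check nginx-service-nodeport
```

If the lookup returns the ClusterIP of nginx-service-nodeport, resolution through CoreDNS works; a timeout usually points back at the clusterIP or kubelet DNS settings described earlier.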
