Preface

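This is the fourth post in the series. Writing these posts is a process of learning and verification, and the approach has matured step by step.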

Part one: write a generic driver framework that can be used as-is.

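Part two: implement mmap so that the kernel's read_buf and write_buf are both mapped into user space. The app writes 'R' and 'W' to the last byte of read_buf and write_buf respectively, and the driver's release function prints those two bytes. Add more elaborate checks if you need them; a much more complicated test adds little value.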

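Part three: a test app drives a copy from src_buf to dst_buf, using either the DMA engine or memcpy, measures how many microseconds the copy takes, and verifies in the app that the copy is correct, taking every care that the whole flow is right.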

Part four (this post): the experiment is the same as in part three, except that dma_alloc_coherent replaces dma_map_single.

I. The dma_alloc_coherent() function

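dma_alloc_coherent() allocates memory for a device that performs DMA; on a non-cache-coherent platform such as the i.MX6 used here, the CPU maps this memory uncached. It allocates pages, returns the CPU-visible (virtual) address, and stores the device-visible address through its third argument, so the buffer ends up where the device can access it. dev is a pointer to the struct device, size is the length of the region in bytes, and gfp holds the standard GFP flags; the function may be called from interrupt context if GFP_ATOMIC is passed. The function therefore yields two values: the virtual address cpu_addr (its return value) for CPU access, and the DMA address dma_handle for device access. Both the CPU virtual address and the DMA address are guaranteed to be aligned to the smallest PAGE_SIZE order (a power-of-two number of pages) that is greater than or equal to the requested size. To unmap and free such a DMA region, call: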

dma_free_coherent(dev, size, cpu_addr, dma_handle)

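Here dev and size are the same as in the dma_alloc_coherent() call, and cpu_addr and dma_handle are the values that dma_alloc_coherent() returned to you. This function must not be called from interrupt context.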
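To make the alignment rule concrete for the 1280*800 buffer used in this post, here is a small userspace sketch that mimics the kernel's PAGE_ALIGN() and get_order() arithmetic. It assumes 4 KiB pages (as on i.MX6); the sketch_* names are hypothetical helpers written for illustration, not kernel API.

```c
#include <assert.h>
#include <stddef.h>

/* Assumption: 4 KiB pages. The kernel takes PAGE_SHIFT from the architecture. */
#define SKETCH_PAGE_SHIFT 12
#define SKETCH_PAGE_SIZE ((size_t)1 << SKETCH_PAGE_SHIFT)

/* Same rounding as PAGE_ALIGN(): round size up to the next page boundary. */
static size_t sketch_page_align(size_t size)
{
    return (size + SKETCH_PAGE_SIZE - 1) & ~(SKETCH_PAGE_SIZE - 1);
}

/* Smallest order such that (PAGE_SIZE << order) >= size, like get_order(). */
static unsigned int sketch_get_order(size_t size)
{
    unsigned int order = 0;

    assert(size > 0);
    while ((SKETCH_PAGE_SIZE << order) < size)
        order++;
    return order;
}

/* Alignment that dma_alloc_coherent() guarantees for a request of this size. */
static size_t sketch_coherent_alignment(size_t size)
{
    return SKETCH_PAGE_SIZE << sketch_get_order(size);
}
```

With these numbers, 1280*800 = 1,024,000 bytes is exactly 250 pages, so PAGE_ALIGN() leaves it unchanged, but the allocation order is 8 (256 pages), so each buffer should be aligned to 1 MiB (0x100000). That is consistent with src_buf and dst_buf being 0x100000 apart in the module load log further down.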

Allocation example:

struct xxx {

char *src_buf;

dma_addr_t src_addr;

};

css_dev->src_buf= dma_alloc_coherent(css_dev->chan_dev,

PAGE_ALIGN(css_dev->buf_size),

&css_dev->src_addr,

GFP_DMA | GFP_KERNEL);

Free example:

dma_free_coherent(css_dev->chan_dev,

PAGE_ALIGN(css_dev->buf_size),

css_dev->src_buf,

css_dev->src_addr);

II. The dma_map_single() function

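Streaming DMA mappings use cached memory, which is cleaned or invalidated as required by dma_map_single() and dma_unmap_single(). They differ from coherent mappings in that they operate on addresses chosen in advance, which are usually mapped for a single DMA transfer and unmapped right afterwards. On a non-coherent ARM processor, dma_map_single() calls dma_map_single_attrs(), which in turn calls arm_dma_map_page() to make sure any data in the cache is discarded or written back as appropriate. The streaming mapping functions may be called from interrupt context. Every map/unmap operation comes in two versions: one maps/unmaps a single region, the other a scatter-gather list. This post uses the first.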

dma_addr_t dma_handle = dma_map_single(dev, addr, size, dir);

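Here dev is a pointer to the struct device, addr is the virtual address of a buffer allocated with kmalloc() (or, as in the example below, __get_free_pages()), size is the buffer size, and dir is one of DMA_BIDIRECTIONAL, DMA_TO_DEVICE or DMA_FROM_DEVICE. The returned dma_handle is the DMA bus address for the device; check it with dma_mapping_error() before using it.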
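The dir argument decides which cache maintenance the streaming calls perform on a non-coherent CPU. The sketch below tabulates it as I read the ARMv7 implementation (v7_dma_map_area and v7_dma_unmap_area in arch/arm/mm/cache-v7.S); other architectures may behave differently, and the sketch_* names are hypothetical, not kernel API.

```c
#include <assert.h>

/* Local mirror of enum dma_data_direction (same numeric values as the kernel). */
enum sketch_dir {
    SKETCH_BIDIRECTIONAL = 0,
    SKETCH_TO_DEVICE = 1,
    SKETCH_FROM_DEVICE = 2,
};

enum sketch_cache_op { SKETCH_NONE, SKETCH_CLEAN, SKETCH_INVALIDATE };

/* Cache op at map time (dma_map_single / dma_sync_single_for_device). */
static enum sketch_cache_op sketch_op_on_map(enum sketch_dir dir)
{
    assert(dir <= SKETCH_FROM_DEVICE);
    /* The device will overwrite the buffer: drop stale lines so nothing
     * is written back over the DMA data later. */
    if (dir == SKETCH_FROM_DEVICE)
        return SKETCH_INVALIDATE;
    /* The device will read the buffer: write dirty lines back to DRAM. */
    return SKETCH_CLEAN;
}

/* Cache op at unmap time (dma_unmap_single / dma_sync_single_for_cpu). */
static enum sketch_cache_op sketch_op_on_unmap(enum sketch_dir dir)
{
    assert(dir <= SKETCH_FROM_DEVICE);
    /* The device only read the buffer: the CPU's cached view is still valid. */
    if (dir == SKETCH_TO_DEVICE)
        return SKETCH_NONE;
    /* The device may have written DRAM: discard anything the CPU cached. */
    return SKETCH_INVALIDATE;
}
```

For src_buf, mapped with DMA_TO_DEVICE, the only maintenance is the clean at map time, which is why the mapping must come after the CPU has filled the buffer.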

Unmapping the memory region:

dma_unmap_single(dev, dma_handle, size, dir);

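Call dma_unmap_single() once the DMA activity has finished (for example, when an interrupt reports that the transfer is complete). The rules for streaming DMA mappings are: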

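A buffer may only be used in the direction that was specified.

A mapped buffer belongs to the device, not to the processor.

The device driver must keep control of the buffer until it has been unmapped.

A buffer used to send data to the device must already contain the data before it is mapped. (That is: allocate src_buf and write it before calling dma_map_single(); writing src_buf after dma_map_single() has no effect, which is also what my own tests showed. The reason is that dma_map_single() performs the cache clean; data written afterwards can stay in the CPU cache and never reach DRAM, where the DMA engine reads it. If the CPU really has to access the buffer while it is mapped, bracket the access with dma_sync_single_for_cpu() and dma_sync_single_for_device().)

The buffer must not be unmapped while DMA is still active; doing so causes serious system instability.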
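The "write after map has no effect" observation can be reproduced with a toy model of a write-back cache sitting in front of DRAM. This is a hypothetical userspace simulation (the toy_* names are made up for illustration), not kernel code: the map step copies dirty cache contents to DRAM once, so a later CPU write stays invisible to the device until an explicit sync repeats the clean.

```c
#include <assert.h>
#include <string.h>

#define TOY_LEN 8

/* Toy model (not kernel code): a write-back CPU cache in front of DRAM. */
struct toy_buf {
    char dram[TOY_LEN];  /* what the DMA engine reads */
    char cache[TOY_LEN]; /* where CPU stores land */
    int dirty;           /* cache holds data not yet written back */
};

/* CPU memset(): with a write-back cache the data stays in the cache. */
static void toy_cpu_fill(struct toy_buf *b, char c)
{
    memset(b->cache, c, TOY_LEN);
    b->dirty = 1;
}

/* Models the cache clean done by dma_map_single(..., DMA_TO_DEVICE). */
static void toy_map_to_device(struct toy_buf *b)
{
    if (b->dirty) {
        memcpy(b->dram, b->cache, TOY_LEN);
        b->dirty = 0;
    }
}

/* Models dma_sync_single_for_device(): the same clean, repeated on demand. */
static void toy_sync_for_device(struct toy_buf *b)
{
    toy_map_to_device(b);
}

/* What the device would read at offset i. */
static char toy_device_read(const struct toy_buf *b, int i)
{
    assert(i >= 0 && i < TOY_LEN);
    return b->dram[i];
}
```

On real hardware a dirty line may also be evicted on its own at some unpredictable time, so a write after mapping is not reliably lost either; the point is that it is not reliably visible, which is why the rules forbid it.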

Allocation example:

struct xxx {

char *src_buf;

dma_addr_t src_addr;

};

css_dev->src_buf = (char*)__get_free_pages(GFP_KERNEL|GFP_DMA,css_dev->buf_size_order);

if(css_dev->src_buf == NULL){

DEBUG_CSS("__get_free_pages error");

return -ENOMEM;

}

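css_dev->src_addr = dma_map_single(css_dev->chan_dev, css_dev->src_buf, css_dev->buf_size, DMA_TO_DEVICE);

if(dma_mapping_error(css_dev->chan_dev, css_dev->src_addr)){

free_pages((unsigned long)css_dev->src_buf, css_dev->buf_size_order);

return -ENOMEM;

}

Free example: first unmap the bus address, then free the memory.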

dma_unmap_single(css_dev->chan_dev,css_dev->src_addr,css_dev->buf_size,DMA_TO_DEVICE);

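/* allocated with __get_free_pages(order), so free with free_pages(addr, order) */

free_pages((unsigned long)css_dev->src_buf, css_dev->buf_size_order);

III. Test code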

Application code: csi_single_mmap.c

#include <unistd.h>

#include <sys/stat.h>

#include <sys/types.h>

#include <stdlib.h>

#include <sys/mman.h>

#include <sys/ioctl.h>

#include <stdio.h>

#include <fcntl.h>

#define DEBUG_INFO(format,...) printf("%s:%d: "format"\n",\

__func__,__LINE__,##__VA_ARGS__)

#define _CSS_DMA_DEV_NAME "/dev/css_dma"

#define CSS_DMA_IMAGE_SIZE (1280*800)

#define CSI_DMA_SET_CUR_MAP_BUF_TYPE_IOCTL 0x1001

#define CSI_DMA_FILL_CHAR_AND_RUN_DMAENGIEN_IOCTL 0x1002

#define CSI_DMA_FILL_CHAR_RUN_MEMCPY_IOCTL 0x1003

enum _css_dev_buf_type{

_CSS_DEV_READ_BUF = 0,

_CSS_DEV_WRITE_BUF,

_CSS_DEV_UNKNOWN_BUF_TYPE,

_CSS_DEV_MAX_BUF_TYPE,

};

struct __css_dev_ {

char *read_buf;

char *write_buf;

}_css_dev_;

static int cycle_dmaengine_test(struct __css_dev_ *css_dev,int fd,int count){

int i = 0,j = 0;

char c = 'a' - 1;

for(i = 0;i < count;i++){

c = c + 1;

if(ioctl(fd,

CSI_DMA_FILL_CHAR_AND_RUN_DMAENGIEN_IOCTL,

&c) < 0)

{

perror("ioctl");

DEBUG_INFO("ioctl CSI_DMA_FILL_CHAR_AND_RUN_DMAENGIEN_IOCTL fail");

return -1;

}

for(j = 0;j < CSS_DMA_IMAGE_SIZE;j++){

if(css_dev->read_buf[j] != c || css_dev->write_buf[j] != c){

DEBUG_INFO("error:css_dev->read_buf[%d] = %c,css_dev->write_buf[%d] = %c",

j,css_dev->read_buf[j],

j,css_dev->write_buf[j]);

return -1;

}

}

DEBUG_INFO("set c = %c ok",c);

if(c == 'z'){

c = 'a' - 1;

}

}

return 0;

}

static int cycle_memcpy_test(struct __css_dev_ *css_dev,int fd,int count){

int i = 0,j = 0;

char c = 'a' - 1;

for(i = 0;i < count;i++){

c = c + 1;

if(ioctl(fd,

CSI_DMA_FILL_CHAR_RUN_MEMCPY_IOCTL,

&c) < 0)

{

perror("ioctl");

DEBUG_INFO("ioctl CSI_DMA_FILL_CHAR_RUN_MEMCPY_IOCTL fail");

return -1;

}

for(j = 0;j < CSS_DMA_IMAGE_SIZE;j++){

if(css_dev->read_buf[j] != c || css_dev->write_buf[j] != c){

DEBUG_INFO("error:css_dev->read_buf[%d] = %c,css_dev->write_buf[%d] = %c",

j,css_dev->read_buf[j],

j,css_dev->write_buf[j]);

return -1;

}

}

DEBUG_INFO("set c = %c ok",c);

if(c == 'z'){

c = 'a' - 1;

}

}

return 0;

}

int main(int argc,char *argv[])

{

struct __css_dev_ *css_dev = &_css_dev_;

char *name = _CSS_DMA_DEV_NAME;

int fd = open(name,O_RDWR);

if(fd < 0){

perror("open");

DEBUG_INFO("open %s fail",name);

return -1;

}

DEBUG_INFO("open %s ok",name);

if(ioctl(fd,

CSI_DMA_SET_CUR_MAP_BUF_TYPE_IOCTL,

_CSS_DEV_READ_BUF) < 0)

{

perror("ioctl");

DEBUG_INFO("ioctl fail");

goto fail;

}

css_dev->read_buf = (char*)mmap(NULL,

CSS_DMA_IMAGE_SIZE,

PROT_READ|PROT_WRITE,

MAP_SHARED,fd,0);

if(css_dev->read_buf == MAP_FAILED){

perror("mmap");

DEBUG_INFO("mmap fail");

goto fail;

}

DEBUG_INFO("css_dev->read_buf = %p",css_dev->read_buf);

if(ioctl(fd,

CSI_DMA_SET_CUR_MAP_BUF_TYPE_IOCTL,

_CSS_DEV_WRITE_BUF) < 0)

{

perror("ioctl");

DEBUG_INFO("ioctl fail");

goto fail;

}

css_dev->write_buf = (char*)mmap(NULL,

CSS_DMA_IMAGE_SIZE,

PROT_READ|PROT_WRITE,

MAP_SHARED,fd,0);

if(css_dev->write_buf == MAP_FAILED){

perror("mmap");

DEBUG_INFO("mmap fail");

goto fail;

}

DEBUG_INFO("css_dev->write_buf = %p",css_dev->write_buf);

cycle_dmaengine_test(css_dev,fd,10);

cycle_memcpy_test(css_dev,fd,10);

fail:

close(fd);

return 0;

}

Driver code: csi_single.c

#include <linux/init.h>

#include <linux/module.h>

#include <linux/types.h>

#include <linux/kernel.h>

#include <linux/fs.h>

#include <linux/slab.h>

#include <linux/miscdevice.h>

#include <linux/mutex.h>

#include <linux/device.h>

#include <linux/uaccess.h>

#include <linux/gfp.h>

#include <linux/dma-mapping.h>

#include <linux/dmaengine.h>

#include <linux/platform_device.h>

#include <linux/completion.h>

#include <linux/platform_data/dma-imx.h>

#include <linux/timer.h>

#define DEBUG_CSS(format,...)\

printk(KERN_INFO"info:%s:%s:%d: "format"\n",\

__FILE__,__func__,__LINE__,\

##__VA_ARGS__)

#define DEBUG_CSS_ERR(format,...)\

printk(KERN_ALERT "error:%s:%s:%d: "format"\n",\

__FILE__,__func__,__LINE__,\

##__VA_ARGS__)

#define CSS_DMA_IMAGE_SIZE (1280*800)

#define CSI_DMA_SET_CUR_MAP_BUF_TYPE_IOCTL 0x1001

#define CSI_DMA_FILL_CHAR_AND_RUN_DMAENGIEN_IOCTL 0x1002

#define CSI_DMA_FILL_CHAR_RUN_MEMCPY_IOCTL 0x1003

enum _css_dev_buf_type{

_CSS_DEV_READ_BUF = 0,

_CSS_DEV_WRITE_BUF,

_CSS_DEV_UNKNOWN_BUF_TYPE,

_CSS_DEV_MAX_BUF_TYPE,

};

struct _css_dev_{

struct file_operations _css_fops;

struct miscdevice misc;

int buf_size;

int buf_size_order;

char *src_buf;

char *dst_buf;

char *user_src_buf_vaddr;

char *user_dst_buf_vaddr;

dma_addr_t src_addr;

dma_addr_t dst_addr;

spinlock_t slock;

struct mutex open_lock;

char name[10];

enum _css_dev_buf_type buf_type;

struct device *dev;

struct dma_chan * dma_m2m_chan;

struct completion dma_m2m_ok;

struct imx_dma_data m2m_dma_data;

struct timeval tv_start,tv_end;

struct device *chan_dev;

struct dma_device *dma_dev;

struct dma_async_tx_descriptor *dma_m2m_desc;

};

#define _to_css_dev_(file) (struct _css_dev_ *)container_of(file->f_op,struct _css_dev_,_css_fops)

static int _css_open(struct inode *inode, struct file *file)

{

struct _css_dev_ *css_dev = _to_css_dev_(file);

DEBUG_CSS("css_dev->name = %s",css_dev->name);

return 0;

}

static ssize_t _css_read(struct file *file, char __user *ubuf, size_t size, loff_t *ppos)

{

struct _css_dev_ *css_dev = _to_css_dev_(file);

DEBUG_CSS("css_dev->name = %s",css_dev->name);

return 0;

}

static int _css_mmap (struct file *file, struct vm_area_struct *vma)

{

struct _css_dev_ *css_dev = _to_css_dev_(file);

char *p = NULL;

dma_addr_t handle;

char **user_addr;

switch(css_dev->buf_type){

case _CSS_DEV_READ_BUF:

p = css_dev->src_buf;

handle = css_dev->src_addr;

user_addr = &css_dev->user_src_buf_vaddr;

break;

case _CSS_DEV_WRITE_BUF:

p = css_dev->dst_buf;

handle = css_dev->dst_addr;

user_addr = &css_dev->user_dst_buf_vaddr;

break;

default:

return -EINVAL;

}

/* The buffer comes from dma_alloc_coherent(), so map it with dma_mmap_coherent(): it uses the same (uncached on i.MX6) page attributes as the kernel mapping. remap_pfn_range(virt_to_phys(p)) with the default cached vm_page_prot would create a cached alias of uncached memory, and virt_to_phys() is not guaranteed to be valid for a coherent allocation. */

if (dma_mmap_coherent(css_dev->chan_dev, vma, p, handle,

vma->vm_end - vma->vm_start)) {

DEBUG_CSS_ERR("dma_mmap_coherent error");

return -EAGAIN;

}

css_dev->buf_type = _CSS_DEV_UNKNOWN_BUF_TYPE;

*user_addr = (char *)vma->vm_start;

DEBUG_CSS("mmap ok user_addr = %p kernel addr = %p",*user_addr,p);

return 0;

}

static ssize_t _css_write(struct file *file, const char __user *ubuf, size_t size, loff_t *ppos)

{

struct _css_dev_ *css_dev = _to_css_dev_(file);

DEBUG_CSS("css_dev->name = %s",css_dev->name);

return size;

}

static int _css_release (struct inode *inode, struct file *file)

{

struct _css_dev_ *css_dev = _to_css_dev_(file);

DEBUG_CSS("css_dev->name = %s",css_dev->name);

DEBUG_CSS("css_dev->src_buf[%d] = %c",css_dev->buf_size - 1,

((char*)css_dev->src_buf)[css_dev->buf_size - 1]);

DEBUG_CSS("css_dev->dst_buf[%d] = %c",css_dev->buf_size - 1,

((char*)css_dev->dst_buf)[css_dev->buf_size - 1]);

return 0;

}

static int _css_set_buf_type(struct file *file, enum _css_dev_buf_type buf_type)

{

unsigned long flags;

struct _css_dev_ *css_dev = _to_css_dev_(file);

DEBUG_CSS("buf_type=%d",buf_type);

if(buf_type >= _CSS_DEV_MAX_BUF_TYPE){

DEBUG_CSS_ERR("invalid buf type");

return -EINVAL;

}

spin_lock_irqsave(&css_dev->slock,flags);

css_dev->buf_type = buf_type;

spin_unlock_irqrestore(&css_dev->slock,flags);

return 0;

}

static void css_dma_async_tx_callback(void *dma_async_param)

{

struct _css_dev_ *css_dev = (struct _css_dev_ *)dma_async_param;

complete(&css_dev->dma_m2m_ok);

}

static int css_dmaengine_cpy(struct _css_dev_ *css_dev)

{

dma_cookie_t cookie;

enum dma_status dma_status;

do_gettimeofday(&css_dev->tv_start);

css_dev->dma_m2m_desc = css_dev->dma_dev->device_prep_dma_memcpy(css_dev->dma_m2m_chan,

css_dev->dst_addr,

css_dev->src_addr,

css_dev->buf_size,0);

if(!css_dev->dma_m2m_desc){

DEBUG_CSS_ERR("device_prep_dma_memcpy error");

return -EINVAL;

}

css_dev->dma_m2m_desc->callback = css_dma_async_tx_callback;

css_dev->dma_m2m_desc->callback_param = css_dev;

init_completion(&css_dev->dma_m2m_ok);

cookie = dmaengine_submit(css_dev->dma_m2m_desc);

if(dma_submit_error(cookie)){

DEBUG_CSS("dmaengine_submit error");

return -EINVAL;

}

dma_async_issue_pending(css_dev->dma_m2m_chan);

wait_for_completion(&css_dev->dma_m2m_ok);

dma_status = dma_async_is_tx_complete(css_dev->dma_m2m_chan,cookie,NULL,NULL);

if(DMA_COMPLETE != dma_status){

DEBUG_CSS("error:dma_status = %d",dma_status);

}

do_gettimeofday(&css_dev->tv_end);

DEBUG_CSS("dma used time = %ld us",

(css_dev->tv_end.tv_sec - css_dev->tv_start.tv_sec)*1000000

+ (css_dev->tv_end.tv_usec - css_dev->tv_start.tv_usec));

return 0;

}

static long _css_unlocked_ioctl(struct file *file, unsigned int cmd, unsigned long arg)

{

int ret = 0;

struct _css_dev_ *css_dev = _to_css_dev_(file);

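char c;

switch(cmd){

case CSI_DMA_SET_CUR_MAP_BUF_TYPE_IOCTL:

ret = _css_set_buf_type(file,(enum _css_dev_buf_type)arg);

break;

case CSI_DMA_FILL_CHAR_AND_RUN_DMAENGIEN_IOCTL:

if(css_dev->src_buf == NULL || css_dev->dst_buf == NULL){

DEBUG_CSS("buf is NULL");

return -ENOMEM;

}

/* arg is a user-space pointer to the fill character: fetch it with get_user() instead of dereferencing it in the kernel */

if(get_user(c,(char __user *)arg))

return -EFAULT;

memset(css_dev->src_buf,c,css_dev->buf_size);

ret = css_dmaengine_cpy(css_dev);

break;

case CSI_DMA_FILL_CHAR_RUN_MEMCPY_IOCTL:

if(css_dev->src_buf == NULL || css_dev->dst_buf == NULL){

DEBUG_CSS("buf is NULL");

return -ENOMEM;

}

if(get_user(c,(char __user *)arg))

return -EFAULT;

do_gettimeofday(&css_dev->tv_start);

memset(css_dev->src_buf,c,css_dev->buf_size);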

do_gettimeofday(&css_dev->tv_end);

DEBUG_CSS("memset used time = %ld us",

(css_dev->tv_end.tv_sec - css_dev->tv_start.tv_sec)*1000000

+ (css_dev->tv_end.tv_usec - css_dev->tv_start.tv_usec));

do_gettimeofday(&css_dev->tv_start);

memcpy(css_dev->dst_buf,css_dev->src_buf,css_dev->buf_size);

do_gettimeofday(&css_dev->tv_end);

DEBUG_CSS("memcpy used time = %ld us",

(css_dev->tv_end.tv_sec - css_dev->tv_start.tv_sec)*1000000

+ (css_dev->tv_end.tv_usec - css_dev->tv_start.tv_usec));

break;

default:

DEBUG_CSS_ERR("unknown cmd = %x",cmd);

ret = -EINVAL;

break;

}

return ret;

}

static struct _css_dev_ _global_css_dev = {

.name = "lkmao",

._css_fops = {

.owner = THIS_MODULE,

.mmap = _css_mmap,

.open = _css_open,

.release = _css_release,

.read = _css_read,

.write = _css_write,

.unlocked_ioctl = _css_unlocked_ioctl,

},

.misc = {

.minor = MISC_DYNAMIC_MINOR,

.name = "css_dma",

},

.buf_type = _CSS_DEV_UNKNOWN_BUF_TYPE,

.user_src_buf_vaddr = NULL,

.user_dst_buf_vaddr = NULL,

.m2m_dma_data = {

.peripheral_type = IMX_DMATYPE_MEMORY,

.priority = DMA_PRIO_HIGH,

},

};

static bool css_dma_filter_fn(struct dma_chan *chan, void *filter_param)

{

if(!imx_dma_is_general_purpose(chan)){

DEBUG_CSS("css_dma_filter_fn error");

return false;

}

chan->private = filter_param;

return true;

}

static int css_dmaengine_init(struct _css_dev_ *css_dev)

{

dma_cap_mask_t dma_m2m_mask;

struct dma_slave_config dma_m2m_config = {0};

css_dev->m2m_dma_data.peripheral_type = IMX_DMATYPE_MEMORY;

css_dev->m2m_dma_data.priority = DMA_PRIO_HIGH;

dma_cap_zero(dma_m2m_mask);

dma_cap_set(DMA_MEMCPY,dma_m2m_mask);

css_dev->dma_m2m_chan = dma_request_channel(dma_m2m_mask,css_dma_filter_fn,&css_dev->m2m_dma_data);

if(!css_dev->dma_m2m_chan){

DEBUG_CSS("dma_request_channel error");

return -EINVAL;

}

dma_m2m_config.direction = DMA_MEM_TO_MEM;

dma_m2m_config.dst_addr_width = DMA_SLAVE_BUSWIDTH_4_BYTES;

dmaengine_slave_config(css_dev->dma_m2m_chan,&dma_m2m_config);

css_dev->dma_dev = css_dev->dma_m2m_chan->device;

css_dev->chan_dev = css_dev->dma_m2m_chan->device->dev;

css_dev->src_buf = dma_alloc_coherent(css_dev->chan_dev,

PAGE_ALIGN(css_dev->buf_size),

&css_dev->src_addr,

GFP_DMA | GFP_KERNEL);

if(css_dev->src_buf == NULL){

DEBUG_CSS("dma_alloc_coherent error");

dma_release_channel(css_dev->dma_m2m_chan);

return -ENOMEM;

}

css_dev->dst_buf = dma_alloc_coherent(css_dev->chan_dev,

PAGE_ALIGN(css_dev->buf_size),

&css_dev->dst_addr,

GFP_DMA | GFP_KERNEL);

if(css_dev->dst_buf == NULL){

DEBUG_CSS("dma_alloc_coherent error");

/* undo the first allocation and the channel request */

dma_free_coherent(css_dev->chan_dev, PAGE_ALIGN(css_dev->buf_size), css_dev->src_buf, css_dev->src_addr);

dma_release_channel(css_dev->dma_m2m_chan);

return -ENOMEM;

}

return 0;

}

static int css_dev_init(struct platform_device *pdev,struct _css_dev_ *css_dev)

{

css_dev->misc.fops = &css_dev->_css_fops;

pr_debug("css_init init ok");

mutex_init(&css_dev->open_lock);

spin_lock_init(&css_dev->slock);

printk("KERN_ALERT = %s",KERN_ALERT);

css_dev->dev = &pdev->dev;

css_dev->buf_size = CSS_DMA_IMAGE_SIZE;

css_dev->buf_size_order = get_order(css_dev->buf_size);

if(misc_register(&css_dev->misc) != 0){

DEBUG_CSS("misc_register error");

return -EINVAL;

}

platform_set_drvdata(pdev,css_dev);

/* without a channel and buffers the device is useless, so fail the probe */

if(css_dmaengine_init(css_dev)){

misc_deregister(&css_dev->misc);

return -ENODEV;

}

DEBUG_CSS("32bit:css_dev->src_buf = %p,css_dev->dst_buf = %p",css_dev->src_buf,css_dev->dst_buf);

DEBUG_CSS("css_dev->src_addr = %pad,css_dev->dst_addr = %pad",&css_dev->src_addr,&css_dev->dst_addr);

return 0;

}

static int css_probe(struct platform_device *pdev)

{

struct _css_dev_ *css_dev = (struct _css_dev_ *)&_global_css_dev;

if(css_dev_init(pdev,css_dev)){

return -EINVAL;

}

DEBUG_CSS("init ok");

return 0;

}

static int css_remove(struct platform_device *pdev)

{

struct _css_dev_ *css_dev = &_global_css_dev;

/* unregister first so that no new open()/mmap() can reach the buffers being freed */

misc_deregister(&css_dev->misc);

dma_free_coherent(css_dev->chan_dev,

PAGE_ALIGN(css_dev->buf_size),

css_dev->src_buf,

css_dev->src_addr);

dma_free_coherent(css_dev->chan_dev,

PAGE_ALIGN(css_dev->buf_size),

css_dev->dst_buf,

css_dev->dst_addr);

dma_release_channel(css_dev->dma_m2m_chan);

DEBUG_CSS("exit ok");

return 0;

}

static const struct of_device_id css_of_ids[] = {

{.compatible = "css_dma"},

{},

};

MODULE_DEVICE_TABLE(of,css_of_ids);

static struct platform_driver css_platform_driver = {

.probe = css_probe,

.remove = css_remove,

.driver = {

.name = "css_dma",

.of_match_table = css_of_ids,

.owner = THIS_MODULE,

},

};

static int __init css_init(void)

{

int ret_val;

ret_val = platform_driver_register(&css_platform_driver);

if(ret_val != 0){

DEBUG_CSS("platform_driver_register error");

return ret_val;

}

DEBUG_CSS("platform_driver_register ok");

return 0;

}

static void __exit css_exit(void)

{

platform_driver_unregister(&css_platform_driver);

}

module_init(css_init);

module_exit(css_exit);

MODULE_LICENSE("GPL");
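To have a reference point for the kernel numbers, here is a userspace sketch of the memcpy path: fill src with a character, copy it, verify the copy the same way the app's loops do, and time only the memcpy. It is an assumption-laden baseline, not part of the original test: it uses CLOCK_MONOTONIC instead of do_gettimeofday(), ordinary cached malloc() memory instead of the driver's coherent buffers, and the helper names (elapsed_us, verify_fill, memcpy_baseline) are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <stdlib.h>
#include <string.h>
#include <time.h>

/* Microseconds between two samples; summing in ns avoids a negative usec term. */
static long elapsed_us(struct timespec start, struct timespec end)
{
    long long ns = (long long)(end.tv_sec - start.tv_sec) * 1000000000LL
                 + (end.tv_nsec - start.tv_nsec);
    return (long)(ns / 1000);
}

/* Index of the first byte that differs from c, or -1 if all bytes match. */
static long verify_fill(const char *buf, size_t len, char c)
{
    for (size_t i = 0; i < len; i++)
        if (buf[i] != c)
            return (long)i;
    return -1;
}

/* Fill src with c, copy to dst, verify dst.
 * Returns the memcpy time in microseconds, or -1 on failure. */
static long memcpy_baseline(size_t len, char c)
{
    char *src = malloc(len);
    char *dst = malloc(len);
    struct timespec t0, t1;
    long us = -1;

    assert(len > 0);
    if (src && dst) {
        memset(src, c, len);
        clock_gettime(CLOCK_MONOTONIC, &t0);
        memcpy(dst, src, len);
        clock_gettime(CLOCK_MONOTONIC, &t1);
        if (verify_fill(dst, len, c) < 0)
            us = elapsed_us(t0, t1);
    }
    free(src);
    free(dst);
    return us;
}
```

For example, memcpy_baseline(1280 * 800, 'a') returns the copy time for one 1,024,000-byte frame. Because malloc() memory is cached, this figure is expected to differ from the driver's memcpy on coherent (uncached) buffers; that difference is what the comparison is meant to expose.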

Makefile:

export ARCH=arm

export CROSS_COMPILE=arm-linux-gnueabihf-

KERN_DIR = /home/lkmao/imx/linux/linux-imx

FILE_NAME=csi_single

obj-m += $(FILE_NAME).o

APP_NAME=csi_single_mmap

all:

make -C $(KERN_DIR) M=$(shell pwd) modules

sudo cp $(FILE_NAME).ko /big/nfsroot/jiaocheng_rootfs/home/root/

sudo scp $(FILE_NAME).ko root@192.168.0.3:/home/root/

arm-linux-gnueabihf-gcc -o $(APP_NAME) $(APP_NAME).c

sudo cp $(APP_NAME) /big/nfsroot/jiaocheng_rootfs/home/root/

sudo scp $(APP_NAME) root@192.168.0.3:/home/root/

.PHONY:clean

clean:

make -C $(KERN_DIR) M=$(shell pwd) clean

rm $(APP_NAME) -rf

Device tree configuration

sdma_m2m{

compatible = "css_dma";

};

Test procedure and results:

1 Build with make.

2 On the board, load the module: insmod csi_single.ko

root@ATK-IMX6U:~# insmod csi_single.ko

[ 41.430795] KERN_ALERT = 1

[ 41.433620] info:/big/csi_driver/css_dma/csi_single.c:css_dev_get_dma_addr:267: 32bit:p = 94c00000,dma_addr = 94c00000

[ 41.447390] info:/big/csi_driver/css_dma/csi_single.c:css_dev_init:337: 32bit:css_dev->src_buf = 94d00000,css_dev->dst_buf = 94e00000

[ 41.463653] info:/big/csi_driver/css_dma/csi_single.c:css_probe:355: init ok

[ 41.474842] info:/big/csi_driver/css_dma/csi_single.c:css_init:398: platform_driver_register ok

root@ATK-IMX6U:~#

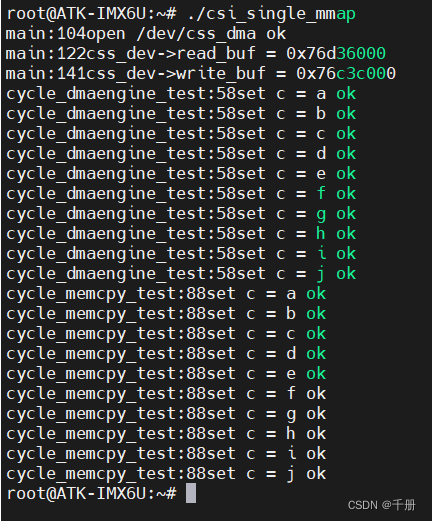

3 Run the test app: ./csi_single_mmap

The output is shown in the figure below:

The test has two parts: copying data with the DMA engine, and copying data with memcpy.

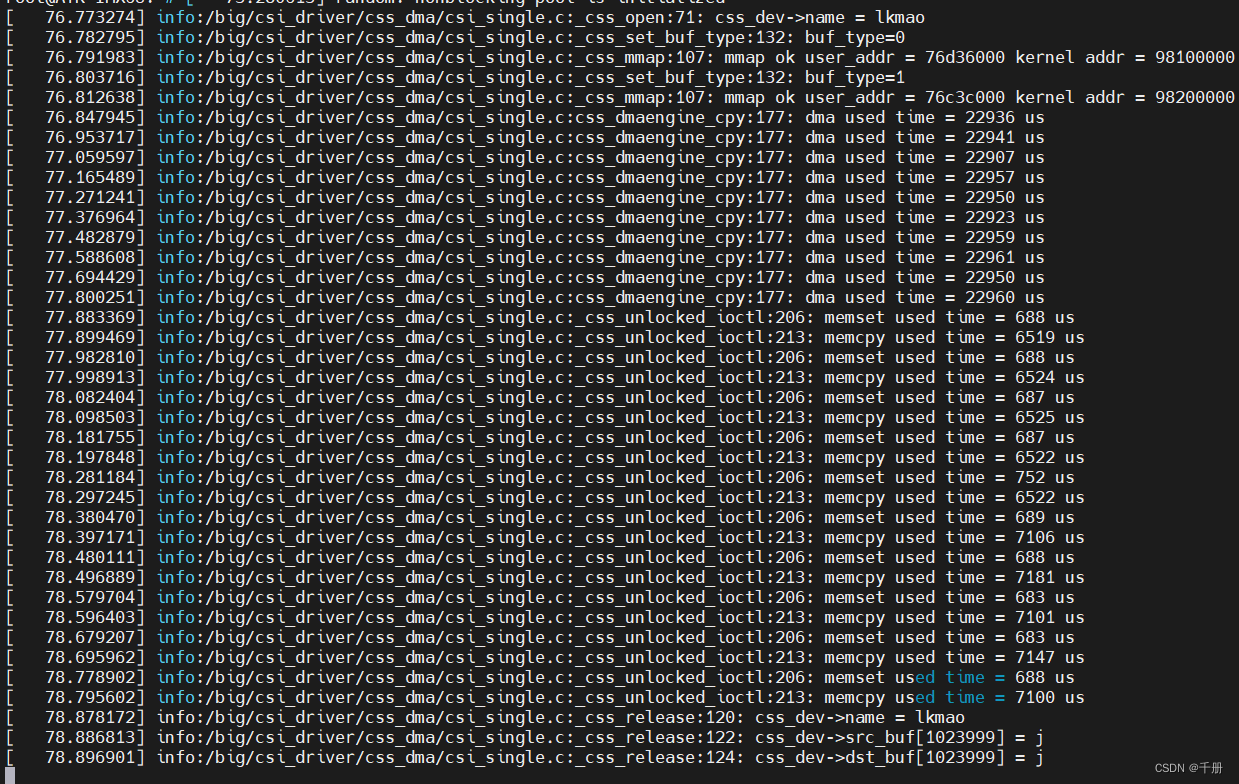

Debug output from the driver:

Debug output from the application:

Summary

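The DMA engine took a little over 20 ms and memcpy about 7 ms, both slower than in the part three test; memset took 0.7 ms. A likely reason for the slower memcpy is that dma_alloc_coherent() memory is mapped uncached on the i.MX6, so every CPU read of src_buf has to go all the way to DRAM, whereas part three worked on cached pages mapped with dma_map_single().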
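For scale, the measured times convert to throughput as follows. This is back-of-the-envelope arithmetic on the 1,024,000-byte buffer with MB = 10^6 bytes; it counts each byte once, although memcpy both reads and writes it, and the DMA figure is an upper bound because the time was only given as "over 20 ms".

```latex
\begin{aligned}
S &= 1280 \times 800 = 1\,024\,000\ \text{B} \\
\text{memset:}\quad S / 0.7\ \text{ms} &\approx 1.46\ \text{GB/s} \\
\text{memcpy:}\quad S / 7\ \text{ms} &\approx 146\ \text{MB/s} \\
\text{DMA engine } (t > 20\ \text{ms}):\quad S / t &< 51.2\ \text{MB/s}
\end{aligned}
```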
