TTS业务指标计算

1.创建vip_open_num数据表∶

hive> create table vip_open_num(reporttime string,course_id string,account_state string,num int,open_type string) row format delimited fields terminated by '|';

2计算VIP学员开通数量(正式+试听)

hive> insert into vip_open_num select reporttime,course_id,account_state,count(*),"new_add" from vip where reporttime = '2022-10-09' and operation_mode = 'add' and study_mode = 'vip' group by reporttime,course_id,account_state;

hive> select * from vip_open_num;

OK

2022-10-09 big audition 1 new_add

2022-10-09 bilg normal 1 new_add

2022-10-09 jsd audition 1 new_add

2022-10-09 jsd normal 1 new_add

Time taken: 2.707 seconds, Fetched: 4 row(s)

3.脱产转vip

insert into vip_open_num select reporttime,after_course_id,account_state,count(*),"offline2vip" from vip where reporttime = '2022-10-09' and operation_mode = 'update' and before_class_study_mode='offline' and after_class_study_mode='vip' group by reporttime,after_course_id,account_state;

4.vip转vip转入

insert into vip_open_num select reporttime,after_course_id,account_state,count(*),"vip2vip" from vip where reporttime = '2022-10-09' and operation_mode = 'update' and before_class_study_mode='vip' and after_class_study_mode='vip' and before_course_id != after_course_id group by reporttime,after_course_id,account_state;

5.查询指定课程方向新增vip开通数量

select course_id,sum(num) from vip_open_num where reportTime = '2022-10-09' and course_id= 'big';

select sum(num) from vip_open_num where reportTime = '2022-10-09' and course_id= 'big';

6.查询整个TTS新增vip开通数量

select sum(num) from vip_open_num where reportTime ='2022-10-09' and open_type !='vip2vip';

数据仓库的概念和维度建模

数据仓库是用于数据分析,为企业的各种决策提供数据分析的支撑,以及提供各种分析报表。

数据仓库是不是数据库?

关系型数据库(Mysql,Oracle)按用途来划分,可以归为两种:

①业务型数据库

用于日常的业务数据的CRUD,而且事务型操作较多。

②分析型数据库

用于历史数据分析,大部分的操作都是读数据,而事务性操作很少(因为历史数据大部分都为读)

前者叫数据库(用于业务处理),后者叫数据仓库(用于分析处理)

数据仓库的特点:

1.面向主题(维度)设计

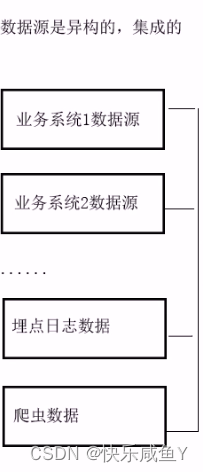

2.数据源是异构的,集成的。所以数据仓库的数据规模要远远大于数据库

3.数据仓库里的数据一般都是历史数据

4.数据仓库操作数据的特点:大多数为读,即查询分析

5.数据仓库查询分析数据时,一般都带有时间维度的

为什么要建立数仓?

比如我们查询每一个月的PV,UV是多少,这种查询,完全可以从业务数库来查询得到。

但是面对复杂或细粒度查询时,比如: 20~30岁女性用户在过去五年的第一季度化妆品类商品的购买行为与公司进行的促销活动方案之间的关系。

这种查询就需要从数据仓库里查询得到。

建立数据仓库的目的—>是为了更好的查询和分析业务数据—>从而为公司的决策提供有力的支撑

==============================

详细说明

一、数据仓库是什么

可以理解为∶面向分析的存储系统。

也就是说数仓是存数据的,企业的各种数据往里面塞,主要目的是为了有效分析数据,后续会基于它产出供分析挖掘的数据,或者数据应用需要的数据,如企业的分析性报告和各类报表,为企业的决策提供支持。

先看关系型数据库,它可以被划分为两大基本类型∶操作型数据库和分析型数据库。

1.操作型数据库

主要面向应用,用于业务支撑,支持对实际业务的处理,也可以叫业务型数据库。

可以理解为通常意义上的数据库(后端开发同学口中的经常提到的就是这种)。

2.分析型数据库

主要面向数据分析,侧重决策支持,作为公司的单独数据存储,负责利用历史数据对公司各主题域进分析

由于分析型数据库中的操作都是查询,因此也就不需要严格满足关系型数据库一些设计规范,这样的情况下再将它归为数据库不太合适,也容易不引起混淆,所以称之为数据仓库。

这里可以说一下,数据处理大致可以分成两大类:OLTP(联机事务处理)和OLAP(联机分析处理)。

1 ) OLTP(联机事务处理)就是操作型数据库的主要应用,更侧重于基本的、日常的事务处理,包括数据的增删改查。

2 ) OLAP(联机分析处理)就是分析型数据库的主要应用,以多维度的方式分析数据,这个后续会整理。

二、数据仓库有什么特点

相对于数据库,数据仓库有以下特点

1)面向主题

数据仓库通过一个个主题域将多个业务系统的数据加载到一起,为了各个主题(如∶用户、订单、商品等)进行分析而建,操作型数据库是为了支撑各种业务而建立。

2)集成性

数据仓库会将不同源数据库中的数据汇总到一起。

3)历史性

较之操作型数据库,数据仓库的数据是为企业数据分析而建立,所以数据被加载后一般情况下将被长期保留,前者通常保存几个月,后者可能几年甚至几十年。

4 )时变性

是指数据仓库包含来自其时间范围不同时间段的数据快照,有了这些数据快照以后,用户便可将其汇总,生成各历史阶段的数据分析报告。

5)稳定性

数据仓库中的数据一般仅执行查询操作,很少会有删除和更新。但是需定期加载和刷新数据。

三、为什么搭建数据仓库

简单来说,就是为了有效分析数据。

你说直接从业务数据库中取数据来做分析?

也不是不可以,就是业务系统多,业务复杂时,会发现结构复杂,数据脏乱,难以理解,缺少历史,大规模查询缓慢这些问题。

举个最常见的例子,拿电商行业来说,基本每家电商公司都会经历,从只需要业务数据库到要数据仓库的阶段。

第一阶段,电商早期启动非常容易,入行门槛低。找个外包团队,做了一个可以下单的网页前端+几台服务器+一个MySQL,就能开门迎客了。这好比手工作坊时期。

第二阶段,流量来了,客户和订单都多起来了,普通查询已经有压力了,这个时候就需要升级架构变成多台服务器和多个业务数据库(量大+分库分表),这个阶段的业务数字和指标还可以勉强从业务数据库里查询。初步进入工业化。

第三个阶段,一般需要3-5年左右的时间,随着业务指数级的增长,数据量的会陡增,公司角色也开始多了起来,大家需要面临的问题越来越复杂,越来越深入。高管们关心的问题,从最初非常粗放的:“昨天的收入是多少”、“上个月的PV、UV是多少”,逐渐演化到非常精细化和具体的用户的集群分析,特定用户在某种使用场景中,例如“20~30岁女性用户在过去五年的第一季度化妆品类商

品的购买行为与公司进行的促销活动方案之间的关系”。

========================

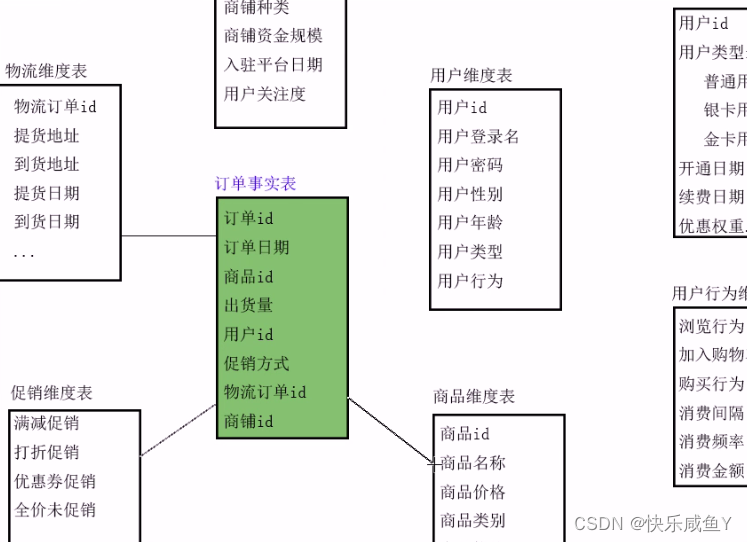

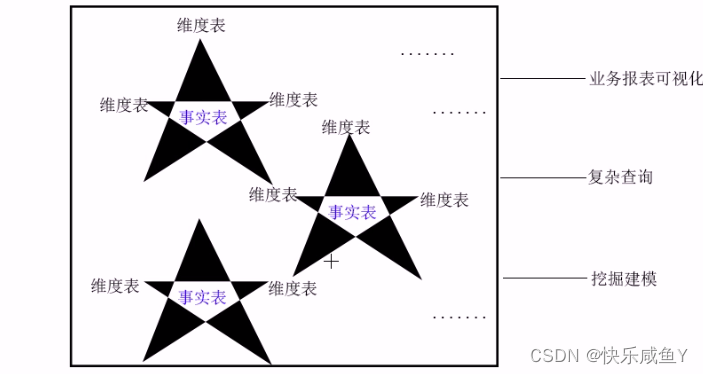

数据仓库的维度建模

所谓的维度建模,指的是围绕一张事实表,拆分为多张维度表,通过外键关联起来。维度建模有两种模型:

①星型图模型

各维度表直接和事实表相连

②雪花图模型

存在一个或多个维度表,通过另外的维度表和事实表。比星型图更复杂

===================

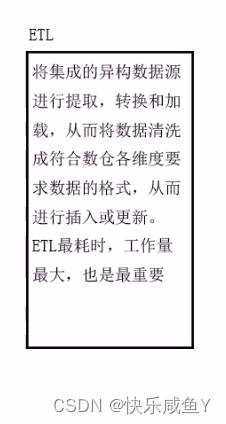

数据仓库的整体架构

用表的方式+sql方式来管理、查询和分析的技术框架都可以当数仓

数据仓库(MySQL,Hive,Hbase+Phoenix,SparkSql,Shark,Impala)

以上数仓技术的使用场景有区别,比如MysqI和Hive

1.从DB规模,Hive要远大于Mysql

2.从扩展的灵活性,Hive要优于Mysql,因为Hive底层基于Hadoop,所以扩展(加节点)很方便

3.从查询的即时性,Mysql要优于Hive。

一些关键的单词:

1.DW Data Warehouse数据仓库

2.DM Data Market数据集市(数据仓库的子集)

3.DC Data Cube数据立方体

===========================

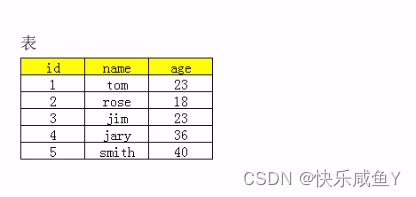

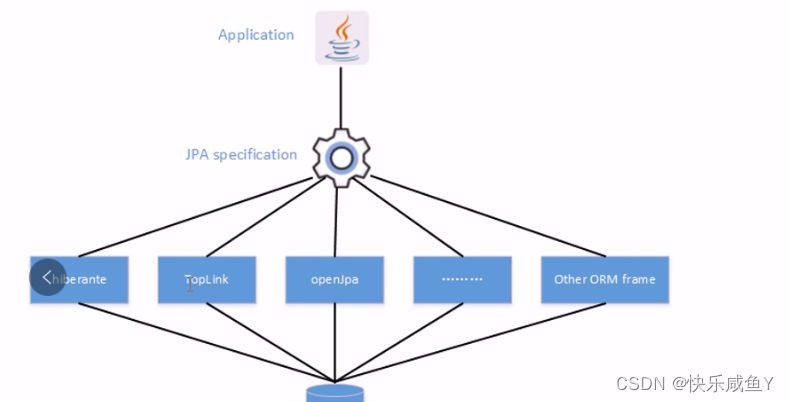

JPA

1.对于新增

insert表名values() ;如果通过JPA规则:

①创建Student类{

private int id;

private String name;

privat int age ;

}

②执行插入方法:

save(Student stu)

实际上底层会解析为Sql执行

即通过JPA规范,我们就是通过对象+方法来操作数据库,没有sql语句了

SpringData 支持JPA规范。

Sun统一了JPA规范,其中最强大的是Hibernate。所以我们用的都是Hibernate提供的JPA规范。然后通过SpringData做了封装和实现

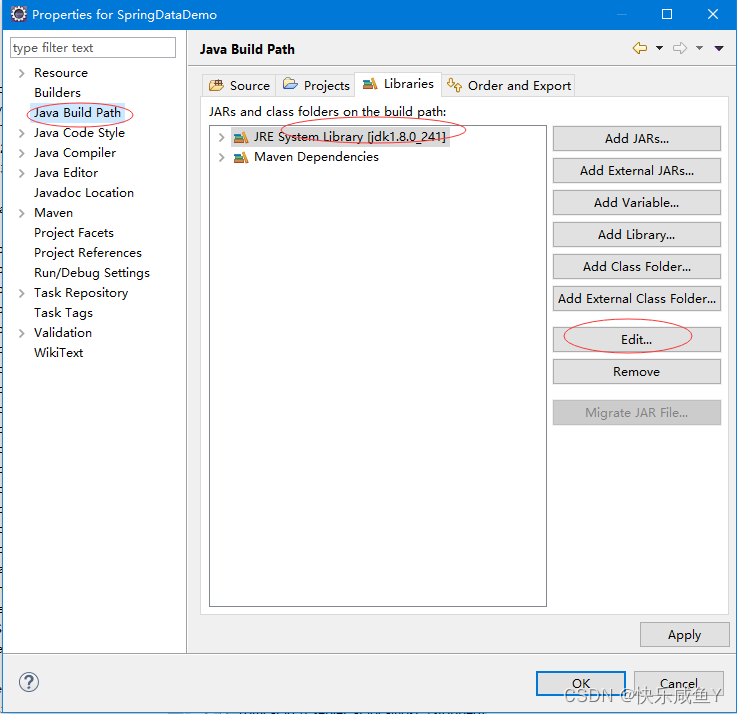

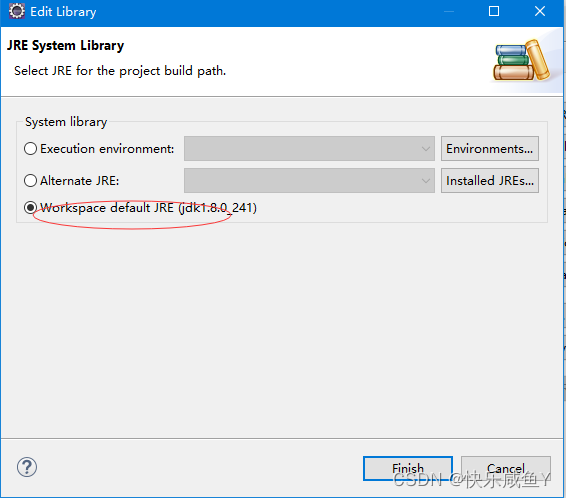

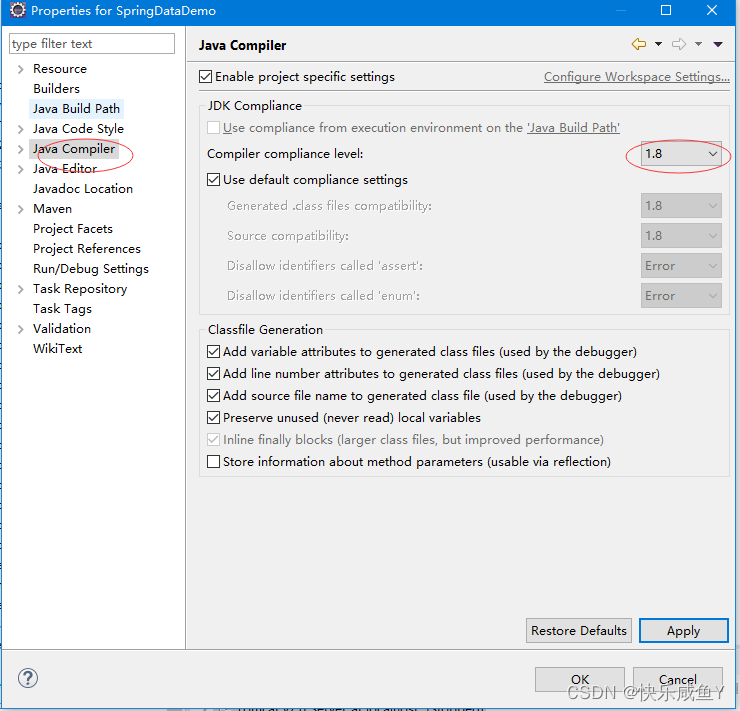

创建一个maven项目(并修改jdk为1.8)

Hibernate 操作数据库(mysql)

创建resources目录(存放spring配置)

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns:aop="http://www.springframework.org/schema/aop"

xmlns:context="http://www.springframework.org/schema/context"

xmlns:jdbc="http://www.springframework.org/schema/jdbc"

xmlns:tx="http://www.springframework.org/schema/tx"

xmlns:jpa="http://www.springframework.org/schema/data/jpa"

xsi:schemaLocation="

http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd

http://www.springframework.org/schema/aop http://www.springframework.org/schema/aop/spring-aop.xsd

http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context.xsd

http://www.springframework.org/schema/jdbc http://www.springframework.org/schema/jdbc/spring-jdbc.xsd

http://www.springframework.org/schema/tx http://www.springframework.org/schema/tx/spring-tx.xsd

http://www.springframework.org/schema/data/jpa

http://www.springframework.org/schema/data/jpa/spring-jpa.xsd">

<!--准备一个数据源对象 -->

<bean id="dataSource" class="org.springframework.jdbc.datasource.DriverManagerDataSource">

<!-- 注册驱动类 -->

<property name="driverClassName" value="com.mysql.jdbc.Driver"></property>

<!-- 登录数据库的用户名和密码 -->

<property name="username" value="root"></property>

<property name="password" value="root"></property>

<!-- url -->

<property name="url" value="jdbc:mysql://hadoop01:3306/spring_data"></property>

</bean>

<!--二:配置spring data jpa的核心 LocalContainerEntityManagerFactoryBean-->

<bean id="entityManagerFactory" class="org.springframework.orm.jpa.LocalContainerEntityManagerFactoryBean">

<!--1:注入数据源-->

<property name="dataSource" ref="dataSource"></property>

<!--2:指定JPA供应商的适配器,用来设置hibernate中的参数设置

generateDdl:true:表示没有数据库表,自动创建数据库表

showSql:true:表示控制台会显示hibernate底层生成的sql语句,用于开发测试

database:MYSQL:表示指定底层操作的数据库是MYSQL

databasePlatform:org.hibernate.dialect.MySQL5Dialect,方言的作用用于查询

-->

<property name="jpaVendorAdapter">

<bean class="org.springframework.orm.jpa.vendor.HibernateJpaVendorAdapter"/>

</property>

<!--2:指定包扫描,扫描com.atguigu.domain,扫描所有实体类,映射数据库表-->

<property name="packagesToScan" value="cn.com.domain"></property>

<property name="jpaProperties">

<props>

<!-- 二级缓存相关 -->

<!--

<prop key="hibernate.cache.region.factory_class">org.hibernate.cache.ehcache.EhCacheRegionFactory</prop>

<prop key="net.sf.ehcache.configurationResourceName">ehcache-hibernate.xml</prop>

-->

<!-- 生成的数据表的列的映射策略 -->

<prop key="hibernate.ejb.naming_strategy">org.hibernate.cfg.ImprovedNamingStrategy</prop>

<!-- hibernate 基本属性 -->

<prop key="hibernate.dialect">org.hibernate.dialect.MySQL5InnoDBDialect</prop>

<prop key="hibernate.show_sql">true</prop>

<prop key="hibernate.format_sql">true</prop>

<prop key="hibernate.hbm2ddl.auto">update</prop>

</props>

</property>

</bean>

<!-- 3. 配置事务管理器 -->

<bean class="org.springframework.orm.jpa.JpaTransactionManager" id="transactionManager">

<property name="entityManagerFactory" ref="entityManagerFactory"/>

</bean>

<!-- 4. 配置支持注解的事务 -->

<tx:annotation-driven transaction-manager="transactionManager"/>

<!-- 5. 配置 SpringData -->

<!-- 加入 jpa 的命名空间 -->

<!-- base-package: 扫描 Repository Bean 所在的 package -->

<jpa:repositories base-package="cn.com"

entity-manager-factory-ref="entityManagerFactory"></jpa:repositories>

<!--组件的扫描,但是如果使用spring整合junit测试,该行代码可以不写-->

<context:component-scan base-package="cn.com"/>

<context:component-scan base-package="cn.com.service"/>

</beans>

建一个数据库

mysql> create database spring_data;

Query OK, 1 row affected (0.26 sec)

pom文件

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>cn.com</groupId>

<artifactId>SpringDataDemo</artifactId>

<version>0.0.1-SNAPSHOT</version>

<packaging>jar</packaging>

<name>SpringDataDemo</name>

<url>http://maven.apache.org</url>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

</properties>

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.10</version>

<scope>test</scope>

</dependency>

<!-- mysql数据库驱动 -->

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.38</version>

</dependency>

<!-- Spring -->

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-context</artifactId>

<version>4.3.5.RELEASE</version>

</dependency>

<!-- SpringData JDBC -->

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-jdbc</artifactId>

<version>4.3.5.RELEASE</version>

</dependency>

<!--SpringData Jpa-->

<dependency>

<groupId>org.springframework.data</groupId>

<artifactId>spring-data-jpa</artifactId>

<version>1.3.0.RELEASE</version>

</dependency>

<dependency>

<groupId>org.hibernate</groupId>

<artifactId>hibernate-entitymanager</artifactId>

<version>4.3.6.Final</version>

</dependency>

</dependencies>

</project>

代码

package cn.com.domain;

import javax.persistence.Entity;

import javax.persistence.GeneratedValue;

import javax.persistence.Id;

import javax.persistence.Table;

/**

* 创建JPA的实体对象类,对应数据库的一张表

* 当前类的一个实例对象就对应表中的一条记录

* 知识点

* 1.@Entity表示当前类是JPA的实体对象类

* 2.@Table 可以指定对应的表名

* 3.@Id表示主键属性。必须加在get属性名()方法上

* 4.@GeneratedValue表示主键自动递增

*

*

*/

@Entity

@Table(name="stu")

public class Student {

private Integer id;

private String name;

private Integer age;

public Student() {

super();

}

public Student(Integer id, String name, Integer age) {

super();

this.id = id;

this.name = name;

this.age = age;

}

@GeneratedValue

@Id

public Integer getId() {

return id;

}

public void setId(Integer id) {

this.id = id;

}

public String getName() {

return name;

}

public void setName(String name) {

this.name = name;

}

public Integer getAge() {

return age;

}

public void setAge(Integer age) {

this.age = age;

}

@Override

public String toString() {

return "Student [id=" + id + ", name=" + name + ", age=" + age + "]";

}

}

工厂

package cn.com.service;

import java.util.List;

import org.springframework.data.jpa.repository.Modifying;

import org.springframework.data.jpa.repository.Query;

import org.springframework.data.repository.Repository;

import org.springframework.transaction.annotation.Transactional;

import cn.com.domain.Student;

/**

* 此接口用于编写符合PA规范的方法,实现CRUD

* 第一个泛型:实体对象类的class

* 第二个泛型:主键类型

*

*

*

*

*

*

*/

public interface StudentRepository extends Repository<Student, Integer>{

void save(Student s1);

List<Student> findAll();

Student findById(Integer id);

List<Student> findByName(String name);

List<Student> findByNameAndAge(String name, Integer id);

List<Student> findByAgeGreaterThan(int i);

List<Student> findByAgeBetween(int i, int j);

@Query(nativeQuery=true,value="select * from stu")

List<Student> queryAll();

@Query(nativeQuery=true,value="select count(*) from stu")

int queryCount();

@Query(nativeQuery=true,value="select * from stu where name=?1")

List<Student> selectByName(String name);

@Query(nativeQuery=true,value="select * from stu where name=?1 and age=?2")

List<Student> selectByNameAndAge(String name, int age);

@Query(nativeQuery=true,value="select * from stu order by age asc")

List<Student> selectByAgeAndAsc();

/**

* 如果要实现更新或删除,

* @Transactional---开启事务

* @Modifying----允许修改

*/

@Transactional@Modifying

@Query(nativeQuery=true,value="delete from stu;")

public void delAl1();

}

启动类

package cn.com.service;

import java.util.List;

import org.junit.Test;

import org.springframework.aop.framework.autoproxy.ProxyCreationContext;

import org.springframework.context.ApplicationContext;

import org.springframework.context.support.ClassPathXmlApplicationContext;

import cn.com.domain.Student;

public class TestDemo {

//建表

@Test

public void connect(){

ApplicationContext context=new ClassPathXmlApplicationContext("applicationContext.xml");

StudentRepository proxy=context.getBean(StudentRepository.class);

}

//

@Test

public void save_update(){

ApplicationContext context=new ClassPathXmlApplicationContext("applicationContext.xml");

StudentRepository proxy=context.getBean(StudentRepository.class);

//实现新增数据

//如果通过构造方法传数据,必须再额外声明一个空构造器

Student s2=new Student(2,"rose",18);

Student s3=new Student(3,"jim",30);

Student s4=new Student(4,"jary2",25);

// s1.setId(1);

// s1.setName("tom");

// s1.setAge(23);

proxy.save(s2);

proxy.save(s3);

proxy.save(s4);

//根据主键进行更新,如果主键有则更新,不存在则插入

Student s1=new Student(1,"tom",15);

proxy.save(s1);

}

//查询

@Test

public void find_all(){

ApplicationContext context=new ClassPathXmlApplicationContext("applicationContext.xml");

StudentRepository proxy=context.getBean(StudentRepository.class);

//查询整表

//返回实体对象的结果值

List<Student> results=proxy.findAll();

System.out.println(results);

}

//

@Test

public void find_by_id(){

ApplicationContext context=new ClassPathXmlApplicationContext("applicationContext.xml");

StudentRepository proxy=context.getBean(StudentRepository.class);

//根据id条件查询

Student result=proxy.findById(2);

System.out.println(result);

}

//根据姓名查数据

@Test

public void find_by_name(){

ApplicationContext context=new ClassPathXmlApplicationContext("applicationContext.xml");

StudentRepository proxy=context.getBean(StudentRepository.class);

//根据name条件查询

List<Student> results=proxy.findByName("tom");

System.out.println(results);

}

//根据多个条件查数据

@Test

public void find_by_name_and_age(){

ApplicationContext context=new ClassPathXmlApplicationContext("applicationContext.xml");

StudentRepository proxy=context.getBean(StudentRepository.class);

//根据name条件查询

//中间用And连接

//此外,传参时顺序和属性名一致

List<Student> results=proxy.findByNameAndAge("tom",15);

System.out.println(results);

}

//

@Test

public void find_by_age_greaterThan(){

ApplicationContext context=new ClassPathXmlApplicationContext("applicationContext.xml");

StudentRepository proxy=context.getBean(StudentRepository.class);

List<Student> results=proxy.findByAgeGreaterThan(20);

System.out.println(results);

}

@Test

public void find_by_age_between(){

ApplicationContext context=new ClassPathXmlApplicationContext("applicationContext.xml");

StudentRepository proxy=context.getBean(StudentRepository.class);

List<Student> results=proxy.findByAgeBetween(16,24);

System.out.println(results);

}

@Test

public void queryAll(){

ApplicationContext context=new ClassPathXmlApplicationContext("applicationContext.xml");

StudentRepository proxy=context.getBean(StudentRepository.class);

//自定义查整表

List<Student> results=proxy.queryAll();

System.out.println(results);

}

//查询整表的记录数

@Test

public void queryCount(){

ApplicationContext context=new ClassPathXmlApplicationContext("applicationContext.xml");

StudentRepository proxy=context.getBean(StudentRepository.class);

//

int count=proxy.queryCount();

System.out.println(count);

}

//按姓名查

@Test

public void selectByName(){

ApplicationContext context=new ClassPathXmlApplicationContext("applicationContext.xml");

StudentRepository proxy=context.getBean(StudentRepository.class);

//

List<Student> results=proxy.selectByName("tom");

System.out.println(results);

}

//根据姓名和年龄查数据

@Test

public void selectByNameAndAge(){

ApplicationContext context=new ClassPathXmlApplicationContext("applicationContext.xml");

StudentRepository proxy=context.getBean(StudentRepository.class);

//

List<Student> results=proxy.selectByNameAndAge("tom",15);

System.out.println(results);

}

//根据年龄实现升序排序,并返回结果集

@Test

public void selectByAgeAndAsc(){

ApplicationContext context=new ClassPathXmlApplicationContext("applicationContext.xml");

StudentRepository proxy=context.getBean(StudentRepository.class);

//

List<Student> results=proxy.selectByAgeAndAsc();

System.out.println(results);

}

//删除表

}

Hibernate 操作hive

启动Hive的jdbc服务:

./hive --service hiveserver2 &

hive客户端创建一个数据库

hive> create database park;

建表

hive> create table stu(id int,name string) row format delimited fields terminated by ',';

新建一个文档

[root@hadoop01 home]# vim stu.txt

1,tom

2,rose

3,jim

4,zh

hive> use park;

加载数据到hive表

hive> load data local inpath '/home/stu.txt' into table stu;

Loading data to table default.stu

Table default.stu stats: [numFiles=1, totalSize=24]

OK

Time taken: 2.728 seconds

hive> select * from stu;

OK

1 tom

2 rose

3 jim

4 zh

Time taken: 1.065 seconds, Fetched: 4 row(s)

新建一个maven工程

spring配置

<?xml version="1.0" encoding="UTF-8"?>

<beans:beans xmlns="http://www.springframework.org/schema/hadoop"

xmlns:beans="http://www.springframework.org/schema/beans"

xmlns:context="http://www.springframework.org/schema/context"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="

http://www.springframework.org/schema/beans

http://www.springframework.org/schema/beans/spring-beans.xsd

http://www.springframework.org/schema/context

http://www.springframework.org/schema/context/spring-context.xsd

http://www.springframework.org/schema/hadoop

http://www.springframework.org/schema/hadoop/spring-hadoop.xsd"

>

<!-- 配置HDFS地址 -->

<configuration>

fs.defaultFS=hdfs://hadoop01:9000

</configuration>

<!-- Hive驱动 -->

<beans:bean id="hiveDriver" class="org.apache.hive.jdbc.HiveDriver" />

<!-- Hive数据源 -->

<beans:bean id="hiveDataSource"

class="org.springframework.jdbc.datasource.SimpleDriverDataSource">

<beans:constructor-arg name="driver" ref="hiveDriver" />

<beans:constructor-arg name="url"

value="jdbc:hive2://hadoop01:10000" />

<beans:constructor-arg name="username" value="root" />

<beans:constructor-arg name="password" value="root" />

</beans:bean>

<!-- Hive客户端工厂 -->

<hive-client-factory id="hiveClientFactory" hive-data-source-ref="hiveDataSource" />

<!-- Hive模板类,用于操作hive -->

<hive-template id="hiveTemplate" />

</beans:beans>

pom文件

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>cn.com</groupId>

<artifactId>SpringDataHive</artifactId>

<version>0.0.1-SNAPSHOT</version>

<packaging>jar</packaging>

<name>SpringDataHive</name>

<url>http://maven.apache.org</url>

<properties>

<spring.version>4.1.6.RELEASE</spring.version>

<slf4j.version>1.7.6</slf4j.version>

<log4j.version>1.2.17</log4j.version>

<hamcrest.version>1.3</hamcrest.version>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<spring.hadoop.version>2.5.0.RELEASE</spring.hadoop.version>

<hadoop.version>2.7.1</hadoop.version>

<hive.version>1.2.1</hive.version>

</properties>

<dependencies>

<dependency>

<groupId>jdk.tools</groupId>

<artifactId>jdk.tools</artifactId>

<version>1.8</version>

<scope>system</scope>

<systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>

</dependency>

<!-- junit -->

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.10</version>

<scope>test</scope>

</dependency>

<!-- Spring -->

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-core</artifactId>

<version>${spring.version}</version>

<exclusions>

<exclusion>

<groupId>commons-logging</groupId>

<artifactId>commons-logging</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-beans</artifactId>

<version>${spring.version}</version>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-context</artifactId>

<version>${spring.version}</version>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-aop</artifactId>

<version>${spring.version}</version>

</dependency>

<!-- Logging -->

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-api</artifactId>

<version>${slf4j.version}</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>jcl-over-slf4j</artifactId>

<version>${slf4j.version}</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>${slf4j.version}</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>${log4j.version}</version>

<scope>runtime</scope>

</dependency>

<!-- Spring Data Hadoop -->

<dependency>

<groupId>org.springframework.data</groupId>

<artifactId>spring-data-hadoop</artifactId>

<version>${spring.hadoop.version}</version>

<exclusions>

<exclusion>

<groupId>org.springframework</groupId>

<artifactId>spring-context-support</artifactId>

</exclusion>

</exclusions>

</dependency>

<!-- Spring Data Jdbc -->

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-jdbc</artifactId>

<version>${spring.version}</version>

</dependency>

<!-- Spring Test -->

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-test</artifactId>

<version>${spring.version}</version>

</dependency>

<!-- Spring Tx -->

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-tx</artifactId>

<version>${spring.version}</version>

</dependency>

<!-- Hadoop -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>${hadoop.version}</version>

<scope>compile</scope>

</dependency>

<!-- Hive -->

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-metastore</artifactId>

<version>${hive.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-service</artifactId>

<version>${hive.version}</version>

</dependency>

<dependency>

<groupId>org.apache.thrift</groupId>

<artifactId>libfb303</artifactId>

<version>0.9.1</version>

</dependency>

<!-- runtime Hive deps start -->

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-common</artifactId>

<version>${hive.version}</version>

<scope>runtime</scope>

</dependency>

<!-- <dependency> <groupId>org.apache.hive</groupId> <artifactId>hive-builtins</artifactId>

<version>${hive.version}</version> <scope>runtime</scope> </dependency> -->

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>${hive.version}</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-shims</artifactId>

<version>${hive.version}</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-serde</artifactId>

<version>${hive.version}</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-contrib</artifactId>

<version>${hive.version}</version>

<scope>runtime</scope>

</dependency>

<!-- runtime Hive deps end -->

<dependency>

<groupId>org.codehaus.groovy</groupId>

<artifactId>groovy</artifactId>

<version>1.8.5</version>

<scope>runtime</scope>

</dependency>

</dependencies>

</project>

bean类

package cn.com.domain;

public class Student {

private int id;

private String name;

public int getId() {

return id;

}

public void setId(int id) {

this.id = id;

}

public String getName() {

return name;

}

public void setName(String name) {

this.name = name;

}

@Override

public String toString() {

return "Student [id=" + id + ", name=" + name + "]";

}

}

启动测试类

package cn.com.service;

import java.sql.Connection;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import java.util.ArrayList;

import java.util.List;

import org.junit.Test;

import org.springframework.context.ApplicationContext;

import org.springframework.context.support.ClassPathXmlApplicationContext;

import org.springframework.data.hadoop.hive.HiveClient;

import org.springframework.data.hadoop.hive.HiveClientCallback;

import org.springframework.data.hadoop.hive.HiveTemplate;

import cn.com.domain.Student;

public class TestDemo {

@Test

public void select_hive(){

ApplicationContext context=new ClassPathXmlApplicationContext("applicationContext.xml");

//注入Hive的模板类,通过此对象实现对Hive的CRUD

HiveTemplate proxy=(HiveTemplate) context.getBean("hiveTemplate");

//注意,查询前先切换指定的数据库

proxy.query("use park");

// List<String> results = proxy.query("select name from stu");

//hive 只能单列查询,返回第一列结果

List<String> results = proxy.query("select * from stu");

System.out.println(results);

}

@Test

public void select_execute(){

ApplicationContext context=new ClassPathXmlApplicationContext("applicationContext.xml");

//注入Hive的模板类,通过此对象实现对Hive的CRUD

HiveTemplate proxy=(HiveTemplate) context.getBean("hiveTemplate");

//注意,查询前先切换指定的数据库

proxy.query("use park");

List<Student> results=proxy.execute(new HiveClientCallback<List<Student>>() {

/**

* 执行查询,并将结果返回

*/

@Override

public List<Student> doInHive(HiveClient hiveClient) throws Exception {

//创建和hive的连接状态

Connection conn=hiveClient.getConnection();

PreparedStatement ps=conn.prepareStatement("select * from stu");

ResultSet rs = ps.executeQuery();

List<Student> students=new ArrayList<Student>();

while(rs.next()){

int id=rs.getInt("id");

String name=rs.getString("name");

Student student=new Student();

student.setId(id);

student.setName(name);

//将每条对象封装到对象里。并加到结果集

students.add(student);

}

return students;

}

});

System.out.println(results);

}

}

创建一个maven工程(实现从hive查询数据,封装到数据库)

创建一个数据库

mysql> create database ttsdb;

spring配置

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns:hadoop="http://www.springframework.org/schema/hadoop"

xmlns:context="http://www.springframework.org/schema/context"

xmlns:jdbc="http://www.springframework.org/schema/jdbc"

xmlns:tx="http://www.springframework.org/schema/tx"

xmlns:jpa="http://www.springframework.org/schema/data/jpa"

xmlns:aop="http://www.springframework.org/schema/aop"

xsi:schemaLocation="

http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd

http://www.springframework.org/schema/hadoop http://www.springframework.org/schema/hadoop/spring-hadoop.xsd

http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context.xsd

http://www.springframework.org/schema/jdbc http://www.springframework.org/schema/jdbc/spring-jdbc.xsd

http://www.springframework.org/schema/tx http://www.springframework.org/schema/tx/spring-tx.xsd

http://www.springframework.org/schema/data/jpa http://www.springframework.org/schema/data/jpa/spring-jpa-1.3.xsd

http://www.springframework.org/schema/aop http://www.springframework.org/schema/aop/spring-aop.xsd">

<!--准备一个数据源对象 -->

<bean id="dataSource" class="org.springframework.jdbc.datasource.DriverManagerDataSource">

<!-- 注册驱动类 -->

<property name="driverClassName" value="com.mysql.jdbc.Driver"></property>

<!-- 登录数据库的用户名和密码 -->

<property name="username" value="root"></property>

<property name="password" value="root"></property>

<!-- url -->

<property name="url" value="jdbc:mysql://hadoop01:3306/ttsdb"></property>

</bean>

<!--二:配置spring data jpa的核心 LocalContainerEntityManagerFactoryBean-->

<bean id="entityManagerFactory" class="org.springframework.orm.jpa.LocalContainerEntityManagerFactoryBean">

<!--1:注入数据源-->

<property name="dataSource" ref="dataSource"></property>

<property name="jpaVendorAdapter">

<bean class="org.springframework.orm.jpa.vendor.HibernateJpaVendorAdapter"/>

</property>

<!--2:指定包扫描,扫描com.atguigu.domain,扫描所有实体类,映射数据库表-->

<property name="packagesToScan" value="cn.com.domain"></property>

<property name="jpaProperties">

<props>

<!-- 二级缓存相关 -->

<!--

<prop key="hibernate.cache.region.factory_class">org.hibernate.cache.ehcache.EhCacheRegionFactory</prop>

<prop key="net.sf.ehcache.configurationResourceName">ehcache-hibernate.xml</prop>

-->

<!-- 生成的数据表的列的映射策略 -->

<prop key="hibernate.ejb.naming_strategy">org.hibernate.cfg.ImprovedNamingStrategy</prop>

<!-- hibernate 基本属性 -->

<prop key="hibernate.dialect">org.hibernate.dialect.MySQL5InnoDBDialect</prop>

<prop key="hibernate.show_sql">true</prop>

<prop key="hibernate.format_sql">true</prop>

<prop key="hibernate.hbm2ddl.auto">update</prop>

</props>

</property>

</bean>

<!-- 3. 配置事务管理器 -->

<bean class="org.springframework.orm.jpa.JpaTransactionManager" id="transactionManager">

<property name="entityManagerFactory" ref="entityManagerFactory"/>

</bean>

<!-- 4. 配置支持注解的事务 -->

<tx:annotation-driven transaction-manager="transactionManager"/>

<!-- 5. 配置 SpringData -->

<!-- 加入 jpa 的命名空间 -->

<!-- base-package: 扫描 Repository Bean 所在的 package -->

<jpa:repositories base-package="cn.com"

entity-manager-factory-ref="entityManagerFactory"></jpa:repositories>

<!--组件的扫描,但是如果使用spring整合junit测试,该行代码可以不写-->

<context:component-scan base-package="cn.com"/>

<context:component-scan base-package="cn.com.service"/>

<!-- Hive模板类,用于操作hive -->

<!-- 配置HDFS地址 -->

<hadoop:configuration>

fs.defaultFS=hdfs://hadoop01:9000

</hadoop:configuration>

<!-- Hive驱动 -->

<bean id="hiveDriver" class="org.apache.hive.jdbc.HiveDriver" />

<!-- Hive数据源 -->

<bean id="hiveDataSource"

class="org.springframework.jdbc.datasource.SimpleDriverDataSource">

<constructor-arg name="driver" ref="hiveDriver" />

<constructor-arg name="url"

value="jdbc:hive2://hadoop01:10000" />

<constructor-arg name="username" value="root" />

<constructor-arg name="password" value="root" />

</bean>

<!-- Hive客户端工厂 -->

<hadoop:hive-client-factory id="hiveClientFactory" hive-data-source-ref="hiveDataSource" />

<!-- Hive模板类,用于操作hive -->

<hadoop:hive-template id="hiveTemplate" />

</beans>

pom文件

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>cn.com</groupId>

<artifactId>SpringDataTTS</artifactId>

<version>0.0.1-SNAPSHOT</version>

<packaging>jar</packaging>

<name>SpringDataTTS</name>

<url>http://maven.apache.org</url>

<properties>

<spring.version>4.1.6.RELEASE</spring.version>

<slf4j.version>1.7.6</slf4j.version>

<log4j.version>1.2.17</log4j.version>

<hamcrest.version>1.3</hamcrest.version>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<spring.hadoop.version>2.5.0.RELEASE</spring.hadoop.version>

<hadoop.version>2.7.1</hadoop.version>

<hive.version>1.2.1</hive.version>

</properties>

<dependencies>

<dependency>

<groupId>jdk.tools</groupId>

<artifactId>jdk.tools</artifactId>

<version>1.8</version>

<scope>system</scope>

<systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>

</dependency>

<!-- junit -->

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.10</version>

<scope>test</scope>

</dependency>

<!-- Spring -->

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-core</artifactId>

<version>${spring.version}</version>

<exclusions>

<exclusion>

<groupId>commons-logging</groupId>

<artifactId>commons-logging</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-beans</artifactId>

<version>${spring.version}</version>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-context</artifactId>

<version>${spring.version}</version>

</dependency>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-aop</artifactId>

<version>${spring.version}</version>

</dependency>

<!-- Logging -->

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-api</artifactId>

<version>${slf4j.version}</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>jcl-over-slf4j</artifactId>

<version>${slf4j.version}</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>${slf4j.version}</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>${log4j.version}</version>

<scope>runtime</scope>

</dependency>

<!-- Spring Data Hadoop -->

<dependency>

<groupId>org.springframework.data</groupId>

<artifactId>spring-data-hadoop</artifactId>

<version>${spring.hadoop.version}</version>

<exclusions>

<exclusion>

<groupId>org.springframework</groupId>

<artifactId>spring-context-support</artifactId>

</exclusion>

</exclusions>

</dependency>

<!-- Spring Data Jdbc -->

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-jdbc</artifactId>

<version>${spring.version}</version>

</dependency>

<!-- Spring Test -->

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-test</artifactId>

<version>${spring.version}</version>

</dependency>

<!-- Spring Tx -->

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-tx</artifactId>

<version>${spring.version}</version>

</dependency>

<!-- Hadoop -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>${hadoop.version}</version>

<scope>compile</scope>

</dependency>

<!-- Hive -->

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-metastore</artifactId>

<version>${hive.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-service</artifactId>

<version>${hive.version}</version>

</dependency>

<dependency>

<groupId>org.apache.thrift</groupId>

<artifactId>libfb303</artifactId>

<version>0.9.1</version>

</dependency>

<!-- runtime Hive deps start -->

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-common</artifactId>

<version>${hive.version}</version>

<scope>runtime</scope>

</dependency>

<!-- <dependency> <groupId>org.apache.hive</groupId> <artifactId>hive-builtins</artifactId>

<version>${hive.version}</version> <scope>runtime</scope> </dependency> -->

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>${hive.version}</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-shims</artifactId>

<version>${hive.version}</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-serde</artifactId>

<version>${hive.version}</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-contrib</artifactId>

<version>${hive.version}</version>

<scope>runtime</scope>

</dependency>

<!-- runtime Hive deps end -->

<dependency>

<groupId>org.codehaus.groovy</groupId>

<artifactId>groovy</artifactId>

<version>1.8.5</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.springframework.data</groupId>

<artifactId>spring-data-jpa</artifactId>

<version>1.8.0.RELEASE</version>

</dependency>

<dependency>

<groupId>org.hibernate</groupId>

<artifactId>hibernate-entitymanager</artifactId>

<version>4.3.6.Final</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.38</version>

</dependency>

</dependencies>

</project>

代码

package cn.com.domain;

import javax.persistence.Entity;

import javax.persistence.GeneratedValue;

import javax.persistence.Id;

import javax.persistence.Table;

@Entity

@Table(name="vip_open_num")

public class VipBean {

private Integer id;

private String reporttime;

private String courseId;

private String accountState;

private Integer num;

private String openType;

public VipBean() {

super();

}

public VipBean(Integer id, String reporttime, String courseId, String accountState, Integer num, String openType) {

super();

this.id = id;

this.reporttime = reporttime;

this.courseId = courseId;

this.accountState = accountState;

this.num = num;

this.openType = openType;

}

@GeneratedValue

@Id

public Integer getId() {

return id;

}

public void setId(Integer id) {

this.id = id;

}

public String getReporttime() {

return reporttime;

}

public void setReporttime(String reporttime) {

this.reporttime = reporttime;

}

public String getCourseId() {

return courseId;

}

public void setCourseId(String courseId) {

this.courseId = courseId;

}

public String getAccountState() {

return accountState;

}

public void setAccountState(String accountState) {

this.accountState = accountState;

}

public Integer getNum() {

return num;

}

public void setNum(Integer num) {

this.num = num;

}

public String getOpenType() {

return openType;

}

public void setOpenType(String openType) {

this.openType = openType;

}

@Override

public String toString() {

return "VipBean [reporttime=" + reporttime + ", courseId=" + courseId + ", accountState=" + accountState

+ ", num=" + num + ", openType=" + openType + "]";

}

}

package cn.com.service;

import org.springframework.data.repository.Repository;

import cn.com.domain.VipBean;

public interface VipRepository extends Repository<VipBean, Integer>{

void save(VipBean bean);

}

package cn.com.service;

import java.sql.Connection;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import java.util.ArrayList;

import java.util.List;

import org.springframework.context.ApplicationContext;

import org.springframework.context.support.ClassPathXmlApplicationContext;

import org.springframework.data.hadoop.hive.HiveClient;

import org.springframework.data.hadoop.hive.HiveClientCallback;

import org.springframework.data.hadoop.hive.HiveTemplate;

import cn.com.domain.VipBean;

public class HiveService {

public static void main(String[] args) {

ApplicationContext context=new ClassPathXmlApplicationContext("applicationContext.xml");

HiveTemplate proxy=(HiveTemplate) context.getBean("hiveTemplate");

proxy.query("use ttsdb");

VipRepository vipProxy=context.getBean(VipRepository.class);

List<VipBean> results=proxy.execute(new HiveClientCallback<List<VipBean>>() {

@Override

public List<VipBean> doInHive(HiveClient hiveClient) throws Exception {

Connection conn=hiveClient.getConnection();

PreparedStatement ps = conn.prepareStatement("select * from vip_open_num");

ResultSet rs = ps.executeQuery();

List<VipBean> vips=new ArrayList<VipBean>();

while(rs.next()){

String reporttime=rs.getString("reporttime");

String course_id=rs.getString("course_id");

String account_state=rs.getString("account_state");

int num=rs.getInt("num");

String open_type=rs.getString("open_type");

VipBean vipBean=new VipBean();

vipBean.setReporttime(reporttime);

vipBean.setCourseId(course_id);

vipBean.setAccountState(account_state);

vipBean.setNum(num);

vipBean.setOpenType(open_type);

vips.add(vipBean);

}

return vips;

}

});

for (VipBean bean : results) {

bean.setId(null);

vipProxy.save(bean);

}

}

}

数据库数据

mysql> select * from vip_open_num;

+----+---------------+-----------+------+-------------+------------+

| id | account_state | course_id | num | open_type | reporttime |

+----+---------------+-----------+------+-------------+------------+

| 1 | audition | big | 1 | new_add | 2022-10-09 |

| 2 | normal | bilg | 1 | new_add | 2022-10-09 |

| 3 | audition | jsd | 1 | new_add | 2022-10-09 |

| 4 | normal | jsd | 1 | new_add | 2022-10-09 |

| 5 | audition | big | 2 | offline2vip | 2022-10-09 |

| 6 | audition | big | 1 | vip2vip | 2022-10-09 |

| 7 | normal | jsd | 2 | vip2vip | 2022-10-09 |

+----+---------------+-----------+------+-------------+------------+

7 rows in set (0.00 sec)

1270

1270

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?