
I. Kubernetes

1. Introduction to Kubernetes

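- While Docker was growing rapidly as a high-level container engine, Google had already been using container technology internally for many years; its Borg system ran and managed tens of thousands of containerized applications.

- The Kubernetes project grew out of Borg. It distills the best of Borg's design ideas and incorporates the lessons learned from operating it.

- Kubernetes abstracts compute resources at a higher level: it composes containers in fine-grained ways and delivers the resulting application service to users.

- Benefits of Kubernetes:

It hides resource management and failure handling, so users only need to focus on application development.

Services are highly available and highly reliable.

Workloads can run on clusters made up of thousands of machines.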

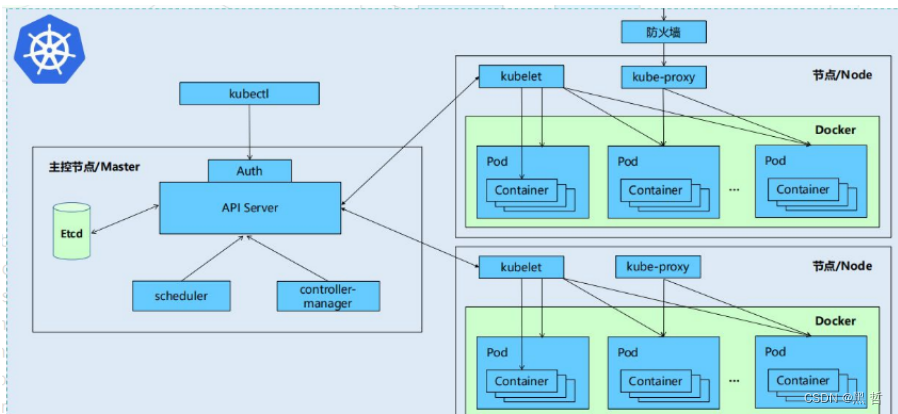

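2. Kubernetes Architecture

1). A Kubernetes cluster consists of the node agent kubelet and the Master components (APIs, scheduler, etc.), all built on top of a distributed storage system.

2). Kubernetes is mainly made up of the following core components:

etcd: stores the state of the entire cluster

apiserver: the single entry point for operations on resources; provides authentication, authorization, access control, and API registration and discovery

controller manager: maintains cluster state, for example failure detection, auto scaling, and rolling updates

scheduler: schedules resources, placing Pods on suitable machines according to the configured scheduling policy

kubelet: maintains the container lifecycle and also manages volumes (CSI) and networking (CNI)

Container runtime: manages images and actually runs Pods and containers (CRI)

kube-proxy: provides in-cluster service discovery and load balancing for Services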

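3). Besides the core components, there are some recommended add-ons:

kube-dns: provides DNS for the whole cluster (the kubeadm deployment below uses CoreDNS for this role)

Ingress Controller: provides an external entry point for services

Heapster: provides resource monitoring (since retired; metrics-server is its successor)

Dashboard: provides a GUI

Federation: provides clusters that span availability zones

Fluentd-elasticsearch: provides cluster log collection, storage, and querying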

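4). In its design philosophy and functionality, Kubernetes follows a layered architecture similar to Linux.

Core layer: the most essential Kubernetes functionality; it exposes APIs outward for building higher-level applications and provides a pluggable application execution environment inward

Application layer: deployment (stateless applications, stateful applications, batch jobs, clustered applications, etc.) and routing (service discovery, DNS resolution, etc.)

Management layer: system metrics (infrastructure, container, and network metrics), automation (auto scaling, dynamic provisioning, etc.), and policy management (RBAC, Quota, PSP, NetworkPolicy, etc.)

Interface layer: the kubectl command-line tool, client SDKs, and cluster federation

Ecosystem: the large ecosystem for container cluster management and scheduling that sits above the interface layer; it falls into two categories

Outside Kubernetes: logging, monitoring, configuration management, CI, CD, Workflow, FaaS, OTS applications, ChatOps, etc.

Inside Kubernetes: CRI, CNI, CSI, image registries, Cloud Provider, configuration and management of the cluster itself, etc.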

3. Deploying Kubernetes

node11 serves as the image registry; node22 (master), node33, and node44 are the cluster hosts.

[root@node22 ~]# docker images    # list local images

REPOSITORY TAG IMAGE ID CREATED SIZE

busybox latest 7a80323521cc 3 weeks ago 1.24MB

nginx latest 605c77e624dd 7 months ago 141MB

reg.westos.org/westos/game2048 latest 19299002fdbe 5 years ago 55.5MB

[root@node22 ~]# docker rmi reg.westos.org/westos/game2048    # remove an image that is no longer needed

Untagged: reg.westos.org/westos/game2048:latest

Untagged: reg.westos.org/westos/game2048@sha256:8a34fb9cb168c420604b6e5d32ca6d412cb0d533a826b313b190535c03fe9390

Deleted: sha256:19299002fdbedc133c625488318ba5106b8a76ca6e34a6a8fec681fb54f5e4c7

Deleted: sha256:a8ba4f00c5b89c2994a952951dc7b043f18e5ef337afdb0d4b8b69d793e9ffa7

Deleted: sha256:e2ea5e1f4b9cfe6afb588167bb38d833a5aa7e4a474053083a5afdca5fff39f0

Deleted: sha256:1b2dc5f636598b4d6f54dbf107a3e34fcba95bf08a7ab5a406d0fc8865ce2ab2

Deleted: sha256:af457147a7ab56e4d77082f56d1a0d6671c1a44ded1f85fea99817231503d7b4

Deleted: sha256:011b303988d241a4ae28a6b82b0d8262751ef02910f0ae2265cb637504b72e36

Install Docker on node33 and node44:

[root@node22 yum.repos.d]# scp docker-ce.repo node33:/etc/yum.repos.d/    # copy the repo files

[root@node22 yum.repos.d]# scp docker-ce.repo node44:/etc/yum.repos.d/

[root@node22 yum.repos.d]# scp CentOS-Base.repo node33:/etc/yum.repos.d/

[root@node22 yum.repos.d]# scp CentOS-Base.repo node44:/etc/yum.repos.d/

[root@node22 sysctl.d]# scp docker.conf node33:/etc/sysctl.d

[root@node22 sysctl.d]# scp docker.conf node44:/etc/sysctl.d

[root@node33 ~]# yum install -y docker-ce    # install Docker

[root@node44 ~]# yum install -y docker-ce

[root@node33 ~]# systemctl enable --now docker    # start Docker now and enable it at boot

[root@node44 ~]# systemctl enable --now docker

[root@node22 yum.repos.d]# cd /etc/sysctl.d/

[root@node22 sysctl.d]# scp docker.conf node33:/etc/sysctl.d/

[root@node22 sysctl.d]# scp docker.conf node44:/etc/sysctl.d/

[root@node33 ~]# sysctl --system    # apply the sysctl settings

[root@node44 ~]# sysctl --system
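The contents of docker.conf are not shown in this walkthrough. As a rough sketch only, assuming it carries the bridge netfilter and forwarding settings that the kubeadm installation guide asks for, such a file could be generated as follows. The function name `write_k8s_sysctl` and the three keys are assumptions, not taken from the original file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: writes bridge/netfilter sysctl settings to the given file.
# Assumption: these keys are what docker.conf contains; the original does not show it.
write_k8s_sysctl() {
    local target="${1:-/etc/sysctl.d/docker.conf}"
    cat > "$target" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```

Run as root, `write_k8s_sysctl && sysctl --system` would write and apply the settings. The `net.bridge.*` keys only exist once the `br_netfilter` kernel module is loaded (`modprobe br_netfilter`).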

[root@node22 sysctl.d]# cd /etc/docker/

[root@node22 docker]# vim daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

[root@node22 docker]# systemctl daemon-reload

[root@node22 docker]# systemctl restart docker

[root@node22 docker]# docker info

Logging Driver: json-file

Cgroup Driver: systemd

[root@node22 docker]# scp daemon.json node33:/etc/docker

root@node33's password:

daemon.json 100% 156 112.0KB/s 00:00

[root@node22 docker]# scp daemon.json node44:/etc/docker

root@node44's password:

daemon.json

[root@node33 ~]# systemctl restart docker

[root@node44 ~]# systemctl restart docker

[root@node33 ~]# docker info

Logging Driver: json-file

Cgroup Driver: systemd

Cgroup Version: 1

[root@node44 ~]# docker info

Logging Driver: json-file

Cgroup Driver: systemd

Cgroup Version: 1

[root@node22 docker]# docker volume prune    # remove unused volumes

[root@node22 docker]# docker network prune    # remove unused networks

WARNING! This will remove all custom networks not used by at least one container.

Are you sure you want to continue? [y/N] y

Deleted Networks:

mynet2

mynet1

Disable swap on all hosts:

[root@node22 docker]# swapoff -a

[root@node22 docker]# vim /etc/fstab

/dev/mapper/rhel-root / xfs defaults 0 0

UUID=d319ed7a-9c18-4cda-b34a-9e2399f1f1fc /boot xfs defaults 0 0

#/dev/mapper/rhel-swap swap swap defaults 0 0
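The fstab edit is done by hand in vim on each host. A minimal sketch of scripting the same change is below, assuming GNU or BSD `sed` and an fstab whose swap entries have `swap` as a whitespace-delimited field. The function name `disable_swap_entries` is illustrative.

```shell
#!/usr/bin/env bash
# Comment out every active swap entry in an fstab-style file.
# Usage: disable_swap_entries /etc/fstab
disable_swap_entries() {
    local fstab="$1"
    # Prefix '#' to non-comment lines that contain a whitespace-delimited "swap" field.
    # A backup of the original file is kept as <file>.bak.
    sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}
```

On a real host this would be run as root together with `swapoff -a`, which turns swap off immediately while the fstab change keeps it off after a reboot.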

[root@node33 ~]# swapoff -a

[root@node33 ~]# vim /etc/fstab

/dev/mapper/rhel-root / xfs defaults 0 0

UUID=d319ed7a-9c18-4cda-b34a-9e2399f1f1fc /boot xfs defaults 0 0

#/dev/mapper/rhel-swap swap swap defaults 0 0

[root@node44 ~]# swapoff -a

[root@node44 ~]# vim /etc/fstab

/dev/mapper/rhel-root / xfs defaults 0 0

UUID=d319ed7a-9c18-4cda-b34a-9e2399f1f1fc /boot xfs defaults 0 0

#/dev/mapper/rhel-swap swap swap defaults 0 0

Install kubeadm, kubelet, and kubectl:

[root@node22 ~]# cd /etc/yum.repos.d/

[root@node22 yum.repos.d]# vim k8s.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

[root@node22 yum.repos.d]# yum install -y kubeadm-1.23.10-0 kubectl-1.23.10-0 kubelet-1.23.10-0

[root@node22 yum.repos.d]# systemctl enable --now kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.
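The same repo file and pinned install are repeated by hand on every node. A minimal sketch that scripts both steps is below. The function names `write_k8s_repo` and `k8s_packages` are illustrative; the repo contents and version are the ones used in this walkthrough.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; the names are illustrative.

# Write the Kubernetes yum repo definition used in this walkthrough to the given path.
write_k8s_repo() {
    local target="${1:-/etc/yum.repos.d/k8s.repo}"
    cat > "$target" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
EOF
}

# Print the three package names pinned to one version, e.g. 1.23.10-0.
k8s_packages() {
    local version="$1"
    printf 'kubeadm-%s kubelet-%s kubectl-%s\n' "$version" "$version" "$version"
}
```

On each node, `write_k8s_repo && yum install -y $(k8s_packages 1.23.10-0)` followed by `systemctl enable --now kubelet` would reproduce the manual steps. Pinning all three packages to one version keeps them in step with each other.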

[root@node33 ~]# cd /etc/yum.repos.d/

[root@node33 yum.repos.d]# vim k8s.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

[root@node33 yum.repos.d]# yum install -y kubeadm-1.23.10-0 kubectl-1.23.10-0 kubelet-1.23.10-0

[root@node33 yum.repos.d]# systemctl enable --now kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@node44 ~]# cd /etc/yum.repos.d/

[root@node44 yum.repos.d]# vim k8s.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

[root@node44 yum.repos.d]# yum install -y kubeadm-1.23.10-0 kubectl-1.23.10-0 kubelet-1.23.10-0

[root@node44 yum.repos.d]# systemctl enable --now kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

Create the cluster with kubeadm (the images only need to be pulled on the master):

[root@node22 yum.repos.d]# kubeadm config images list --image-repository registry.aliyuncs.com/google_containers    # list the required images, using the Alibaba Cloud mirror

I0824 20:46:03.347973 12710 version.go:255] remote version is much newer: v1.25.0; falling back to: stable-1.23

registry.aliyuncs.com/google_containers/kube-apiserver:v1.23.10

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.23.10

registry.aliyuncs.com/google_containers/kube-scheduler:v1.23.10

registry.aliyuncs.com/google_containers/kube-proxy:v1.23.10

registry.aliyuncs.com/google_containers/pause:3.6

registry.aliyuncs.com/google_containers/etcd:3.5.1-0

registry.aliyuncs.com/google_containers/coredns:v1.8.6

[root@node22 yum.repos.d]# kubeadm config images pull --image-repository registry.aliyuncs.com/google_containers    # pull the images

Push the images to the private registry (node11):

[root@node11 ~]# cd harbor/

[root@node11 harbor]# docker-compose up -d

[+] Running 10/0

⠿ Container harbor-log Running 0.0s

⠿ Container registryctl Running 0.0s

⠿ Container harbor-db Running 0.0s

⠿ Container registry Running 0.0s

⠿ Container harbor-portal Running 0.0s

⠿ Container redis Running 0.0s

⠿ Container harbor-core Running 0.0s

⠿ Container nginx Running 0.0s

⠿ Container chartmuseum Running 0.0s

⠿ Container harbor-jobservice Running 0.0s

[root@node11 harbor]# docker-compose ps

NAME COMMAND SERVICE STATUS PORTS

chartmuseum "./docker-entrypoint…" chartmuseum running (healthy)

harbor-core "/harbor/entrypoint.…" core running (healthy)

harbor-db "/docker-entrypoint.…" postgresql running (healthy)

harbor-jobservice "/harbor/entrypoint.…" jobservice running (healthy)

harbor-log "/bin/sh -c /usr/loc…" log running (healthy) 127.0.0.1:1514->10514/tcp

harbor-portal "nginx -g 'daemon of…" portal running (healthy)

nginx "nginx -g 'daemon of…" proxy running (healthy) 0.0.0.0:80->8080/tcp, 0.0.0.0:443->8443/tcp, :::80->8080/tcp, :::443->8443/tcp

redis "redis-server /etc/r…" redis running (healthy)

registry "/home/harbor/entryp…" registry running (healthy)

registryctl "/home/harbor/start.…" registryctl running (healthy)

[root@node11 docker]# cd certs.d/

[root@node11 certs.d]# cd reg.westos.org/

[root@node11 reg.westos.org]# scp ca.crt node22:/etc/docker/certs.d/reg.westos.org/ca.crt    # copy the registry CA certificate so node22 trusts the registry

root@node22's password:

ca.crt 100% 2163 21.4KB/s 00:00

[root@node22 yum.repos.d]# cd /etc/docker/

[root@node22 docker]# vim daemon.json

{

"registry-mirrors": ["https://reg.westos.org"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

[root@node22 docker]# systemctl restart docker

[root@node22 docker]# docker info

[root@node22 docker]# scp -r certs.d/ daemon.json node33:/etc/docker

root@node33's password:

redhat-entitlement-authority.crt 100% 2626 20.1KB/s 00:00

ca.crt 100% 2163 2.7MB/s 00:00

daemon.json 100% 206 280.8KB/s 00:00

[root@node22 docker]# scp -r certs.d/ daemon.json node44:/etc/docker

root@node44's password:

redhat-entitlement-authority.crt 100% 2626 2.7MB/s 00:00

ca.crt 100% 2163 3.0MB/s 00:00

daemon.json 100% 206 379.3KB/s 00:00

[root@node33 yum.repos.d]# systemctl restart docker

[root@node44 yum.repos.d]# systemctl restart docker

[root@node33 yum.repos.d]# docker info

[root@node44 yum.repos.d]# docker info

[root@node22 docker]# docker images | grep google | awk '{print $1":"$2}' | awk -F/ '{system("docker tag "$0" reg.westos.org/k8s/"$3" ")}'

[root@node22 docker]# docker images |grep k8s

reg.westos.org/k8s/kube-apiserver v1.23.10 9ca5fafbe8dc 6 days ago 135MB

reg.westos.org/k8s/kube-controller-manager v1.23.10 91a4a0d5de4e 6 days ago 125MB

reg.westos.org/k8s/kube-scheduler v1.23.10 d5c0efb802d9 6 days ago 53.5MB

reg.westos.org/k8s/kube-proxy v1.23.10 71b9bf9750e1 6 days ago 112MB

reg.westos.org/k8s/etcd 3.5.1-0 25f8c7f3da61 9 months ago 293MB

reg.westos.org/k8s/coredns v1.8.6 a4ca41631cc7 10 months ago 46.8MB

reg.westos.org/k8s/pause 3.6 6270bb605e12 12 months ago 683kB

[root@node22 docker]# docker images |grep k8s | awk '{system("docker push "$1":"$2" ")}'
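The awk one-liners above build `docker tag` and `docker push` commands as strings. A rough equivalent written as plain shell functions is sketched below, which may be easier to read and dry-run. The function names `private_ref` and `mirror_images` are illustrative; the registry project `reg.westos.org/k8s` is the one used in this walkthrough.

```shell
#!/usr/bin/env bash
# Map an image reference to the private registry project, keeping only "name:tag".
# Usage: private_ref registry.aliyuncs.com/google_containers/pause:3.6
private_ref() {
    local src="$1"
    local registry="${2:-reg.westos.org/k8s}"
    # ${src##*/} strips everything up to the last '/', leaving "name:tag".
    printf '%s/%s\n' "$registry" "${src##*/}"
}

# Tag and push every local image whose repository contains "google_containers".
# Requires a running Docker daemon and a prior `docker login` to the registry.
mirror_images() {
    local ref
    docker images --format '{{.Repository}}:{{.Tag}}' | grep google_containers |
    while read -r ref; do
        docker tag "$ref" "$(private_ref "$ref")"
        docker push "$(private_ref "$ref")"
    done
}
```

Calling `private_ref` on its own shows the target name without touching Docker, which is a cheap way to check the mapping before running `mirror_images`.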

[root@node22 ~]# kubeadm config images list --image-repository reg.westos.org/k8s

I0824 21:18:19.347031 16164 version.go:255] remote version is much newer: v1.25.0; falling back to: stable-1.23

reg.westos.org/k8s/kube-apiserver:v1.23.10

reg.westos.org/k8s/kube-controller-manager:v1.23.10

reg.westos.org/k8s/kube-scheduler:v1.23.10

reg.westos.org/k8s/kube-proxy:v1.23.10

reg.westos.org/k8s/pause:3.6

reg.westos.org/k8s/etcd:3.5.1-0

reg.westos.org/k8s/coredns:v1.8.6

Initialize the cluster:

[root@node22 ~]# kubeadm config images list --image-repository reg.westos.org/k8s

[root@node22 docker]# kubeadm init --pod-network-cidr=10.244.0.0/16 --image-repository reg.westos.org/k8s

[root@node22 ~]# export KUBECONFIG=/etc/kubernetes/admin.conf

[root@node22 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

node22 NotReady control-plane,master 69s v1.23.10

[root@node22 ~]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-7b56f6bc55-2pwnh 0/1 Pending 0 78s

kube-system coredns-7b56f6bc55-g458w 0/1 Pending 0 78s

kube-system etcd-node22 1/1 Running 0 96s

kube-system kube-apiserver-node22 1/1 Running 0 92s

kube-system kube-controller-manager-node22 1/1 Running 0 92s

kube-system kube-proxy-5qtrm 1/1 Running 0 78s

kube-system kube-scheduler-node22 1/1 Running 0 96s
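Exporting KUBECONFIG works for root. For a regular user, the hint printed by `kubeadm init` suggests copying admin.conf to `$HOME/.kube/config` instead. A minimal sketch of that step is below; the function name `install_kubeconfig` and its parameters are illustrative.

```shell
#!/usr/bin/env bash
# Copy the admin kubeconfig into a user's ~/.kube/config.
# Usage: install_kubeconfig [source-file] [home-dir]
install_kubeconfig() {
    local src="${1:-/etc/kubernetes/admin.conf}"
    local home_dir="${2:-$HOME}"
    mkdir -p "$home_dir/.kube"
    cp "$src" "$home_dir/.kube/config"
    # The file holds cluster-admin credentials; keep it readable by its owner only.
    chmod 600 "$home_dir/.kube/config"
}
```

kubectl reads `~/.kube/config` by default, so no KUBECONFIG export is needed afterwards. When the copy is made by root on behalf of another user, the file also has to be chown'ed to that user.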

[root@node22 ~]# vim .bash_profile    # so the variable is loaded automatically at every login

export PATH

export KUBECONFIG=/etc/kubernetes/admin.conf

Configure kubectl:

[root@node22 ~]# yum install -y bash-completion.noarch

[root@node22 ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc    # enable kubectl tab completion

[root@node22 ~]# source .bashrc

[root@node22 ~]# kubectl

alpha autoscale create exec logs rollout version

annotate certificate debug explain options run wait

api-resources cluster-info delete expose patch scale

api-versions completion describe get plugin set

apply config diff help port-forward taint

attach cordon drain kustomize proxy top

auth cp edit label replace uncordon

Install the network add-on:

[root@node22 ~]# wget https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

[root@node22 ~]# vim kube-flannel.yml
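The edits made to kube-flannel.yml are not shown here. One thing worth checking in that file is that flannel's network matches the `--pod-network-cidr=10.244.0.0/16` passed to `kubeadm init`. The sketch below extracts the value, assuming the manifest contains a `"Network": "..."` line inside its net-conf.json block; the function name `flannel_network` is illustrative.

```shell
#!/usr/bin/env bash
# Print the pod network CIDR configured in a flannel manifest.
# Assumes the manifest carries a line such as:  "Network": "10.244.0.0/16",
flannel_network() {
    local manifest="$1"
    # Capture the quoted value after "Network": and print only the first match.
    sed -n -E 's/.*"Network":[[:space:]]*"([^"]+)".*/\1/p' "$manifest" | head -n 1
}
```

For example, `[ "$(flannel_network kube-flannel.yml)" = "10.244.0.0/16" ] && echo match` would confirm that the two settings agree before the manifest is applied.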

[root@node11 ~]# docker pull rancher/mirrored-flannelcni-flannel:v0.19.1

v0.19.1: Pulling from rancher/mirrored-flannelcni-flannel

627fad6f28f7: Pull complete

822d6622770d: Pull complete

114b0f60ea1e: Pull complete

b526ceac2273: Pull complete

c8f165a7aabd: Pull complete

5471aac3004e: Pull complete

b1ef1bc567b5: Pull complete

Digest: sha256:e3b0f06121b0f7a11a684e910bba89b3ab49cd6e73aeb6c719f83b2456946366

Status: Downloaded newer image for rancher/mirrored-flannelcni-flannel:v0.19.1

docker.io/rancher/mirrored-flannelcni-flannel:v0.19.1

[root@node11 ~]# docker tag rancher/mirrored-flannelcni-flannel:v0.19.1 reg.westos.org/rancher/mirrored-flannelcni-flannel:v0.19.1

[root@node11 ~]# docker login reg.westos.org

Username: admin

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@node11 ~]# docker push reg.westos.org/rancher/mirrored-flannelcni-flannel:v0.19.1

The push refers to repository [reg.westos.org/rancher/mirrored-flannelcni-flannel]

e013af5998e0: Pushed

a54964d7f23a: Pushed

6eb0f374accb: Pushed

7573a2550ff4: Pushed

925ee3cfcc84: Pushed

1365b7a83fb1: Pushed

16f2b0951240: Pushed

v0.19.1: digest: sha256:09dfa4ceff1006752e26337c836f52b660d0127dc2f5f66f380f8daf80cbd0d9 size: 1785

[root@node22 ~]# kubectl apply -f kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

[root@node22 ~]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-flannel kube-flannel-ds-gq22n 1/1 Running 0 46s

kube-system coredns-7b56f6bc55-2pwnh 1/1 Running 0 41m

kube-system coredns-7b56f6bc55-g458w 1/1 Running 0 41m

kube-system etcd-node22 1/1 Running 0 42m

kube-system kube-apiserver-node22 1/1 Running 0 42m

kube-system kube-controller-manager-node22 1/1 Running 0 42m

kube-system kube-proxy-5qtrm 1/1 Running 0 41m

kube-system kube-scheduler-node22 1/1 Running 0 42m

[root@node22 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

node22 Ready control-plane,master 42m v1.23.10

The other hosts only need to run the following:

[root@node33 yum.repos.d]# kubeadm join 192.168.0.22:6443 --token 400yjx.91zhv9mxdje0be7w \

> --discovery-token-ca-cert-hash sha256:2f9bd3ce9d972679af0a32322253594ed2deff22e91440c1b9664c7d5da6424c

[root@node33 yum.repos.d]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

reg.westos.org/k8s/kube-proxy v1.23.10 71b9bf9750e1 6 days ago 112MB

rancher/mirrored-flannelcni-flannel v0.19.1 252b2c3ee6c8 2 weeks ago 62.3MB

busybox latest 7a80323521cc 3 weeks ago 1.24MB

rancher/mirrored-flannelcni-flannel-cni-plugin v1.1.0 fcecffc7ad4a 3 months ago 8.09MB

reg.westos.org/k8s/pause 3.6 6270bb605e12 12 months ago 683kB

[root@node44 yum.repos.d]# kubeadm join 192.168.0.22:6443 --token 400yjx.91zhv9mxdje0be7w \

> --discovery-token-ca-cert-hash sha256:2f9bd3ce9d972679af0a32322253594ed2deff22e91440c1b9664c7d5da6424c

[root@node44 yum.repos.d]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

reg.westos.org/k8s/kube-proxy v1.23.10 71b9bf9750e1 6 days ago 112MB

rancher/mirrored-flannelcni-flannel v0.19.1 252b2c3ee6c8 2 weeks ago 62.3MB

busybox latest 7a80323521cc 3 weeks ago 1.24MB

rancher/mirrored-flannelcni-flannel-cni-plugin v1.1.0 fcecffc7ad4a 3 months ago 8.09MB

reg.westos.org/k8s/pause 3.6 6270bb605e12 12 months ago 683kB

[root@node22 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

node22 Ready control-plane,master 54m v1.23.10

node33 Ready <none> 10m v1.23.10

node44 Ready <none> 46s v1.23.10
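When checking a cluster after nodes join, it can help to list only the nodes that are not yet Ready. A small sketch that filters the `kubectl get node` table is below; the function name `not_ready_nodes` is illustrative, and it assumes the default column layout shown above (NAME first, STATUS second).

```shell
#!/usr/bin/env bash
# Read `kubectl get node` output on stdin and print the names of nodes
# whose STATUS column is anything other than exactly "Ready".
not_ready_nodes() {
    # NR > 1 skips the header row; $1 is NAME and $2 is STATUS.
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Typical use is `kubectl get node | not_ready_nodes`; empty output means every node reports Ready. A cordoned node (status `Ready,SchedulingDisabled`) is also listed, because the comparison is an exact match.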

[root@node22 ~]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-flannel kube-flannel-ds-6vx7v 1/1 Running 0 11m

kube-flannel kube-flannel-ds-gq22n 1/1 Running 0 13m

kube-flannel kube-flannel-ds-hwsf2 1/1 Running 0 104s

kube-system coredns-7b56f6bc55-2pwnh 1/1 Running 0 54m

kube-system coredns-7b56f6bc55-g458w 1/1 Running 0 54m

kube-system etcd-node22 1/1 Running 0 54m

kube-system kube-apiserver-node22 1/1 Running 0 54m

kube-system kube-controller-manager-node22 1/1 Running 0 54m

kube-system kube-proxy-5qtrm 1/1 Running 0 54m

kube-system kube-proxy-g6mp9 1/1 Running 0 11m

kube-system kube-proxy-rmvxl 1/1 Running 0 104s

kube-system kube-scheduler-node22 1/1 Running 0 54m

[root@node22 ~]# kubeadm token create --print-join-command    # if the token has expired, this creates a new one (valid for 24 hours)

kubeadm join 192.168.0.22:6443 --token tj4l5m.6sw83edwt54y4vr8 --discovery-token-ca-cert-hash sha256:2f9bd3ce9d972679af0a32322253594ed2deff22e91440c1b9664c7d5da6424c

Check the status on the master:

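kubectl get cs    # component status (this API is deprecated since v1.19)

kubectl get node    # node information

kubectl get pod -n kube-system

Note: KUBECONFIG is an environment variable. Unless it is set in .bash_profile, export it in every new shell (for example after a reboot) before running kubectl: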

export KUBECONFIG=/etc/kubernetes/admin.conf
