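I. Configuring the Hadoop plugin

1. Install the plugin

Copy hadoop-eclipse-plugin-1.1.2.jar into the eclipse/plugins directory, then restart Eclipse.

2. Open the Map/Reduce perspective

Go to Window -> Open Perspective -> Other and select Map/Reduce. Its icon is a blue elephant.

3. Add a MapReduce environment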

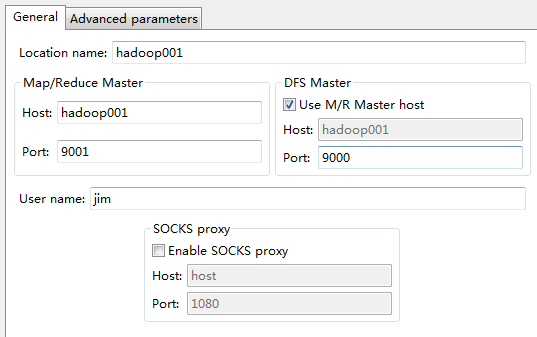

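At the bottom of Eclipse, next to the Console, a new tab named "Map/Reduce Locations" appears. Right-click in the blank area below it, choose "New Hadoop location", and configure it as follows:

Location name (any name you like)

Map/Reduce Master (the JobTracker's IP and port; fill these in from the mapred.job.tracker value configured in mapred-site.xml)

DFS Master (the NameNode's IP and port; fill these in from the fs.default.name value configured in core-site.xml)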

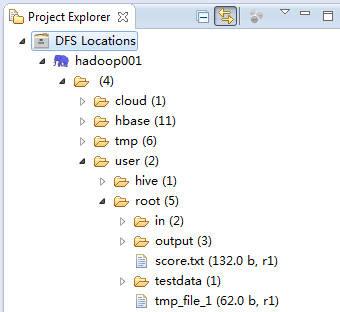
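If it is unclear which host and port belong in each field, the two properties can be read straight out of the cluster's config files. The following is a minimal sketch using only the JDK's XML parser; the class name SiteConfigReader is made up for illustration, and the inline XML strings are sample values (matching the host and ports used later in this article), not the contents of any real cluster's files.

```java
import java.io.ByteArrayInputStream;
import java.net.URI;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper, not part of Hadoop or the Eclipse plugin.
class SiteConfigReader {

    // Parses a Hadoop-style <configuration> document into name -> value pairs.
    static Map<String, String> parse(String xml) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        Map<String, String> props = new LinkedHashMap<>();
        NodeList nodes = doc.getElementsByTagName("property");
        for (int i = 0; i < nodes.getLength(); i++) {
            Element p = (Element) nodes.item(i);
            String name = p.getElementsByTagName("name").item(0).getTextContent().trim();
            String value = p.getElementsByTagName("value").item(0).getTextContent().trim();
            props.put(name, value);
        }
        return props;
    }

    public static void main(String[] args) throws Exception {
        // Sample values only (assumption): replace with the text of your own site files.
        String coreSite = "<configuration><property><name>fs.default.name</name>"
                + "<value>hdfs://hadoop001:9000</value></property></configuration>";
        String mapredSite = "<configuration><property><name>mapred.job.tracker</name>"
                + "<value>hadoop001:9001</value></property></configuration>";

        // fs.default.name is a URI, so host and port can be taken from it directly.
        URI dfs = URI.create(parse(coreSite).get("fs.default.name"));
        System.out.println("DFS Master: host=" + dfs.getHost() + " port=" + dfs.getPort());

        // mapred.job.tracker is a plain host:port pair.
        String[] jt = parse(mapredSite).get("mapred.job.tracker").split(":");
        System.out.println("Map/Reduce Master: host=" + jt[0] + " port=" + jt[1]);
    }
}
```

The host and port printed for each property are the values to enter in the corresponding field of the "New Hadoop location" dialog.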

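4. Modify HDFS content from Eclipse

After the previous step, the configured HDFS appears in "Project Explorer" on the left. Right-click it to create files, delete files, and perform other operations.

Note: Eclipse does not show the change immediately after an operation; you have to refresh the view manually each time.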

II. Developing a Hadoop program
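The WordCount job in this section splits each input line on whitespace, emits a (word, 1) pair per token, and sums the counts for each word. As a rough, cluster-free sketch of that same logic, the plain-Java class below does the counting in memory. It uses no Hadoop classes; the name LocalWordCount and the sample lines are invented for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical stand-in for the mapper and reducer, for local experimentation only.
class LocalWordCount {

    // Combines the map step (one count per whitespace-separated token)
    // and the reduce step (sum per word) in a single in-memory pass.
    static Map<String, Integer> count(List<String> lines) {
        // TreeMap keeps words sorted, similar to how reducer keys arrive in sorted order.
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {
            StringTokenizer itr = new StringTokenizer(line);
            while (itr.hasMoreTokens()) {
                counts.merge(itr.nextToken(), 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("hello hadoop", "hello eclipse");
        for (Map.Entry<String, Integer> e : count(lines).entrySet()) {
            System.out.println(e.getKey() + "\t" + e.getValue());
        }
    }
}
```

Running a small input through a sketch like this first gives a reference result to compare against the job's output on HDFS.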

1. WordCount.java

import java.io.File;
import java.io.IOException;
import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapred.JobConf;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class WordCount {

    // Emits (word, 1) for every whitespace-separated token in a line.
    public static class TokenizerMapper extends
            Mapper<Object, Text, Text, IntWritable> {

        private final static IntWritable one = new IntWritable(1);
        private Text word = new Text();

        public void map(Object key, Text value, Context context)
                throws IOException, InterruptedException {
            StringTokenizer itr = new StringTokenizer(value.toString());
            while (itr.hasMoreTokens()) {
                word.set(itr.nextToken());
                context.write(word, one);
            }
        }
    }

    // Sums the counts for each word; also used as the combiner.
    public static class IntSumReducer extends
            Reducer<Text, IntWritable, Text, IntWritable> {

        private IntWritable result = new IntWritable();

        public void reduce(Text key, Iterable<IntWritable> values,
                Context context) throws IOException, InterruptedException {
            int sum = 0;
            for (IntWritable val : values) {
                sum += val.get();
            }
            result.set(sum);
            context.write(key, result);
        }
    }

    public static void main(String[] args) throws Exception {
        // Build a temporary jar from the compiled classes in "bin"
        File jarFile = EJob.createTempJar("bin");
        if (jarFile == null) {
            // createTempJar returns null when the directory does not exist
            throw new IllegalStateException("Directory 'bin' not found");
        }
        EJob.addClasspath("/cloud/hadoop/conf");
        ClassLoader classLoader = EJob.getClassLoader();
        Thread.currentThread().setContextClassLoader(classLoader);

        // Set the Hadoop configuration parameters
        Configuration conf = new Configuration();
        conf.set("fs.default.name", "hdfs://hadoop001:9000");
        conf.set("hadoop.job.user", "root");
        conf.set("mapred.job.tracker", "hadoop001:9001");

        Job job = new Job(conf, "word count");
        // Ship the temporary jar with the job
        ((JobConf) job.getConfiguration()).setJar(jarFile.toString());
        job.setJarByClass(WordCount.class);
        job.setMapperClass(TokenizerMapper.class);
        job.setCombinerClass(IntSumReducer.class);
        job.setReducerClass(IntSumReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        String input = "hdfs://hadoop001:9000/user/root/tmp_file_1";
        String output = "hdfs://hadoop001:9000/user/root/tmp_file_2";
        FileInputFormat.addInputPath(job, new Path(input));
        FileOutputFormat.setOutputPath(job, new Path(output));

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}

2. EJob.java

import java.io.File;
import java.io.FileInputStream;
import java.io.FileOutputStream;
import java.io.IOException;
import java.net.URL;
import java.net.URLClassLoader;
import java.util.ArrayList;
import java.util.List;
import java.util.jar.JarEntry;
import java.util.jar.JarOutputStream;
import java.util.jar.Manifest;

// Helper class that builds a temporary jar file
public class EJob {

    // Extra classpath entries registered through addClasspath
    private static List<URL> classPath = new ArrayList<URL>();

    // Packs the directory "root" into a temporary jar; returns null if root does not exist
    public static File createTempJar(String root) throws IOException {
        if (!new File(root).exists()) {
            return null;
        }
        Manifest manifest = new Manifest();
        manifest.getMainAttributes().putValue("Manifest-Version", "1.0");
        final File jarFile = File.createTempFile("EJob-", ".jar", new File(
                System.getProperty("java.io.tmpdir")));

        // Delete the temporary jar when the JVM exits
        Runtime.getRuntime().addShutdownHook(new Thread() {
            public void run() {
                jarFile.delete();
            }
        });

        JarOutputStream out = new JarOutputStream(
                new FileOutputStream(jarFile), manifest);
        createTempJarInner(out, new File(root), "");
        out.flush();
        out.close();
        return jarFile;
    }

    // Recursively adds f to the jar; "base" is the entry name built so far
    private static void createTempJarInner(JarOutputStream out, File f,
            String base) throws IOException {
        if (f.isDirectory()) {
            File[] fl = f.listFiles();
            if (base.length() > 0) {
                base = base + "/";
            }
            for (int i = 0; i < fl.length; i++) {
                createTempJarInner(out, fl[i], base + fl[i].getName());
            }
        } else {
            out.putNextEntry(new JarEntry(base));
            FileInputStream in = new FileInputStream(f);
            try {
                byte[] buffer = new byte[1024];
                int n = in.read(buffer);
                while (n != -1) {
                    out.write(buffer, 0, n);
                    n = in.read(buffer);
                }
            } finally {
                in.close();
            }
        }
    }

    // Returns a class loader that also sees the registered classpath entries
    public static ClassLoader getClassLoader() {
        ClassLoader parent = Thread.currentThread().getContextClassLoader();
        if (parent == null) {
            parent = EJob.class.getClassLoader();
        }
        if (parent == null) {
            parent = ClassLoader.getSystemClassLoader();
        }
        return new URLClassLoader(classPath.toArray(new URL[0]), parent);
    }

    // Registers an existing file or directory as an extra classpath entry
    public static void addClasspath(String component) {
        if ((component != null) && (component.length() > 0)) {
            try {
                File f = new File(component);
                if (f.exists()) {
                    // toURI().toURL() replaces the deprecated File.toURL()
                    URL key = f.getCanonicalFile().toURI().toURL();
                    if (!classPath.contains(key)) {
                        classPath.add(key);
                    }
                }
            } catch (IOException e) {
                // Paths that cannot be resolved are skipped silently
            }
        }
    }
}
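EJob.createTempJar packs the compiled classes directory into a temporary jar so the job can be submitted straight from the IDE. To check what ends up inside such a jar, the packaging step can be tried on its own. The sketch below is an alternative take on the same idea using java.nio and try-with-resources (Java 8 or later is assumed); the class name JarPackSketch and the demo/Hello.class file are hypothetical and exist only for this illustration.

```java
import java.io.File;
import java.io.IOException;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Enumeration;
import java.util.List;
import java.util.jar.JarEntry;
import java.util.jar.JarFile;
import java.util.jar.JarOutputStream;
import java.util.jar.Manifest;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical sketch; not a drop-in replacement for EJob.
class JarPackSketch {

    // Packs every regular file under root into a new temporary jar.
    static File pack(Path root) throws IOException {
        List<Path> files;
        try (Stream<Path> walk = Files.walk(root)) {
            files = walk.filter(Files::isRegularFile).collect(Collectors.toList());
        }
        Manifest manifest = new Manifest();
        manifest.getMainAttributes().putValue("Manifest-Version", "1.0");
        File jar = File.createTempFile("sketch-", ".jar");
        jar.deleteOnExit();
        try (OutputStream fos = Files.newOutputStream(jar.toPath());
             JarOutputStream out = new JarOutputStream(fos, manifest)) {
            for (Path p : files) {
                // Jar entry names use '/' regardless of the platform separator.
                String name = root.relativize(p).toString()
                        .replace(File.separatorChar, '/');
                out.putNextEntry(new JarEntry(name));
                Files.copy(p, out);
                out.closeEntry();
            }
        }
        return jar;
    }

    // Lists the entry names stored in a jar, for inspection.
    static List<String> entryNames(File jar) throws IOException {
        List<String> names = new ArrayList<>();
        try (JarFile jf = new JarFile(jar)) {
            Enumeration<JarEntry> entries = jf.entries();
            while (entries.hasMoreElements()) {
                names.add(entries.nextElement().getName());
            }
        }
        return names;
    }

    public static void main(String[] args) throws IOException {
        // Build a throwaway directory that stands in for Eclipse's "bin" folder.
        Path root = Files.createTempDirectory("classes");
        Files.createDirectories(root.resolve("demo"));
        Files.write(root.resolve("demo").resolve("Hello.class"), new byte[] {1, 2, 3});
        System.out.println(entryNames(pack(root)));
    }
}
```

The entry names must match the package layout of the classes (for example demo/Hello.class for a class in package demo); otherwise the task JVMs cannot load the mapper and reducer from the jar.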

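This article describes how to develop Hadoop programs with Eclipse on Windows. First, install the plugin by adding hadoop-eclipse-plugin.jar to Eclipse's plugins directory and restarting Eclipse. Next, open the Map/Reduce perspective and configure the Map/Reduce Master and DFS Master addresses. HDFS then appears in Project Explorer, where files can be created and deleted. Finally, the article briefly covers the steps for developing a Hadoop program, using WordCount.java as the example.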
