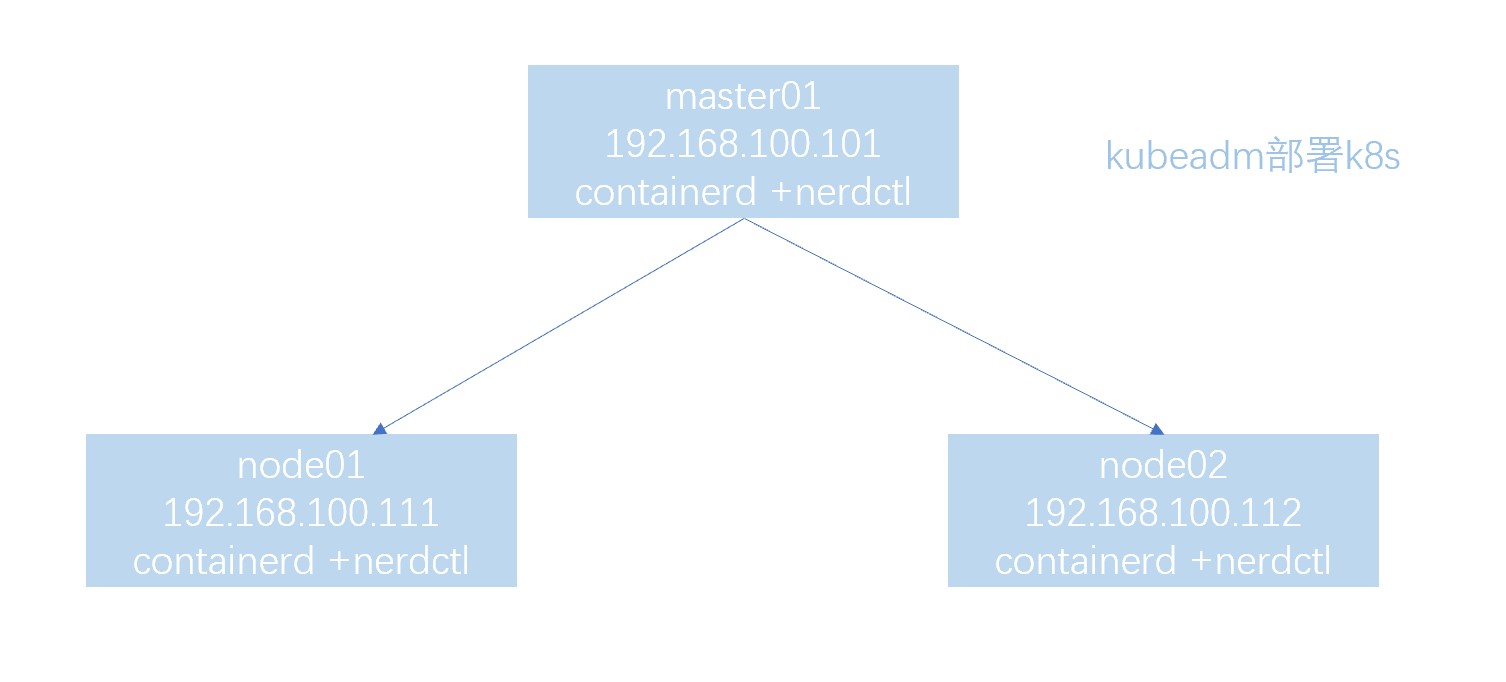

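Deploying Kubernetes with kubeadm

The deployment architecture is shown in the diagram (operating system: Ubuntu 20.04.3 LTS).

Installing the container runtime

Both the master node and the worker nodes of the k8s cluster need a container runtime and the CNI network plugins installed in advance. Here both are installed from binary packages by a script: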

root@k8s-master01:~# cd /usr/local/src/

root@k8s-master01:/usr/local/src# tar -xf runtime-docker20.10.19-containerd1.6.20-binary-install.tar.gz

root@k8s-master01:/usr/local/src# cat runtime-install.sh #the install script is shown below; it accepts either docker or containerd as its argument

#!/bin/bash

DIR=`pwd`

PACKAGE_NAME="docker-20.10.19.tgz"

DOCKER_FILE=${DIR}/${PACKAGE_NAME}

if test -z ${USERNAME};then

USERNAME=docker

fi

centos_install_docker(){

grep "Kernel" /etc/issue &> /dev/null

if [ $? -eq 0 ];then

/bin/echo "Current system: `cat /etc/redhat-release`. Starting system initialization, docker-compose setup and docker installation" && sleep 1

systemctl stop firewalld && systemctl disable firewalld && echo "firewalld stopped and disabled" && sleep 1

systemctl stop NetworkManager && systemctl disable NetworkManager && echo "NetworkManager stopped and disabled" && sleep 1

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/sysconfig/selinux && setenforce 0 && echo "SELinux disabled" && sleep 1

\cp ${DIR}/limits.conf /etc/security/limits.conf

\cp ${DIR}/sysctl.conf /etc/sysctl.conf

lsmod |grep conntrack &>/dev/null|| /usr/sbin/modprobe ip_conntrack

lsmod |grep br_netfilter &>/dev/null|| /usr/sbin/modprobe br_netfilter

/usr/sbin/sysctl -p

/bin/tar xvf ${DOCKER_FILE}

\cp docker/* /usr/local/bin

mkdir /etc/docker && \cp daemon.json /etc/docker

\cp containerd.service /lib/systemd/system/containerd.service

\cp docker.service /lib/systemd/system/docker.service

\cp docker.socket /lib/systemd/system/docker.socket

\cp ${DIR}/docker-compose-Linux-x86_64_1.28.6 /usr/bin/docker-compose

groupadd docker && useradd docker -s /sbin/nologin -g docker

id -u ${USERNAME} &> /dev/null

if [ $? -ne 0 ];then

useradd ${USERNAME}

usermod ${USERNAME} -G docker

else

usermod ${USERNAME} -G docker

fi

docker_install_success_info

fi

}

ubuntu_install_docker(){

grep "Ubuntu" /etc/issue &> /dev/null

if [ $? -eq 0 ];then

/bin/echo "Current system: `cat /etc/issue`. Starting system initialization, docker-compose setup and docker installation" && sleep 1

\cp ${DIR}/limits.conf /etc/security/limits.conf

\cp ${DIR}/sysctl.conf /etc/sysctl.conf

lsmod |grep conntrack &>/dev/null || /usr/sbin/modprobe ip_conntrack

lsmod |grep br_netfilter &>/dev/null|| /usr/sbin/modprobe br_netfilter

/usr/sbin/sysctl -p

/bin/tar xvf ${DOCKER_FILE}

\cp docker/* /usr/local/bin

mkdir /etc/docker && \cp daemon.json /etc/docker

\cp containerd.service /lib/systemd/system/containerd.service

\cp docker.service /lib/systemd/system/docker.service

\cp docker.socket /lib/systemd/system/docker.socket

\cp ${DIR}/docker-compose-Linux-x86_64_1.28.6 /usr/bin/docker-compose

groupadd docker && useradd docker -r -m -s /sbin/nologin -g docker

id -u ${USERNAME} &> /dev/null

if [ $? -ne 0 ];then

groupadd -r ${USERNAME}

useradd -r -m -s /bin/bash -g ${USERNAME} ${USERNAME}

usermod ${USERNAME} -G docker

else

usermod ${USERNAME} -G docker

fi

docker_install_success_info

fi

}

centos_install_containerd(){

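#same CentOS check as in centos_install_docker; without it, $? would hold the status of whatever command ran last

grep "Kernel" /etc/issue &> /dev/null

if [ $? -eq 0 ];then

/bin/echo "Current system: `cat /etc/redhat-release`. Starting system initialization and installing/configuring containerd, nerdctl, cni and runc" && sleep 1

systemctl stop firewalld && systemctl disable firewalld && echo "firewalld stopped and disabled" && sleep 1

systemctl stop NetworkManager && systemctl disable NetworkManager && echo "NetworkManager stopped and disabled" && sleep 1

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/sysconfig/selinux && setenforce 0 && echo "SELinux disabled" && sleep 1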

\cp ${DIR}/limits.conf /etc/security/limits.conf

\cp ${DIR}/sysctl.conf /etc/sysctl.conf

lsmod |grep conntrack &>/dev/null|| /usr/sbin/modprobe ip_conntrack

lsmod |grep br_netfilter &>/dev/null|| /usr/sbin/modprobe br_netfilter

/usr/sbin/sysctl -p

DIR=`pwd`

PACKAGE_NAME="containerd-1.6.20-linux-amd64.tar.gz"

CONTAINERD_FILE=${DIR}/${PACKAGE_NAME}

NERDCTL="nerdctl-1.3.0-linux-amd64.tar.gz"

CNI="cni-plugins-linux-amd64-v1.2.0.tgz"

RUNC="runc.amd64"

mkdir -p /etc/containerd /etc/nerdctl

tar xvf ${CONTAINERD_FILE} && cp bin/* /usr/local/bin/

\cp runc.amd64 /usr/bin/runc && chmod a+x /usr/bin/runc

\cp config.toml /etc/containerd/config.toml

\cp containerd.service /lib/systemd/system/containerd.service

#CNI

mkdir /opt/cni/bin -p

tar xvf ${CNI} -C /opt/cni/bin/

#nerdctl

tar xvf ${NERDCTL} -C /usr/local/bin/

\cp nerdctl.toml /etc/nerdctl/nerdctl.toml

containerd_install_success_info

fi

}

ubuntu_install_containerd(){

grep "Ubuntu" /etc/issue &> /dev/null

if [ $? -eq 0 ];then

/bin/echo "Current system: `cat /etc/issue`. Starting system initialization and installing/configuring containerd, nerdctl, cni and runc" && sleep 1

\cp ${DIR}/limits.conf /etc/security/limits.conf

\cp ${DIR}/sysctl.conf /etc/sysctl.conf

lsmod |grep conntrack &>/dev/null || /usr/sbin/modprobe ip_conntrack

lsmod |grep br_netfilter &>/dev/null|| /usr/sbin/modprobe br_netfilter

/usr/sbin/sysctl -p

DIR=`pwd`

PACKAGE_NAME="containerd-1.6.20-linux-amd64.tar.gz"

CONTAINERD_FILE=${DIR}/${PACKAGE_NAME}

NERDCTL="nerdctl-1.3.0-linux-amd64.tar.gz"

CNI="cni-plugins-linux-amd64-v1.2.0.tgz"

RUNC="runc.amd64"

mkdir -p /etc/containerd /etc/nerdctl

tar xvf ${CONTAINERD_FILE} && cp bin/* /usr/local/bin/

\cp runc.amd64 /usr/bin/runc && chmod a+x /usr/bin/runc

\cp config.toml /etc/containerd/config.toml

\cp containerd.service /lib/systemd/system/containerd.service

#CNI

mkdir /opt/cni/bin -p

tar xvf ${CNI} -C /opt/cni/bin/

#nerdctl

tar xvf ${NERDCTL} -C /usr/local/bin/

\cp nerdctl.toml /etc/nerdctl/nerdctl.toml

containerd_install_success_info

fi

}

containerd_install_success_info(){

/bin/echo "Starting the containerd server and enabling it at boot!"

#start containerd service

systemctl daemon-reload && systemctl restart containerd && systemctl enable containerd

/bin/echo "containerd is:" `systemctl is-active containerd`

sleep 0.5 && /bin/echo "containerd server installed. Welcome to the container world of containerd!" && sleep 1

}

docker_install_success_info(){

/bin/echo "Starting the docker server and enabling it at boot!"

systemctl enable containerd.service && systemctl restart containerd.service

systemctl enable docker.service && systemctl restart docker.service

systemctl enable docker.socket && systemctl restart docker.socket

sleep 0.5 && /bin/echo "docker server installed. Welcome to the world of docker!" && sleep 1

}

usage(){

echo "Usage: $0 containerd|docker"

}

main(){

RUNTIME=$1

case ${RUNTIME} in

docker)

centos_install_docker

ubuntu_install_docker

;;

containerd)

ubuntu_install_containerd

;;

*)

usage;

esac;

}

main $1

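root@k8s-master01:/usr/local/src# bash runtime-install.sh containerd #run the script with the containerd argument

root@k8s-master01:/usr/local/src# systemctl status containerd #verify that containerd started correctly

Repeat the installation steps above on the other two worker nodes:

root@k8s-node01:~# cd /usr/local/src/ #on node01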

root@k8s-node01:/usr/local/src# tar -xf runtime-docker20.10.19-containerd1.6.20-binary-install.tar.gz

root@k8s-node01:/usr/local/src# bash runtime-install.sh containerd

root@k8s-node02:~# cd /usr/local/src/ #on node02

root@k8s-node02:/usr/local/src# tar -xf runtime-docker20.10.19-containerd1.6.20-binary-install.tar.gz

root@k8s-node02:/usr/local/src# bash runtime-install.sh containerd
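The install script above picks its code path by grepping /etc/issue. As a minimal alternative sketch, assuming the host ships an os-release file, the distribution can be read from its ID field instead. The function name detect_os_id and its optional file argument are illustrative and are not part of the original script.

```bash
# Hypothetical helper, not part of runtime-install.sh.
# Prints the ID field of an os-release file (for example "ubuntu" or "centos").
# The file path is a parameter so the function can be tried on a copy.
detect_os_id() {
  local file="${1:-/etc/os-release}"
  # Source the file in a subshell so its variables do not leak into the caller.
  ( . "$file" && printf '%s\n' "$ID" )
}

# Example dispatch (assumption: the install functions from the script are defined):
#   case "$(detect_os_id)" in
#     ubuntu) ubuntu_install_containerd ;;
#     centos) centos_install_containerd ;;
#   esac
```

Reading a structured field avoids depending on the login banner text, which administrators sometimes customize.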

Installing kubeadm, kubectl and kubelet:

Run the following on all three hosts:

root@k8s-master01:/usr/local/src# apt-get update && apt-get install -y apt-transport-https

root@k8s-node01:/usr/local/src# apt-get update && apt-get install -y apt-transport-https

root@k8s-node02:/usr/local/src# apt-get update && apt-get install -y apt-transport-https

root@k8s-master01:/usr/local/src# curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

root@k8s-node01:/usr/local/src# curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

root@k8s-node02:/usr/local/src# curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

root@k8s-master01:/usr/local/src# cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

root@k8s-node01:/usr/local/src# cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

root@k8s-node02:/usr/local/src# cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

root@k8s-master01:/usr/local/src# apt-get update && apt-cache madison kubeadm #list the available kubeadm versions

root@k8s-node01:/usr/local/src# apt-get update && apt-cache madison kubeadm

root@k8s-node02:/usr/local/src# apt-get update && apt-cache madison kubeadm

root@k8s-master01:/usr/local/src# apt-get install -y kubeadm=1.26.3-00 kubectl=1.26.3-00 kubelet=1.26.3-00

root@k8s-node01:/usr/local/src# apt-get install -y kubeadm=1.26.3-00 kubectl=1.26.3-00 kubelet=1.26.3-00

root@k8s-node02:/usr/local/src# apt-get install -y kubeadm=1.26.3-00 kubectl=1.26.3-00 kubelet=1.26.3-00
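Because the same version string has to be typed for three packages on three hosts, a small helper can reduce the chance of a mismatch. The sketch below is only an illustration: pinned_packages is a made-up name, and the apt-mark hold step in the comment is an optional extra that the original steps do not include.

```bash
# Hypothetical helper: builds "name=version" arguments for apt-get so the
# same version string is applied to every package.
pinned_packages() {
  local version="$1"; shift
  local pkg out=""
  for pkg in "$@"; do
    out="${out:+$out }${pkg}=${version}"
  done
  printf '%s\n' "$out"
}

# Example (run as root on each host):
#   apt-get install -y $(pinned_packages 1.26.3-00 kubeadm kubectl kubelet)
#   apt-mark hold kubeadm kubectl kubelet   # keeps routine upgrades from moving them
```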

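Downloading the Kubernetes images:

root@k8s-master01:/usr/local/src# kubeadm config images list --kubernetes-version v1.26.3 #list the images needed by k8s v1.26.3; by default they are pulled from the official registry, which can be slow, so the image repository can be pointed at a mirror inside China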

registry.k8s.io/kube-apiserver:v1.26.3

registry.k8s.io/kube-controller-manager:v1.26.3

registry.k8s.io/kube-scheduler:v1.26.3

registry.k8s.io/kube-proxy:v1.26.3

registry.k8s.io/pause:3.9

registry.k8s.io/etcd:3.5.6-0

registry.k8s.io/coredns/coredns:v1.9.3

root@k8s-master01:/usr/local/src# kubeadm config images pull --image-repository="registry.cn-hangzhou.aliyuncs.com/google_containers" --kubernetes-version=v1.26.3 #point the repository at Alibaba Cloud and pull the images in advance

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.26.3

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.26.3

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.26.3

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.26.3

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.9

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.6-0

[config/images] Pulled registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.9.3
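Comparing the two listings above, the mirror keeps only the final name:tag part of each upstream reference; note that coredns loses its coredns/ sub-path. The following sketch reproduces that mapping for cases where image names need to be rewritten by hand, for example when pre-loading an internal registry. mirror_image is a hypothetical name, and the rule is inferred from the output shown here rather than from any documented guarantee.

```bash
# Hypothetical helper: maps an upstream image reference to the mirror by keeping
# only the last path component ("name:tag") and prefixing the mirror repository.
mirror_image() {
  local repo="$1" image="$2"
  printf '%s/%s\n' "$repo" "${image##*/}"
}

# Example:
#   MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers
#   kubeadm config images list --kubernetes-version v1.26.3 |
#     while read -r img; do mirror_image "$MIRROR" "$img"; done
```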

Kernel parameter tuning (all cluster hosts):

Edit the sysctl.conf file. The runtime install script has already applied these settings by default:

root@k8s-master01:/usr/local/src# cat /etc/sysctl.conf

net.ipv4.ip_forward=1

vm.max_map_count=262144

kernel.pid_max=4194303

fs.file-max=1000000

net.ipv4.tcp_max_tw_buckets=6000

net.netfilter.nf_conntrack_max=2097152

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-cal

Loading kernel modules:

root@k8s-master01:/usr/local/src# cat >> /etc/modules-load.d/modules.conf << 'EOF'

ip_vs

ip_vs_lc

ip_vs_lblc

ip_vs_lblcr

ip_vs_rr

ip_vs_wrr

ip_vs_sh

ip_vs_dh

ip_vs_fo

ip_vs_nq

ip_vs_sed

ip_vs_ftp

ip_tables

ip_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

xt_set

br_netfilter

nf_conntrack

overlay

EOF
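The modules listed in this file are loaded at boot. To load them right away, the file can be read back and passed to modprobe. This is a rough sketch under the assumption that the file contains one module name per line; list_modules is a hypothetical helper name.

```bash
# Hypothetical helper: prints the module names from a modules-load.d style file,
# skipping blank lines and comment lines and dropping repeated names.
list_modules() {
  awk 'NF && $1 !~ /^[#;]/ && !seen[$1]++ { print $1 }' "$1"
}

# Example (as root): load everything now instead of waiting for the next boot.
#   list_modules /etc/modules-load.d/modules.conf | while read -r m; do
#     modprobe "$m" || echo "could not load $m" >&2
#   done
```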

Resource limit tuning; the runtime install script has already applied these settings by default:

root@k8s-master01:/usr/local/src# cat /etc/security/limits.conf

* soft core unlimited

* hard core unlimited

* soft nproc 1000000

* hard nproc 1000000

* soft nofile 1000000

* hard nofile 1000000

* soft memlock 32000

* hard memlock 32000

* soft msgqueue 8192000

* hard msgqueue 8192000

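Minimizing swap usage; the runtime install script has already applied this setting by default. Note that vm.swappiness=0 only discourages swapping and does not turn swap off, which is why kubeadm init is later run with --ignore-preflight-errors=swap:

root@k8s-master01:/usr/local/src# vim /etc/sysctl.conf

vm.swappiness=0 #set this to 0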

root@k8s-master01:/usr/local/src# sysctl -p
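The sysctl setting above lowers the kernel's tendency to swap. If swap should be switched off entirely, which is what the kubeadm preflight check expects unless it is told to ignore swap, one possible approach is sketched below. comment_out_swap is a hypothetical helper, and it assumes swap entries in /etc/fstab carry the word swap as a whitespace-separated field.

```bash
# Hypothetical helper: reads fstab content on stdin and comments out every
# active line that has "swap" as a whitespace-separated field.
comment_out_swap() {
  sed -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/'
}

# Example (as root):
#   swapoff -a                                    # turn swap off immediately
#   cp /etc/fstab /etc/fstab.bak                  # keep a backup
#   comment_out_swap < /etc/fstab.bak > /etc/fstab
```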

Reboot the cluster hosts once all parameters have been tuned:

root@k8s-master01:/usr/local/src# reboot

root@k8s-node01:/usr/local/src# reboot

root@k8s-node02:/usr/local/src# reboot

Confirm that the tuning and the module loading took effect:

root@k8s-node01:~# lsmod |grep ip_vs

root@k8s-node01:~# lsmod | grep br_netfilter

root@k8s-master01:~# sysctl -a | grep bridge-nf-call-iptables

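Initializing the Kubernetes cluster:

The pod network and the service network of the K8S cluster must not overlap with any network segment used in the production environment; otherwise it will be impossible to interconnect all container networks later.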
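One way to sanity-check candidate ranges before running kubeadm init is a small overlap test. The sketch below handles IPv4 only; ip_to_int and cidr_overlap are illustrative names. The values 10.100.0.0/16 and 10.200.0.0/16 are the pod and service ranges used in this guide, while 192.168.100.0/24 as the host network is an assumption based on the master address 192.168.100.101.

```bash
# Converts a dotted-quad IPv4 address to an integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<EOF
$1
EOF
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeeds (exit status 0) when the two IPv4 CIDR blocks overlap.
# Two blocks overlap exactly when they agree on the shorter of the two prefixes.
cidr_overlap() {
  local ip1="${1%/*}" len1="${1#*/}" ip2="${2%/*}" len2="${2#*/}"
  local min mask
  min=$(( len1 < len2 ? len1 : len2 ))
  mask=$(( min == 0 ? 0 : (0xFFFFFFFF << (32 - min)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$ip1") & mask )) -eq $(( $(ip_to_int "$ip2") & mask )) ]
}

# Example:
#   cidr_overlap 10.100.0.0/16 192.168.100.0/24 && echo "pod CIDR overlaps the host network"
```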

root@k8s-master01:~# kubeadm init --apiserver-advertise-address=192.168.100.101 --apiserver-bind-port=6443 --kubernetes-version=v1.26.3 --pod-network-cidr=10.100.0.0/16 --service-cidr=10.200.0.0/16 --service-dns-domain=cluster.local --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --ignore-preflight-errors=swap

[init] Using Kubernetes version: v1.26.3

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master01 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.200.0.1 192.168.100.101]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master01 localhost] and IPs [192.168.100.101 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master01 localhost] and IPs [192.168.100.101 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 9.501955 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-master01 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8s-master01 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: n9wl12.h1ftewrh71kxxf96

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.100.101:6443 --token n9wl12.h1ftewrh71kxxf96 \

--discovery-token-ca-cert-hash sha256:256adcaa61bb31478cf595bde416d67ab26a0df6fd51e68cdf1462bdf0d5dc40

Run the commands printed after a successful initialization:

root@k8s-master01:~# mkdir -p $HOME/.kube

root@k8s-master01:~# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

root@k8s-master01:~# sudo chown $(id -u):$(id -g) $HOME/.kube/config

Check the cluster nodes and pods:

root@k8s-master01:~# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane 7m16s v1.26.3

root@k8s-master01:~# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-567c556887-ll8s9 0/1 Pending 0 7m22s

kube-system coredns-567c556887-zhwhc 0/1 Pending 0 7m22s

kube-system etcd-k8s-master01 1/1 Running 0 7m36s

kube-system kube-apiserver-k8s-master01 1/1 Running 0 7m38s

kube-system kube-controller-manager-k8s-master01 1/1 Running 0 7m36s

kube-system kube-proxy-qlvk2 1/1 Running 0 7m22s

kube-system kube-scheduler-k8s-master01 1/1 Running 0 7m36s

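Adding the worker nodes:

Join the two worker nodes to the cluster. Run this on both worker nodes; the command is the one printed by the master node after the cluster was initialized successfully, so copy and run it as is: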

root@k8s-node01:~# kubeadm join 192.168.100.101:6443 --token n9wl12.h1ftewrh71kxxf96 \

--discovery-token-ca-cert-hash sha256:256adcaa61bb31478cf595bde416d67ab26a0df6fd51e68cdf1462bdf0d5dc40

root@k8s-node02:~# kubeadm join 192.168.100.101:6443 --token n9wl12.h1ftewrh71kxxf96 \

--discovery-token-ca-cert-hash sha256:256adcaa61bb31478cf595bde416d67ab26a0df6fd51e68cdf1462bdf0d5dc40
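Bootstrap tokens created by kubeadm init expire after 24 hours by default, so a join attempted later may need a fresh token. The sketch below checks that a token has the expected shape before it is pasted into a join command; is_bootstrap_token is a hypothetical helper name.

```bash
# Succeeds when the argument has the bootstrap-token shape
# "<6 chars>.<16 chars>", each part made of lowercase letters and digits.
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# If the token printed by kubeadm init is no longer valid, a new join command
# can be printed on the master node with:
#   kubeadm token create --print-join-command
```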

Check the node status from the master node:

root@k8s-master01:~# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady control-plane 24m v1.26.3

k8s-node01 NotReady <none> 4m3s v1.26.3

k8s-node02 NotReady <none> 47s v1.26.3
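The NotReady status is expected at this stage: as the kubeadm init output pointed out, a pod network still has to be deployed. A short filter, sketched below, lists the nodes that are not yet Ready and can be used to poll until the network component is up. not_ready_nodes is a hypothetical helper name.

```bash
# Reads `kubectl get node --no-headers` output on stdin and prints the names of
# nodes whose STATUS column is anything other than exactly "Ready"
# (so a cordoned node reported as "Ready,SchedulingDisabled" is listed too).
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Example: poll until every node is Ready (the 10 second interval is arbitrary).
#   while [ -n "$(kubectl get node --no-headers | not_ready_nodes)" ]; do sleep 10; done
```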

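Deploying the network component:

For the list of network add-ons supported by k8s, see Installing Addons | Kubernetes (https://kubernetes.io/docs/concepts/cluster-administration/addons/).

Calico is deployed here. Download its yaml file and run the component as containers (https://www.tigera.io/project-calico/), adjusting the pod and service network addresses and related settings in the file so that they match the parameters used to initialize the cluster.

Note that this yaml file pulls images. On intranet hosts that cannot reach the internet, point the image addresses at an internal Harbor or another reachable image registry.

root@k8s-master01:~# cd /usr/local/src/

root@k8s-master01:/usr/local/src# vi calico-ipip_ubuntu2004-k8s-1.26.x.yaml #make sure the following settings are in place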

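- name: CALICO_IPV4POOL_CIDR #starts at line 4438

  value: "10.100.0.0/16" #address range that can be allocated to pods; must match the one given when k8s was initialized

#custom subnet size

- name: CALICO_IPV4POOL_BLOCK_SIZE

  value: "24" #subnet size

#establish BGP connections using the IP of the eth0 interface; the default is the server's first interface (first-found), see https://projectcalico.docs.tigera.io/reference/node/configuration

- name: IP_AUTODETECTION_METHOD #starts at line 4400; selects the interface used for the tunnel, which must be set on hosts with several interfaces

  value: "interface=eth0"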
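Since the pool CIDR in the manifest has to match the --pod-network-cidr passed to kubeadm init, it can be worth reading the value back before applying the file. The sketch below assumes the value: line directly follows the name: line, as in the fragment above; calico_pool_cidr is a hypothetical helper name.

```bash
# Prints the value that follows "- name: CALICO_IPV4POOL_CIDR" in a manifest.
# Lines that are commented out are ignored.
calico_pool_cidr() {
  awk '/^[[:space:]]*#/ { next }
       /name:[[:space:]]*CALICO_IPV4POOL_CIDR/ { found = 1; next }
       found && /value:/ { gsub(/"/, "", $2); print $2; exit }' "$1"
}

# Example:
#   [ "$(calico_pool_cidr calico-ipip_ubuntu2004-k8s-1.26.x.yaml)" = "10.100.0.0/16" ] \
#     || echo "pool CIDR does not match --pod-network-cidr" >&2
```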

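Before applying the yaml file to create the Calico components, copy the kubeconfig file to the worker nodes; otherwise the Calico containers fail to be created with an error saying a config file is required: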

root@k8s-node01:~# mkdir -p /root/.kube/

root@k8s-node02:~# mkdir -p /root/.kube/

root@k8s-node01:~# scp 192.168.100.101:/root/.kube/config /root/.kube

root@k8s-node02:~# scp 192.168.100.101:/root/.kube/config /root/.kube

On the master node, create the Calico components from the yaml file:

root@k8s-master01:/usr/local/src# kubectl apply -f calico-ipip_ubuntu2004-k8s-1.26.x.yaml

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

poddisruptionbudget.policy/calico-kube-controllers created

Check the status of the Calico pods:

root@k8s-master01:/usr/local/src# kubectl get pod -A #creation is complete once every pod has reached the Running state

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-79bfdd4898-6h9lf 0/1 Pending 0 77s

kube-system calico-node-5zc7v 0/1 Init:0/2 0 77s

kube-system calico-node-8z65q 0/1 Init:0/2 0 77s

kube-system calico-node-b2v9f 0/1 Init:0/2 0 77s

kube-system coredns-567c556887-ll8s9 0/1 Pending 0 18h

kube-system coredns-567c556887-zhwhc 0/1 Pending 0 18h

kube-system etcd-k8s-master01 1/1 Running 1 (68m ago) 18h

kube-system kube-apiserver-k8s-master01 1/1 Running 1 (68m ago) 18h

kube-system kube-controller-manager-k8s-master01 1/1 Running 1 (68m ago) 18h

kube-system kube-proxy-hrgtw 1/1 Running 1 (68m ago) 18h

kube-system kube-proxy-jntpr 1/1 Running 1 (68m ago) 18h

kube-system kube-proxy-qlvk2 1/1 Running 1 (68m ago) 18h

kube-system kube-scheduler-k8s-master01 1/1 Running 1 (68m ago) 18h

root@k8s-master01:/usr/local/src# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-79bfdd4898-6h9lf 1/1 Running 0 24m

kube-system calico-node-5zc7v 1/1 Running 0 24m

kube-system calico-node-8z65q 1/1 Running 0 24m

kube-system calico-node-b2v9f 1/1 Running 0 24m

kube-system coredns-567c556887-ll8s9 1/1 Running 0 19h

kube-system coredns-567c556887-zhwhc 1/1 Running 0 19h

kube-system etcd-k8s-master01 1/1 Running 1 (92m ago) 19h

kube-system kube-apiserver-k8s-master01 1/1 Running 1 (92m ago) 19h

kube-system kube-controller-manager-k8s-master01 1/1 Running 1 (92m ago) 19h

kube-system kube-proxy-hrgtw 1/1 Running 1 (92m ago) 18h

kube-system kube-proxy-jntpr 1/1 Running 1 (92m ago) 18h

kube-system kube-proxy-qlvk2 1/1 Running 1 (92m ago) 19h

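root@k8s-master01:/usr/local/src# kubectl describe pod -n kube-system calico-kube-controllers-79bfdd4898-6h9lf #show the events of a pod in the cluster

root@k8s-master01:/usr/local/src# kubectl delete pod -n kube-system calico-kube-controllers-79bfdd4898-6h9lf #if a pod never gets created successfully, delete it and it will be recreated automatically

root@k8s-master01:/usr/local/src# kubectl logs -f -n kube-system calico-kube-controllers-79bfdd4898-6h9lf #follow the logs of a pod in the cluster

At this point, the k8s cluster deployed with kubeadm for learning and testing is complete.

Deploying an nginx service in the K8S cluster

Deploy an nginx container from a yaml file and expose it as a web service: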

root@k8s-master01:/usr/local/src# cd /usr/local/src/

root@k8s-master01:/usr/local/src# cat nginx.yaml

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

  labels:

    app: myserver-nginx-deployment-label

  name: myserver-nginx-deployment

  namespace: myserver

spec:

  replicas: 1

  selector:

    matchLabels:

      app: myserver-nginx-selector

  template:

    metadata:

      labels:

        app: myserver-nginx-selector

    spec:

      containers:

      - name: myserver-nginx-container

        image: nginx

        #command: ["/apps/tomcat/bin/run_tomcat.sh"]

        #imagePullPolicy: IfNotPresent

        imagePullPolicy: Always

        ports:

        - containerPort: 80 #port of the service inside the container, as declared in the image

          protocol: TCP

          name: http

        - containerPort: 443 #port of the service inside the container, as declared in the image

          protocol: TCP

          name: https

        env:

        - name: "password"

          value: "123456"

        - name: "age"

          value: "18"

        # resources:

        #   limits:

        #     cpu: 2

        #     memory: 2Gi

        #   requests:

        #     cpu: 500m

        #     memory: 1Gi

---

kind: Service

apiVersion: v1

metadata:

  labels:

    app: myserver-nginx-service-label

  name: myserver-nginx-service

  namespace: myserver

spec:

  type: NodePort

  ports:

  - name: http

    port: 80 #port the service listens on; a service is normally implemented as iptables or IPVS rules

    protocol: TCP

    targetPort: 80 #port the service forwards to in the container, i.e. the port the containerized service listens on; usually the same as the service port

    nodePort: 30004 #port opened on the host; used to reach the containerized service from outside the K8S cluster

  - name: https

    port: 443

    protocol: TCP

    targetPort: 443

    nodePort: 30443

  selector:

    app: myserver-nginx-selector

root@k8s-master01:/usr/local/src# kubectl create ns myserver #create the namespace

namespace/myserver created

root@k8s-master01:/usr/local/src# kubectl apply -f nginx.yaml #create the resources from the yaml file

deployment.apps/myserver-nginx-deployment created

service/myserver-nginx-service created

root@k8s-master01:/usr/local/src# kubectl get pod -n myserver #check the pod status

NAME READY STATUS RESTARTS AGE

myserver-nginx-deployment-596d5d9799-5x9zt 1/1 Running 0 2m11s

root@k8s-master01:/usr/local/src# kubectl get svc -n myserver #show the service; host port 30004 maps to port 80 of the service (myserver-nginx-service)

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

myserver-nginx-service NodePort 10.200.31.148 <none> 80:30004/TCP,443:30443/TCP 2m52s

root@k8s-master01:/usr/local/src# kubectl get ep -n myserver #show the backend mapping; the pod behind the myserver-nginx-service service is 10.100.96.1

NAME ENDPOINTS AGE

myserver-nginx-service 10.100.96.1:443,10.100.96.1:80 4m53s

root@k8s-master01:/usr/local/src# kubectl get pod -o wide -n myserver #show the pod IP, the node it runs on, and so on

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myserver-nginx-deployment-596d5d9799-5x9zt 1/1 Running 0 11m 10.100.96.1 k8s-node02 <none> <none>

Access the nginx service from a local browser; port 30004 on any node works.
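The same check can be scripted from a terminal. The sketch below only builds the URLs, after verifying that the port lies in the default NodePort range of 30000 to 32767. nodeport_urls is a hypothetical helper name, and the NODE01_IP and NODE02_IP variables in the example are placeholders because the worker addresses are not listed in this guide.

```bash
# Prints one URL per node for a NodePort service, after checking that the port
# lies in the default NodePort range (30000-32767).
nodeport_urls() {
  local port="$1" host
  shift
  if [ "$port" -lt 30000 ] || [ "$port" -gt 32767 ]; then
    echo "port $port is outside the default NodePort range" >&2
    return 1
  fi
  for host in "$@"; do
    printf 'http://%s:%s/\n' "$host" "$port"
  done
}

# Example: request the nginx response headers through every node.
#   nodeport_urls 30004 192.168.100.101 "$NODE01_IP" "$NODE02_IP" | xargs -n1 curl -sI
```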

Deploying a tomcat service in the K8S cluster

root@k8s-master01:/usr/local/src# cd /usr/local/src/

root@k8s-master01:/usr/local/src# cat tomcat.yaml #yaml file that creates the tomcat container

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

  labels:

    app: myserver-tomcat-app1-deployment-label

  name: myserver-tomcat-app1-deployment

  namespace: myserver

spec:

  replicas: 1

  selector:

    matchLabels:

      app: myserver-tomcat-app1-selector

  template:

    metadata:

      labels:

        app: myserver-tomcat-app1-selector

    spec:

      containers:

      - name: myserver-tomcat-app1-container

        image: registry.cn-hangzhou.aliyuncs.com/zhangshijie/tomcat-app1:v1

        #command: ["/apps/tomcat/bin/run_tomcat.sh"]

        #imagePullPolicy: IfNotPresent

        imagePullPolicy: Always

        ports:

        - containerPort: 8080

          protocol: TCP

          name: http

        env:

        - name: "password"

          value: "123456"

        - name: "age"

          value: "18"

        # resources:

        #   limits:

        #     cpu: 2

        #     memory: 2Gi

        #   requests:

        #     cpu: 500m

        #     memory: 1Gi

---

kind: Service

apiVersion: v1

metadata:

  labels:

    app: myserver-tomcat-app1-service-label

  name: myserver-tomcat-app1-service

  namespace: myserver

spec:

  type: NodePort

  ports:

  - name: http

    port: 80

    protocol: TCP

    targetPort: 8080

    nodePort: 30005

  selector:

    app: myserver-tomcat-app1-selector

root@k8s-master01:/usr/local/src# kubectl apply -f tomcat.yaml

deployment.apps/myserver-tomcat-app1-deployment created

service/myserver-tomcat-app1-service created

root@k8s-master01:/usr/local/src# kubectl get pod -n myserver #check the pod status

NAME READY STATUS RESTARTS AGE

myserver-nginx-deployment-596d5d9799-5x9zt 1/1 Running 0 18m

myserver-tomcat-app1-deployment-6bb596979f-tfvv8 0/1 ContainerCreating 0 87s

root@k8s-master01:/usr/local/src# kubectl get pod -n myserver #status Running means the pod was created successfully

NAME READY STATUS RESTARTS AGE

myserver-nginx-deployment-596d5d9799-5x9zt 1/1 Running 0 25m

myserver-tomcat-app1-deployment-6bb596979f-tfvv8 1/1 Running 0 8m28s

root@k8s-master01:/usr/local/src# kubectl get svc -n myserver #host port 30005 maps to port 80 of the service (myserver-tomcat-app1-service)

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

myserver-nginx-service NodePort 10.200.31.148 <none> 80:30004/TCP,443:30443/TCP 30m

myserver-tomcat-app1-service NodePort 10.200.236.91 <none> 80:30005/TCP 13m

root@k8s-master01:/usr/local/src# kubectl get ep -n myserver #the backend pod of the myserver-tomcat-app1-service service is 10.100.96.2:8080

NAME ENDPOINTS AGE

myserver-nginx-service 10.100.96.1:443,10.100.96.1:80 37m

myserver-tomcat-app1-service 10.100.96.2:8080 19m

root@k8s-master01:/usr/local/src# kubectl get pod -o wide -n myserver #show the pod IPs and the nodes they run on

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

myserver-nginx-deployment-596d5d9799-5x9zt 1/1 Running 0 38m 10.100.96.1 k8s-node02 <none> <none>

myserver-tomcat-app1-deployment-6bb596979f-tfvv8 1/1 Running 0 20m 10.100.96.2 k8s-node02 <none> <none>

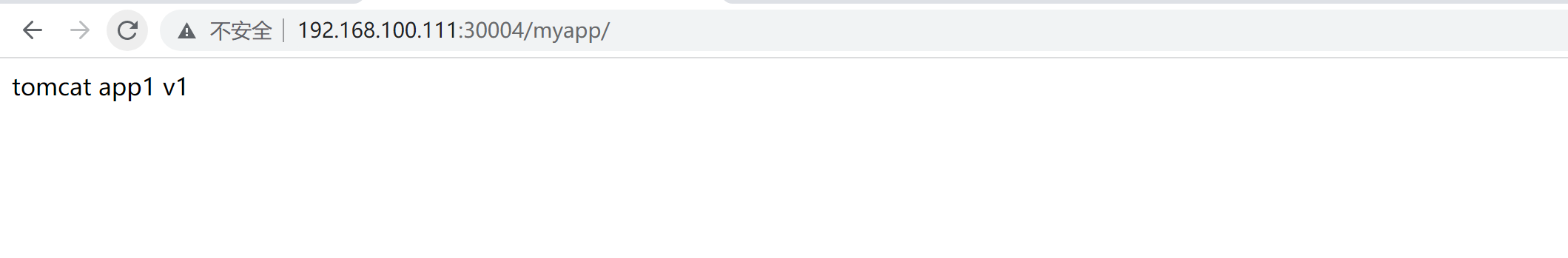

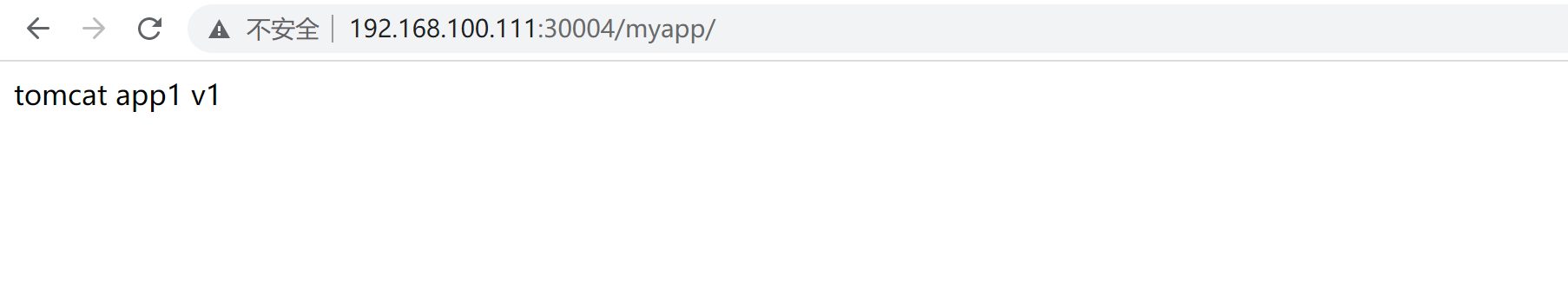

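Access the tomcat service from a local browser; port 30005 on any node works:

Using nginx as a proxy in front of the tomcat backend

Use the nginx container from the two steps above as a proxy in front of the backend tomcat service:

As the screenshot above shows, access does not work by default because no proxy has been configured.

In production this would normally be done by rebuilding the image; for this test the nginx configuration file inside the container is edited directly:

root@k8s-master01:/usr/local/src# kubectl exec -it -n myserver myserver-nginx-deployment-596d5d9799-5x9zt -- bash #enter the container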

root@myserver-nginx-deployment-596d5d9799-5x9zt:/# apt-get update

root@myserver-nginx-deployment-596d5d9799-5x9zt:/# apt-get -y install vim

root@myserver-nginx-deployment-596d5d9799-5x9zt:/# vi /etc/nginx/conf.d/default.conf #add the proxy configuration: requests to /myapp on nginx are forwarded to the service, which load-balances them to the tomcat containers

location /myapp {

proxy_pass http://myserver-tomcat-app1-service:80;

}

root@myserver-nginx-deployment-596d5d9799-5x9zt:/# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

root@myserver-nginx-deployment-596d5d9799-5x9zt:/# nginx -s reload #reload the configuration

2023/04/21 07:36:43 [notice] 437#437: signal process started
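The proxy_pass directive above uses the short service name, which resolves because the nginx pod runs in the same namespace (myserver) as the tomcat service. From another namespace the fully qualified name would be needed. The sketch below builds that name; svc_fqdn is a hypothetical helper, and its default domain is the cluster.local value passed as --service-dns-domain during kubeadm init.

```bash
# Builds the fully qualified in-cluster DNS name of a service:
#   <service>.<namespace>.svc.<cluster domain>
svc_fqdn() {
  local name="$1" namespace="$2" domain="${3:-cluster.local}"
  printf '%s.%s.svc.%s\n' "$name" "$namespace" "$domain"
}

# Example:
#   svc_fqdn myserver-tomcat-app1-service myserver
```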

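Access it again from the local machine:

Scaling out the tomcat Deployment behind the service:

root@k8s-master01:/usr/local/src# vi tomcat.yaml #edit the tomcat yaml file

replicas: 2 #line 10; change it to 2, i.e. 2 pods

root@k8s-master01:/usr/local/src# kubectl apply -f tomcat.yaml #apply the change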

deployment.apps/myserver-tomcat-app1-deployment configured

service/myserver-tomcat-app1-service unchanged

root@k8s-master01:/usr/local/src# kubectl get pod -n myserver #a second pod starts being created

NAME READY STATUS RESTARTS AGE

myserver-nginx-deployment-596d5d9799-5x9zt 1/1 Running 0 102m

myserver-tomcat-app1-deployment-6bb596979f-ll2xt 0/1 ContainerCreating 0 90s

myserver-tomcat-app1-deployment-6bb596979f-tfvv8 1/1 Running 0 84m

root@k8s-master01:/usr/local/src# kubectl describe pod -n myserver myserver-tomcat-app1-deployment-6bb596979f-ll2xt #show the current state of the pod

root@k8s-master01:/usr/local/src# kubectl get pod -n myserver #scale-out finished

NAME READY STATUS RESTARTS AGE

myserver-nginx-deployment-596d5d9799-5x9zt 1/1 Running 0 115m

myserver-tomcat-app1-deployment-6bb596979f-ll2xt 1/1 Running 0 15m

myserver-tomcat-app1-deployment-6bb596979f-tfvv8 1/1 Running 0 98m

root@k8s-master01:/usr/local/src# kubectl get ep -n myserver #the tomcat svc now has two endpoints

NAME ENDPOINTS AGE

myserver-nginx-service 10.100.96.1:443,10.100.96.1:80 116m

myserver-tomcat-app1-service 10.100.131.4:8080,10.100.96.2:8080 98m
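To confirm from a script that the service picked up both replicas, the ENDPOINTS column can be counted. This is a rough sketch with a hypothetical helper name, count_endpoints. As an alternative to editing the yaml file, kubectl scale can change the replica count directly, as noted in the comment.

```bash
# Counts the comma-separated addresses in an ENDPOINTS column value.
count_endpoints() {
  if [ -z "$1" ] || [ "$1" = "<none>" ]; then
    echo 0
    return
  fi
  printf '%s\n' "$1" | tr ',' '\n' | wc -l | tr -d ' '
}

# Example:
#   eps=$(kubectl get ep -n myserver myserver-tomcat-app1-service --no-headers | awk '{ print $2 }')
#   count_endpoints "$eps"
#
# Scaling without editing the file:
#   kubectl scale deployment myserver-tomcat-app1-deployment -n myserver --replicas=2
```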

To verify that front-end requests are forwarded to both tomcat pods, change the page content in one of the pods so the two can be told apart:

root@k8s-master01:/usr/local/src# kubectl exec -it -n myserver myserver-tomcat-app1-deployment-6bb596979f-ll2xt -- bash

[root@myserver-tomcat-app1-deployment-6bb596979f-ll2xt /]# cd /data/tomcat/webapps/myapp/

[root@myserver-tomcat-app1-deployment-6bb596979f-ll2xt myapp]# echo "tomcat app2 v1" >index.html

Verify in a local browser: requests to the same address are distributed across the two backend tomcat containers.
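The distribution can also be observed from a terminal by sending a batch of requests and tallying the distinct responses. The sketch below assumes each response can be identified by its first line; tally_responses is a hypothetical helper, and NODE_IP in the example is a placeholder for the address of any cluster node.

```bash
# Reads one response per line on stdin and prints "<count> <response>" for each
# distinct response, ordered by response text.
tally_responses() {
  awk '{ n[$0]++ } END { for (k in n) print n[k], k }' | sort -k2
}

# Example: 20 requests through the nginx proxy, keeping the first line of each page.
#   for i in $(seq 1 20); do
#     curl -s "http://$NODE_IP:30004/myapp/" | head -n 1
#   done | tally_responses
```

If both pods serve traffic, two distinct lines should appear in the tally, one of them being the modified page.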

部署K8S集群官⽅dashboard:

K8S官方dashboard是开源的,使用go语言开发,有版本要求,不同的K8S版本需要不同版本的dashboard,具体兼容版本查看官网文档

官网地址:GitHub - kubernetes/dashboard: General-purpose web UI for Kubernetes clusters

部署dashboard需要两个镜像:kubernetesui/dashboard:v2.7.0、kubernetesui/metrics-scraper:v1.0.8,如果无法上网,需要提前down到本地镜像仓库

root@k8s-master01:/usr/local/src# cd /usr/local/src/dashboard-v2.7.0/

root@k8s-master01:/usr/local/src/dashboard-v2.7.0# ls -ltrh # three YAML files

total 16K

-rw-r--r-- 1 root root 374 Apr 21 16:30 admin-user.yaml

-rw-r--r-- 1 root root 212 Apr 21 16:30 admin-secret.yaml

-rw-r--r-- 1 root root 7.5K Apr 21 16:30 dashboard-v2.7.0.yaml

root@k8s-master01:/usr/local/src/dashboard-v2.7.0# vi dashboard-v2.7.0.yaml # edit the Service so the dashboard is reachable from outside the cluster

spec:

  type: NodePort

  ports:

    - port: 443

      targetPort: 8443

      nodePort: 30000 # expose the dashboard on port 30000 of every node

  selector:

    k8s-app: kubernetes-dashboard

root@k8s-master01:/usr/local/src/dashboard-v2.7.0# kubectl apply -f dashboard-v2.7.0.yaml # apply the dashboard manifest first; since v1.24 Kubernetes no longer auto-creates a token Secret for a ServiceAccount, so one must be created manually

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

root@k8s-master01:/usr/local/src/dashboard-v2.7.0# kubectl apply -f admin-user.yaml # create the admin user

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

root@k8s-master01:/usr/local/src/dashboard-v2.7.0# kubectl apply -f admin-secret.yaml # create a token Secret for the user

secret/dashboard-admin-user created
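The contents of admin-user.yaml and admin-secret.yaml are not shown here. The sketch below writes what such files commonly look like, reconstructed from the resource names in the output above (ServiceAccount admin-user, ClusterRoleBinding admin-user, Secret dashboard-admin-user). Binding to the built-in cluster-admin ClusterRole is an assumption, as is the `OUT_DIR` variable; the author's actual files may differ.

```shell
#!/bin/sh
# Write sketches of the two manifests into OUT_DIR (defaults to a fresh temp directory).
OUT_DIR=${OUT_DIR:-$(mktemp -d)}

# ServiceAccount plus a ClusterRoleBinding (assumed here: cluster-admin).
cat > "$OUT_DIR/admin-user.yaml" <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
EOF

# Long-lived token Secret; the annotation ties it to the ServiceAccount,
# and the control plane then fills in ca.crt, namespace and token.
cat > "$OUT_DIR/admin-secret.yaml" <<'EOF'
apiVersion: v1
kind: Secret
type: kubernetes.io/service-account-token
metadata:
  name: dashboard-admin-user
  namespace: kubernetes-dashboard
  annotations:
    kubernetes.io/service-account.name: "admin-user"
EOF

echo "manifests written to $OUT_DIR"
```

The Secret has to be applied after the ServiceAccount exists, which matches the order of the two `kubectl apply` commands above.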

root@k8s-master01:/usr/local/src/dashboard-v2.7.0# kubectl get pod -A # check the new kubernetes-dashboard namespace; all of its pods are Running

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-79bfdd4898-6h9lf 1/1 Running 0 5h48m

kube-system calico-node-5zc7v 1/1 Running 0 5h48m

kube-system calico-node-8z65q 1/1 Running 0 5h48m

kube-system calico-node-b2v9f 1/1 Running 0 5h48m

kube-system coredns-567c556887-ll8s9 1/1 Running 0 24h

kube-system coredns-567c556887-zhwhc 1/1 Running 0 24h

kube-system etcd-k8s-master01 1/1 Running 1 (6h56m ago) 24h

kube-system kube-apiserver-k8s-master01 1/1 Running 1 (6h56m ago) 24h

kube-system kube-controller-manager-k8s-master01 1/1 Running 1 (6h56m ago) 24h

kube-system kube-proxy-hrgtw 1/1 Running 1 (6h56m ago) 24h

kube-system kube-proxy-jntpr 1/1 Running 1 (6h56m ago) 24h

kube-system kube-proxy-qlvk2 1/1 Running 1 (6h56m ago) 24h

kube-system kube-scheduler-k8s-master01 1/1 Running 1 (6h56m ago) 24h

kubernetes-dashboard dashboard-metrics-scraper-7bc864c59-lckvl 1/1 Running 0 5m57s

kubernetes-dashboard kubernetes-dashboard-6c7ccbcf87-drjwc 1/1 Running 0 5m57s

myserver myserver-nginx-deployment-596d5d9799-5x9zt 1/1 Running 0 162m

myserver myserver-tomcat-app1-deployment-6bb596979f-ll2xt 1/1 Running 0 61m

myserver myserver-tomcat-app1-deployment-6bb596979f-tfvv8 1/1 Running 0 144m

root@k8s-master01:/usr/local/src/dashboard-v2.7.0# kubectl get secret -A |grep admin # list the Secret that was created

kubernetes-dashboard dashboard-admin-user kubernetes.io/service-account-token 3 4m37s

root@k8s-master01:/usr/local/src/dashboard-v2.7.0# kubectl describe secret -n kubernetes-dashboard dashboard-admin-user # the token in the output is the login credential; it can be used to access and manage the whole cluster, so it carries very high privileges

Name: dashboard-admin-user

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: 7dcbf592-bd60-48de-af47-51f7677d34f7

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1099 bytes

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6InJQcHRiWnVBLVZTSW9MUDQ3NUNIYktrZlNzRERnSnZiNFFJSFFNY2hoVUUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdXNlciIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhZG1pbi11c2VyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiN2RjYmY1OTItYmQ2MC00OGRlLWFmNDctNTFmNzY3N2QzNGY3Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmVybmV0ZXMtZGFzaGJvYXJkOmFkbWluLXVzZXIifQ.ALDe9XZ1xzMs1H1VeO5RQnZv99Gwz8nDvOLAPxGUej_yvx0A9avLOfWpfOI-jxT1GVVj1nWlDM7lp8QZCvO2xoNXj7MVjw9uFToxG9sAJgTuWxZAYdsC3XMl2U1bGQiRVMoEs3WcBypadlhaPzfWbOQQtqkCnp0-fnhnsCIwv3CjJAgkM79WDgZzx5VBmj05svLNwYnSARTU70NIMvVGLN1LGXLn_0JVZbZQ88VugpzNuOrPvkq9gDtptzWgG3wW-iQjrmwTecSB8iSol7-b1wjsMrI3G5bz5xjWzCSjxFM4cjGqUTLSNaezbfP_9jAHNxOO5Eq12LYVP4K0GJYTRg
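To get only the token, for example to paste it into the login page, the Secret can be read with a JSONPath query instead of `describe`. This is a small sketch; the helper name `get_sa_token` is an assumption. Unlike `kubectl describe`, `kubectl get -o jsonpath` returns the value base64-encoded, so it has to be decoded.

```shell
#!/bin/sh
# get_sa_token NAMESPACE SECRET
# Print the decoded bearer token stored in a kubernetes.io/service-account-token Secret.
get_sa_token() {
    kubectl get secret "$2" -n "$1" -o jsonpath='{.data.token}' | base64 -d
}

# Usage:
#   get_sa_token kubernetes-dashboard dashboard-admin-user
# On v1.24 and later, a short-lived token can be requested instead of using the Secret:
#   kubectl -n kubernetes-dashboard create token admin-user
```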

root@k8s-master01:/usr/local/src/dashboard-v2.7.0# kubectl get svc -n kubernetes-dashboard # exposed on node port 30000 over HTTPS

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.200.212.96 <none> 8000/TCP 28m

kubernetes-dashboard NodePort 10.200.204.119 <none> 443:30000/TCP 28m

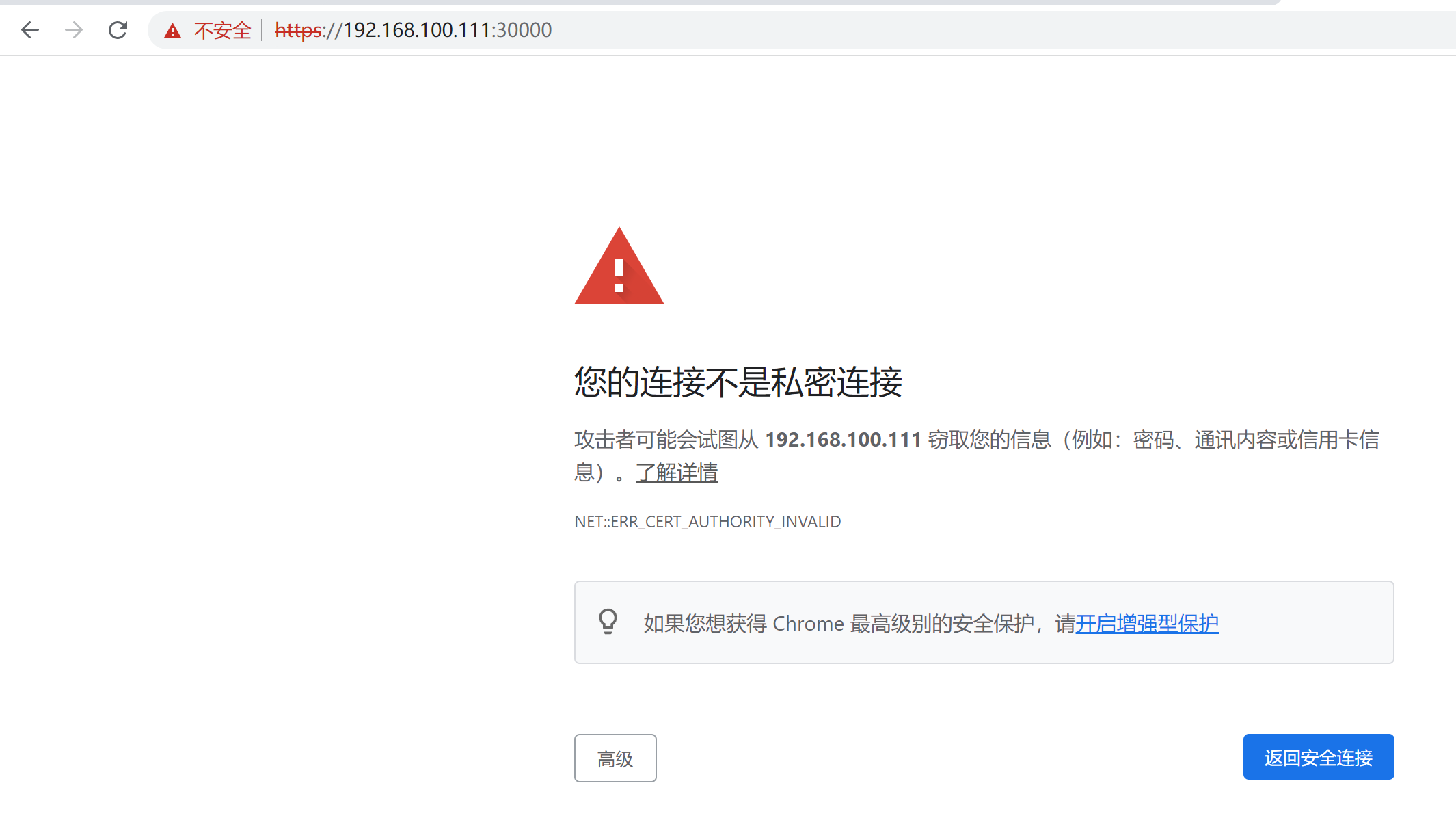

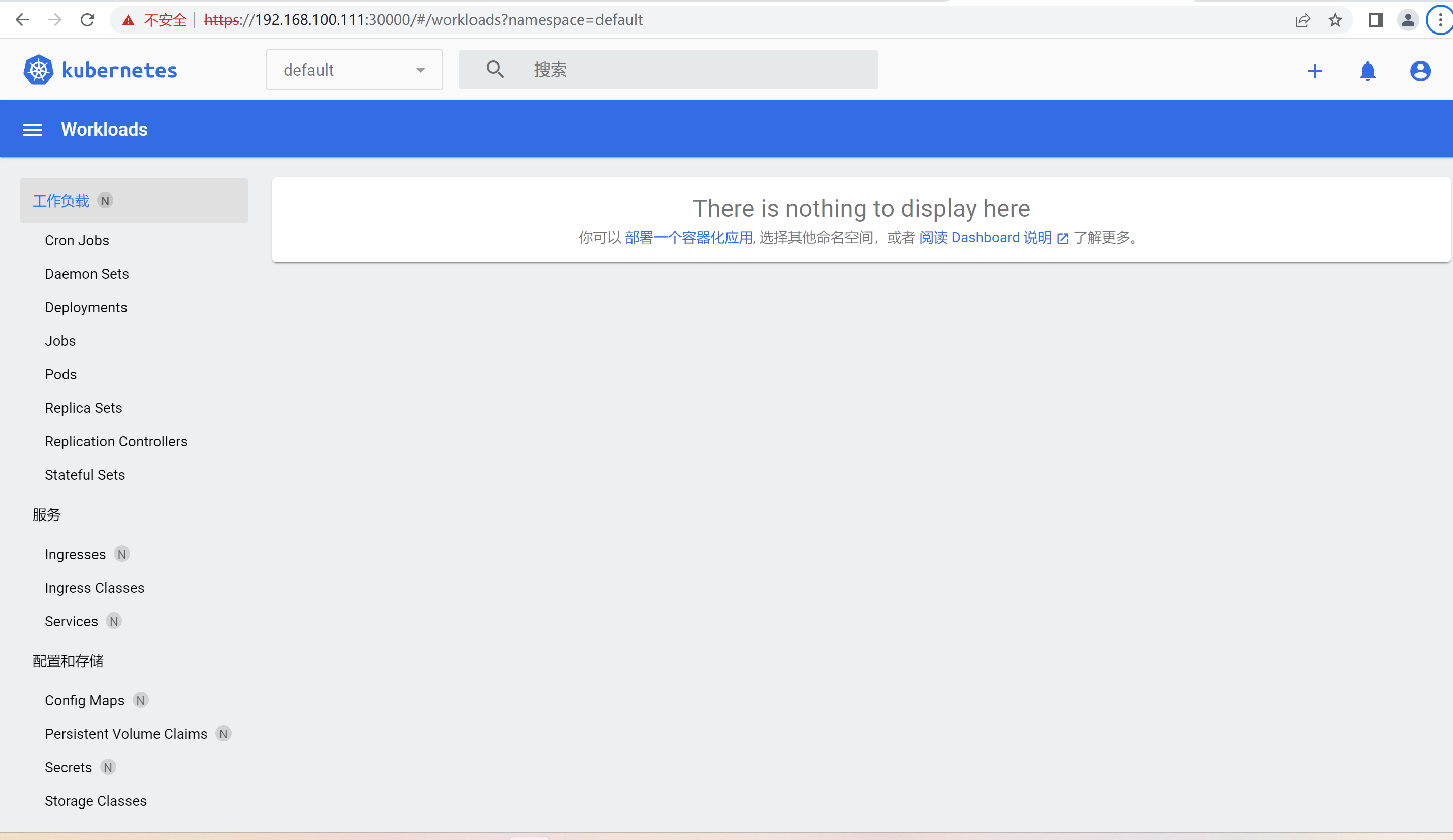

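Access the dashboard from a local browser (HTTPS on port 30000 of any node):

Click Advanced and trust the certificate.

Enter the token obtained above and click Sign in.

From this page the Kubernetes cluster can be managed.

Third-party Kubernetes dashboards

Third-party dashboard tools with richer functionality include Kuboard (developed in China) and KubeSphere (an open-source PaaS platform from QingCloud).

How Services are implemented in a Kubernetes cluster

Service functionality in a Kubernetes cluster relies on the kernel, through either IPVS or iptables. Which one is used is determined by the kube-proxy configuration; a kubeadm deployment uses iptables by default.

root@k8s-node01:~# apt-get -y install ipvsadm # install the IPVS management tool

root@k8s-node01:~# ipvsadm -Ln # no IPVS rules: iptables is in use by default

root@k8s-node01:~# iptables-save > node1iptables # export the iptables rules

To implement Services with IPVS instead, edit the kube-proxy ConfigMap:

root@k8s-master01:/usr/local/src# kubectl edit configmap -n kube-system kube-proxy

kind: KubeProxyConfiguration # line 46

metricsBindAddress: "" # line 47

mode: "ipvs" # line 48: change this line; it is empty by default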

After the change, reboot the nodes and check the IPVS rules again to confirm that it took effect:
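A full node reboot is not strictly required, because kube-proxy reads its configuration at startup and recreating its pods should be enough. The sketch below assumes the kubeadm defaults (a DaemonSet named kube-proxy in kube-system, with the configuration stored under the ConfigMap key `config.conf`); the function names are illustrative. It also assumes the IPVS kernel modules are available on each node; as far as I know, kube-proxy may otherwise fall back to iptables or fail to start, depending on the version.

```shell
#!/bin/sh
# Print the value of the first "mode:" key in a KubeProxyConfiguration read on stdin.
extract_proxy_mode() {
    sed -n 's/^[[:space:]]*mode:[[:space:]]*"\{0,1\}\([^"[:space:]]*\)"\{0,1\}[[:space:]]*$/\1/p' |
        head -n 1
}

# Show the mode currently stored in the kube-proxy ConfigMap.
configured_proxy_mode() {
    kubectl get configmap kube-proxy -n kube-system -o jsonpath='{.data.config\.conf}' |
        extract_proxy_mode
}

# Recreate the kube-proxy pods so they pick up the edited ConfigMap.
restart_kube_proxy() {
    kubectl rollout restart daemonset kube-proxy -n kube-system &&
        kubectl rollout status daemonset kube-proxy -n kube-system
}

# Usage on the master node:
#   configured_proxy_mode      # expected to print "ipvs" after the edit
#   restart_kube_proxy
# On a node, the running mode can then be queried on the default metrics port:
#   curl -s 127.0.0.1:10249/proxyMode
```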

root@k8s-node02:~# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.100.112:30000 rr

-> 10.100.96.11:8443 Masq 1 0 0

TCP 192.168.100.112:30004 rr

-> 10.100.96.12:80 Masq 1 0 0

TCP 192.168.100.112:30005 rr

-> 10.100.96.9:8080 Masq 1 0 0

-> 10.100.131.9:8080 Masq 1 0 0

TCP 192.168.100.112:30443 rr

-> 10.100.96.12:443 Masq 1 0 0

TCP 10.100.96.0:30000 rr

-> 10.100.96.11:8443 Masq 1 0 0

TCP 10.100.96.0:30004 rr

-> 10.100.96.12:80 Masq 1 0 0

TCP 10.100.96.0:30005 rr

-> 10.100.96.9:8080 Masq 1 0 0

-> 10.100.131.9:8080 Masq 1 0 0

TCP 10.100.96.0:30443 rr

-> 10.100.96.12:443 Masq 1 0 0

TCP 10.200.0.1:443 rr

-> 192.168.100.101:6443 Masq 1 7 0

TCP 10.200.0.10:53 rr

-> 10.100.131.10:53 Masq 1 0 0

-> 10.100.131.12:53 Masq 1 0 0

TCP 10.200.0.10:9153 rr

-> 10.100.131.10:9153 Masq 1 0 0

-> 10.100.131.12:9153 Masq 1 0 0

TCP 10.200.31.148:80 rr

-> 10.100.96.12:80 Masq 1 0 0

TCP 10.200.31.148:443 rr

-> 10.100.96.12:443 Masq 1 0 0

TCP 10.200.204.119:443 rr

-> 10.100.96.11:8443 Masq 1 0 0

TCP 10.200.212.96:8000 rr

-> 10.100.96.10:8000 Masq 1 0 0

TCP 10.200.236.91:80 rr

-> 10.100.96.9:8080 Masq 1 0 0

-> 10.100.131.9:8080 Masq 1 0 0

UDP 10.200.0.10:53 rr

-> 10.100.131.10:53 Masq 1 0 0

-> 10.100.131.12:53 Masq 1 0 0
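The listing above is long, so it can help to condense it to one line per virtual server with its number of backends. The following is a minimal sketch using awk; the function name `summarize_ipvs` is an assumption, and it only relies on the `ipvsadm -Ln` layout shown above (a TCP/UDP line per virtual server followed by one `->` line per real server).

```shell
#!/bin/sh
# Read `ipvsadm -Ln` output on stdin and print "<proto> <vip:port> <backend count>"
# for every virtual server, in the order they appear.
summarize_ipvs() {
    awk '
        $1 == "TCP" || $1 == "UDP" {
            vs = $1 " " $2; order[++n] = vs; count[vs] = 0; next
        }
        # Real-server lines start with "->"; the column header is skipped.
        $1 == "->" && vs != "" && $2 != "RemoteAddress:Port" { count[vs]++ }
        END { for (i = 1; i <= n; i++) print order[i], count[order[i]] }
    '
}

# Usage on a node:
#   ipvsadm -Ln | summarize_ipvs
```

For the tomcat Service (10.200.236.91:80) this reports two backends, which matches the two endpoints listed by `kubectl get ep` earlier.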

This completes the kubeadm deployment of the Kubernetes cluster, which is intended for testing and learning.
