理解LGWR,Log File Sync Waits以及Commit的性能问题

一.概要:

1. Commit和log filesync的工作机制

2. 为什么log file wait太久

3. 如何去度量问题出在那里呢?

二.log file sync等待的原因

1. 默认情况下我们commit一个事务是要等待logfile sync,这其中包括:

(1)User commit(用户提交的统计信息可以通过v$sesstat来查看)

(2)DDL-这一部分主要是由于递归的事务提交所产生

(3)递归的数据字典DML操作

2. Rollbacks导致log file sync等待

(1)Userrollbacks-用户或者由应用发出的rollback操作所致

(2)Transactionrollbacks:1,由于一些失败的操作导致oracle内部的rollback 2.空间分配,或者ASSM相关的问题,以及用户取消的长查询,被kill掉的session等等。

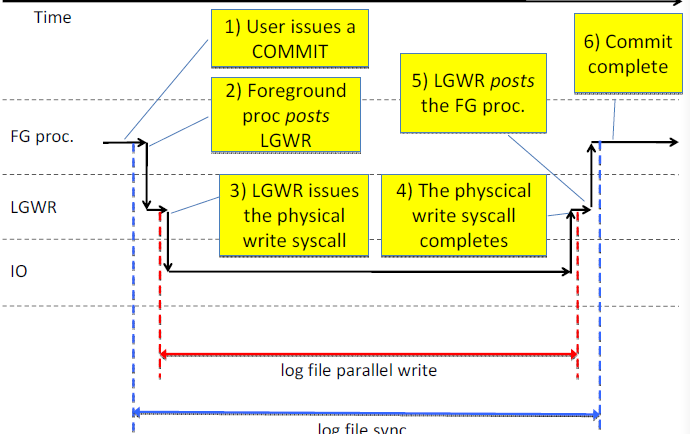

下图为Commit和log file sync相关的流程图:

Log file sync performance>disk IO speed

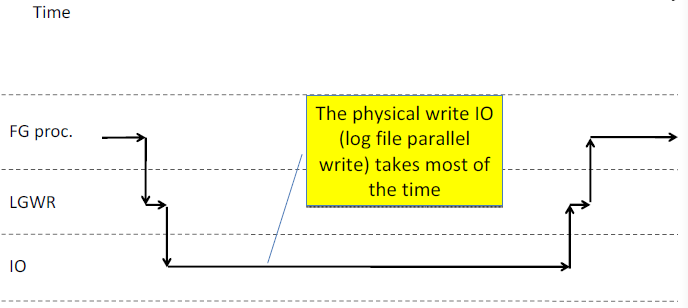

****大多数log file sync的等待时间其实都是花费在logfile parallel write,类似与DBWR会等待db file parallel write

****其它的log file sync等待花费在调度延迟,IPC通信延迟等等

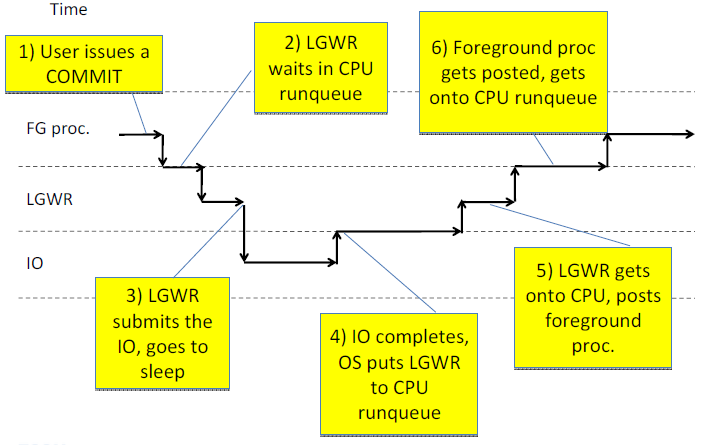

1. 前台进程对LGWR发出调用,然后到sleep状态下面看看Log file sync等待的整个流程:

此时log file sync等待开始记数

次调用在Unix平台是通过信号量来实现

2. LGWR被唤醒,得到CPU时间片来工作

LGWR发出IO请求

LGWR转去sleep,并且等待log file parallel write

3. 当在存储级别完成IO调用后OS唤醒LGWR进程

LGWR继续去获得CPU时间片

此时标记log file parallel write等待完成,Post相关信息给前台进程

4. 前台进程被LGWR唤醒,前台进程得到CPU时间片并且标记log file sync等待完成

通过snapper脚本来度量LGWR的速度:

- <span style="font-family:'Comic Sans MS';">---------------------------------------------------------------------------------

- SID, USERNAME , TYPE, STATISTIC , DELTA, HDELTA/SEC, %TIME, GRAPH

- ---------------------------------------------------------------------------------

- 1096, (LGWR) , STAT, messages sent , 12 , 12,

- 1096, (LGWR) , STAT, messages received , 10 , 10,

- 1096, (LGWR) , STAT, background timeouts , 1 , 1,

- 1096, (LGWR) , STAT, physical write total IO requests , 40, 40,

- 1096, (LGWR) , STAT, physical write total multi block request, 38, 38,

- 1096, (LGWR) , STAT, physical write total bytes, 2884608 , 2.88M,

- 1096, (LGWR) , STAT, calls to kcmgcs , 20 , 20,

- 1096, (LGWR) , STAT, redo wastage , 4548 , 4.55k,

- 1096, (LGWR) , STAT, redo writes , 10 , 10,

- 1096, (LGWR) , STAT, redo blocks written , 2817 , 2.82k,

- 1096, (LGWR) , STAT, redo write time , 25 , 25,

- 1096, (LGWR) , WAIT, LGWR wait on LNS , 1040575 , 1.04s, 104.1%, |@@@@@@@@@@|

- 1096, (LGWR) , WAIT, log file parallel write , 273837 , 273.84ms, 27.4%,|@@@ |

- 1096, (LGWR) , WAIT, events in waitclass Other , 1035172 , 1.04s , 103.5%,|@@@@@@@@@@|</span>

- oracle@linux01:~$ strace -cp `pgrep -f lgwr`

- Process 12457 attached - interrupt to quit

- ^CProcess 12457 detached

- % time seconds usecs/call calls errors syscall

- ------ ----------- ----------- --------- --------- --------------

- 100.00 0.010000 263 38 3 semtimedop

- 0.00 0.000000 0 213 times

- 0.00 0.000000 0 8 getrusage

- 0.00 0.000000 0 701 gettimeofday

- 0.00 0.000000 0 41 io_getevents

- 0.00 0.000000 0 41 io_submit

- 0.00 0.000000 0 2 semop

- 0.00 0.000000 0 37 semctl

- ------ ----------- ----------- --------- --------- --------------

- 100.00 0.010000 1081 3 total

Redo,commit相关的latch tuning

1.redo allocation latches-故名思议,在私有现成写redo到log buffer时保护分配空间的latch

2.redo copy latches-当从私有内存区域copy redo到log buffer时需要的latch直到相关redo流被copy到log buffer,,那么LGWR进程

直到已经copy完成可以写buffers到磁盘,此时LGWR将等待LGWR wait for redo copy事件,相关的可以被调整的参数:_log_simultaneous_copies

等待事件:

1.log file sync

2.log file parallel write

3.log file single write

可以获取相关的统计信息(v$sesstat,v$sysstat)

(1.redo size 2.redo writing time 3.user commits 4 user rollbacks 5.transaction rollbacks)

下面看一个非commit问题的等待事件:log buffer space-此事件主要是由于redo log buffer太小,LGWR刷出redo导致争用,或者由于IO子系统太慢.根据很多人的经验,相对log buffer设置大一点能够缓解log file sync,这只是相对而言.如果你滴业务类型,每次commit都是比较大的写入,而且系统的整个IO已经达到存储子系统的瓶颈,那么增大log buffer将是无济于事的。根据MOS的很多文档参考,在10g中还是不建议设置次参数。

log file single write:

单块写redo IO大多数情况下仅仅用于logfile header block的读和写,其中log switch是主要的情况,当归档发生时需要update log header,所以可能是LGWR和ARCH等待此事件。

如下是log switch发生时的trace文件:

- WAIT #0: nam='log file sequential read' ela= 12607 log#=0

- block#=1

- WAIT #0: nam='log file sequential read' ela= 21225 log#=1

- block#=1

- WAIT #0: nam='control file sequential read' ela= 358 file#=0

- WAIT #0: nam='log file single write' ela= 470 log#=0 block#=1

- WAIT #0: nam='log file single write' ela= 227 log#=1 block#=1

- LGWR trace file:

- *** 2012-06-10 11:36:06.759

- Warning: log write time 690ms, size 19KB

- *** 2012-06-10 11:37:23.778

- Warning: log write time 52710ms, size 0KB

- *** 2012-06-10 11:37:27.302

- Warning: log write time 3520ms, size 144KB

看下面我们某库的AWR信息:

- Load Profile Per Second Per Transaction Per Exec Per Call

- ~~~~~~~~~~~~ --------------- --------------- ---------- ----------

- Redo size: 15,875,849.0 121,482.8

- Logical reads: 42,403.5 324.5

- Block changes: 34,759.1 266.0

- Physical reads: 46.0 0.4

- Physical writes: 3,417.9 26.2

- User calls: 569.6 4.4

- Parses: 292.3 2.2

- Hard parses: 0.1 0.0

- W/A MB processed: 0.5 0.0

- Logons: 10.7 0.1

- Executes: 552.8 4.2

- Rollbacks: 42.9 0.3

- Transactions: 130.7

- Top 5 Timed Foreground Events

- ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

- Avg

- wait % DB

- Event Waits Time(s) (ms) time Wait Class

- ------------------------------ ------------ ----------- ------ ------ ----------

- DB CPU 37,301 76.5

- log file sync 1,665,900 7,732 5 15.9 Commit

- db file sequential read 711,221 6,614 9 13.6 User I/O

- buffer busy waits 366,589 440 1 .9 Concurrenc

- gc current multi block request 192,791 230 1 .5 Cluster

这是某库的2号节点,还不算太忙,只是由于业务类型每次写入的量都很大,log file sync等待占用DB time的16%

看看后台进程等待:

- <span style="font-family:Comic Sans MS;"> Avg

- %Time Total Wait wait Waits % bg

- Event Waits -outs Time (s) (ms) /txn time

- -------------------------- ------------ ----- ---------- ------- -------- ------

- db file parallel write 11,968,325 0 24,481 2 5.7 71.2

- log file parallel write 1,503,192 0 3,863 3 0.7 11.2</span>

***如上信息log file sync占用了DB time的16%,avg wait(5),那么LGWR等待占用的比例为多少呢?占用了平均每次相应的40%.那么这主要是因为业务原因,已经达到我们存储的IO瓶颈.

***此库平均每s的吞吐量在200M左右

下面看看我使用脚本lfsdiag.sql收集的部分信息:

- INST_ID EVENT WAIT_TIME_MILLI WAIT_COUNT

- ---------- ---------------------------------------- --------------- ----------

- 1 wait for scn ack 1 4243024

- 1 wait for scn ack 2 728196

- 1 wait for scn ack 4 1133400

- 1 wait for scn ack 8 1157120

- 1 wait for scn ack 16 88333

- 1 wait for scn ack 32 3883

- 1 wait for scn ack 64 429

- 1 wait for scn ack 128 80

- 1 wait for scn ack 256 34

- 1 wait for scn ack 512 48

- 2 wait for scn ack 1 55024800

- 2 wait for scn ack 2 6658764

- 2 wait for scn ack 4 6802492

- 2 wait for scn ack 8 4400949

- 2 wait for scn ack 16 564950

- 2 wait for scn ack 32 21712

- 2 wait for scn ack 64 3190

- 2 wait for scn ack 128 912

- 2 wait for scn ack 256 390

- 2 wait for scn ack 512 508

- 1 log file sync 1 49708644

- 1 log file sync 2 4285471

- 1 log file sync 4 3929029

- 1 log file sync 8 2273533

- 1 log file sync 16 709349

- 1 log file sync 32 257827

- 1 log file sync 64 10464

- 1 log file sync 128 2371

- 1 log file sync 256 1582

- 1 log file sync 512 1979

- 1 log file sync 1024 1200

- 2 log file sync 1 647580137

- 2 log file sync 2 56421028

- 2 log file sync 4 42559988

- 2 log file sync 8 26002340

- 2 log file sync 16 12821558

- 2 log file sync 32 4429073

- 2 log file sync 64 229397

- 2 log file sync 128 42685

- 2 log file sync 256 22693

- 2 log file sync 512 23922

- 2 log file sync 1024 215090

- 1 log file switch completion 1 141

- 1 log file switch completion 2 27

- 1 log file switch completion 4 35

- 1 log file switch completion 8 72

- 1 log file switch completion 16 237

- 1 log file switch completion 32 453

- 1 log file switch completion 64 387

- 1 log file switch completion 128 31

- 2 log file switch completion 1 956

- 2 log file switch completion 2 508

- 2 log file switch completion 4 1005

- 2 log file switch completion 8 1858

- 2 log file switch completion 16 4506

- 2 log file switch completion 32 5569

- 2 log file switch completion 64 6957

- 2 log file switch completion 128 319

- 2 log file switch completion 256 24

- 2 log file switch completion 512 108

- 2 log file switch completion 1024 1

- 1 log file parallel write 1 56713575

- 1 log file parallel write 2 2952904

- 1 log file parallel write 4 1832942

- 1 log file parallel write 8 785097

- 1 log file parallel write 16 386755

- 1 log file parallel write 32 229099

- 1 log file parallel write 64 8552

- 1 log file parallel write 128 1461

- 1 log file parallel write 256 914

- 1 log file parallel write 512 231

- 1 log file parallel write 1024 21

- 1 log file parallel write 2048 3

- 2 log file parallel write 1 708078642

- 2 log file parallel write 2 31616460

- 2 log file parallel write 4 16087368

- 2 log file parallel write 8 5656461

- 2 log file parallel write 16 3121042

- 2 log file parallel write 32 1995505

- 2 log file parallel write 64 44298

- 2 log file parallel write 128 7506

- 2 log file parallel write 256 2582

- 2 log file parallel write 512 536

- 2 log file parallel write 1024 464

- 2 log file parallel write 2048 26

- 2 log file parallel write 4096 0

- 2 log file parallel write 8192 0

- 2 log file parallel write 16384 0

- 2 log file parallel write 32768 0

- 2 log file parallel write 65536 0

- 2 log file parallel write 131072 0

- 2 log file parallel write 262144 0

- 2 log file parallel write 524288 1

- 1 gcs log flush sync 1 4366103

- 1 gcs log flush sync 2 72108

- 1 gcs log flush sync 4 52374

- 1 gcs log flush sync 8 23374

- INST_ID EVENT WAIT_TIME_MILLI WAIT_COUNT

- ---------- ---------------------------------------- --------------- ----------

- 1 gcs log flush sync 16 13518

- 1 gcs log flush sync 32 12450

- 1 gcs log flush sync 64 11307

- 1 gcs log flush sync 128 4

- 2 gcs log flush sync 1 9495464

- 2 gcs log flush sync 2 263718

- 2 gcs log flush sync 4 222876

- 2 gcs log flush sync 8 148562

- 2 gcs log flush sync 16 68586

- 2 gcs log flush sync 32 33704

- 2 gcs log flush sync 64 5231

- 2 gcs log flush sync 128 1

- 1 gc current block 2-way 1 30064919

- 1 gc current block 2-way 2 353563

- 1 gc current block 2-way 4 239425

- 1 gc current block 2-way 8 29994

- 1 gc current block 2-way 16 3203

- 1 gc current block 2-way 32 1661

- 1 gc current block 2-way 64 1501

- 1 gc current block 2-way 128 273

- 1 gc current block 2-way 256 153

- 1 gc current block 2-way 512 22

- 1 gc current block 2-way 1024 119

- 2 gc current block 2-way 1 36168617

- 2 gc current block 2-way 2 303236

- 2 gc current block 2-way 4 148934

- 2 gc current block 2-way 8 13304

- 2 gc current block 2-way 16 2140

- 2 gc current block 2-way 32 1635

- 2 gc current block 2-way 64 1114

- 2 gc current block 2-way 128 210

- 2 gc current block 2-way 256 28

- 2 gc current block 2-way 512 12

- 2 gc current block 2-way 1024 12

- 2 gc current block 2-way 2048 3

- 2 gc current block 2-way 4096 2

- 1 gc cr grant 2-way 1 76502000

- 1 gc cr grant 2-way 2 476023

- 1 gc cr grant 2-way 4 564802

- 1 gc cr grant 2-way 8 61560

- 1 gc cr grant 2-way 16 5657

- 1 gc cr grant 2-way 32 3011

- 1 gc cr grant 2-way 64 440

- 1 gc cr grant 2-way 128 217

- 1 gc cr grant 2-way 256 6

- 2 gc cr grant 2-way 1 155966394

- 2 gc cr grant 2-way 2 740788

- 2 gc cr grant 2-way 4 748834

- 2 gc cr grant 2-way 8 59464

- 2 gc cr grant 2-way 16 9889

- 2 gc cr grant 2-way 32 7236

- 2 gc cr grant 2-way 64 937

- 2 gc cr grant 2-way 128 463

- 2 gc cr grant 2-way 256 14

- 2 gc cr grant 2-way 512 5

- 2 gc cr grant 2-way 1024 10

- 2 gc cr grant 2-way 2048 2

- 2 gc cr grant 2-way 4096 4

- 2 gc cr grant 2-way 8192 1

- 1 gc buffer busy 1 34252868

- 1 gc buffer busy 2 18723990

- 1 gc buffer busy 4 9528539

- 1 gc buffer busy 8 4351426

- 1 gc buffer busy 16 3691918

- 1 gc buffer busy 32 755331

- 1 gc buffer busy 64 68712

- 1 gc buffer busy 128 10869

- 1 gc buffer busy 256 2553

- 1 gc buffer busy 512 337

- 1 gc buffer busy 1024 2933

- 2 gc buffer busy 1 7881434

- 2 gc buffer busy 2 2083189

- 2 gc buffer busy 4 1372486

- 2 gc buffer busy 8 1957290

- 2 gc buffer busy 16 1417604

- 2 gc buffer busy 32 448992

- 2 gc buffer busy 64 544446

- 2 gc buffer busy 128 202888

- 2 gc buffer busy 256 58584

- 2 gc buffer busy 512 16470

- 2 gc buffer busy 1024 91266

- 2 gc buffer busy 2048 14

- 1 LGWR wait for redo copy 1 278115

- 1 LGWR wait for redo copy 2 3698

- 1 LGWR wait for redo copy 4 8498

- 1 LGWR wait for redo copy 8 220

- 1 LGWR wait for redo copy 16 6

- 1 LGWR wait for redo copy 32 1

- 2 LGWR wait for redo copy 1 7935371

- 2 LGWR wait for redo copy 2 29915

- 2 LGWR wait for redo copy 4 58179

- 2 LGWR wait for redo copy 8 2472

- 2 LGWR wait for redo copy 16 204

- 2 LGWR wait for redo copy 32 47

此信息主要来自于V$EVENT_HISTOGRAM,对于判断到底是什么引起的问题,有助于参考。

***Oracle有个bug:log file sync等待1s,cursor:pin s wait on x也有个bug,莫名等待10ms.

如何tuning sql log file:

对于log buffer size的大小,前面已经提到过,默认的就已经足够大了,但是从经验来看(主要是前辈们,我也尝试过,效果不太明显),所以在allocate相关latch等待,比较多的时候可以考虑增大log buffer size.9.2以后oracle使用多个log buffer,也在很大程度上缓解了相关latch等待(每个latch保护自己的buffers).10g出现新功能:private redo strand,每一个allocate latch保护自己的redo strand,也出现了IMU的概念,所以log buffer相关latch的争用已经很少出现.

下面看看相关的参数调整:

10g R1:commit_logging

10g R2:commit_write

| Option | Effect |

| Wait(default) | Ensures that the commit returns only after the corresponding redo information is persistent in the online redo log. When the client receives a successful return from this COMMIT statement, the transaction has been committed to durable media. A failure that occurs after a successful write to the log might prevent the success message from returning to the client, in which case the client cannot tell whether or not the transaction committed. |

| Nowait | The commit returns to the client whether or not the write to the redo log has completed. This behavior can increase transaction throughput. |

| Batch | The redo information is buffered to the redo log, along with other concurrently executing transactions. When sufficient redo information is collected, a disk write to the redo log is initiated. This behavior is called group commit, as redo information for multiple transactions is written to the log in a single I/O operation. |

| Immediate(default) | LGWR writes the transaction's redo information to the log. Because this operation option forces a disk I/O, it can reduce transaction throughput. |

以上来自于Online Document,如果你不关心ACID的D(持久性),也就是不关心instance crash后丢失数据的风险,完全可以采用nowait,但我目前没有见过系统使用该参数,都为默认值.

可能在很多情况下,我们从单纯的DB的层面去tuning,并不能得到很好的效果,从应用层面能够得到很好的效果,但是推动应用修改代码又是一件难而又难的事情。

1.PLSQL,这包括Procedure导致的log file sync,看下面例子:

- SW_SID STATE SW_EVENT SEQ# SEC_IN_WAIT SQL_ID SQL_TEXT

- ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

- 2962 WAITING log file sync 60440 0 773b3nqmcvwf4 BEGIN P_MS_UPDATE_SENDTASK (:1,:2,:3,:4,:5,:6,:7,:8,:9,:10,:11,:12,:13,:14,:15,:16,:17); END;

- 2962 WAITING log file sync 60440 0 773b3nqmcvwf4 BEGIN P_MS_UPDATE_SENDTASK(:1,:2,:3,:4,:5,:6,:7,:8,:9,:10,:11,:12,:13,:14,:15,:16,:17); END;

对于这种类型的存过,里面有各种update,insert,delete,每次的处理量比较大,所以我们只能去修改,分散相应的业务逻辑.是每次提交尽可能以合理的批量来做

CPU方面:

也有种可能是在CPU的配置上来优化,LGWR消耗大量的CPU,做法是如果LGWR等待的延迟相当严重,那么可以把LGWR调整到高优先级

IO方面:

如果你的存储IO存在瓶颈,那么log file parallel write事件会比较明显,所以这个调整还是从存储级别,比如采用raw device,ASM,更加快速的存储设备等等

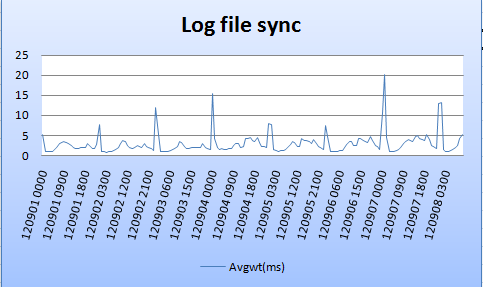

下面是如上Begin....End执行的系统的log file sync曲线,当此过程大量执行的时候,平均等待的时间有所增加,如下图:

未完待续……

925

925

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?