1. Creating a Perceptron

    def activate(self, inputs):
        """
        Takes in @param inputs, a list of numbers equal to length of weights.
        @return the output of a threshold perceptron with given inputs based on
        perceptron weights and threshold.
        """
        # The strength with which the perceptron fires.
        strength = np.dot(self.weights, inputs)
        # Return 0 or 1 based on the threshold.
        if strength <= self.threshold:
            self.result = 0
        else:
            self.result = 1
        return self.result

3. Where to Train the Perceptron

- We want to build a perceptron. While building the model, which of the following values do we need to modify?

Threshold

Weights
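To make the answer concrete, here is a minimal sketch of a threshold perceptron whose only adjustable state is its weights and its threshold. The class layout and the numbers are assumptions chosen for illustration; only the `activate` rule mirrors the method in section 1.

```python
import numpy as np

class Perceptron:
    """Threshold unit; `weights` and `threshold` are the values training adjusts."""

    def __init__(self, weights, threshold):
        self.weights = np.asarray(weights, dtype=float)
        self.threshold = float(threshold)

    def activate(self, inputs):
        # Fire (return 1) only when the weighted sum exceeds the threshold.
        strength = np.dot(self.weights, inputs)
        return 0 if strength <= self.threshold else 1

x = np.array([1.0, 1.0])
p = Perceptron(weights=[1.0, 1.0], threshold=1.5)
before = p.activate(x)            # strength 2.0 exceeds 1.5

p.threshold = 2.5                 # raising the threshold silences the unit
after_threshold = p.activate(x)   # strength 2.0 no longer exceeds 2.5

p.weights = np.array([2.0, 1.0])  # changing a weight makes it fire again
after_weights = p.activate(x)     # strength 3.0 exceeds 2.5
```

The same input produces different outputs as soon as either value changes, which is why both are candidates for training.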

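4. Perceptron Inputs

- An artificial neural network is built from perceptron units. What format should its input take?

A numeric matrix in which every row carries a label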
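As a small illustration of that format, the sketch below stores the features in a 2D NumPy array and the labels in a parallel 1D array. The toy data (the OR truth table) and the names `X` and `y` are assumptions, not part of the original quiz.

```python
import numpy as np

# Hypothetical toy data: each row is one example, each column one numeric feature.
X = np.array([[0.0, 0.0],
              [0.0, 1.0],
              [1.0, 0.0],
              [1.0, 1.0]])

# One label per row (here, the logical OR of the two features).
y = np.array([0, 1, 1, 1])

# Rows and labels must line up one to one.
assert X.shape[0] == y.shape[0]

for row, label in zip(X, y):
    print(row, "->", label)
```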

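5. Neural Network Output

- What information can we get from the output of a neural network?

A directed graph (the neural network itself)

A scalar

Classification information represented as a vector

An output vector for every input vector

6. Perceptron Update Rule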

def update(self, values, train, eta=.1):

"""

Takes in a 2D array @param values consisting of a LIST of inputs and a

1D array @param train, consisting of a corresponding list of expected

outputs. Updates internal weights according to the perceptron training

rule using these values and an optional learning rate, @param eta.

"""

# For each data point:

for data_point in xrange(len(values)):

# TODO: Obtain the neuron's prediction for the data_point --> values[data_point]

prediction = self.activate(values[data_point])

# Get the prediction accuracy calculated as (expected value - predicted value)

# expected value = train[data_point], predicted value = prediction

error = train[data_point] - prediction

# TODO: update self.weights based on the multiplication of:

# - prediction accuracy(error)

# - learning rate(eta)

# - input value(values[data_point])

weight_update = eta*error*values[data_point]# TODO

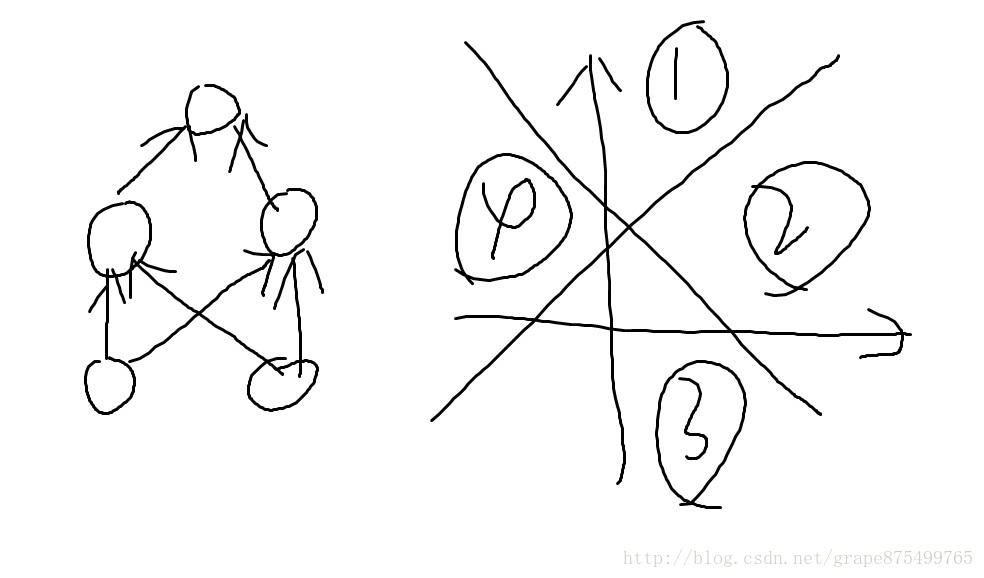

self.weights += weight_update7.多层网络示例

8. Linear Representational Power
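One way to get a feel for what a single threshold unit can and cannot represent is a brute-force search over a small grid of weights and thresholds. The sketch below is only an illustration under assumed names (`threshold_unit`, `representable`) and an assumed grid; a grid search is not a proof, but it agrees with the well-known result that AND is linearly separable and XOR is not.

```python
import itertools
import numpy as np

def threshold_unit(weights, threshold, inputs):
    # Same firing rule as the perceptron in section 1.
    return 0 if np.dot(weights, inputs) <= threshold else 1

def representable(truth_table, grid):
    """Return True if some (w1, w2, threshold) on the grid reproduces the table."""
    for w1, w2, t in itertools.product(grid, repeat=3):
        if all(threshold_unit([w1, w2], t, x) == y for x, y in truth_table.items()):
            return True
    return False

grid = np.arange(-2.0, 2.5, 0.5)   # candidate values from -2.0 to 2.0

AND = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

and_ok = representable(AND, grid)  # found, e.g. weights (1, 1) with threshold 1
xor_ok = representable(XOR, grid)  # nothing on the grid works
```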

9. Building an XOR Network

    # Part 1: Set up the perceptron network.
    # input1, input2 and output are assumed to be Perceptron instances
    # defined earlier in the exercise.
    Network = [
        # input layer, declare input layer perceptrons here
        [input1, input2],
        # output node, declare output layer perceptron here
        [output]
    ]

    # Part 2: Define a procedure to compute the output of the network, given inputs
    def EvalNetwork(inputValues, Network):
        """
        Takes in @param inputValues, a list of input values, and @param Network
        that specifies a perceptron network. @return the output of the Network for
        the given set of inputs.
        """
        # Feed the inputs to every perceptron in the first layer.
        layer_outputs = []
        for net in Network[0]:
            layer_outputs.append(net.activate(inputValues))
        # Feed the first layer's outputs to the single output perceptron.
        OutputValue = Network[1][0].activate(layer_outputs)
        # Be sure your output value is a single number
        return OutputValue
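The snippet above does not show how the individual perceptrons are configured. The sketch below is one possible end-to-end version: the weights and thresholds (an OR unit, an AND unit, and an output unit that fires when OR is on but AND is off) are assumptions chosen for illustration, not the values from the original exercise, and the lowercase names are hypothetical.

```python
import numpy as np

class Perceptron:
    def __init__(self, weights, threshold):
        self.weights = np.asarray(weights, dtype=float)
        self.threshold = float(threshold)

    def activate(self, inputs):
        # Fire only when the weighted sum exceeds the threshold.
        return 0 if np.dot(self.weights, inputs) <= self.threshold else 1

# Assumed configuration (one of many that work):
hidden_or = Perceptron([1, 1], 0)      # fires if at least one input is 1
hidden_and = Perceptron([1, 1], 1)     # fires only if both inputs are 1
output_unit = Perceptron([1, -1], 0)   # fires if OR is on and AND is off

network = [[hidden_or, hidden_and], [output_unit]]

def eval_network(input_values, network):
    # First layer sees the raw inputs; the output unit sees the first layer's outputs.
    hidden = [unit.activate(input_values) for unit in network[0]]
    return network[1][0].activate(hidden)

results = {pair: eval_network(pair, network)
           for pair in [(0, 0), (0, 1), (1, 0), (1, 1)]}
```

With these assumed values, `results` maps each input pair to its XOR.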

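10. Discretization Quiz

- One problem with an artificial neural network built from perceptrons is that it can only output discrete values. This makes it ineffective for regression problems and means it needs more units to handle complex problems.

For example: given a neural network with structure [2,2,1] (two units in the input layer, two in the hidden layer, one in the output layer), at most how many different house prices can it predict?

2*2=4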
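The count can be checked by enumerating the hidden layer's possible outputs. The sketch below assumes the output unit reads out a weighted sum of the two binary hidden outputs; the read-out weights and base price are hypothetical numbers used only to show that four hidden states give at most four distinct values.

```python
import itertools

# Each hidden perceptron outputs 0 or 1, so two of them have 2 * 2 joint states.
hidden_states = list(itertools.product([0, 1], repeat=2))

# Hypothetical read-out: a base price plus one contribution per active hidden unit.
base_price = 200_000
readout_weights = (100_000, 50_000)

prices = sorted({
    base_price + readout_weights[0] * h0 + readout_weights[1] * h1
    for h0, h1 in hidden_states
})
print(len(hidden_states), prices)
```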

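13. Activation Function Quiz

- We have decided to use a function that is continuous (to avoid the discreteness problem) and nonlinear (so that we can represent nonlinear relationships). Which of the following functions meets our needs?

Logistic function

In essence, this is the contrast between the step function and the sigmoid function; the logistic function is a sigmoid.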
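To see the difference numerically, the sketch below evaluates a step function and the logistic function on the same inputs. The helper names and sample points are assumptions for illustration.

```python
import numpy as np

def step(x, threshold=0.0):
    # Discontinuous: jumps from 0 to 1 as x crosses the threshold.
    return np.where(x > threshold, 1.0, 0.0)

def logistic(x):
    # Continuous and nonlinear: rises smoothly from 0 towards 1.
    return 1.0 / (1.0 + np.exp(-x))

xs = np.array([-2.0, -0.1, 0.0, 0.1, 2.0])
step_out = step(xs)
logistic_out = logistic(xs)

for x, s, l in zip(xs, step_out, logistic_out):
    print(f"x={x:+.1f}  step={s:.0f}  logistic={l:.3f}")
```

Near zero the step output flips abruptly, while the logistic output changes only slightly, which is what makes it usable with gradient-based training.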

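14. Perceptron vs. Sigmoid

- On a binary classification problem, what is the difference between a single perceptron and a sigmoid unit?

The sigmoid unit gives more information, but both produce the same result.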
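A short sketch of that answer, assuming both units share the same weights, the perceptron uses a threshold of 0, and the sigmoid output is cut at 0.5. The weights and sample points are made up for illustration.

```python
import numpy as np

weights = np.array([2.0, -1.0])
points = np.array([[3.0, 1.0],
                   [0.2, 0.3],
                   [-1.0, 1.0]])

strengths = points @ weights                      # weighted sum for each point

perceptron_out = (strengths > 0).astype(int)      # hard 0/1 decision
sigmoid_out = 1.0 / (1.0 + np.exp(-strengths))    # graded value in (0, 1)
sigmoid_decision = (sigmoid_out > 0.5).astype(int)

print(perceptron_out, np.round(sigmoid_out, 3), sigmoid_decision)
```

The two decisions agree on every point, but the sigmoid output also shows that the first point lies far from the decision boundary while the second is barely on the positive side.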

15. Sigmoid Learning

- We need to train sigmoid units just as we train perceptrons. How should we define the update rule?

Use calculus.
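As a sketch of what "use calculus" leads to, assume a squared-error loss on a single example (the loss is an assumption here; the quiz does not name one). With input $x$, target $y$, weights $w$, learning rate $\eta$ and output $\hat{y} = \sigma(w \cdot x)$, the chain rule gives:

```latex
E = \tfrac{1}{2}\,(y - \hat{y})^2, \qquad
\hat{y} = \sigma(w \cdot x), \qquad
\sigma(z) = \frac{1}{1 + e^{-z}}

\frac{\partial E}{\partial w_i}
  = -(y - \hat{y})\,\sigma'(w \cdot x)\,x_i,
\qquad
\sigma'(z) = \sigma(z)\,\bigl(1 - \sigma(z)\bigr)

w_i \leftarrow w_i - \eta\,\frac{\partial E}{\partial w_i}
  = w_i + \eta\,(y - \hat{y})\,\hat{y}\,(1 - \hat{y})\,x_i
```

The last line is the product of learning rate, error, slope of the logistic function, and input value.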

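16. Gradient Descent Issues

- Using calculus, gradient descent gives us a way to find extrema, but it can also run into problems. Which of the following problems do you think can occur?

Local extrema

Taking too long to run

Looping indefinitely

Failing to converge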
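Two of these issues are easy to reproduce in one dimension. The sketch below uses an assumed test function, f(x) = x^4 - 3x^2 + x, which has two minima, and a plain quadratic with a learning rate that is deliberately too large. The function choices, step sizes, and helper names are illustrative assumptions.

```python
def gradient_descent(grad, x0, eta, steps):
    """Plain gradient descent on a 1D function given its derivative."""
    x = x0
    for _ in range(steps):
        x = x - eta * grad(x)
    return x

def bumpy(x):
    return x**4 - 3 * x**2 + x

def bumpy_grad(x):
    return 4 * x**3 - 6 * x + 1

# Local extrema: the result depends on where the search starts.
from_right = gradient_descent(bumpy_grad, x0=2.0, eta=0.01, steps=1000)
from_left = gradient_descent(bumpy_grad, x0=-2.0, eta=0.01, steps=1000)

# Failure to converge: on f(x) = x^2 each step maps x to (1 - 2 * eta) * x,
# so with eta = 1.1 the magnitude grows by a factor of 1.2 per step.
def bowl_grad(x):
    return 2 * x

diverged = gradient_descent(bowl_grad, x0=1.0, eta=1.1, steps=50)

print(from_right, bumpy(from_right))
print(from_left, bumpy(from_left))
print(diverged)
```

The run started on the right settles in the shallower minimum, so it never finds the lower value reached from the left.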

17.

    # ----------
    #
    # As with the previous perceptron exercises, you will complete some of the core
    # methods of a sigmoid unit class.
    #
    # There are two functions for you to finish:
    # First, in activate(), write the sigmoid activation function.
    # Second, in update(), write the gradient descent update rule. Updates should be
    # performed online, revising the weights after each data point.
    #
    # ----------

    import numpy as np

    class Sigmoid:
        """
        This class models an artificial neuron with sigmoid activation function.
        """

        def __init__(self, weights = np.array([1])):
            """
            Initialize weights based on input arguments. Note that no type-checking
            is being performed here for simplicity of code.
            """
            # Store a float copy so that lists and integer arrays can be
            # updated in place by update().
            self.weights = np.asarray(weights, dtype=float)

            # NOTE: You do not need to worry about these two attributes for this
            # programming quiz, but these will be useful if you want to create
            # a network out of these sigmoid units!
            self.last_input = 0 # strength of last input
            self.delta = 0 # error signal

        def activate(self, values):
            """
            Takes in @param values, a list of numbers equal to length of weights.
            @return the output of a sigmoid unit with given inputs based on unit
            weights.
            """
            # First calculate the strength of the input signal.
            strength = np.dot(values, self.weights)
            self.last_input = strength

            # Modify strength using the sigmoid activation function and
            # return as output signal.
            result = self.logistic(strength)
            return result

        def logistic(self, x):
            """Helper: the logistic function, shared by activate() and update()."""
            return 1.0 / (1 + np.exp(-x))

        def update(self, values, train, eta=.1):
            """
            Takes in a 2D array @param values consisting of a LIST of inputs and a
            1D array @param train, consisting of a corresponding list of expected
            outputs. Updates internal weights according to gradient descent using
            these values and an optional learning rate, @param eta.
            """
            # For each data point...
            for X, y_true in zip(values, train):
                # obtain the output signal for that point
                y_pred = self.activate(X)
                error = y_true - y_pred

                # Compute derivative of logistic function at input strength
                # Recall: d/dx logistic(x) = logistic(x)*(1-logistic(x))
                de_logistic = self.logistic(self.last_input) * (1 - self.logistic(self.last_input))

                # Update self.weights based on learning rate, signal accuracy,
                # function slope (derivative) and input value
                weight_update = X * de_logistic * eta * error
                self.weights += weight_update

    def test():
        """
        A few tests to make sure that the Sigmoid class performs as expected.
        Nothing should show up in the output if all the assertions pass.
        """
        def sum_almost_equal(array1, array2, tol = 1e-5):
            return sum(abs(array1 - array2)) < tol

        u1 = Sigmoid(weights=[3,-2,1])
        assert abs(u1.activate(np.array([1,2,3])) - 0.880797) < 1e-5
        u1.update(np.array([[1,2,3]]),np.array([0]))
        assert sum_almost_equal(u1.weights, np.array([2.990752, -2.018496, 0.972257]))

        u2 = Sigmoid(weights=[0,3,-1])
        u2.update(np.array([[-3,-1,2],[2,1,2]]),np.array([1,0]))
        assert sum_almost_equal(u2.weights, np.array([-0.030739, 2.984961, -1.027437]))

    if __name__ == "__main__":
        test()
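To show the same online update rule actually learning something, here is a compact function-style sketch that trains one sigmoid unit on the OR truth table. The training data, the constant bias column, the learning rate of 0.5, and the 2000 epochs are all assumptions chosen for illustration; they are not part of the original exercise.

```python
import numpy as np

def logistic(x):
    return 1.0 / (1.0 + np.exp(-x))

# OR truth table; the third column is a constant 1 acting as a bias input.
X = np.array([[0, 0, 1],
              [0, 1, 1],
              [1, 0, 1],
              [1, 1, 1]], dtype=float)
y = np.array([0, 1, 1, 1], dtype=float)

weights = np.zeros(3)
eta = 0.5

for epoch in range(2000):
    # Online updates: revise the weights after every data point.
    for x_i, y_i in zip(X, y):
        y_pred = logistic(np.dot(weights, x_i))
        slope = y_pred * (1.0 - y_pred)           # derivative of the logistic
        weights += eta * (y_i - y_pred) * slope * x_i

predictions = logistic(X @ weights)
print(np.round(predictions, 3))
```

Cutting the trained outputs at 0.5 recovers the OR labels, and the raw values show how confident the unit is about each row.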
