系列文章目录

Kubernetes实战 第一章 Kubernetes基础概念: link

文章目录

1 是什么

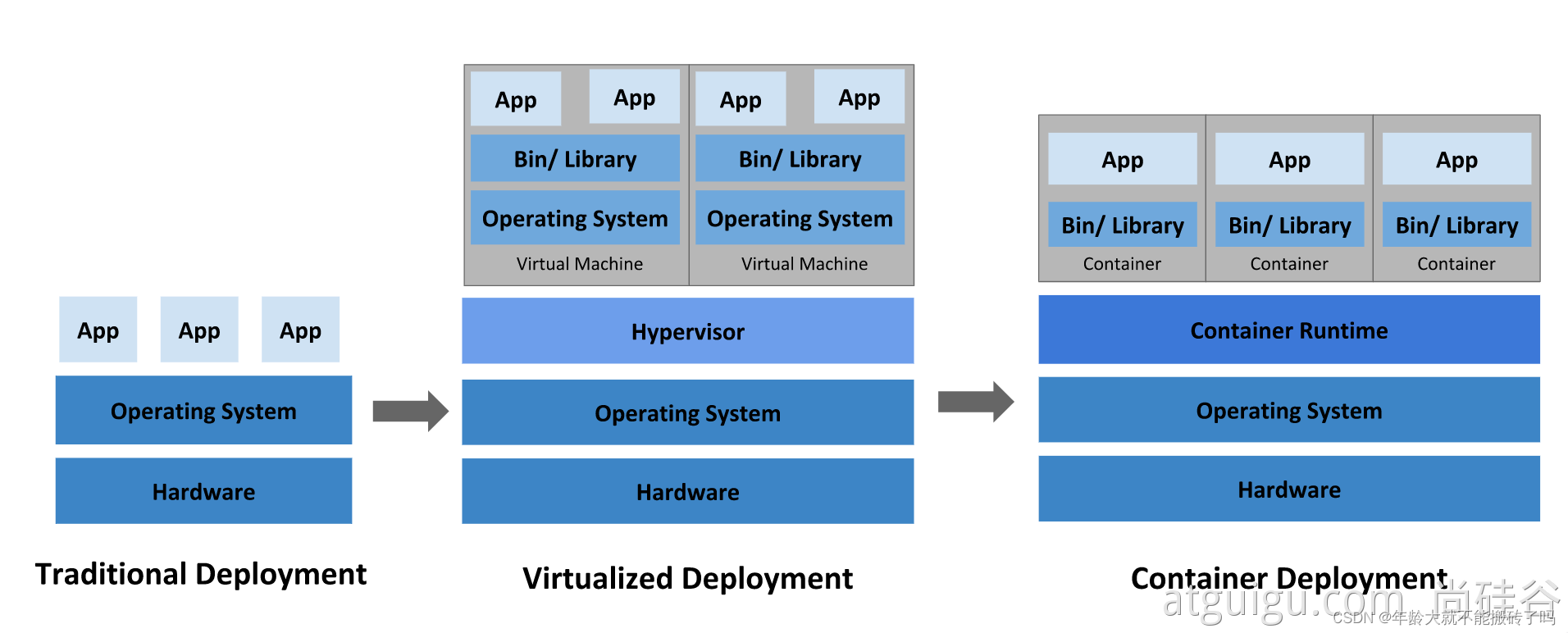

急需一个大规模容器编排系统

kubernetes具有以下特性:

● 服务发现和负载均衡

Kubernetes 可以使用 DNS 名称或自己的 IP 地址公开容器,如果进入容器的流量很大, Kubernetes 可以负载均衡并分配网络流量,从而使部署稳定。

● 存储编排

Kubernetes 允许你自动挂载你选择的存储系统,例如本地存储、公共云提供商等。

● 自动部署和回滚

你可以使用 Kubernetes 描述已部署容器的所需状态,它可以以受控的速率将实际状态 更改为期望状态。例如,你可以自动化 Kubernetes 来为你的部署创建新容器, 删除现有容器并将它们的所有资源用于新容器。

● 自动完成装箱计算

Kubernetes 允许你指定每个容器所需 CPU 和内存(RAM)。 当容器指定了资源请求时,Kubernetes 可以做出更好的决策来管理容器的资源。

● 自我修复

Kubernetes 重新启动失败的容器、替换容器、杀死不响应用户定义的 运行状况检查的容器,并且在准备好服务之前不将其通告给客户端。

● 密钥与配置管理

Kubernetes 允许你存储和管理敏感信息,例如密码、OAuth 令牌和 ssh 密钥。 你可以在不重建容器镜像的情况下部署和更新密钥和应用程序配置,也无需在堆栈配置中暴露密钥。

Kubernetes 为你提供了一个可弹性运行分布式系统的框架。 Kubernetes 会满足你的扩展要求、故障转移、部署模式等。 例如,Kubernetes 可以轻松管理系统的 Canary 部署。

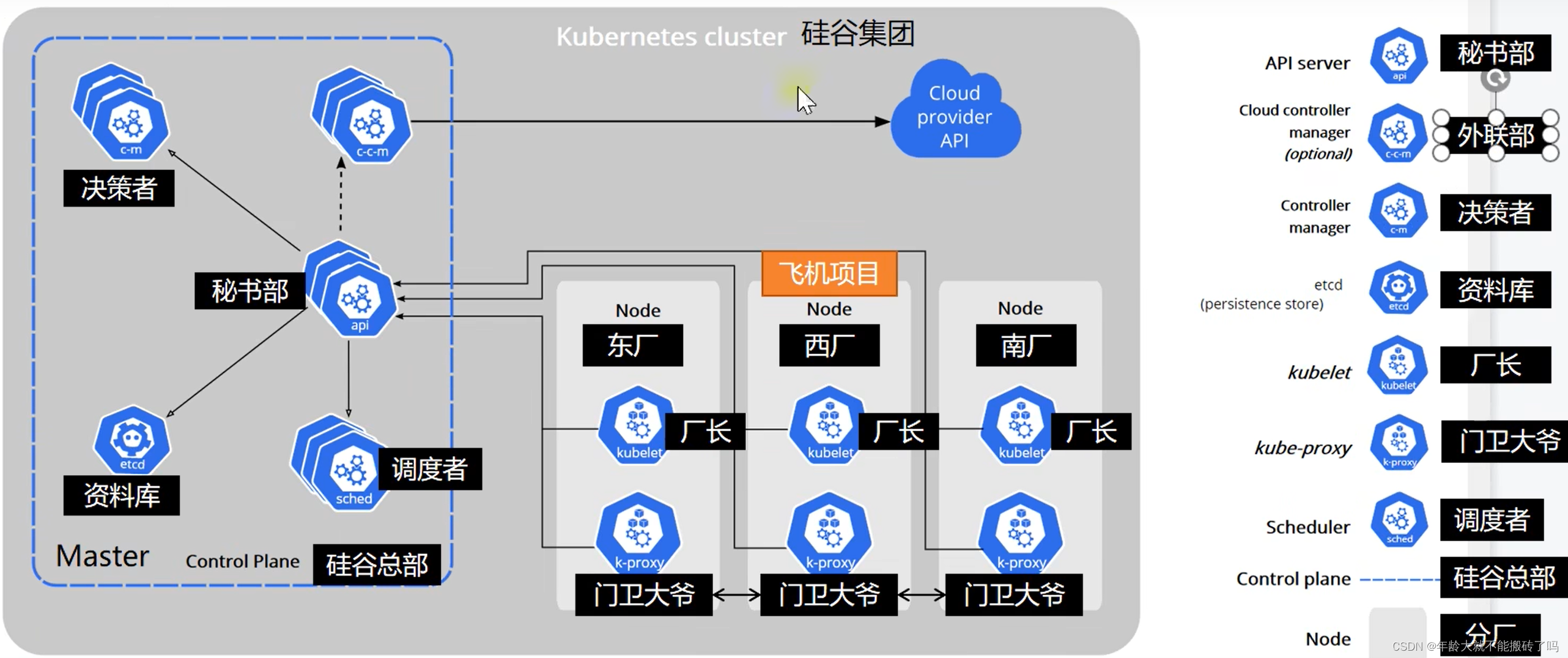

2 架构

2.1 工作方式

Kubernetes Cluster = N Master Node + N Worker Node:N主节点+N工作节点; N>=1

2.2 组件架构

1、控制平面组件(Control Plane Components)

控制平面的组件对集群做出全局决策(比如调度),以及检测和响应集群事件(例如,当不满足部署的 replicas 字段时,启动新的 pod)。

控制平面组件可以在集群中的任何节点上运行。 然而,为了简单起见,设置脚本通常会在同一个计算机上启动所有控制平面组件, 并且不会在此计算机上运行用户容器。 请参阅使用 kubeadm 构建高可用性集群 中关于多 VM 控制平面设置的示例。

kube-apiserver

API 服务器是 Kubernetes 控制面的组件, 该组件公开了 Kubernetes API。 API 服务器是 Kubernetes 控制面的前端。

Kubernetes API 服务器的主要实现是 kube-apiserver。 kube-apiserver 设计上考虑了水平伸缩,也就是说,它可通过部署多个实例进行伸缩。 你可以运行 kube-apiserver 的多个实例,并在这些实例之间平衡流量。

etcd

etcd 是兼具一致性和高可用性的键值数据库,可以作为保存 Kubernetes 所有集群数据的后台数据库。

您的 Kubernetes 集群的 etcd 数据库通常需要有个备份计划。

要了解 etcd 更深层次的信息,请参考 etcd 文档。

kube-scheduler

控制平面组件,负责监视新创建的、未指定运行节点(node)的 Pods,选择节点让 Pod 在上面运行。

调度决策考虑的因素包括单个 Pod 和 Pod 集合的资源需求、硬件/软件/策略约束、亲和性和反亲和性规范、数据位置、工作负载间的干扰和最后时限。

kube-controller-manager

在主节点上运行 控制器 的组件。

从逻辑上讲,每个控制器都是一个单独的进程, 但是为了降低复杂性,它们都被编译到同一个可执行文件,并在一个进程中运行。

这些控制器包括:

● 节点控制器(Node Controller): 负责在节点出现故障时进行通知和响应

● 任务控制器(Job controller): 监测代表一次性任务的 Job 对象,然后创建 Pods 来运行这些任务直至完成

● 端点控制器(Endpoints Controller): 填充端点(Endpoints)对象(即加入 Service 与 Pod)

● 服务帐户和令牌控制器(Service Account & Token Controllers): 为新的命名空间创建默认帐户和 API 访问令牌

cloud-controller-manager

云控制器管理器是指嵌入特定云的控制逻辑的 控制平面组件。 云控制器管理器允许您链接集群到云提供商的应用编程接口中, 并把和该云平台交互的组件与只和您的集群交互的组件分离开。

cloud-controller-manager 仅运行特定于云平台的控制回路。 如果你在自己的环境中运行 Kubernetes,或者在本地计算机中运行学习环境, 所部署的环境中不需要云控制器管理器。

与 kube-controller-manager 类似,cloud-controller-manager 将若干逻辑上独立的 控制回路组合到同一个可执行文件中,供你以同一进程的方式运行。 你可以对其执行水平扩容(运行不止一个副本)以提升性能或者增强容错能力。

下面的控制器都包含对云平台驱动的依赖:

● 节点控制器(Node Controller): 用于在节点终止响应后检查云提供商以确定节点是否已被删除

● 路由控制器(Route Controller): 用于在底层云基础架构中设置路由

● 服务控制器(Service Controller): 用于创建、更新和删除云提供商负载均衡器

2、Node 组件

节点组件在每个节点上运行,维护运行的 Pod 并提供 Kubernetes 运行环境。

kubelet

一个在集群中每个节点(node)上运行的代理。 它保证容器(containers)都 运行在 Pod 中。

kubelet 接收一组通过各类机制提供给它的 PodSpecs,确保这些 PodSpecs 中描述的容器处于运行状态且健康。 kubelet 不会管理不是由 Kubernetes 创建的容器。

kube-proxy

kube-proxy 是集群中每个节点上运行的网络代理, 实现 Kubernetes 服务(Service) 概念的一部分。

kube-proxy 维护节点上的网络规则。这些网络规则允许从集群内部或外部的网络会话与 Pod 进行网络通信。

如果操作系统提供了数据包过滤层并可用的话,kube-proxy 会通过它来实现网络规则。否则, kube-proxy 仅转发流量本身

3 kubeadm创建集群

3.1 前置条件

3.1.1 关闭防火墙

systemctl stop firewalld

systemctl disable firewalld

3.1.2 关闭selinux

sed -i 's/enforcing/disabled/' /etc/selinux/config

setenforce 0

3.1.3 关闭swap

临时关闭

swapoff -a

永久关闭

sed -ri 's/.*swap.*/#&/' /etc/fstab

free -g 验证,swap必须为0;

###3.1.4 添加主机名与ip对应关系

vi /etc/hosts

192.168.233.128 k8s-node1

192.168.233.129 k8s-node2

192.168.233.130 k8s-node3

hostnamectl set-hostname <newhostname>: 指定新的hostname

su 切换过来

3.1.5 将桥接的IPv4流量传递到iptables的链:

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.brigde.bridge-nf-call-iptables = 1

EOF

sysctl --system

3.1.6 疑难问题

遇见提示是只读的文件系统,运行如下命令

mount -o remount rw /

date 查看时间(可选)

yum install -y ntpdate

ntpdate time.windows.com 同步最新的时间

3.2 所有节点安装Docker、kubeadm、kubelet、kubectl

Kubernetes默认CRI(容器运行时)为Docker,因此先安装Docker.

3.2.1 安装docker

3.2.1.1 卸载系统之前的docker

sudo yum remove docker \

docker-client \

docker-client-latest \

docker-common\

docker-latest \

docker-latest-logrotate \

docker-logrotate \

docker-engine

3.2.1.2.安装Docker-CE

安装必须得依赖

sudo yum install -y yum-utils \

device-mapper-persistent-data \

lvm2

3.2.1.3.设置docker repo的yum位置

sudo yum-config-manager \

--add-repo \

https://download.docker.com/linux/centos/docker-ce.repo

3.2.1.4.安装docker,以及docker-cli

sudo yum install -y docker-ce docker-ce-di containerd.io

3.2.1.5.配置docker加速

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors":["https://82m9ar63.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

3.2.1.6.配置docker开机启动

systemctl enable docker

3.2.1.7.添加阿里云镜像

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

exclude=kubelet kubeadm kubectl

EOF

3.2.1.8.安装kubeadm,kubelet和kubectl

yum list|grep kube

sudo yum install -y kubelet-1.20.9 kubeadm-1.20.9 kubectl-1.20.9 --disableexcludes=kubernetes

sudo systemctl enable --now kubelet

3.2.1.9.下载各个机器需要的镜像

sudo tee ./images.sh <<-'EOF'

#!/bin/bash

images=(

kube-apiserver:v1.20.9

kube-proxy:v1.20.9

kube-controller-manager:v1.20.9

kube-scheduler:v1.20.9

coredns:1.7.0

etcd:3.4.13-0

pause:3.2

)

for imageName in ${images[@]} ; do

docker pull registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/$imageName

done

EOF

chmod +x ./images.sh && ./images.sh

3.2.1.10.初始化主节点

#所有机器添加master域名映射,以下需要修改为自己的

echo "192.168.233.128 cluster-endpoint" >> /etc/hosts

#主节点初始化

kubeadm init \

--apiserver-advertise-address=192.168.233.128 \

--control-plane-endpoint=cluster-endpoint \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.20.9 \

--service-cidr=10.96.0.0/16 \

--pod-network-cidr=192.168.0.0/16

#registry.aliyuncs.com/google_containers 也可以

#--image-repository registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images \

#--image-repository registry.aliyuncs.com/google_containers \

#所有网络范围不重叠

3.2.1.11. 成功会打印

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join cluster-endpoint:6443 --token pvz51j.ev8mazezdjrqblqr \

--discovery-token-ca-cert-hash sha256:8a4d880cbdd2eb010c57afd206cfa36f7f0040490e11734bbe1aee800d0f1625 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join cluster-endpoint:6443 --token pvz51j.ev8mazezdjrqblqr \

--discovery-token-ca-cert-hash sha256:8a4d880cbdd2eb010c57afd206cfa36f7f0040490e11734bbe1aee800d0f1625

3.2.1.12 按要求执行

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

3.2.1.13 查看集群状态

kubectl get nodes

14 安装网络组件

##在master节点运行

curl https://docs.projectcalico.org/v3.20/manifests/calico.yaml -O

kubectl apply -f calico.yaml

3.2.1.14 遇到的问题排查

# 发现 kube-system calico-node-tdbcx 0/1 Init:ImagePullBackOff

[root@k8s-node1 k8s]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-577f77cb5c-cbqhr 0/1 Pending 0 2d10h

kube-system calico-node-tdbcx 0/1 Init:ImagePullBackOff 0 2d10h

kube-system coredns-5897cd56c4-7scvt 0/1 Pending 0 2d11h

kube-system coredns-5897cd56c4-kkbb8 0/1 Pending 0 2d11h

kube-system etcd-k8s-node1 1/1 Running 1 2d11h

kube-system kube-apiserver-k8s-node1 1/1 Running 3 2d11h

kube-system kube-controller-manager-k8s-node1 1/1 Running 3 2d11h

kube-system kube-proxy-9lrwb 1/1 Running 1 2d11h

kube-system kube-scheduler-k8s-node1

# 使用 kubectl describe pod calico-node-tdbcx -n kube-system 排查

# 发现Normal docker.io/calico/cni:v3.20.6

kubectl describe pod calico-node-tdbcx -n kube-system

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning Failed 39h (x1965 over 2d10h) kubelet Error: ImagePullBackOff

Normal BackOff 39h (x1994 over 2d10h) kubelet Back-off pulling image "docker.io/calico/cni:v3.20.6"

Warning Failed 20m (x3 over 21m) kubelet Failed to pull image "docker.io/calico/cni:v3.20.6": rpc error: code = Unknown desc = Error response from daemon: Get "https://registry-1.docker.io/vn 192.168.233.2:53: server misbehaving

Normal Pulling 19m (x4 over 21m) kubelet Pulling image "docker.io/calico/cni:v3.20.6"

Warning Failed 18m kubelet Failed to pull image "docker.io/calico/cni:v3.20.6": rpc error: code = Unknown desc = error pulling image configuration: download failed after attemp.docker.com on 192.168.233.2:53: server misbehaving

Normal BackOff 10m (x27 over 21m) kubelet Back-off pulling image "docker.io/calico/cni:v3.20.6"

Warning Failed 4m42s (x7 over 21m) kubelet Error: ErrImagePull

Warning Failed 59s (x64 over 21m) kubelet Error: ImagePullBackOff

# docker pull calico/cni:v3.20.6 拉不下来

[root@k8s-node1 k8s]# docker pull calico/cni:v3.20.6

v3.20.6: Pulling from calico/cni

3711d8cd4959: Retrying in 1 second

695d19431d49: Retrying in 1 second

719a98b816cb: Retrying in 1 second

error pulling image configuration: download failed after attempts=6: dial tcp: lookup production.cloudflare.docker.com on 192.168.233.2:53: server misbehaving

# 使用科学上网再次拉取 docker pull calico/cni:v3.20.6

[root@k8s-node1 k8s]# docker pull calico/cni:v3.20.6

v3.20.6: Pulling from calico/cni

3711d8cd4959: Pull complete

695d19431d49: Pull complete

719a98b816cb: Pull complete

Digest: sha256:cea82d82423e76818f5c83814b578e697a4e1fb18cac5c13ca35c1182206e7cb

Status: Downloaded newer image for calico/cni:v3.20.6

docker.io/calico/cni:v3.20.6

# 成功解决

[root@k8s-node1 k8s]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-577f77cb5c-cbqhr 1/1 Running 0 2d10h

kube-system calico-node-tdbcx 1/1 Running 0 2d10h

kube-system coredns-5897cd56c4-7scvt 1/1 Running 0 2d11h

kube-system coredns-5897cd56c4-kkbb8 1/1 Running 0 2d11h

kube-system etcd-k8s-node1 1/1 Running 2 2d11h

kube-system kube-apiserver-k8s-node1 1/1 Running 4 2d11h

kube-system kube-controller-manager-k8s-node1 1/1 Running 4 2d11h

kube-system kube-proxy-9lrwb 1/1 Running 2 2d11h

kube-system kube-scheduler-k8s-node1 1/1 Running 4 2d11h

3.2.1.15 小结

#查看集群所有节点

kubectl get nodes

#根据配置文件,给集群创建资源

kubectl apply -f xxxx.yaml

#查看集群部署了哪些应用?

docker ps === kubectl get pods -A

# 运行中的应用在docker里面叫容器,在k8s里面叫Pod

kubectl get pods -A

#查看集群中的某个依赖

kubectl describe pod calico-node-tdbcx -n kube-system

# 监控

kubectl get pod -w

#监控2 ?秒刷新

watch -n 1 kubectl get pod

kubeadm reset

新令牌

kubeadm token create --print-join-command

设置开机启动

systemctl enable kubelet

systemctl start kubelet

3.2.2 部署dashboard

3.2.2.1 部署

kubernetes官方提供的可视化界面

https://github.com/kubernetes/dashboard

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

3.2.2.2 设置访问端口

kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

type: ClusterIP 改为 type: NodePort

kubectl get svc -A |grep kubernetes-dashboard

## 找到端口,在安全组放行

访问: https://集群任意IP:端口 https://139.198.165.238:32759

创建访问账号

#创建访问账号,准备一个yaml文件; vi dash.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: admin-user

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: admin-user

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: admin-user

namespace: kubernetes-dashboard

kubectl apply -f dash.yaml

令牌访问

#获取访问令牌

kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"

[root@k8s-node1 k8s]# kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"

eyJhbGciOiJSUzI1NiIsImtpZCI6IlVINV92VHNyaXJSTE9tdXJNUnQ4dDFsRzAySnhjejdfVG9Qb0NFSWxJTUEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXFjYnNxIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI5NmY4YzZmMi04NWFkLTRkZjEtYTZkZi1hYzNmZDFlNjMwMTEiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.pzFH1DXNKDoN2k0-89hrLbpCJ3gbV0Baw_TSHMhJOVXSU1FwH9ylVOIdYFYDo3X4ywF7r7OcSKFu5Uy3pJllbXQEjeAnlYlf3uW3zbeFEu-GPrLzjWY-yBgiQBdBJg0j9NJrucTTLImX12iwIBWKV127b5yR1xU58Dd4f8X3AG5z2IXzbANGe4mHUTORHBXxnOikNaocty85zwvYoiujlHVX14Re1Tw5xKFg8iMkNp4Oal3nv2pErMxLJDldRcslXjUOHk-Dlycveb3nO6m6rlW_KhdXgjUnD7-cttyN72FghNLOOMF-q2QFseP2npk74HjB83kczIyvgvsMQC4Zng[root@k8s-node1 k8s]#

4 Kubernetes核心实战

4.1 Pod

运行中的一组容器,Pod是kubernetes中应用的最小单位.

kubectl run mynginx --image=nginx

# 查看default名称空间的Pod

kubectl get pod

# 查看节点状态

kubectl get nodes

# 描述

kubectl describe pod 你自己的Pod名字

# 删除

kubectl delete pod Pod名字

# 查看Pod的运行日志

kubectl logs Pod名字

# 每个Pod - k8s都会分配一个ip

kubectl get pod -owide

# 使用Pod的ip+pod里面运行容器的端口

curl 192.168.169.136

# 集群中的任意一个机器以及任意的应用都能通过Pod分配的ip来访问这个Pod

#进入内部pod

kubectl exec -it mynginx-7bf8c6db65-jshjt -- /bin/bash

4.2 以yaml创建pod

apiVersion: v1

kind: Pod

metadata:

labels:

run: mynginx

name: mynginx

# namespace: default

spec:

containers:

- image: nginx

name: mynginx

apiVersion: v1

kind: Pod

metadata:

labels:

run: myapp

name: myapp

spec:

containers:

- image: nginx

name: nginx

#namespace: default

- image: tomcat:8.5.68

name: tomcat

kubectl apply -f pod.yaml

4.3 Deployment

控制Pod,使Pod拥有多副本,自愈,扩缩容等能力

# 清除所有Pod,比较下面两个命令有何不同效果?

kubectl run mynginx --image=nginx

kubectl create deployment mytomcat --image=tomcat:8.5.68

# 自愈能力

#监控kubectl pod

watch -n 1 kubectl get pod

## 查看部署 删除部署的NAME才又效果 kubectl delete deploy [podname]

kubectl get deploy

4.3.1 多副本部署

kubectl create deployment my-dep --image=nginx --replicas=3

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: my-dep

name: my-dep

spec:

replicas: 3

selector:

matchLabels:

app: my-dep

template:

metadata:

labels:

app: my-dep

spec:

containers:

- image: nginx

name: nginx

4.3.2 扩缩容

#扩容 deployment/应用名 扩容数

kubectl scale deployment/my-dep --replicas=5

kubectl scale --replicas=5 deployment/my-dep

#缩容 由5变2就自动缩了

kubectl scale deployment/my-dep --replicas=2

#修改 replicas 修改应用名 通过修改也能时时扩缩容

kubectl edit deployment my-dep

4.3.3 滚动更新

又称不停机更新

# 改变镜像

kubectl set image deployment/my-dep nginx=nginx:1.16.1 --record

kubectl rollout status deployment/my-dep

#查看应用以yaml的方式

kubectl get deploy my-dep -oyaml

修改 kubectl edit deployment/my-dep

4.3.4 版本回退

#历史记录

kubectl rollout history deployment/my-dep

#查看某个历史详情

kubectl rollout history deployment/my-dep --revision=2

#回滚(回到上次)

kubectl rollout undo deployment/my-dep

#回滚(回到指定版本)

kubectl rollout undo deployment/my-dep --to-revision=2

更多:

除了Deployment,k8s还有 StatefulSet 、DaemonSet 、Job 等 类型资源。我们都称为 工作负载。

有状态应用使用 StatefulSet 部署,无状态应用使用 Deployment 部署

https://kubernetes.io/zh/docs/concepts/workloads/controllers/

其他工作负载

4.4 Service

将一组 Pods 公开为网络服务的抽象方法。

#暴露Deploy

kubectl expose deployment my-dep --port=8000 --target-port=80

#使用标签检索Pod

kubectl get pod -l app=my-dep

apiVersion: v1

kind: Service

metadata:

labels:

app: my-dep

name: my-dep

spec:

selector:

app: my-dep

ports:

- port: 8000

protocol: TCP

targetPort: 80

4.5 ClusterIP

# 等同于没有--type的

kubectl expose deployment my-dep --port=8000 --target-port=80 --type=ClusterIP

apiVersion: v1

kind: Service

metadata:

labels:

app: my-dep

name: my-dep

spec:

ports:

- port: 8000

protocol: TCP

targetPort: 80

selector:

app: my-dep

type: ClusterIP

4.6 NodePort

kubectl expose deployment my-dep --port=8000 --target-port=80 --type=NodePort

apiVersion: v1

kind: Service

metadata:

labels:

app: my-dep

name: my-dep

spec:

ports:

- port: 8000

protocol: TCP

targetPort: 80

selector:

app: my-dep

type: NodePort

NodePort范围在 30000-32767 之间

5 Ingress

5.1 安装

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.47.0/deploy/static/provider/baremetal/deploy.yaml

#修改镜像

vi deploy.yaml

#将image的值改为如下值:

registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/ingress-nginx-controller:v0.46.0

# 检查安装的结果

kubectl get svc -A

kubectl get pod,svc -n ingress-nginx

# 最后别忘记把svc暴露的端口要放行

如果下载不到,用以下文件

apiVersion: v1

kind: Namespace

metadata:

name: ingress-nginx

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

---

# Source: ingress-nginx/templates/controller-serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx

namespace: ingress-nginx

automountServiceAccountToken: true

---

# Source: ingress-nginx/templates/controller-configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller

namespace: ingress-nginx

data:

---

# Source: ingress-nginx/templates/clusterrole.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

name: ingress-nginx

rules:

- apiGroups:

- ''

resources:

- configmaps

- endpoints

- nodes

- pods

- secrets

verbs:

- list

- watch

- apiGroups:

- ''

resources:

- nodes

verbs:

- get

- apiGroups:

- ''

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- extensions

- networking.k8s.io # k8s 1.14+

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- events

verbs:

- create

- patch

- apiGroups:

- extensions

- networking.k8s.io # k8s 1.14+

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io # k8s 1.14+

resources:

- ingressclasses

verbs:

- get

- list

- watch

---

# Source: ingress-nginx/templates/clusterrolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

name: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-role.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx

namespace: ingress-nginx

rules:

- apiGroups:

- ''

resources:

- namespaces

verbs:

- get

- apiGroups:

- ''

resources:

- configmaps

- pods

- secrets

- endpoints

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- extensions

- networking.k8s.io # k8s 1.14+

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- extensions

- networking.k8s.io # k8s 1.14+

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io # k8s 1.14+

resources:

- ingressclasses

verbs:

- get

- list

- watch

- apiGroups:

- ''

resources:

- configmaps

resourceNames:

- ingress-controller-leader-nginx

verbs:

- get

- update

- apiGroups:

- ''

resources:

- configmaps

verbs:

- create

- apiGroups:

- ''

resources:

- events

verbs:

- create

- patch

---

# Source: ingress-nginx/templates/controller-rolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-service-webhook.yaml

apiVersion: v1

kind: Service

metadata:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller-admission

namespace: ingress-nginx

spec:

type: ClusterIP

ports:

- name: https-webhook

port: 443

targetPort: webhook

selector:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

---

# Source: ingress-nginx/templates/controller-service.yaml

apiVersion: v1

kind: Service

metadata:

annotations:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

type: NodePort

ports:

- name: http

port: 80

protocol: TCP

targetPort: http

- name: https

port: 443

protocol: TCP

targetPort: https

selector:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

---

# Source: ingress-nginx/templates/controller-deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: controller

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

selector:

matchLabels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

revisionHistoryLimit: 10

minReadySeconds: 0

template:

metadata:

labels:

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/component: controller

spec:

dnsPolicy: ClusterFirst

containers:

- name: controller

image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/ingress-nginx-controller:v0.46.0

imagePullPolicy: IfNotPresent

lifecycle:

preStop:

exec:

command:

- /wait-shutdown

args:

- /nginx-ingress-controller

- --election-id=ingress-controller-leader

- --ingress-class=nginx

- --configmap=$(POD_NAMESPACE)/ingress-nginx-controller

- --validating-webhook=:8443

- --validating-webhook-certificate=/usr/local/certificates/cert

- --validating-webhook-key=/usr/local/certificates/key

securityContext:

capabilities:

drop:

- ALL

add:

- NET_BIND_SERVICE

runAsUser: 101

allowPrivilegeEscalation: true

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

- name: LD_PRELOAD

value: /usr/local/lib/libmimalloc.so

livenessProbe:

failureThreshold: 5

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

ports:

- name: http

containerPort: 80

protocol: TCP

- name: https

containerPort: 443

protocol: TCP

- name: webhook

containerPort: 8443

protocol: TCP

volumeMounts:

- name: webhook-cert

mountPath: /usr/local/certificates/

readOnly: true

resources:

requests:

cpu: 100m

memory: 90Mi

nodeSelector:

kubernetes.io/os: linux

serviceAccountName: ingress-nginx

terminationGracePeriodSeconds: 300

volumes:

- name: webhook-cert

secret:

secretName: ingress-nginx-admission

---

# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml

# before changing this value, check the required kubernetes version

# https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites

apiVersion: admissionregistration.k8s.io/v1

kind: ValidatingWebhookConfiguration

metadata:

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

name: ingress-nginx-admission

webhooks:

- name: validate.nginx.ingress.kubernetes.io

matchPolicy: Equivalent

rules:

- apiGroups:

- networking.k8s.io

apiVersions:

- v1beta1

operations:

- CREATE

- UPDATE

resources:

- ingresses

failurePolicy: Fail

sideEffects: None

admissionReviewVersions:

- v1

- v1beta1

clientConfig:

service:

namespace: ingress-nginx

name: ingress-nginx-controller-admission

path: /networking/v1beta1/ingresses

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

rules:

- apiGroups:

- admissionregistration.k8s.io

resources:

- validatingwebhookconfigurations

verbs:

- get

- update

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

rules:

- apiGroups:

- ''

resources:

- secrets

verbs:

- get

- create

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: ingress-nginx-admission

annotations:

helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml

apiVersion: batch/v1

kind: Job

metadata:

name: ingress-nginx-admission-create

annotations:

helm.sh/hook: pre-install,pre-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

spec:

template:

metadata:

name: ingress-nginx-admission-create

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

spec:

containers:

- name: create

image: docker.io/jettech/kube-webhook-certgen:v1.5.1

imagePullPolicy: IfNotPresent

args:

- create

- --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc

- --namespace=$(POD_NAMESPACE)

- --secret-name=ingress-nginx-admission

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

restartPolicy: OnFailure

serviceAccountName: ingress-nginx-admission

securityContext:

runAsNonRoot: true

runAsUser: 2000

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml

apiVersion: batch/v1

kind: Job

metadata:

name: ingress-nginx-admission-patch

annotations:

helm.sh/hook: post-install,post-upgrade

helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

namespace: ingress-nginx

spec:

template:

metadata:

name: ingress-nginx-admission-patch

labels:

helm.sh/chart: ingress-nginx-3.33.0

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/version: 0.47.0

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/component: admission-webhook

spec:

containers:

- name: patch

image: docker.io/jettech/kube-webhook-certgen:v1.5.1

imagePullPolicy: IfNotPresent

args:

- patch

- --webhook-name=ingress-nginx-admission

- --namespace=$(POD_NAMESPACE)

- --patch-mutating=false

- --secret-name=ingress-nginx-admission

- --patch-failure-policy=Fail

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

restartPolicy: OnFailure

serviceAccountName: ingress-nginx-admission

securityContext:

runAsNonRoot: true

runAsUser: 2000

5.2 使用

官网地址:https://kubernetes.github.io/ingress-nginx/

就是nginx做的

测试环境

应用如下yaml,准备好测试环境

apiVersion: apps/v1

kind: Deployment

metadata:

name: hello-server

spec:

replicas: 2

selector:

matchLabels:

app: hello-server

template:

metadata:

labels:

app: hello-server

spec:

containers:

- name: hello-server

image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/hello-server

ports:

- containerPort: 9000

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: nginx-demo

name: nginx-demo

spec:

replicas: 2

selector:

matchLabels:

app: nginx-demo

template:

metadata:

labels:

app: nginx-demo

spec:

containers:

- image: nginx

name: nginx

---

apiVersion: v1

kind: Service

metadata:

labels:

app: nginx-demo

name: nginx-demo

spec:

selector:

app: nginx-demo

ports:

- port: 8000

protocol: TCP

targetPort: 80

---

apiVersion: v1

kind: Service

metadata:

labels:

app: hello-server

name: hello-server

spec:

selector:

app: hello-server

ports:

- port: 8000

protocol: TCP

targetPort: 9000

5.3 域名访问

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: ingress-host-bar

spec:

ingressClassName: nginx

rules:

- host: "hello.atguigu.com"

http:

paths:

- pathType: Prefix # 前缀模式

path: "/" #前缀的路径是/

backend:

service: #转交给后台服务

name: hello-server #转交的后台服务名

port:

number: 8000

- host: "demo.atguigu.com" #多个规则配置多个host

http:

paths:

- pathType: Prefix

path: "/nginx" # 把请求会转给下面的服务,下面的服务一定要能处理这个路径,不能处理就是404

backend:

service:

name: nginx-demo ## java,比如使用路径重写,去掉前缀nginx

port:

number: 8000

kubectl apply -f ingress-rule.yaml

#修改

kubectl edit ing ingress-host-bar

hosts配置域名映射

192.168.233.129 hello.atguigu.com

192.168.233.129 demo.atguigu.com

修改pod下的nginx文件

5.4 路径重写

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

nginx.ingress.kubernetes.io/rewrite-target: /$2

name: ingress-host-bar

spec:

ingressClassName: nginx

rules:

- host: "hello.atguigu.com"

http:

paths:

- pathType: Prefix

path: "/"

backend:

service:

name: hello-server

port:

number: 8000

- host: "demo.atguigu.com"

http:

paths:

- pathType: Prefix

path: "/nginx(/|$)(.*)" # 把请求会转给下面的服务,下面的服务一定要能处理这个路径,不能处理就是404

backend:

service:

name: nginx-demo ## java,比如使用路径重写,去掉前缀nginx

port:

number: 8000

5.5 流量限制

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: ingress-limit-rate

annotations:

nginx.ingress.kubernetes.io/limit-rps: "1" #限流1秒一次

spec:

ingressClassName: nginx

rules:

- host: "haha.atguigu.com"

http:

paths:

- pathType: Exact

path: "/"

backend:

service:

name: nginx-demo

port:

number: 8000

网络模型

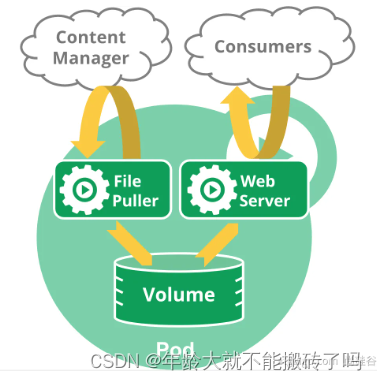

6 存储抽象

6.1 环境准备

6.1.1 所有节点

#所有机器安装

yum install -y nfs-utils

6.1.2 主节点

#nfs主节点

echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports

mkdir -p /nfs/data

systemctl enable rpcbind --now

systemctl enable nfs-server --now

#配置生效

exportfs -r

#确认配置

exportfs

6.1.3 从节点

#查看远程机器有哪些远程目录可用

showmount -e 192.168.233.128

#执行以下命令挂载 nfs 服务器上的共享目录到本机路径 /root/nfsmount

mkdir -p /nfs/data

mount -t nfs 192.168.233.128:/nfs/data /nfs/data

# 写入一个测试文件

echo "hello nfs server" > /nfs/data/test.txt

6.1.4 原生方式数据挂载

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: nginx-pv-demo

name: nginx-pv-demo

spec:

replicas: 2

selector:

matchLabels:

app: nginx-pv-demo

template:

metadata:

labels:

app: nginx-pv-demo

spec:

containers:

- image: nginx

name: nginx

volumeMounts: # 卷挂载

- name: html #挂载名

mountPath: /usr/share/nginx/html #被挂载的目录

volumes: #声明挂载方式

- name: html

nfs: #nfs网络文件系统

server: 192.168.233.128

path: /nfs/data/nginx-pv #挂载到

#把上面的内容写进去

vi deploy.yaml

#应用

kubectl apply -f deploy.yaml

#检查pod

kubectl get pod

#查询pod

kubectl describe pod nginx-pv-demo-5ffb6666b-j7s5b

挂载失败了

从页面上看没有nginx-pv的目录

在主节点上创建出这个文件夹

mkdir -p nginx-pv

在容器内部查询

以kubectl delete -f deploy.yaml形式删除的pod挂载的数据文件还在,不会自动清理

6.2 PV&PVC

PV:持久卷(Persistent Volume),将应用需要持久化的数据保存到指定位置,可以指定容量大小

PVC:持久卷申明(Persistent Volume Claim),申明需要使用的持久卷规格,如果删除可以顺便清理之前申请的空间

6.2.1 创建pv池

静态供应

#nfs主节点

mkdir -p /nfs/data/01

mkdir -p /nfs/data/02

mkdir -p /nfs/data/03

创建PV

apiVersion: v1

kind: PersistentVolume #资源类型为PersistentVolume 持久化卷

metadata:

name: pv01-10m #名称,可随便起,但是Gi单位必须是小写gi

spec:

capacity:

storage: 10M #限制容量为10M

accessModes:

- ReadWriteMany #读写模式为可读可写

storageClassName: nfs #起的clsss的名称可随便起

nfs:

path: /nfs/data/01 #路径

server: 192.168.233.128 #服务器地址

--- #---代表分割一个完整的文档

apiVersion: v1

kind: PersistentVolume

metadata:

name: pv02-1gi #Gi单位必须是小写gi

spec:

capacity:

storage: 1Gi

accessModes:

- ReadWriteMany

storageClassName: nfs

nfs:

path: /nfs/data/02

server: 192.168.233.128

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: pv03-3gi #Gi单位必须是小写gi

spec:

capacity:

storage: 3Gi

accessModes:

- ReadWriteMany

storageClassName: nfs

nfs:

path: /nfs/data/03

server: 192.168.233.128

6.2.2 PVC创建与绑定

创建PVC

kind: PersistentVolumeClaim #资源类型为申请书

apiVersion: v1

metadata:

name: nginx-pvc #名称随便

spec:

accessModes:

- ReadWriteMany #必须是可读可写的

resources:

requests:

storage: 200Mi #需要200Mi的空间

storageClassName: nfs #需要跟PV的属性对应

执行前

执行后

pv02-lgi 已经被Bound了,是被nginx-pvc绑定的。

删除操作后再查看,空间已经被释放了。

查看pv和pvc

kubectl get pv

kubectl get pvc

创建Pod绑定PVC

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: nginx-deploy-pvc

name: nginx-deploy-pvc

spec:

replicas: 2

selector:

matchLabels:

app: nginx-deploy-pvc

template:

metadata:

labels:

app: nginx-deploy-pvc

spec:

containers:

- image: nginx

name: nginx

volumeMounts:

- name: html

mountPath: /usr/share/nginx/html

volumes:

- name: html

persistentVolumeClaim:

claimName: nginx-pvc #之前写的是nfs,现在写的是申请书。mountPath的内容是跟申请书中申请的内容绑定的。

同时查看

后面会使用动态供应

6.3 ConfigMap

抽取应用配置,并且可以自动更新

挂载配置文件时使用

6.3.1 redis示例

把之前的配置文件创建为配置集

# 创建配置,redis保存到k8s的etcd;

kubectl create cm redis-conf --from-file=redis.conf

创建完配置集就可以删除刚刚创建的redis.conf文件

配置集会存在于k8s的etch档案库中

apiVersion: v1

data: #data是所有真正的数据,key:默认是文件名 value:配置文件的内容

redis.conf: |

appendonly yes

kind: ConfigMap

metadata:

name: redis-conf

namespace: default

6.3.2 创建Pod

apiVersion: v1

kind: Pod #创建Pod

metadata:

name: redis

spec:

containers: #镜像配置

- name: redis

image: redis

command:

- redis-server

- "/redis-master/redis.conf" #指的是redis容器内部的位置

ports:

- containerPort: 6379

volumeMounts: #卷挂载

- mountPath: /data #挂载路径

name: data #是下面配置的data

- mountPath: /redis-master

name: config #指的是下面的config

volumes:

- name: data

emptyDir: {}

- name: config

configMap: #配置集

name: redis-conf

items:

- key: redis.conf

path: redis.conf

更改配置集后k8s也能自动去更新

增加requirepass 123456

过一会就会在容器内部看到已经同步

推荐使用ConfigMap的方式去挂载配置文件,默认就有热更新的功能。

6.3.3 检查默认配置

kubectl exec -it redis -- redis-cli

127.0.0.1:6379> CONFIG GET appendonly

127.0.0.1:6379> CONFIG GET requirepass

6.3.4 修改ConfigMap

apiVersion: v1

kind: ConfigMap

metadata:

name: example-redis-config

data:

redis-config: |

maxmemory 2mb

maxmemory-policy allkeys-lru

6.3.5 检查配置是否更新

kubectl exec -it redis -- redis-cli

127.0.0.1:6379> CONFIG GET maxmemory

127.0.0.1:6379> CONFIG GET maxmemory-policy

检查指定文件内容是否已经更新

修改了CM。Pod里面的配置文件会跟着变

配置值未更改,因为需要重新启动 Pod 才能从关联的 ConfigMap 中获取更新的值。

原因:我们的Pod部署的中间件自己本身没有热更新能力

6.4 Secret

Secret 对象类型用来保存敏感信息,例如密码、OAuth 令牌和 SSH 密钥。 将这些信息放在 secret 中比放在 Pod 的定义或者 容器镜像 中来说更加安全和灵活。

kubectl create secret docker-registry zk006-docker \

--docker-username=zk639320199\

--docker-password=zk123456 \

--docker-email=668219987@qq.com

##命令格式

kubectl create secret docker-registry regcred \

--docker-server=<你的镜像仓库服务器> \

--docker-username=<你的用户名> \

--docker-password=<你的密码> \

--docker-email=<你的邮箱地址>

apiVersion: v1

kind: Pod

metadata:

name: private-nginx

spec:

containers:

- name: private-nginx

image: leifengyang/guignginx:v1.0

imagePullSecrets: #下载镜像使用的秘钥,上面设置的

- name: zk006-docker

2847

2847

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?