大数据从业者通常需要对json数据进行处理,格式化过后导入到hive当中进行处理

1. 数据准备

新建op.log,并导入数据

1593136280858|{"cm":{"ln":"-55.0","sv":"V2.9.6","os":"8.0.4","g":"C6816QZ0@gmail.com","mid":"489","nw":"3G","l":"es","vc":"4","hw":"640*960","ar":"MX","uid":"489","t":"1593123253541","la":"5.2","md":"sumsung-18","vn":"1.3.4","ba":"Sumsung","sr":"I"},"ap":"app","et":[{"ett":"1593050051366","en":"loading","kv":{"extend2":"","loading_time":"14","action":"3","extend1":"","type":"2","type1":"201","loading_way":"1"}},{"ett":"1593108791764","en":"ad","kv":{"activityId":"1","displayMills":"78522","entry":"1","action":"1","contentType":"0"}},{"ett":"1593111271266","en":"notification","kv":{"ap_time":"1593097087883","action":"1","type":"1","content":""}},{"ett":"1593066033562","en":"active_background","kv":{"active_source":"3"}},{"ett":"1593135644347","en":"comment","kv":{"p_comment_id":1,"addtime":"1593097573725","praise_count":973,"other_id":5,"comment_id":9,"reply_count":40,"userid":7,"content":"辑赤蹲慰鸽抿肘捎"}}]}

1593136280858|{"cm":{"ln":"-114.9","sv":"V2.7.8","os":"8.0.4","g":"NW0S962J@gmail.com","mid":"490","nw":"3G","l":"pt","vc":"8","hw":"640*1136","ar":"MX","uid":"490","t":"1593121224789","la":"-44.4","md":"Huawei-8","vn":"1.0.1","ba":"Huawei","sr":"O"},"ap":"app","et":[{"ett":"1593063223807","en":"loading","kv":{"extend2":"","loading_time":"0","action":"3","extend1":"","type":"1","type1":"102","loading_way":"1"}},{"ett":"1593095105466","en":"ad","kv":{"activityId":"1","displayMills":"1966","entry":"3","action":"2","contentType":"0"}},{"ett":"1593051718208","en":"notification","kv":{"ap_time":"1593095336265","action":"2","type":"3","content":""}},{"ett":"1593100021275","en":"comment","kv":{"p_comment_id":4,"addtime":"1593098946009","praise_count":220,"other_id":4,"comment_id":9,"reply_count":151,"userid":4,"content":"抄应螟皮釉倔掉汉蛋蕾街羡晶"}},{"ett":"1593105344120","en":"praise","kv":{"target_id":9,"id":7,"type":1,"add_time":"1593098545976","userid":8}}]}

2. 数据拆分

2.1 启动spark

spark-shell

2.2 导入所需的jar包

import org.apache.spark.sql.functions._

import org.apache.spark.sql.types._

2.3 导入文件并创建RDD

val fileRDD=sc.textFile("/opt/kb09file/op.log")

2.4 按’|'拆分数据为两部分 注意:这里需要用单引号,不能用双引号,表示字符

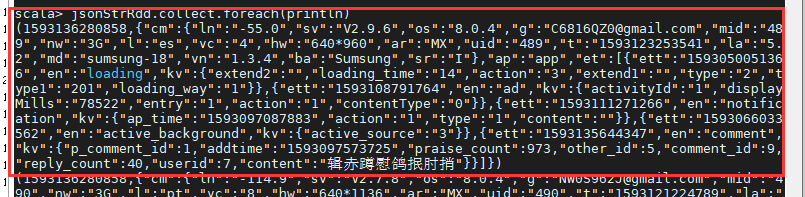

val jsonStrRdd=fileRDD.map(_.split('|')).map(x=>(x(0),x(1)))

将数据拆分二元组

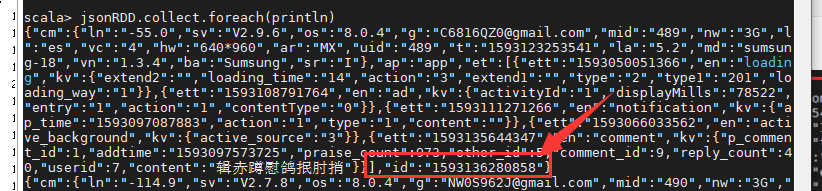

2.5 将二元组前面的id合并到后面的json数据,整理为一个长字符串

val jsonRDD=jsonStrRdd.map(x=>{var jsonStr=x._2;jsonStr=jsonStr.substring(0,jsonStr.length-1);jsonStr+",\"id\":\""+x._1+"\"}"})

2.6 将rdd转换为df

val jsonDF=jsonRDD.toDF

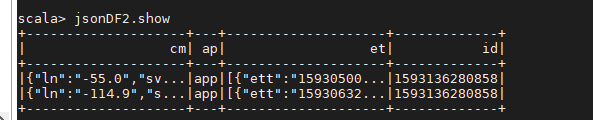

2.7 拆分json为 cm,ap,et,id四个字段

val jsonDF2=jsonDF.select(

get_json_object($"value","$.cm").alias("cm"),

get_json_object($"value","$.ap").alias("ap"),

get_json_object($"value","$.et").alias("et"),

get_json_object($"value","$.id").alias("id")

)

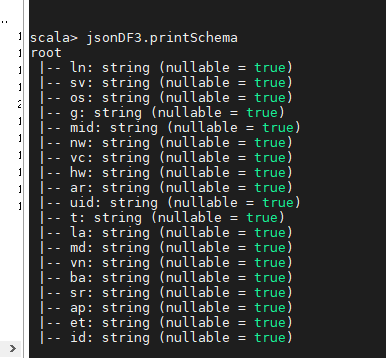

2.8 拆分cm里面的字段

val jsonDF3=jsonDF2.select(

get_json_object($"cm","$.ln").alias("ln"),

get_json_object($"cm","$.sv").alias("sv"),

get_json_object($"cm","$.os").alias("os"),

get_json_object($"cm","$.g").alias("g"),

get_json_object($"cm","$.mid").alias("mid"),

get_json_object($"cm","$.nw").alias("nw"),

get_json_object($"cm","$.vc").alias("vc"),

get_json_object($"cm","$.hw").alias("hw"),

get_json_object($"cm","$.ar").alias("ar"),

get_json_object($"cm","$.uid").alias("uid"),

get_json_object($"cm","$.t").alias("t"),

get_json_object($"cm","$.la").alias("la"),

get_json_object($"cm","$.md").alias("md"),

get_json_object($"cm","$.vn").alias("vn"),

get_json_object($"cm","$.ba").alias("ba"),

get_json_object($"cm","$.sr").alias("sr"),

$"ap",

$"et",

$"id"

)

2.9 拆分et里面的字段

将

[{"ett":"","en":"","kv":""}]

拆分为

ett en kv

值 值 值

值 值 值

值 值 值

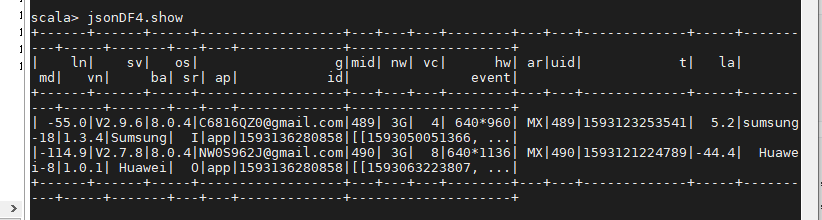

val jsonDF4=jsonDF3.select($"ln",$"sv",$"os",$"g",$"mid",$"nw",$"vc",$"hw",$"ar",$"uid",$"t",$"la",$"md",$"vn",$"ba",$"sr",$"ap",$"id",from_json($"et",ArrayType(StructType(StructField("ett",StringType)::StructField("en",StringType)::StructField("kv",StringType)::Nil))).alias("event"))

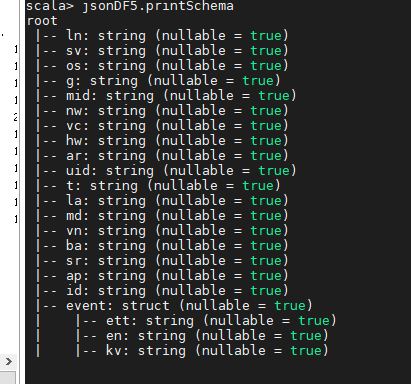

3.0 把数组再次拆开 使用explode

val jsonDF5=jsonDF4.select($"ln",$"sv",$"os",$"g",$"mid",$"nw",$"vc",$"hw",$"ar",$"uid",$"t",$"la",$"md",$"vn",$"ba",$"sr",$"ap",$"id",explode($"event").as("event"))

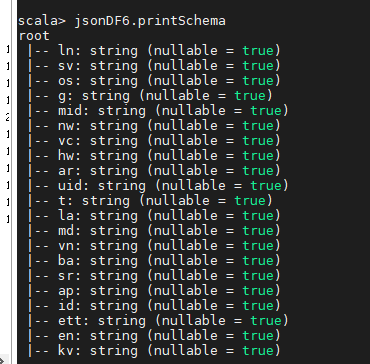

3.1 把event拆分

val jsonDF6=jsonDF5.select($"ln",$"sv",$"os",$"g",$"mid",$"nw",$"vc",$"hw",$"ar",$"uid",$"t",$"la",$"md",$"vn",$"ba",$"sr",$"ap",$"id",$"event.ett",$"event.en",$"event.kv")

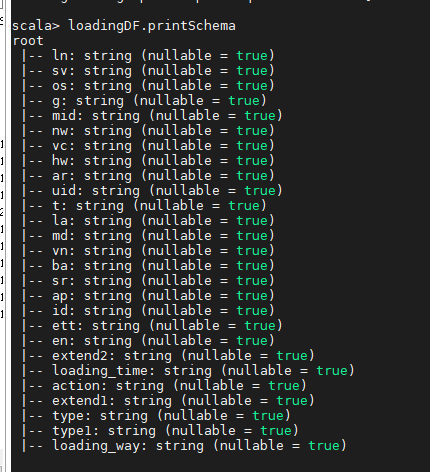

3.2

拆分en各种类型得到loading

val loadingDF=jsonDF6.filter("en='loading'").select($"ln",$"sv",$"os",$"g",$"mid",$"nw",$"vc",$"hw",$"ar",$"uid",$"t",$"la",$"md",$"vn",$"ba",$"sr",$"ap",$"id",$"ett",$"en",

get_json_object($"kv","$.extend2").alias("extend2"),

get_json_object($"kv","$.loading_time").alias("loading_time"),

get_json_object($"kv","$.action").alias("action"),

get_json_object($"kv","$.extend1").alias("extend1"),

get_json_object($"kv","$.type").alias("type"),

get_json_object($"kv","$.type1").alias("type1"),

get_json_object($"kv","$.loading_way").alias("loading_way")

)

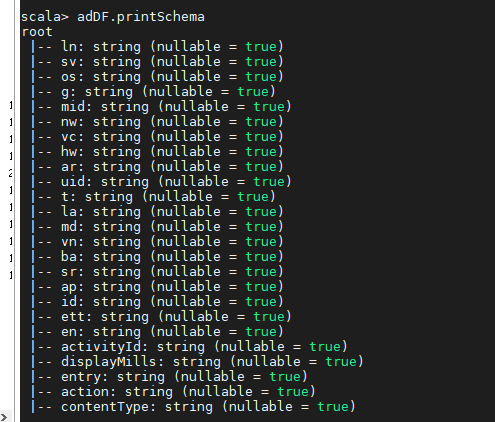

拆分en各种类型得到ad

val adDF=jsonDF6.filter("en='ad'").select($"ln",$"sv",$"os",$"g",$"mid",$"nw",$"vc",$"hw",$"ar",$"uid",$"t",$"la",$"md",$"vn",$"ba",$"sr",$"ap",$"id",$"ett",$"en",

get_json_object($"kv","$.activityId").alias("activityId"),

get_json_object($"kv","$.displayMills").alias("displayMills"),

get_json_object($"kv","$.entry").alias("entry"),

get_json_object($"kv","$.action").alias("action"),

get_json_object($"kv","$.contentType").alias("contentType")

)

拆分en各种类型得到notification

val notificationDF=jsonDF6.filter("en='notification'").select($"ln",$"sv",$"os",$"g",$"mid",$"nw",$"vc",$"hw",$"ar",$"uid",$"t",$"la",$"md",$"vn",$"ba",$"sr",$"ap",$"id",$"ett",$"en",

get_json_object($"kv","$.ap_time").alias("ap_time"),

get_json_object($"kv","$.action").alias("action"),

get_json_object($"kv","$.type").alias("type"),

get_json_object($"kv","$.content").alias("content")

)

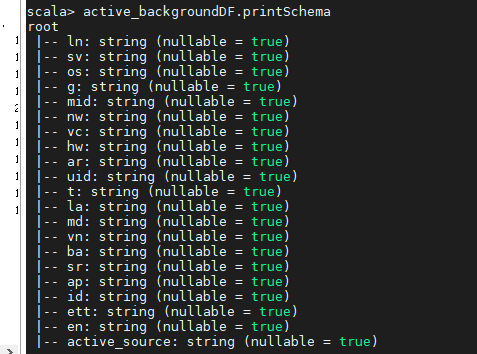

拆分en各种类型得到active_background

val active_backgroundDF=jsonDF6.filter("en='active_background'").select($"ln",$"sv",$"os",$"g",$"mid",$"nw",$"vc",$"hw",$"ar",$"uid",$"t",$"la",$"md",$"vn",$"ba",$"sr",$"ap",$"id",$"ett",$"en",

get_json_object($"kv","$.active_source").alias("active_source")

)

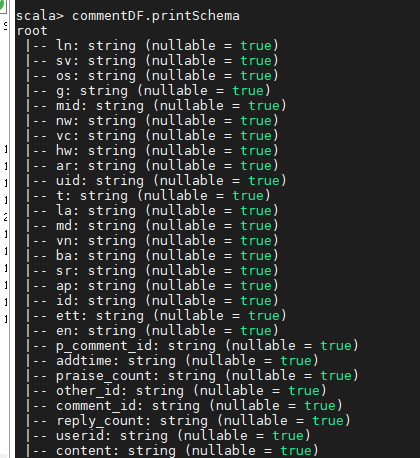

拆分en各种类型得到comment

val commentDF=jsonDF6.filter("en='comment'").select($"ln",$"sv",$"os",$"g",$"mid",$"nw",$"vc",$"hw",$"ar",$"uid",$"t",$"la",$"md",$"vn",$"ba",$"sr",$"ap",$"id",$"ett",$"en",

get_json_object($"kv","$.p_comment_id").alias("p_comment_id"),

get_json_object($"kv","$.addtime").alias("addtime"),

get_json_object($"kv","$.praise_count").alias("praise_count"),

get_json_object($"kv","$.other_id").alias("other_id"),

get_json_object($"kv","$.comment_id").alias("comment_id"),

get_json_object($"kv","$.reply_count").alias("reply_count"),

get_json_object($"kv","$.userid").alias("userid"),

get_json_object($"kv","$.content").alias("content")

)

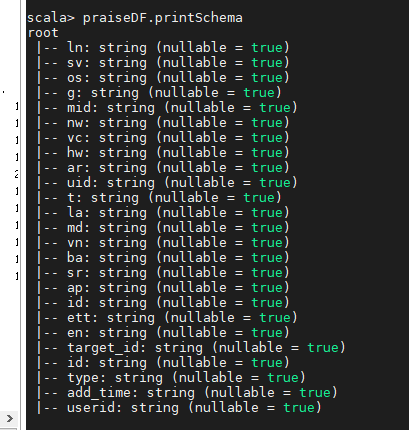

拆分en各种类型得到praise

val praiseDF=jsonDF6.filter("en='comment'").select($"ln",$"sv",$"os",$"g",$"mid",$"nw",$"vc",$"hw",$"ar",$"uid",$"t",$"la",$"md",$"vn",$"ba",$"sr",$"ap",$"id",$"ett",$"en",

get_json_object($"kv","$.target_id").alias("target_id"),

get_json_object($"kv","$.id").alias("id"),

get_json_object($"kv","$.type").alias("type"),

get_json_object($"kv","$.add_time").alias("add_time"),

get_json_object($"kv","$.userid").alias("userid")

)

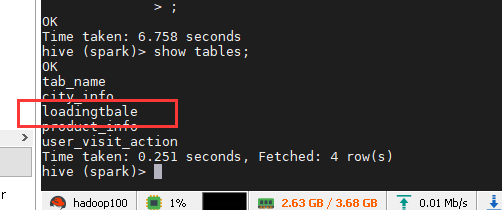

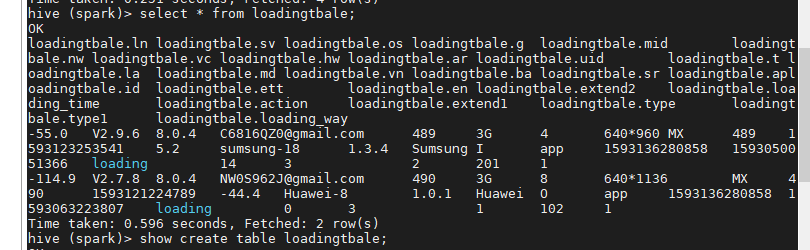

3. 数据保存

loadingDF.createTempView("loading")

spark.sql("create table loadingTbale as select * from loading")

其他表格操作类似,这样就将数据导入到了hive中

4.在idea当中实现

package json

import org.apache.spark.SparkContext

import org.apache.spark.rdd.RDD

import org.apache.spark.sql.{DataFrame, Dataset, Row, SparkSession}

import org.apache.spark.sql.types._

import org.apache.spark.sql.functions._

object JsonDemo {

def main(args: Array[String]): Unit = {

val spark: SparkSession = SparkSession.builder()

.master("local[3]")

.appName("jsonDemo")

.enableHiveSupport()

.getOrCreate()

import spark.implicits._

val sc: SparkContext = spark.sparkContext

val jsonRDD: RDD[String] = sc.textFile("in/op.log")

val jsonDF: DataFrame = jsonRDD.map(_.split('|'))

.map(x => x(1).substring(0, x(1).length - 1) + ",\"id\":\"" + x(0) + "\"}").toDF()

val ett = new StructType()

.add($"ett".string)

.add($"en".string)

.add($"kv".string)

val common = new StructType()

.add($"ln".string).add($"sv".string).add($"os".string).add($"g".string).add($"mid".string).add($"nw".string)

.add($"hw".string).add($"ar".string).add($"uid".string).add($"t".string).add($"la".string).add($"md".string)

.add($"vn".string).add($"ba".string).add($"sr".string)

val schema: StructType = new StructType()

.add($"cm".struct(common))

.add($"ap".string)

.add($"et".array(ett))

val jsonDF1: Dataset[Row] = jsonDF.select(from_json($"value",schema).as("values"))

val jsonDF2: DataFrame = jsonDF1.select(

$"values.ap",$"values.cm.ln",$"values.cm.sv",$"values.cm.os",$"values.cm.g",$"values.cm.mid",$"values.cm.nw",

$"values.cm.hw",$"values.cm.ar",$"values.cm.uid",$"values.cm.t",$"values.cm.la",$"values.cm.md",$"values.cm.vn",

$"values.cm.ba",$"values.cm.sr",

explode($"values.et").alias("event"))

val jsonDF3: DataFrame = jsonDF2.select(

$"ap",$"ln",$"sv",$"os", $"g",$"mid",$"nw",$"hw",$"ar",$"uid",$"t",$"la",$"md",$"vn",$"ba",$"sr",

$"event.ett",$"event.en",$"event.kv"

)

val loadingDF: DataFrame = jsonDF3.where($"en" === "loading")

.select($"ap",$"ln",$"sv",$"os",$"g",$"mid",$"nw",$"hw",$"ar",$"uid",$"t",$"la",$"md",$"vn",$"ba",

$"sr",$"ett",$"en",

get_json_object($"kv", "$.extend2").alias("a1"),

get_json_object($"kv", "$.loading_time").alias("a2"),

get_json_object($"kv", "$.action").alias("a3"),

get_json_object($"kv", "$.extend1").alias("a4"),

get_json_object($"kv", "$.type").alias("a5"),

get_json_object($"kv", "$.loading_way").alias("a6")

)

val adDF: DataFrame = jsonDF3.where($"en" === "ad")

.select($"ap",$"ln",$"sv",$"os",$"g",$"mid",$"nw",$"hw",$"ar",$"uid",$"t",$"la",$"md",$"vn",$"ba",

$"sr",$"ett",$"en",

get_json_object($"kv", "$.activityId").alias("a1"),

get_json_object($"kv", "$.displayMills").alias("a2"),

get_json_object($"kv", "$.entry").alias("a3"),

get_json_object($"kv", "$.action").alias("a4"),

get_json_object($"kv", "$.contentType").alias("a5")

)

val notificationDF: DataFrame = jsonDF3.where($"en" === "notification")

.select($"ap",$"ln",$"sv",$"os",$"g",$"mid",$"nw",$"hw",$"ar",$"uid",$"t",$"la",$"md",$"vn",$"ba",

$"sr",$"ett",$"en",

get_json_object($"kv", "$.ap_time").alias("a1"),

get_json_object($"kv", "$.action").alias("a2"),

get_json_object($"kv", "$.type").alias("a3"),

get_json_object($"kv", "$.content").alias("a4")

)

val activeBackgroundDF: DataFrame = jsonDF3.where($"en" === "active_background")

.select($"ap",$"ln",$"sv",$"os",$"g",$"mid",$"nw",$"hw",$"ar",$"uid",$"t",$"la",$"md",$"vn",$"ba",

$"sr",$"ett",$"en",

get_json_object($"kv", "$.active_source").alias("a1")

)

val commentDF: DataFrame = jsonDF3.where($"en" === "comment")

.select($"ap",$"ln",$"sv",$"os",$"g",$"mid",$"nw",$"hw",$"ar",$"uid",$"t",$"la",$"md",$"vn",$"ba",

$"sr",$"ett",$"en",

get_json_object($"kv", "$.p_comment_id").alias("a1"),

get_json_object($"kv", "$.addtime").alias("a2"),

get_json_object($"kv", "$.praise_count").alias("a3"),

get_json_object($"kv", "$.other_id").alias("a4"),

get_json_object($"kv", "$.comment_id").alias("a5"),

get_json_object($"kv", "$.reply_count").alias("a6"),

get_json_object($"kv", "$.userid").alias("a7"),

get_json_object($"kv", "$.content").alias("a8")

)

val praiseDF: DataFrame = jsonDF3.where($"en" === "praise")

.select($"ap",$"ln",$"sv",$"os",$"g",$"mid",$"nw",$"hw",$"ar",$"uid",$"t",$"la",$"md",$"vn",$"ba",

$"sr",$"ett",$"en",

get_json_object($"kv", "$.target_id").alias("a1"),

get_json_object($"kv", "$.id").alias("a2"),

get_json_object($"kv", "$.type").alias("a3"),

get_json_object($"kv", "$.add_time").alias("a4"),

get_json_object($"kv", "$.userid").alias("a5")

)

loadingDF.createOrReplaceTempView("loading")

//写入hive spar默认会把大写转换为小写

spark.sql("use kb09")

spark.sql("create table loadingTable as select * from loading")

}

}

667

667

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?