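Operations and Management

1. Helm Package Manager

1.1. What Is Helm?

The package manager for Kubernetes.

Helm is the best way to find, share, and use software built for Kubernetes.

Helm is a tool for managing Kubernetes packages called charts. Helm can do the following:

- Create new charts from scratch
- Package charts into chart archive (tgz) files
- Interact with the repositories where charts are stored
- Install and uninstall charts in an existing Kubernetes cluster
- Manage the release cycle of charts that have been installed with Helm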


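1.2. Helm Architecture

1.2.1. Key Concepts

- chart: a bundle of the information needed to create an instance of a Kubernetes application.
- config: configuration information that can be merged into a packaged chart to create a releasable object.
- release: a running instance of a chart, combined with a specific config.

1.2.2. Components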

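- Helm client

  The Helm client is the command-line client for end users. It is responsible for:

  - Local chart development
  - Managing repositories
  - Managing releases
  - Interfacing with the Helm library
    - Sending charts to be installed
    - Requesting upgrades or uninstalls of existing releases

- Helm library

  The Helm library provides the logic for executing all Helm operations. It interacts with the Kubernetes API server and provides the following capabilities:

  - Combining a chart and configuration to build a release
  - Installing charts into Kubernetes and providing the resulting release object
  - Upgrading and uninstalling charts by interacting with Kubernetes

  The standalone Helm library encapsulates the Helm logic so that different clients can use it.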

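1.3. Installing Helm

Official guide: https://helm.sh/docs/intro/install

1. Download the binary release:

   https://get.helm.sh/helm-v3.10.2-linux-amd64.tar.gz

2. Extract it (tar -zxvf helm-v3.10.2-linux-amd64.tar.gz).

3. Move the helm binary from the extracted directory (linux-amd64/) to /usr/local/bin/helm.

4. Add the Alibaba Cloud Helm repository (the helm repo add commands are listed in section 1.5.2).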
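The four steps above can be sketched as a small script. This is an illustration under assumptions, not an official installer: the function names are hypothetical, the version defaults to the v3.10.2 archive named in step 2, and the repository URL is the aliyun one used in section 1.5.2.

```shell
#!/usr/bin/env bash
# Sketch only. HELM_VERSION, HELM_OS and HELM_ARCH are assumptions; adjust them to your machine.
HELM_VERSION="${HELM_VERSION:-v3.10.2}"
HELM_OS="${HELM_OS:-linux}"
HELM_ARCH="${HELM_ARCH:-amd64}"

# Build the download URL from version, OS and architecture (hypothetical helper).
helm_tarball_url() {
  echo "https://get.helm.sh/helm-$1-$2-$3.tar.gz"
}

# Download, extract, install the binary, and register a chart repository (hypothetical helper).
install_helm() {
  curl -fsSLO "$(helm_tarball_url "$HELM_VERSION" "$HELM_OS" "$HELM_ARCH")" &&
    tar -zxvf "helm-${HELM_VERSION}-${HELM_OS}-${HELM_ARCH}.tar.gz" &&
    sudo mv "${HELM_OS}-${HELM_ARCH}/helm" /usr/local/bin/helm &&
    helm repo add aliyun https://apphub.aliyuncs.com/stable
}
```

Calling install_helm and then helm version should confirm that the binary is on the PATH. Nothing runs until the function is called, so the file can be sourced safely.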

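1.4. Common Helm Commands

- helm repo: list, add, update, and remove chart repositories
- helm search: search for charts by keyword
- helm pull: download a chart from a remote repository to the local machine
- helm create: create a new chart locally
- helm dependency: manage chart dependencies
- helm install: install a chart
- helm list: list releases
- helm lint: check a chart for configuration problems
- helm package: package a local chart
- helm rollback: roll a release back to an earlier revision
- helm uninstall: uninstall a release
- helm upgrade: upgrade a release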

1.5. Charts in Detail

1.5.1. Directory Structure

    mychart
    ├── Chart.yaml
    ├── charts                      # charts this chart depends on (subcharts)
    ├── templates                   # templates that are rendered into the final Kubernetes YAML
    │   ├── NOTES.txt               # notes shown to the user after helm install
    │   ├── _helpers.tpl            # helper definitions reused by the templates
    │   ├── deployment.yaml         # Kubernetes Deployment
    │   ├── ingress.yaml            # Kubernetes Ingress
    │   ├── service.yaml            # Kubernetes Service
    │   ├── serviceaccount.yaml     # Kubernetes ServiceAccount
    │   └── tests
    │       └── test-connection.yaml
    └── values.yaml                 # default values for the templates; can be overridden at helm install or helm upgrade
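To see how Chart.yaml, values.yaml, and templates fit together, the sketch below writes a much smaller chart by hand. It is an illustration only: scaffold_chart, the greeting value, and the ConfigMap template are hypothetical and are not what helm create generates.

```shell
# Write a minimal chart into the given directory (hypothetical helper).
scaffold_chart() {
  chart_dir="$1"
  mkdir -p "$chart_dir/templates" "$chart_dir/charts" || return 1

  # Chart metadata: apiVersion v2 is the Helm 3 chart format
  cat > "$chart_dir/Chart.yaml" <<'EOF'
apiVersion: v2
name: mychart
description: A minimal example chart
type: application
version: 0.1.0
appVersion: "1.0.0"
EOF

  # Default values, overridable with --set or -f
  cat > "$chart_dir/values.yaml" <<'EOF'
greeting: hello
EOF

  # A template that reads the release name and one value
  cat > "$chart_dir/templates/configmap.yaml" <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}-greeting
data:
  greeting: {{ .Values.greeting | quote }}
EOF
}
```

With Helm installed, scaffold_chart ./mychart followed by helm lint ./mychart and helm template demo ./mychart --set greeting=hi renders the ConfigMap locally without touching a cluster.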

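1.5.2. Redis Chart in Practice

- Configure Helm repositories

      # List the configured repositories
      helm repo list
      # Add repositories
      helm repo add bitnami https://charts.bitnami.com/bitnami
      helm repo add aliyun https://apphub.aliyuncs.com/stable
      helm repo add azure http://mirror.azure.cn/kubernetes/charts

- Search for the Redis chart

      # Search for Redis charts
      helm search repo redis
      # Show the chart README (installation notes)
      helm show readme bitnami/redis

- Adjust the configuration and install

      # Pull the chart to the local machine
      helm pull bitnami/redis
      # Extract it, then edit the parameters in values.yaml
      tar -xvf redis-17.4.3.tgz
      # Set storageClass to managed-nfs-storage
      # Set the Redis password (password)
      # Change the cluster architecture (architecture): the default is replication
      # (master/replica, 3 nodes); set it to standalone for a single instance
      # Set persistence.size to the storage size each instance needs
      # Set service.nodePorts.redis to expose a port outside the cluster
      # (the value must fall within the cluster's NodePort range)

      # Create the namespace
      kubectl create namespace redis
      # Install from the directory that contains the extracted redis/ chart
      cd ../
      helm install redis ./redis -n redis

- Check the installation

      # List the Helm releases in the redis namespace
      helm list -n redis
      # Show all objects in the redis namespace
      kubectl get all -n redis

- Upgrade and roll back

  To upgrade a release, modify the local chart configuration and run:

      helm upgrade [RELEASE] [CHART] [flags]
      helm upgrade redis ./redis -n redis

  Use helm ls to see the current revision of each release, then roll back to an earlier revision with:

      helm rollback <RELEASE> [REVISION] [flags]
      # Show the release history
      helm history redis -n redis
      # Roll back to the previous revision
      helm rollback redis -n redis
      # Roll back to a specific revision
      helm rollback redis 3 -n redis

- Uninstall Redis with Helm

      helm delete redis -n redis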

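2. Kubernetes Cluster Monitoring

2.1. Monitoring Options

- Heapster

  Heapster is a monitoring and performance analysis tool for container clusters, with native support for Kubernetes and CoreOS. The project has since been deprecated and retired upstream in favor of metrics-server.

  Kubernetes has a well-known monitoring agent, cAdvisor. It runs on every Kubernetes node and collects monitoring data for the node and its containers (CPU, memory, filesystem, network, uptime).

  In newer releases, Kubernetes integrates cAdvisor into the kubelet, so its data can be accessed over HTTP on each node.

- Weave Scope

  Weave Scope monitors the state and resource usage of objects in a Kubernetes cluster, shows the application topology, supports scaling, and lets you open a shell inside a container from the browser for debugging. Its features include:

  - Interactive topology view
  - Graph mode and table mode
  - Filtering
  - Search
  - Real-time metrics
  - Container troubleshooting
  - Plugin extensions

- Prometheus

  Prometheus is an open-source system that combines monitoring, alerting, and a time series database. It was originally developed at SoundCloud and, as more companies adopted it, became a standalone open-source project. Since then, many companies and organizations have adopted Prometheus for monitoring and alerting.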

2.2. Monitoring Kubernetes with Prometheus

2.2.1. Custom Configuration

- Create the ConfigMap

      # Create prometheus-config.yml
      apiVersion: v1
      kind: ConfigMap
      metadata:
        name: prometheus-config
      data:
        prometheus.yml: |
          global:
            scrape_interval: 15s
            evaluation_interval: 15s
          scrape_configs:
            - job_name: 'prometheus'
              static_configs:
                - targets: ['localhost:9090']

      # Create the ConfigMap
      kubectl create -f prometheus-config.yml

- Deploy Prometheus

      # Create prometheus-deploy.yml
      apiVersion: v1
      kind: Service
      metadata:
        name: prometheus
        labels:
          name: prometheus
      spec:
        ports:
          - name: prometheus
            protocol: TCP
            port: 9090
            targetPort: 9090
        selector:
          app: prometheus
        type: NodePort
      ---
      apiVersion: apps/v1
      kind: Deployment
      metadata:
        labels:
          name: prometheus
        name: prometheus
      spec:
        replicas: 1
        selector:
          matchLabels:
            app: prometheus
        template:
          metadata:
            labels:
              app: prometheus
          spec:
            containers:
              - name: prometheus
                image: prom/prometheus:v2.2.1
                command:
                  - "/bin/prometheus"
                args:
                  - "--config.file=/etc/prometheus/prometheus.yml"
                ports:
                  - containerPort: 9090
                    protocol: TCP
                volumeMounts:
                  - mountPath: "/etc/prometheus"
                    name: prometheus-config
            volumes:
              - name: prometheus-config
                configMap:
                  name: prometheus-config

      # Create the objects
      kubectl create -f prometheus-deploy.yml
      # Check that the pod is running
      kubectl get pods -l app=prometheus
      # Get the service details
      kubectl get svc -l name=prometheus
      # Open http://<node-ip>:<node-port> in a browser

- Configure access permissions

      # Create prometheus-rbac-setup.yml
      apiVersion: rbac.authorization.k8s.io/v1
      kind: ClusterRole
      metadata:
        name: prometheus
      rules:
        - apiGroups: [""]
          resources:
            - nodes
            - nodes/proxy
            - services
            - endpoints
            - pods
          verbs: ["get", "list", "watch"]
        - apiGroups:
            - extensions
            - networking.k8s.io   # Ingress is served from this group on current clusters
          resources:
            - ingresses
          verbs: ["get", "list", "watch"]
        - nonResourceURLs: ["/metrics"]
          verbs: ["get"]
      ---
      apiVersion: v1
      kind: ServiceAccount
      metadata:
        name: prometheus
        namespace: default
      ---
      apiVersion: rbac.authorization.k8s.io/v1
      kind: ClusterRoleBinding
      metadata:
        name: prometheus
      roleRef:
        apiGroup: rbac.authorization.k8s.io
        kind: ClusterRole
        name: prometheus
      subjects:
        - kind: ServiceAccount
          name: prometheus
          namespace: default

      # Create the objects
      kubectl create -f prometheus-rbac-setup.yml

      # Edit prometheus-deploy.yml so that the pod runs under the service account
      spec:
        replicas: 1
        template:
          metadata:
            labels:
              app: prometheus
          spec:
            serviceAccountName: prometheus
            serviceAccount: prometheus

      # Apply the updated Deployment
      kubectl apply -f prometheus-deploy.yml
      # List the pods
      kubectl get pods -l app=prometheus
      # Check the service account credentials mounted in the pod
      kubectl exec -it <pod-name> -- ls /var/run/secrets/kubernetes.io/serviceaccount/

- Configure service discovery

      # Add jobs so that Prometheus can discover the cluster's nodes and other objects;
      # update prometheus-config.yml as follows
      data:
        prometheus.yml: |
          global:
            scrape_interval: 15s
            evaluation_interval: 15s
          scrape_configs:
            - job_name: 'prometheus'
              static_configs:
                - targets: ['localhost:9090']
            - job_name: 'kubernetes-nodes'
              tls_config:
                ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
              bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
              kubernetes_sd_configs:
                - role: node
            - job_name: 'kubernetes-service'
              tls_config:
                ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
              bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
              kubernetes_sd_configs:
                - role: service
            - job_name: 'kubernetes-endpoints'
              tls_config:
                ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
              bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
              kubernetes_sd_configs:
                - role: endpoints
            - job_name: 'kubernetes-ingress'
              tls_config:
                ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
              bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
              kubernetes_sd_configs:
                - role: ingress
            - job_name: 'kubernetes-pods'
              tls_config:
                ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
              bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
              kubernetes_sd_configs:
                - role: pod

      # Apply the configuration
      kubectl apply -f prometheus-config.yml
      # Find the Prometheus pod
      kubectl get pods -l app=prometheus
      # Delete the pod so that it is recreated with the new configuration
      kubectl delete pods <pod-name>
      # Check the pod status
      kubectl get pods
      # Reload the Prometheus UI

- Synchronize the system time

      # Show the system time
      date
      # Synchronize with a network time server
      ntpdate cn.pool.ntp.org

- Monitor the Kubernetes cluster

      # Append the following job to prometheus-config.yml
      - job_name: 'kubernetes-kubelet'
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        kubernetes_sd_configs:
          - role: node
        relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics

      # Apply the configuration
      kubectl apply -f prometheus-config.yml
      # Recreate the pod
      kubectl delete pods <pod-name>

      # Query the 99th percentile pod start latency on the nodes
      kubelet_pod_start_latency_microseconds{quantile="0.99"}
      # Compute the average pod start latency
      kubelet_pod_start_latency_microseconds_sum / kubelet_pod_start_latency_microseconds_count

- Collect container resource usage on each node from the kubelet

      # Add the following job to prometheus-config.yml and apply the update
      - job_name: 'kubernetes-cadvisor'
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        kubernetes_sd_configs:
          - role: node
        relabel_configs:
          - target_label: __address__
            replacement: kubernetes.default.svc:443
          - source_labels: [__meta_kubernetes_node_name]
            regex: (.+)
            target_label: __metrics_path__
            replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
          - action: labelmap
            regex: __meta_kubernetes_node_label_(.+)

- Monitor resource usage with an exporter

      # Create node-exporter-daemonset.yml
      apiVersion: apps/v1
      kind: DaemonSet
      metadata:
        name: node-exporter
      spec:
        selector:               # required by apps/v1; must match the template labels
          matchLabels:
            app: node-exporter
        template:
          metadata:
            annotations:
              prometheus.io/scrape: 'true'
              prometheus.io/port: '9100'
              prometheus.io/path: 'metrics'
            labels:
              app: node-exporter
            name: node-exporter
          spec:
            containers:
              - image: prom/node-exporter
                imagePullPolicy: IfNotPresent
                name: node-exporter
                ports:
                  - containerPort: 9100
                    hostPort: 9100
                    name: scrape
            hostNetwork: true
            hostPID: true

      # Create the DaemonSet
      kubectl create -f node-exporter-daemonset.yml
      # Check the DaemonSet status
      kubectl get daemonsets node-exporter
      # Check the pod status
      kubectl get pods -l app=node-exporter

      # Edit prometheus-config.yml and add the scrape job below
      # (it takes the place of the earlier job with the same name, since job names must be unique)
      - job_name: 'kubernetes-pods'
        kubernetes_sd_configs:
          - role: pod
        relabel_configs:
          - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
            action: replace
            target_label: __metrics_path__
            regex: (.+)
          - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
            action: replace
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
            target_label: __address__
          - action: labelmap
            regex: __meta_kubernetes_pod_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            action: replace
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_pod_name]
            action: replace
            target_label: kubernetes_pod_name

      # Monitor the API server to observe all requests entering the cluster;
      # add the API server job
      - job_name: 'kubernetes-apiservers'
        kubernetes_sd_configs:
          - role: endpoints
        scheme: https
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
          - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
            action: keep
            regex: default;kubernetes;https
          - target_label: __address__
            replacement: kubernetes.default.svc:443

- Probe Ingresses and Services over the network

      # Create blackbox-exporter.yaml for network probing
      apiVersion: v1
      kind: Service
      metadata:
        labels:
          app: blackbox-exporter
        name: blackbox-exporter
      spec:
        ports:
          - name: blackbox
            port: 9115
            protocol: TCP
        selector:
          app: blackbox-exporter
        type: ClusterIP
      ---
      apiVersion: apps/v1
      kind: Deployment
      metadata:
        labels:
          app: blackbox-exporter
        name: blackbox-exporter
      spec:
        replicas: 1
        selector:
          matchLabels:
            app: blackbox-exporter
        template:
          metadata:
            labels:
              app: blackbox-exporter
          spec:
            containers:
              - image: prom/blackbox-exporter
                imagePullPolicy: IfNotPresent
                name: blackbox-exporter

      # Create the objects
      kubectl create -f blackbox-exporter.yaml

      # Add the jobs below to the Prometheus configuration to probe every annotated Service and Ingress
      - job_name: 'kubernetes-services'
        metrics_path: /probe
        params:
          module: [http_2xx]
        kubernetes_sd_configs:
          - role: service
        relabel_configs:
          - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
            action: keep
            regex: true
          - source_labels: [__address__]
            target_label: __param_target
          - target_label: __address__
            replacement: blackbox-exporter.default.svc.cluster.local:9115
          - source_labels: [__param_target]
            target_label: instance
          - action: labelmap
            regex: __meta_kubernetes_service_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_service_name]
            target_label: kubernetes_name
      - job_name: 'kubernetes-ingresses'
        metrics_path: /probe
        params:
          module: [http_2xx]
        kubernetes_sd_configs:
          - role: ingress
        relabel_configs:
          - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]
            action: keep
            regex: true
          - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
            regex: (.+);(.+);(.+)
            replacement: ${1}://${2}${3}
            target_label: __param_target
          - target_label: __address__
            replacement: blackbox-exporter.default.svc.cluster.local:9115
          - source_labels: [__param_target]
            target_label: instance
          - action: labelmap
            regex: __meta_kubernetes_ingress_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_ingress_name]
            target_label: kubernetes_name
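Several steps above repeat the same cycle: apply the ConfigMap, then delete the Prometheus pod by name so that it restarts with the new configuration. A possible alternative, sketched here under the assumption that the file and object names are the ones used in this section (prometheus-config.yml and a Deployment named prometheus in the current namespace), is to restart through the Deployment. The function name is hypothetical.

```shell
# Apply the updated ConfigMap and restart Prometheus through its Deployment (hypothetical helper).
reload_prometheus() {
  kubectl apply -f prometheus-config.yml &&
    # Recreates the pod without having to look up its generated name
    kubectl rollout restart deployment/prometheus &&
    # Blocks until the new pod is ready or the timeout expires
    kubectl rollout status deployment/prometheus --timeout=120s
}
```

Once reload_prometheus returns, the Targets page of the Prometheus UI should list the jobs that were just added. kubectl rollout restart requires a reasonably recent kubectl; on older clients, deleting the pod as shown above remains the fallback.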

-

-

Grafana 可视化

Grafana 是一个通用的可视化工具。‘通用’意味着 Grafana 不仅仅适用于展示 Prometheus 下的监控数据,也同样适用于一些其他的数据可视化需求。在开始使用Grafana之前,我们首先需要明确一些 Grafana下的基本概念,以帮助用户能够快速理解Grafana。

-

基本概念

-

数据源(Data Source)

对于Grafana而言,Prometheus这类为其提供数据的对象均称为数据源(Data Source)。目前,Grafana官方提供了对:Graphite, InfluxDB, OpenTSDB, Prometheus, Elasticsearch, CloudWatch的支持。对于Grafana管理员而言,只需要将这些对象以数据源的形式添加到Grafana中,Grafana便可以轻松的实现对这些数据的可视化工作。

-

仪表盘(Dashboard)

-

组织和用户

-

-

集成 Grafana

-

部署 Grafana

# 创建 grafana-statefulset.yaml apiVersion: apps/v1 kind: StatefulSet metadata: name: grafana-core namespace: kube-monitoring labels: app: grafana component: core spec: serviceName: "grafana" selector: matchLabels: app: grafana replicas: 1 template: metadata: labels: app: grafana component: core spec: containers: - image: grafana/grafana:6.5.3 name: grafana-core imagePullPolicy: IfNotPresent env: # The following env variables set up basic auth twith the default admin user and admin password. - name: GF_AUTH_BASIC_ENABLED value: "true" - name: GF_AUTH_ANONYMOUS_ENABLED value: "false" # - name: GF_AUTH_ANONYMOUS_ORG_ROLE # value: Admin # does not really work, because of template variables in exported dashboards: # - name: GF_DASHBOARDS_JSON_ENABLED # value: "true" readinessProbe: httpGet: path: /login port: 3000 # initialDelaySeconds: 30 # timeoutSeconds: 1 volumeMounts: - name: grafana-persistent-storage mountPath: /var/lib/grafana subPath: grafana volumeClaimTemplates: - metadata: name: grafana-persistent-storage spec: storageClassName: managed-nfs-storage accessModes: - ReadWriteOnce resources: requests: storage: "1Gi" -

服务发现

# 创建 grafana-service.yaml apiVersion: v1 kind: Service metadata: name: grafana namespace: kube-monitoring labels: app: grafana component: core spec: type: NodePort ports: - port: 3000 nodePort: 30011 selector: app: grafana component: core -

配置 Grafana 面板

添加 Prometheus 数据源

下载 k8s 面板,导入该面板

-

-

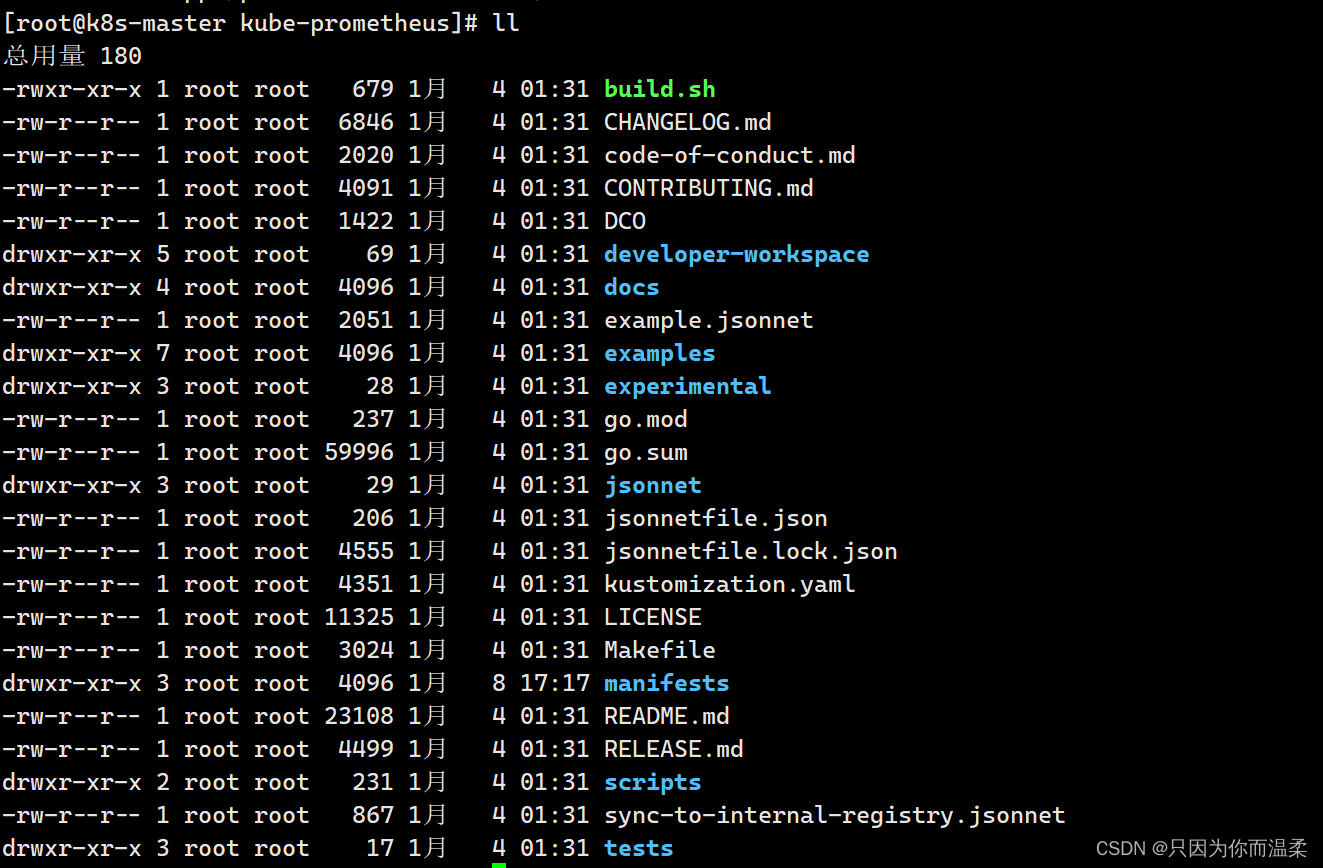

2.2.2. kube-prometheus

- Download kube-prometheus

  https://github.com/prometheus-operator/kube-prometheus/

- Extract the archive

- In the manifests directory, replace the image registries with mirrors reachable from mainland China

      sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' prometheusOperator-deployment.yaml
      sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' prometheus-prometheus.yaml
      sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' alertmanager-alertmanager.yaml
      sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' kubeStateMetrics-deployment.yaml
      sed -i 's/k8s.gcr.io/lank8s.cn/g' kubeStateMetrics-deployment.yaml
      sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' nodeExporter-daemonset.yaml
      sed -i 's/quay.io/quay.mirrors.ustc.edu.cn/g' prometheusAdapter-deployment.yaml
      sed -i 's/k8s.gcr.io/lank8s.cn/g' prometheusAdapter-deployment.yaml
      # Check whether any images still point at the original registries
      grep "image: " * -r

- Change how the services are exposed

  Set spec.type to NodePort in prometheus-service.yaml, alertmanager-service.yaml, and grafana-service.yaml to make test access easier.

- In the manifests directory, configure the Ingress

      # Access by hostname (without real DNS records, add these entries to the hosts file of the client machine)
      192.168.171.128 grafana.xiaoge.cn
      192.168.171.128 prometheus.xiaoge.cn
      192.168.171.128 alertmanager.xiaoge.cn

      # Create prometheus-ingress.yaml
      apiVersion: networking.k8s.io/v1
      kind: Ingress
      metadata:
        namespace: monitoring
        name: prometheus-ingress
      spec:
        ingressClassName: nginx
        rules:
          - host: grafana.xiaoge.cn          # hostname for Grafana
            http:
              paths:
                - path: /
                  pathType: Prefix
                  backend:
                    service:
                      name: grafana
                      port:
                        number: 3000
          - host: prometheus.xiaoge.cn       # hostname for Prometheus
            http:
              paths:
                - path: /
                  pathType: Prefix
                  backend:
                    service:
                      name: prometheus-k8s
                      port:
                        number: 9090
          - host: alertmanager.xiaoge.cn     # hostname for Alertmanager
            http:
              paths:
                - path: /
                  pathType: Prefix
                  backend:
                    service:
                      name: alertmanager-main
                      port:
                        number: 9093

- Install

      kubectl create -f manifests/setup
      kubectl apply -f manifests/

- Uninstall

      kubectl delete --ignore-not-found=true -f manifests/ -f manifests/setup
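The installation step above applies manifests/ immediately after manifests/setup. If the custom resource definitions are not yet registered at that point, the second command can fail on the custom resources. The sketch below follows the sequence that, to my knowledge, the project README recommends for recent releases, with a wait in between; treat the exact flags as an assumption to verify against the release you downloaded. The function name is hypothetical.

```shell
# Install kube-prometheus in two phases, waiting for the CRDs in between (hypothetical helper).
# Run it from the directory that contains manifests/.
install_kube_prometheus() {
  # Phase 1: namespace and CustomResourceDefinitions
  kubectl apply --server-side -f manifests/setup &&
    # Wait until the API server has registered every CRD
    kubectl wait --for condition=Established --all CustomResourceDefinition --namespace=monitoring &&
    # Phase 2: operator, Prometheus, Alertmanager, Grafana, exporters
    kubectl apply -f manifests/
}
```

After install_kube_prometheus returns, kubectl get pods -n monitoring shows whether all components have started.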

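3. ELK Log Management

3.1. ELK Components

- Elasticsearch: a search-oriented document database with strong search capabilities and a rich REST API that is easy to call.
- Filebeat: a lightweight data collection tool.
- Logstash: Logstash can also collect logs, but deploying it on every node just for collection is too heavy. Here it is used mainly to clean (parse and filter) the data, while collection is left to Filebeat.
- Kibana: a visualization UI built on Elasticsearch that makes it easy to explore and manage Elasticsearch data.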

3.2. Integrating ELK

- Create the namespace

      apiVersion: v1
      kind: Namespace
      metadata:
        name: kube-logging

- Deploy the Elasticsearch search service

      # Label the node that will store the Elasticsearch data beforehand
      kubectl label node <node-name> es=data

      # Create es.yaml
      ---
      apiVersion: v1
      kind: Service
      metadata:
        name: elasticsearch-logging
        namespace: kube-logging
        labels:
          k8s-app: elasticsearch-logging
          kubernetes.io/cluster-service: "true"
          addonmanager.kubernetes.io/mode: Reconcile
          kubernetes.io/name: "Elasticsearch"
      spec:
        ports:
          - port: 9200
            protocol: TCP
            targetPort: db
        selector:
          k8s-app: elasticsearch-logging
      ---
      # RBAC authn and authz
      apiVersion: v1
      kind: ServiceAccount
      metadata:
        name: elasticsearch-logging
        namespace: kube-logging
        labels:
          k8s-app: elasticsearch-logging
          kubernetes.io/cluster-service: "true"
          addonmanager.kubernetes.io/mode: Reconcile
      ---
      kind: ClusterRole
      apiVersion: rbac.authorization.k8s.io/v1
      metadata:
        name: elasticsearch-logging
        labels:
          k8s-app: elasticsearch-logging
          kubernetes.io/cluster-service: "true"
          addonmanager.kubernetes.io/mode: Reconcile
      rules:
        - apiGroups:
            - ""
          resources:
            - "services"
            - "namespaces"
            - "endpoints"
          verbs:
            - "get"
      ---
      kind: ClusterRoleBinding
      apiVersion: rbac.authorization.k8s.io/v1
      metadata:
        namespace: kube-logging
        name: elasticsearch-logging
        labels:
          k8s-app: elasticsearch-logging
          kubernetes.io/cluster-service: "true"
          addonmanager.kubernetes.io/mode: Reconcile
      subjects:
        - kind: ServiceAccount
          name: elasticsearch-logging
          namespace: kube-logging
          apiGroup: ""
      roleRef:
        kind: ClusterRole
        name: elasticsearch-logging
        apiGroup: ""
      ---
      # Elasticsearch deployment itself
      apiVersion: apps/v1
      kind: StatefulSet                       # the pod is created by a StatefulSet
      metadata:
        name: elasticsearch-logging           # pods created by a StatefulSet get ordered, numbered names
        namespace: kube-logging
        labels:
          k8s-app: elasticsearch-logging
          kubernetes.io/cluster-service: "true"
          addonmanager.kubernetes.io/mode: Reconcile
          srv: srv-elasticsearch
      spec:
        # Links the StatefulSet to the Service so that each pod gets a stable DNS name
        # (for example es-cluster-[0,1,2].elasticsearch.elk.svc.cluster.local)
        serviceName: elasticsearch-logging
        replicas: 1                           # single node
        selector:
          matchLabels:
            k8s-app: elasticsearch-logging    # must match the labels in the pod template
        template:
          metadata:
            labels:
              k8s-app: elasticsearch-logging
              kubernetes.io/cluster-service: "true"
          spec:
            serviceAccountName: elasticsearch-logging
            containers:
              - image: docker.io/library/elasticsearch:7.9.3
                name: elasticsearch-logging
                resources:
                  # need more cpu upon initialization, therefore burstable class
                  limits:
                    cpu: 1000m
                    memory: 2Gi
                  requests:
                    cpu: 100m
                    memory: 500Mi
                ports:
                  - containerPort: 9200
                    name: db
                    protocol: TCP
                  - containerPort: 9300
                    name: transport
                    protocol: TCP
                volumeMounts:
                  - name: elasticsearch-logging
                    mountPath: /usr/share/elasticsearch/data/   # data mount point
                env:
                  - name: "NAMESPACE"
                    valueFrom:
                      fieldRef:
                        fieldPath: metadata.namespace
                  - name: "discovery.type"      # run as a single node
                    value: "single-node"
                  - name: ES_JAVA_OPTS          # JVM heap settings; increase as needed
                    value: "-Xms512m -Xmx2g"
            volumes:
              - name: elasticsearch-logging
                hostPath:
                  path: /data/es/
            nodeSelector:                       # pins the pod to the labeled data node
              es: data
            tolerations:
              - effect: NoSchedule
                operator: Exists
            # Elasticsearch requires vm.max_map_count to be at least 262144.
            # If your OS already sets up this number to a higher value, feel free
            # to remove this init container.
            initContainers:                     # run before the main container starts
              - name: elasticsearch-logging-init
                image: alpine:3.6
                command: ["/sbin/sysctl", "-w", "vm.max_map_count=262144"]   # raise the mmap count limit; too low a value can cause out-of-memory errors
                securityContext:                # applies to this container only and does not affect volumes
                  privileged: true              # run as a privileged container
              - name: increase-fd-ulimit
                image: busybox
                imagePullPolicy: IfNotPresent
                command: ["sh", "-c", "ulimit -n 65536"]   # raise the maximum number of file descriptors
                securityContext:
                  privileged: true
              - name: elasticsearch-volume-init   # prepares the data directory and sets its permissions to 777
                image: alpine:3.6
                command:
                  - chmod
                  - -R
                  - "777"
                  - /usr/share/elasticsearch/data/
                volumeMounts:
                  - name: elasticsearch-logging
                    mountPath: /usr/share/elasticsearch/data/

      # Create the namespace (skip if it already exists)
      kubectl create ns kube-logging
      # Create the objects
      kubectl create -f es.yaml
      # Check that the pod has started
      kubectl get pod -n kube-logging

- Deploy Logstash for data cleaning

      # Create logstash.yaml and deploy it
      ---
      apiVersion: v1
      kind: Service
      metadata:
        name: logstash
        namespace: kube-logging
      spec:
        ports:
          - port: 5044            # port that receives data from Filebeat
            targetPort: beats
        selector:
          type: logstash
        clusterIP: None
      ---
      apiVersion: apps/v1
      kind: Deployment
      metadata:
        name: logstash
        namespace: kube-logging
      spec:
        selector:
          matchLabels:
            type: logstash
        template:
          metadata:
            labels:
              type: logstash
              srv: srv-logstash
          spec:
            containers:
              - image: docker.io/kubeimages/logstash:7.9.3   # this image supports both arm64 and amd64
                name: logstash
                ports:
                  - containerPort: 5044
                    name: beats
                command:
                  - logstash
                  - '-f'
                  - '/etc/logstash_c/logstash.conf'
                env:
                  - name: "XPACK_MONITORING_ELASTICSEARCH_HOSTS"
                    value: "http://elasticsearch-logging:9200"
                volumeMounts:
                  - name: config-volume
                    mountPath: /etc/logstash_c/
                  - name: config-yml-volume
                    mountPath: /usr/share/logstash/config/
                  - name: timezone
                    mountPath: /etc/localtime
                resources:        # always set limits on Logstash so it cannot starve other workloads
                  limits:
                    cpu: 1000m
                    memory: 2048Mi
                  requests:
                    cpu: 512m
                    memory: 512Mi
            volumes:
              - name: config-volume
                configMap:
                  name: logstash-conf
                  items:
                    - key: logstash.conf
                      path: logstash.conf
              - name: timezone
                hostPath:
                  path: /etc/localtime
              - name: config-yml-volume
                configMap:
                  name: logstash-yml
                  items:
                    - key: logstash.yml
                      path: logstash.yml
      ---
      apiVersion: v1
      kind: ConfigMap
      metadata:
        name: logstash-conf
        namespace: kube-logging
        labels:
          type: logstash
      data:
        logstash.conf: |-
          input {
            beats {
              port => 5044
            }
          }
          filter {
            # Handle ingress logs
            if [kubernetes][container][name] == "nginx-ingress-controller" {
              json {
                source => "message"
                target => "ingress_log"
              }
              if [ingress_log][requesttime] {
                mutate { convert => ["[ingress_log][requesttime]", "float"] }
              }
              if [ingress_log][upstremtime] {
                mutate { convert => ["[ingress_log][upstremtime]", "float"] }
              }
              if [ingress_log][status] {
                mutate { convert => ["[ingress_log][status]", "float"] }
              }
              if [ingress_log][httphost] and [ingress_log][uri] {
                mutate {
                  add_field => {"[ingress_log][entry]" => "%{[ingress_log][httphost]}%{[ingress_log][uri]}"}
                }
                mutate {
                  split => ["[ingress_log][entry]","/"]
                }
                if [ingress_log][entry][1] {
                  mutate {
                    add_field => {"[ingress_log][entrypoint]" => "%{[ingress_log][entry][0]}/%{[ingress_log][entry][1]}"}
                    remove_field => "[ingress_log][entry]"
                  }
                } else {
                  mutate {
                    add_field => {"[ingress_log][entrypoint]" => "%{[ingress_log][entry][0]}/"}
                    remove_field => "[ingress_log][entry]"
                  }
                }
              }
            }
            # Handle logs from business services whose container name starts with srv
            if [kubernetes][container][name] =~ /^srv*/ {
              json {
                source => "message"
                target => "tmp"
              }
              if [kubernetes][namespace] == "kube-logging" {
                drop{}
              }
              if [tmp][level] {
                mutate {
                  add_field => {"[applog][level]" => "%{[tmp][level]}"}
                }
                if [applog][level] == "debug" {
                  drop{}
                }
              }
              if [tmp][msg] {
                mutate { add_field => {"[applog][msg]" => "%{[tmp][msg]}"} }
              }
              if [tmp][func] {
                mutate { add_field => {"[applog][func]" => "%{[tmp][func]}"} }
              }
              if [tmp][cost] {
                if "ms" in [tmp][cost] {
                  mutate {
                    split => ["[tmp][cost]","m"]
                    add_field => {"[applog][cost]" => "%{[tmp][cost][0]}"}
                    convert => ["[applog][cost]", "float"]
                  }
                } else {
                  mutate { add_field => {"[applog][cost]" => "%{[tmp][cost]}"} }
                }
              }
              if [tmp][method] {
                mutate { add_field => {"[applog][method]" => "%{[tmp][method]}"} }
              }
              if [tmp][request_url] {
                mutate { add_field => {"[applog][request_url]" => "%{[tmp][request_url]}"} }
              }
              if [tmp][meta._id] {
                mutate { add_field => {"[applog][traceId]" => "%{[tmp][meta._id]}"} }
              }
              if [tmp][project] {
                mutate { add_field => {"[applog][project]" => "%{[tmp][project]}"} }
              }
              if [tmp][time] {
                mutate { add_field => {"[applog][time]" => "%{[tmp][time]}"} }
              }
              if [tmp][status] {
                mutate {
                  add_field => {"[applog][status]" => "%{[tmp][status]}"}
                  convert => ["[applog][status]", "float"]
                }
              }
            }
            mutate {
              rename => ["kubernetes", "k8s"]
              remove_field => "beat"
              remove_field => "tmp"
              remove_field => "[k8s][labels][app]"
            }
          }
          output {
            elasticsearch {
              hosts => ["http://elasticsearch-logging:9200"]
              codec => json
              index => "logstash-%{+YYYY.MM.dd}"   # a new index named logstash-<date> is created each day
            }
          }
      ---
      apiVersion: v1
      kind: ConfigMap
      metadata:
        name: logstash-yml
        namespace: kube-logging
        labels:
          type: logstash
      data:
        logstash.yml: |-
          http.host: "0.0.0.0"
          xpack.monitoring.elasticsearch.hosts: http://elasticsearch-logging:9200

- Deploy Filebeat for data collection

      # Create filebeat.yaml and deploy it
      ---
      apiVersion: v1
      kind: ConfigMap
      metadata:
        name: filebeat-config
        namespace: kube-logging
        labels:
          k8s-app: filebeat
      data:
        filebeat.yml: |-
          filebeat.inputs:
            - type: container
              enabled: true
              paths:
                - /var/log/containers/*.log   # log directory mounted into the Filebeat pod
              processors:
                - add_kubernetes_metadata:    # adds Kubernetes fields used later for data cleaning
                    host: ${NODE_NAME}
                    matchers:
                      - logs_path:
                          logs_path: "/var/log/containers/"
          #output.kafka:   # if the log volume is large and logs reach Elasticsearch late, Kafka can be placed between Filebeat and Logstash
          #  hosts: ["kafka-log-01:9092", "kafka-log-02:9092", "kafka-log-03:9092"]
          #  topic: 'topic-test-log'
          #  version: 2.0.0
          output.logstash:   # Logstash is deployed for data cleaning, so Filebeat pushes its data to Logstash
            hosts: ["logstash:5044"]
            enabled: true
      ---
      apiVersion: v1
      kind: ServiceAccount
      metadata:
        name: filebeat
        namespace: kube-logging
        labels:
          k8s-app: filebeat
      ---
      apiVersion: rbac.authorization.k8s.io/v1
      kind: ClusterRole
      metadata:
        name: filebeat
        labels:
          k8s-app: filebeat
      rules:
        - apiGroups: [""]   # "" indicates the core API group
          resources:
            - namespaces
            - pods
          verbs: ["get", "watch", "list"]
      ---
      apiVersion: rbac.authorization.k8s.io/v1
      kind: ClusterRoleBinding
      metadata:
        name: filebeat
      subjects:
        - kind: ServiceAccount
          name: filebeat
          namespace: kube-logging
      roleRef:
        kind: ClusterRole
        name: filebeat
        apiGroup: rbac.authorization.k8s.io
      ---
      apiVersion: apps/v1
      kind: DaemonSet
      metadata:
        name: filebeat
        namespace: kube-logging
        labels:
          k8s-app: filebeat
      spec:
        selector:
          matchLabels:
            k8s-app: filebeat
        template:
          metadata:
            labels:
              k8s-app: filebeat
          spec:
            serviceAccountName: filebeat
            terminationGracePeriodSeconds: 30
            containers:
              - name: filebeat
                image: docker.io/kubeimages/filebeat:7.9.3   # this image supports both arm64 and amd64
                args: [
                  "-c", "/etc/filebeat.yml",
                  "-e", "-httpprof", "0.0.0.0:6060"
                ]
                #ports:
                #  - containerPort: 6060
                #    hostPort: 6068
                env:
                  - name: NODE_NAME
                    valueFrom:
                      fieldRef:
                        fieldPath: spec.nodeName
                  - name: ELASTICSEARCH_HOST
                    value: elasticsearch-logging
                  - name: ELASTICSEARCH_PORT
                    value: "9200"
                securityContext:
                  runAsUser: 0
                  # If using Red Hat OpenShift uncomment this:
                  #privileged: true
                resources:
                  limits:
                    memory: 1000Mi
                    cpu: 1000m
                  requests:
                    memory: 100Mi
                    cpu: 100m
                volumeMounts:
                  - name: config                    # the Filebeat configuration file
                    mountPath: /etc/filebeat.yml
                    readOnly: true
                    subPath: filebeat.yml
                  - name: data                      # persists Filebeat data on the host
                    mountPath: /usr/share/filebeat/data
                  - name: varlibdockercontainers    # mounts the host's source log directory into the container; if Docker or containerd still uses its standard log path, mountPath can stay /var/lib
                    mountPath: /var/lib
                    readOnly: true
                  - name: varlog                    # mounts the /var/log/pods and /var/log/containers symlinks from the host into the container
                    mountPath: /var/log/
                    readOnly: true
                  - name: timezone
                    mountPath: /etc/localtime
            volumes:
              - name: config
                configMap:
                  defaultMode: 0600
                  name: filebeat-config
              - name: varlibdockercontainers
                hostPath:   # if Docker or containerd still uses its standard log path, path can stay /var/lib
                  path: /var/lib
              - name: varlog
                hostPath:
                  path: /var/log/
              # data folder stores a registry of read status for all files, so we don't send everything again on a Filebeat pod restart
              - name: inputs
                configMap:
                  defaultMode: 0600
                  name: filebeat-inputs
              - name: data
                hostPath:
                  path: /data/filebeat-data
                  type: DirectoryOrCreate
              - name: timezone
                hostPath:
                  path: /etc/localtime
            tolerations:   # tolerations let the pod be scheduled onto every matching node
              - effect: NoExecute
                key: dedicated
                operator: Equal
                value: gpu
              - effect: NoSchedule
                operator: Exists

- Deploy the Kibana web UI

      # Kibana is exposed under a hostname; without a real DNS record, add it to the local hosts file
      192.168.171.129 kibana.xiaoge.cn

      # Label the node
      kubectl label node <node-name> k8s-app=kibana

      # Create kibana.yaml and create the objects
      ---
      apiVersion: v1
      kind: ConfigMap
      metadata:
        namespace: kube-logging
        name: kibana-config
        labels:
          k8s-app: kibana
      data:
        kibana.yml: |-
          server.name: kibana
          server.host: "0"
          i18n.locale: zh-CN   # sets the default UI language to Chinese
          elasticsearch:
            hosts: ${ELASTICSEARCH_HOSTS}   # Elasticsearch address; everything runs in the same namespace, so the Service name can be used directly
      ---
      apiVersion: v1
      kind: Service
      metadata:
        name: kibana
        namespace: kube-logging
        labels:
          k8s-app: kibana
          kubernetes.io/cluster-service: "true"
          addonmanager.kubernetes.io/mode: Reconcile
          kubernetes.io/name: "Kibana"
          srv: srv-kibana
      spec:
        type: NodePort
        ports:
          - port: 5601
            protocol: TCP
            targetPort: ui
        selector:
          k8s-app: kibana
      ---
      apiVersion: apps/v1
      kind: Deployment
      metadata:
        name: kibana
        namespace: kube-logging
        labels:
          k8s-app: kibana
          kubernetes.io/cluster-service: "true"
          addonmanager.kubernetes.io/mode: Reconcile
          srv: srv-kibana
      spec:
        replicas: 1
        selector:
          matchLabels:
            k8s-app: kibana
        template:
          metadata:
            labels:
              k8s-app: kibana
          spec:
            containers:
              - name: kibana
                image: docker.io/kubeimages/kibana:7.9.3   # this image supports both arm64 and amd64
                resources:
                  # need more cpu upon initialization, therefore burstable class
                  limits:
                    cpu: 1000m
                  requests:
                    cpu: 100m
                env:
                  - name: ELASTICSEARCH_HOSTS
                    value: http://elasticsearch-logging:9200
                ports:
                  - containerPort: 5601
                    name: ui
                    protocol: TCP
                volumeMounts:
                  - name: config
                    mountPath: /usr/share/kibana/config/kibana.yml
                    readOnly: true
                    subPath: kibana.yml
            volumes:
              - name: config
                configMap:
                  name: kibana-config
      ---
      apiVersion: networking.k8s.io/v1
      kind: Ingress
      metadata:
        name: kibana
        namespace: kube-logging
      spec:
        ingressClassName: nginx
        rules:
          - host: kibana.xiaoge.cn
            http:
              paths:
                - path: /
                  pathType: Prefix
                  backend:
                    service:
                      name: kibana
                      port:
                        number: 5601

- Configure Kibana

  Open Kibana and go to Stack Management in the menu to see the collected logs.

  To keep the logs from growing without bound and filling the disk, open Index Lifecycle Policies and click Create policy:

  - Set the policy name to logstash-history-ilm-policy
  - Turn off the hot phase
  - Turn on the delete phase and set the retention period to 7 days
  - Save the policy

  To make the logs easy to browse in Discover, select Index Patterns and click Create index pattern:

  - Enter logstash-* as the index pattern name and click Next step
  - Select @timestamp as the time field
  - Click Create index pattern

  Because Elasticsearch is deployed as a single node, any index configured with replicas turns yellow. Open Dev Tools and set the replica count of all indices to 0:

      PUT _all/_settings
      {
        "number_of_replicas": 0
      }

  To standardize the map-type fields in the logs and attach the index lifecycle policy to new indices, update the default template:

      PUT _template/logstash
      {
        "order": 1,
        "index_patterns": [
          "logstash-*"
        ],
        "settings": {
          "index": {
            "lifecycle": {
              "name": "logstash-history-ilm-policy"
            },
            "number_of_shards": "2",
            "refresh_interval": "5s",
            "number_of_replicas": "0"
          }
        },
        "mappings": {
          "properties": {
            "@timestamp": { "type": "date" },
            "applog": {
              "dynamic": true,
              "properties": {
                "cost": { "type": "float" },
                "func": { "type": "keyword" },
                "method": { "type": "keyword" }
              }
            },
            "k8s": {
              "dynamic": true,
              "properties": {
                "namespace": { "type": "keyword" },
                "container": {
                  "dynamic": true,
                  "properties": {
                    "name": { "type": "keyword" }
                  }
                },
                "labels": {
                  "dynamic": true,
                  "properties": {
                    "srv": { "type": "keyword" }
                  }
                }
              }
            },
            "geoip": {
              "dynamic": true,
              "properties": {
                "ip": { "type": "ip" },
                "latitude": { "type": "float" },
                "location": { "type": "geo_point" },
                "longitude": { "type": "float" }
              }
            }
          }
        },
        "aliases": {}
      }

  After that, the logs can be searched in Discover.
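The lifecycle policy and the replica setting described above can also be applied through the Elasticsearch REST API instead of the Kibana UI. The sketch below is an approximation under assumptions: ES_URL must point at a reachable Elasticsearch endpoint (for example through kubectl -n kube-logging port-forward svc/elasticsearch-logging 9200:9200), the function names are hypothetical, and the policy body is my reading of "delete after 7 days" rather than an export of what the UI would create.

```shell
# Assumption: Elasticsearch is reachable at this URL, e.g. through a port-forward.
ES_URL="${ES_URL:-http://localhost:9200}"

# Create an ILM policy whose only phase deletes an index 7 days after creation (hypothetical helper).
create_ilm_policy() {
  curl -fsS -X PUT "$ES_URL/_ilm/policy/logstash-history-ilm-policy" \
    -H 'Content-Type: application/json' \
    -d '{"policy":{"phases":{"delete":{"min_age":"7d","actions":{"delete":{}}}}}}'
}

# Set the replica count of all existing indices to 0 for a single-node cluster (hypothetical helper).
disable_replicas() {
  curl -fsS -X PUT "$ES_URL/_all/_settings" \
    -H 'Content-Type: application/json' \
    -d '{"index":{"number_of_replicas":0}}'
}
```

Running create_ilm_policy and then disable_replicas mirrors the two UI steps. A scripted form is easier to repeat when the cluster is rebuilt.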

4、 Kubernetes 可视化界面

-

Kubernetes Dashboard

-

安装

# 下载官方部署配置文件 wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml # 修改属性 kind: Service apiVersion: v1 metadata: labels: k8s-app: kubernetes-dashboard name: kubernetes-dashboard namespace: kubernetes-dashboard spec: type: NodePort #新增 ports: - port: 443 targetPort: 8443 selector: k8s-app: kubernetes-dashboard # 创建资源 kubectl apply -f recommend.yaml # 查看资源是否已经就绪 kubectl get all -n kubernetes-dashboard -o wide # 访问测试 https://节点ip:端口 -

- Configure an account with full permissions

Create the account manifest file:

    touch dashboard-admin.yaml

Put the following content into it:

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: dashboard-admin
      namespace: kubernetes-dashboard
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: dashboard-admin-cluster-role
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
      - kind: ServiceAccount
        name: dashboard-admin
        namespace: kubernetes-dashboard

Create the resources and read the token:

    # create the resources
    kubectl apply -f dashboard-admin.yaml
    # list all accounts
    kubectl get serviceaccount -n kubernetes-dashboard
    # show the account details (including the name of its secret)
    kubectl describe serviceaccount dashboard-admin -n kubernetes-dashboard
    # list all secrets
    kubectl get secrets -n kubernetes-dashboard
    # get the account token used to log in to the dashboard;
    # dashboard-admin-token-5crbd is the secret name in this example, yours will differ
    kubectl describe secrets dashboard-admin-token-5crbd -n kubernetes-dashboard
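The secret-based lookup above only works on clusters that still create a token Secret for each ServiceAccount automatically. Since Kubernetes v1.24 that is no longer the default, and a token is requested with kubectl create token instead. The sketch below only prints the command that fits a given minor version; `token_command` is a hypothetical helper, and passing the minor version by hand is an assumption made to keep the example self-contained.

```shell
#!/usr/bin/env bash
# Sketch: choose the command that yields a login token for a ServiceAccount.
# token_command is a hypothetical helper; it prints the command, it does not run it.

token_command() {
  local minor="$1" ns="$2" sa="$3"
  if [ "$minor" -ge 24 ]; then
    # v1.24+: no token Secret is generated automatically, so request a token
    echo "kubectl -n $ns create token $sa"
  else
    # older clusters: the token lives in the auto-generated Secret
    echo "kubectl -n $ns describe secrets \$(kubectl -n $ns get sa $sa -o jsonpath='{.secrets[0].name}')"
  fi
}

token_command 24 kubernetes-dashboard dashboard-admin
```

Tokens issued by kubectl create token expire, so a new one may be needed for a later login.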

- Using the Dashboard

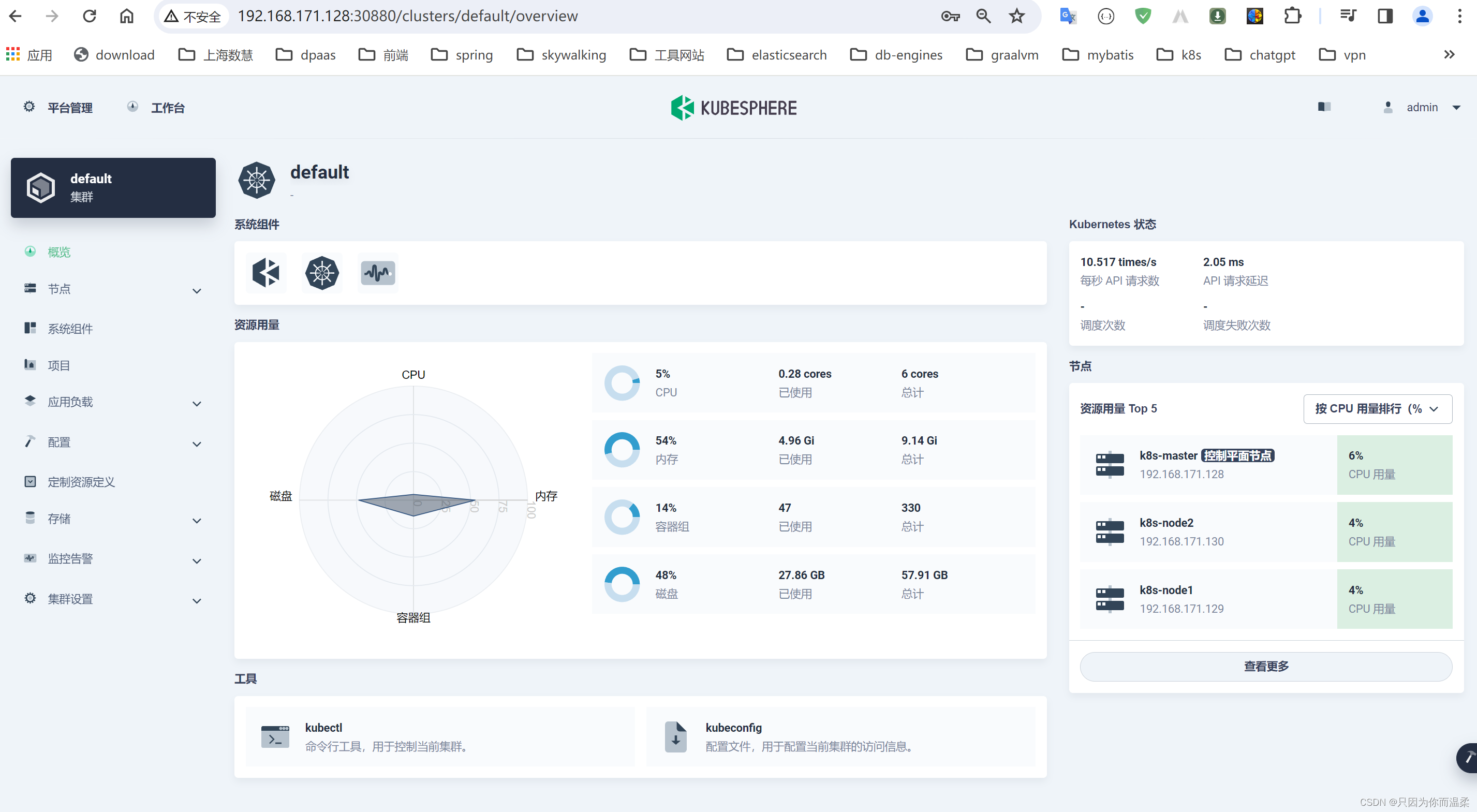

- KubeSphere

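https://kubesphere.io/zh/

KubeSphere's vision is to build a cloud-native distributed operating system with Kubernetes as its kernel. Its architecture makes it easy to integrate third-party applications and cloud-native ecosystem components in a plug-and-play fashion, and it supports unified distribution and operations management of cloud-native applications across multiple clouds and multiple clusters.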

- default-storage-class.yaml

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: local
      annotations:
        cas.openebs.io/config: |
          - name: StorageType
            value: "hostpath"
          - name: BasePath
            value: "/var/openebs/local/"
        # kubectl.kubernetes.io/last-applied-configuration is written by kubectl apply itself and is omitted here
        openebs.io/cas-type: local
        storageclass.beta.kubernetes.io/is-default-class: 'true'
        storageclass.kubesphere.io/supported-access-modes: '["ReadWriteOnce"]'
    provisioner: openebs.io/local
    reclaimPolicy: Delete
    volumeBindingMode: WaitForFirstConsumer

- Local storage with dynamic PVC provisioning

    # install the iSCSI client on every node (OpenEBS needs this protocol for storage support)
    yum install iscsi-initiator-utils -y
    # enable the service at boot
    systemctl enable --now iscsid
    # start the service
    systemctl start iscsid
    # check the service status
    systemctl status iscsid
    # install OpenEBS
    kubectl apply -f https://openebs.github.io/charts/openebs-operator.yaml
    # check the status (pulling the images may take a while)
    kubectl get all -n openebs
    # on the master node, create the local storage class
    kubectl apply -f default-storage-class.yaml
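One way to check that the default storage class is picked up is to create a small PVC that names no storage class at all. The sketch below only prints such a manifest. The helper `smoke_test_pvc`, the name `sc-smoke-test`, and the 1Gi size are made up for illustration. Because the storage class uses volumeBindingMode: WaitForFirstConsumer, the claim is expected to stay Pending until a Pod actually mounts it.

```shell
#!/usr/bin/env bash
# Sketch: print a throwaway PVC that relies on the default StorageClass.
# smoke_test_pvc and the default name "sc-smoke-test" are hypothetical.

smoke_test_pvc() {
  local name="${1:-sc-smoke-test}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
EOF
}

# Against a cluster the manifest could be applied and inspected like this:
#   smoke_test_pvc | kubectl apply -f -
#   kubectl get pvc sc-smoke-test
#   kubectl delete pvc sc-smoke-test

smoke_test_pvc
```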

- Installation

    # install the resources
    kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.3.1/kubesphere-installer.yaml
    kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.3.1/cluster-configuration.yaml
    # to enable DevOps and logging, edit cluster-configuration.yaml and set "enabled" to true for devops and logging
    # follow the installation log
    kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f
    # check the console port
    kubectl get svc/ks-console -n kubesphere-system
    # the default port is 30880; on a cloud provider, or with a firewall enabled, remember to open this port
    # log in to the console with account/password: admin/P@88w0rd
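Editing cluster-configuration.yaml requires a local copy, since the command above applies the file straight from the release URL. The sketch below shows one possible way to flip the two switches with awk. It assumes that each of the devops: and logging: keys is followed by its own "enabled: false" line, which should be verified against the real file; `enable_components` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: turn "enabled: false" into "enabled: true" for the first
# "enabled:" line under the devops: and logging: keys.
# The file layout is an assumption; review the diff before applying.

enable_components() {
  awk '
    /^[ \t]*(devops|logging):/ { armed = 1; print; next }
    armed && /enabled:[ \t]*false/ { sub(/enabled:[ \t]*false/, "enabled: true"); armed = 0 }
    /^[ \t]*[A-Za-z_]+:/ && !/enabled:/ { armed = 0 }
    { print }
  '
}

# Possible usage against the downloaded file:
#   wget https://github.com/kubesphere/ks-installer/releases/download/v3.3.1/cluster-configuration.yaml
#   enable_components < cluster-configuration.yaml > cluster-configuration.edited.yaml
#   diff cluster-configuration.yaml cluster-configuration.edited.yaml
#   kubectl apply -f cluster-configuration.edited.yaml

# Demo on a small made-up fragment: only devops and logging are switched on.
printf '  devops:\n    enabled: false\n  events:\n    enabled: false\n  logging:\n    enabled: false\n' | enable_components
```

Writing to a separate file and diffing it keeps the change reviewable, which matters because the match is purely textual and does not parse YAML.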

- View the console page

- Enable pluggable components

https://kubesphere.io/zh/docs/v3.3/pluggable-components/

- Uninstall script: kubesphere-delete.sh

    #!/usr/bin/env bash

    function delete_sure(){
      cat << eof
    $(echo -e "\033[1;36mNote:\033[0m")

    Delete the KubeSphere cluster, including the module kubesphere-system kubesphere-devops-system kubesphere-devops-worker kubesphere-monitoring-system kubesphere-logging-system openpitrix-system.
    eof

      read -p "Please reconfirm that you want to delete the KubeSphere cluster. (yes/no) " ans
      while [[ "x"$ans != "xyes" && "x"$ans != "xno" ]]; do
        read -p "Please reconfirm that you want to delete the KubeSphere cluster. (yes/no) " ans
      done

      if [[ "x"$ans == "xno" ]]; then
        exit
      fi
    }

    delete_sure

    # delete ks-installer
    kubectl delete deploy ks-installer -n kubesphere-system 2>/dev/null

    # delete helm
    for namespaces in kubesphere-system kubesphere-devops-system kubesphere-monitoring-system kubesphere-logging-system openpitrix-system kubesphere-monitoring-federated
    do
      helm list -n $namespaces | grep -v NAME | awk '{print $1}' | sort -u | xargs -r -L1 helm uninstall -n $namespaces 2>/dev/null
    done

    # delete kubefed
    kubectl get cc -n kubesphere-system ks-installer -o jsonpath="{.status.multicluster}" | grep enable
    if [[ $? -eq 0 ]]; then
      # delete kubefed types resources
      for kubefed in `kubectl api-resources --namespaced=true --api-group=types.kubefed.io -o name`
      do
        kubectl delete -n kube-federation-system $kubefed --all 2>/dev/null
      done
      for kubefed in `kubectl api-resources --namespaced=false --api-group=types.kubefed.io -o name`
      do
        kubectl delete $kubefed --all 2>/dev/null
      done
      # delete kubefed core resources
      for kubefed in `kubectl api-resources --namespaced=true --api-group=core.kubefed.io -o name`
      do
        kubectl delete -n kube-federation-system $kubefed --all 2>/dev/null
      done
      for kubefed in `kubectl api-resources --namespaced=false --api-group=core.kubefed.io -o name`
      do
        kubectl delete $kubefed --all 2>/dev/null
      done
      # uninstall kubefed chart
      helm uninstall -n kube-federation-system kubefed 2>/dev/null
    fi

    helm uninstall -n kube-system snapshot-controller 2>/dev/null

    # delete kubesphere deployment & statefulset
    kubectl delete deployment -n kubesphere-system `kubectl get deployment -n kubesphere-system -o jsonpath="{.items[*].metadata.name}"` 2>/dev/null
    kubectl delete statefulset -n kubesphere-system `kubectl get statefulset -n kubesphere-system -o jsonpath="{.items[*].metadata.name}"` 2>/dev/null

    # delete monitor resources
    kubectl delete prometheus -n kubesphere-monitoring-system k8s 2>/dev/null
    kubectl delete Alertmanager -n kubesphere-monitoring-system main 2>/dev/null
    kubectl delete DaemonSet -n kubesphere-monitoring-system node-exporter 2>/dev/null
    kubectl delete statefulset -n kubesphere-monitoring-system `kubectl get statefulset -n kubesphere-monitoring-system -o jsonpath="{.items[*].metadata.name}"` 2>/dev/null

    # delete grafana
    kubectl delete deployment -n kubesphere-monitoring-system grafana 2>/dev/null
    kubectl --no-headers=true get pvc -n kubesphere-monitoring-system -o custom-columns=:metadata.namespace,:metadata.name | grep -E kubesphere-monitoring-system | xargs -n2 kubectl delete pvc -n 2>/dev/null

    # delete pvc
    pvcs="kubesphere-system|openpitrix-system|kubesphere-devops-system|kubesphere-logging-system"
    kubectl --no-headers=true get pvc --all-namespaces -o custom-columns=:metadata.namespace,:metadata.name | grep -E $pvcs | xargs -n2 kubectl delete pvc -n 2>/dev/null

    # delete rolebindings
    delete_role_bindings() {
      for rolebinding in `kubectl -n $1 get rolebindings -l iam.kubesphere.io/user-ref -o jsonpath="{.items[*].metadata.name}"`
      do
        kubectl -n $1 delete rolebinding $rolebinding 2>/dev/null
      done
    }

    # delete roles
    delete_roles() {
      kubectl -n $1 delete role admin 2>/dev/null
      kubectl -n $1 delete role operator 2>/dev/null
      kubectl -n $1 delete role viewer 2>/dev/null
      for role in `kubectl -n $1 get roles -l iam.kubesphere.io/role-template -o jsonpath="{.items[*].metadata.name}"`
      do
        kubectl -n $1 delete role $role 2>/dev/null
      done
    }

    # remove useless labels and finalizers
    for ns in `kubectl get ns -o jsonpath="{.items[*].metadata.name}"`
    do
      kubectl label ns $ns kubesphere.io/workspace-
      kubectl label ns $ns kubesphere.io/namespace-
      kubectl patch ns $ns -p '{"metadata":{"finalizers":null,"ownerReferences":null}}'
      delete_role_bindings $ns
      delete_roles $ns
    done

    # delete clusterroles
    delete_cluster_roles() {
      for role in `kubectl get clusterrole -l iam.kubesphere.io/role-template -o jsonpath="{.items[*].metadata.name}"`
      do
        kubectl delete clusterrole $role 2>/dev/null
      done

      for role in `kubectl get clusterroles | grep "kubesphere" | awk '{print $1}'| paste -sd " "`
      do
        kubectl delete clusterrole $role 2>/dev/null
      done
    }
    delete_cluster_roles

    # delete clusterrolebindings
    delete_cluster_role_bindings() {
      for rolebinding in `kubectl get clusterrolebindings -l iam.kubesphere.io/role-template -o jsonpath="{.items[*].metadata.name}"`
      do
        kubectl delete clusterrolebindings $rolebinding 2>/dev/null
      done

      for rolebinding in `kubectl get clusterrolebindings | grep "kubesphere" | awk '{print $1}'| paste -sd " "`
      do
        kubectl delete clusterrolebindings $rolebinding 2>/dev/null
      done
    }
    delete_cluster_role_bindings

    # delete clusters
    for cluster in `kubectl get clusters -o jsonpath="{.items[*].metadata.name}"`
    do
      kubectl patch cluster $cluster -p '{"metadata":{"finalizers":null}}' --type=merge
    done
    kubectl delete clusters --all 2>/dev/null

    # delete workspaces
    for ws in `kubectl get workspaces -o jsonpath="{.items[*].metadata.name}"`
    do
      kubectl patch workspace $ws -p '{"metadata":{"finalizers":null}}' --type=merge
    done
    kubectl delete workspaces --all 2>/dev/null

    # make DevOps CRs deletable
    for devops_crd in $(kubectl get crd -o=jsonpath='{range .items[*]}{.metadata.name}{"\n"}{end}' | grep "devops.kubesphere.io"); do
      for ns in $(kubectl get ns -ojsonpath='{.items..metadata.name}'); do
        for devops_res in $(kubectl get $devops_crd -n $ns -oname); do
          kubectl patch $devops_res -n $ns -p '{"metadata":{"finalizers":[]}}' --type=merge
        done
      done
    done

    # delete validatingwebhookconfigurations
    for webhook in ks-events-admission-validate users.iam.kubesphere.io network.kubesphere.io validating-webhook-configuration resourcesquotas.quota.kubesphere.io
    do
      kubectl delete validatingwebhookconfigurations.admissionregistration.k8s.io $webhook 2>/dev/null
    done

    # delete mutatingwebhookconfigurations
    for webhook in ks-events-admission-mutate logsidecar-injector-admission-mutate mutating-webhook-configuration
    do
      kubectl delete mutatingwebhookconfigurations.admissionregistration.k8s.io $webhook 2>/dev/null
    done

    # delete users
    for user in `kubectl get users -o jsonpath="{.items[*].metadata.name}"`
    do
      kubectl patch user $user -p '{"metadata":{"finalizers":null}}' --type=merge
    done
    kubectl delete users --all 2>/dev/null

    # delete helm resources
    for resource_type in `echo helmcategories helmapplications helmapplicationversions helmrepos helmreleases`; do
      for resource_name in `kubectl get ${resource_type}.application.kubesphere.io -o jsonpath="{.items[*].metadata.name}"`; do
        kubectl patch ${resource_type}.application.kubesphere.io ${resource_name} -p '{"metadata":{"finalizers":null}}' --type=merge
      done
      kubectl delete ${resource_type}.application.kubesphere.io --all 2>/dev/null
    done

    # delete workspacetemplates
    for workspacetemplate in `kubectl get workspacetemplates.tenant.kubesphere.io -o jsonpath="{.items[*].metadata.name}"`
    do
      kubectl patch workspacetemplates.tenant.kubesphere.io $workspacetemplate -p '{"metadata":{"finalizers":null}}' --type=merge
    done
    kubectl delete workspacetemplates.tenant.kubesphere.io --all 2>/dev/null

    # delete federatednamespaces in namespace kubesphere-monitoring-federated
    for resource in $(kubectl get federatednamespaces.types.kubefed.io -n kubesphere-monitoring-federated -oname); do
      kubectl patch "${resource}" -p '{"metadata":{"finalizers":null}}' --type=merge -n kubesphere-monitoring-federated
    done

    # delete crds
    for crd in `kubectl get crds -o jsonpath="{.items[*].metadata.name}"`
    do
      if [[ $crd == *kubesphere.io ]] || [[ $crd == *kubefed.io ]] ; then kubectl delete crd $crd 2>/dev/null; fi
    done

    # delete relevance ns
    for ns in kube-federation-system kubesphere-alerting-system kubesphere-controls-system kubesphere-devops-system kubesphere-devops-worker kubesphere-logging-system kubesphere-monitoring-system kubesphere-monitoring-federated openpitrix-system kubesphere-system
    do
      kubectl delete ns $ns 2>/dev/null
    done

- Rancher: https://www.rancher.cn/

- Kuboard: https://www.kuboard.cn/
