1. Preface

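A while ago, while upgrading Hive from 1.1.0 to 2.3.6, I ran into a privilege compatibility problem.

(For the upgrade itself, see my post "Hive 1.1.0 to 2.3.6 upgrade: pitfalls".)

Hive 1.1.0 uses AuthorizerV1, while Hive 2.3.6 defaults to AuthorizerV2, and the two differ greatly.

AuthorizerV2's privilege checks are much stricter. If you have been authorizing with V1 and want to move to V2, some code has to be changed; the patch I submitted, HIVE-22830, is offered as a reference.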

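2. Authorization

The SQL syntax for granting and querying privileges is the same in v1 and v2, so the systems we built around Hive grants needed no code changes.

v1 authorization

(org.apache.hadoop.hive.ql.security.authorization.DefaultHiveAuthorizationProvider): official documentation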

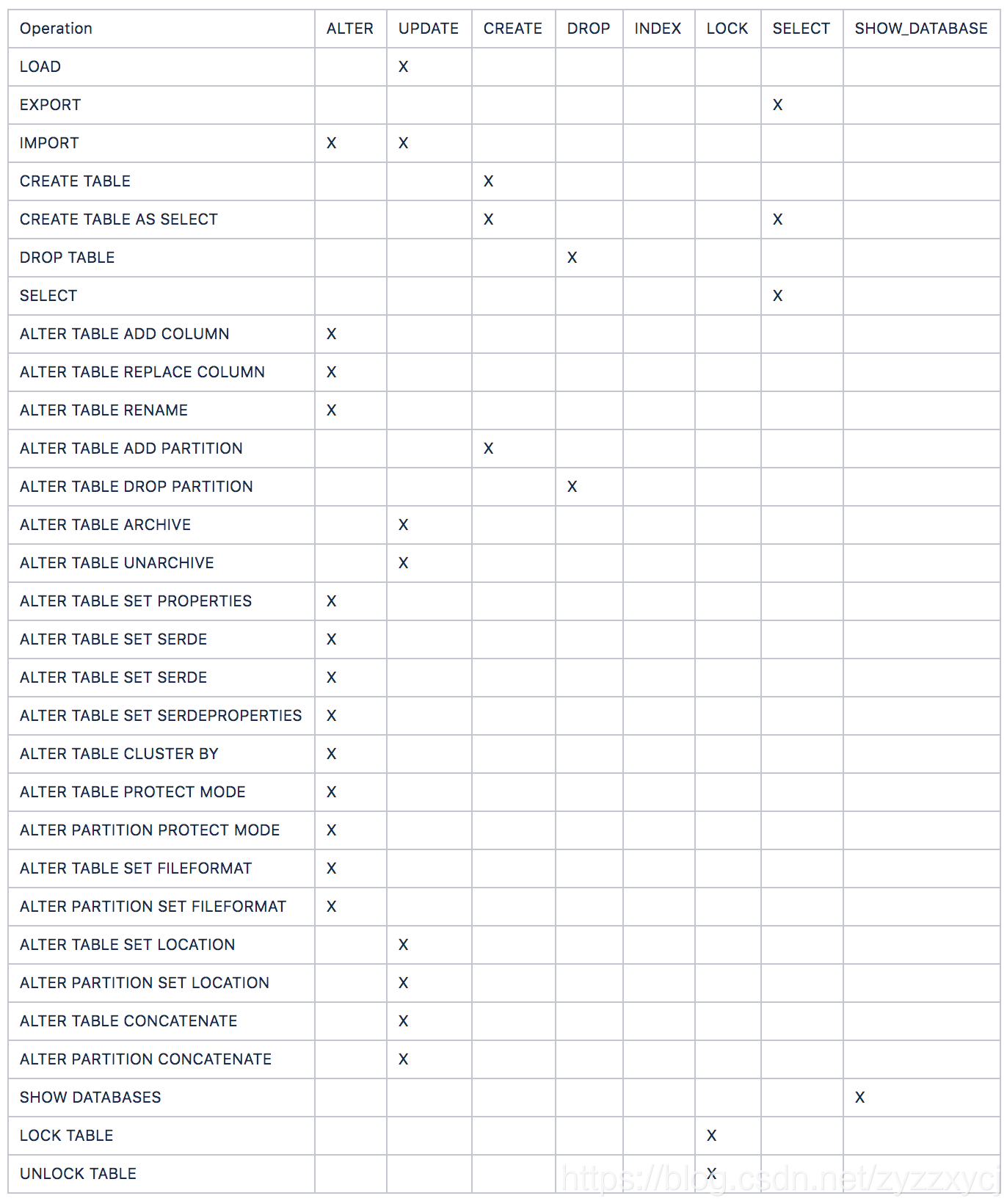

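The privilege types are ALL | ALTER | UPDATE | CREATE | DROP | INDEX | LOCK | SELECT | SHOW_DATABASE.

The privileges required by each Hive operation are listed in the following table:

v2 authorization

(org.apache.hadoop.hive.ql.security.authorization.plugin.sqlstd.SQLStdHiveAuthorizerFactory): official documentation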

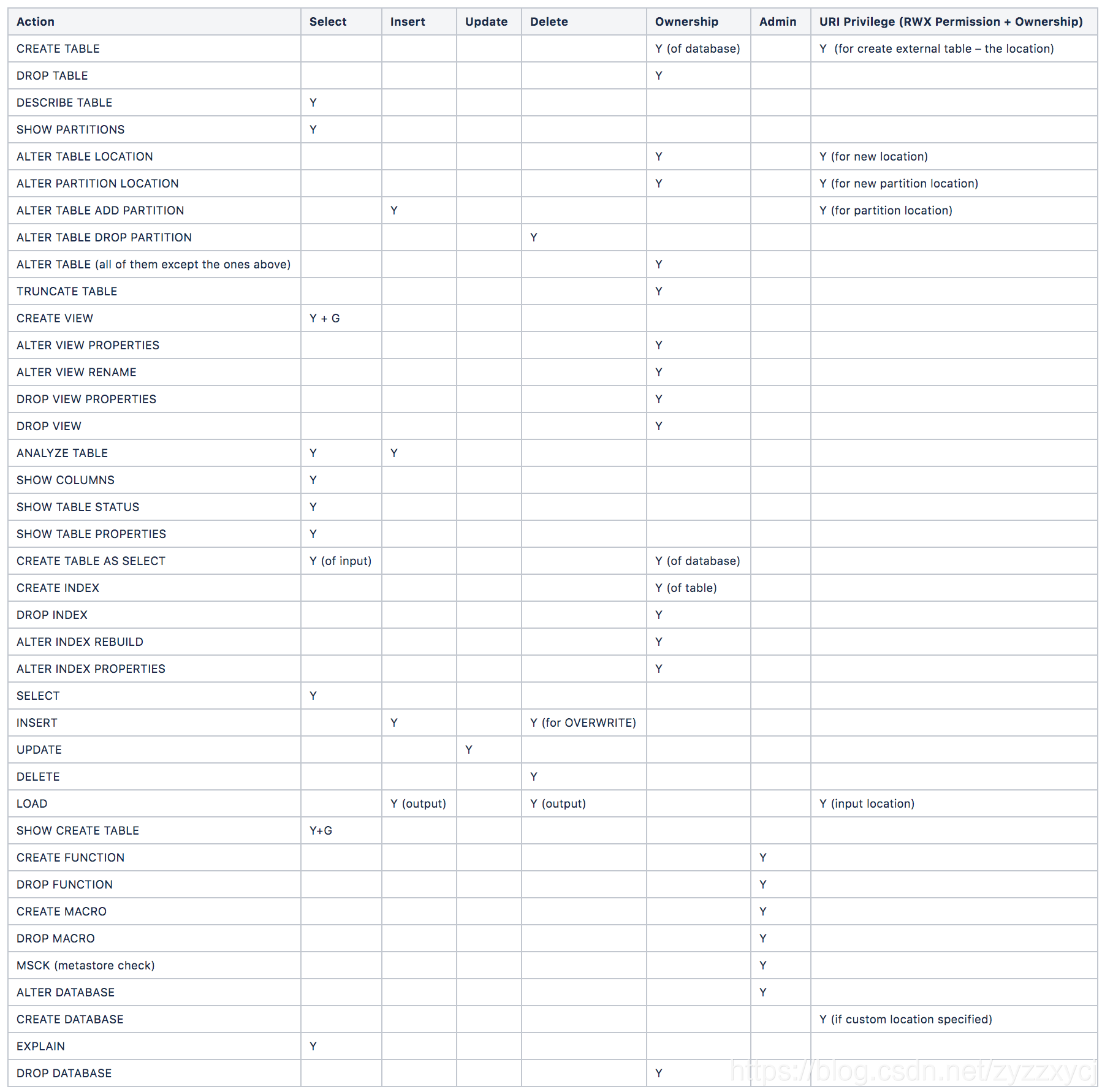

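The privilege types are INSERT | SELECT | UPDATE | DELETE | ALL.

The privileges required by each Hive operation are listed in the following table:

Y means the privilege is required.

Y+G means the privilege is required and must also have been granted with the right to grant it to others ("WITH GRANT OPTION").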
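To make the Y versus Y+G distinction concrete, here is a minimal sketch. It is a toy model, not Hive's own classes: `GrantOptionDemo`, `Priv`, and `satisfies` are hypothetical names introduced only for illustration.

```java
import java.util.EnumMap;
import java.util.Map;

// Hypothetical model (not Hive code) of the "Y" and "Y+G" requirements.
final class GrantOptionDemo {
    enum Priv { INSERT, SELECT, UPDATE, DELETE }

    // 'held' maps each privilege the user has to whether it carries the grant option.
    static boolean satisfies(Map<Priv, Boolean> held, Priv required, boolean needGrantOption) {
        Boolean grantOption = held.get(required);
        if (grantOption == null) {
            return false; // the privilege is not held at all
        }
        // "Y": holding it is enough. "Y+G": it must also carry the grant option.
        return !needGrantOption || grantOption;
    }

    public static void main(String[] args) {
        Map<Priv, Boolean> held = new EnumMap<>(Priv.class);
        held.put(Priv.SELECT, false); // plain SELECT
        held.put(Priv.INSERT, true);  // INSERT granted WITH GRANT OPTION

        System.out.println(satisfies(held, Priv.SELECT, false)); // "Y" on SELECT
        System.out.println(satisfies(held, Priv.SELECT, true));  // "Y+G" on SELECT
        System.out.println(satisfies(held, Priv.INSERT, true));  // "Y+G" on INSERT
    }
}
```

The point of the sketch is only that Y+G is a strictly stronger requirement than Y on the same privilege.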

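Here are some detailed differences between the two:

1. In v2, ALL is split into INSERT | SELECT | UPDATE | DELETE when it is stored in MySQL, whereas v1 stores ALL as is.

2. In v1, a grant on a database gives the user/role the corresponding privileges on every table in that database, whereas v2 requires a grant on each table individually.

3. In v1, dropping a database/table only requires the corresponding DROP privilege; in v2 you must be the owner of that database/table, and the owner can be a user or a role.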
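Differences 1 and 3 can be sketched as two small functions. This is a toy model under my own assumptions, not Hive's storage or authorization code; `V1V2DifferenceDemo`, `storedPrivileges`, and `canDrop` are hypothetical names.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Locale;

// Hypothetical model (not Hive code) of two v1/v2 differences described above.
final class V1V2DifferenceDemo {
    // The individual v2 privilege types listed in this article.
    static final List<String> V2_TYPES = Arrays.asList("INSERT", "SELECT", "UPDATE", "DELETE");

    // Difference 1: v1 keeps ALL as a single entry, v2 expands it.
    static List<String> storedPrivileges(String granted, boolean v2) {
        if (v2 && "ALL".equalsIgnoreCase(granted)) {
            return V2_TYPES;
        }
        return Collections.singletonList(granted.toUpperCase(Locale.ROOT));
    }

    // Difference 3: v1 looks at the DROP privilege, v2 looks at ownership.
    static boolean canDrop(boolean v2, boolean hasDropPrivilege, boolean isOwner) {
        return v2 ? isOwner : hasDropPrivilege;
    }

    public static void main(String[] args) {
        System.out.println(storedPrivileges("ALL", false));
        System.out.println(storedPrivileges("ALL", true));
        // A user holding DROP who is not the owner:
        System.out.println(canDrop(false, true, false));
        System.out.println(canDrop(true, true, false));
    }
}
```

This is why grants that worked under v1 can start failing after the upgrade: a non-owner with DROP passes the v1 rule but not the v2 rule.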

3. Source code walkthrough (Hive 3.1)

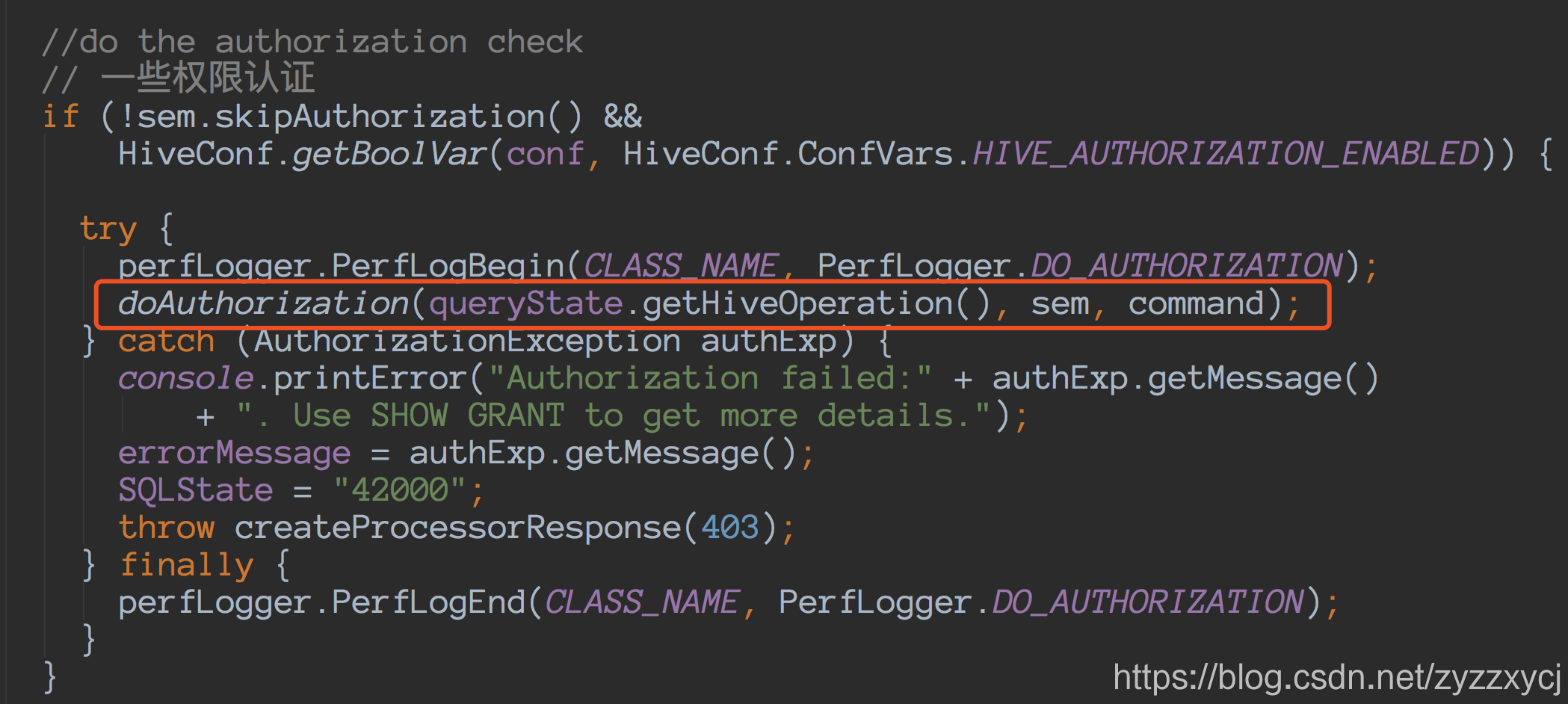

My post "Hive SQL execution: a full source code walkthrough" introduced the entry point for authorization in Driver.compile:

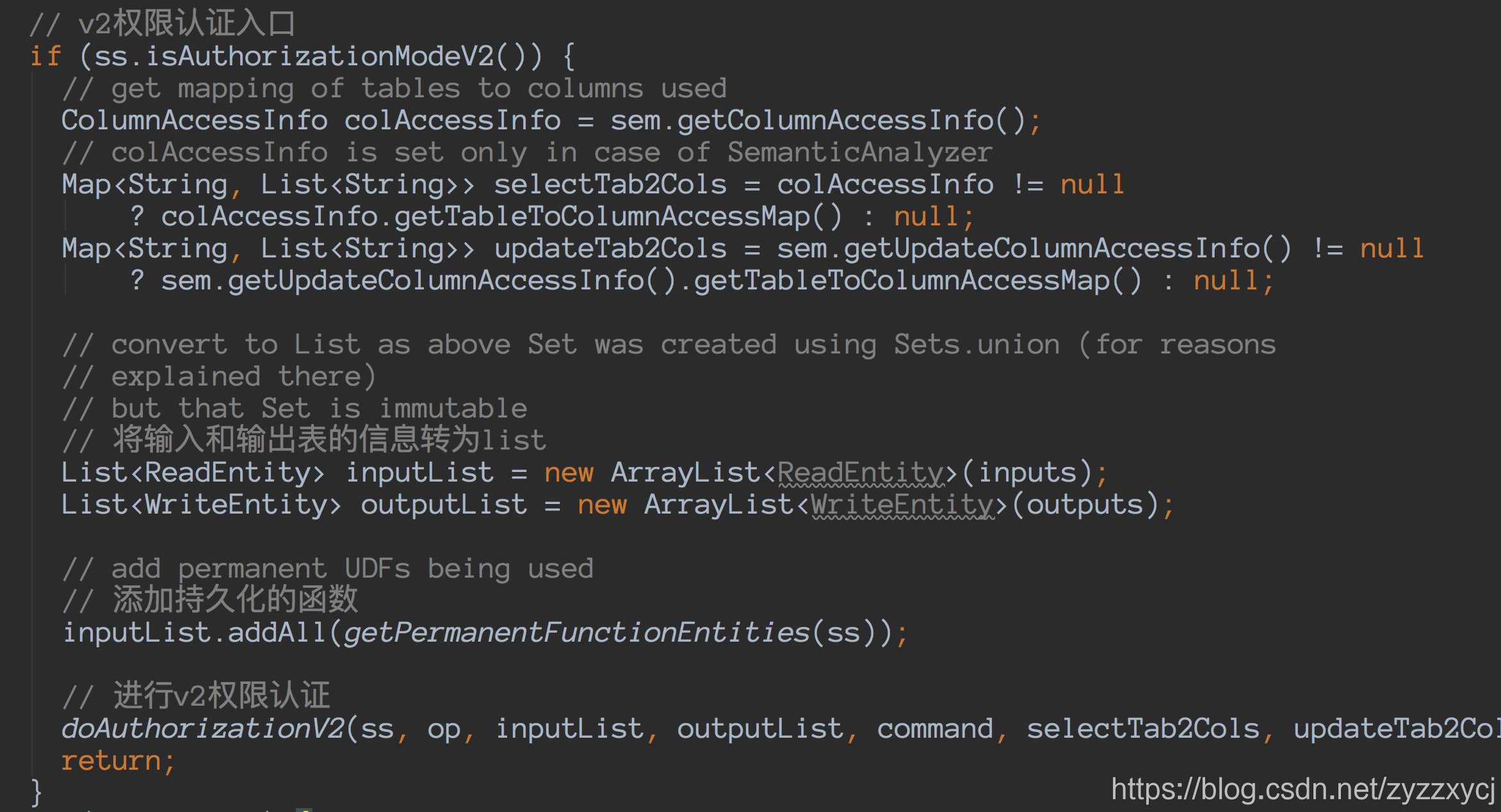

Next, let's focus on how v2 authorization is implemented:

How is it decided whether v1 or v2 is used?

if (ss.isAuthorizationModeV2())

-> public boolean isAuthorizationModeV2(){

return getAuthorizationMode() == AuthorizationMode.V2;

}

--> public AuthorizationMode getAuthorizationMode(){

setupAuth();

// After setupAuth() has done its initialization, check whether authorizer is null

// v1 is checked first

if(authorizer != null){

return AuthorizationMode.V1;

}else if(authorizerV2 != null){

return AuthorizationMode.V2;

}

}
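As quoted, the excerpt has no return on the path where both fields are null, so it would not compile on its own. Below is a self-contained sketch of the same selection logic with that path made explicit. The class `AuthModeDemo` and the choice of `IllegalStateException` are my assumptions for illustration, not what Hive necessarily does.

```java
// Hypothetical stand-in for the session's mode selection (not Hive code).
final class AuthModeDemo {
    enum AuthorizationMode { V1, V2 }

    private final Object authorizer;   // v1 provider, or null
    private final Object authorizerV2; // v2 authorizer, or null

    AuthModeDemo(Object authorizer, Object authorizerV2) {
        this.authorizer = authorizer;
        this.authorizerV2 = authorizerV2;
    }

    AuthorizationMode getAuthorizationMode() {
        // v1 wins whenever a v1 provider was created
        if (authorizer != null) {
            return AuthorizationMode.V1;
        }
        if (authorizerV2 != null) {
            return AuthorizationMode.V2;
        }
        // Assumption: fail loudly if neither plugin was initialized
        throw new IllegalStateException("Authorization plugins not initialized");
    }

    public static void main(String[] args) {
        System.out.println(new AuthModeDemo(new Object(), null).getAuthorizationMode());
        System.out.println(new AuthModeDemo(null, new Object()).getAuthorizationMode());
    }
}
```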

setupAuth():

/**

* Setup authentication and authorization plugins for this session.

*/

private void setupAuth() {

// Already initialized, so return immediately

if (authenticator != null) {

// auth has been initialized

return;

}

try {

// The hive.security.authenticator.manager parameter decides which authenticator is used

authenticator = HiveUtils.getAuthenticator(sessionConf,

HiveConf.ConfVars.HIVE_AUTHENTICATOR_MANAGER);

authenticator.setSessionState(this);

// Read hive.security.authorization.manager, the class name of the authorization implementation

// This decides whether v1 or v2 is used

// Hive 1 defaults to v1: (org.apache.hadoop.hive.ql.security.authorization.DefaultHiveAuthorizationProvider)

// Hive 2 and later default to v2: (org.apache.hadoop.hive.ql.security.authorization.plugin.sqlstd.SQLStdHiveAuthorizerFactory)

String clsStr = HiveConf.getVar(sessionConf, HiveConf.ConfVars.HIVE_AUTHORIZATION_MANAGER);

// Try to instantiate the authorization class via reflection

// Produces a HiveAuthorizationProvider object (v1)

authorizer = HiveUtils.getAuthorizeProviderManager(sessionConf,

clsStr, authenticator, true);

if (authorizer == null) {

// if it was null, the new (V2) authorization plugin must be specified in

// config

// Implemented much like v1: try to instantiate the authorization class via reflection

HiveAuthorizerFactory authorizerFactory = HiveUtils.getAuthorizerFactory(sessionConf,

HiveConf.ConfVars.HIVE_AUTHORIZATION_MANAGER);

HiveAuthzSessionContext.Builder authzContextBuilder = new HiveAuthzSessionContext.Builder();

authzContextBuilder.setClientType(isHiveServerQuery() ? CLIENT_TYPE.HIVESERVER2

: CLIENT_TYPE.HIVECLI);

authzContextBuilder.setSessionString(getSessionId());

// Produces a HiveAuthorizer object (v2)

authorizerV2 = authorizerFactory.createHiveAuthorizer(new HiveMetastoreClientFactoryImpl(),

sessionConf, authenticator, authzContextBuilder.build());

setAuthorizerV2Config();

}

// create the create table grants with new config

createTableGrants = CreateTableAutomaticGrant.create(sessionConf);

} catch (HiveException e) {

LOG.error("Error setting up authorization: " + e.getMessage(), e);

throw new RuntimeException(e);

}

if(LOG.isDebugEnabled()){

Object authorizationClass = getActiveAuthorizer();

LOG.debug("Session is using authorization class " + authorizationClass.getClass());

}

return;

}
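The shape of setupAuth() is "one configured class name, try it as a v1 provider first, otherwise treat it as a v2 factory". The sketch below shows that pattern in isolation. Every type in it (`LegacyProvider`, `AuthorizerFactory`, the two sample classes) is a hypothetical stand-in, and the details of how Hive's HiveUtils helpers load classes are not reproduced here.

```java
// Hypothetical sketch of "try v1 via reflection, else fall back to a v2 factory".
final class PluginLoaderDemo {
    interface LegacyProvider { }                                // stand-in for the v1 interface
    interface AuthorizerFactory { Object createAuthorizer(); }  // stand-in for the v2 factory

    static class SampleLegacyProvider implements LegacyProvider { }

    static class SampleFactory implements AuthorizerFactory {
        public Object createAuthorizer() { return new Object(); }
    }

    // Instantiate the configured class through its no-argument constructor.
    private static Object instantiate(String className) {
        try {
            return Class.forName(className).getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot instantiate " + className, e);
        }
    }

    // Returns the v1 provider, or null when the class is not a v1 provider.
    static LegacyProvider loadLegacyOrNull(String className) {
        Object plugin = instantiate(className);
        return plugin instanceof LegacyProvider ? (LegacyProvider) plugin : null;
    }

    static String resolveMode(String className) {
        if (loadLegacyOrNull(className) != null) {
            return "V1";
        }
        // Not a v1 provider, so the same class name must be a v2 factory
        AuthorizerFactory factory = (AuthorizerFactory) instantiate(className);
        factory.createAuthorizer();
        return "V2";
    }

    public static void main(String[] args) {
        System.out.println(resolveMode(SampleLegacyProvider.class.getName()));
        System.out.println(resolveMode(SampleFactory.class.getName()));
    }
}
```

One configuration key therefore selects the mode implicitly: the type of the configured class decides which branch runs.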

Now on to the v2 implementation:

ss.getAuthorizerV2().checkPrivileges(hiveOpType, inputsHObjs, outputHObjs, authzContextBuilder.build());

-> public void checkPrivileges(HiveOperationType hiveOpType, List<HivePrivilegeObject> inputHObjs,

List<HivePrivilegeObject> outputHObjs, HiveAuthzContext context)

throws HiveAuthzPluginException, HiveAccessControlException {

authValidator.checkPrivileges(hiveOpType, inputHObjs, outputHObjs, context);

}

--> public void checkPrivileges(HiveOperationType hiveOpType, List<HivePrivilegeObject> inputHObjs,

List<HivePrivilegeObject> outputHObjs, HiveAuthzContext context)

throws HiveAuthzPluginException, HiveAccessControlException {

if (LOG.isDebugEnabled()) {

String msg = "Checking privileges for operation " + hiveOpType + " by user "

+ authenticator.getUserName() + " on " + " input objects " + inputHObjs

+ " and output objects " + outputHObjs + ". Context Info: " + context;

LOG.debug(msg);

}

String userName = authenticator.getUserName();

// Get the entry point to the metastore API

IMetaStoreClient metastoreClient = metastoreClientFactory.getHiveMetastoreClient();

// check privileges on input and output objects

List<String> deniedMessages = new ArrayList<String>();

// Authorize inputs and outputs separately; failures are collected in deniedMessages

checkPrivileges(hiveOpType, inputHObjs, metastoreClient, userName, IOType.INPUT, deniedMessages);

checkPrivileges(hiveOpType, outputHObjs, metastoreClient, userName, IOType.OUTPUT, deniedMessages);

// If deniedMessages is not empty, privileges are insufficient and an exception is thrown

SQLAuthorizationUtils.assertNoDeniedPermissions(new HivePrincipal(userName,

HivePrincipalType.USER), hiveOpType, deniedMessages);

}
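The collect-then-assert structure of checkPrivileges can be sketched on its own. This is a simplified model with assumptions: object names are plain strings, inputs always require SELECT and outputs always require INSERT (in Hive the required privileges depend on the operation type), and `SecurityException` stands in for Hive's access control exception.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch (not Hive code): gather every denial, then fail once.
final class DeniedMessagesDemo {
    enum IOType { INPUT, OUTPUT }

    // 'held' maps an object name to the privileges the user has on it.
    static void check(List<String> objects, String required, Map<String, Set<String>> held,
                      IOType ioType, List<String> deniedMessages) {
        for (String obj : objects) {
            Set<String> privs = held.getOrDefault(obj, Collections.<String>emptySet());
            if (!privs.contains(required)) {
                deniedMessages.add(required + " on " + obj + " (" + ioType + ")");
            }
        }
    }

    static void checkPrivileges(List<String> inputs, List<String> outputs,
                                Map<String, Set<String>> held) {
        List<String> deniedMessages = new ArrayList<>();
        // Assumption: fixed requirements per side, for illustration only
        check(inputs, "SELECT", held, IOType.INPUT, deniedMessages);
        check(outputs, "INSERT", held, IOType.OUTPUT, deniedMessages);
        if (!deniedMessages.isEmpty()) {
            throw new SecurityException("Permission denied: " + deniedMessages);
        }
    }
}
```

Collecting first and throwing once means a single error can report every missing privilege, instead of stopping at the first one.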

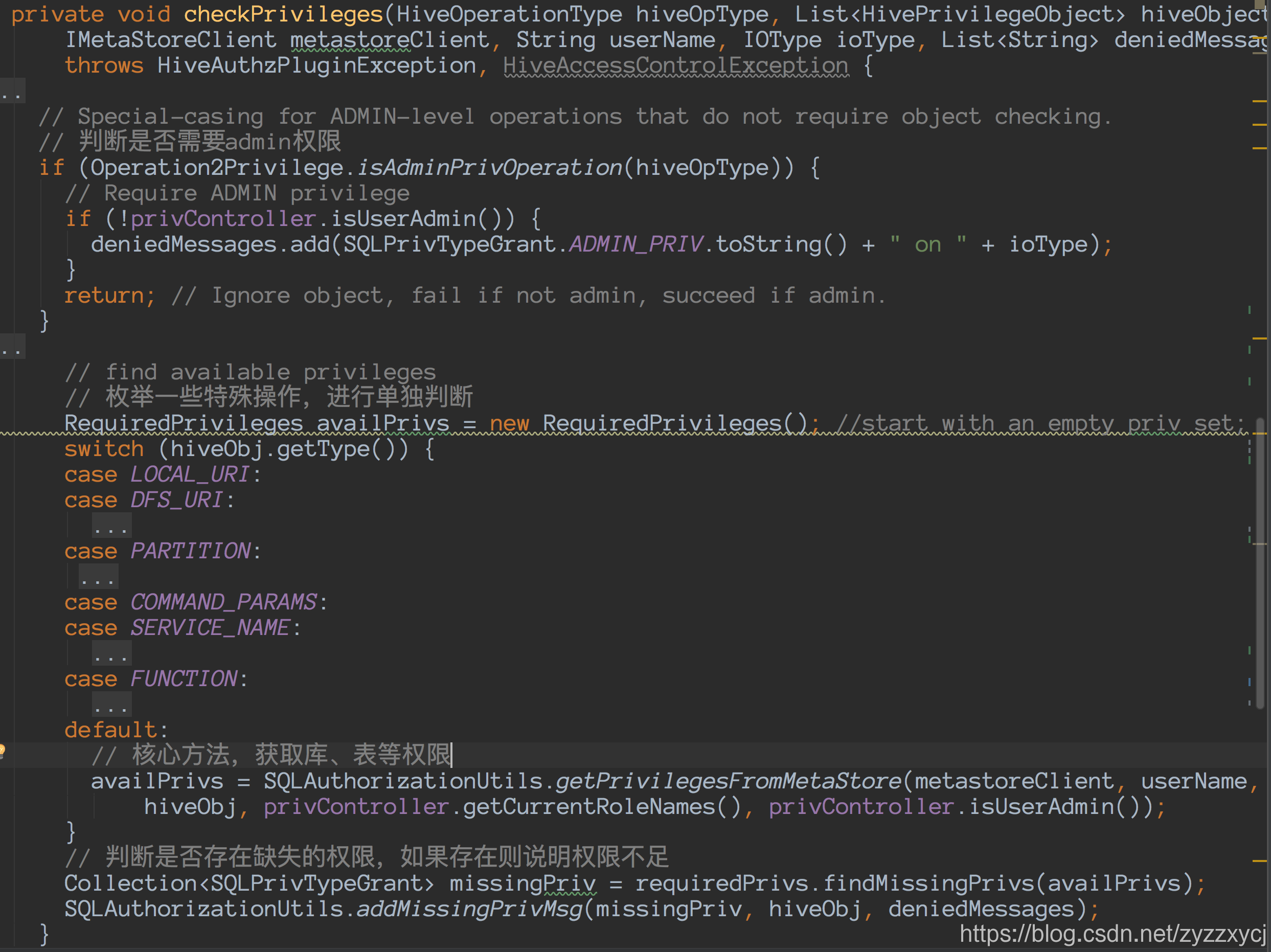

checkPrivileges:

getPrivilegesFromMetaStore:

...

thrifPrivs = metastoreClient.get_privilege_set(objectRef, userName, null);

-> public PrincipalPrivilegeSet get_privilege_set(HiveObjectRef hiveObject,

String userName, List<String> groupNames) throws MetaException,

TException {

if (!hiveObject.isSetCatName()) {

hiveObject.setCatName(getDefaultCatalog(conf));

}

// Call the metastore

return client.get_privilege_set(hiveObject, userName, groupNames);

}

...

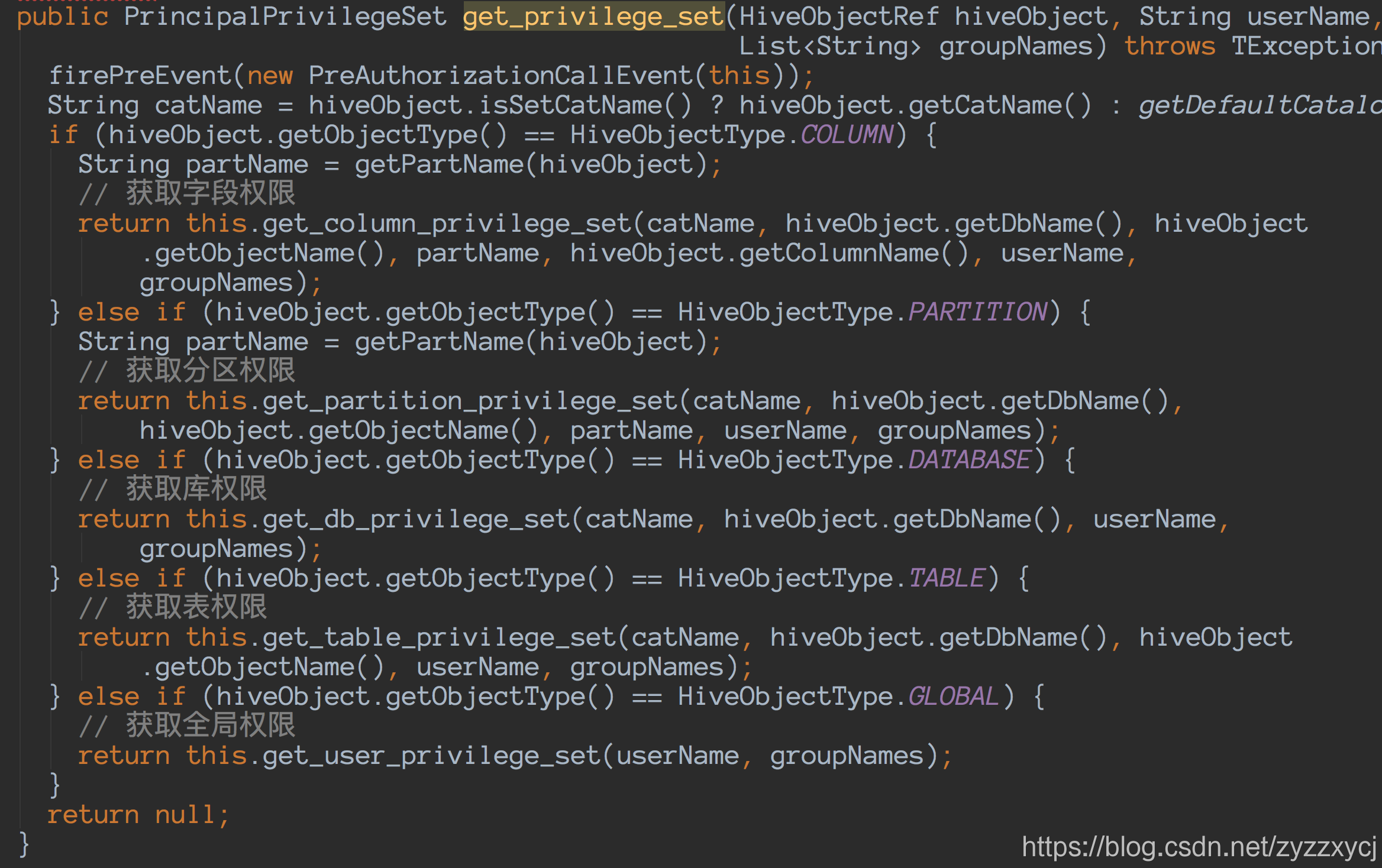

get_privilege_set:

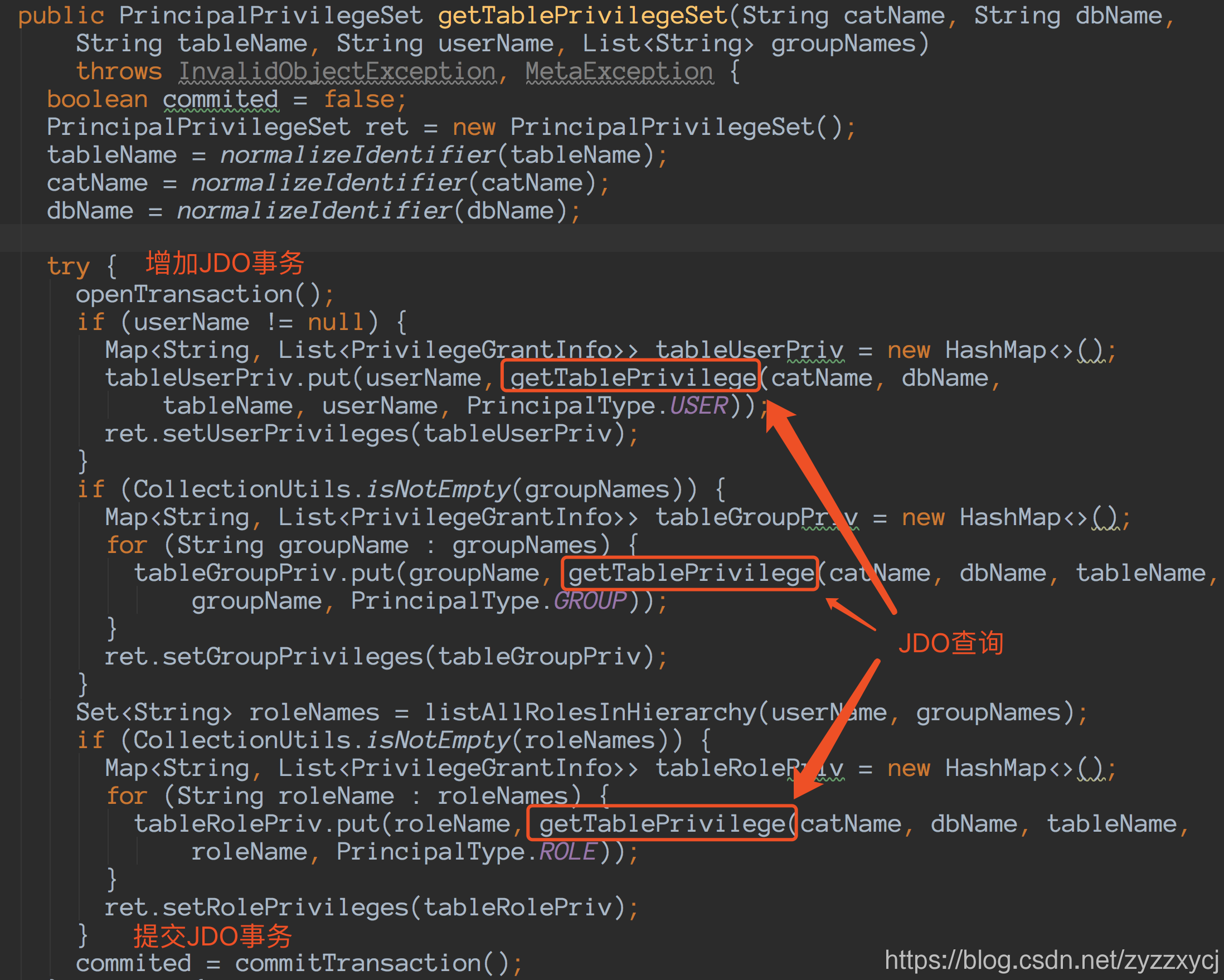

Taking table privileges as the example, get_table_privilege_set:

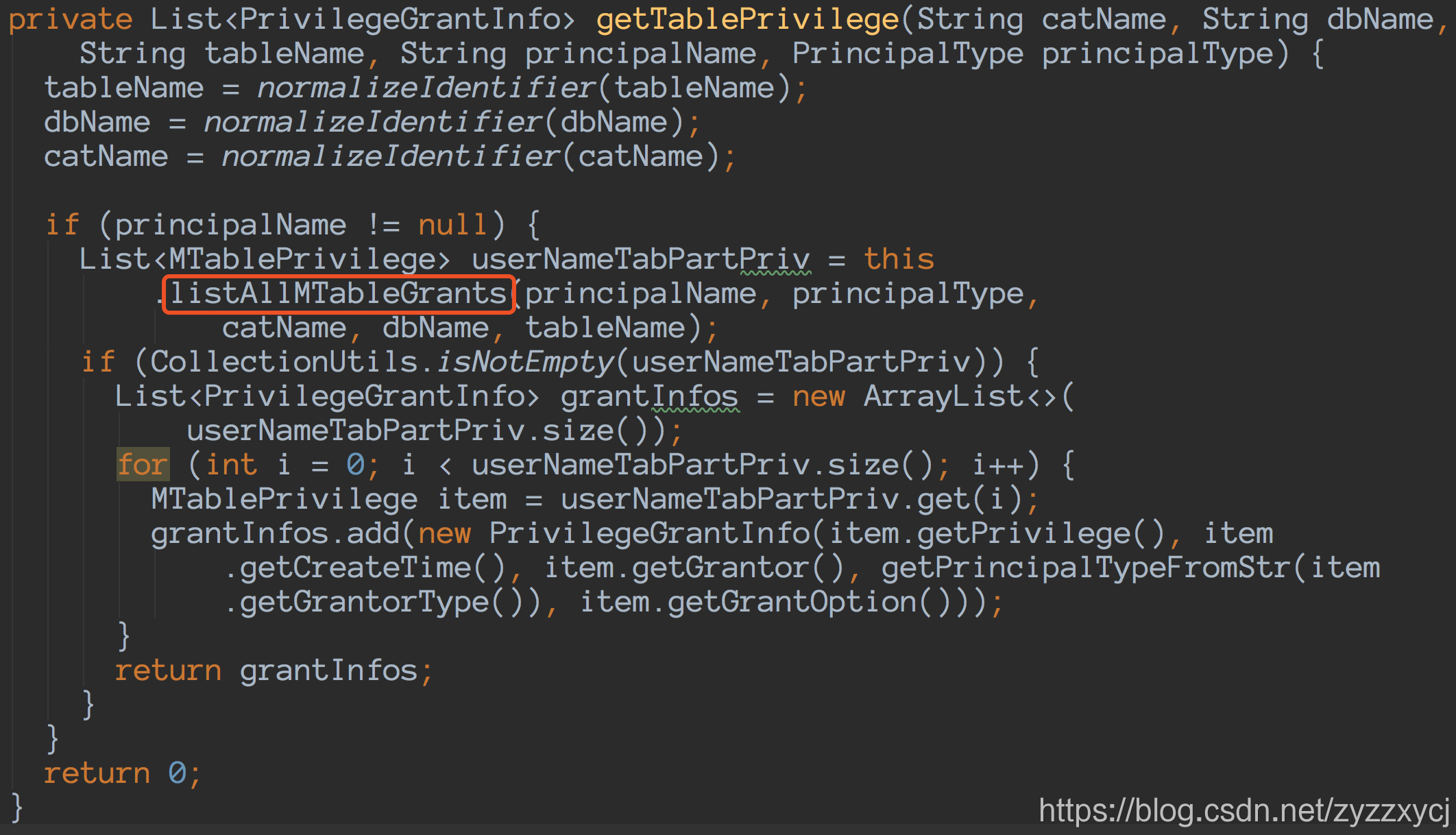

getTablePrivilege:

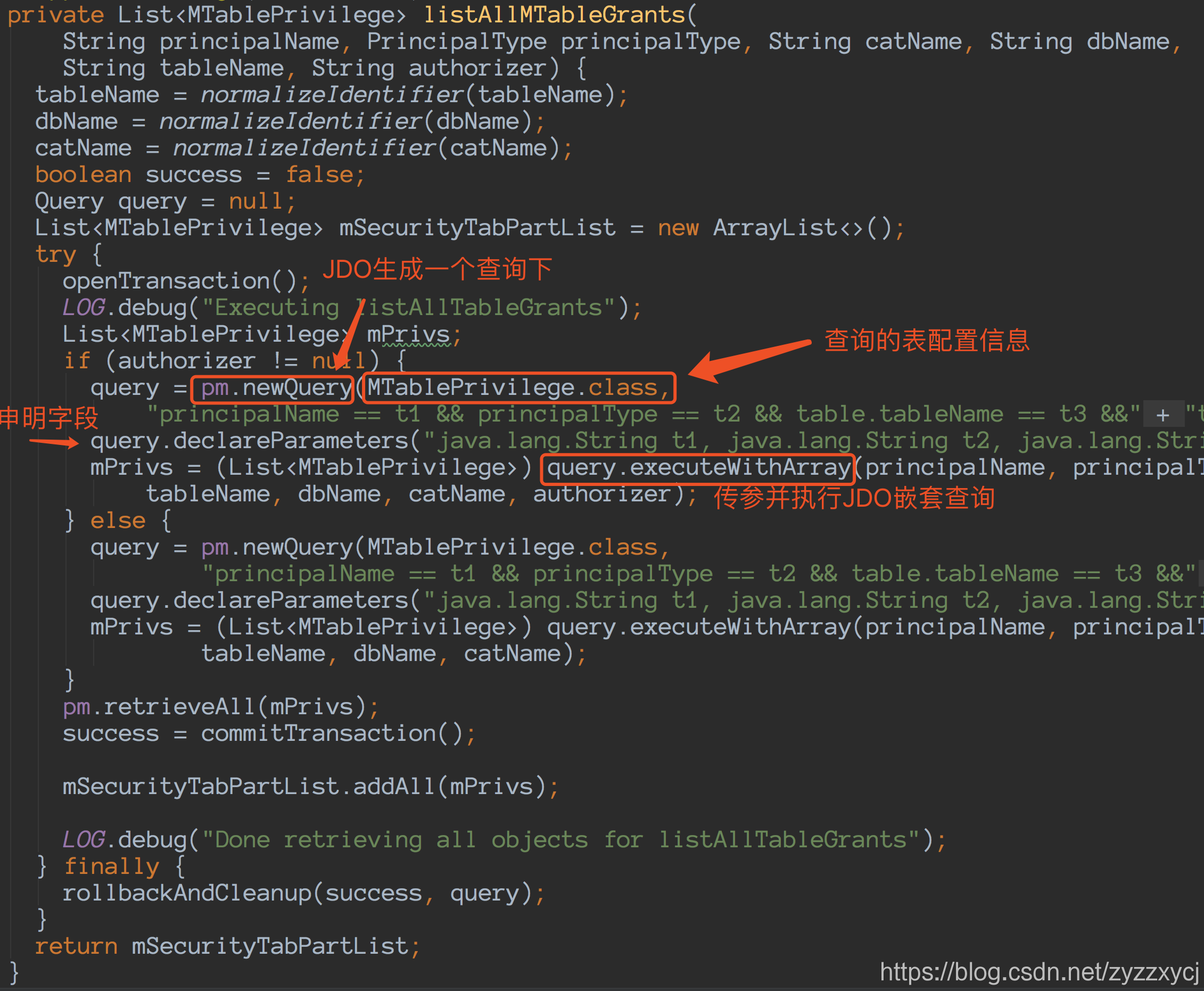

listAllMTableGrants:

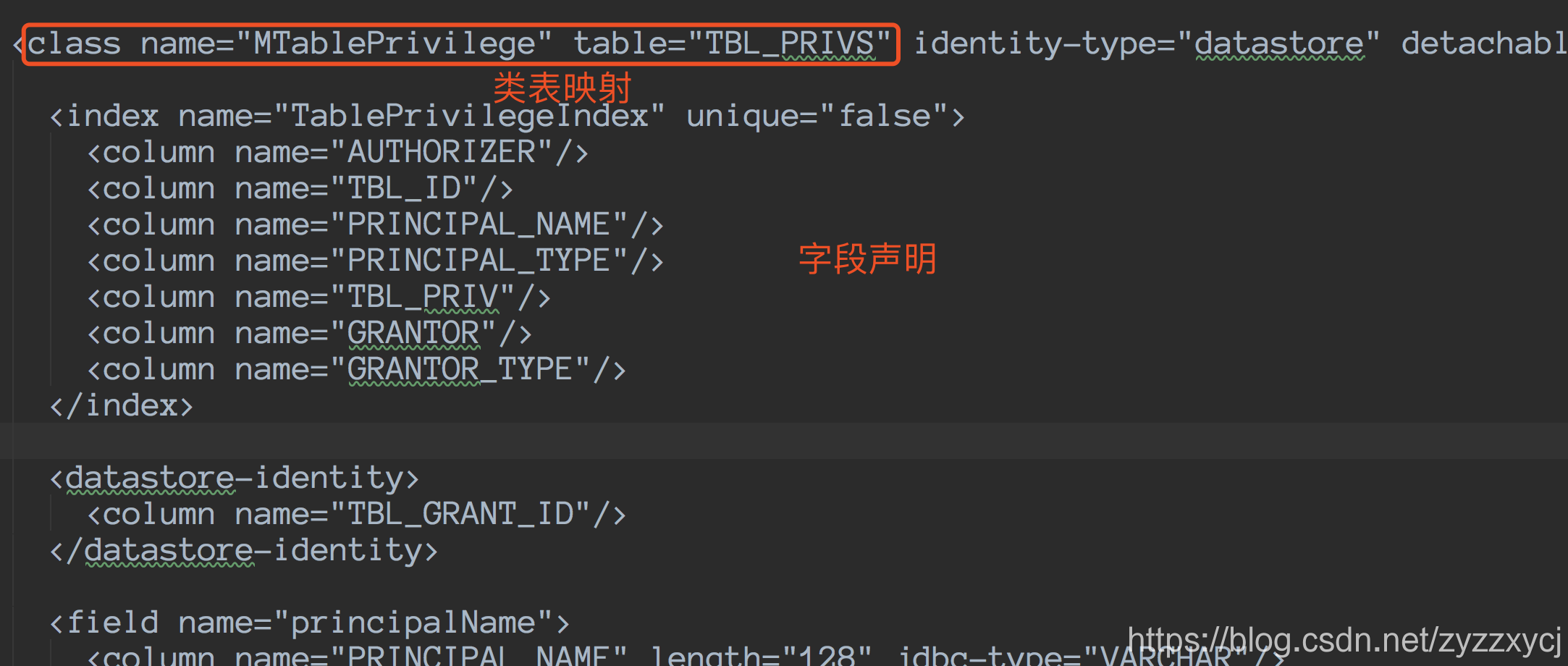

package.jdo:

That covers the core of the authorization code. If you are not very familiar with JDO, take a look at the official JDOQL documentation.

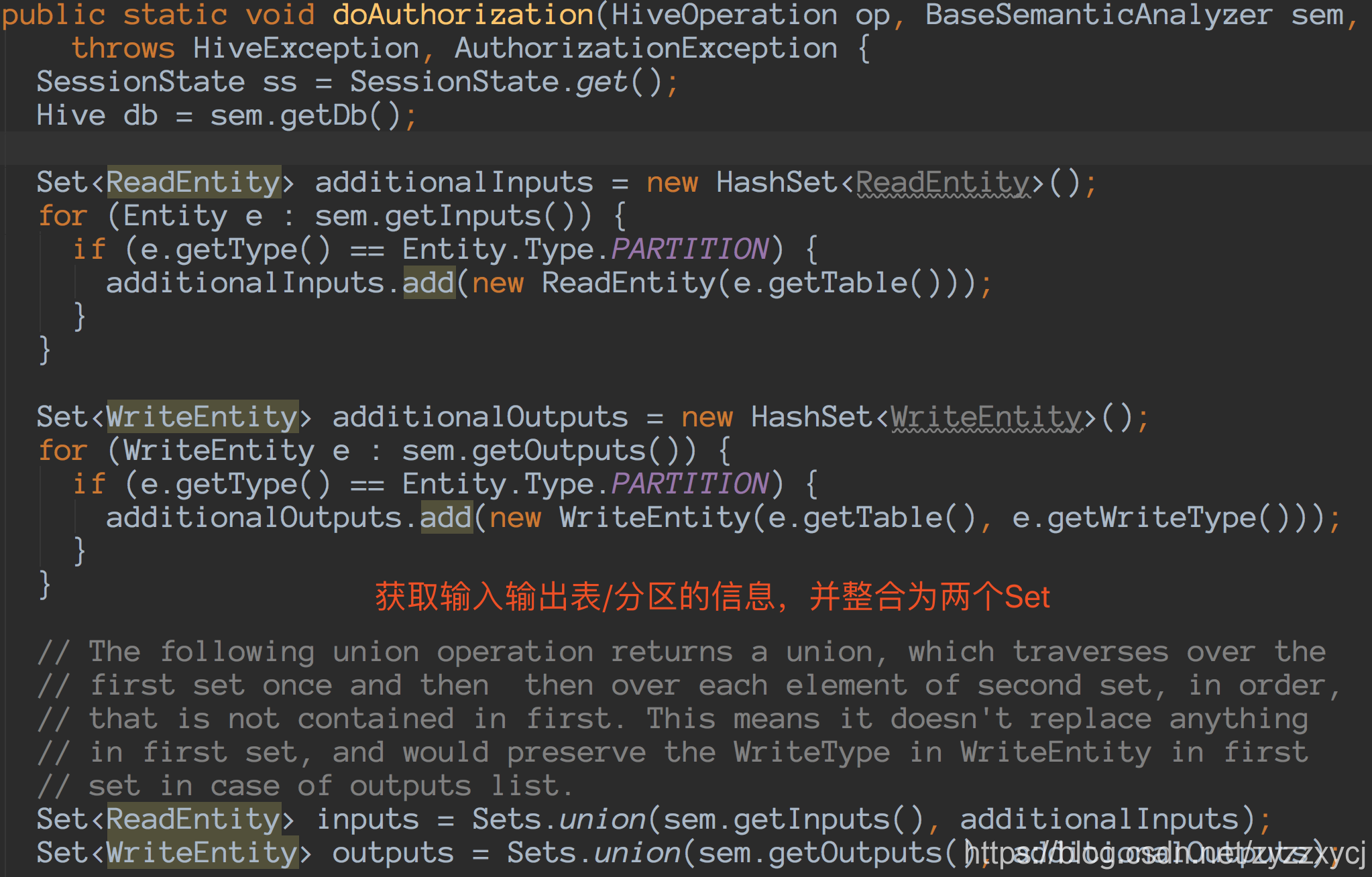

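Back in Driver.doAuthorization: if the v2 path is not taken, v1 authorization runs by default. Its underlying implementation works the same way as v2's: it connects to the metastore, queries the database for privilege information, and compares.

// Perform v1 authorization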

HiveAuthorizationProvider authorizer = ss.getAuthorizer();

if (op.equals(HiveOperation.CREATEDATABASE)) {

// Authorize database creation

authorizer.authorize(

op.getInputRequiredPrivileges(), op.getOutputRequiredPrivileges());

} else if (op.equals(HiveOperation.CREATETABLE_AS_SELECT)

|| op.equals(HiveOperation.CREATETABLE)) {

// Authorize table creation

authorizer.authorize(

db.getDatabase(SessionState.get().getCurrentDatabase()), null,

HiveOperation.CREATETABLE_AS_SELECT.getOutputRequiredPrivileges());

} else {

if (op.equals(HiveOperation.IMPORT)) {

ImportSemanticAnalyzer isa = (ImportSemanticAnalyzer) sem;

if (!isa.existsTable()) {

// Authorize import

authorizer.authorize(

db.getDatabase(SessionState.get().getCurrentDatabase()), null,

HiveOperation.CREATETABLE_AS_SELECT.getOutputRequiredPrivileges());

}

}

}

if (outputs != null && outputs.size() > 0) {

for (WriteEntity write : outputs) {

if (write.isDummy() || write.isPathType()) {

continue;

}

if (write.getType() == Entity.Type.DATABASE) {

if (!op.equals(HiveOperation.IMPORT)){

// We skip DB check for import here because we already handle it above

// as a CTAS check.

// Authorize writes to a database

authorizer.authorize(write.getDatabase(),

null, op.getOutputRequiredPrivileges());

}

continue;

}

if (write.getType() == WriteEntity.Type.PARTITION) {

Partition part = db.getPartition(write.getTable(), write

.getPartition().getSpec(), false);

if (part != null) {

// Authorize writes to a partition

authorizer.authorize(write.getPartition(), null,

op.getOutputRequiredPrivileges());

continue;

}

}

// Authorize writes to a table

if (write.getTable() != null) {

authorizer.authorize(write.getTable(), null,

op.getOutputRequiredPrivileges());

}

}

}

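// What follows authorizes reads; I won't comment it line by line.

// As with v2, the underlying implementation connects to the metastore, queries the database for privilege information, and compares.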

if (inputs != null && inputs.size() > 0) {

Map<Table, List<String>> tab2Cols = new HashMap<Table, List<String>>();

Map<Partition, List<String>> part2Cols = new HashMap<Partition, List<String>>();

//determine if partition level privileges should be checked for input tables

Map<String, Boolean> tableUsePartLevelAuth = new HashMap<String, Boolean>();

for (ReadEntity read : inputs) {

if (read.isDummy() || read.isPathType() || read.getType() == Entity.Type.DATABASE) {

continue;

}

Table tbl = read.getTable();

if ((read.getPartition() != null) || (tbl != null && tbl.isPartitioned())) {

String tblName = tbl.getTableName();

if (tableUsePartLevelAuth.get(tblName) == null) {

boolean usePartLevelPriv = (tbl.getParameters().get(

"PARTITION_LEVEL_PRIVILEGE") != null && ("TRUE"

.equalsIgnoreCase(tbl.getParameters().get(

"PARTITION_LEVEL_PRIVILEGE"))));

if (usePartLevelPriv) {

tableUsePartLevelAuth.put(tblName, Boolean.TRUE);

} else {

tableUsePartLevelAuth.put(tblName, Boolean.FALSE);

}

}

}

}

// column authorization is checked through table scan operators.

getTablePartitionUsedColumns(op, sem, tab2Cols, part2Cols, tableUsePartLevelAuth);

// cache the results for table authorization

Set<String> tableAuthChecked = new HashSet<String>();

for (ReadEntity read : inputs) {

// if read is not direct, we do not need to check its autho.

if (read.isDummy() || read.isPathType() || !read.isDirect()) {

continue;

}

if (read.getType() == Entity.Type.DATABASE) {

authorizer.authorize(read.getDatabase(), op.getInputRequiredPrivileges(), null);

continue;

}

Table tbl = read.getTable();

if (tbl.isView() && sem instanceof SemanticAnalyzer) {

tab2Cols.put(tbl,

sem.getColumnAccessInfo().getTableToColumnAccessMap().get(tbl.getCompleteName()));

}

if (read.getPartition() != null) {

Partition partition = read.getPartition();

tbl = partition.getTable();

// use partition level authorization

if (Boolean.TRUE.equals(tableUsePartLevelAuth.get(tbl.getTableName()))) {

List<String> cols = part2Cols.get(partition);

if (cols != null && cols.size() > 0) {

authorizer.authorize(partition.getTable(),

partition, cols, op.getInputRequiredPrivileges(),

null);

} else {

authorizer.authorize(partition,

op.getInputRequiredPrivileges(), null);

}

continue;

}

}

// if we reach here, it means it needs to do a table authorization

// check, and the table authorization may already happened because of other

// partitions

if (tbl != null && !tableAuthChecked.contains(tbl.getTableName()) &&

!(Boolean.TRUE.equals(tableUsePartLevelAuth.get(tbl.getTableName())))) {

List<String> cols = tab2Cols.get(tbl);

if (cols != null && cols.size() > 0) {

authorizer.authorize(tbl, null, cols,

op.getInputRequiredPrivileges(), null);

} else {

authorizer.authorize(tbl, op.getInputRequiredPrivileges(),

null);

}

tableAuthChecked.add(tbl.getTableName());

}

}

}
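One detail in the read path is easy to miss: whether a table is authorized per partition is controlled by the table parameter PARTITION_LEVEL_PRIVILEGE, compared case-insensitively against "TRUE". A minimal sketch of that test follows; the class and method names are hypothetical, and only the parameter name and the comparison come from the code above.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper (not Hive code) mirroring the PARTITION_LEVEL_PRIVILEGE test.
final class PartitionLevelAuthDemo {
    static final String PARAM = "PARTITION_LEVEL_PRIVILEGE";

    // equalsIgnoreCase(null) is false, so a missing parameter needs no separate null check.
    static boolean usePartLevelPriv(Map<String, String> tableParams) {
        return "TRUE".equalsIgnoreCase(tableParams.get(PARAM));
    }

    public static void main(String[] args) {
        Map<String, String> params = new HashMap<>();
        System.out.println(usePartLevelPriv(params)); // parameter absent
        params.put(PARAM, "true");
        System.out.println(usePartLevelPriv(params)); // parameter set, lower case
    }
}
```

Tables without the flag fall through to the table-level check, which the tableAuthChecked set runs at most once per table name.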
