此文鸣谢lct1999,MathLover与我一起翻译,给我提供了许多的帮助

Claris老司机昨天向我安利了这篇波兰黑科技论文,主要讲的是怎么使用Hash来做AC自动机能做的那些问题,那么为了黑科技事业的蓬勃发展我今天就来把它翻译一下.翻译进度可能会非常非常慢….在线持久更新

翻译的不好的地方可能会非常多…可能很多地方都会是直译…只是给大家看这个论文提供一个参考罢了

语序懒得调整成汉语语序辣

如果某些地方有更好的翻译建议,请联系我.

不严格按照原论文的排版来翻译…

Section1.引言

多串匹配问题,即在长度为n的文本串中定位s个总长为m的模板串的出现位置,是字符串算法领域中的一个基本问题.

此前存在一个来自Aho and Corasick的算法,可以以

O(n+m)

的时间代价,

O(m)

的空间代价(除掉额外的用来储存模板串和文本串的空间代价)来解决这个问题.

为了列举出所有(共计occ个)出现的位置(而不是如仅仅最左边的出现位置),

O(occ)

的额外时间代价也是必要的.

在空间十分有限的情况下,我们可以使用压缩后的AC自动机(或许是使用STL或者可持久化线段树来保存转移边的意思)

在极端的情况下,我们还可以依次对每个模板串使用线性时间和常数级的空间的单模板串匹配算法,这样的时间复杂度增加至

O(ns+m)

.

这种算法的著名例子有Galil and Seiferas [8],Crochemore and Perrin [5],以及Karp and Rabin13。

Multiple-pattern matching, the task of locating the occurrences of s

patterns of total length m in a single text of length n, is a

fundamental problem in the field of string algorithms. The algorithm

by Aho and Corasick [2] solves this problem using O(n+m) time and O(m)

working space in addition to the space needed for the text and

patterns. To list all occ occurrences rather than, e.g., the leftmost

ones, extra O(occ) time is necessary. When the space is limited, we

can use a compressed Aho-Corasick automaton [11]. In extreme cases,

one could apply a linear-time constant-space single-pattern matching

algorithm sequentially for each pattern in turn, at the cost of

increasing the running time to O(n · s + m). Well-known examples of

such algorithms include those by Galil and Seiferas [8], Crochemore

and Perrin [5], and Karp and Rabin [13] (see [3] for a recent survey).

如果给定的所有模板串长度相同的话,我们可以很容易地将KarpRabin算法推广至多串的情形,以O(n+m)的期望时间复杂度和O(s)的空间复杂度来完成匹配.为了实现这个算法,我们将所有模板串的哈希值存储在哈希表中,然后用一个区间滑块(滑块的定义请参照LZ77编码的相关内容)来扫描整个文本串,并维护当前区间内的文本串的哈希值.利用哈希表,我们可以检查当前区间的文本串是否和某个模板串相匹配;如果能够匹配,我们就返回这一匹配及位置,并更新哈希表中的值,使得每个模板串最多被返回一次.这个想法很简单,实际上也很实用,但是我们并不知道如何将它扩展到模板串长度不同的情形.目前这种做法还有待研究.而在这篇论文中,我们会介绍一种适用于任意模板串集合的字典匹配算法,时间复杂度是

O(nlogn+m)

,空间复杂度是

O(s)

,但是使用这个算法的前提是我们可以访问模板串和文本串的任意位置.

如果需要的话,我们还可以使用

O(nlogn+m)

的时间,

O(s)

的空间来计算每个模板串在文本串中出现的最长的前缀长度.

It is easy to generalize Karp-Rabin matching to handle multiple patterns in O(n+ m) expected time and

O(s) working space provided that all patterns are of the same length [10]. To do this, we store the fingerprints

of the patterns in a hash table, and then slide a window over the text maintaining the fingerprint of the

fragment currently in the window. The hash table lets us check if the fragment is an occurrence of a pattern.

If so, we report it and update the hash table so that every pattern is returned at most once. This is a very simple and actually applied idea [1], but it is not clear how to extend it for patterns with many distinct

lengths. In this paper we develop a dictionary matching algorithm which works for any set of patterns in

O(nlog n + m) time and O(s) working space, assuming that read-only random access to the text and the

patterns is available. If required, we can compute for every pattern its longest prefix occurring in the text,

also in O(nlog n + m) time and O(s) working space.

在最近的一个克利福德的独立工作给出了一个基于流模型的字符串匹配算法.(流模型是啥..?)

只需要读入模板串和文本串一次(与只读随机访问正好相反),并且一个出现位置需要在他的最后一个字符被读入之后被立刻返回.

这个算法使用

O(slogl)

的空间复杂度,和对单个字符

O(loglog(s+l))

的时间复杂度,l指最长的模板串的长度

ms≤l≤m

.尽管两个算法中的许多想法是类似的,但是我们必须承认流模型和只读模型有很多不同.尤其是在流模型中,对每个模板串计算文本串中最长前缀出现位置需要

Ω(mlogmin(m,|∑|))

bits的空间(

∑

肯定是字符集),与我们给出的解法里的只读模型只有

O(s)

的空间正好相反.

In a very recent independent work Clifford et al. [4] gave a dictionary matching algorithm in the streaming

model. In this setting the patterns and later the text are scanned once only (as opposed to read-only random

access) and an occurrence needs to be reported immediately after its last character is read. Their algorithm

uses O(slog) space and takes O(loglog(s +)) time per character whereis the length of the longest≤ m). Even though some of the ideas used in both results are similar, one should note that

pattern ( m s ≤

the streaming and read-only models are quite different. In particular, computing the longest prefix occurring

in the text for every pattern requires Ω(mlogmin(n, |Σ|)) bits of space in the streaming model, as opposed

to the O(s) working space achieved by our solution in the read-only setting.

我们的算法作为对质数的一个应用, 我们展示了如何近似的计算一段长度为m的LZ77文本的编码,所用的空间与划分元素的数量成正比(我们再一次对文本串进行随机位置的读取操作).用较小的空间计算LZ77解析码是一个很重要的问题,空间是当今大多数算法的瓶颈所在.此外,LZ77不仅仅是在数据压缩方面十分有用,同时也是一个加速算法运行速度的手段.我们介绍一种通常的近似算法,使用

O(z)

的空间复杂度来将读入的文本转化成LZ77编码化并划分成z个元素.

对于任意的

ϵ∈(0,1]

,这个算法可以被用于在

O(ϵ−1nlogn)

的时间内生成一个由

(1+ϵ)z

个元素组成的编码.

据我们所知,使用较小空间的近似LZ77编码分解在此前还从未被考虑过,我们的算法

我们的方法显然比产生精确解的方法有更加高效.一个由K¨arkk¨ainen et提出的最近的次线性空间的算法,对每个字符额外使用

O(nd)

的时间和

O(nd)

的空间(对一个任意的参数d).一个由Gasieniec提出的更早的在线算法对每个字符额外使用使用

O(z2log2z)

的时间和

O(z)

的空间.

其他更早的方法显然都会在编码相对于n较小时使用更多的空间.这一点可以在[7]中看到最近的讨论.

As a prime application of our dictionary matching algorithm, we show how to approximate the Lempel

Ziv 77 (LZ77) parse [18] of a text of length n using working space proportional to the number of phrases

(again, we assume read-only random access to the text). Computing the LZ77 parse in small space is an

issue of high importance, with space being a frequent bottleneck of today’s systems. Moreover, LZ77 is

useful not only for data compression, but also as a way to speed up algorithms [15]. We present a general

approximation algorithm working in O(z) space for inputs admitting LZ77 parsing with z phrases. For any

ε ∈ (0,1], the algorithm can be used to produce a parse consisting of (1+ ε)z phrases in O(ε−1nlog n) time.

To the best of our knowledge, approximating LZ77 factorization in small space has not been considered

before, and our algorithm is significantly more efficient than methods producing the exact answer. A recent

sublinear-space algorithm, due to K¨arkk¨ainen et al. [12], runs in O(nd) time and uses O(n/d) space, for any

parameter d. An earlier online solution by Gasieniec et al. [9] uses O(z) space and takes O(z2 log2 z) time

for each character appended. Other previous methods use significantly more space when the parse is small

relative to n; see [7] for a recent discussion.

论文的结构.第二节介绍了一些术语并重定义了部分已知的概念.其次是关于我们的字符串匹配算法的描述.在第三节,我们会介绍如何将模板串处理成至多s部分.在第四节,我们使用不同的过程处理重复或者不重复的较长的模板.在第五节,我们将算法延伸并对每个模板计算最长的公共前缀在文本串的出现位置.最后在第七节,我们使用这个字符串匹配算法来构造一个近似LZ77编码,同时在第六节,我们会解释如何修改这个算法来使其LasVegas?(这里似乎与那个算法和赌城都没有关系?)

Structure of the paper. Sect. 2 introduces terminology and recalls several known concepts. This is

followed by the description of our dictionary matching algorithm. In Sect. 3 we show how to process

patterns of length at most s and in Sect. 4 we handle longer patterns, with different procedures for repetitive

and non-repetitive ones. In Sect. 5 we extend the algorithm to compute, for every pattern, the longest

prefix occurring in the text. Finally, in Sect. 7, we apply the dictionary matching algorithm to construct an

approximation of the LZ77 parsing, and in Sect. 6 we explain how to modify the algorithms to make them

Las Vegas.

计算模型.我们的算法被设计用于word-RAM(?)使用 Ω(logn) bit个单词.假设字符集都是多项式级别的.Karp-Rabin哈希值的使用使得算法更加随机(什么鬼?)有更大的可能返回正确的答案,错误的概率是输入数据规模的反多项式级别,多项式的项数和指数(大概是这样)可以是任意大小.用一些额外的代价,我们的算法可以变成LasVegas随机级,使得答案总是正确的且时间上下界有较大概率是可控的.通过整篇论文,我们始终假设对文本串和模板串进行只读性随机访问,并且我们在考虑空间时不考虑他们的大小.

Model of computation. Our algorithms are designed for the word-RAM with Ω(log n)-bit words and

assume integer alphabet of polynomial size. The usage of Karp-Rabin fingerprints makes them Monte

Carlo randomized: the correct answer is returned with high probability, i.e., the error probability is inverse

polynomial with respect to input size, where the degree of the polynomial can be set arbitrarily large. With

some additional effort, our algorithms can be turned into Las Vegas randomized, where the answer is always

correct and the time bounds hold with high probability. Throughout the whole paper, we assume read

only random access to the text and the patterns, and we do not include their sizes while measuring space

consumption.

Section2.预备说明

我们只考虑一个从 0…σ 的字符集,其中 σ 至多是 n+m .对于一个字符串 w=w[1]…w[n]∈∑n ,我们定义w的长度为 |w|=n .对于 1≤i≤j≤n ,一个词语 u=w[i]…w[j] ,我们定义其为字符串w的子串.对于由 w[i..j] 组成的子串,我们将它的出现位置表示为i,称为原字符串w的子段/串.一个左端点为i=1的子段我们称其为原串的前缀,一个右端点j=n的子串称为原串的后缀.

We consider finite words over an integer alphabet Σ = {0, … , σ − 1}, where σ = poly(n + m). For a word

w = w[1] … w[n] ∈ Σn, we define the length of w as |w| = n. For 1 ≤ i ≤ j ≤ n, a word u = w[i] … w[j]

is called a subword of w. By w[i..j] we denote the occurrence of u at position i, called a fragment of w. A

fragment with i = 1 is called a prefix and a fragment with j = n is called a suffix.

对于一个字符串w如果存在一个确定的整数p使得对于任意的 i=1,2…|w|−1 使得w[i]=w[i+p],我们可以把p称为原串的一个周期.在这种情况下,一个前缀w[1..p]通常也被称为w的一个周期前缀.w的一个满足条件的最小周期前缀可以表示为 per(w) .我们称一个字符串w具有周期性,当且仅当 per(w)≤|w|2 ,称其具有高度周期性当且仅当 per(w)≤|w|3 .著名的周期性引理[6]证明,如果p和q都是w的周期,并且 p+q≤|w| ,那么 gcd(p,q) 一定也是w的一个周期.如果每一个per(w)都不恰好是|w|的约数,我们称字符串w是原始的,并且定义周期w[1..per(w)]总是原始的.(不知道这里原始具体是什么意思)

A positive integer p is called a period of w whenever w[i] = w[i + p] for all i = 1, 2, … , |w| − p. In this

case, the prefix w[1..p] is often also called a period of w. The length of the shortest period of a word w is

denoted as per(w). A word w is called periodic if per(w) ≤ |w|/2 and highly periodic if per(w) ≤ |w|/3. The

well-known periodicity lemma [6] says that if p and q are both periods of w, and p + q ≤ |w|, then gcd(p, q)

is also a period of w. We say that word w is primitive if per(w) is not a proper divisor of |w|. Note that the

shortest period w[1.. per(w)] is always primitive.

2.1使用哈希

我们的随机构造是基于Karp-Rabin所使用的哈希方法的;见[13].对于一个基于

0…σ−1

的字符串w[1..n],确定一个常数c(

c≥1

),一个质数p(

p>max(σ,nc+4)

),并随机选择一个x(x为正质数).我们定义一个子串w[i..j]的哈希值为

Φ(w[i..j])=w[i]+w[i+1]x+⋯+w[j]xj−imodp

.至少也有

1−1nc

的概率满足不存在两个长度相同的子串哈希值相同.哈希值相同的情况只会对两个我们称为”不确定”的子串发生.从现在开始,每当说明结果时我们都假设不存在不确定的子串来避免重复答案只是有很大的可能正确而不是绝对正确的情况.

对于字典匹配,我们假设

w=TP1P2…Ps

没有不同的子串(这里T,P分别对应文本串和所有模板串,w为其连接起来构成的总串)存在相同的哈希值的情况.哈希值可以使得我们肯简单的就能定位很多长度相同的模板串.

在引言中描述了一个直截了当的解法来对模板串构建哈希表.

然而,我们只能保证哈希表可以以

1−O(1sc)

的正确率构造出来(对于一个任意的常数c),可是我们想要将错误率下降到

O(1(n+m)c)

.于是我们用一个如下文所阐释的那种确定的字典来重置哈希表.尽管时间复杂度会上升到

O(slogs)

,考虑复杂度时较小的项都计算入常数.

2.1 Fingerprints

Our randomized construction is based on Karp-Rabin fingerprints; see [13]. Fix a word w[1..n] over an

alphabet Σ = {0, … , σ−1}, a constant c ≥ 1, a prime number p > max(σ, nc+4), and choose x ∈ Zp uniformly

at random. We define the fingerprint of a subword w[i..j] as Φ(w[i..j]) = w[i]+w[i+1]x+…+w[j]xj−i mod p.

With probability at least 1 − 1

nc , no two distinct subwords of the same length have equal fingerprints. The

situation when this happens for some two subwords is called a false-positive. From now on when stating the

results we assume that there are no false-positives to avoid repeating that the answers are correct with high

probability. For dictionary matching, we assume that no two distinct subwords of w = T P1 … Ps have equal

fingerprints. Fingerprints let us easily locate many patterns of the same length. A straightforward solution

described in the introduction builds a hash table mapping fingerprints to patterns. However, then we can

only guarantee that the hash table is constructed correctly with probability 1 − O( s 1 c ) (for an arbitrary

constant c), and we would like to bound the error probability by O( (n+ 1m)c ). Hence we replace hash table

with a deterministic dictionary as explained below. Although it increases the time by O(s log s), the extra

term becomes absorbed in the final complexities.

定理1

给定一个长度为n的文本串T和s个长确定为l的模板串 P1,…,Ps ,我们可以在 O∗(n+s+slogs 的总时间内对每个文本串计算出其在T中的最左边的出现位置,同时只需要 O(s) 的总空间.

Theorem 1. Given a text T of length n and patterns P1, … , Ps, each of length exactly

, we can compute+ s log s) total time and O(s) space.

the the leftmost occurrence of every pattern Pi in T using O(n + s

证明

我们可以对每个文本串计算出其哈希值

Φ(Pj)

.然后我们可以用

O(slogs)

的时间对待匹配的

Φ(Pj)

和h这样的关系构建出一个确定的字典D(应该就是一个哈希表).对于多个相同的模板串,我们只构建一次,在最后我们将结果记入所有的模板串中.

然后我们可以读入文本串T并选择一个长度为l的匹配区间同时维护区间内

T[i..i+l−1]

的哈希值.使用字典D,我们可以在

O(1)

的时间内寻找某个模板串j是否满足

Φ(T[i...i+l−1])=Φ(Pj)

.如果满足的话,我们更新Pj的答案(如果在此前

Pj

)没有对应的出现位置的话.如果我们预处理出

x−1

,就可以在i增大时以O(1)的时间内计算

Φ(T[i..i+l−1])

.

Proof. We calculate the fingerprint Φ(Pj) of every pattern. Then we build in O(s log s) time [16] a deter

ministic dictionary D with an entry mapping Φ(Pj) to j. For multiple identical patterns we create just

one entry, and at the end we copy the answers to all instances of the pattern. Then we scan the text T

with a sliding window of lengthwhile maintaining the fingerprint Φ(T[i..i +− 1]) of the current window.

Using D, we can find in O(1) time an index j such that Φ(T[i..i +− 1]) = Φ(Pj), if any, and update the− 1]) can be updated in O(1) time while increasing i.

answer for P

j if needed (i.e., if there was no occurrence of Pj before). If we precompute x−1, the fingerprints

Φ(T[i..i +

2.2使用Trie

一个由模板串

P1..Pn

形成的trie是一个有根树,其节点对应着原串中的一个前缀.

根节点不表示任何字符串,树边上标记一个字符.每个节点对应着的那个特殊的前缀称为其轨迹.在一个压缩的trie中,一个一元(与二元,多元相对)的同时不代表任意模板串Pi的节点(也就是trie中没有实际作用的节点)压缩掉(类似于后缀树的样子),将无分支且不代表模板串的节点缩在一起,形成了trie的一棵虚树.被缩掉的节点称为是”implicit”(虚的,隐性的)节点,与explicit实节点相对,储存时只储存实节点而不储存虚节点.一个字符串在一个压缩过的trie中的出现位置可能是一个实节点,也可能是一个虚节点.所有从一个节点连出的边都会被按照首字符储存在链表中,在链表中的边是不重复的.压缩过的trie中的边的字符标号都会按模板串对应位置的哈希值储存起来.所以,一个压缩过的trie的存储空间是

O(s)

的且与实节点的数目成正比.

考虑两个压缩过的trie T1和T2,我们称代表相同字符串的v1和v2(可以是虚节点)(

v1∈T1,v2∈T2

)是兄弟节点.可以发现,T1中的节点v1至多在T2中有一个兄弟节点v2.

A trie of a collection of strings P1, … , Ps is a rooted tree whose nodes correspond to prefixes of the strings.

The root represents the empty word and the edges are labeled with single characters. The node corresponding

to a particular prefix is called its locus. In a compacted trie unary nodes that do not represent any Pi are

dissolved and the labels of their incidents edges are concatenated. The dissolved nodes are called implicit as

opposed to the explicit nodes, which remain stored. The locus of a string in a compacted trie might therefore

be explicit or implicit. All edges outgoing from the same node are stored on a list sorted according to the

first character, which is unique among these edges. The labels of edges of a compacted trie are stored as

pointers to the respective fragments of strings Pi. Consequently, a compacted trie can be stored in space

proportional to the number of explicit nodes, which is O(s).

Consider two compacted tries T1 and T2. We say that (possibly implicit) nodes v1 ∈ T1 and v2 ∈ T2 are

twins if they are loci of the same string. Note that every v1 ∈ T1 has at most one twin v2 ∈ T2.

引理2.给定两个 压缩后的trie T1,T2(分别对s1,s2建立),在 O(s1+s2) 的时间空间复杂度内我们可以对每一个T1中的实节点v1找到他在T2中的兄弟节点v2.如果v1没有兄弟节点,从T2中随便找一个节点v2并返回.

Lemma 2. Given two compacted tries T1 and T2 constructed for s1 and s2 strings, respectively, in O(s1+s2)

total time and space we can find for each explicit node v1 ∈ T1 a node v2 ∈ T2 such that if v1 has a twin in1

T2, then v2 is its twin. (If v1 has no twin in T2, the algorithm returns an arbitrary node v2 ∈ T2).

证明.我们同时递归两个trie并维护一对点v1,v2,从T1和T2的根节点开始,要求满足如下条件:v1和v2是兄弟节点,或者v1在T2中没有兄弟节点.如果v1是实节点,我们把v2存储在v1的兄弟节点候选列表中.接下来,我们列举v1和v2的所有子节点(可以是虚节点)并在线性时间内根据边上的字符来匹配.我们递归每一对点并进行匹配.如果v1和v2都是虚节点,我们可以将他们并入另一个孩子.最后一步就是循环直至找到至少一个trie上的一个实节点,在执行过程中我们可以令他始终为实节点从而得出操作总数目是 O(s1+s2) 的.如果一个节点v( v∈T1 )在遍历过程中没有被访问到,可以确定它在T2中没有兄弟节点.否则,我们可以单独为他计算出兄弟节点的候选节点.

Proof. We recursively traverse both tries while maintaining a pair of nodes v1 ∈ T1 and v2 ∈ T2, starting

with the root of T1 and T2 satisfying the following invariant: either v1 and v2 are twins, or v1 has no twin in

T2. If v1 is explicit, we store v2 as the candidate for its twin. Next, we list the (possibly implicit) children

of v1 and v2 and match them according to the edge labels with a linear scan. We recurse on all pairs of

matched children. If both v1 and v2 are implicit, we simply advance to their immediate children. The last

step is repeated until we reach an explicit node in at least one of the tries, so we keep it implicit in the

implementation to make sure that the total number of operations is O(s1 + s2). If a node v ∈ T1 is not

visited during the traversal, for sure it has no twin in T2. Otherwise, we compute a single candidate for its

twin.

Section3.对于较短的模板串

为了处理那些长度不超过给定阈值l的模板串,我们首先对这些模板串构建一个压缩节点的trie.如果模板串都是按字典序排序过的,构建会非常简单:我们将他们一个个插入进压缩过节点的trie的从根节点开始最新的被遍历到的节点,然后有可能会将一条边分成两部分,如果必要的话,最后还要加入一个叶子节点.因此,下列结果就足够构建一个有效的trie.

To handle the patterns of length not exceeding a given threshold `, we first build a compacted trie for those

patterns. Construction is easy if the patterns are sorted lexicographically: we insert them one by one into

the compacted trie first naively traversing the trie from the root, then potentially partitioning one edge into

two parts, and finally adding a leaf if necessary. Thus, the following result suffices to efficiently build the

tries.

引理3.总长m的模板串P1..Ps可以被在 O(m+σϵ) 的时间和 O(s) 的空间完成字典序排序,其中 ϵ 是一个大于0的常数.

Lemma 3. One can lexicographically sort strings P1, … , Ps of total length m in O(m+ σε) time using O(s)

space, for any constant ε > 0.

证明.我们分开排序前

m−−√+σϵ2

长的字符串和剩下的字符串,然后将两个有序字符串序列归并起来.注意这些最长的串可以在

O(s)

的时间内使用线性复杂度的选择算法找到.

长字符串可以使用插入排序.如果排序时相邻的字符串的LCP被计算并储存下来,我们就可以在

O(j+|Pj|)

的时间内做到将Pj插入到序列中.更详细地说,我们将

S1…Sj−1

储存在一个用于防止已经插入过的字符串的列表里,从k=1开始保持k单调递增同时维护

Sk和Pj

的LCP,直到找到一个

Sk

使得其字典序比

pj

小,记为l.k每增加1,我们用

Sk−1

和

Sk

的LCP来更新l,记为l’.如果

l′>l

,则我们保持l不变.若相等,我们不断让l加一,在满足定义的情况下尽可能多的重复这个过程.在两种情况下,我们都可以用新的l在常数时间内比较

Sk

和

Pj

的字典序大小.最后,当

l′<l

的时候,我们显然能保证此时

Pj<Sk

,此时可以终止整个过程.

排序这

m−−√+σϵ2

个较长的串可以使用该过程做到

O(m+(m−−√+σϵ2)2)=O(m+σϵ)

的时间复杂度.

Proof. We separately sort the √m + σε/2 longest strings and all the remaining strings, and then merge both

sorted lists. Note these longest strings can be found in O(s) time using a linear time selection algorithm.

Long strings are sorted using insertion sort. If the longest common prefixes between adjacent (in the

sorted order) strings are computed and stored, inserting Pj can be done in O(j + |Pj|) time. In more detail,

let S1, S2, … , Sj−1 be the sorted list of already processed strings. We start with k := 1 and keep increasing

k by one as long as Sk is lexicographically smaller than Pj while maintaining the longest common prefix

between Sk and Pj, denoted. After increasing k by one, we updateusing the longest common prefix

between Sk−1 and Sk, denoted0, as follows. If0 >, we keepunchanged. If0 =, we try to iteratively

increaseby one as long as possible. In both cases, the new value ofallows us to lexicographically compare

Sk and Pj in constant time. Finally,0 <guarantees that Pj < Sk and we may terminate the procedure.

Sorting the √m + σε/2 longest strings using this approach takes O(m + (√m + σε/2)2) = O(m + σε) time.

剩下的字符串单串长度至多是

m−−√

,并且如果存在这样的串的话,数量一定

≥σϵ2

.我们使用基数排序来排序这些字符串,对于字符集内的每个字符,将其看成一个从

{0,1,…,σϵ2}

选出的

2ϵ

个字符构成的序列.单次基数排序消耗的时间和空间均正比于参与排序的字符串的字符集大小,具体上是

O(s+σϵ2)=O(s)

的.此外,因为参与排序的字符串总长至多为m,因此总时间复杂度是

O(m+σϵ2m−−√)=O(m+σϵ)

的.

最后,归并的过程需要消耗同总长和参与排序的字符串数目线性相关的时间,然后总的字典序就被排出来了.

The remaining strings are of length at most √m each, and if there are any, then s ≥ σε/2. We sort these

strings by iteratively applying radix sort, treating each symbol from Σ as a sequence of 2

ε

symbols from

{0, 1, … , σε/2 − 1}. Then a single radix sort takes time and space proportional to the number of strings

involved plus the alphabet size, which is O(s + σε/2) = O(s). Furthermore, because the numbers of strings

involved in the subsequent radix sorts sum up to m, the total time complexity is O(m+σε/2√m) = O(m+σε).

Finally, the merging takes time linear in the sum of the lengths of all the involved strings, so the total

complexity is as claimed.

接下来,我们把文本串T划分成

O(nl)

块,其中

T1=T[1..2l],T2=[l+1..3l],T3=[2l+1..4l]…

.注意到划分出的每个至多长l的子串一定被其中某一块所包含,因此我们可以单独考虑每一块.

对每个快建立后缀树,消耗

O(llogl)

的时间和

O(l)

的空间(每个串被处理完后,后缀树会被清空).我们对后缀树应用引理2和对模板串构建的压缩节点的trie,这消耗了

O(l+s)

的时间.对每个模板串

Pj

我们获得了一个节点,其对应的子串与

Pj

相同,且记录了

Pj

在

Ti

中的出现位置.我们计算出最左边的出现位置,

Ti[begin..end]

,如果我们在后缀树的实节点中记录额外的信息就可以在常数的时间内做到,然后我们可以用哈希值来检验

Ti[begin…end]

是否与

Pj

相同.为了完成这一步,我们需要提前对所有块和所有模板串预处理哈希值,这需要

O(l)

的时间和空间.这样之后我们就可以在常数的时间内确定一个串与另一个串的子串关系.

Next, we partition T into O( n

) overlapping blocks T1 = T[1..2], T2 = T[+1..3], T3 = T[2+1..4], ….

Notice that each subword of length at mostis completely contained in some block. Thus, we can considerlog

every block separately.

The suffix tree of each block Ti takes O() time [17] and O() space to construct and store (the suffix

tree is discarded after processing the block). We apply Lemma 2 to the suffix tree and the compacted trie

of patterns; this takes O(+ s) time. For each pattern Pj we obtain a node such that the corresponding) time and space, which

subword is equal to Pj provided that Pj occurs in Ti. We compute the leftmost occurrence Ti[b..e] of the

subword, which takes constant time if we store additional data at every explicit node of the suffix tree,

and then we check whether Ti[b..e] = Pj using fingerprints. For this, we precompute the fingerprints of all

patterns, and for each block Ti we precompute the fingerprints of its prefixes in O(

allows to determine the fingerprint of any of its subwords in constant time.

总的来说,我们每个过程消耗 O(m+σs) 的时间,每个块消耗 O(llogl+s) 的时间,其中 σ=(n+m)O(1) ,因为 ϵ 足够小所以计算答案时候不考虑.

In total, we spend O(m + σε) for preprocessing and O(

log+ s) for each block. Since σ = (n + m)O(1),

for small enough ε this yields the following result.

定理4.给定一个长n的文本串T和总长m的模板串 P1…Ps ,可以用 O(nlogl+snl+m) 的总时间和 O(s+l) 的总空间计算出每个模板串在文本串中最靠左的出现位置,其中模板串的长度至多为l.

Theorem 4. Given a text T of length n and patterns P1, … , Ps of total length m, using O(nlog

+s n+m)

total time and O(s +) space we can compute the leftmost occurrences in T of every pattern Pj of length at.

most

Section4.对于较长的模板串

为了处理长度大于设定的阈值的模板串,我们首先把他们根据

⌊log34|Pj|⌋

分成很多组.比文本串长的模板串可以直接忽略,因此至多有

O(logn)

组.每组都被分开处理,且从现在开始我们只考虑满足

⌊log34|Pj|⌋=i

的模板串

Pj

.

我们根据字符串前缀和后缀的周期性将他们分成不同类. 我们将l定为

⌈43i⌉

,并定义

αj,βj

分别表示长为

l

的模板串

To handle patterns longer than a certain threshold, we first distribute them into groups according to the

value of blog4/3 |Pj|c. Patterns longer than the text can be ignored, so there are O(log n) groups. Each

group is handled separately, and from now on we consider only patterns Pj satisfying blog4/3 |Pj|c = i.

We classify the patterns into classes depending on the periodicity of their prefixes and suffixes. We

set= d(4/3)ie and define αj and βj as, respectively, the prefix and the suffix of lengthof Pj. Since

23

(|αj| + |βj|) = 4 3` ≥ |Pj|, the following fact yields a classification of the patterns into three classes: either

Pj

is highly periodic, or αj is not highly periodic, or βj is not highly periodic. The intuition behind this

classification is that if the prefix or the suffix is not repetitive, then we will not see it many times in a short

subword of the text. On the other hand, if both the prefix and suffix are repetitive, then there is some

structure that we can take advantage of.

例证5.假设x和y分别是一个单词w的一个前缀和一个后缀.如果

|x|+|y|≥|w|+p

,且p是x和y的一个周期,则p一定是w的一个周期.

证明.我们需要证明

w[i]=w[i+p]

对所有的i=1,2,…,|w|-p均成立.如果p是x的一个周期,那么可以推出

i+p≤|x|

.如果p也是y的一个周期,那么可以推出

i≥|w|−|y|+1

.因为

|x|+|y|≥|w|+p

,因此这两种可能覆盖了i可能的所有取值.

Fact 5. Suppose x and y are a prefix and a suffix of a word w, respectively. If |x| + |y| ≥ |w| + p and p is a

period of both x and y, then p is a period of w.

Proof. We need to prove that w[i] = w[i + p] for all i = 1,2, … , |w| − p. If i + p ≤ |x| this follows from p

being a period of x, and if i ≥ |w| − |y|+1 from p being a period of y. Because |x|+ |y| ≥ |w|+ p, these two

cases cover all possible values of i.

为了将每个模板串划分到正确的类别,我们要使用较小的空间计算 Pj,αj,βj 的周期.大致相同的结果已经在[14]中被证明,但是为了完整我们在这里提供一个完整的证明.

To assign every pattern to the appropriate class, we compute the periods of Pj, αj and βj using small

space. Roughly the same result has been proved in [14], but for completeness we provide the full proof here.

引理6.给定一个串w,如果它是有周期性的,我们可以用

O(|w|)

的时间和常数级的空间,计算出w的最小周期前缀per(w).

证明.设v是w的一个前缀,长度为

⌈12|w|⌉

,设p为v在w中第二次出现的位置(如果存在的话).如果

per(w)≤12|w|

,我们令per(w)为p-1.首先我们注意到v第二次出现的位置为per(w)+1.如果令

per(w)≥p−1

,且p-1为w[1..|v|+p-1]关于per(w)的一个周期的话.根据周期性引理,

per(w)≤12|w|≤|v|

可以推出gcd(p-1,per(w))也是该前缀的一个周期.因此

per(w)≥p−1

与之前我们定义per(w)为最小周期前缀相矛盾.

Lemma 6. Given a read-only string w one can decide in O(|w|) time and constant space if w is periodic

and if so, compute per(w).

Proof. Let v be the prefix of w of length d 1 2|w|e and p be the starting position of the second occurrence of v

in w, if any. We claim that if per(w) ≤ 1 2|w|, then per(w) = p − 1. Observe first that in this case v occurs

at a position per(w) + 1. Hence, per(w) ≥ p − 1. Moreover p − 1 is a period of w[1..|v| + p − 1] along with

per(w). By the periodicity lemma, per(w) ≤ 1 2|w| ≤ |v| implies that gcd(p − 1,per(w)) is also a period of

that prefix. Thus per(w) > p − 1 would contradict the primitivity of w[1..per(w)].

该算法使用线性时间和常数空间的匹配算法来计算p.如果它存在的话,它将使用一个字符一个字符的对照方式来判断w[1..p-1]是否是w的一个周期.如果是的话,通过关于per(w)=p-1的结论,这个算法得到了返回值.否则, 2per(w)>|w| ,则w不具备周期性.这个算法将使用线性的时间和常数级空间.

The algorithm computes the position p using a linear time constant-space pattern matching algorithm.

If it exists, it uses letter-by-letter comparison to determine whether w[1..p −1] is a period of w. If so, by the

discussion above per(w) = p − 1 and the algorithm returns this value. Otherwise, 2per(w) > |w|, i.e., w is

not periodic. The algorithm runs in linear time and uses constant space.

4.1对于不具备高度周期性前缀的模板串

接下来我们将介绍如何对前缀

αj

不具备高度周期性的模板串进行处理.对于后缀

βj

同样不具备高度周期性的模板串和文本串,我们将其翻转后可以用一样的方法处理.

引理7 我们令l为任意一个数字.假设给定我们一个长度为n的文本串T和一些模板串

P1…Ps

,满足

l≤|Pj|≤43l

且

αj=Pj[1..l]

不具备高度的周期性.我们可以对每个模板串计算出其在文本串中最左的和最右的出现位置,只需要消耗

O(n+s(1+nl)logs+sl)

的时间和

O(s)

的空间.

Below we show how to deal with patterns with non-highly periodic prefixes αj. Patterns with non-highly

periodic suffixes βj can be processed using the same method after reversing the text and the patterns.

Lemma 7. Letbe an arbitrary integer. Suppose we are given a text T of length n and patterns P1, . . . , Ps≤ |Pj| < 4 3

such that for 1 ≤ j ≤ s we haveand αj = Pj[1..] is not highly periodic. We can compute the

leftmost and the rightmost occurrence of each pattern Pj in T using O(n+ s(1+ n)log s+ s) time and O(s)

space.

算法读入文本串T,并只考虑当前的一个长为l的区域.当遭遇一个与某个模板串P_j的某个前缀相同的单词时,会进行一个校验过程来判断长l的后缀 βj 是否出现在了对应的位置.只有当我们的匹配区域到达了那个位置才会执行这个匹配过程.这个方法可以检测出所有模板串的出现位置.事实上我们可以对每个模板串记录下最左和最右的出现位置.

The algorithm scans the text T with a sliding window of length

. Whenever it encounters a subword

equal to the prefix αj of some Pj, it creates a request to verify whether the corresponding suffix βj of length

occurs at the appropriate position. The request is processed when the sliding window reaches that position.

This way the algorithm detects the occurrences of all the patterns. In particular, we may store the leftmost

and rightmost occurrence of each pattern.

我们使用哈希值来比较T中的短语与

αj,βj

.我们可以对每个j预处理出

Φ(αj),Φ(βj)

.我们也可以对每个j的

Φ(αj)

构建一个确定的哈希表D(如果有多个模板串有相同的哈希值

Φ(αj)

,这个哈希表将形成一个索引.这两个方法分别使用

O(sl)

和

O(slogs)

的时间复杂度.后续等待中的请求将使用一个优先队列

Q

来维护,二叉堆中储存的是pair元素,pair的两个关键字分别是模板串的索引(应当是指编号或者哈希值)(作为排序的权值)和

We use the fingerprints to compare the subwords of T with αj and βj. To this end, we precompute Φ(αj)

and Φ(βj) for each j. We also build a deterministic dictionary D [16] with an entry mapping Φ(αj) to j for

every pattern (if there are multiple patterns with the same value of Φ(αj), the dictionary maps a fingerprint

to a list of indices). These steps take O(s`) and O(s log s), respectively. Pending requests are maintained

in a priority queue Q, implemented using a binary heap1 as pairs containing the pattern index (as a value)

and the position where the occurrence of βj is anticipated (as a key).

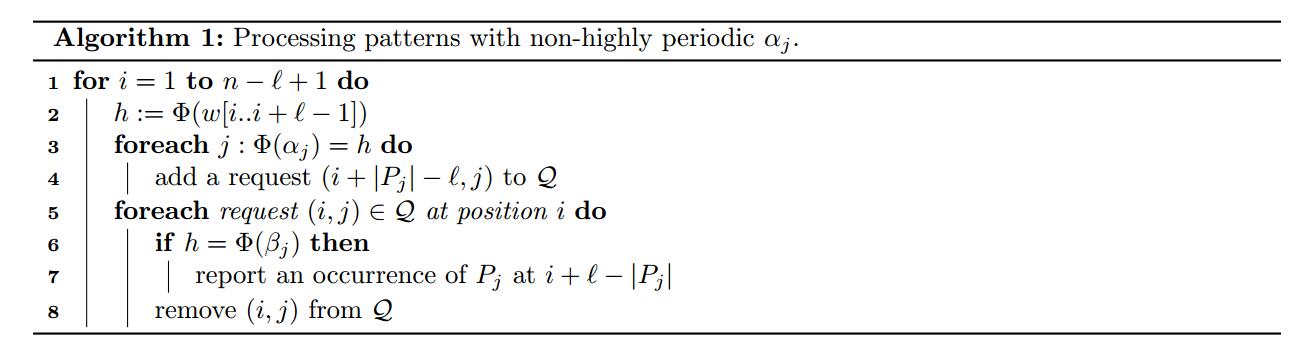

算法1的伪代码(处理不具备高度周期性的

αj

).

算法以给出了这个过程的一个详细描述.让我们分析一下它的时间和空间复杂度.

根据Karp-Rabin hash比较法的性能分析,代码的第二行可以在

O(1)

的时间内被执行.如果各自的集合都是空集的话,第三行和第五行的循环会额外消耗

O(1)

的时间(感觉这不是废话吗).除了这些,每一个运算都可以被分配成一个指令,他们中的每一个都会消耗

O(1)

(如行3,5,6)或者

O(log|Q|)

(|Q|为堆的大小)(如行4,8)的时间.为了界定|Q|的大小,我们需要知道请求序列的最大规模.

Algorithm 1 provides a detailed description of the processing phase. Let us analyze its time and space

complexities. Due to the properties of Karp-Rabin fingerprints, line 2 can be implemented in O(1) time.

Also, the loops in lines 3 and 5 takes extra O(1) time even if the respective collections are empty. Apart from

these, every operation can be assigned to a request, each of them taking O(1) (lines 3 and 5-6) or O(log |Q|)

(lines 4 and 8) time. To bound |Q|, we need to look at the maximum number of pending requests.

实例8.对任意的模板串 Pj ,任意时刻最多只会产生 O(1+nl) 个请求.

Fact 8. For any pattern Pj just O(1 + n ` ) requests are created and at any time at most one of them is pending.

证明.对跟 Pj 有关的请求与 αj 在T中的出现位置记录一一对应的关系.两个出现位置之间的距离至少是 13l ,因此使得 αj 变得具有高度周期性.这使得请求总数上界变成了 O(1+nl) .另外,每个请求最多参与 |Pj|−l<13l 个迭代过程.因此,一个请求过程在下一个 αj 的出现位置到达前已经被处理完成了.(这里有点拗口,我大致翻译成了这个意思)

Proof. Note that there is a one-to-one correspondence between requests concerning Pj and the occurrences

of α

j in T. The distance between two such occurrences must be at least 1 3, because otherwise the period, thus making αj highly periodic. This yields the O(1 + n

of α

j would be at most 1 3) upper bound on the< 1 3` iterations of the

total number of requests. Additionally, any request is pending for at most |Pj| −

main for loop. Thus, the request corresponding to an occurrence of αj is already processed before the next

occurrence appears.

所以,扫描过程消耗 O(s) 的空间和 O(n+s(1+nl)logs) 的时间.我们获得了在引理7中提出的复杂度界限.

Hence, the scanning phase uses O(s) space and takes O(n + s(1 + n ` ) log s) time. Taking preprocessing

into account, we obtain bounds claimed in Lemma 7.

4.2对于具备高度周期性的模板串

引理9.令

l

为一个任意的整数.给定一个长为n的文本串T和一个由具有高度周期性的模板串组成的集合

Lemma 9. Let

be an arbitrary integer. Given a text T of length n and a collection of highly periodic≤ |Pj| < 4 3

patterns P1, . . . , Ps such that for 1 ≤ j ≤ s we have, we can compute the leftmost occurrence) log s + s`) total time and O(s) space.

of each pattern Pj in T using O(n + s(1 + n

答案的证明基本与引理7相同,除了这个算法要无视恰巧可拆分的出现位置.如果x在位置i中还存在另一个出现位置per(x),则一个关于x在T中出现在i位置被称为可拆分的.剩余的出现位置都被称为是不可拆分的.注意到最左边的出现位置通常都是不可拆分的,因此我们确实可以放心的忽视掉一些模板串的可拆分的位置.因为 2per(Pj)≤23|Pj|≤89l<l ,接下来的事实说明了如果 Pj 的一个出现位置是不可拆分的,那么 αj 在文本串的相同位置的出现位置也总是不可拆分的.

The solution is basically the same as in the proof of Lemma 7, except that the algorithm ignores certain

shiftable occurrences. An occurrence of x at position i of T is called shiftable if there is another occurrence of x at position i − per(x). The remaining occurrences are called non-shiftable. Notice that the leftmost

occurrence is always non-shiftable, so indeed we can safely ignore some of the shiftable occurrences of the

patterns. Because 2 per(Pj) ≤ 2 3|Pj| ≤ 8 9<, the following fact implies that if an occurrence of Pj is

non-shiftable, then the occurrence of αj at the same position is also non-shiftable.

引例10.令y为x的一个前缀(满足 |y|≥2per(x) )假设x在w中的i位置没有可以拆分的出现位置.于是有,y在i位置的出现也是不可拆分的.

Fact 10. Let y be a prefix of x such that |y| ≥ 2 per(x). Suppose x has a non-shiftable occurrence at position

i in w. Then, the occurrence of y at position i is also non-shiftable.

证明.我们记

per(y)+per(x)≤|y|

,因此由周期性引理可以证明

per(y)=per(x)

.

令

x=ρkρ′

,其中

ρ

是x的最小循环节.因为

|y|≥per(x)

,y在i-per(x)处出现意味着

ρ

也会在相同位置出现.因此有

w[i−per(x)…i+|x|−1]=ρk+1ρ′

.但接下来x显然会在i-per(x)处出现,这与之前的假设”x在i处的出现是不可拆分的”相矛盾.

Proof. Note that per(y) + per(x) ≤ |y| so the periodicity lemma implies that per(y) = per(x).

Let x = ρkρ0 where ρ is the shortest period of x. Suppose that the occurrence of y at position i is

shiftable, meaning that y occurs at position i − per(x). Since |y| ≥ per(x), y occurring at position i − per(x)

implies that ρ occurs at the same position. Thus w[i−per(x)..i+|x|−1] = ρk+1ρ0. But then x clearly occurs

at position i−per(x), which contradicts the assumption that its occurrence at position i is non-shiftable.

所以,我们或许可以只为 αj 不可拆分的出现位置生成请求.换句话说,当 αj 的某个出现位置是可拆分的时候,我们不会立刻生成一个请求并会继续算法的执行过程.为了检测并忽视掉这些可拆分的出现位置,我们对每个 αj 维护一个最后的出现位置.然而,如果有多个模板串有相同的前缀 αj1=⋯=αjk 的话,需要单独考虑,使得检测一个可拆分的出现位置是 O(1) 的而不是 O(k) .为了达到这个目的,我们维护另一个确定的哈希表,对每一个 Φ(αj) 使用一个变量的指针来存储,并对 αj 维护先前的出现过的位置.这个变量由所有具有相同前缀 αj 的模板串公用.

Consequently, we may generate requests only for the non-shiftable occurrences of αj. In other words, if

an occurrence of α

j is shiftable, we do not create the requests and proceed immediately to line 5. To detect

and ignore such shiftable occurrences, we maintain the position of the last occurrence of every αj. However,

if there are multiple patterns sharing the same prefix αj1 = … = αjk, we need to be careful so that the

time to detect a shiftable occurrence is O(1) rather than O(k). To this end, we build another deterministic

dictionary, which stores for each Φ(αj) a pointer to the variable where we maintain the position of the

previously encountered occurrence of αj. The variable is shared by all patterns with the same prefix αj.

这个修改后的算法的复杂度仍然需要分析.首先对于一个 αj ,我们需要知道它的不可拆分的出现位置的数目上界.假设 αj 在i’处有一个不可拆分的出现位置,其中 i′<i 且 i′≥i−12l , i−i′≤12l 是 T[i′…i+l−1] 的一个周期.根据周期性引理, per(αj) 划分开i-i’,因此 αj 出现在位置 i−per(αj) ,与之前”i’位置的出现是不可拆分的”相矛盾.所以, αj 的每个不可拆分的位置至少可以划分成 12l 个字符.请求的总数和请求队列中请求的最大值的上界分别是 O(s(1+nl)) 和 O(s) ,证明类同引理7.考虑其他过程占用的时间和空间,总时间/空间复杂度为 O(n+slogs)/O(s) .最终的复杂度上界也是如此.

It remains to analyze the complexity of the modified algorithm. First, we need to bound the number

of non-shiftable occurrences of a single αj. Assume that there is a non-shiftable occurrence αj at positions

i0 < i such that i0 ≥ i − 1

2. Then i − i0 ≤ 1 2is a period of T [i0..i +− 1]. By the periodicity lemma,characters apart, and the total number of requests and the maximum number of pending requests

per(αj) divides i−i0, and therefore αj occurs at position i0 − per(αj), which contradicts the assumption that

the occurrence at position i0 is non-shiftable. Consequently, the non-shiftable occurrences of every αj are at

least 1

2

can be bounded by O(s(1 + n ` )) and O(s), respectively, as in the proof of Lemma 7. Taking into the account

the time and space to maintain the additional components, which are O(n + s log s) and O(s), respectively,

the final bounds remain the same.

4.3小结

定理11. 给定一个长为n的文本串T和s个总长为m的模板串 P1…Ps ,使用 O(nlogn+m+snlogs) 的总时间和 O(s) 的总空间可以对每个长至少为l的模板串计算出其在T中的最左的出现位置.

Theorem 11. Given a text T of length n and patterns P1, … , Ps of total length m, using O(n log n + m +

s n

log s) total time and O(s) space we can compute the leftmost occurrences in T of every pattern Pj of.

length at least

证明.算法将模板串按长度分成 O(logn) 组,可以将他们按周期性分成三类,共计消耗 O(m) 的时间和 O(s) 的空间.还需要说明被声明过的所有复杂度都有一个明确的上界.其中每一个都可以被看做 O(n) 加上对每个模板串 O(|Pj|+(1+n|Pj|)logs) .因为 l≤|Pj|≤n 且总计有 O(logn) 组,因此总计为 O(nlogn+m+snllogs) .

Proof. The algorithm distributes the patterns into O(log n) groups according to their lengths, and then into

three classes according to their repetitiveness, which takes O(m) time and O(s) space in total. Then, it

applies either Lemma 7 or Lemma 9 on every class. It remains to show that the running times of all those

calls sum up to the claimed bound. Each of them can be seen as O(n) plus O(|Pj|+(1+ |P n j|) log s) per every

pattern Pj. Because≤ |Pj| ≤ n and there are O(log n) groups, this sums up to O(n log n+m+s nlog s).

对所有长度至多是min(n,s)的模板串使用定理4,如果 s≤n ,对所有长度至多为s的模板串使用定理11,我们得到了我们的主定理.

定理12.给定一个长为n的文本串T和总长为m的模板串 P1…Ps ,我们可以用 O(nlogn+m) 的总时间和 O(s) 的总空间计算出每个模板串 Pj 在T中最左的出现位置.

Using Thm. 4 for all patterns of length at most min(n, s), and (if s ≤ n) Thm. 11 for patterns of length

at least s, we obtain our main theorem.

Theorem 12. Given a text T of length n and patterns P1, … , Ps of total length m, we can compute the

leftmost occurrence in T of every pattern Pj using O(n log n + m) total time and O(s) space.

5346

5346

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?