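This post practices XPath on a real page: the 58.com foot-massage (足浴) listings for Beijing (http://bj.58.com/zuyu/pn1/?PGTID=0d306b61-0000-186a-d0e6-09e79d939b21&ClickID=1). The fields to scrape are the title, type, nearby location, transfer fee, rent, and area.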
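As a warm-up, here is a minimal sketch of the kind of XPath calls such a scraper relies on, run with lxml against a hand-written fragment. The markup and its values are assumptions made for illustration, not a copy of the real page; only the `infolist` id and the `t` class come from the expressions used later in this post.

```python
from lxml import etree

# Hypothetical fragment mimicking the assumed layout of one listing row.
HTML = """
<div id="infolist">
  <table>
    <tr>
      <td class="t"><a href="#">Foot spa <b>for transfer</b></a></td>
      <td>120</td>
    </tr>
  </table>
</div>
"""

tree = etree.HTML(HTML)

# Absolute expression: every <tr> of the table inside the element with id "infolist".
rows = tree.xpath("//*[@id='infolist']/table/tr")
row = rows[0]

# Relative expressions start with "./" and are evaluated against the row.
# text() returns only the text nodes that are direct children of <a>.
direct_text = row.xpath("./td[@class='t']/a/text()")

# string() concatenates the text of <a> and of all its descendants.
full_text = row.xpath("string(./td[@class='t']/a)")

# Positional predicates are 1-based: td[2] is the second cell of the row.
second_cell = row.xpath("./td[2]/text()")

print(direct_text, full_text, second_cell)
```

The difference between `text()` and `string()` matters when a link contains nested tags: `text()` skips the text inside the nested element, while `string()` includes it.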

1. Using basic libraries

First, without any framework, write the code to scrape this page by hand:

# -*- coding: utf-8 -*-
import re

import pandas as pd
import requests
from lxml import etree

blank = ""  # empty string
colon_en = ":"  # ASCII colon
colon_zh = u"\uff1a"  # full-width (Chinese) colon
forward_slash = "/"  # forward slash
pattern_space = re.compile(r"\s+")  # runs of whitespace
pattern_line = re.compile(r"<br\s*/?>")  # line-break tag: <br> or <br/>
pattern_label = re.compile(r"</?\w+[^>]*>")  # any HTML tag


def crawl_data(url):
    data = {"title": [],
            "kind": [],
            "approach": [],
            "trans_fee": [],
            "rent": [],
            "area": []
            }
    response = requests.get(url)
    res = response.content
    tree = etree.HTML(res)
    # One <tr> per listing
    frame = tree.xpath("//*[@id='infolist']/table/tr")
    for one in frame:
        # Title, method 1: join the text nodes directly under the link
        raw_title = blank.join(one.xpath("./td[@class='t']/a/text()"))
        title = re.sub(pattern_space, blank, raw_title)
        # Method 2: take the string value of the link
        # title = one.xpath("string(./td[@class='t']/a)")
        data["title"].append(title)
        print("title: %s" % title)

        # Type and nearby location
        raw_kind_and_approach = blank.join(one.xpath("./td[@class='t']/text()"))
        kind_and_approach = re.sub(pattern_space, blank, raw_kind_and_approach)
        k_and_a_list = kind_and_approach.split(forward_slash)
        kind = ""
        approach = ""
        for thing in k_and_a_list:
            if u"类型" in thing:
                kind = thing.split(colon_en)[-1]
            elif u"临近" in thing:
                approach = thing.split(colon_en)[-1]
        data["kind"].append(kind)
        data["approach"].append(approach)
        print("kind: %s, approach: %s" % (kind, approach))

        # Transfer fee and rent (both live in the third cell)
        transfer_fee_and_rent = etree.tostring(one.xpath("./td[3]")[0], encoding="unicode")
        # lxml serializes the break as <br/>, so split with the regex, not a literal "<br>"
        t_and_r_list = pattern_line.split(re.sub(pattern_space, blank, transfer_fee_and_rent))
        # Handle listings where the transfer fee, the rent, or both are negotiable (面议)
        t_and_r_list = t_and_r_list if len(t_and_r_list) == 2 else t_and_r_list * 2
        transfer_fee = re.sub(pattern_label, blank, t_and_r_list[0]).split(colon_zh)[-1]
        rent = re.sub(pattern_label, blank, t_and_r_list[1]).split(colon_zh)[-1]
        data["trans_fee"].append(transfer_fee)
        data["rent"].append(rent)
        print("transfer_fee: %s, rent: %s" % (transfer_fee, rent))

        # Area (fourth cell)
        raw_area = etree.tostring(one.xpath("./td[position()=4]")[0], encoding="unicode")
        area = re.sub(pattern_label, blank, raw_area)
        area = re.sub(pattern_space, blank, area)
        data["area"].append(area)
        print("area: %s" % area)
        print("-" * 50)
    return data


def write_csv(data, file):
    df = pd.DataFrame(data)
    df.to_csv(file, index=False, encoding="gbk")


if __name__ == "__main__":
    url = "http://bj.58.com/zuyu/pn1/?PGTID=0d306b61-0000-186a-d0e6-09e79d939b21&ClickID=1"
    data = crawl_data(url)
    out_file = "bj_58.csv"
    write_csv(data, out_file)

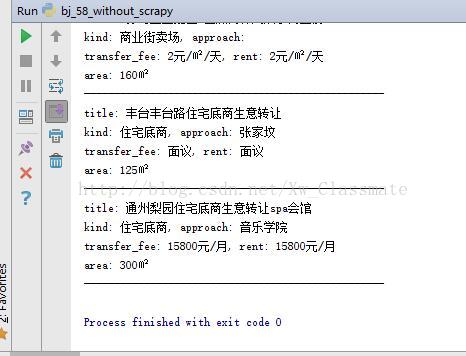

# print("data: %s" % data)运行后结果:
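The two free-text cells are the fiddly part of the script, so here is a standalone sketch of that string handling using only `re`. The helper names and the sample values are hypothetical; the cell layout (a label, a colon, a value, with fee and rent separated by a line-break tag) is an assumption inferred from the regular expressions in the script, not something verified against the live page.

```python
import re

FULLWIDTH_COLON = "\uff1a"  # the full-width (Chinese) colon
PATTERN_SPACE = re.compile(r"\s+")
PATTERN_BR = re.compile(r"<br\s*/?>")       # matches <br> and <br/>
PATTERN_TAG = re.compile(r"</?\w+[^>]*>")   # matches any HTML tag


def split_fee_and_rent(cell_html):
    """Return (transfer_fee, rent) from the serialized HTML of one cell."""
    compact = PATTERN_SPACE.sub("", cell_html)
    parts = PATTERN_BR.split(compact)
    # A cell without a line break carries a single value; reuse it for both fields.
    if len(parts) != 2:
        parts = parts * 2
    # Drop the tags, then keep whatever follows the last full-width colon.
    fee, rent = (PATTERN_TAG.sub("", p).split(FULLWIDTH_COLON)[-1] for p in parts[:2])
    return fee, rent


def split_kind_and_approach(text):
    """Return (kind, approach) from text shaped like 'label:value / label:value'."""
    kind = approach = ""
    for part in PATTERN_SPACE.sub("", text).split("/"):
        label, _, value = part.partition(":")
        if "类型" in label:      # "type"
            kind = value
        elif "临近" in label:    # "near"
            approach = value
    return kind, approach


# Hypothetical sample cells.
two_values = "<td>转让费" + FULLWIDTH_COLON + "5万<br/>租金" + FULLWIDTH_COLON + "8000</td>"
one_value = "<td> 面议 </td>"

print(split_fee_and_rent(two_values))
print(split_fee_and_rent(one_value))
print(split_kind_and_approach("类型:足浴 / 临近:国贸"))
```

Splitting on a pattern rather than on the literal `<br>` keeps the helper working whether the serializer writes `<br>` or `<br/>`.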

2. Using the Scrapy framework

Run scrapy startproject tutorial on the command line to create a project named tutorial.

Add a new Item to items.py:

class ZuYuItem(scrapy.Item):
    title = scrapy.Field()  # title
    kind = scrapy.Field()  # type
    approach = scrapy.Field()  # nearby location
    transfer_fee = scrapy.Field()  # transfer fee
    rent = scrapy.Field()  # rent
    area = scrapy.Field()  # area

Create a new Python file named bj_58.py in the spiders directory, with the following content:

# -*- coding: utf-8 -*-
import re

import scrapy

from tutorial.items import ZuYuItem


class BJ58Spider(scrapy.Spider):
    """
    scrapy crawl bj_58 -o res.csv
    """
    name = "bj_58"
    start_urls = [
        "http://bj.58.com/zuyu/pn1/?PGTID=0d306b61-0000-186a-d0e6-09e79d939b21&ClickID=1"
    ]

    def parse(self, response):
        blank = ""  # empty string
        colon_en = ":"  # ASCII colon
        colon_zh = u"\uff1a"  # full-width (Chinese) colon
        forward_slash = "/"  # forward slash
        pattern_space = re.compile(r"\s+")  # runs of whitespace
        pattern_line = re.compile(r"<br\s*/?>")  # line-break tag: <br> or <br/>
        pattern_label = re.compile(r"</?\w+[^>]*>")  # any HTML tag

        # One <tr> per listing
        frame = response.xpath("//*[@id='infolist']/table/tr")
        for one in frame:
            # Create a fresh item for every row instead of reusing one object
            item = ZuYuItem()

            # Title, method 1: join the text nodes directly under the link
            raw_title = blank.join(one.xpath("./td[@class='t']/a/text()").extract())
            title = re.sub(pattern_space, blank, raw_title)
            # Method 2: take the string value of the link
            # title = one.xpath("string(./td[@class='t']/a)").extract_first()
            item["title"] = title
            self.log("title: %s" % title)

            # Type and nearby location
            raw_kind_and_approach = blank.join(one.xpath("./td[@class='t']/text()").extract())
            kind_and_approach = re.sub(pattern_space, blank, raw_kind_and_approach)
            k_and_a_list = kind_and_approach.split(forward_slash)
            kind = ""
            approach = ""
            for thing in k_and_a_list:
                if u"类型" in thing:
                    kind = thing.split(colon_en)[-1]
                elif u"临近" in thing:
                    approach = thing.split(colon_en)[-1]
            item["kind"] = kind
            item["approach"] = approach

            # Transfer fee and rent (both live in the third cell)
            transfer_fee_and_rent = one.xpath("./td[position()=3]").extract_first(default=blank)
            t_and_r_list = pattern_line.split(re.sub(pattern_space, blank, transfer_fee_and_rent))
            # Handle listings where the transfer fee, the rent, or both are negotiable (面议)
            t_and_r_list = t_and_r_list if len(t_and_r_list) == 2 else t_and_r_list * 2
            self.log("t_and_r_list: %s" % t_and_r_list)
            transfer_fee = re.sub(pattern_label, blank, t_and_r_list[0]).split(colon_zh)[-1]
            rent = re.sub(pattern_label, blank, t_and_r_list[1]).split(colon_zh)[-1]
            item["transfer_fee"] = transfer_fee
            item["rent"] = rent

            # Area (fourth cell): strip the tags, then the whitespace
            raw_area = one.xpath("./td[position()=4]").extract_first(default=blank)
            area = re.sub(pattern_label, blank, raw_area)
            item["area"] = re.sub(pattern_space, blank, area)

            yield item

Run scrapy crawl bj_58 -o res.csv on the command line to save the results to res.csv.
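One detail worth checking in any parse method that yields items from a loop is whether each row gets its own item object. The sketch below illustrates the difference with plain dicts standing in for `ZuYuItem` (an assumption made so the example runs without Scrapy; the function names and row values are hypothetical).

```python
def parse_shared(rows):
    """Yield one dict object that is mutated on every iteration."""
    item = {}
    for title in rows:
        item["title"] = title
        yield item


def parse_fresh(rows):
    """Yield a new dict for every row."""
    for title in rows:
        item = {"title": title}
        yield item


rows = ["shop A", "shop B"]

# Collecting the results keeps references to whatever objects were yielded.
shared = list(parse_shared(rows))
fresh = list(parse_fresh(rows))

print(shared)  # both entries are the same object, holding the last row
print(fresh)   # one distinct entry per row
```

Whether a shared item actually causes wrong output in a given Scrapy run depends on how pipelines and exporters consume items; creating the item inside the loop avoids the question entirely.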
