Preface

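As a fairly mature distributed system, Hadoop is usually thought of as a place to store massive amounts of data, which sets it clearly apart from a traditional RDBMS such as MySQL. Hadoop has a natural advantage here: it can store PB-scale data, limited only by how many machines you have, and it takes care of mechanisms such as replica backups for you. The Hadoop stack as a whole really is well rounded. As for data volume, at a company on the scale of BAT (Baidu, Alibaba, Tencent) the daily data increment can reach roughly the TB level. That makes one thing very important to keep under control: data about how the data is used. Put plainly, this means how many blocks you are storing, how many files and directories, how many PB of disk are used, how much space remains, and so on. This information is very helpful for understanding the cluster's current data usage and for analysing future trends. Hadoop itself will not collect it for you, so you have to find a way to get it yourself. How to get this data is what I want to share today.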

Which data to collect

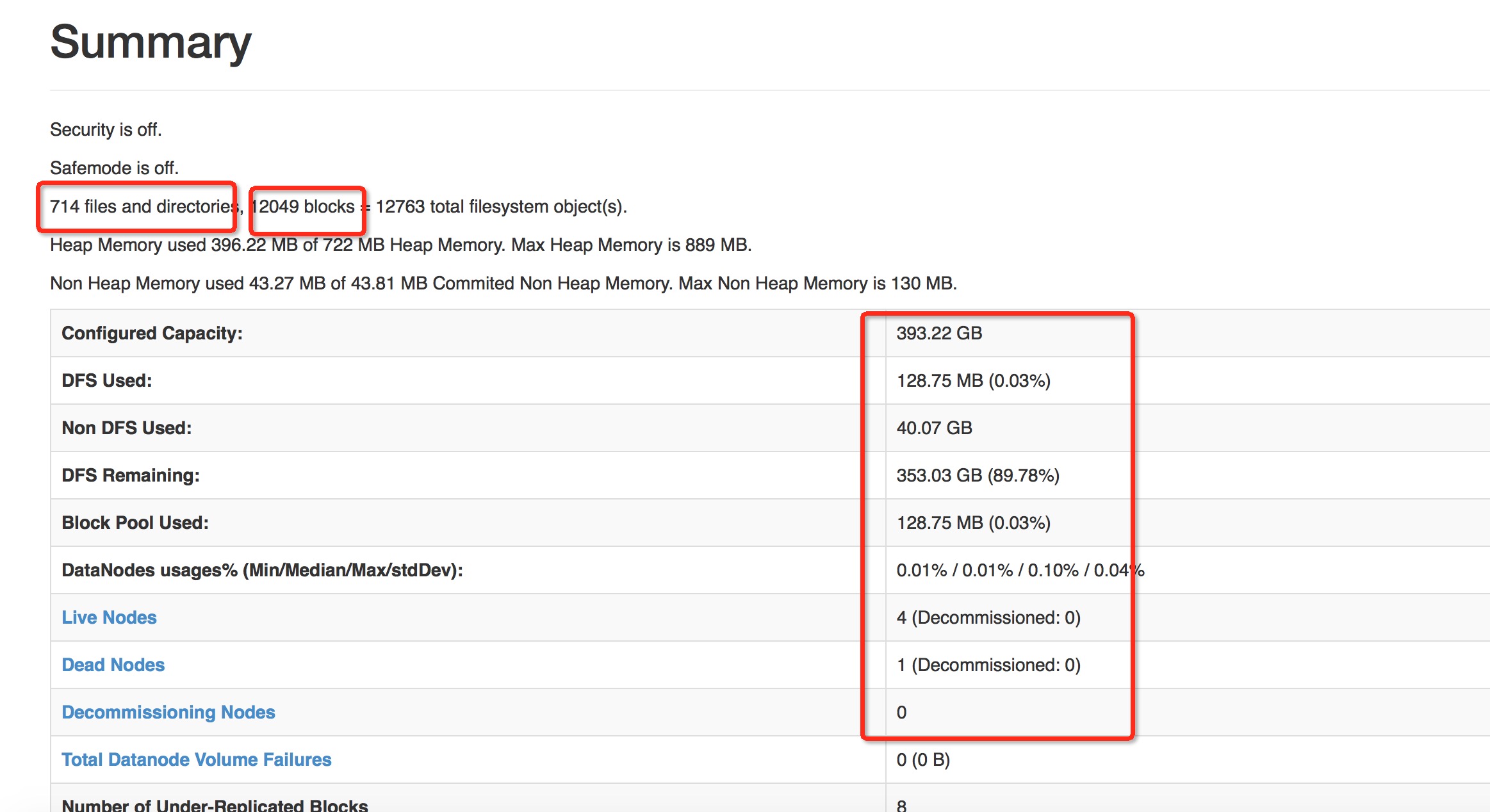

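More data, or finer-grained data, is not automatically better, because you have to weigh the cost. Here the goal is clear: we only need macro-level figures on overall usage, such as DFS resource usage. For the specific items, refer to the data on the NameNode web page; the items shown in the figure are good candidates.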

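These figures are enough to show the cluster's current data usage and to support analysis of future trends. So we take them as the target and do whatever it takes to obtain them.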

How to collect the data

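So we have a target, but how do we get the data? If you want to go the hdfs command-line route, that is certainly possible. Anyone who has studied the Hadoop 2.7.1 source will know that the release ships a number of commands for analysing the fsimage, which compute file and block information for the cluster offline. These commands all start with bin/hdfs oiv [-args]; oiv is short for Offline Image Viewer.

This approach has drawbacks, though: it is inefficient and performs poorly. Every analysis of an fsimage file starts from scratch, and as the fsimage grows, the computation takes longer and longer. The command is also an offline analysis tool by nature and is not suited to running on a periodic schedule. Suppose we later want a shorter sampling interval, say file and block counts every 30 minutes; what then?

The best approach is therefore to get the data the same way the NameNode page does. Refreshing that page always shows updated figures, which means the page has its own way of obtaining them; once we find that code, the job is basically done. I will skip the details of how I tracked the code down and go straight to the result. HDFS indeed has no such command in dfsAdmin, and no corresponding RPC interface either. I eventually found the code through dfshealth.html, the file that drives this page. It is the code in the class below, which ultimately obtains the information through HTTP requests.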

/**
 * Licensed to the Apache Software Foundation (ASF) under one
 * or more contributor license agreements. See the NOTICE file
 * distributed with this work for additional information
 * regarding copyright ownership. The ASF licenses this file
 * to you under the Apache License, Version 2.0 (the
 * "License"); you may not use this file except in compliance
 * with the License. You may obtain a copy of the License at
 *
 * http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */
package org.apache.hadoop.hdfs.server.namenode;

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.URL;
import java.net.URLConnection;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;
import java.util.Map.Entry;
import java.util.Set;

import javax.management.MalformedObjectNameException;

import org.apache.commons.logging.Log;
import org.apache.commons.logging.LogFactory;
import org.apache.hadoop.classification.InterfaceAudience;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hdfs.DFSConfigKeys;
import org.apache.hadoop.hdfs.DFSUtil;
import org.apache.hadoop.hdfs.DFSUtil.ConfiguredNNAddress;
import org.apache.hadoop.hdfs.protocol.DatanodeInfo.AdminStates;
import org.apache.hadoop.util.StringUtils;
import org.codehaus.jackson.JsonNode;
import org.codehaus.jackson.map.ObjectMapper;
import org.codehaus.jackson.type.TypeReference;
import org.znerd.xmlenc.XMLOutputter;

import com.google.common.base.Charsets;

/**
 * This class generates the data that is needed to be displayed on cluster web
 * console.
 */
@InterfaceAudience.Private
class ClusterJspHelper {
  private static final Log LOG = LogFactory.getLog(ClusterJspHelper.class);
  public static final String OVERALL_STATUS = "overall-status";
  public static final String DEAD = "Dead";
  private static final String JMX_QRY =
      "/jmx?qry=Hadoop:service=NameNode,name=NameNodeInfo";

  /**
   * JSP helper function that generates cluster health report. When
   * encountering exception while getting Namenode status, the exception will
   * be listed on the page with corresponding stack trace.
   */
  ClusterStatus generateClusterHealthReport() {
    ClusterStatus cs = new ClusterStatus();
    Configuration conf = new Configuration();
    List<ConfiguredNNAddress> nns = null;
    try {
      nns = DFSUtil.flattenAddressMap(
          DFSUtil.getNNServiceRpcAddresses(conf));
    } catch (Exception e) {
      // Could not build cluster status
      cs.setError(e);
      return cs;
    }

    // Process each namenode and add it to ClusterStatus
    for (ConfiguredNNAddress cnn : nns) {
      InetSocketAddress isa = cnn.getAddress();
      NamenodeMXBeanHelper nnHelper = null;
      try {
        nnHelper = new NamenodeMXBeanHelper(isa, conf);
        String mbeanProps= queryMbean(nnHelper.httpAddress, conf);
        NamenodeStatus nn = nnHelper.getNamenodeStatus(mbeanProps);
        if (cs.clusterid.isEmpty() || cs.clusterid.equals("")) { // Set clusterid only once
          cs.clusterid = nnHelper.getClusterId(mbeanProps);
        }
        cs.addNamenodeStatus(nn);
      } catch ( Exception e ) {
        // track exceptions encountered when connecting to namenodes
        cs.addException(isa.getHostName(), e);
        continue;
      }
    }
    return cs;
  }

  /**
   * Helper function that generates the decommissioning report. Connect to each
   * Namenode over http via JmxJsonServlet to collect the data nodes status.
   */
  DecommissionStatus generateDecommissioningReport() {
    String clusterid = "";
    Configuration conf = new Configuration();
    List<ConfiguredNNAddress> cnns = null;
    try {
      cnns = DFSUtil.flattenAddressMap(
          DFSUtil.getNNServiceRpcAddresses(conf));
    } catch (Exception e) {
      // catch any exception encountered other than connecting to namenodes
      DecommissionStatus dInfo = new DecommissionStatus(clusterid, e);
      return dInfo;
    }

    // Outer map key is datanode. Inner map key is namenode and the value is
    // decom status of the datanode for the corresponding namenode
    Map<String, Map<String, String>> statusMap =
        new HashMap<String, Map<String, String>>();

    // Map of exceptions encountered when connecting to namenode
    // key is namenode and value is exception
    Map<String, Exception> decommissionExceptions =
        new HashMap<String, Exception>();

    List<String> unreportedNamenode = new ArrayList<String>();
    for (ConfiguredNNAddress cnn : cnns) {
      InetSocketAddress isa = cnn.getAddress();
      NamenodeMXBeanHelper nnHelper = null;
      try {
        nnHelper = new NamenodeMXBeanHelper(isa, conf);
        String mbeanProps= queryMbean(nnHelper.httpAddress, conf);
        if (clusterid.equals("")) {
          clusterid = nnHelper.getClusterId(mbeanProps);
        }
        nnHelper.getDecomNodeInfoForReport(statusMap, mbeanProps);
      } catch (Exception e) {
        // catch exceptions encountered while connecting to namenodes
        String nnHost = isa.getHostName();
        decommissionExceptions.put(nnHost, e);
        unreportedNamenode.add(nnHost);
        continue;
      }
    }
    updateUnknownStatus(statusMap, unreportedNamenode);
    getDecommissionNodeClusterState(statusMap);
    return new DecommissionStatus(statusMap, clusterid,
        getDatanodeHttpPort(conf), decommissionExceptions);
  }

  /**
   * Based on the state of the datanode at each namenode, marks the overall
   * state of the datanode across all the namenodes, to one of the following:
   * <ol>
   * <li>{@link DecommissionStates#DECOMMISSIONED}</li>
   * <li>{@link DecommissionStates#DECOMMISSION_INPROGRESS}</li>
   * <li>{@link DecommissionStates#PARTIALLY_DECOMMISSIONED}</li>
   * <li>{@link DecommissionStates#UNKNOWN}</li>
   * </ol>
   *
   * @param statusMap
   *          map whose key is datanode, value is an inner map with key being
   *          namenode, value being decommission state.
   */
  private void getDecommissionNodeClusterState(
      Map<String, Map<String, String>> statusMap) {
    if (statusMap == null || statusMap.isEmpty()) {
      return;
    }

    // For each datanodes
    Iterator<Entry<String, Map<String, String>>> it =
        statusMap.entrySet().iterator();
    while (it.hasNext()) {
      // Map entry for a datanode:
      // key is namenode, value is datanode status at the namenode
      Entry<String, Map<String, String>> entry = it.next();
      Map<String, String> nnStatus = entry.getValue();
      if (nnStatus == null || nnStatus.isEmpty()) {
        continue;
      }

      boolean isUnknown = false;
      int unknown = 0;
      int decommissioned = 0;
      int decomInProg = 0;
      int inservice = 0;
      int dead = 0;
      DecommissionStates overallState = DecommissionStates.UNKNOWN;

      // Process a datanode state from each namenode
      for (Map.Entry<String, String> m : nnStatus.entrySet()) {
        String status = m.getValue();
        if (status.equals(DecommissionStates.UNKNOWN.toString())) {
          isUnknown = true;
          unknown++;
        } else if (status.equals(AdminStates.DECOMMISSION_INPROGRESS.toString())) {
          decomInProg++;
        } else if (status.equals(AdminStates.DECOMMISSIONED.toString())) {
          decommissioned++;
        } else if (status.equals(AdminStates.NORMAL.toString())) {
          inservice++;
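To make the HTTP route concrete, here is a minimal sketch of querying the same JMX path that JMX_QRY names in the listing and pulling a few numbers out of the response. It is not the code from ClusterJspHelper. The class name NameNodeJmxSketch and its methods are hypothetical, the regex extraction is a stand-in for a proper JSON parser such as the Jackson ObjectMapper the listing imports, and the attribute names used (Total, Used, Free, TotalBlocks, TotalFiles) are what I would expect the NameNodeInfo bean to expose, so check them against the /jmx output of your own NameNode. The sample JSON in main is made up.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.URL;
import java.net.URLConnection;
import java.nio.charset.StandardCharsets;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: reads a few NameNodeInfo attributes from the /jmx servlet. */
class NameNodeJmxSketch {
  // Same query path as JMX_QRY in the ClusterJspHelper listing.
  static final String JMX_QRY = "/jmx?qry=Hadoop:service=NameNode,name=NameNodeInfo";

  /** Builds the full URL; host and HTTP port are supplied by the caller. */
  static String buildUrl(String host, int port) {
    return "http://" + host + ":" + port + JMX_QRY;
  }

  /** Fetches the raw JSON text returned by the servlet. */
  static String fetch(String url) throws IOException {
    URLConnection conn = new URL(url).openConnection();
    conn.setConnectTimeout(5000);
    conn.setReadTimeout(5000);
    StringBuilder sb = new StringBuilder();
    try (BufferedReader in = new BufferedReader(
        new InputStreamReader(conn.getInputStream(), StandardCharsets.UTF_8))) {
      String line;
      while ((line = in.readLine()) != null) {
        sb.append(line);
      }
    }
    return sb.toString();
  }

  /**
   * Extracts an integer attribute such as "TotalBlocks" by exact quoted name.
   * Returns -1 if the attribute is absent. Assumption: a regex is good enough
   * for a sketch; production code should use a real JSON parser.
   */
  static long readLong(String json, String attribute) {
    Pattern p = Pattern.compile("\"" + Pattern.quote(attribute) + "\"\\s*:\\s*(-?\\d+)");
    Matcher m = p.matcher(json);
    return m.find() ? Long.parseLong(m.group(1)) : -1L;
  }

  public static void main(String[] args) throws IOException {
    // With two arguments (host, port) query a live NameNode; otherwise parse
    // a made-up sample so the sketch runs without a cluster.
    String json = args.length == 2
        ? fetch(buildUrl(args[0], Integer.parseInt(args[1])))
        : "{\"beans\":[{\"Total\":1000,\"Used\":250,\"TotalBlocks\":42,\"TotalFiles\":7}]}";
    for (String attr : new String[] {"Total", "Used", "Free", "TotalBlocks", "TotalFiles"}) {
      System.out.println(attr + " = " + readLong(json, attr));
    }
  }
}
```

Because this is only an HTTP GET, it is cheap enough to run on a short schedule, which is the advantage over re-analysing the fsimage each time.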

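This article describes how to obtain data-usage information for a Hadoop HDFS cluster, including the number of files, directories and blocks and the amount of disk used, and discusses how this data is applied in monitoring and trend prediction. By analysing how the NameNode page obtains its data, the figures can be fetched efficiently and stored in a database, then used to generate line charts showing the growth of files and blocks and the trend in disk usage, to support management and capacity-expansion decisions.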
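As a rough illustration of how stored samples could feed a capacity-expansion decision, the sketch below estimates average growth between two samples and projects how long the remaining space would last at that rate. Everything in it is an assumption for illustration: the class CapacityTrendSketch, the Sample type and the plain linear projection are not taken from the article, and real usage rarely grows linearly.

```java
/** Hypothetical helper: linear projection of DFS usage from two stored samples. */
class CapacityTrendSketch {
  private static final double MILLIS_PER_DAY = 86_400_000.0;

  /** One stored data point: sample time in milliseconds and DFS bytes used. */
  static final class Sample {
    final long timeMillis;
    final long usedBytes;

    Sample(long timeMillis, long usedBytes) {
      this.timeMillis = timeMillis;
      this.usedBytes = usedBytes;
    }
  }

  /** Average growth in bytes per day between an earlier and a later sample. */
  static double bytesPerDay(Sample first, Sample last) {
    double days = (last.timeMillis - first.timeMillis) / MILLIS_PER_DAY;
    if (days <= 0) {
      throw new IllegalArgumentException("samples must be in increasing time order");
    }
    return (last.usedBytes - first.usedBytes) / days;
  }

  /**
   * Days until usage reaches totalBytes at the given rate.
   * Returns positive infinity when usage is flat or shrinking.
   */
  static double daysUntilFull(long totalBytes, Sample latest, double bytesPerDay) {
    if (bytesPerDay <= 0) {
      return Double.POSITIVE_INFINITY;
    }
    return (totalBytes - latest.usedBytes) / bytesPerDay;
  }
}
```

With samples collected every 30 minutes, the first and last points of a chosen window (for example the past week) would be passed to bytesPerDay, and the result charted alongside the raw usage line.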
