# coding=utf-8

from numpy import *

import matplotlib

import matplotlib.pyplot as plt

from matplotlib.patches import Circle

#加载数据集

def loadDataSet(fileName):

dataMat=[]

labelMat=[]

fr=open(fileName)

for line in fr.readlines():

lineArr=line.strip().split('\t')

dataMat.append([float(lineArr[0]),float(lineArr[1])])

labelMat.append(float(lineArr[2]))

return dataMat,labelMat

#定义核映射函数

def kernelTrans(X,A,kTup):

m,n=shape(X)

K=mat(zeros((m,1)))# 结果

if kTup[0]=="lin":#线性核心

K=X * A.T

elif kTup[0]=='rbf':

for j in range(m):

deltaRow=X[j,:]-A

K[j]=deltaRow*deltaRow.T

K=exp(K/(-1*kTup[1]**2))

else:

raise("参数错误")

return K

#i为alpha_1在样本集合中的下标 ,需要在找一个不同的alpha_2 其下表不能为i

def selectJrand(i,m):

j=i

while(j==i):

j=int(random.uniform(0,m))

return j

#alpha需要满足一定的约束条件 0<=alpha<=C sum(alpha*y)=0

def clipAlpha(aj,H,L):

if aj>H:

aj=H

if L>aj:

aj=L

return aj

def calcWs(alphas,dataArr,classLabels):

X=mat(dataArr);labelMat=mat(classLabels).transpose()

m,n=shape(X)

w=zeros((n,1))

for i in range(m):

w+=multiply(alphas[i]*labelMat[i],X[i,:].T)#对应的元素相乘

return w

class optStruct:

def __init__(self,dataMatIn,classLabels,C,toler,kTup):

self.X=dataMatIn#输入矩阵 样本矩阵

self.labelMat=classLabels#样本标签

self.C=C

self.tol=toler

self.m=shape(dataMatIn)[0]#样本容量

self.alphas=mat(zeros((self.m,1)))#生成一个全是0的alphas向量

self.b=0

self.eCache=mat(zeros((self.m,2)))#误差缓存 eCache第一列给出eCache是否有效的标志位,第二列给出的是实际值

self.K=mat(zeros((self.m,self.m)))

for i in range(self.m):

self.K[:,i]=kernelTrans(self.X,self.X[i,:],kTup)

def calcEk(oS,k):#计算给定alpha的时候的误差 k用来指定计算哪个样本的误差

#fXk=float(multiply(oS.alphas,oS.labelMat).T*(oS.X*oS.X[k,:].T))+oS.b

fXk=float(multiply(oS.alphas,oS.labelMat).T*oS.K[:,k]+oS.b)

Ek=fXk-float(oS.labelMat[k])

return Ek

def selectJ(i,oS,Ei):

#选择第二个/内循环的alpha值 选择合适的第二个alphas值 是的每次有优化的时候的步长都最大

maxK=-1

maxDeltaE=0

Ej=0

oS.eCache[i]=[1,Ei]#先设置为有效的

validEcacheList=nonzero(oS.eCache[:,0].A)[0]

if (len(validEcacheList))>1:

for k in validEcacheList:

if k==i:

continue

Ek=calcEk(oS,k)

deltaE=abs(Ei-Ek)

if (deltaE>maxDeltaE):

maxK=k

maxDeltaE=deltaE

Ej=Ek

return maxK,Ej

else:#如果是第一次 就随机选择

j=selectJrand(i,oS.m)

Ej=calcEk(oS,j)

return j,Ej

#更新缓存

def updateEk(oS,k):

Ek=calcEk(oS,k)

oS.eCache[k]=[1,Ek]

#SMO内部函数

def innerL(i,oS):

Ei=calcEk(oS,i)#计算第一个alpha的误差

# if ((oS.labelMat[i].Ei<-oS.tol) and (oS.alphas[i]<oS.C)) or \

# ((oS.labelMat[i].Ei>oS.tol) and( oS.alphas[i]>0)):

if ((oS.labelMat[i]*Ei < -oS.tol) and (oS.alphas[i] < oS.C)) or ((oS.labelMat[i]*Ei > oS.tol) and (oS.alphas[i] > 0)):

#SMO必须要满足KKT条件

j,Ej=selectJ(i,oS,Ei)#选择具有最大步长的第二个alpha

alphaIold=oS.alphas[i].copy()

alphaJold=oS.alphas[j].copy()

if (oS.labelMat[i]!=oS.labelMat[j]):

L=max(0,oS.alphas[j]-oS.alphas[i])

H=min(oS.C,oS.C+oS.alphas[j]-oS.alphas[i])

else:

L=max(0,oS.alphas[j]+oS.alphas[i]-oS.C)

H=min(oS.C,oS.alphas[j]+oS.alphas[i])

# eta=2.0*oS.X[i,:]*oS.X[j,:].T-oS.X[i,:]*oS.X[i,:].T-oS.X[j,:]*oS.X[j,:].T

eta=2.0*oS.K[i,j]-oS.K[i,i]-oS.K[j,j]

oS.alphas[j]-=oS.labelMat[j]*(Ei-Ej)/eta

oS.alphas[j]=clipAlpha(oS.alphas[j],H,L)

updateEk(oS,j)

if (abs(oS.alphas[j]-alphaJold)<0.00001):

return 0

oS.alphas[i]+=oS.labelMat[j]*oS.labelMat[i]*(alphaJold-oS.alphas[j])

updateEk(oS,i)

b1 = oS.b - Ei- oS.labelMat[i]*(oS.alphas[i]-alphaIold)*oS.K[i,i] - oS.labelMat[j]*(oS.alphas[j]-alphaJold)*oS.K[i,j]

b2 = oS.b - Ej- oS.labelMat[i]*(oS.alphas[i]-alphaIold)*oS.K[i,j] - oS.labelMat[j]*(oS.alphas[j]-alphaJold)*oS.K[j,j]

# b1 = oS.b - Ei- oS.labelMat[i]*(oS.alphas[i]-alphaIold)*(oS.X[i,:]*oS.X[i,:].T) - oS.labelMat[j]*(oS.alphas[j]-alphaJold)*(oS.X[i,:]*oS.X[j,:].T)#oS.K[i,j]

#b2 = oS.b - Ej- oS.labelMat[i]*(oS.alphas[i]-alphaIold)*(oS.X[i,:]*oS.X[j,:].T) - oS.labelMat[j]*(oS.alphas[j]-alphaJold)*(oS.X[j,:]*oS.X[j,:].T)#oS.K[j,j]

if (0 < oS.alphas[i]) and (oS.C > oS.alphas[i]): oS.b = b1

elif (0 < oS.alphas[j]) and (oS.C > oS.alphas[j]): oS.b = b2

else: oS.b = (b1 + b2)/2.0

return 1

else:

return 0

#选择第一个alpha的策略,在所有的数据集上进行单边扫描,在非边界上进行扫描

def smoP(dataMatIn, classLabels, C, toler, maxIter,kTup=('lin', 0)): #full Platt SMO

oS = optStruct(mat(dataMatIn),mat(classLabels).transpose(),C,toler,kTup)

iter = 0

entireSet = True; alphaPairsChanged = 0

while (iter < maxIter) and ((alphaPairsChanged > 0) or (entireSet)):# entireSet控制在边界上和整个数据集上进行选择alpha

alphaPairsChanged = 0

if entireSet: #go over all

for i in range(oS.m):

alphaPairsChanged += innerL(i,oS)

# print "fullSet, iter: %d i:%d, pairs changed %d" % (iter,i,alphaPairsChanged)

iter += 1

else:#go over non-bound (railed) alphas

nonBoundIs = nonzero((oS.alphas.A > 0) * (oS.alphas.A < C))[0]

for i in nonBoundIs:

alphaPairsChanged += innerL(i,oS)

#print "non-bound, iter: %d i:%d, pairs changed %d" % (iter,i,alphaPairsChanged)

iter += 1

if entireSet: entireSet = False #toggle entire set loop

elif (alphaPairsChanged == 0): entireSet = True

print "iteration number: %d" % iter

return oS.b,oS.alphas

#利用高斯核将数据映射到高纬空间

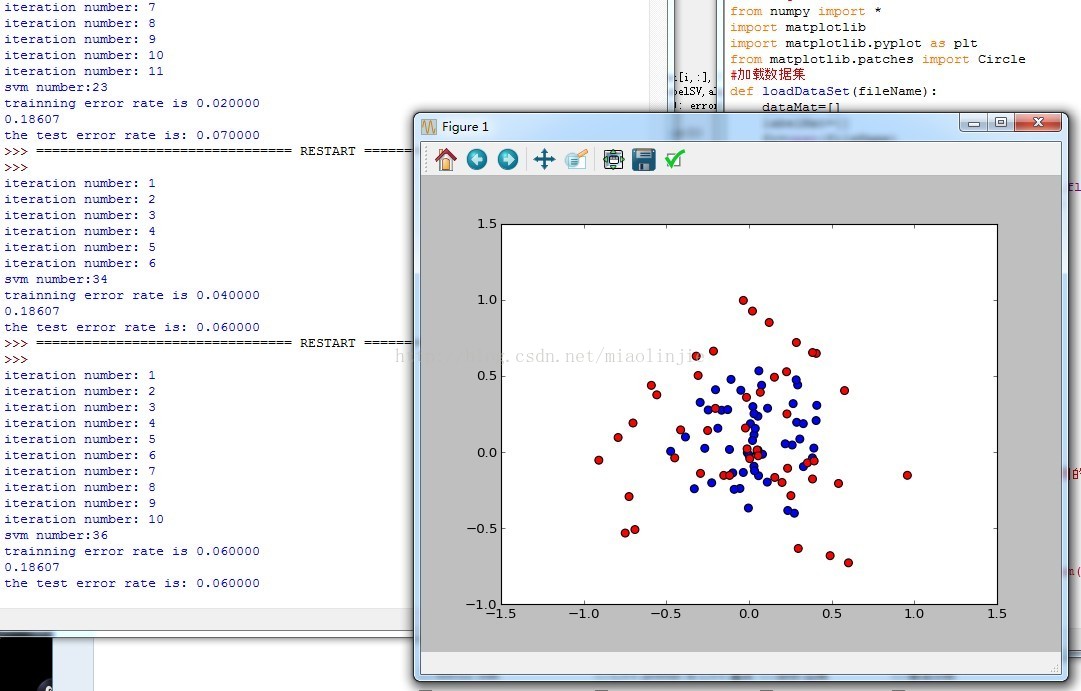

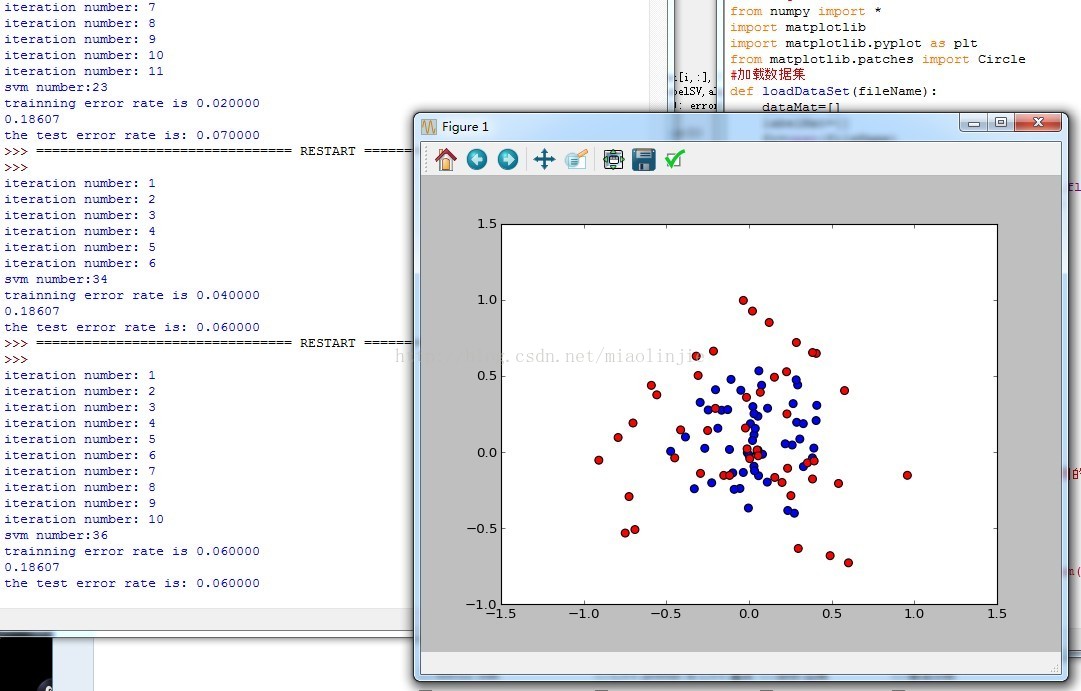

def testRbf(k1=2.3):

dataArr,labelArr=loadDataSet("testSetRBF.txt")

#训练数据集

b,alphas=smoP(dataArr,labelArr,200,0.0001,10000,('rbf',k1))

#print alphas

dataMat=mat(dataArr)

labelMat=mat(labelArr).transpose()

svInd=nonzero(alphas.A>0)[0]#找到支持向量

sVs=dataMat[svInd]

labelSV=labelMat[svInd]

print "svm number:%d"%shape(sVs)[0]

m,n=shape(dataMat)

errorCount=0#训练集的误差

for i in range(m):

#预测为 y=sum(yi*alphai*ker(xi,x))+b

kernelEval=kernelTrans(sVs,dataMat[i,:],('rbf',k1))

predict=kernelEval.T*multiply(labelSV,alphas[svInd])+b

if sign(predict)!=sign(labelArr[i]):

errorCount+=1

print "trainning error rate is %f"%(float(errorCount)/m)

#测试集合的误差

dataArr,labelArr = loadDataSet('testSetRBF2.txt')

errorCount = 0

datMat=mat(dataArr); labelMat = mat(labelArr).transpose()

xcord0=[]

ycord0=[]

xcord1=[]

ycord1=[]

m,n = shape(datMat)

for i in range(m):

kernelEval = kernelTrans(sVs,datMat[i,:],('rbf', k1))

predict=kernelEval.T * multiply(labelSV,alphas[svInd]) + b

if sign(predict)!=sign(labelArr[i]): errorCount += 1

if sign(predict)==1:

xcord0.extend(datMat[i,:].tolist())

else:

xcord1.extend(dataMat[i,:].tolist())

# print xcord0

fig=plt.figure()

ax=fig.add_subplot(111)

print xcord0[0][1]

s1=shape(xcord0)[0]

for i in range(s1):

ax.scatter(xcord0[i][0],xcord0[i][1],marker='o',s=50)

s1=shape(xcord1)[0]

for i in range(s1):

ax.scatter(xcord1[i][0],xcord1[i][1],marker='o',s=50,c='red')

# ax.scatter(xcord0[:,0].tolist(),xcord0[:,1].tolist(),marker='s',s=50)

# ax.scatter(xcord1[:,0],xcord1[:,1],marker='o',s=50,c='red')

print "the test error rate is: %f" % (float(errorCount)/m)

plt.show()

return alphas,b

testRbf()

956

956

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?