Training a py-faster-rcnn model on your own data

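Environment:

- Ubuntu 14.04

- CUDA 7.5

- Python 2.7

- OpenCV 2.0 or later

For configuring and installing caffe and py-faster-rcnn, see my other post: "深度学习框架caffe及py-faster-rcnn详细配置安装过程" (a detailed installation and configuration walkthrough for caffe and py-faster-rcnn).

For building the training dataset, see this post: "将数据集做成VOC2007格式用于Faster-RCNN训练" (converting a dataset to VOC2007 format for Faster-RCNN training).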

1. Download the VOC2007 dataset

Baidu Cloud link: http://pan.baidu.com/s/1gfdSFRX

Extract the archive and place the dataset under py-faster-rcnn/data. Then swap in your own training data: replace the Annotations, ImageSets and JPEGImages folders in py-faster-rcnn/data/VOCdevkit2007/VOC2007 with your own.

The directory structure looks like this:

wlw@wlw:~/language/caffe/py-faster-rcnn/data/VOCdevkit2007$ ls
results VOC2007

wlw@wlw:~/language/caffe/py-faster-rcnn/data/VOCdevkit2007/results/VOC2007$ ls
Layout Main Segmentation

wlw@wlw:~/language/caffe/py-faster-rcnn/data/VOCdevkit2007/VOC2007$ ls
Annotations ImageSets JPEGImages

Annotations holds all the XML annotation files.

JPEGImages holds all the training images.

Main (under ImageSets) holds 4 txt files: test.txt is the test set, train.txt is the training set, val.txt is the validation set, and trainval.txt is the training and validation sets combined.
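If the four list files still have to be produced, a minimal sketch along the following lines could do it. It assumes every image has a matching XML file in Annotations. The function name write_image_sets, the split ratios and the fixed seed are illustrative choices, not something py-faster-rcnn prescribes.

```python
import os
import random


def write_image_sets(voc_root, trainval_ratio=0.8, train_ratio=0.75, seed=0):
    """Write train/val/trainval/test lists into <voc_root>/ImageSets/Main.

    The ratios are illustrative assumptions, not values from this post.
    """
    ann_dir = os.path.join(voc_root, 'Annotations')
    main_dir = os.path.join(voc_root, 'ImageSets', 'Main')
    if not os.path.isdir(main_dir):
        os.makedirs(main_dir)

    # Image ids are the annotation file names without the .xml extension.
    ids = sorted(os.path.splitext(name)[0]
                 for name in os.listdir(ann_dir) if name.endswith('.xml'))
    random.Random(seed).shuffle(ids)

    n_trainval = int(len(ids) * trainval_ratio)
    n_train = int(n_trainval * train_ratio)
    splits = {
        'trainval': ids[:n_trainval],
        'train': ids[:n_train],
        'val': ids[n_train:n_trainval],
        'test': ids[n_trainval:],
    }
    # One image id per line, as in the VOC list files.
    for split_name, subset in splits.items():
        with open(os.path.join(main_dir, split_name + '.txt'), 'w') as f:
            for image_id in sorted(subset):
                f.write(image_id + '\n')
    return splits
```

Calling write_image_sets('data/VOCdevkit2007/VOC2007') from the py-faster-rcnn root would write the four files next to your annotations; adjust the ratios to suit the size of your dataset.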

2. Changes to make before training

(1) Edit py-faster-rcnn/models/pascal_voc/ZF/faster_rcnn_alt_opt/stage1_fast_rcnn_train.pt

layer {
  name: 'data'
  type: 'Python'
  top: 'data'
  top: 'rois'
  top: 'labels'
  top: 'bbox_targets'
  top: 'bbox_inside_weights'
  top: 'bbox_outside_weights'
  python_param {
    module: 'roi_data_layer.layer'
    layer: 'RoIDataLayer'
    param_str: "'num_classes': 2"  # set per your training set: number of classes + 1 (background)
  }
}

layer {
  name: "cls_score"
  type: "InnerProduct"
  bottom: "fc7"
  top: "cls_score"
  param { lr_mult: 1.0 }
  param { lr_mult: 2.0 }
  inner_product_param {
    num_output: 2  # set per your training set: number of classes + 1 (background)
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}

layer {
  name: "bbox_pred"
  type: "InnerProduct"
  bottom: "fc7"
  top: "bbox_pred"
  param { lr_mult: 1.0 }
  param { lr_mult: 2.0 }
  inner_product_param {
    num_output: 8  # set per your training set: (number of classes + 1) * 4
    weight_filler {
      type: "gaussian"
      std: 0.001
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}

(2) Edit py-faster-rcnn/models/pascal_voc/ZF/faster_rcnn_alt_opt/stage1_rpn_train.pt

layer {
  name: 'input-data'
  type: 'Python'
  top: 'data'
  top: 'im_info'
  top: 'gt_boxes'
  python_param {
    module: 'roi_data_layer.layer'
    layer: 'RoIDataLayer'
    param_str: "'num_classes': 2"  # set per your training set: number of classes + 1 (background)
  }
}

(3) Edit py-faster-rcnn/models/pascal_voc/ZF/faster_rcnn_alt_opt/stage2_fast_rcnn_train.pt

layer {
  name: 'data'
  type: 'Python'
  top: 'data'
  top: 'rois'
  top: 'labels'
  top: 'bbox_targets'
  top: 'bbox_inside_weights'
  top: 'bbox_outside_weights'
  python_param {
    module: 'roi_data_layer.layer'
    layer: 'RoIDataLayer'
    param_str: "'num_classes': 2"  # set per your training set: number of classes + 1 (background)
  }
}

layer {
  name: "cls_score"
  type: "InnerProduct"
  bottom: "fc7"
  top: "cls_score"
  param { lr_mult: 1.0 }
  param { lr_mult: 2.0 }
  inner_product_param {
    num_output: 2  # set per your training set: number of classes + 1 (background)
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}

layer {
  name: "bbox_pred"
  type: "InnerProduct"
  bottom: "fc7"
  top: "bbox_pred"
  param { lr_mult: 1.0 }
  param { lr_mult: 2.0 }
  inner_product_param {
    num_output: 8  # set per your training set: (number of classes + 1) * 4
    weight_filler {
      type: "gaussian"
      std: 0.001
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}

(4) Edit py-faster-rcnn/models/pascal_voc/ZF/faster_rcnn_alt_opt/stage2_rpn_train.pt

layer {
  name: 'input-data'
  type: 'Python'
  top: 'data'
  top: 'im_info'
  top: 'gt_boxes'
  python_param {
    module: 'roi_data_layer.layer'
    layer: 'RoIDataLayer'
    param_str: "'num_classes': 2"  # set per your training set: number of classes + 1 (background)
  }
}

(5) Edit py-faster-rcnn/models/pascal_voc/ZF/faster_rcnn_alt_opt/faster_rcnn_test.pt

layer {
  name: "cls_score"
  type: "InnerProduct"
  bottom: "fc7"
  top: "cls_score"
  inner_product_param {
    num_output: 2  # set per your training set: number of classes + 1 (background)
  }
}

layer {
  name: "bbox_pred"
  type: "InnerProduct"
  bottom: "fc7"
  top: "bbox_pred"
  inner_product_param {
    num_output: 8  # set per your training set: (number of classes + 1) * 4
  }
}

(6) Edit py-faster-rcnn/lib/datasets/pascal_voc.py

class pascal_voc(imdb):
    def __init__(self, image_set, year, devkit_path=None):
        imdb.__init__(self, 'voc_' + year + '_' + image_set)
        self._year = year
        self._image_set = image_set
        self._devkit_path = self._get_default_path() if devkit_path is None \
                            else devkit_path
        self._data_path = os.path.join(self._devkit_path, 'VOC' + self._year)
        self._classes = ('__background__', # always index 0
                         'your_label_1', 'your_label_2', 'your_label_3', 'your_label_4'
                        )

In the tuple

        self._classes = ('__background__', # always index 0
                         'your_label_1', 'your_label_2', 'your_label_3', 'your_label_4'
                        )

replace the placeholder labels with the labels of your own dataset.
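The numbers edited in steps (1) to (5) all follow from this class tuple, so they can be derived rather than counted by hand. The sketch below shows the arithmetic; the helper name prototxt_sizes and the label 'building' are only illustrative.

```python
def prototxt_sizes(classes):
    """Derive the prototxt values from a class tuple that already
    contains '__background__' as its first entry."""
    num_classes = len(classes)  # labels + 1 for background
    return {
        'num_classes': num_classes,               # param_str in the data layers
        'cls_score_num_output': num_classes,      # one score per class
        'bbox_pred_num_output': 4 * num_classes,  # 4 box coordinates per class
    }


# A single-label dataset, which matches the values 2 and 8 used above.
single = prototxt_sizes(('__background__', 'building'))
print(single['num_classes'])
print(single['bbox_pred_num_output'])
```

With the four placeholder labels shown in the tuple above, the same function gives 5 for num_classes and cls_score, and 20 for bbox_pred.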

(7) Edit py-faster-rcnn/lib/datasets/imdb.py

Change the append_flipped_images(self) function in this file to:

    def append_flipped_images(self):
        num_images = self.num_images
        widths = [PIL.Image.open(self.image_path_at(i)).size[0]
                  for i in xrange(num_images)]
        for i in xrange(num_images):
            boxes = self.roidb[i]['boxes'].copy()
            oldx1 = boxes[:, 0].copy()
            oldx2 = boxes[:, 2].copy()
            boxes[:, 0] = widths[i] - oldx2 - 1
            print boxes[:, 0]  # debug output: flipped x1
            boxes[:, 2] = widths[i] - oldx1 - 1
            print boxes[:, 2]  # debug output: flipped x2
            assert (boxes[:, 2] >= boxes[:, 0]).all()
            entry = {'boxes' : boxes,
                     'gt_overlaps' : self.roidb[i]['gt_overlaps'],
                     'gt_classes' : self.roidb[i]['gt_classes'],
                     'flipped' : True}
            self.roidb.append(entry)
        self._image_index = self._image_index * 2

(8) Other

To avoid mixing things up with earlier models, delete the output folder (or rename it) before training. Also delete the files in py-faster-rcnn/data/cache and py-faster-rcnn/data/VOCdevkit2007/annotations_cache, if there are any.
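Clearing the two cache folders is easy to forget between runs, so it may be worth scripting. This is a minimal sketch, assuming the default directory layout described above; the helper name clear_cache_files is hypothetical.

```python
import os


def clear_cache_files(root):
    """Delete cached files under data/cache and
    data/VOCdevkit2007/annotations_cache below the py-faster-rcnn root."""
    cache_dirs = [
        os.path.join(root, 'data', 'cache'),
        os.path.join(root, 'data', 'VOCdevkit2007', 'annotations_cache'),
    ]
    removed = []
    for cache_dir in cache_dirs:
        if not os.path.isdir(cache_dir):
            continue  # nothing has been cached yet
        for name in os.listdir(cache_dir):
            path = os.path.join(cache_dir, name)
            if os.path.isfile(path):
                os.remove(path)
                removed.append(path)
    return removed
```

Running clear_cache_files('.') from the py-faster-rcnn root returns the list of files it deleted. The folders themselves are left in place.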

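Settings such as the learning rate live in the solver files under py-faster-rcnn/models/pascal_voc/ZF/faster_rcnn_alt_opt. The iteration counts can be changed in train_faster_rcnn_alt_opt.py under py-faster-rcnn/tools:

max_iters = [80000, 40000, 80000, 40000]

These are the iteration counts for the 4 stages (RPN stage 1, Fast R-CNN stage 1, RPN stage 2, Fast R-CNN stage 2). Change them to whatever you need.

If you change these values, it is best to also edit the corresponding solver files (there are 4) in py-faster-rcnn/models/pascal_voc/ZF/faster_rcnn_alt_opt, so that each stepsize is smaller than the new iteration count for its stage.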
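The stepsize rule can be checked mechanically before a long training run. The following sketch assumes the solver files contain a plain "stepsize: N" line and that you pass their contents in the same order as max_iters. Both helper names are hypothetical.

```python
import re


def read_stepsize(solver_text):
    """Return the integer after 'stepsize:' in a solver file, or None."""
    match = re.search(r'^\s*stepsize\s*:\s*(\d+)', solver_text, re.MULTILINE)
    return int(match.group(1)) if match else None


def check_stepsizes(max_iters, solver_texts):
    """Return (stage_index, stepsize, max_iter) for every stage whose
    stepsize is not smaller than its iteration count (stages count from 0)."""
    problems = []
    for stage, (iters, text) in enumerate(zip(max_iters, solver_texts)):
        stepsize = read_stepsize(text)
        if stepsize is not None and stepsize >= iters:
            problems.append((stage, stepsize, iters))
    return problems
```

An empty result means every stage will reach its learning-rate step before training stops.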

3、训练

在py-faster-rcnn根目录下执行:

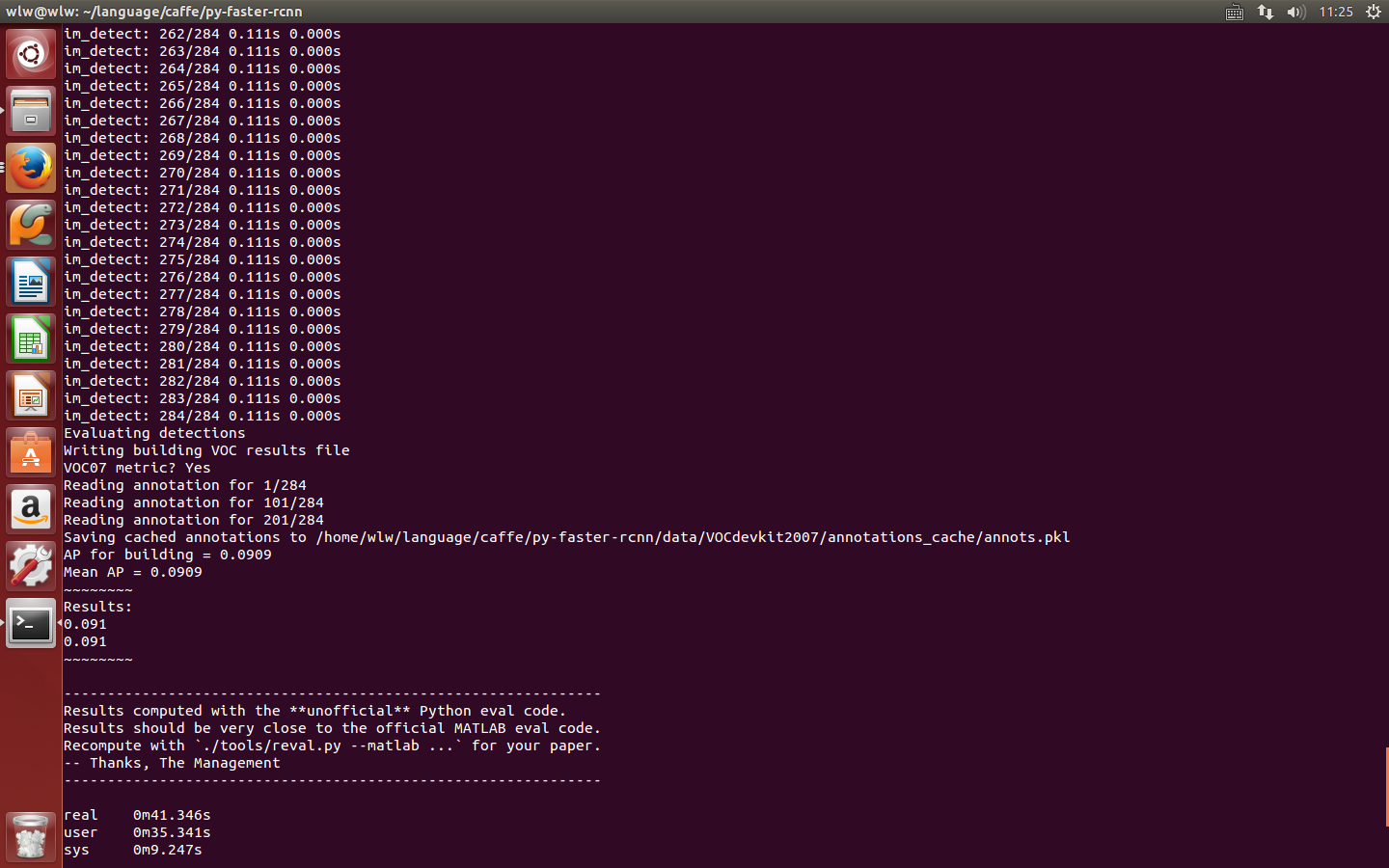

./experiments/scripts/faster_rcnn_alt_opt.sh 0 ZF pascal_voc 训练结束如下图所示:

在py-faster-rcnn/output/faster_rcnn_alt_opt/voc_2007_trainval/下会有ZF_faster_rcnn_final.caffemodel ,这就是我们用自己的数据集训练得到的最终模型。

wlw@wlw:~/language/caffe/py-faster-rcnn/output/faster_rcnn_alt_opt/voc_2007_trainval$ ls
ZF_faster_rcnn_final.caffemodel zf_rpn_stage1_iter_80000.caffemodel zf_rpn_stage2_iter_80000_proposals.pkl
zf_fast_rcnn_stage1_iter_40000.caffemodel zf_rpn_stage1_iter_80000_proposals.pkl
zf_fast_rcnn_stage2_iter_40000.caffemodel zf_rpn_stage2_iter_80000.caffemodel

4. Testing

Copy the ZF_faster_rcnn_final.caffemodel above into py-faster-rcnn/data/faster_rcnn_models, then edit:

py-faster-rcnn/tools/demo.py:

CLASSES = ('__background__',
           'your_label_1', 'your_label_2', 'your_label_3', 'your_label_4')

Change these to the labels of the dataset you trained on.

Then edit the parse_args() function:

    parser.add_argument('--net', dest='demo_net', help='Network to use [vgg16]',
                        choices=NETS.keys(), default='zf')

This selects the ZF model: default='zf'.

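Note:

If you renamed the model, for example from ZF_faster_rcnn_final.caffemodel to CAR_faster_rcnn_final.caffemodel, and put the images to detect under /data/car/, you also need to edit py-faster-rcnn/tools/demo.py. The snippets below use the name ldlidar as their example; substitute your own name: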

NETS = {'vgg16': ('VGG16',
                  'VGG16_faster_rcnn_final.caffemodel'),
        'ldlidar': ('LDLIDAR',
                    'LDLIDAR_faster_rcnn_final.caffemodel')}

# Inside demo(): load the demo image from the new folder
im_file = os.path.join(cfg.DATA_DIR, 'ldlidar', image_name)
im = cv2.imread(im_file)

def parse_args():
    """Parse input arguments."""
    parser = argparse.ArgumentParser(description='Faster R-CNN demo')
    parser.add_argument('--gpu', dest='gpu_id', help='GPU device id to use [0]',
                        default=0, type=int)
    parser.add_argument('--cpu', dest='cpu_mode',
                        help='Use CPU mode (overrides --gpu)',
                        action='store_true')
    parser.add_argument('--net', dest='demo_net', help='Network to use [vgg16]',
                        choices=NETS.keys(), default='ldlidar')
    args = parser.parse_args()
    return args

# Inside the __main__ block: run the demo on every file in the folder
path = '/home/wlw/language/caffe/py-faster-rcnn/data/ldlidar'
for filename in os.listdir(path):
    im_name = filename
    print '~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~'
    print 'Demo for data/ldlidar/{}'.format(im_name)
    demo(net, im_name)
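Note that os.listdir() returns every entry in the folder, so a stray non-image file or a subfolder would also be handed to demo(). A small filter such as the sketch below could guard against that. The helper name list_images and the extension list are assumptions, not part of demo.py.

```python
import os

# Assumed set of image formats; extend it to match your data.
IMAGE_EXTENSIONS = ('.jpg', '.jpeg', '.png', '.bmp')


def list_images(folder):
    """Return the sorted names of image files directly inside folder."""
    return sorted(
        name for name in os.listdir(folder)
        if name.lower().endswith(IMAGE_EXTENSIONS)
        and os.path.isfile(os.path.join(folder, name))
    )
```

The loop above would then iterate over list_images(path) instead of os.listdir(path).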

Run demo.py:

cd ./tools

python demo.py

5. Problems you may run into

(1)

Traceback (most recent call last):
  File "/usr/lib/python2.7/multiprocessing/process.py", line 258, in _bootstrap
    self.run()
  File "/usr/lib/python2.7/multiprocessing/process.py", line 114, in run
    self._target(*self._args, **self._kwargs)
  File "./tools/train_faster_rcnn_alt_opt.py", line 189, in train_fast_rcnn
    roidb, imdb = get_roidb(imdb_name, rpn_file=rpn_file)
  File "./tools/train_faster_rcnn_alt_opt.py", line 67, in get_roidb
    roidb = get_training_roidb(imdb)
  File "/home/wlw/language/caffe/py-faster-rcnn/tools/../lib/fast_rcnn/train.py", line 118, in get_training_roidb
    imdb.append_flipped_images()
  File "/home/wlw/language/caffe/py-faster-rcnn/tools/../lib/datasets/imdb.py", line 114, in append_flipped_images
    assert (boxes[:, 2] >= boxes[:, 0]).all()

This happens because, in the training images, the top-left coordinate (x, y) of an annotation may be 0, or the annotated region may extend beyond the image. Faster R-CNN subtracts 1 from Xmin, Ymin, Xmax and Ymax, and because the boxes are stored as unsigned 16-bit integers, an Xmin of 0 wraps around to 65535 after the subtraction.
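The wraparound can be reproduced in a few lines of plain Python. The sketch below emulates unsigned 16-bit storage with a bit mask instead of using numpy, and the coordinates and image width are made-up values. It shows the value 65535 mentioned above, and how a box that extends beyond the image makes the flipped x1 larger than the flipped x2, which is the condition the assertion rejects.

```python
def to_uint16(value):
    """Emulate storing an integer in an unsigned 16-bit slot (modulo 65536)."""
    return value & 0xFFFF


def flip_x(x1, x2, width):
    """Mirror a box horizontally with the same arithmetic as
    append_flipped_images(), wrapping each result to 16 bits."""
    new_x1 = to_uint16(width - x2 - 1)
    new_x2 = to_uint16(width - x1 - 1)
    return new_x1, new_x2


# An annotation with xmin = 0 becomes 65535 after the "- 1".
wrapped_xmin = to_uint16(0 - 1)

# A box that lies inside a 500-pixel-wide image flips cleanly.
inside = flip_x(49, 99, width=500)

# A box whose x2 (599) lies beyond the image width wraps around,
# so the flipped x1 ends up larger than the flipped x2.
outside = flip_x(49, 599, width=500)

print(wrapped_xmin)  # 65535
print(inside)        # (400, 450)
print(outside)       # (65436, 450)
```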

Solution:

Edit the append_flipped_images() function in lib/datasets/imdb.py. Directly below the line

    boxes[:, 2] = widths[i] - oldx1 - 1

add:

    for b in range(len(boxes)):
        if boxes[b][2] < boxes[b][0]:
            boxes[b][0] = 0

If that still does not solve it, edit the _load_pascal_annotation() function in lib/datasets/pascal_voc.py and remove the subtraction of 1 from Xmin, Ymin, Xmax and Ymax, so that it reads:

        for ix, obj in enumerate(objs):
            bbox = obj.find('bndbox')
            # Coordinates are used as-is: the "- 1" that made pixel indexes 0-based is removed
            x1 = float(bbox.find('xmin').text)
            y1 = float(bbox.find('ymin').text)
            x2 = float(bbox.find('xmax').text)
            y2 = float(bbox.find('ymax').text)

(2)

Traceback (most recent call last):
  File "./tools/test_net.py", line 90, in <module>
    test_net(net, imdb, max_per_image=args.max_per_image, vis=args.vis)
  File "/home/wlw/language/caffe/py-faster-rcnn/tools/../lib/fast_rcnn/test.py", line 295, in test_net
    imdb.evaluate_detections(all_boxes, output_dir)
  File "/home/wlw/language/caffe/py-faster-rcnn/tools/../lib/datasets/pascal_voc.py", line 317, in evaluate_detections
    self._write_voc_results_file(all_boxes)
  File "/home/wlw/language/caffe/py-faster-rcnn/tools/../lib/datasets/pascal_voc.py", line 244, in _write_voc_results_file
    with open(filename, 'wt') as f:
IOError: [Errno 2] No such file or directory: '/home/wlw/language/caffe/py-faster-rcnn/data/VOCdevkit2007/results/VOC2007/Main/comp4_507d2b05-379f-4cf1-a1d4-5bd729d32fb0_det_test_building.txt'

Solution: check that the ./data/VOCdevkit2007 folder was copied completely, and that ./data/VOCdevkit2007/results/VOC2007 contains the three folders Layout, Main and Segmentation.
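If the folders are missing, they can also be created directly rather than re-copying the devkit. A minimal sketch, assuming the directory layout shown in section 1; the helper name ensure_results_dirs is hypothetical.

```python
import os


def ensure_results_dirs(devkit_path, year='2007'):
    """Create results/VOC<year>/{Layout,Main,Segmentation} under the
    devkit folder if they do not exist; return the paths that were created."""
    created = []
    for sub in ('Layout', 'Main', 'Segmentation'):
        path = os.path.join(devkit_path, 'results', 'VOC' + year, sub)
        if not os.path.isdir(path):
            os.makedirs(path)
            created.append(path)
    return created
```

From the py-faster-rcnn root, ensure_results_dirs('data/VOCdevkit2007') would create whichever of the three folders is missing and leave existing ones untouched.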

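References:

"Faster-RCNN+ZF用自己的数据集训练模型(Python版本)" (training a Faster-RCNN + ZF model on your own dataset, Python version)

"解决faster-rcnn中训练时assert(boxes[:,2]>=boxes[:,0]).all()的问题" (fixing the assert (boxes[:,2]>=boxes[:,0]).all() failure when training faster-rcnn)

"Faster RCNN 训练自己的检测模型" (training your own detection model with Faster RCNN)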
