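One of my projects had this requirement. Runs of still images are rendered into .mov clips, and those clips are joined, in their original order, with videos I recorded myself. The original sound of the recorded videos must be kept. Finally, a piece of music is mixed in and each audio source gets its own volume. The code is below. It can be tested in the simulator, but it is best not to enable breakpoints while testing.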

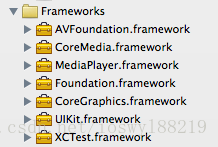

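Add the frameworks the code depends on. AVFoundation, CoreMedia and CoreVideo are used directly by the code below.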

```objc
//
//  ViewController.h
//  img+mov+audio+video
//
//  Created by 王颜龙 on 14-1-14.
//  Copyright (c) 2014年 longyan. All rights reserved.
//

#import <UIKit/UIKit.h>
#import "CompositionCenter.h"

@interface ViewController : UIViewController <CompositionDelegate>

- (IBAction)start:(UIButton *)sender;
- (IBAction)soundMixing:(UIButton *)sender;
- (IBAction)overMix:(id)sender;

@end
```

```objc
//
//  ViewController.m
//

#import "ViewController.h"
#import "CompositionCenter.h"
#import "MovItem.h"

@interface ViewController ()
@property (nonatomic, strong) NSMutableArray *dataArr;
@end

@implementation ViewController

// Appends `count` image items, each showing a random bundled picture 1.jpg ... 10.jpg.
- (void)appendRandomImages:(int)count
{
    for (int i = 0; i < count; i++) {
        MovItem *item = [[MovItem alloc] init];
        item.type = @"img";
        item.image = [UIImage imageNamed:[NSString stringWithFormat:@"%d.jpg", (int)(arc4random_uniform(10) + 1)]];
        [self.dataArr addObject:item];
    }
}

// Appends one movie item pointing at a bundled .MOV file.
- (void)appendMovieNamed:(NSString *)name
{
    NSString *path = [[NSBundle mainBundle] pathForResource:name ofType:@"MOV"];
    MovItem *item = [[MovItem alloc] init];
    item.type = @"mov";
    item.fileUrl = [NSURL fileURLWithPath:path];
    [self.dataArr addObject:item];
}

- (void)viewDidLoad
{
    [super viewDidLoad];
    // Test data: 30 images, video 1, 30 images, video 2, 30 images
    self.dataArr = [[NSMutableArray alloc] initWithCapacity:0];
    [self appendRandomImages:30];
    [self appendMovieNamed:@"IMG_0598"];
    [self appendRandomImages:30];
    [self appendMovieNamed:@"IMG_0634"];
    [self appendRandomImages:30];
}

- (void)didReceiveMemoryWarning
{
    [super didReceiveMemoryWarning];
    // Dispose of any resources that can be recreated.
}

- (IBAction)start:(UIButton *)sender {
    // Set the delegate first; otherwise CompositionDidBegin is sent before anyone listens
    [[CompositionCenter sharedDataCenter] setDeleagte:self];
    [[CompositionCenter sharedDataCenter] startComposition:self.dataArr];
}

- (IBAction)soundMixing:(UIButton *)sender {
    // Do not test this step with breakpoints enabled
    [[CompositionCenter sharedDataCenter] soundMixing];
}

- (IBAction)overMix:(id)sender {
    // Final step: put the mixed audio under the joined video
    [[CompositionCenter sharedDataCenter] overMix];
}

- (void)CompositionDidBegin {
    NSLog(@"begin");
}

- (void)CompositionDidFinish:(NSURL *)url {
    NSLog(@"Finish");
}

- (void)CompositionDidFail {
    NSLog(@"Fail");
}

@end
```

```objc
//
//  CompositionCenter.h
//  img+mov+audio+video
//
//  Created by 王颜龙 on 14-1-14.
//  Copyright (c) 2014年 longyan. All rights reserved.
//

#import <Foundation/Foundation.h>
#import <UIKit/UIKit.h>
#import <AVFoundation/AVFoundation.h>   // AVAssetWriter, AVAssetExportSession, CMTime, ...

@protocol CompositionDelegate <NSObject>
- (void)CompositionDidBegin;
- (void)CompositionDidFinish:(NSURL *)url;
- (void)CompositionDidFail;
@end

@interface CompositionCenter : NSObject
{
    CGRect imageRect;               // frame size used when writing image clips
    dispatch_queue_t _serialQueue;  // keeps the clips in list order
    NSMutableArray *audioMixParams; // one AVMutableAudioMixInputParameters per audio track
}

@property (nonatomic, strong) NSMutableArray *trackArr;       // layer instructions
@property (nonatomic, strong) NSString *videoPath;            // clip currently being written
@property (nonatomic, strong) NSURL *url;
@property (nonatomic, strong) AVAssetWriter *videoWriter;
@property (nonatomic, strong) AVAssetWriterInput *writerInput;
@property (nonatomic, strong) AVAssetWriterInputPixelBufferAdaptor *adaptor;
@property (nonatomic, assign) BOOL firstImgAdded;
// (sic) "deleagte": the misspelled name is kept because the callers use it
@property (nonatomic, weak) id<CompositionDelegate> deleagte;
@property (nonatomic, assign) CMTime allTime;                 // running end of the timeline
@property (nonatomic, strong) NSMutableArray *tmpArr;         // pending image items
@property (nonatomic, strong) NSMutableArray *tmpDetailArr;   // finished clips (MovDetailItem)
@property (nonatomic, strong) NSURL *overUrl;                 // last exported movie
@property (nonatomic, strong) NSURL *mixURL;                  // mixed audio from soundMixing

+ (CompositionCenter *)sharedDataCenter;
- (void)startComposition:(NSMutableArray *)arr;
- (void)soundMixing;
- (void)overMix;

@end
```

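```objc
//
//  CompositionCenter.m
//  img+mov+audio+video
//
//  Created by 王颜龙 on 14-1-14.
//  Copyright (c) 2014年 longyan. All rights reserved.
//

#import "CompositionCenter.h"
#import "MovItem.h"
#import "MovDetailItem.h"
// The original also imported "ExtAudioFileMixer.h", which nothing below uses.

// iPhone5 and FramePerSec are not defined in the code shown; they presumably
// come from the project's prefix header. The fallbacks below are assumptions.
#ifndef iPhone5
#define iPhone5 (CGRectGetHeight([UIScreen mainScreen].bounds) == 568.0)
#endif
#ifndef FramePerSec
#define FramePerSec 1   // assumed value: one image per second
#endif

static CompositionCenter *sharedObj = nil; // step 1: static instance, initially nil

@implementation CompositionCenter

// Step 2: create the instance on first access
+ (CompositionCenter *)sharedDataCenter
{
    @synchronized (self) {
        if (sharedObj == nil) {
            sharedObj = [[[self class] alloc] init];
        }
    }
    return sharedObj;
}

#pragma mark - The methods below ensure that only one instance exists

+ (id)allocWithZone:(NSZone *)zone
{
    @synchronized (self) {
        if (sharedObj == nil) {
            sharedObj = [super allocWithZone:zone];
        }
    }
    return sharedObj;
}

// NSCopying: return the shared instance itself
- (id)copyWithZone:(NSZone *)zone
{
    return sharedObj;
}

- (instancetype)init
{
    self = [super init];
    if (self) {
        // imageRect was never assigned in the code shown, which gives the writer a
        // 0x0 frame. Assumption: use the same size as the render size used later.
        imageRect = CGRectMake(0, 0, 320.0, iPhone5 ? 568.0 : 480.0);
    }
    return self;
}
```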

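```objc
#pragma mark - Build the intermediate .mov clips dynamically

static int numCount = 0; // clip counter, also the file name (1.mov, 2.mov, ...)

// Writes the pending images into one .mov clip; does nothing when there are none.
// Without this check a movie at the start of the list (or two adjacent movies)
// produced a clip with no video track, which later crashed on objectAtIndex:0.
- (void)flushPendingImages {
    if (self.tmpArr.count == 0) {
        return;
    }
    [self initRecord];
    [self startCamera];
    [self.tmpArr removeAllObjects];
}

- (void)startComposition:(NSMutableArray *)arr {
    NSLog(@"Output directory === %@", [self getLibarayPath]);
    if (self.deleagte && [self.deleagte respondsToSelector:@selector(CompositionDidBegin)]) {
        [self.deleagte CompositionDidBegin];
    }
    self.allTime = kCMTimeZero;
    self.tmpArr = [[NSMutableArray alloc] initWithCapacity:0];       // images waiting for a clip
    self.tmpDetailArr = [[NSMutableArray alloc] initWithCapacity:0]; // finished clips, in order
    self.trackArr = [[NSMutableArray alloc] initWithCapacity:0];     // layer instructions
    // Reset the counter before queuing any work; resetting it after dispatching
    // (as before) raced with blocks already running on the queue.
    numCount = 0;

    // A serial queue keeps the clips in the same order as arr
    _serialQueue = dispatch_queue_create("serialQueue", DISPATCH_QUEUE_SERIAL);
    NSUInteger total = arr.count;
    for (NSUInteger idx = 0; idx < total; idx++) {
        MovItem *item = arr[idx];
        dispatch_async(_serialQueue, ^{
            if ([item.type isEqualToString:@"img"]) {
                // Collect the image; the last run is written after the final item
                [self.tmpArr addObject:item];
                if (idx + 1 == total) {
                    NSLog(@"over %lu", (unsigned long)idx);
                    [self flushPendingImages];
                }
            } else {
                // A movie closes the current run of images, then becomes a clip itself
                NSLog(@"mov save %lu", (unsigned long)idx);
                [self flushPendingImages];
                [self saveMOV:item];
            }
        });
    }
    // Queued on the same serial queue, so it runs after every clip is ready
    [self Composition];
}
```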
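The queue above decides dynamically where each clip ends. Consecutive images are gathered into a single clip, and every movie becomes a clip of its own. The grouping rule can be checked in isolation with the plain-C sketch below, which is also valid inside an Objective-C file. `ItemKind`, `Segment` and `segment_items` are hypothetical names that stand in for the `MovItem.type` strings; they are not part of the project.

```c
#include <assert.h>
#include <stddef.h>

/* Item kinds, mirroring the "img" / "mov" strings in MovItem.type. */
typedef enum { ITEM_IMG, ITEM_MOV } ItemKind;

/* One output clip: a run of images or a single movie. */
typedef struct {
    ItemKind kind;
    size_t first;  /* index of the first source item in this clip */
    size_t count;  /* number of source items in this clip */
} Segment;

/* Consecutive images are merged into one clip; each movie is its own clip.
   Empty image runs are never emitted. out must have room for n entries.
   Returns the number of segments written. */
size_t segment_items(const ItemKind *items, size_t n, Segment *out) {
    size_t nseg = 0;
    size_t i = 0;
    while (i < n) {
        if (items[i] == ITEM_MOV) {
            out[nseg++] = (Segment){ ITEM_MOV, i, 1 };
            i++;
        } else {
            size_t start = i;
            while (i < n && items[i] == ITEM_IMG) {
                i++;
            }
            out[nseg++] = (Segment){ ITEM_IMG, start, i - start };
        }
    }
    assert(nseg <= n);
    return nseg;
}
```

For the list built in `viewDidLoad` (30 images, a movie, 30 images, a movie, 30 images), this yields five clips.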

```objc
- (void)Composition {
    dispatch_async(_serialQueue, ^{
        // Assemble all clips into one composition
        AVMutableComposition *mixComposition = [[AVMutableComposition alloc] init];
        AVMutableVideoCompositionInstruction *MainInstruction = [AVMutableVideoCompositionInstruction videoCompositionInstruction];
        CMTime tmpTime = kCMTimeZero; // insertion point of the current clip

        for (MovDetailItem *item in self.tmpDetailArr) {
            NSLog(@"item === %d", item.num);
            NSArray *videoTracks = [item.asset tracksWithMediaType:AVMediaTypeVideo];
            if (videoTracks.count == 0) {
                continue; // skip a clip that has no video instead of crashing
            }
            AVAssetTrack *FirstAssetTrack = videoTracks[0];

            // Create one video track per clip
            AVMutableCompositionTrack *firstTrack = [mixComposition addMutableTrackWithMediaType:AVMediaTypeVideo preferredTrackID:kCMPersistentTrackID_Invalid];
            NSLog(@"tmpTime === %f", CMTimeGetSeconds(tmpTime));
            [firstTrack insertTimeRange:CMTimeRangeMake(kCMTimeZero, item.asset.duration) ofTrack:FirstAssetTrack atTime:tmpTime error:nil];

            // AUDIO TRACK: keep the original sound of recorded movies (if they have one)
            NSArray *audioTracks = [item.asset tracksWithMediaType:AVMediaTypeAudio];
            if ([item.type isEqualToString:@"mov"] && audioTracks.count > 0) {
                AVMutableCompositionTrack *AudioTrack = [mixComposition addMutableTrackWithMediaType:AVMediaTypeAudio preferredTrackID:kCMPersistentTrackID_Invalid];
                [AudioTrack insertTimeRange:CMTimeRangeMake(kCMTimeZero, item.asset.duration) ofTrack:audioTracks[0] atTime:tmpTime error:nil];
            }

            // FIXING ORIENTATION: read the orientation from preferredTransform
            AVMutableVideoCompositionLayerInstruction *FirstlayerInstruction = [AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:firstTrack];
            UIImageOrientation FirstAssetOrientation_ = UIImageOrientationUp;
            BOOL isFirstAssetPortrait_ = NO;
            CGAffineTransform firstTransform = FirstAssetTrack.preferredTransform;
            if (firstTransform.a == 0 && firstTransform.b == 1.0 && firstTransform.c == -1.0 && firstTransform.d == 0) { FirstAssetOrientation_ = UIImageOrientationRight; isFirstAssetPortrait_ = YES; }
            if (firstTransform.a == 0 && firstTransform.b == -1.0 && firstTransform.c == 1.0 && firstTransform.d == 0) { FirstAssetOrientation_ = UIImageOrientationLeft; isFirstAssetPortrait_ = YES; }
            if (firstTransform.a == 1.0 && firstTransform.b == 0 && firstTransform.c == 0 && firstTransform.d == 1.0) { FirstAssetOrientation_ = UIImageOrientationUp; }
            if (firstTransform.a == -1.0 && firstTransform.b == 0 && firstTransform.c == 0 && firstTransform.d == -1.0) { FirstAssetOrientation_ = UIImageOrientationDown; }

            // Scale so the displayed width is 320 points
            CGFloat FirstAssetScaleToFitRatio = 320 / FirstAssetTrack.naturalSize.width;
            if (isFirstAssetPortrait_) {
                FirstAssetScaleToFitRatio = 320 / FirstAssetTrack.naturalSize.height;
                CGAffineTransform FirstAssetScaleFactor = CGAffineTransformMakeScale(FirstAssetScaleToFitRatio, FirstAssetScaleToFitRatio);
                [FirstlayerInstruction setTransform:CGAffineTransformConcat(FirstAssetTrack.preferredTransform, FirstAssetScaleFactor) atTime:tmpTime];
            } else {
                // Landscape: scale, then move 150 points down
                CGAffineTransform FirstAssetScaleFactor = CGAffineTransformMakeScale(FirstAssetScaleToFitRatio, FirstAssetScaleToFitRatio);
                [FirstlayerInstruction setTransform:CGAffineTransformConcat(CGAffineTransformConcat(FirstAssetTrack.preferredTransform, FirstAssetScaleFactor), CGAffineTransformMakeTranslation(0, 150)) atTime:tmpTime];
            }

            // Advance the timeline and hide this clip's layer once it has ended
            tmpTime = [self addAllAVAssetDuration:item];
            [FirstlayerInstruction setOpacity:0.0 atTime:self.allTime];
            [self.trackArr insertObject:FirstlayerInstruction atIndex:0];
        }

        MainInstruction.timeRange = CMTimeRangeMake(kCMTimeZero, self.allTime);
        MainInstruction.layerInstructions = self.trackArr;
        AVMutableVideoComposition *MainCompositionInst = [AVMutableVideoComposition videoComposition];
        MainCompositionInst.instructions = @[MainInstruction];
        MainCompositionInst.frameDuration = CMTimeMake(1, 30);
        if (iPhone5) {
            MainCompositionInst.renderSize = CGSizeMake(320.0, 568.0);
        } else {
            MainCompositionInst.renderSize = CGSizeMake(320.0, 480.0); // match the screen to fix the picture size
        }

        NSString *filePath = [[self getLibarayPath] stringByAppendingPathComponent:@"over.mov"];
        NSLog(@"Output path === %@", filePath);
        // The export fails if the file already exists, so remove any old copy
        [[NSFileManager defaultManager] removeItemAtPath:filePath error:nil];

        // Export
        AVAssetExportSession *exporter = [[AVAssetExportSession alloc] initWithAsset:mixComposition presetName:AVAssetExportPresetHighestQuality];
        exporter.outputURL = [NSURL fileURLWithPath:filePath];
        exporter.outputFileType = AVFileTypeQuickTimeMovie;
        exporter.videoComposition = MainCompositionInst;
        exporter.shouldOptimizeForNetworkUse = YES;
        [exporter exportAsynchronouslyWithCompletionHandler:^{
            dispatch_async(dispatch_get_main_queue(), ^{
                [self exportDidFinish:exporter];
            });
        }];
    });
}
```
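The FIXING ORIENTATION block classifies each clip by comparing the a, b, c, d fields of the track's `preferredTransform`. The two 90-degree rotations mark a portrait recording. For those, the scale factor is taken from the natural height instead of the width, so the width on screen after rotation is 320 points.

Below is a plain-C sketch of that decision that can be tested without AVFoundation. `Transform2x2`, `Orientation`, `orientation_from_transform` and `scale_to_fit_320` are hypothetical stand-ins for `CGAffineTransform`, `UIImageOrientation` and the inline code, not project code.

```c
#include <assert.h>
#include <stdbool.h>

/* Stand-in for the a, b, c, d fields of CGAffineTransform (tx, ty omitted). */
typedef struct { double a, b, c, d; } Transform2x2;

typedef enum { ORIENT_UP, ORIENT_DOWN, ORIENT_LEFT, ORIENT_RIGHT } Orientation;

/* Same comparisons as the Objective-C code: the 90-degree rotations are
   portrait; identity and 180 degrees are landscape. */
Orientation orientation_from_transform(Transform2x2 t, bool *isPortrait) {
    *isPortrait = false;
    if (t.a == 0 && t.b == 1.0 && t.c == -1.0 && t.d == 0) { *isPortrait = true; return ORIENT_RIGHT; }
    if (t.a == 0 && t.b == -1.0 && t.c == 1.0 && t.d == 0) { *isPortrait = true; return ORIENT_LEFT; }
    if (t.a == -1.0 && t.b == 0 && t.c == 0 && t.d == -1.0) return ORIENT_DOWN;
    return ORIENT_UP; /* identity, or any transform not recognised above */
}

/* Scale that makes the on-screen width 320 points. For a portrait track the
   stored height becomes the on-screen width after rotation. */
double scale_to_fit_320(double naturalWidth, double naturalHeight, bool isPortrait) {
    double side = isPortrait ? naturalHeight : naturalWidth;
    assert(side > 0);
    return 320.0 / side;
}
```

An unrecognised transform falls back to "up" and landscape, matching the initial values in the Objective-C code.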

```objc
- (void)exportDidFinish:(AVAssetExportSession *)session
{
    if (session.status == AVAssetExportSessionStatusCompleted) {
        NSLog(@"!!!");
        self.overUrl = session.outputURL;
        if (self.deleagte && [self.deleagte respondsToSelector:@selector(CompositionDidFinish:)]) {
            [self.deleagte CompositionDidFinish:self.overUrl];
        }
    } else if (session.status == AVAssetExportSessionStatusFailed) {
        if (self.deleagte && [self.deleagte respondsToSelector:@selector(CompositionDidFail)]) {
            [self.deleagte CompositionDidFail];
        }
    }
}
```

```objc
#pragma mark - Save a recorded video locally

- (void)saveMOV:(MovItem *)item {
    numCount++;
    // Keep a numbered copy next to the generated clips (<numCount>.mov)
    NSString *fileName = [NSString stringWithFormat:@"%d.mov", numCount];
    NSString *filePath = [[self getLibarayPath] stringByAppendingPathComponent:fileName];
    NSData *data = [NSData dataWithContentsOfURL:item.fileUrl];
    [data writeToFile:filePath atomically:YES];

    // Describe the movie as one clip of the final composition
    MovDetailItem *item0 = [[MovDetailItem alloc] init];
    item0.asset = [AVAsset assetWithURL:item.fileUrl];
    item0.num = numCount;
    item0.type = @"mov";
    [self.tmpDetailArr addObject:item0];
}
```

```objc
#pragma mark - Set up the writer for an image clip

- (void)initRecord
{
    numCount++;
    // No image has been appended to this clip yet
    self.firstImgAdded = NO;
    // Clip path: <Documents>/tmpMovMix/<numCount>.mov
    NSString *fileName = [NSString stringWithFormat:@"%d.mov", numCount];
    self.videoPath = [[self getLibarayPath] stringByAppendingPathComponent:fileName];
    // AVAssetWriter does not overwrite existing files, so remove leftovers from a previous run
    [[NSFileManager defaultManager] removeItemAtPath:self.videoPath error:nil];

    // Frame size of the images
    CGSize frameSize = imageRect.size;
    NSError *error = nil;
    // Create the writer; on failure log the error and stop
    self.videoWriter = [[AVAssetWriter alloc] initWithURL:[NSURL fileURLWithPath:self.videoPath] fileType:AVFileTypeQuickTimeMovie error:&error];
    if (self.videoWriter == nil) {
        NSLog(@"error creating AssetWriter: %@", error);
        return;
    }

    // H.264 output settings
    NSDictionary *videoSettings = @{ AVVideoCodecKey  : AVVideoCodecH264,
                                     AVVideoWidthKey  : @((int)frameSize.width),
                                     AVVideoHeightKey : @((int)frameSize.height) };
    // Writer input
    self.writerInput = [AVAssetWriterInput assetWriterInputWithMediaType:AVMediaTypeVideo outputSettings:videoSettings];
    // The frames come from memory, not from a live camera
    self.writerInput.expectsMediaDataInRealTime = NO;

    // Attributes of the pixel buffers that will be appended
    NSDictionary *attributes = @{ (__bridge NSString *)kCVPixelBufferPixelFormatTypeKey : @(kCVPixelFormatType_32ARGB),
                                  (__bridge NSString *)kCVPixelBufferWidthKey  : @((unsigned int)frameSize.width),
                                  (__bridge NSString *)kCVPixelBufferHeightKey : @((unsigned int)frameSize.height) };
    // Create an adaptor from the input and the attributes
    self.adaptor = [AVAssetWriterInputPixelBufferAdaptor assetWriterInputPixelBufferAdaptorWithAssetWriterInput:self.writerInput
                                                                                   sourcePixelBufferAttributes:attributes];
    // Inputs must be added before writing starts
    [self.videoWriter addInput:self.writerInput];

    // Start writing
    [self.videoWriter startWriting];
    [self.videoWriter startSessionAtSourceTime:kCMTimeZero];
}
```

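```objc
#pragma mark - Write the pending images

- (void)startCamera
{
    if (self.videoWriter == nil) {
        return; // initRecord failed
    }
    NSUInteger count = 0;
    for (MovItem *image in self.tmpArr) {
        [self writeImage:image.image.CGImage withIndex:count];
        count++;
    }
    [self.writerInput markAsFinished];
    // finishWriting is deprecated; wait for the asynchronous version so the clip
    // is complete before the next block on the serial queue uses it
    dispatch_semaphore_t done = dispatch_semaphore_create(0);
    [self.videoWriter finishWritingWithCompletionHandler:^{
        dispatch_semaphore_signal(done);
    }];
    dispatch_semaphore_wait(done, DISPATCH_TIME_FOREVER);

    // Record the finished clip
    MovDetailItem *detail = [[MovDetailItem alloc] init];
    detail.asset = [AVAsset assetWithURL:[NSURL fileURLWithPath:self.videoPath]];
    detail.num = numCount;
    detail.type = @"img";
    [self.tmpDetailArr addObject:detail];
}
```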

```objc
- (void)writeImage:(CGImageRef)img withIndex:(NSInteger)curCount
{
    if (self.videoWriter == nil) {
        NSLog(@"error: the asset writer was not created");
        return;
    }
    // The original dropped the frame when the input was busy; wait instead,
    // but only while the writer is still writing
    while (!self.adaptor.assetWriterInput.readyForMoreMediaData &&
           self.videoWriter.status == AVAssetWriterStatusWriting) {
        [NSThread sleepForTimeInterval:0.01];
    }
    if (self.videoWriter.status != AVAssetWriterStatusWriting) {
        NSLog(@"The error is %@", self.videoWriter.error);
        return;
    }
    CVPixelBufferRef buffer = [self pixelBufferFromCGImage:img];
    if (buffer == NULL) {
        NSLog(@"failed to create pixel buffer");
        return;
    }
    // Image n is shown at n / FramePerSec seconds, the first one at zero.
    // (The original used (n + 1) / FramePerSec for n >= 1, which kept the
    // first image on screen for two frames.)
    CMTime presentTime = CMTimeMake(curCount, FramePerSec);
    if (![self.adaptor appendPixelBuffer:buffer withPresentationTime:presentTime]) {
        // Original note: fails on 3GS, but works on iPhone 4
        NSLog(@"failed to append buffer: %@", self.videoWriter.error);
    } else {
        NSLog(@"write ok");
    }
    CVBufferRelease(buffer);
    self.firstImgAdded = YES;
}
```
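The presentation times passed to the pixel-buffer adaptor are `CMTime` values, meaning value divided by timescale seconds. The schedule in which image n appears at n / fps seconds, with the first image at zero, can be sketched in plain C. `RationalTime`, `frame_time` and `seconds` are hypothetical helpers that mimic `CMTimeMake` and `CMTimeGetSeconds` without calling CoreMedia.

```c
#include <assert.h>
#include <stdint.h>

/* Minimal stand-in for CMTime: value / timescale seconds. */
typedef struct { int64_t value; int32_t timescale; } RationalTime;

/* Presentation time of image `index` when each image lasts one frame at
   `fps` frames per second, i.e. what CMTimeMake(index, fps) expresses. */
RationalTime frame_time(int64_t index, int32_t fps) {
    assert(fps > 0 && index >= 0);
    return (RationalTime){ index, fps };
}

/* Converts to seconds, as CMTimeGetSeconds does for a numeric CMTime. */
double seconds(RationalTime t) {
    return (double)t.value / t.timescale;
}
```

Because the times are strictly increasing and evenly spaced, every image is on screen for exactly one frame duration, 1 / fps seconds.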

```objc
- (CVPixelBufferRef)pixelBufferFromCGImage:(CGImageRef)image
{
    // The buffer must be usable by CGImage and CGBitmapContext
    NSDictionary *options = @{ (__bridge NSString *)kCVPixelBufferCGImageCompatibilityKey : @YES,
                               (__bridge NSString *)kCVPixelBufferCGBitmapContextCompatibilityKey : @YES };
    size_t width = (size_t)imageRect.size.width;
    size_t height = (size_t)imageRect.size.height;
    CVPixelBufferRef pxbuffer = NULL;
    CVReturn status = CVPixelBufferCreate(kCFAllocatorDefault, width, height, kCVPixelFormatType_32ARGB,
                                          (__bridge CFDictionaryRef)options, &pxbuffer);
    if (status != kCVReturnSuccess || pxbuffer == NULL) {
        return NULL;
    }
    CVPixelBufferLockBaseAddress(pxbuffer, 0); // lock before touching the memory
    void *pxdata = CVPixelBufferGetBaseAddress(pxbuffer);

    CGColorSpaceRef rgbColorSpace = CGColorSpaceCreateDeviceRGB();
    // Use the buffer's real row stride: Core Video may pad rows beyond 4 * width
    CGContextRef context = CGBitmapContextCreate(pxdata, width, height, 8,
                                                 CVPixelBufferGetBytesPerRow(pxbuffer), rgbColorSpace,
                                                 (CGBitmapInfo)kCGImageAlphaNoneSkipFirst);
    // Draw the image stretched to the frame size
    CGContextDrawImage(context, CGRectMake(0, 0, width, height), image);
    CGColorSpaceRelease(rgbColorSpace);
    CGContextRelease(context);
    CVPixelBufferUnlockBaseAddress(pxbuffer, 0);
    return pxbuffer; // caller releases it with CVBufferRelease
}
```
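`CGBitmapContextCreate` needs the row stride of the pixel buffer. Core Video may pad each row, so the stride can be larger than 4 * width. The safe choice is to ask the buffer with `CVPixelBufferGetBytesPerRow`. The plain-C sketch below shows how padding changes the stride. The 64-byte alignment and the name `padded_bytes_per_row` are illustrative assumptions, not documented Core Video values.

```c
#include <assert.h>
#include <stddef.h>

/* Row stride of a 32-bit ARGB buffer whose rows are rounded up to a multiple
   of `alignment` bytes (assumed to be a power of two). Real code should query
   CVPixelBufferGetBytesPerRow instead of recomputing this. */
size_t padded_bytes_per_row(size_t width, size_t alignment) {
    assert(alignment > 0 && (alignment & (alignment - 1)) == 0);
    size_t tight = width * 4; /* 4 bytes per ARGB pixel */
    return (tight + alignment - 1) & ~(alignment - 1);
}
```

For a 320-pixel-wide frame both values agree (1280 bytes). For a 321-pixel-wide frame, 4 * width gives 1284 bytes while the padded stride is 1344, and passing the wrong stride would shear the drawn image.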

```objc
#pragma mark - Mixing: the movie's own sound plus the selected music

- (void)soundMixing {
    if (self.overUrl == nil) {
        NSLog(@"soundMixing: run start first");
        return;
    }
    if (self.deleagte && [self.deleagte respondsToSelector:@selector(CompositionDidBegin)]) {
        [self.deleagte CompositionDidBegin];
    }
    AVMutableComposition *composition = [AVMutableComposition composition];
    audioMixParams = [[NSMutableArray alloc] init];

    // Both audio tracks cover the length of the joined video
    AVURLAsset *videoAsset = [AVURLAsset URLAssetWithURL:self.overUrl options:nil];
    CMTime startTime = kCMTimeZero;
    CMTime trackDuration = videoAsset.duration;

    // Original sound of the recorded videos at full volume
    // (the original also passed an offset argument, 14 s here, that was never used)
    [self setUpAndAddAudioAtPath:self.overUrl toComposition:composition start:startTime dura:trackDuration volume:1.0f];
    // Background music bundled with the app, turned down to 0.05
    NSString *path = [[NSBundle mainBundle] pathForResource:@"最炫民族风" ofType:@"mp3"];
    [self setUpAndAddAudioAtPath:[NSURL fileURLWithPath:path] toComposition:composition start:startTime dura:trackDuration volume:0.05f];

    AVMutableAudioMix *audioMix = [AVMutableAudioMix audioMix];
    audioMix.inputParameters = [NSArray arrayWithArray:audioMixParams];

    // If you need to query what formats you can export to, here's a way to find out
    NSLog(@"compatible presets for composition: %@", [AVAssetExportSession exportPresetsCompatibleWithAsset:composition]);

    AVAssetExportSession *exporter = [[AVAssetExportSession alloc] initWithAsset:composition presetName:AVAssetExportPresetAppleM4A];
    exporter.audioMix = audioMix;
    exporter.outputFileType = AVFileTypeAppleM4A; // "com.apple.m4a-audio"
    // The output is M4A audio, so give it an .m4a name rather than .mov
    NSString *exportFile = [[self getLibarayPath] stringByAppendingPathComponent:@"overMix.m4a"];
    // Set up the export: remove an old file first
    if ([[NSFileManager defaultManager] fileExistsAtPath:exportFile]) {
        [[NSFileManager defaultManager] removeItemAtPath:exportFile error:nil];
    }
    NSLog(@"on main thread (1)? %d", [NSThread isMainThread]);
    NSLog(@"Output path === %@", exportFile);
    NSURL *exportURL = [NSURL fileURLWithPath:exportFile];
    exporter.outputURL = exportURL;
    self.mixURL = exportURL;

    // Do the export
    [exporter exportAsynchronouslyWithCompletionHandler:^{
        switch (exporter.status) {
            case AVAssetExportSessionStatusFailed:
                NSLog(@"AVAssetExportSessionStatusFailed: %@", exporter.error);
                break;
            case AVAssetExportSessionStatusCompleted:
                NSLog(@"on main thread (2)? %d", [NSThread isMainThread]);
                NSLog(@"AVAssetExportSessionStatusCompleted");
                break;
            case AVAssetExportSessionStatusUnknown:   NSLog(@"AVAssetExportSessionStatusUnknown"); break;
            case AVAssetExportSessionStatusExporting: NSLog(@"AVAssetExportSessionStatusExporting"); break;
            case AVAssetExportSessionStatusCancelled: NSLog(@"AVAssetExportSessionStatusCancelled"); break;
            case AVAssetExportSessionStatusWaiting:   NSLog(@"AVAssetExportSessionStatusWaiting"); break;
            default: NSLog(@"didn't get export status"); break;
        }
    }];
}

// Adds the first audio track of assetURL to the composition with the given volume.
// The original picked 1.0 or 0.05 through a static counter that was never reset,
// so a second mix played both tracks at 0.05; the volume is now a parameter.
- (void)setUpAndAddAudioAtPath:(NSURL *)assetURL toComposition:(AVMutableComposition *)composition start:(CMTime)start dura:(CMTime)dura volume:(float)volume {
    AVURLAsset *songAsset = [AVURLAsset URLAssetWithURL:assetURL options:nil];
    NSArray *audioTracks = [songAsset tracksWithMediaType:AVMediaTypeAudio];
    if (audioTracks.count == 0) {
        NSLog(@"no audio track in %@", assetURL);
        return;
    }
    AVAssetTrack *sourceAudioTrack = audioTracks[0];
    AVMutableCompositionTrack *track = [composition addMutableTrackWithMediaType:AVMediaTypeAudio preferredTrackID:kCMPersistentTrackID_Invalid];

    // Never request more than the source contains (e.g. a song shorter than the video)
    CMTime available = CMTimeSubtract(songAsset.duration, start);
    CMTimeRange tRange = CMTimeRangeMake(start, CMTimeMinimum(dura, available));

    // Set the volume
    AVMutableAudioMixInputParameters *trackMix = [AVMutableAudioMixInputParameters audioMixInputParametersWithTrack:track];
    NSLog(@"volume %.2f", volume);
    [trackMix setVolume:volume atTime:kCMTimeZero];
    [audioMixParams addObject:trackMix];

    // Insert the audio at the start of the new, empty track
    NSError *error = nil;
    if (![track insertTimeRange:tRange ofTrack:sourceAudioTrack atTime:kCMTimeZero error:&error]) {
        NSLog(@"insert audio failed: %@", error);
    }
}
```
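Each track in the mix gets its own volume. Here the recorded sound plays at 1.0 and the music at 0.05. Conceptually, a volume is a linear gain applied to a track's samples before the tracks are summed. The plain-C sketch below shows only that arithmetic on 16-bit PCM samples, with clipping. `mix_with_gain` is a hypothetical helper and says nothing about how AVFoundation implements `AVAudioMix` internally.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Mixes two 16-bit PCM streams with independent linear gains and clamps the
   sum to the int16 range. */
void mix_with_gain(const int16_t *voice, float voiceGain,
                   const int16_t *music, float musicGain,
                   int16_t *out, size_t n) {
    assert(n == 0 || (voice && music && out));
    for (size_t i = 0; i < n; i++) {
        float s = voice[i] * voiceGain + music[i] * musicGain;
        if (s > INT16_MAX) s = INT16_MAX;  /* clip instead of wrapping around */
        if (s < INT16_MIN) s = INT16_MIN;
        out[i] = (int16_t)s;
    }
}
```

At a gain of 0.05, a loud music sample of 20000 contributes only about 1000 to the mix, so the recorded voice stays in front.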

```objc
#pragma mark - Output directory

// Returns <Documents>/tmpMovMix, creating it if needed
// (despite the method name, the folder lives under Documents, not Library)
- (NSString *)getLibarayPath
{
    NSArray *paths = NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES);
    NSString *movDirectory = [paths[0] stringByAppendingPathComponent:@"tmpMovMix"];
    [[NSFileManager defaultManager] createDirectoryAtPath:movDirectory withIntermediateDirectories:YES attributes:nil error:nil];
    return movDirectory;
}
```

```objc
#pragma mark - Final step: combine the joined video with the mixed audio

- (void)overMix {
    [self.trackArr removeAllObjects];
    if (self.overUrl == nil || self.mixURL == nil) {
        NSLog(@"overMix: run start and soundMixing first");
        return;
    }
    dispatch_async(dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0), ^{
        // Combine
        AVMutableComposition *mixComposition = [[AVMutableComposition alloc] init];
        AVMutableVideoCompositionInstruction *MainInstruction = [AVMutableVideoCompositionInstruction videoCompositionInstruction];
        AVAsset *item = [AVAsset assetWithURL:self.overUrl];  // joined video
        AVAsset *sound = [AVAsset assetWithURL:self.mixURL];  // mixed audio
        NSArray *videoTracks = [item tracksWithMediaType:AVMediaTypeVideo];
        NSArray *audioTracks = [sound tracksWithMediaType:AVMediaTypeAudio];
        if (videoTracks.count == 0 || audioTracks.count == 0) {
            NSLog(@"overMix: missing video or audio track");
            return;
        }

        // Video from the joined movie
        AVMutableCompositionTrack *firstTrack = [mixComposition addMutableTrackWithMediaType:AVMediaTypeVideo preferredTrackID:kCMPersistentTrackID_Invalid];
        [firstTrack insertTimeRange:CMTimeRangeMake(kCMTimeZero, item.duration) ofTrack:videoTracks[0] atTime:kCMTimeZero error:nil];
        // Mixed audio, limited to the shorter of the two durations
        AVMutableCompositionTrack *AudioTrack = [mixComposition addMutableTrackWithMediaType:AVMediaTypeAudio preferredTrackID:kCMPersistentTrackID_Invalid];
        [AudioTrack insertTimeRange:CMTimeRangeMake(kCMTimeZero, CMTimeMinimum(item.duration, sound.duration)) ofTrack:audioTracks[0] atTime:kCMTimeZero error:nil];

        // FIXING ORIENTATION: the instruction is built, but because the
        // videoComposition line below is commented out, the export keeps the track as is
        AVMutableVideoCompositionLayerInstruction *FirstlayerInstruction = [AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:firstTrack];
        [FirstlayerInstruction setOpacity:0.0 atTime:item.duration];
        [self.trackArr insertObject:FirstlayerInstruction atIndex:0];
        MainInstruction.timeRange = CMTimeRangeMake(kCMTimeZero, item.duration);
        MainInstruction.layerInstructions = self.trackArr;
        AVMutableVideoComposition *MainCompositionInst = [AVMutableVideoComposition videoComposition];
        MainCompositionInst.instructions = @[MainInstruction];
        MainCompositionInst.frameDuration = CMTimeMake(1, 30);
        if (iPhone5) {
            MainCompositionInst.renderSize = CGSizeMake(320.0, 568.0);
        } else {
            MainCompositionInst.renderSize = CGSizeMake(320.0, 480.0); // match the screen to fix the picture size
        }

        NSString *filePath = [[self getLibarayPath] stringByAppendingPathComponent:@"over+mix-over2.mov"];
        NSLog(@"Output path === %@", filePath);
        // The export fails if the file already exists
        [[NSFileManager defaultManager] removeItemAtPath:filePath error:nil];

        AVAssetExportSession *exporter = [[AVAssetExportSession alloc] initWithAsset:mixComposition presetName:AVAssetExportPresetHighestQuality];
        exporter.outputURL = [NSURL fileURLWithPath:filePath];
        exporter.outputFileType = AVFileTypeQuickTimeMovie;
        // exporter.videoComposition = MainCompositionInst;
        exporter.shouldOptimizeForNetworkUse = YES;
        [exporter exportAsynchronouslyWithCompletionHandler:^{
            dispatch_async(dispatch_get_main_queue(), ^{
                [self exportDidFinish:exporter];
            });
        }];
    });
}
```

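```objc
#pragma mark - Accumulate the durations of the assets

// Returns the end time of `item` on the timeline (running total of all clip
// durations so far); the first clip (num == 1) restarts the total.
- (CMTime)addAllAVAssetDuration:(MovDetailItem *)item {
    if (item.num == 1) {
        self.allTime = item.asset.duration;
    } else {
        self.allTime = CMTimeAdd(self.allTime, item.asset.duration);
    }
    return self.allTime;
}

@end
```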
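`addAllAVAssetDuration:` keeps a running total. Each clip is therefore inserted where the previous one ends, and the final total becomes the time range of the video-composition instruction. Below is the same bookkeeping in plain C. For brevity, every duration is assumed to be already expressed in one shared timescale (`CMTimeAdd` handles mixed timescales itself). `clip_start_times` is a hypothetical name.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* durations[i] is the length of clip i in ticks of a shared timescale
   (for example 600 ticks per second). Writes the insertion time of each clip,
   the sum of all earlier durations, into starts[i] and returns the total. */
int64_t clip_start_times(const int64_t *durations, size_t n, int64_t *starts) {
    int64_t total = 0;
    for (size_t i = 0; i < n; i++) {
        assert(durations[i] >= 0);
        starts[i] = total;
        total += durations[i];
    }
    return total;
}
```

Take three clips of 1 s, 2.5 s and 0.5 s at a timescale of 600. They start at ticks 0, 600 and 2100, and the whole timeline is 2400 ticks (4 s) long.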
