Text Recognition from Photos

Introduction

This sample uses the @ohos.multimedia.camera (camera management) and textRecognition (text recognition) APIs to recognize and extract the text in a photo.

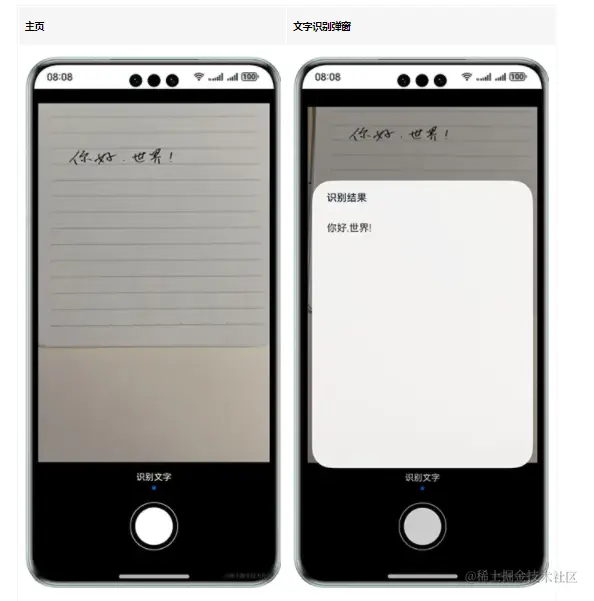

效果预览

How to Use

1. Tap the round text-recognition icon at the bottom of the screen. A dialog opens and shows the text-recognition result for the current photo.

2. Tap any blank area outside the dialog to close it and return to the home page.
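The dialog in step 1 displays the recognition result. Per the CoreVisionKit reference, `textRecognition.recognizeText()` resolves to a `TextRecognitionResult` whose `value` holds the recognized text and whose `blocks` hold `TextBlock` entries, each with its own `value` and `lines`. The sketch below is plain TypeScript so it runs outside a device. It mirrors only those fields in local interfaces, which is an assumption and not the kit's own type declarations. It then shows one way to turn the structured result into dialog text, falling back to `value` when no blocks are returned. The helper name `formatResultForDialog` is hypothetical and is not taken from the sample.

```typescript
// Local stand-ins for the CoreVisionKit result types (assumption: only the
// fields used here are mirrored; the real types also carry words, corner points, etc.).
interface TextLine {
  value: string;
}

interface TextBlock {
  value: string;
  lines: TextLine[];
}

interface TextRecognitionResult {
  value: string;
  blocks: TextBlock[];
}

// Hypothetical helper: one trimmed line per recognized text line, a blank line
// between blocks, empty lines dropped. Falls back to the flat `value` when no
// block yields any text.
function formatResultForDialog(result: TextRecognitionResult): string {
  const blocks = result.blocks
    .map((block) =>
      block.lines
        .map((line) => line.value.trim())
        .filter((text) => text.length > 0)
        .join('\n'))
    .filter((text) => text.length > 0);
  return blocks.length > 0 ? blocks.join('\n\n') : result.value.trim();
}

// Usage with a hand-built result (not real recognition output):
const sample: TextRecognitionResult = {
  value: 'Hello World\nHarmonyOS',
  blocks: [
    { value: 'Hello World', lines: [{ value: 'Hello World ' }] },
    { value: 'HarmonyOS', lines: [{ value: 'HarmonyOS' }, { value: '  ' }] },
  ],
};
console.log(formatResultForDialog(sample));
```

Keeping the block/line structure, rather than always showing `value`, lets the dialog separate paragraphs that the recognizer grouped into different blocks.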

Implementation

- The module that implements AI text recognition in this sample is mainly encapsulated in CameraModel. See the source: [CameraModel.ets].

```typescript
/*
 * Copyright (c) 2023 Huawei Device Co., Ltd.
 * Licensed under the Apache License, Version 2.0 (the "License");
 * you may not use this file except in compliance with the License.
 * You may obtain a copy of the License at
 *
 *     http://www.apache.org/licenses/LICENSE-2.0
 *
 * Unless required by applicable law or agreed to in writing, software
 * distributed under the License is distributed on an "AS IS" BASIS,
 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
 * See the License for the specific language governing permissions and
 * limitations under the License.
 */

import { BusinessError } from '@kit.BasicServicesKit';
import { camera } from '@kit.CameraKit';
import { common } from '@kit.AbilityKit';
import { image } from '@kit.ImageKit';
import { textRecognition } from '@kit.CoreVisionKit';
import Logger from './Logger';
import CommonConstants from '../constants/CommonConstants';

const TAG: string = '[CameraModel]';

export default class Camera {
  // Camera resources; each field stays undefined until it is created.
  private cameraMgr: camera.CameraManager | undefined = undefined;
  private cameraDevice: camera.CameraDevice | undefined = undefined;
  private capability: camera.CameraOutputCapability | undefined = undefined;
  private cameraInput: camera.CameraInput | undefined = undefined;
  public previewOutput: camera.PreviewOutput | undefined = undefined;
  private receiver: image.ImageReceiver | undefined = undefined;
  // ... the rest of CameraModel.ets is cut off in this excerpt.
}
```
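The class keeps a `camera.CameraOutputCapability` in `capability`. In CameraKit, that object's `previewProfiles` array lists `camera.Profile` entries, each with a `format` and a `size` (`width`, `height`). The excerpt stops before showing how the sample chooses a preview profile. What follows is therefore only an illustrative sketch, not the sample's code: a plain TypeScript helper (hypothetical name `pickPreviewProfile`) that picks the largest profile matching a target aspect ratio. It uses local interfaces that mirror just those fields, which is an assumption made so the code runs off-device.

```typescript
// Minimal local mirrors of camera.Size and camera.Profile (assumption: only the
// fields used here; `format` is kept as a plain number).
interface Size {
  width: number;
  height: number;
}

interface Profile {
  format: number;
  size: Size;
}

// Hypothetical helper: among profiles whose aspect ratio (width / height) is
// within `tolerance` of `targetRatio`, return the one with the most pixels.
// Returns undefined when no profile matches.
function pickPreviewProfile(
  profiles: Profile[],
  targetRatio: number,
  tolerance = 0.01,
): Profile | undefined {
  let best: Profile | undefined = undefined;
  for (const profile of profiles) {
    const { width, height } = profile.size;
    if (height === 0) {
      continue; // skip malformed entries to avoid dividing by zero
    }
    if (Math.abs(width / height - targetRatio) > tolerance) {
      continue;
    }
    if (best === undefined || width * height > best.size.width * best.size.height) {
      best = profile;
    }
  }
  return best;
}

// Usage with made-up profiles; format 0 is a placeholder value.
const profiles: Profile[] = [
  { format: 0, size: { width: 640, height: 480 } },
  { format: 0, size: { width: 1920, height: 1080 } },
  { format: 0, size: { width: 1280, height: 720 } },
];
console.log(pickPreviewProfile(profiles, 16 / 9)?.size); // { width: 1920, height: 1080 }
```

Matching the aspect ratio of the on-screen preview area avoids a stretched preview, and preferring the largest match keeps the captured frame as detailed as possible for recognition. A real app may instead cap the resolution to save memory.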
