I. Pseudo-distributed setup

First, configure the network

1. vi /etc/sysconfig/network-scripts/ifcfg-ens33

Set BOOTPROTO=static and ONBOOT=yes, then add:

IPADDR=192.168.x.200

NETMASK=255.255.255.0

GATEWAY=192.168.x.2

DNS1=114.114.114.114

Save and exit, then run:

2. service network restart
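A static address only works if GATEWAY lies in the same subnet as IPADDR under NETMASK (with 255.255.255.0, the first three octets must match). The sketch below checks this. `ip_to_int` and `same_subnet` are hypothetical helper names, and 192.168.17.x is only an example subnet.

```shell
#!/bin/sh
# Hypothetical helpers (not system tools): check that IPADDR and GATEWAY
# from ifcfg-ens33 lie in the same subnet under NETMASK.

# ip_to_int A.B.C.D: print the dotted-quad address as one integer.
ip_to_int() {
  local old_ifs="$IFS"
  IFS=.
  set -- $1          # split the address into its four octets
  IFS="$old_ifs"
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# same_subnet IPADDR NETMASK GATEWAY: exit status 0 if both addresses share a network.
same_subnet() {
  local ip mask gw
  ip=$(ip_to_int "$1")
  mask=$(ip_to_int "$2")
  gw=$(ip_to_int "$3")
  [ $(( ip & mask )) -eq $(( gw & mask )) ]
}

# Usage:
# same_subnet 192.168.17.200 255.255.255.0 192.168.17.2 && echo "gateway OK"
```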

Extract the JDK and Hadoop

3. cd /opt

4. tar -zxf /opt/jdk-8u221-linux-x64.tar.gz -C /usr/local

tar -zxf hadoop-3.2.4.tar.gz -C /usr/local/ (on host master; this extracts Hadoop into /usr/local)

5. vi /etc/profile

export JAVA_HOME=/usr/local/jdk1.8.0_221

export PATH=$PATH:$JAVA_HOME/bin

export HADOOP_HOME=/usr/local/hadoop-3.2.4

export PATH=$PATH:$HADOOP_HOME/bin

6. source /etc/profile

Verify the installation by running java and javac:

java -version

javac -version
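The export lines in step 5, and the similar ones in later sections, are meant to be added only once. Re-running a step, or following both sections IV and V (each of which adds ZOOKEEPER_HOME), leaves duplicates in /etc/profile. Below is a sketch of an idempotent append using a hypothetical `append_once` helper.

```shell
#!/bin/sh
# append_once FILE LINE: append LINE to FILE unless FILE already contains
# exactly that line (hypothetical helper, not a system command).
append_once() {
  local file="$1" line="$2"
  touch "$file"
  # -x: match the whole line, -F: treat LINE as a fixed string, not a regex
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage (single quotes keep $PATH literal, as in the hand-written version):
# append_once /etc/profile 'export JAVA_HOME=/usr/local/jdk1.8.0_221'
# append_once /etc/profile 'export PATH=$PATH:$JAVA_HOME/bin'
# . /etc/profile
```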

Set the hostname

hostnamectl set-hostname master

bash # start a new shell so the new hostname takes effect

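Generate an SSH key and enable passwordless login

7. ssh-keygen # generate a key pair (press Enter to accept the defaults)

8. cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys # append the public key to the authorized keys

9. ssh localhost # check that you can log in to this machine without a password

10. logout # end the SSH session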
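If ssh localhost still asks for a password, there are two common causes. Either the public key never reached authorized_keys, or sshd (with its default StrictModes yes) ignores the key because ~/.ssh or authorized_keys is writable by the group or by others. The sketch below checks both. It assumes the default id_rsa key name and GNU coreutils `stat`, as on CentOS 7. The helper name is hypothetical.

```shell
#!/bin/sh
# ssh_key_authorized SSH_DIR: succeed if SSH_DIR/id_rsa.pub is listed in
# SSH_DIR/authorized_keys and neither SSH_DIR nor authorized_keys is group- or
# world-writable (hypothetical helper; needs GNU stat for "stat -c %a").
ssh_key_authorized() {
  local dir="$1" f mode
  [ -r "$dir/id_rsa.pub" ] && [ -r "$dir/authorized_keys" ] || return 1
  grep -qxF -- "$(cat "$dir/id_rsa.pub")" "$dir/authorized_keys" || return 1
  for f in "$dir" "$dir/authorized_keys"; do
    mode=$(stat -c %a "$f")
    # read the permission bits as octal; 022 = group-write or other-write
    [ $(( 0$mode & 022 )) -eq 0 ] || return 1
  done
}

# Usage:
# ssh_key_authorized ~/.ssh && echo "key is authorized"
```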

Disable the firewall

11. systemctl stop firewalld

12. systemctl disable firewalld.service

Next, configure Hadoop:

13. cd /usr/local/hadoop-3.2.4/etc/hadoop/

14. vi hadoop-env.sh

Add the following at the top of the file:

HDFS_NAMENODE_USER=root

HDFS_DATANODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

YARN_RESOURCEMANAGER_USER=root

YARN_NODEMANAGER_USER=root

export JAVA_HOME=/usr/local/jdk1.8.0_221

15. vi core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/tmp/hadoop</value>

</property>

</configuration>
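Every *-site.xml file in this guide has the same shape: a <configuration> element holding <property> entries, each with a <name> and a <value>. Writing these files from a script instead of typing the XML by hand avoids unbalanced tags. The sketch below uses hypothetical `write_site_xml` and `xml_escape` helpers, and it also escapes &, < and > in values. It overwrites the target file, so keep a copy of the original first.

```shell
#!/bin/sh
# xml_escape: escape &, < and > on stdin so the text is safe inside an XML element.
xml_escape() {
  sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

# write_site_xml FILE NAME VALUE [NAME VALUE]...: write a Hadoop-style *-site.xml
# with one <property> per NAME/VALUE pair (hypothetical helper; overwrites FILE).
write_site_xml() {
  local file="$1"
  shift
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    while [ "$#" -ge 2 ]; do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
        "$1" "$(printf '%s' "$2" | xml_escape)"
      shift 2
    done
    echo '</configuration>'
  } > "$file"
}

# Usage for the pseudo-distributed core-site.xml above:
# write_site_xml "$HADOOP_HOME/etc/hadoop/core-site.xml" \
#   fs.defaultFS hdfs://localhost:9000 \
#   hadoop.tmp.dir /tmp/hadoop
```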

16. vi hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.http-address</name>

<value>192.168.17.200:15887</value>

</property>

</configuration>
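The browser address at the end of this section comes from dfs.namenode.http-address. Hadoop can print the effective value of a key with `hdfs getconf -confKey dfs.namenode.http-address`. If Hadoop is not on the PATH yet, a plain text scan of the file works for files laid out like the ones above, where a <name> line is followed by its <value>. This is not a real XML parser, and `get_prop` is a hypothetical name.

```shell
#!/bin/sh
# get_prop FILE NAME: print the first <value> that follows <name>NAME</name> in a
# Hadoop-style *-site.xml (simple text scan, not a full XML parser).
get_prop() {
  awk -v want="$2" '
    index($0, "<name>" want "</name>") { found = 1 }
    found && match($0, /<value>[^<]*<\/value>/) {
      # strip the 7-character "<value>" and the 8-character "</value>"
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }' "$1"
}

# Usage:
# get_prop "$HADOOP_HOME/etc/hadoop/hdfs-site.xml" dfs.namenode.http-address
```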

Format HDFS (this initializes the NameNode)

17. hdfs namenode -format

If startup fails, delete the old data:

rm -rf /tmp/*

then run hdfs namenode -format again
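Running hdfs namenode -format again gives the NameNode a new clusterID. A DataNode that already stored data keeps the old ID and then refuses to start with an "Incompatible clusterIDs" error, which is why the old data has to be deleted before reformatting. The sketch below compares the two IDs. It assumes the default storage layout under hadoop.tmp.dir (dfs/name and dfs/data, i.e. /tmp/hadoop here), and the helper names are hypothetical.

```shell
#!/bin/sh
# cluster_id VERSION_FILE: print the clusterID recorded in a NameNode or
# DataNode VERSION file.
cluster_id() {
  sed -n 's/^clusterID=//p' "$1"
}

# cluster_ids_match TMP_DIR: compare the NameNode and DataNode clusterIDs
# stored under hadoop.tmp.dir (default layout dfs/name and dfs/data).
cluster_ids_match() {
  local nn dn
  nn=$(cluster_id "$1/dfs/name/current/VERSION") || return 1
  dn=$(cluster_id "$1/dfs/data/current/VERSION") || return 1
  [ -n "$nn" ] && [ "$nn" = "$dn" ]
}

# Usage with hadoop.tmp.dir=/tmp/hadoop from core-site.xml:
# cluster_ids_match /tmp/hadoop || echo "clusterID mismatch: clear the old data and reformat"
```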

Configure YARN

Configure mapred-site.xml

18. cd $HADOOP_HOME/etc/hadoop

19. vi mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>yarn.app.mapreduce.am.env</name>

<value>HADOOP_MAPRED_HOME=/usr/local/hadoop-3.2.4</value>

</property>

<property>

<name>mapreduce.map.env</name>

<value>HADOOP_MAPRED_HOME=/usr/local/hadoop-3.2.4</value>

</property>

<property>

<name>mapreduce.reduce.env</name>

<value>HADOOP_MAPRED_HOME=/usr/local/hadoop-3.2.4</value>

</property>

</configuration>

Configure yarn-site.xml

20. vi yarn-site.xml

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

Start the cluster

21. cd $HADOOP_HOME

Start HDFS and YARN (i.e., start Hadoop)

22. sbin/start-dfs.sh && sbin/start-yarn.sh

23. jps (lists the running Java processes)

Open http://192.168.x.200:15887 in a browser (the dfs.namenode.http-address set in hdfs-site.xml)
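In this pseudo-distributed setup, jps should list NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager, plus Jps itself. The sketch below reads jps output and reports any expected daemon that is missing. `missing_daemons` is a hypothetical helper.

```shell
#!/bin/sh
# missing_daemons NAME...: read `jps` output ("PID MainClass" per line) on stdin and
# print each expected NAME that is not running; exit status 1 if any is missing.
missing_daemons() {
  local running d rc=0
  running=$(awk '{print $2}')
  for d in "$@"; do
    if ! printf '%s\n' "$running" | grep -qxF -- "$d"; then
      echo "$d"
      rc=1
    fi
  done
  return "$rc"
}

# Usage:
# jps | missing_daemons NameNode DataNode SecondaryNameNode ResourceManager NodeManager
```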

II. Fully distributed setup

On worker00: same as steps 1-2 above

On worker01: same as steps 1-2 above, but set IPADDR=192.168.xx.201

Set the hostname on each machine

On worker00:

hostnamectl set-hostname worker00

bash

On worker01:

hostnamectl set-hostname worker01

bash

Set up mutual passwordless SSH

First generate a key on worker00

ssh-keygen

Copy it to worker00 itself

ssh-copy-id -i ~/.ssh/id_rsa.pub 192.168.xx.200

Copy it to worker01

ssh-copy-id -i ~/.ssh/id_rsa.pub 192.168.xx.201

Then generate a key on worker01

ssh-keygen

Copy it to worker01 itself

ssh-copy-id -i ~/.ssh/id_rsa.pub 192.168.xx.201

Copy it to worker00

ssh-copy-id -i ~/.ssh/id_rsa.pub 192.168.xx.200

On worker00:

vi /etc/hosts

Append at the end of the file:

192.168.xx.200 worker00

192.168.xx.201 worker01

Copy the file to worker01

scp /etc/hosts worker01:/etc/hosts
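With more than two workers, typing each /etc/hosts line by hand becomes error-prone. The sketch below generates the lines from a subnet prefix and a starting host number. 192.168.17 is only an example prefix (it matches the address used in hdfs-site.xml above), so substitute your own subnet. `hosts_lines` is a hypothetical helper.

```shell
#!/bin/sh
# hosts_lines PREFIX FIRST NAME...: print /etc/hosts lines that give the names
# consecutive addresses PREFIX.FIRST, PREFIX.(FIRST+1), ... (hypothetical helper).
hosts_lines() {
  local prefix="$1" octet="$2" name
  shift 2
  for name in "$@"; do
    printf '%s.%d %s\n' "$prefix" "$octet" "$name"
    octet=$((octet + 1))
  done
}

# Usage on worker00 (run it once; it appends every time it is called):
# hosts_lines 192.168.17 200 worker00 worker01 >> /etc/hosts
# scp /etc/hosts worker01:/etc/hosts
```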

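Extract Hadoop and the JDK

Same as steps 3-4 above

Configure environment variables

Same as steps 5-6 above

Configure Hadoop (on worker00)

Same as steps 13-14 above

vi core-site.xml

Same as step 15 above, except:

change <value>hdfs://localhost:9000</value> to <value>hdfs://worker00:9000</value>

vi hdfs-site.xml

Same as step 16 above, except:

change the dfs.replication value from <value>1</value> to <value>2</value>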
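The two edits above (localhost to worker00 in core-site.xml, and replication from 1 to 2 in hdfs-site.xml) can also be scripted with sed. This sketch assumes the files still contain the exact values from steps 15-16, and the helper name is hypothetical. It leaves every other property untouched, including dfs.namenode.http-address.

```shell
#!/bin/sh
# to_cluster_config CONF_DIR MASTER REPLICAS: apply the two edits above to the
# pseudo-distributed core-site.xml and hdfs-site.xml (hypothetical helper).
to_cluster_config() {
  local conf="$1" master="$2" replicas="$3" tmp
  tmp=$(mktemp)
  # core-site.xml: point fs.defaultFS at the master instead of localhost
  sed "s|hdfs://localhost:9000|hdfs://$master:9000|" "$conf/core-site.xml" > "$tmp" &&
    cat "$tmp" > "$conf/core-site.xml"
  # hdfs-site.xml: rewrite only the <value> inside the dfs.replication property
  sed "/<name>dfs.replication<\/name>/,/<\/property>/s|<value>[^<]*</value>|<value>$replicas</value>|" \
    "$conf/hdfs-site.xml" > "$tmp" && cat "$tmp" > "$conf/hdfs-site.xml"
  rm -f "$tmp"
}

# Usage on worker00:
# to_cluster_config "$HADOOP_HOME/etc/hadoop" worker00 2
```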

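Configure mapred-site.xml

Same as steps 18-19 above

Configure yarn-site.xml

Same as step 20 above. The ResourceManager runs on worker00, and the NodeManager on worker01 has to be able to find it. You will therefore likely also need to add yarn.resourcemanager.hostname with the value worker00 (its default is 0.0.0.0).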

vi workers

Delete the existing content (by default a single localhost line) and add:

worker00

worker01

On worker00, copy the profile to worker01:

scp /etc/profile worker01:/etc/profile

Then run source /etc/profile on worker01.

On worker00:

scp -r /usr/local/jdk1.8.0_221 worker01:/usr/local/

scp -r /usr/local/hadoop-3.2.4 worker01:/usr/local/
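The scp commands above have to be repeated for every additional worker. The dry-run sketch below reads Hadoop's workers file and prints one scp command per path and host, skipping the machine it runs on. It copies into each path's parent directory (/usr/local/ rather than /usr/local/jdk1.8.0_221), so an existing target directory does not end up with a nested copy inside it. `sync_commands` is a hypothetical helper; review its output before piping it to sh.

```shell
#!/bin/sh
# sync_commands WORKERS_FILE SELF PATH...: print (do not run) scp commands that copy
# each PATH to every host listed in WORKERS_FILE except SELF (hypothetical helper).
sync_commands() {
  local workers="$1" self="$2" host path
  shift 2
  while read -r host || [ -n "$host" ]; do
    if [ -z "$host" ] || [ "$host" = "$self" ]; then
      continue
    fi
    for path in "$@"; do
      path=${path%/}   # drop a trailing slash so dirname yields the parent
      printf 'scp -r %s %s:%s/\n' "$path" "$host" "$(dirname "$path")"
    done
  done < "$workers"
}

# Usage on worker00:
# sync_commands "$HADOOP_HOME/etc/hadoop/workers" worker00 \
#   /etc/profile /usr/local/jdk1.8.0_221 /usr/local/hadoop-3.2.4
```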

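Format the cluster (on worker00)

hdfs namenode -format

Start the cluster (on worker00)

Same as steps 21-22 above

Disable the firewall (on both nodes)

Same as steps 11-12 above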

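III. Hive (requires a working pseudo- or fully distributed HDFS + YARN setup)

Download Hive

yum -y install wget

cd /opt

Fetch the Hive package:

wget http://mirrors.aliyun.com/apache/hive/hive-3.1.2/apache-hive-3.1.2-bin.tar.gz

(If the mirror no longer carries 3.1.2, the same file is available under https://archive.apache.org/dist/hive/hive-3.1.2/)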

Start the cluster

Same as steps 21-22 above

Extract Hive

tar -zxf /opt/apache-hive-3.1.2-bin.tar.gz -C /usr/local/

Configure environment variables

vi /etc/profile

Append at the end:

export HIVE_HOME=/usr/local/apache-hive-3.1.2-bin

export PATH=$PATH:$HIVE_HOME/bin

Save and exit, then run: source /etc/profile

Replace Hive's outdated Guava jar with the newer one from Hadoop

cp $HADOOP_HOME/share/hadoop/common/lib/guava-27.0-jre.jar $HIVE_HOME/lib/

rm -f $HIVE_HOME/lib/guava-19.0.jar
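Hive 3.1.2 ships guava-19.0.jar and Hadoop 3.2.4 ships guava-27.0-jre.jar. With both on the classpath, Hive typically fails at startup with a NoSuchMethodError from com.google.common.base.Preconditions. The two commands above hard-code those version numbers. The version-agnostic sketch below (a hypothetical `swap_guava` helper) copies whatever Guava jar Hadoop has and removes every other Guava version from Hive's lib directory.

```shell
#!/bin/sh
# swap_guava HADOOP_LIB HIVE_LIB: replace the Guava jar(s) in HIVE_LIB with the one
# Hadoop ships, whatever the version numbers are (hypothetical helper).
swap_guava() {
  local hadoop_lib="$1" hive_lib="$2" src jar
  set -- "$hadoop_lib"/guava-*.jar
  src="$1"
  if [ ! -e "$src" ]; then
    echo "no guava-*.jar found in $hadoop_lib" >&2
    return 1
  fi
  for jar in "$hive_lib"/guava-*.jar; do
    # remove every other Guava version so only Hadoop's copy remains
    if [ -e "$jar" ] && [ "$(basename "$jar")" != "$(basename "$src")" ]; then
      rm -f "$jar"
    fi
  done
  cp "$src" "$hive_lib"/
}

# Usage:
# swap_guava "$HADOOP_HOME/share/hadoop/common/lib" "$HIVE_HOME/lib"
```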

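Initialize and start Hive

cd ~

rm -rf metastore_db derby.log # clear any Derby metastore left over from an earlier attempt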

schematool -initSchema -dbType derby

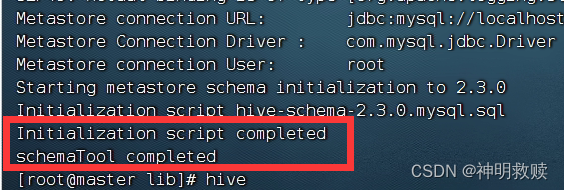

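Output like the above (ending with "schemaTool completed") means the initialization succeeded.

Run hive to enter the Hive CLI.

Type exit; to leave Hive.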

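Install MySQL (together with the packages it depends on)

rpm -qa|grep mariadb # list the preinstalled MariaDB packages

rpm -e mariadb-libs-5.5.68-1.el7.x86_64 --nodeps # recommended; use the package name printed by the previous command

Extract the bundle and install the RPMs in this order: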

tar -xf mysql-5.7.40-1.el7.x86_64.rpm-bundle.tar

rpm -ivh mysql-community-common-5.7.40-1.el7.x86_64.rpm

rpm -ivh mysql-community-libs-5.7.40-1.el7.x86_64.rpm

rpm -ivh mysql-community-client-5.7.40-1.el7.x86_64.rpm

yum install -y net-tools

yum install -y perl

rpm -ivh mysql-community-server-5.7.40-1.el7.x86_64.rpm

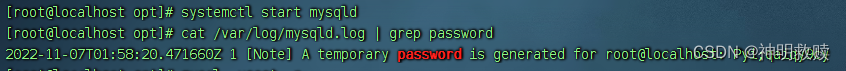

Start MySQL

systemctl start mysqld

Look up the temporary root password

cat /var/log/mysqld.log | grep password
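On first start, MySQL 5.7 writes a line of the form "A temporary password is generated for root@localhost: ..." to /var/log/mysqld.log. The sketch below prints only the password, taking the last one if MySQL was initialized more than once. `temp_password` is a hypothetical helper.

```shell
#!/bin/sh
# temp_password [LOG]: print the most recent temporary root password that MySQL 5.7
# wrote to its log (default /var/log/mysqld.log); hypothetical helper.
temp_password() {
  local log="${1:-/var/log/mysqld.log}"
  # keep only what follows "...generated for <user>@<host>: "
  sed -n 's/.*temporary password is generated for [^:]*: *//p' "$log" | tail -n 1
}

# Usage:
# temp_password
```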

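Log in to MySQL

mysql -u root -p

Enter the temporary password when prompted.

Change the password

# first lower the password validation policy

set global validate_password_policy=LOW;

# allow 6-character passwords

set global validate_password_length=6;

# a simple password now works, as long as it is at least 6 characters long

ALTER USER 'root'@'localhost' IDENTIFIED BY '123456';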

Create the database that Hive will use

create database hivedb CHARACTER SET utf8;

Configure hive-site.xml

cd $HIVE_HOME/conf

vi hive-site.xml

<configuration>

<!-- in XML, each & inside a value must be written as &amp; -->

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://localhost:3306/hivedb?createDatabaseIfNotExist=true&amp;characterEncoding=UTF-8&amp;useSSL=false&amp;serverTimezone=GMT</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<!-- change to your own MySQL user name -->

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<!-- change to your own MySQL password -->

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

</property>

<!-- skip the Hive metastore schema version check; keeping it on would require upgrading the schema stored in MySQL -->

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

</configuration>

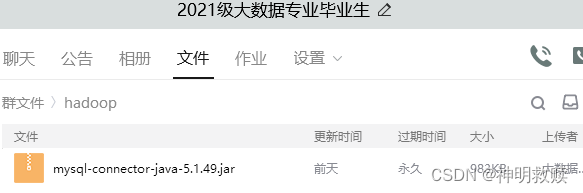
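In XML a literal & must be written as &amp;, so every & between the JDBC URL's parameters needs that form. A raw & typically makes Hive fail when it parses hive-site.xml. The sed/grep sketch below finds any leftover bare ampersands. `bare_ampersands` is a hypothetical name.

```shell
#!/bin/sh
# bare_ampersands FILE: print (with line numbers) each line of FILE that still has
# an "&" outside an XML entity; exit status 0 if at least one is found.
bare_ampersands() {
  # delete the well-formed entities first, then look for any "&" that is left
  sed -E 's/&(amp|lt|gt|quot|apos|#[0-9]+|#x[0-9a-fA-F]+);//g' "$1" | grep -n '&'
}

# Usage:
# bare_ampersands "$HIVE_HOME/conf/hive-site.xml" && echo "replace these & with &amp;"
```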

Copy the MySQL JDBC driver jar (MySQL Connector/J) into the lib directory of the Hive installation ($HIVE_HOME/lib)

Initialize the Hive metastore schema in MySQL

schematool -initSchema -dbType mysql

IV. Configure HBase

Extract HBase

tar -zxf /opt/hbase-2.4.16-bin.tar.gz -C /usr/local/

Configure environment variables

vi /etc/profile

export HBASE_HOME=/usr/local/hbase-2.4.16

export PATH=$PATH:$HBASE_HOME/bin

export ZOOKEEPER_HOME=/usr/local/apache-zookeeper-3.5.7-bin

export PATH=$PATH:$ZOOKEEPER_HOME/bin

Run source /etc/profile

Configure HBase

cd $HBASE_HOME

Configure hbase-env.sh

vi $HBASE_HOME/conf/hbase-env.sh

Add at the very top:

export JAVA_HOME=/usr/local/jdk1.8.0_221

export HBASE_CLASSPATH=/usr/local/hbase-2.4.16/conf

export HBASE_MANAGES_ZK=true # let HBase run its own ZooKeeper (HQuorumPeer in jps)
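HBase only reads the variable spelled HBASE_MANAGES_ZK, and a misspelled name in hbase-env.sh is silently ignored. The sketch below prints the value assigned to a variable in an env script, so a typo shows up as empty output. It only scans plain `NAME=value` and `export NAME=value` lines and does not run the script. The helper name is hypothetical.

```shell
#!/bin/sh
# env_value FILE NAME: print the value assigned to NAME by the last plain
# "NAME=value" or "export NAME=value" line in FILE (hypothetical helper; commented-out
# lines are skipped and nothing is evaluated).
env_value() {
  sed -nE "s/^[[:space:]]*(export[[:space:]]+)?$2=//p" "$1" | tail -n 1
}

# Usage (empty output means the variable is not set):
# env_value "$HBASE_HOME/conf/hbase-env.sh" HBASE_MANAGES_ZK
```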

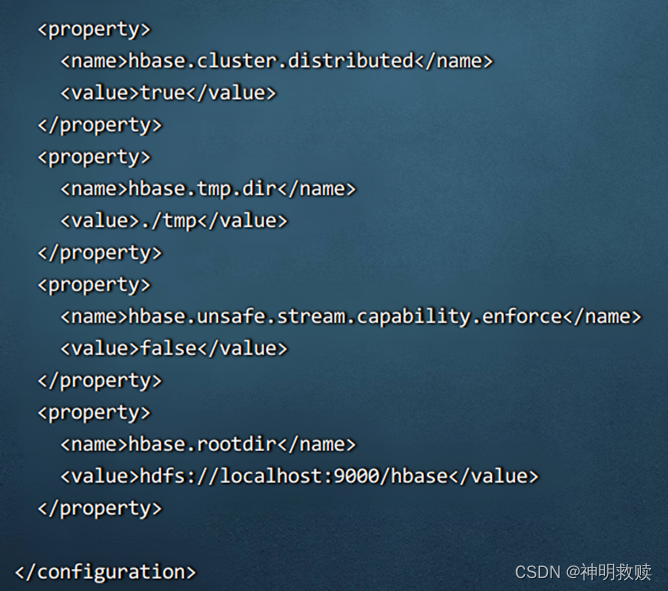

Configure hbase-site.xml

vi $HBASE_HOME/conf/hbase-site.xml

Change the existing hbase.cluster.distributed property from false to true:

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

Add the following at the end of the file, inside the <configuration> element (not after </configuration>):

<property>

<name>hbase.rootdir</name>

<value>hdfs://localhost:9000/hbase</value>

</property>


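Start HBase

Start HDFS and YARN first, then HBase:

cd $HADOOP_HOME

sbin/start-dfs.sh && sbin/start-yarn.sh

start-hbase.sh

hbase shell # opens the HBase shell; type exit to leave

Run jps; besides the Hadoop daemons you should see:

HQuorumPeer, HMaster, HRegionServer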

V. ZooKeeper

Extract ZooKeeper

tar -zxf /opt/apache-zookeeper-3.5.7-bin.tar.gz -C /usr/local/

Configure environment variables (skip this if you already added them in section IV)

vi /etc/profile

export ZOOKEEPER_HOME=/usr/local/apache-zookeeper-3.5.7-bin

export PATH=$PATH:$ZOOKEEPER_HOME/bin

Save and exit, then run source /etc/profile

Configure ZooKeeper

cd $ZOOKEEPER_HOME/conf/

cp zoo_sample.cfg zoo.cfg

vi zoo.cfg

Append the following at the end:

server.1=192.168.184.250:3188:3288

server.2=192.168.184.251:3188:3288

server.3=192.168.184.252:3188:3288
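In the server.N=host:port1:port2 lines above, N is the server's id. Followers use the first port to connect to the leader, and the second port is used for leader election. Each server must also have a file named myid in its dataDir (/tmp/zookeeper in zoo_sample.cfg) that contains only its own N; without it, a replicated ZooKeeper will not start. The sketch below generates the lines and writes myid. Both helper names are hypothetical.

```shell
#!/bin/sh
# zk_server_lines PEER_PORT ELECTION_PORT HOST...: print zoo.cfg "server.N=" lines,
# numbering the hosts 1, 2, 3, ... in the order given (hypothetical helper).
zk_server_lines() {
  local peer="$1" election="$2" i=1 host
  shift 2
  for host in "$@"; do
    printf 'server.%d=%s:%s:%s\n' "$i" "$host" "$peer" "$election"
    i=$((i + 1))
  done
}

# write_myid DATA_DIR ID: create DATA_DIR/myid holding this server's own N
# (hypothetical helper).
write_myid() {
  mkdir -p "$1"
  echo "$2" > "$1/myid"
}

# Usage on the first server (use 2 and 3 on the other two):
# zk_server_lines 3188 3288 192.168.184.250 192.168.184.251 192.168.184.252 >> zoo.cfg
# write_myid /tmp/zookeeper 1
```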

VI. Spark setup

Start HDFS and YARN

cd $HADOOP_HOME

sbin/start-dfs.sh && sbin/start-yarn.sh

Extract Spark

tar -zxf /opt/spark-3.3.2-bin-hadoop3.tgz -C /usr/local/

Configure environment variables

vi /etc/profile

export SPARK_HOME=/usr/local/spark-3.3.2-bin-hadoop3

export PATH=$PATH:$SPARK_HOME/bin

Save and exit, then run source /etc/profile

Compute pi (a quick smoke test)

run-example SparkPi 10

cd $SPARK_HOME

spark-submit \
--class org.apache.spark.examples.SparkPi \
$SPARK_HOME/examples/jars/spark-examples_2.12-3.3.2.jar 10
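SparkPi estimates π by Monte Carlo sampling. It draws random points in the square [-1, 1] x [-1, 1], counts how many fall inside the unit circle, and multiplies that fraction by 4, since the area ratio is π/4. The argument 10 is the number of slices (partitions), with 100,000 points per slice. The sketch below is a single-machine awk version of the same calculation, not Spark's code. `mc_pi` is a hypothetical name.

```shell
#!/bin/sh
# mc_pi SAMPLES [SEED]: estimate pi by sampling points in [-1,1] x [-1,1] and
# counting those inside the unit circle (hypothetical helper, same idea as SparkPi).
mc_pi() {
  awk -v n="$1" -v seed="${2:-1}" 'BEGIN {
    srand(seed)
    inside = 0
    for (i = 0; i < n; i++) {
      x = rand() * 2 - 1
      y = rand() * 2 - 1
      if (x * x + y * y <= 1) inside++
    }
    # fraction inside approximates pi/4
    printf "%.5f\n", 4 * inside / n
  }'
}

# Usage (same number of points as "SparkPi 10"):
# mc_pi 1000000
```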

Edit the configuration file

cd $SPARK_HOME/conf

cp spark-env.sh.template spark-env.sh

vi spark-env.sh

export JAVA_HOME=/usr/local/jdk1.8.0_221

export HADOOP_HOME=/usr/local/hadoop-3.2.4

export HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop

Start Spark

cd $SPARK_HOME

sbin/start-all.sh

Run jps; the output should include Master and Worker.

IT Technical Guide: Deploying and Configuring Pseudo-Distributed and Fully Distributed Hadoop, Hive, HBase, and Spark
