2.7.1 Configure the Kubernetes yum repository

[root@k8s-master01 ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

2.7.2 Configure the Docker yum repository

[root@k8s-master01 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@k8s-master01 ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Loaded plugins: fastestmirror

adding repo from: https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

grabbing file https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo to /etc/yum.repos.d/docker-ce.repo

repo saved to /etc/yum.repos.d/docker-ce.repo

[root@k8s-master01 ~]# yum makecache fast

[root@k8s-master01 ~]# yum install ipvsadm ipset sysstat conntrack libseccomp -y

[root@k8s-master01 ~]# vim /etc/modules-load.d/ipvs.conf

[root@k8s-master01 ~]# cat /etc/modules-load.d/ipvs.conf

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

[root@k8s-master01 ~]# systemctl enable --now systemd-modules-load.service
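After the service has run, it can help to confirm that the listed modules were actually loaded. The sketch below compares a modules-load.d file against /proc/modules. The helper name `missing_modules` is hypothetical, and modules compiled directly into the kernel never appear in /proc/modules, so a reported name is a hint to investigate rather than proof of a failure.

```shell
# Sketch: print every module named in a modules-load.d file that is not
# listed as loaded. missing_modules is a hypothetical helper name.
missing_modules() {
  local conf="$1" loaded="${2:-/proc/modules}"
  local mod
  while read -r mod || [ -n "$mod" ]; do
    # Skip blank lines and comments.
    case "$mod" in ''|'#'*) continue ;; esac
    # /proc/modules has one module per line, with the name in column 1.
    if ! awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$loaded"; then
      echo "$mod"
    fi
  done < "$conf"
}

# Usage on a real host:
#   missing_modules /etc/modules-load.d/ipvs.conf
```

An empty result means every listed module shows up as loaded.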

[root@k8s-master01 ~]# cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

[root@k8s-master01 ~]# sysctl --system
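Long sysctl files tend to collect repeated keys, and when a key is set twice the later line is the one that takes effect. A minimal sketch for spotting repeats before running `sysctl --system` follows. The helper name `duplicate_sysctl_keys` is hypothetical.

```shell
# Sketch: print each sysctl key that is assigned more than once in a file.
# duplicate_sysctl_keys is a hypothetical helper name.
duplicate_sysctl_keys() {
  awk -F= '
    /^[ \t]*$/    { next }   # blank line
    /^[ \t]*[#;]/ { next }   # comment line
    {
      key = $1
      gsub(/[ \t]/, "", key)             # "a.b = 1" and "a.b=1" share a key
      if (++seen[key] == 2) print key    # report once, on the second sighting
    }
  ' "$1"
}

# Usage on a real host:
#   duplicate_sysctl_keys /etc/sysctl.d/k8s.conf
```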

[root@k8s-master01 ~]# swapoff -a

[root@k8s-master01 ~]# vim /etc/fstab

[root@k8s-master01 ~]# cat /etc/fstab

#/dev/mapper/centos-swap swap swap defaults 0 0
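The manual edit in vim can also be scripted, which is convenient when the same change has to be repeated on every node. The sketch below comments out every active swap entry and keeps a `.bak` copy of the file. The helper name `disable_swap_entries` is hypothetical, and it assumes the word `swap` appears as a whitespace-separated field, as in the entry shown above.

```shell
# Sketch: comment out active swap entries in an fstab-style file.
# disable_swap_entries is a hypothetical helper name; a backup is kept as <file>.bak.
disable_swap_entries() {
  # Only touch lines that are not already comments and that contain
  # "swap" as a whitespace-delimited field.
  sed -E -i.bak '/^[[:space:]]*#/! { /[[:space:]]swap[[:space:]]/ s/^/#/; }' "$1"
}

# Usage on a real host (as root):
#   disable_swap_entries /etc/fstab
```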

[root@k8s-master01 ~]# ssh-keygen -t rsa

[root@k8s-master01 ~]# for i in k8s-master01 k8s-master02 k8s-node01;do ssh-copy-id -i .ssh/id_rsa.pub $i;done

[root@k8s-master01 ~]# ulimit -SHn 65535

=================================================================

(Install on all nodes:)

[root@k8s-master01 ~]# wget https://download.docker.com/linux/centos/7/x86_64/edge/Packages/containerd.io-1.2.13-3.2.el7.x86_64.rpm

[root@k8s-master01 ~]# yum -y install containerd.io-1.2.13-3.2.el7.x86_64.rpm

[root@k8s-master01 ~]# yum -y install kubeadm kubelet kubectl --disableexcludes=kubernetes

Installed:

kubeadm.x86_64 0:1.19.1-0 kubectl.x86_64 0:1.19.1-0 kubelet.x86_64 0:1.19.1-0

Dependency Installed:

cri-tools.x86_64 0:1.13.0-0 kubernetes-cni.x86_64 0:0.8.7-0

socat.x86_64 0:1.7.3.2-2.el7

[root@k8s-master01 ~]# systemctl enable --now kubelet

[root@k8s-master01 ~]# yum -y install docker-ce

Installed:

docker-ce.x86_64 3:19.03.12-3.el7

[root@k8s-master01 ~]# systemctl start docker && systemctl enable docker

[root@k8s-master01 ~]# vim /etc/docker/daemon.json

[root@k8s-master01 ~]# cat /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://655dds7u.mirror.aliyuncs.com"]

}

[root@k8s-master01 ~]# systemctl restart docker

[root@k8s-master01 ~]# cat >/etc/sysconfig/kubelet<<EOF

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.1"

EOF

====================================================================

(Install on all master nodes:)

[root@k8s-master01 ~]# yum install keepalived haproxy -y

Installed:

haproxy.x86_64 0:1.5.18-9.el7 keepalived.x86_64 0:1.3.5-16.el7

(Use the same configuration on all master nodes:)

[root@k8s-master01 ~]# cd /etc/haproxy/

[root@k8s-master01 haproxy]# cp haproxy.cfg haproxy.cfg.bak

[root@k8s-master01 haproxy]# vim haproxy.cfg

[root@k8s-master01 haproxy]# cat haproxy.cfg

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

listen stats

bind *:8006

mode http

stats enable

stats hide-version

stats uri /stats

stats refresh 30s

stats realm Haproxy\ Statistics

stats auth admin:admin

frontend k8s-master

bind 0.0.0.0:16443

bind 127.0.0.1:16443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server master01 192.168.1.3:6443 check

server master02 192.168.1.4:6443 check
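When more control-plane nodes are added later, the `server` lines of the backend are the only part of this file that changes. The sketch below prints them from a list of name=address pairs so the same list can be reused on every master. The helper name `render_backend_servers` and the `API_PORT` variable are hypothetical.

```shell
# Sketch: print haproxy "server" lines for the k8s-master backend.
# render_backend_servers and API_PORT are hypothetical names.
render_backend_servers() {
  local port="${API_PORT:-6443}" pair
  for pair in "$@"; do
    # Each argument has the form name=ip.
    printf 'server %s %s:%s check\n' "${pair%%=*}" "${pair#*=}" "$port"
  done
}

render_backend_servers master01=192.168.1.3 master02=192.168.1.4
```

With the two masters used in this walkthrough, the output matches the last two lines of the configuration above.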

4.3.1 Edit the keepalived configuration on master01

[root@k8s-master01 ~]# cd /etc/keepalived/

[root@k8s-master01 keepalived]# cp keepalived.conf keepalived.conf.bak

[root@k8s-master01 keepalived]# vim keepalived.conf

[root@k8s-master01 keepalived]# cat keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 2

weight -5

fall 3

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface eth0

mcast_src_ip 192.168.1.3

virtual_router_id 51

priority 150

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.1.100/24

}

track_script {

chk_apiserver

}

}

4.3.2 Edit the keepalived configuration on master02

[root@k8s-master02 ~]# cd /etc/keepalived/

[root@k8s-master02 keepalived]# cp keepalived.conf keepalived.conf.bak

[root@k8s-master02 keepalived]# vim keepalived.conf

[root@k8s-master02 keepalived]# cat keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 2

weight -5

fall 3

rise 2

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

mcast_src_ip 192.168.1.4

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.1.100/24

}

track_script {

chk_apiserver

}

}

(On all master nodes:)

[root@k8s-master01 ~]# vim /etc/keepalived/check_apiserver.sh

[root@k8s-master01 ~]# cat /etc/keepalived/check_apiserver.sh

#!/bin/bash

err=0

for k in $(seq 1 5)

do

check_code=$(pgrep kube-apiserver)

if [[ $check_code == "" ]]; then

err=$(expr $err + 1)

sleep 5

continue

else

err=0

break

fi

done

if [[ $err != "0" ]]; then

echo "systemctl stop keepalived"

/usr/bin/systemctl stop keepalived

exit 1

else

exit 0

fi
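The probe loop in this script is a plain retry pattern: try a command several times with a pause in between, and give up only if every attempt fails. A minimal sketch of that pattern as a reusable function follows. The name `retry` is hypothetical, and unlike the script above it does not sleep after the final failed attempt.

```shell
# Sketch: retry ATTEMPTS DELAY CMD...
# Runs CMD until it succeeds, at most ATTEMPTS times, sleeping DELAY seconds
# between attempts. retry is a hypothetical helper name.
retry() {
  local attempts="$1" delay="$2" i
  shift 2
  for ((i = 1; i <= attempts; i++)); do
    "$@" && return 0
    # Sleep only between attempts, not after the last one.
    if ((i < attempts)); then sleep "$delay"; fi
  done
  return 1
}

# Roughly the same check as the script above: 5 probes, 5 seconds apart.
#   retry 5 5 pgrep kube-apiserver >/dev/null || systemctl stop keepalived
```

Whichever form is used, keepalived has to be able to execute the script, so it usually needs `chmod +x /etc/keepalived/check_apiserver.sh`.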

[root@k8s-master01 ~]# systemctl start haproxy

[root@k8s-master01 ~]# systemctl enable haproxy

[root@k8s-master01 ~]# systemctl start keepalived

[root@k8s-master01 ~]# systemctl enable keepalived

==================================================================

(On all master nodes:)

[root@k8s-master01 ~]# kubeadm config print init-defaults > init.default.yaml

[root@k8s-master01 ~]# vim init.default.yaml

[root@k8s-master01 ~]# cat init.default.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.1.3
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master01
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  certSANs:
  - 192.168.1.100
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 192.168.1.100:16443
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.19.0
networking:
  dnsDomain: cluster.local
  podSubnet: 172.168.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}

(Pull the images on all master nodes; run kubeadm init on k8s-master01 only:)

[root@k8s-master01 ~]# kubeadm config images pull --config /root/init.default.yaml

[root@k8s-master01 ~]# kubeadm init --config /root/init.default.yaml --upload-certs

Excerpt of the initialization output:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 192.168.1.100:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:f0e18d595009a909d60378598bbf80895cf393b4ebf851fa75c73c88de5644cb \

--control-plane --certificate-key d092072bb3f05ad103537a3d371b429edc84f38d55cfe57e6f62d80e79b7455d

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.100:16443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:f0e18d595009a909d60378598bbf80895cf393b4ebf851fa75c73c88de5644cb

Create the directory as instructed:

[root@k8s-master01 ~]# mkdir -p $HOME/.kube

[root@k8s-master01 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s-master01 ~]# chown $(id -u):$(id -g) $HOME/.kube/config

(On all master nodes:)

[root@k8s-master01 ~]# cat <<EOF >> /root/.bashrc

export KUBECONFIG=/etc/kubernetes/admin.conf

EOF

[root@k8s-master01 ~]# source /root/.bashrc
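Appending to .bashrc with `>>` adds another copy of the line every time the step is repeated. A minimal sketch of an idempotent append follows. The helper name `append_once` is hypothetical.

```shell
# Sketch: append a line to a file only if that exact line is not already there.
# append_once is a hypothetical helper name.
append_once() {
  local line="$1" file="$2"
  # -q: quiet, -x: match the whole line, -F: treat the pattern as a fixed string.
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Usage on a real host:
#   append_once 'export KUBECONFIG=/etc/kubernetes/admin.conf' /root/.bashrc
```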

[root@k8s-master01 ~]# curl https://docs.projectcalico.org/manifests/calico.yaml -O

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 182k 100 182k 0 0 37949 0 0:00:04 0:00:04 --:--:-- 42388

[root@k8s-master01 ~]# vim calico.yaml

- name: CALICO_IPV4POOL_CIDR

value: "172.168.0.0/16"
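The same change can be made without opening an editor. The sketch below assumes the downloaded manifest ships the variable commented out as `# - name: CALICO_IPV4POOL_CIDR` followed by a `#   value: "..."` line. That layout is an assumption about the manifest, so check the file before relying on it. The helper name `set_calico_pool_cidr` is hypothetical.

```shell
# Sketch: uncomment CALICO_IPV4POOL_CIDR in calico.yaml and set its value.
# Assumes the name line is immediately followed by its value line.
# set_calico_pool_cidr is a hypothetical helper name; a backup is kept as <file>.bak.
set_calico_pool_cidr() {
  local file="$1" cidr="$2"
  sed -E -i.bak \
    -e '/^[[:space:]]*(# )?- name: CALICO_IPV4POOL_CIDR/ {' \
    -e 's/# //' \
    -e 'n' \
    -e 's/# //' \
    -e "s|value:.*|value: \"$cidr\"|" \
    -e '}' \
    "$file"
}

# Usage, matching podSubnet in the kubeadm configuration:
#   set_calico_pool_cidr calico.yaml 172.168.0.0/16
```

Removing exactly `# ` from both lines keeps the YAML indentation of the list item intact.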

[root@k8s-master01 ~]# kubectl apply -f calico.yaml

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready master 26m v1.19.1

[root@k8s-master01 ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-c9784d67d-52ksp 1/1 Running 0 3m23s 172.168.32.130 k8s-master01

calico-node-947h8 1/1 Running 0 3m23s 192.168.1.3 k8s-master01

coredns-6c76c8bb89-qqc5h 1/1 Running 0 26m 172.168.32.131 k8s-master01

coredns-6c76c8bb89-wwtgh 1/1 Running 0 26m 172.168.32.129 k8s-master01

etcd-k8s-master01 1/1 Running 0 26m 192.168.1.3 k8s-master01

kube-apiserver-k8s-master01 1/1 Running 0 26m 192.168.1.3 k8s-master01

kube-controller-manager-k8s-master01 1/1 Running 0 26m 192.168.1.3 k8s-master01

kube-proxy-f4fgp 1/1 Running 0 26m 192.168.1.3 k8s-master01

kube-scheduler-k8s-master01 1/1 Running 0 26m 192.168.1.3 k8s-master01

5.6.1 Join the second master node to the cluster

[root@k8s-master02 ~]# kubeadm join 192.168.1.100:16443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:f0e18d595009a909d60378598bbf80895cf393b4ebf851fa75c73c88de5644cb --control-plane --certificate-key d092072bb3f05ad103537a3d371b429edc84f38d55cfe57e6f62d80e79b7455d

5.6.2 Join the worker node to the cluster

[root@k8s-node01 ~]# kubeadm join 192.168.1.100:16443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:f0e18d595009a909d60378598bbf80895cf393b4ebf851fa75c73c88de5644cb
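The bootstrap token in this walkthrough has a 24-hour TTL, so a node added later needs a fresh token, while the CA hash stays the same as long as the CA certificate does. The sketch below recomputes the value passed to `--discovery-token-ca-cert-hash` from the CA certificate with the openssl pipeline described in the kubeadm documentation. The helper name `ca_cert_hash` is hypothetical, and it assumes an RSA CA key, which is the kubeadm default.

```shell
# Sketch: compute the sha256 hash of a CA certificate's public key, as used by
# --discovery-token-ca-cert-hash. ca_cert_hash is a hypothetical helper name.
ca_cert_hash() {
  openssl x509 -pubkey -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Usage on a control-plane node:
#   echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
# A new token plus the complete join command can also be printed with:
#   kubeadm token create --print-join-command
```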

6.7.3 Check the cluster nodes from the master

[root@k8s-master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready master 34m v1.19.1

k8s-master02 Ready master 4m42s v1.19.1

k8s-node01 NotReady <none> 60s v1.19.1
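A freshly joined node normally stays NotReady until its network pod has started. A minimal sketch for picking out the nodes that are still not ready from the `kubectl get nodes` output follows. The helper name `not_ready_nodes` is hypothetical.

```shell
# Sketch: read "kubectl get nodes" output on stdin and print the name of every
# node whose STATUS column is not exactly "Ready".
# not_ready_nodes is a hypothetical helper name.
not_ready_nodes() {
  # NR > 1 skips the header row; column 1 is NAME, column 2 is STATUS.
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage:
#   kubectl get nodes | not_ready_nodes
# To block until all nodes report Ready instead of polling by hand:
#   kubectl wait --for=condition=Ready nodes --all --timeout=300s
```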

List all pods at this point:

[root@k8s-master01 ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-c9784d67d-52ksp 1/1 Running 1 123m 172.168.32.134 k8s-master01

calico-node-947h8 1/1 Running 1 123m 192.168.1.3 k8s-master01

calico-node-9hcfm 1/1 Running 0 117m 192.168.1.4 k8s-master02

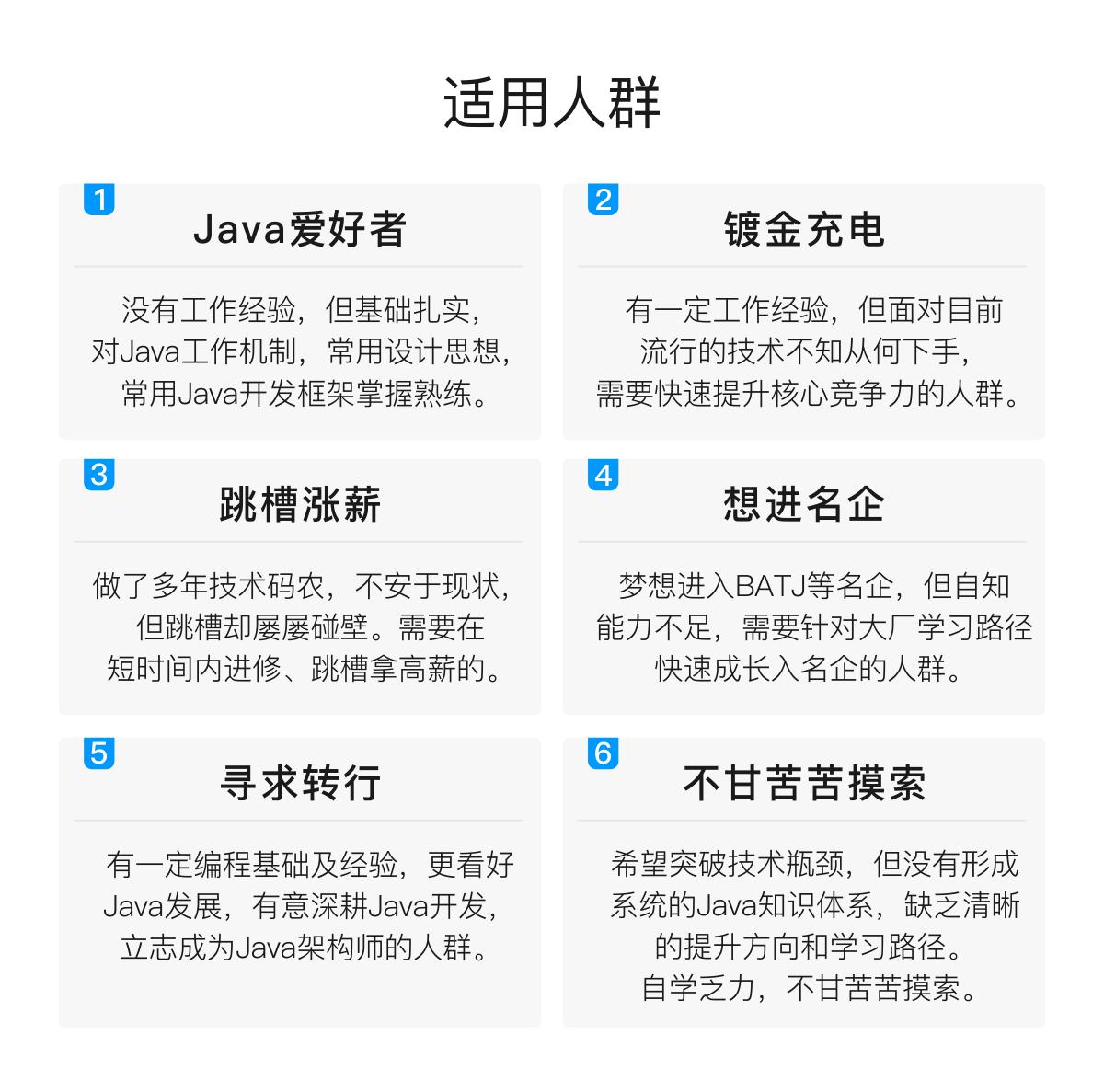

自我介绍一下,小编13年上海交大毕业,曾经在小公司待过,也去过华为、OPPO等大厂,18年进入阿里一直到现在。

深知大多数Java工程师,想要提升技能,往往是自己摸索成长或者是报班学习,但对于培训机构动则几千的学费,着实压力不小。自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!

因此收集整理了一份《2024年Java开发全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友,同时减轻大家的负担。

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上Java开发知识点,真正体系化!

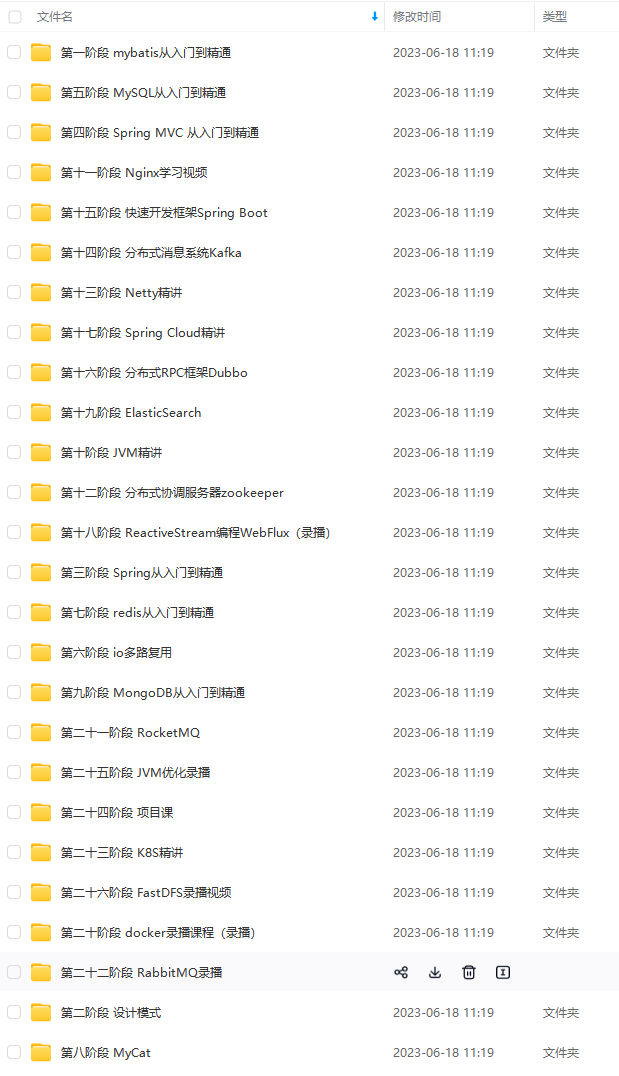

由于文件比较大,这里只是将部分目录截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且会持续更新!

如果你觉得这些内容对你有帮助,可以扫码获取!!(备注Java获取)

最后

分享一些资料给大家,我觉得这些都是很有用的东西,大家也可以跟着来学习,查漏补缺。

《Java高级面试》

《Java高级架构知识》

《算法知识》

《互联网大厂面试真题解析、进阶开发核心学习笔记、全套讲解视频、实战项目源码讲义》点击传送门即可获取!

calico-node-9hcfm 1/1 Running 0 117m 192.168.1.4 k8s-master02

自我介绍一下,小编13年上海交大毕业,曾经在小公司待过,也去过华为、OPPO等大厂,18年进入阿里一直到现在。

深知大多数Java工程师,想要提升技能,往往是自己摸索成长或者是报班学习,但对于培训机构动则几千的学费,着实压力不小。自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!

因此收集整理了一份《2024年Java开发全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友,同时减轻大家的负担。[外链图片转存中…(img-3nbIEsEP-1713213764595)]

[外链图片转存中…(img-fhxnwsaT-1713213764596)]

[外链图片转存中…(img-BDFxLoVg-1713213764596)]

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上Java开发知识点,真正体系化!

由于文件比较大,这里只是将部分目录截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且会持续更新!

如果你觉得这些内容对你有帮助,可以扫码获取!!(备注Java获取)

最后

分享一些资料给大家,我觉得这些都是很有用的东西,大家也可以跟着来学习,查漏补缺。

《Java高级面试》

[外链图片转存中…(img-nBf4DN5f-1713213764596)]

《Java高级架构知识》

[外链图片转存中…(img-RSBpbgay-1713213764596)]

《算法知识》

[外链图片转存中…(img-c0FwD4e8-1713213764597)]

《互联网大厂面试真题解析、进阶开发核心学习笔记、全套讲解视频、实战项目源码讲义》点击传送门即可获取!

6617

6617

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?